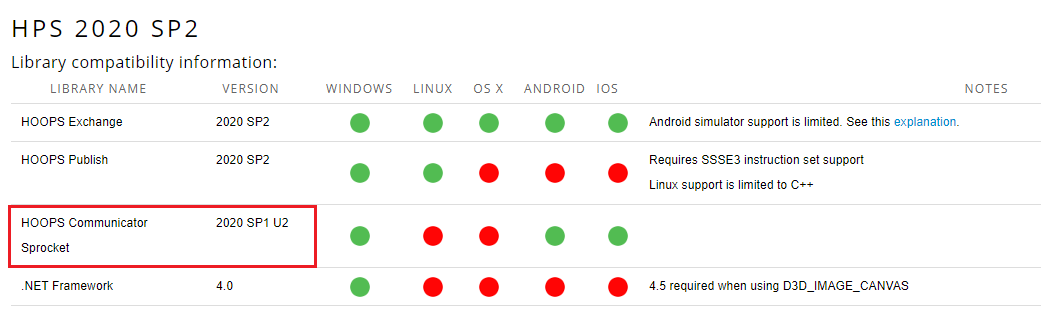

注:本文的SIMD,指的是CPU(base intel x86 architecture)指令架构中的相关概念。不涉及GPU端的算力机制。下面的代码在Win10和Linux上均可用。

基本概念

SSE: Streaming SIMD Extensions, x86 architecture

AVX: Advanced Vector Extensions

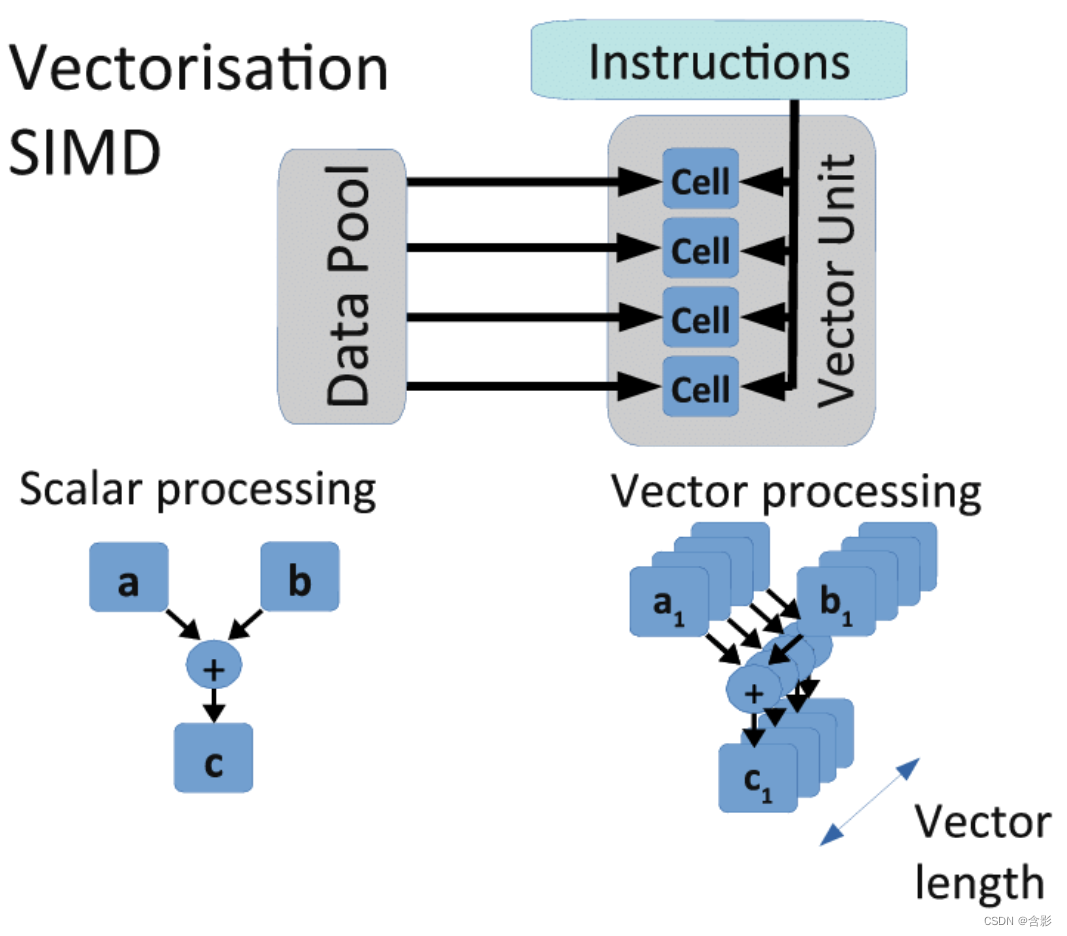

SIMD,Single Instruction/Multiple Data, 即单指流令多数据流,例如一个乘法指令,可以并行的计算8个浮点数的乘法。

SIMD(Single Instruction/Multiple Data, 即)是目前通用的CPU端的指令级并行计算机制,也叫做矢量运算,这些SIMD包括SSE和AVX。

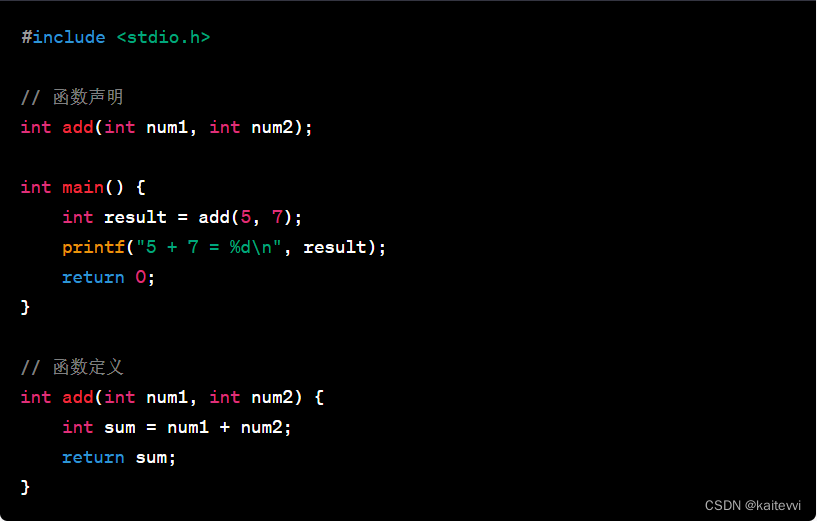

通过代码直观的简介SIMD机制

用下面两份代码做个基本说明

非矢量运算的代码

void mul4_scalar( float* ptr )

{for( int i = 0; i < 4; i++ ){const float f = ptr[ i ];ptr[ i ] = f * f;}

}矢量运算的代码

void mul4_vectorized( float* ptr )

{__m128 f = _mm_loadu_ps( ptr );f = _mm_mul_ps( f, f );_mm_storeu_ps( ptr, f );

}

// __m128 就是sse simd 对象

// _mm_mul_ps 和 _mm_storeu_ps 就是对应的乘法和赋值矢量运算指令(函数)上述两份代码源自于: http://const.me/articles/simd/simd.pdf

不难看出,在密集计算中SIMD程序的效能肯定比常规程序高很多。(这里就不去和异构计算架构下的机制做比较了)

相关指令在源代码中的位置

Linux系统GCC

gcc-master\gcc\config\i386\immintrin.h头文件中包含了各种simd指令的相关头文件

SSE, __m128, 这类SSE指令位置: gcc-master\gcc\config\i386\xmmintrin.h

/* The Intel API is flexible enough that we must allow aliasing with othervector types, and their scalar components. */

typedef float __m128 __attribute__ ((__vector_size__ (16), __may_alias__));SSE2, __v4si, __128d定义在 gcc-master\gcc\config\i386\emmintrin.h中

typedef int __v4si __attribute__ ((__vector_size__ (16)));typedef double __m128d __attribute__ ((__vector_size__ (16), __may_alias__));MMX指令 __m64定义在 gcc-master\gcc\config\i386\mmintrin.h中

typedef int __m64 __attribute__ ((__vector_size__ (8), __may_alias__));

typedef int __m32 __attribute__ ((__vector_size__ (4), __may_alias__));

typedef short __m16 __attribute__ ((__vector_size__ (2), __may_alias__));AVX指令, _v4df, __v8si, __m256定义在gcc-master\gcc\config\i386\avxintrin.h中

typedef double __v4df __attribute__ ((__vector_size__ (32)));typedef int __v8si __attribute__ ((__vector_size__ (32)));typedef unsigned char __v32qu __attribute__ ((__vector_size__ (32)));typedef float __m256 __attribute__ ((__vector_size__ (32),__may_alias__));linux系统中矢量运算 __attribute__ vector_size 定义的说明:

typedef int v4si __attribute__ ((vector_size (16)));

The int type specifies the base type, while the attribute specifies the vector size for the variable, measured in bytes. For example, the declaration above causes the compiler to set the mode for the v4si type to be 16 bytes wide and divided into int sized units. For a 32-bit int this means a vector of 4 units of 4 bytes, and the corresponding mode of foo will be V4SI.

相关细节请见: Vector Extensions (Using the GNU Compiler Collection (GCC))

Windows系统MSVC

头文件 Microsoft Visual Studio\2022\Community\VC\Tools\MSVC\14.33.31629\include\intrin.h,也已经包含了相关指令函数的头文件

__m128d, __m128i定义在 Microsoft Visual Studio\2022\Community\VC\Tools\MSVC\14.33.31629\include\emmintrin.h中

typedef union __declspec(intrin_type) __declspec(align(16)) __m128i {__int8 m128i_i8[16];__int16 m128i_i16[8];__int32 m128i_i32[4];__int64 m128i_i64[2];unsigned __int8 m128i_u8[16];unsigned __int16 m128i_u16[8];unsigned __int32 m128i_u32[4];unsigned __int64 m128i_u64[2];

} __m128i;typedef struct __declspec(intrin_type) __declspec(align(16)) __m128d {double m128d_f64[2];

} __m128d;__m128d, __m128i定义在 Microsoft Visual Studio\2022\Community\VC\Tools\MSVC\14.33.31629\include\emmintrin.h

typedef union __declspec(intrin_type) __declspec(align(16)) __m128i {__int8 m128i_i8[16];__int16 m128i_i16[8];__int32 m128i_i32[4];__int64 m128i_i64[2];unsigned __int8 m128i_u8[16];unsigned __int16 m128i_u16[8];unsigned __int32 m128i_u32[4];unsigned __int64 m128i_u64[2];

} __m128i;typedef struct __declspec(intrin_type) __declspec(align(16)) __m128d {double m128d_f64[2];

} __m128d;AVX(AVX2), __m256d, __m256, __m256i定义在 Microsoft Visual Studio\2022\Community\VC\Tools\MSVC\14.33.31629\include\immintrin.h中

/** Intel(R) AVX compiler intrinsic functions.*/

typedef union __declspec(intrin_type) __declspec(align(32)) __m256 {float m256_f32[8];

} __m256;typedef struct __declspec(intrin_type) __declspec(align(32)) __m256d {double m256d_f64[4];

} __m256d;typedef union __declspec(intrin_type) __declspec(align(32)) __m256i {__int8 m256i_i8[32];__int16 m256i_i16[16];__int32 m256i_i32[8];__int64 m256i_i64[4];unsigned __int8 m256i_u8[32];unsigned __int16 m256i_u16[16];unsigned __int32 m256i_u32[8];unsigned __int64 m256i_u64[4];

} __m256i;两个系统的定义有区别,所以跨平台应用这些SIMD功能需要主要一些细节。

检查Linux系统或者Windows系统对SSE和AVX的支持情况

注:下面的代码本人常用在在Linux Centos7和Windows 10上

检测代码如下:

#include <iostream>

#ifdef _MSC_VER

# include <intrin.h>

void __cpuidSpec(int p0[4], int p1)

{__cpuid(p0, p1);

}

unsigned __int64 __cdecl _xgetbvSpec(unsigned int p)

{return _xgetbv(p);

}

#endif#ifdef __GNUC__

void __cpuidSpec(int* cpuinfo, int info)

{__asm__ __volatile__("xchg %%ebx, %%edi;""cpuid;""xchg %%ebx, %%edi;": "=a"(cpuinfo[0]), "=D"(cpuinfo[1]), "=c"(cpuinfo[2]), "=d"(cpuinfo[3]): "0"(info));

}

unsigned long long _xgetbvSpec(unsigned int index)

{unsigned int eax, edx;__asm__ __volatile__("xgetbv;": "=a"(eax), "=d"(edx): "c"(index));return ((unsigned long long)edx << 32) | eax;

}

# include <immintrin.h>

#endifnamespace sseavx::config

{

void sseavxCheck()

{bool sseSupportted = false;bool sse2Supportted = false;bool sse3Supportted = false;bool ssse3Supportted = false;bool sse4_1Supportted = false;bool sse4_2Supportted = false;bool sse4aSupportted = false;bool sse5Supportted = false;bool avxSupportted = false;int cpuinfo[4];__cpuidSpec(cpuinfo, 1);// Check SSE, SSE2, SSE3, SSSE3, SSE4.1, and SSE4.2 supportsseSupportted = cpuinfo[3] & (1 << 25) || false;sse2Supportted = cpuinfo[3] & (1 << 26) || false;sse3Supportted = cpuinfo[2] & (1 << 0) || false;ssse3Supportted = cpuinfo[2] & (1 << 9) || false;sse4_1Supportted = cpuinfo[2] & (1 << 19) || false;sse4_2Supportted = cpuinfo[2] & (1 << 20) || false;avxSupportted = cpuinfo[2] & (1 << 28) || false;bool osxsaveSupported = cpuinfo[2] & (1 << 27) || false;if (osxsaveSupported && avxSupportted){// _XCR_XFEATURE_ENABLED_MASK = 0unsigned long long xcrFeatureMask = _xgetbvSpec(0);avxSupportted = (xcrFeatureMask & 0x6) == 0x6;}// ----------------------------------------------------------------------// Check SSE4a and SSE5 support// Get the number of valid extended IDs__cpuidSpec(cpuinfo, 0x80000000);int numExtendedIds = cpuinfo[0];if (numExtendedIds >= 0x80000001){__cpuidSpec(cpuinfo, 0x80000001);sse4aSupportted = cpuinfo[2] & (1 << 6) || false;sse5Supportted = cpuinfo[2] & (1 << 11) || false;}// ----------------------------------------------------------------------std::cout << "\n";std::boolalpha(std::cout);std::cout << "Support SSE: " << sseSupportted << std::endl;std::cout << "Support SSE2: " << sse2Supportted << std::endl;std::cout << "Support SSE3: " << sse3Supportted << std::endl;std::cout << "Support SSE4.1: " << sse4_1Supportted << std::endl;std::cout << "Support SSE4.2: " << sse4_2Supportted << std::endl;std::cout << "Support SSE4a: " << sse4aSupportted << std::endl;std::cout << "Support SSE5: " << sse5Supportted << std::endl;std::cout << "Support AVX: " << avxSupportted << std::endl;std::cout << "\n";

}

}windows或linux下c++17及以上版本编译即可。

Linux和Windows下SIMD的跨平台用例详情

c++ simd用例代码如下(包含相关说明):

#include <iostream>

#include <vector>

#include <array>

#include <cassert>

#include <random>

#include <chrono>#ifdef _MSC_VER

# include <intrin.h>

#endif#ifdef __GNUC__

#include <cstring>

#include <immintrin.h>

#endifnamespace sseavx::test

{

namespace

{

// function meaning: value = sqrt(a*a + b*b)

void normal_sqrt_calc(float data1[], float data2[], int len, float out[])

{int i;for (i = 0; i < len; i++){out[i] = sqrt(data1[i] * data1[i] + data2[i] * data2[i]);}

}

// sse

void simd_sqrt_calc(float* data1, float* data2, int len, float out[])

{// g++ -msse3 -O3 -Wall -lrt sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20// g++ sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20assert(len % 4 == 0);__m128 *a, *b, *res, t1, t2, t3; // = _mm256_set_ps(1, 1, 1, 1, 1, 1, 1, 1);int i, tlen = len / 4;a = (__m128*)data1;b = (__m128*)data2;res = (__m128*)out;for (i = 0; i < tlen; i++){t1 = _mm_mul_ps(*a, *a);t2 = _mm_mul_ps(*b, *b);t3 = _mm_add_ps(t1, t2);*res = _mm_sqrt_ps(t3);a++;b++;res++;}

}

// avx

void simd256_sqrt_calc(float* data1, float* data2, int len, float out[])

{assert(len % 8 == 0);// AVX g++ cpmpile cmd: g++ -mavx sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20// AVX g++ cpmpile cmd: g++ -march=native sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20__m256 *a, *b, *res, t1, t2, t3; // = _mm256_set_ps(1, 1, 1, 1, 1, 1, 1, 1);int i, tlen = len / 8;a = (__m256*)data1;b = (__m256*)data2;res = (__m256*)out;for (i = 0; i < tlen; i++){t1 = _mm256_mul_ps(*a, *a);t2 = _mm256_mul_ps(*b, *b);t3 = _mm256_add_ps(t1, t2);*res = _mm256_sqrt_ps(t3);// _mm256_storeu_psa++;b++;res++;}

}

// sse simd 计算 float类型的vector4和4x4矩阵的乘法

void vec4_mul_mat4(const float vd[4], const std::array<float[4], 4>& md, float out[4])

{__m128* v = (__m128*)vd;__m128 i0 = *((__m128*)md[0]);__m128 i1 = *((__m128*)md[1]);__m128 i2 = *((__m128*)md[2]);__m128 i3 = *((__m128*)md[3]);__m128 m0 = _mm_mul_ps(*v, i0);__m128 m1 = _mm_mul_ps(*v, i1);__m128 m2 = _mm_mul_ps(*v, i2);__m128 m3 = _mm_mul_ps(*v, i3);__m128 u0 = _mm_unpacklo_ps(m0, m1);__m128 u1 = _mm_unpackhi_ps(m0, m1);__m128 a0 = _mm_add_ps(u0, u1);__m128 u2 = _mm_unpacklo_ps(m2, m3);__m128 u3 = _mm_unpackhi_ps(m2, m3);__m128 a1 = _mm_add_ps(u2, u3);__m128 f0 = _mm_movelh_ps(a0, a1);__m128 f1 = _mm_movehl_ps(a1, a0);__m128 f2 = _mm_add_ps(f0, f1);

#ifdef __GNUC__std::memcpy(out, &f2, sizeof(float) * 4);

#elsestd::memcpy(out, f2.m128_f32, sizeof(float) * 4);

#endif

}

} // namespace

void test_sqrt_calc()

{std::cout << "\n... test_sqrt_calc() begin ..." << std::endl;std::random_device rd;std::mt19937 gen(rd());std::uniform_real_distribution<float> distribute(100.5f, 20001.5f);constexpr int data_size = 8192 << 12;// 应用__attribute__ ((aligned (32))) 语法解决 avx linux 运行时内存对齐问题,// 如果没有强制对齐,则会出现运行时 Segmentation fault 错误// 在 MSVC环境 则用: __declspec(align(32))#ifdef __GNUC__// invalid attribute defined syntax in linux heap run time memory env, make runtime avx error: Segmentation fault// __attribute__ ((aligned (32))) float *data1 = new float[data_size]{};// __attribute__ ((aligned (32))) float *data2 = new float[data_size]{};// __attribute__ ((aligned (32))) float *data_out = new float[data_size]{};//// valid attribute defined syntax in linux heap run time memory env, runtime correct.float* data1 = new float __attribute__((aligned(32)))[data_size]{};float* data2 = new float __attribute__((aligned(32)))[data_size]{};float* data_out = new float __attribute__((aligned(32)))[data_size]{};// valid attribute defined syntax in linux code stack run time memory env, runtime correct.// __attribute__ ((aligned (32))) float data1[data_size]{};// __attribute__ ((aligned (32))) float data2[data_size]{};// __attribute__ ((aligned (32))) float data_out[data_size]{};

#elsefloat* data1 = new float[data_size]{};float* data2 = new float[data_size]{};float* data_out = new float[data_size]{};

#endifstd::cout << "data_size: " << data_size << std::endl;std::cout << "data: ";for (int i = 0; i < data_size; ++i){auto v = distribute(gen);data1[i] = v;if (i < 8)std::cout << distribute(gen) << " ";}std::cout << std::endl;for (int i = 0; i < data_size; ++i){auto v = distribute(gen);data2[i] = v;}auto time_start = std::chrono::high_resolution_clock::now();// normal_sqrt_calc(data1, data2, data_size, data_out);// DEbug 146ms, data_size = 8192 << 12, in Win10 MSVC;// simd_sqrt_calc(data1, data2, data_size, data_out);// Debug 38mssimd256_sqrt_calc(data1, data2, data_size, data_out); // Debug 24ms// 目前的代码中的buffer数据定义和使用,看起来与cpu cache的空间局部性原则冲突,// 可能导致较多的cpu cache miss,但是Release下由于编译器有优化,非SIMD计算具体情况// 则另当别论。单有一点是确定的,因为不是soa数据形式,对SIMD机制并不友好。auto time_end = std::chrono::high_resolution_clock::now();auto lossTime = std::chrono::duration_cast<std::chrono::milliseconds>(time_end - time_start).count();std::cout << "loss time: " << lossTime << "ms" << std::endl;std::cout << "data_out: ";for (int i = 0; i < 8; ++i){std::cout << data_out[i] << " ";}std::cout << std::endl;std::cout << "... test_sqrt_calc() end ..." << std::endl;

}

void test_matrix_calc()

{std::cout << "\n... test_matrix_calc() begin ...\n" << std::endl;

#ifdef __GNUC____attribute__((aligned(32))) float vec4_out[4]{};__attribute__((aligned(32))) float vec4_01[4]{1.1f, 2.2f, 3.3f, 1.0f};__attribute__((aligned(32))) std::array<float[4], 4> mat4_01{{1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f}};

#elsefloat vec4_out[4]{};float vec4_01[4]{1.1f, 2.2f, 3.3f, 1.0f};std::array<float[4], 4> mat4_01{{1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 1.0f}};

#endifvec4_mul_mat4(vec4_01, mat4_01, vec4_out);std::cout << "SSE vec4_mul_mat4 :" << vec4_out[0] << "," << vec4_out[1] << "," << vec4_out[2] << "," << vec4_out[3] << std::endl;std::cout << "\n... test_matrix_calc() end ..." << std::endl;

}

void testBase()

{//g++ -msse3 -O3 -Wall -lrt checkSSEAVX2.cc -o check2.out -std=c++20std::cout << "\n... testBase() begin ...\n"<< std::endl;///*

#ifdef __GNUC__const __m128 zero = _mm_setzero_ps();const __m128 eq = _mm_cmpeq_ps(zero, zero);const int mask = _mm_movemask_ps(eq);std::cout << "testBase(), mask: " << mask << std::endl;union{__m128 v;float vs[4]{4.0f, 4.1f, 4.2f, 4.3f};std::array<float, 4> array;} SIMD4Data{};__m128 a4 = _mm_set_ps(4.0f, 4.1f, 4.2f, 4.3f);__m128 b4 = _mm_set_ps(1.0f, 1.0f, 1.0f, 1.0f);__m128 sum4 = _mm_add_ps(a4, b4);auto f_vs = (float*)&sum4;std::cout << "SSE sum4 A:" << f_vs[0] << "," << f_vs[1] << "," << f_vs[2] << "," << f_vs[3] << std::endl;// 获取值的顺序和你输入的顺序是相反的std::cout << "SSE sum4 B:" << f_vs[3] << "," << f_vs[2] << "," << f_vs[1] << "," << f_vs[0] << std::endl;SIMD4Data.v = _mm_add_ps(a4, b4);std::cout << "SSE Sum SIMD4Data.vs:" << SIMD4Data.vs[0] << "," << SIMD4Data.vs[1] << "," << SIMD4Data.vs[2] << "," << SIMD4Data.vs[3] << std::endl;float result_vs[4]{};_mm_store_ps(result_vs, sum4);std::cout << "SSE Sum result_vs:" << result_vs[0] << "," << result_vs[1] << "," << result_vs[2] << "," << result_vs[3] << std::endl;

#elseunion{__m128 v;float vs[4]{4.0f, 4.1f, 4.2f, 4.3f};std::array<float, 4> array;} SIMD4Data{};__m128 a4 = _mm_set_ps(4.0f, 4.1f, 4.2f, 4.3f);__m128 b4 = _mm_set_ps(1.0f, 1.0f, 1.0f, 1.0f);__m128 sum4 = _mm_add_ps(a4, b4);std::cout << "SSE sum4.m128_f32 A:" << sum4.m128_f32[0] << "," << sum4.m128_f32[1] << "," << sum4.m128_f32[2] << "," << sum4.m128_f32[2] << std::endl;// 获取值的顺序和你输入的顺序是相反的std::cout << "SSE sum4.m128_f32 B:" << sum4.m128_f32[3] << "," << sum4.m128_f32[2] << "," << sum4.m128_f32[1] << "," << sum4.m128_f32[0] << std::endl;std::cout << "SSE sum4.m128_f32[0]:" << sum4.m128_f32[0] << std::endl;SIMD4Data.v = _mm_add_ps(a4, b4);std::cout << "SSE Sum SIMD4Data.vs:" << SIMD4Data.vs[0] << "," << SIMD4Data.vs[1] << "," << SIMD4Data.vs[2] << "," << SIMD4Data.vs[3] << std::endl;std::cout << "SSE Sum SIMD4Data.array:" << SIMD4Data.array[0] << "," << SIMD4Data.array[1] << "," << SIMD4Data.array[2] << "," << SIMD4Data.array[3] << std::endl;std::cout << "\n";union{__m256 v;float vs[8]{};} SIMD8Data{};__m256 a8 = _mm256_set_ps(4.0f, 4.1f, 4.2f, 4.3f, 4.0f, 4.1f, 4.2f, 4.3f);__m256 b8 = _mm256_set_ps(3.0f, 3.3f, 3.2f, 3.3f, 3.0f, 3.1f, 3.2f, 3.3f);__m256 sum8 = _mm256_add_ps(a8, b8);SIMD8Data.v = _mm256_add_ps(a8, b8);std::cout << "SSE sum8.m256_f32:" << sum8.m256_f32[0] << "," << sum8.m256_f32[1] << "," << sum8.m256_f32[2] << std::endl;std::memcpy(SIMD8Data.vs, sum8.m256_f32, sizeof(float));std::cout << "SSE SIMD8Data.vs:" << SIMD8Data.vs[0] << "," << SIMD8Data.vs[1] << "," << SIMD8Data.vs[2] << std::endl;

#endifstd::cout << "\n";std::cout << "... testBase() end ..." << std::endl;

}void testBase2()

{std::cout << "\n... testBase2() begin ...\n";

#ifdef __GNUC__float v0[4] __attribute__((aligned(16))) = {0.0f, 1.0f, 2.0f, 3.0f};float v1[4] __attribute__((aligned(16))) = {4.0f, 5.0f, 6.0f, 7.0f};

#elsefloat v0[4] = {0.0f, 1.0f, 2.0f, 3.0f};float v1[4] = {4.0f, 5.0f, 6.0f, 7.0f};

#endif/*_MM_SHUFFLE macro is supported in both Linux GCC and Windows MSVC, in linux gcc, defined in gcc\config\i386\xmmintrin.h head file.__m128 _mm_shuffle_ps(__m128 lo,__m128 hi,_MM_SHUFFLE(hi3,hi2,lo1,lo0)):Interleave inputs into low 2 floats and high 2 floats of output. Basicallyout[0]=lo[lo0];out[1]=lo[lo1];out[2]=hi[hi2];out[3]=hi[hi3];For example, _mm_shuffle_ps(a,a,_MM_SHUFFLE(i,i,i,i)) copies the float a[i] into all 4 output floats.*/__m128 r0 = _mm_load_ps(v0);__m128 r1 = _mm_load_ps(v1);__m128 r1_b = _mm_set_ps(v1[0], v1[1], v1[2], v1[3]);_mm_store_ps(v0, r1);std::cout << "SSE r1 :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;_mm_store_ps(v0, r1_b);std::cout << "SSE r1_b :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;auto r1_b_0 = _mm_shuffle_ps(r1_b, r1_b, _MM_SHUFFLE(3, 2, 1, 0));_mm_store_ps(v0, r1_b_0);std::cout << "SSE r1_b_0 :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;// output: SSE r1_b_0 : 7, 6, 5, 4auto r1_b_1 = _mm_shuffle_ps(r1_b, r1_b, _MM_SHUFFLE(0, 1, 2, 3));_mm_store_ps(v0, r1_b_1);std::cout << "SSE r1_b_1 :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;// output: SSE r1_b_1 : 4, 5, 6, 7// Identity: r2 = r0.std::cout << "Identity:" << std::endl;auto r2 = _mm_shuffle_ps(r0, r0, _MM_SHUFFLE(3, 2, 1, 0));_mm_store_ps(v0, r2);std::cout << "SSE r2 :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;auto r2_b = _mm_shuffle_ps(r1, r1, _MM_SHUFFLE(3, 2, 1, 0));_mm_store_ps(v0, r2);std::cout << "SSE r2_b :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;auto r2_c = _mm_shuffle_ps(r1, r1, _MM_SHUFFLE(3, 2, 1, 0));_mm_store_ps(v0, r2);std::cout << "SSE r2_c :" << v0[0] << "," << v0[1] << "," << v0[2] << "," << v0[3] << std::endl;_mm_store_ps(v0, r2);for (auto x : v0) { std::cout << x << ' '; }std::cout << std::endl;// Reverse r0std::cout << "Reverse r0:" << std::endl;r2 = _mm_shuffle_ps(r0, r0, _MM_SHUFFLE(0, 1, 2, 3));_mm_store_ps(v0, r2);for (auto x : v0) { std::cout << x << ' '; }std::cout << std::endl;// Even: { r0[ 0 ], r0[ 2 ], r1[ 0 ], r1[ 2 ] }std::cout << "Even: { r0[ 0 ], r0[ 2 ], r1[ 0 ], r1[ 2 ] }" << std::endl;r2 = _mm_shuffle_ps(r0, r1, _MM_SHUFFLE(2, 0, 2, 0));_mm_store_ps(v0, r2);for (auto x : v0) { std::cout << x << ' '; }std::cout << std::endl;// Misc: { r0[ 3 ], r0[ 2 ], r1[ 2 ], r1[ 1 ] }std::cout << "Misc: { r0[ 3 ], r0[ 2 ], r1[ 2 ], r1[ 1 ] }" << std::endl;r2 = _mm_shuffle_ps(r0, r1, _MM_SHUFFLE(1, 2, 2, 3));_mm_store_ps(v0, r2);for (auto x : v0) { std::cout << x << ' '; }std::cout << std::endl;std::cout << "\n... testBase2() end ..." << std::endl;

}

}

// linux compile cmd: g++ -mavx sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20

int main(int argc, char** argv)

{std::cout << "main() begin.\n";sseavx::test::testBase();sseavx::test::testBase2();sseavx::test::test_sqrt_calc();sseavx::test::test_matrix_calc();std::cout << "\nmain() end.\n";return EXIT_SUCCESS;

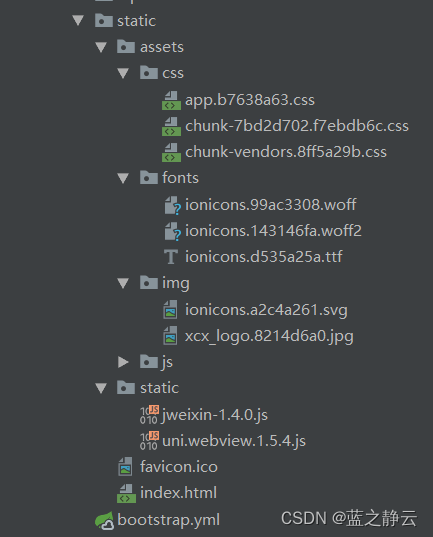

}如果将此代码保存为 sseavxBaseTest.cc, linux下编译命令为:

g++ -mavx sseavxBaseTest.cc -o sseavxBaseTest.out -std=c++20

c++版本最低为c++17。

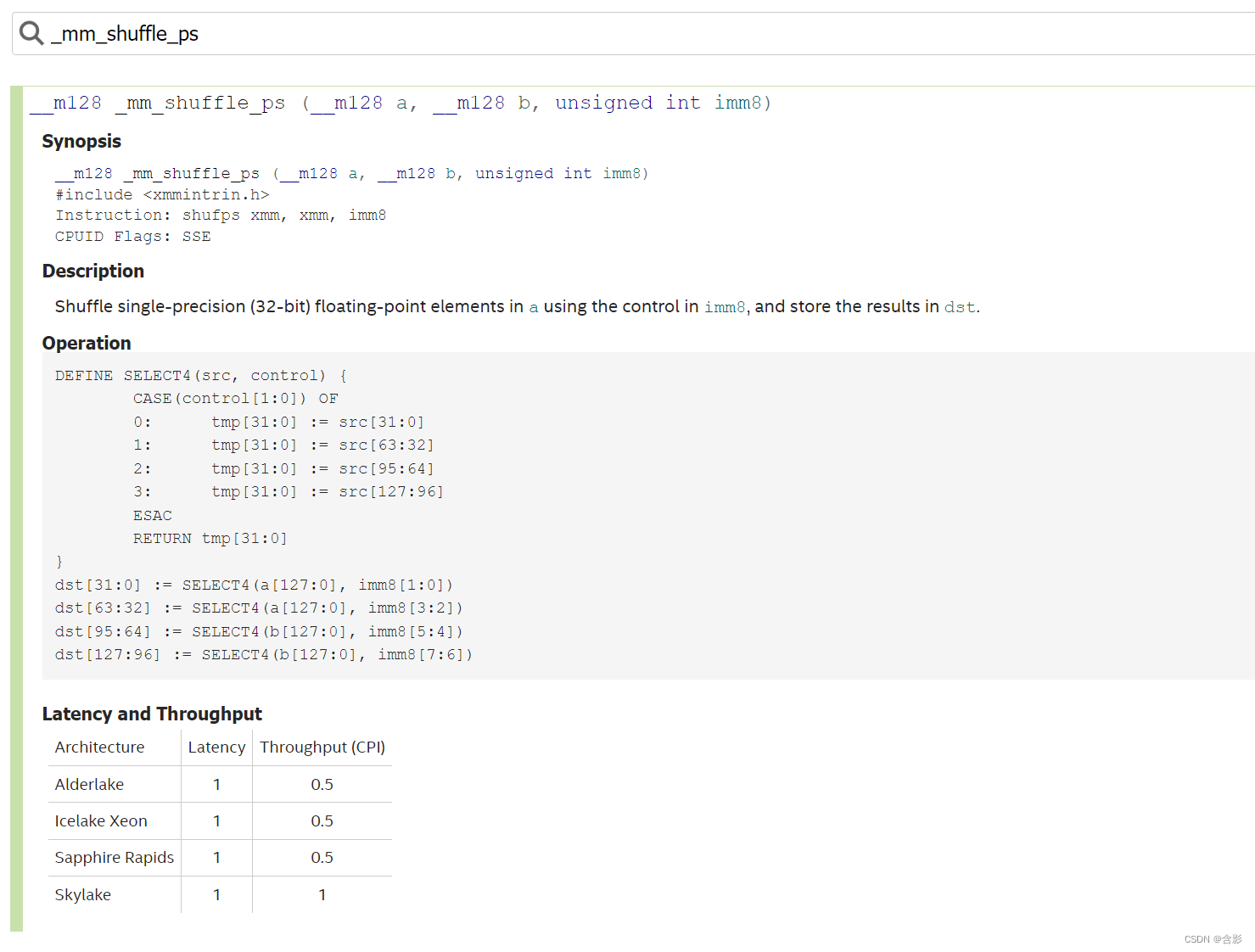

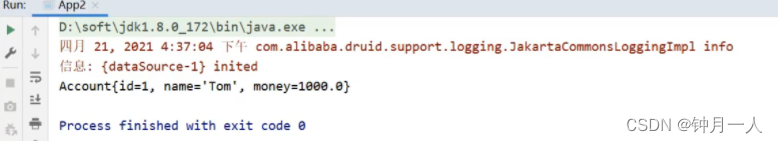

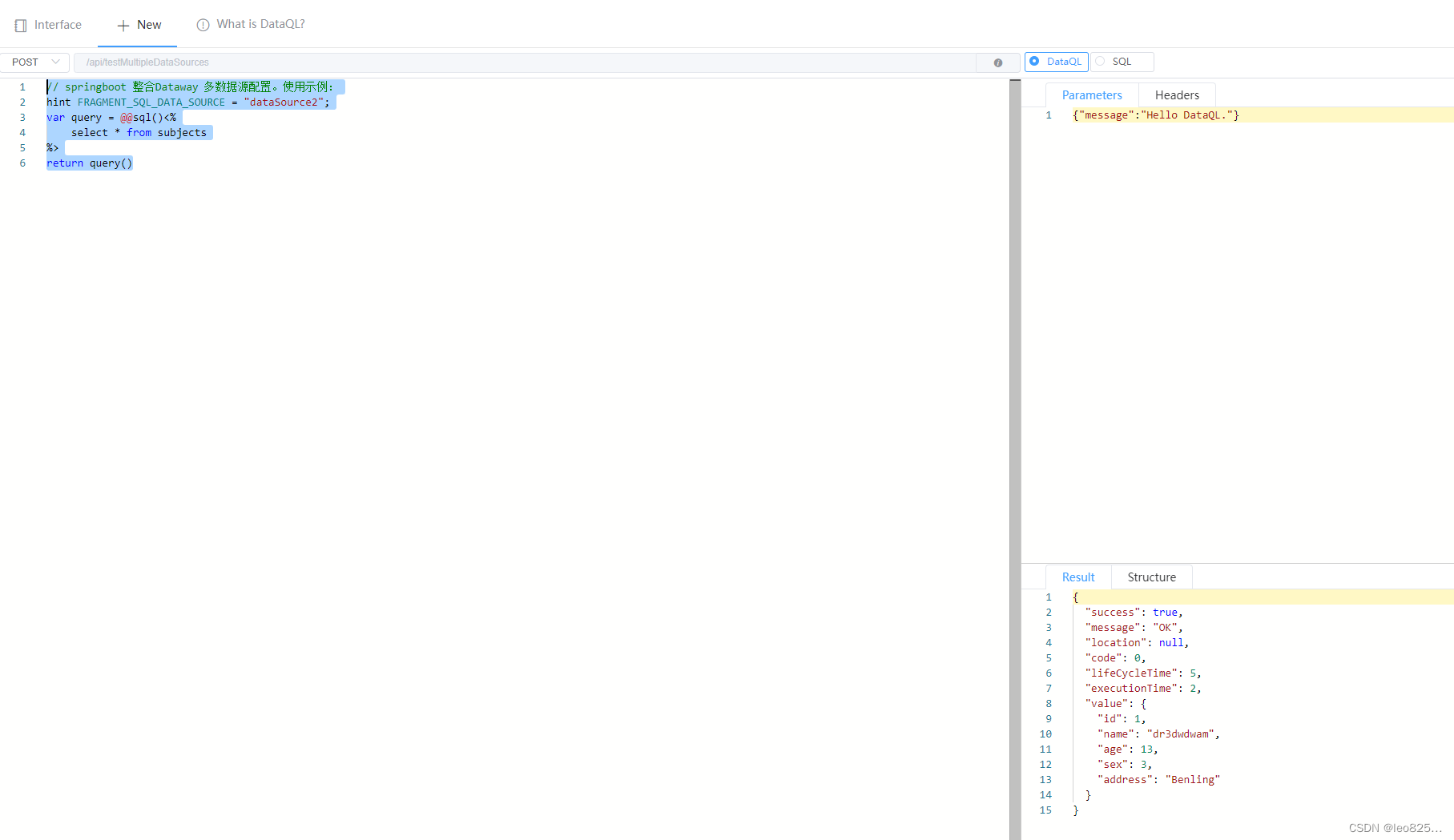

查看更多Intel SIMD指令详情

相关链接: https://www.intel.com/content/www/us/en/docs/intrinsics-guide/index.html

这里面可以搜索对应的指令,查看到的指令细节信息如下图: