C#: encoding and decoding byte[] frames with FFmpeg.AutoGen. Reference: https://github.com/vanjoge/CSharpVideoDemo

Entry-point class (the one the application calls):

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using FFmpeg.AutoGen;

namespace FFmpegAnalyzer
{
    public class FFmpegWrapper
    {
        /// <summary>
        /// Default codec format
        /// </summary>
        public AVCodecID DefaultCodecFormat { get; set; } = AVCodecID.AV_CODEC_ID_H264;

        /// <summary>
        /// Registers FFmpeg
        /// </summary>
        public static void RegisterFFmpeg()
        {
            FFmpegBinariesHelper.RegisterFFmpegBinaries();
            // Register FFmpeg's codecs and formats. av_register_all/avcodec_register_all are
            // deprecated since FFmpeg 4.0 and removed in 5.0, so they only apply to 4.x bindings.
            ffmpeg.av_register_all();
            ffmpeg.avcodec_register_all();
            ffmpeg.avformat_network_init();
        }

        /// <summary>
        /// Registers an FFmpeg log callback that writes to the console
        /// </summary>
        /// <remarks>Log registration is not supported on .NET Framework.</remarks>
        private unsafe void RegisterFFmpegLogger()
        {
            // Set the FFmpeg log level
            ffmpeg.av_log_set_level(ffmpeg.AV_LOG_VERBOSE);
            // Keep the delegate in a field: FFmpeg only holds a native function pointer,
            // so a delegate stored in a local could be garbage-collected while still in use
            _logCallback = (p0, level, format, vl) =>
            {
                if (level > ffmpeg.av_log_get_level()) return;
                var lineSize = 1024;
                var lineBuffer = stackalloc byte[lineSize];
                var printPrefix = 1;
                ffmpeg.av_log_format_line(p0, level, format, vl, lineBuffer, lineSize, &printPrefix);
                var line = Marshal.PtrToStringAnsi((IntPtr)lineBuffer);
                Console.Write(line);
            };
            ffmpeg.av_log_set_callback(_logCallback);
        }

        #region Encoder
        /// <summary>
        /// Creates the encoder
        /// </summary>
        /// <param name="frameSize">Size of one raw frame before encoding</param>
        /// <param name="isRgb">true: RGB24 input; false: BGRA input</param>
        public void CreateEncoder(Size frameSize, bool isRgb = true)
        {
            _fFmpegEncoder = new FFmpegEncoder(frameSize, isRgb);
            _fFmpegEncoder.CreateEncoder(DefaultCodecFormat);
        }

        /// <summary>
        /// Encodes one frame
        /// </summary>
        /// <param name="frameBytes">Raw frame data</param>
        /// <returns>Encoded data</returns>
        public byte[] EncodeFrames(byte[] frameBytes)
        {
            return _fFmpegEncoder.EncodeFrames(frameBytes);
        }

        /// <summary>
        /// Releases the encoder
        /// </summary>
        public void DisposeEncoder()
        {
            _fFmpegEncoder?.Dispose();
        }
        #endregion

        #region Decoder
        /// <summary>
        /// Creates the decoder
        /// </summary>
        /// <param name="decodedFrameSize">Size of the decoded frame</param>
        /// <param name="isRgb">true: RGB24 output; false: BGRA output</param>
        public void CreateDecoder(Size decodedFrameSize, bool isRgb = true)
        {
            _fFmpegDecoder = new FFmpegDecoder(decodedFrameSize, isRgb);
            _fFmpegDecoder.CreateDecoder(DefaultCodecFormat);
        }

        /// <summary>
        /// Decodes one packet
        /// </summary>
        /// <param name="frameBytes">Encoded data</param>
        /// <returns>Decoded pixel data</returns>
        public byte[] DecodeFrames(byte[] frameBytes)
        {
            return _fFmpegDecoder.DecodeFrames(frameBytes);
        }

        /// <summary>
        /// Releases the decoder
        /// </summary>
        public void DisposeDecoder()
        {
            _fFmpegDecoder?.Dispose();
        }
        #endregion

        /// <summary>Encoder</summary>
        private FFmpegEncoder _fFmpegEncoder;
        /// <summary>Decoder</summary>
        private FFmpegDecoder _fFmpegDecoder;
        /// <summary>Log callback, kept alive for as long as FFmpeg may call it</summary>
        private static av_log_set_callback_callback _logCallback;
    }
}
```

Other supporting classes:

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

namespace FFmpegAnalyzer
{
    internal class FFmpegBinariesHelper
    {
        private const string LD_LIBRARY_PATH = "LD_LIBRARY_PATH";

        internal static void RegisterFFmpegBinaries()
        {
            switch (Environment.OSVersion.Platform)
            {
                case PlatformID.Win32NT:
                case PlatformID.Win32S:
                case PlatformID.Win32Windows:
                    // Look for FFmpeg/bin/x64 (or x86) in the output directory and each of its parents
                    var current = AppDomain.CurrentDomain.BaseDirectory;
                    var probe = $"FFmpeg/bin/{(Environment.Is64BitProcess ? @"x64" : @"x86")}";
                    while (current != null)
                    {
                        var ffmpegDirectory = Path.Combine(current, probe);
                        if (Directory.Exists(ffmpegDirectory))
                        {
                            Console.WriteLine($"FFmpeg binaries found in: {ffmpegDirectory}");
                            RegisterLibrariesSearchPath(ffmpegDirectory);
                            return;
                        }
                        current = Directory.GetParent(current)?.FullName;
                    }
                    break;
                case PlatformID.Unix:
                case PlatformID.MacOSX:
                    // The dynamic loader reads LD_LIBRARY_PATH when the process starts, so the FFmpeg
                    // libraries must already be on it (or in a system library directory);
                    // re-registering its current value here changes nothing.
                    var libraryPath = Environment.GetEnvironmentVariable(LD_LIBRARY_PATH);
                    RegisterLibrariesSearchPath(libraryPath);
                    break;
            }
        }

        private static void RegisterLibrariesSearchPath(string path)
        {
            switch (Environment.OSVersion.Platform)
            {
                case PlatformID.Win32NT:
                case PlatformID.Win32S:
                case PlatformID.Win32Windows:
                    // Add the directory to the DLL search path used by LoadLibrary
                    SetDllDirectory(path);
                    break;
                case PlatformID.Unix:
                case PlatformID.MacOSX:
                    string currentValue = Environment.GetEnvironmentVariable(LD_LIBRARY_PATH);
                    if (string.IsNullOrWhiteSpace(currentValue) == false && currentValue.Contains(path) == false)
                    {
                        string newValue = currentValue + Path.PathSeparator + path;
                        Environment.SetEnvironmentVariable(LD_LIBRARY_PATH, newValue);
                    }
                    break;
            }
        }

        // CharSet.Unicode binds to SetDllDirectoryW, so paths with non-ASCII characters work
        [DllImport("kernel32", SetLastError = true, CharSet = CharSet.Unicode)]
        private static extern bool SetDllDirectory(string lpPathName);
    }
}
```

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using FFmpeg.AutoGen;

namespace FFmpegAnalyzer
{
    /// <summary>
    /// Decoder
    /// </summary>
    internal unsafe class FFmpegDecoder
    {
        /// <param name="decodedFrameSize">Size of the decoded frame</param>
        /// <param name="isRgb">true: RGB24 output; false: BGRA output</param>
        public FFmpegDecoder(Size decodedFrameSize, bool isRgb = true)
        {
            _decodedFrameSize = decodedFrameSize;
            _isRgb = isRgb;
        }

        /// <summary>
        /// Creates the decoder
        /// </summary>
        /// <param name="codecFormat">Codec to decode</param>
        public void CreateDecoder(AVCodecID codecFormat)
        {
            var originPixelFormat = AVPixelFormat.AV_PIX_FMT_YUV420P;
            var destinationPixelFormat = _isRgb ? AVPixelFormat.AV_PIX_FMT_RGB24 : AVPixelFormat.AV_PIX_FMT_BGRA;

            // Find the decoder
            _pDecodec = ffmpeg.avcodec_find_decoder(codecFormat);
            if (_pDecodec == null) throw new InvalidOperationException("Codec not found.");
            _pDecodecContext = ffmpeg.avcodec_alloc_context3(_pDecodec);
            _pDecodecContext->width = _decodedFrameSize.Width;
            _pDecodecContext->height = _decodedFrameSize.Height;
            _pDecodecContext->time_base = new AVRational { num = 1, den = 30 };
            _pDecodecContext->pix_fmt = originPixelFormat;
            _pDecodecContext->framerate = new AVRational { num = 30, den = 1 };
            // Allow speed-ups that may not be spec-compliant. GOP size, B-frames, PSNR and
            // the x264 preset/tune are encoder settings and do not apply to a decoder.
            _pDecodecContext->flags2 |= ffmpeg.AV_CODEC_FLAG2_FAST;

            // Open the decoder
            if (ffmpeg.avcodec_open2(_pDecodecContext, _pDecodec, null) < 0)
                throw new InvalidOperationException("Could not open the decoder.");

            // YUV420P -> RGB24/BGRA converter
            _pConvertContext = ffmpeg.sws_getContext(_decodedFrameSize.Width, _decodedFrameSize.Height, originPixelFormat,
                _decodedFrameSize.Width, _decodedFrameSize.Height, destinationPixelFormat,
                ffmpeg.SWS_FAST_BILINEAR, null, null, null);
            if (_pConvertContext == null)
                throw new ApplicationException("Could not initialize the conversion context.");

            var convertedFrameBufferSize = ffmpeg.av_image_get_buffer_size(destinationPixelFormat, _decodedFrameSize.Width, _decodedFrameSize.Height, 1);
            _convertedFrameBufferPtr = Marshal.AllocHGlobal(convertedFrameBufferSize);
            _dstData = new byte_ptrArray4();
            _dstLineSize = new int_array4();
            ffmpeg.av_image_fill_arrays(ref _dstData, ref _dstLineSize, (byte*)_convertedFrameBufferPtr, destinationPixelFormat,
                _decodedFrameSize.Width, _decodedFrameSize.Height, 1);
            _isCodecRunning = true;
        }

        /// <summary>
        /// Decodes one packet
        /// </summary>
        /// <param name="frameBytes">Encoded data, e.g. one packet from FFmpegEncoder.EncodeFrames</param>
        /// <returns>RGB24/BGRA pixels, or null if the decoder needs more input before it can output a frame</returns>
        public byte[] DecodeFrames(byte[] frameBytes)
        {
            if (!_isCodecRunning)
            {
                throw new InvalidOperationException("Decoder is not running!");
            }

            var waitDecodePacket = ffmpeg.av_packet_alloc();
            var waitDecoderFrame = ffmpeg.av_frame_alloc();
            try
            {
                fixed (byte* waitDecodeData = frameBytes)
                {
                    waitDecodePacket->data = waitDecodeData;
                    waitDecodePacket->size = frameBytes.Length;
                    // Send the packet once; looping on EAGAIN would feed the same data to the decoder again
                    if (ffmpeg.avcodec_send_packet(_pDecodecContext, waitDecodePacket) < 0)
                        throw new InvalidOperationException("Could not send the packet to the decoder.");
                }

                var error = ffmpeg.avcodec_receive_frame(_pDecodecContext, waitDecoderFrame);
                if (error == ffmpeg.AVERROR(ffmpeg.EAGAIN)) return null;
                if (error < 0) throw new InvalidOperationException("Could not receive a frame from the decoder.");

                var decodeAfterFrame = ConvertToRgb(waitDecoderFrame);
                var length = _isRgb
                    ? decodeAfterFrame.height * decodeAfterFrame.width * 3
                    : decodeAfterFrame.height * decodeAfterFrame.width * 4;
                byte[] buffer = new byte[length];
                Marshal.Copy((IntPtr)decodeAfterFrame.data[0], buffer, 0, buffer.Length);
                return buffer;
            }
            finally
            {
                // The packet and frame are allocated per call, so free them every time
                ffmpeg.av_frame_free(&waitDecoderFrame);
                ffmpeg.av_packet_free(&waitDecodePacket);
            }
        }

        /// <summary>
        /// Releases the decoder
        /// </summary>
        public void Dispose()
        {
            if (!_isCodecRunning) return;
            _isCodecRunning = false;
            // Free the decoder
            var decodecContext = _pDecodecContext;
            ffmpeg.avcodec_free_context(&decodecContext);
            _pDecodecContext = null;
            // Free the converter
            Marshal.FreeHGlobal(_convertedFrameBufferPtr);
            ffmpeg.sws_freeContext(_pConvertContext);
        }

        /// <summary>
        /// Converts a decoded YUV420P frame to RGB24/BGRA
        /// </summary>
        /// <returns>A frame whose data points into the shared conversion buffer (overwritten by the next call)</returns>
        private AVFrame ConvertToRgb(AVFrame* waitDecoderFrame)
        {
            ffmpeg.sws_scale(_pConvertContext, waitDecoderFrame->data, waitDecoderFrame->linesize, 0, waitDecoderFrame->height, _dstData, _dstLineSize);
            var decodeAfterData = new byte_ptrArray8();
            decodeAfterData.UpdateFrom(_dstData);
            var lineSize = new int_array8();
            lineSize.UpdateFrom(_dstLineSize);
            ffmpeg.av_frame_unref(waitDecoderFrame);
            return new AVFrame
            {
                data = decodeAfterData,
                linesize = lineSize,
                width = _decodedFrameSize.Width,
                height = _decodedFrameSize.Height
            };
        }

        // Decoder
        private AVCodec* _pDecodec;
        private AVCodecContext* _pDecodecContext;
        // Conversion buffer
        private IntPtr _convertedFrameBufferPtr;
        private byte_ptrArray4 _dstData;
        private int_array4 _dstLineSize;
        // Pixel format converter
        private SwsContext* _pConvertContext;
        private Size _decodedFrameSize;
        private readonly bool _isRgb;
        // Whether the decoder is running
        private bool _isCodecRunning;
    }
}
```
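DecodeFrames returns a tightly packed buffer: because av_image_fill_arrays is called with align = 1, every row is exactly width * 3 bytes for RGB24 (width * 4 for BGRA), with no padding. A minimal sketch of reading one pixel from such a buffer, assuming the RGB24 case; ReadRgb24Pixel is a hypothetical helper, not part of the original code:

```csharp
using System;

// Hypothetical helper: read the (R, G, B) triple at (x, y) from a packed RGB24 buffer
// whose rows are exactly width * 3 bytes long (no row padding, as with align = 1).
static (byte R, byte G, byte B) ReadRgb24Pixel(byte[] buffer, int width, int x, int y)
{
    const int bytesPerPixel = 3;
    if (x < 0 || x >= width || y < 0 || (y * width + x + 1) * bytesPerPixel > buffer.Length)
        throw new ArgumentOutOfRangeException(nameof(x), $"({x}, {y}) lies outside the frame.");
    int offset = (y * width + x) * bytesPerPixel;
    return (buffer[offset], buffer[offset + 1], buffer[offset + 2]);
}

// A 2x2 frame: red, green / blue, white
var frame = new byte[]
{
    255, 0, 0,   0, 255, 0,
    0, 0, 255,   255, 255, 255,
};
Console.WriteLine(ReadRgb24Pixel(frame, 2, 0, 1)); // (0, 0, 255)
```

For BGRA output the same indexing applies with 4 bytes per pixel and the channel order B, G, R, A.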

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using FFmpeg.AutoGen;

namespace FFmpegAnalyzer
{
    /// <summary>
    /// Encoder
    /// </summary>
    internal unsafe class FFmpegEncoder
    {
        /// <param name="frameSize">Size of one raw frame before encoding</param>
        /// <param name="isRgb">true: RGB24 input; false: BGRA input</param>
        public FFmpegEncoder(Size frameSize, bool isRgb = true)
        {
            _frameSize = frameSize;
            _isRgb = isRgb;
            _rowPitch = isRgb ? _frameSize.Width * 3 : _frameSize.Width * 4;
        }

        /// <summary>
        /// Creates the encoder
        /// </summary>
        public void CreateEncoder(AVCodecID codecFormat)
        {
            var originPixelFormat = _isRgb ? AVPixelFormat.AV_PIX_FMT_RGB24 : AVPixelFormat.AV_PIX_FMT_BGRA;
            var destinationPixelFormat = AVPixelFormat.AV_PIX_FMT_YUV420P;
            // For H.264 this is typically libx264, which the FFmpeg build must include
            _pCodec = ffmpeg.avcodec_find_encoder(codecFormat);
            if (_pCodec == null)
                throw new InvalidOperationException("Codec not found.");
            _pCodecContext = ffmpeg.avcodec_alloc_context3(_pCodec);
            _pCodecContext->width = _frameSize.Width;
            _pCodecContext->height = _frameSize.Height;
            _pCodecContext->framerate = new AVRational { num = 30, den = 1 };
            _pCodecContext->time_base = new AVRational { num = 1, den = 30 };
            _pCodecContext->gop_size = 30;    // at most 30 frames between keyframes
            _pCodecContext->pix_fmt = destinationPixelFormat;
            // Compute PSNR statistics and allow speed-ups that may not be spec-compliant
            _pCodecContext->flags |= ffmpeg.AV_CODEC_FLAG_PSNR;
            _pCodecContext->flags2 |= ffmpeg.AV_CODEC_FLAG2_FAST;
            _pCodecContext->max_b_frames = 0; // no B-frames: packets come out in input order
            // libx264 private options: fast preset and zero-latency tuning (no frame buffering)
            ffmpeg.av_opt_set(_pCodecContext->priv_data, "preset", "veryfast", 0);
            ffmpeg.av_opt_set(_pCodecContext->priv_data, "tune", "zerolatency", 0);
            // Open the encoder
            if (ffmpeg.avcodec_open2(_pCodecContext, _pCodec, null) < 0)
                throw new InvalidOperationException("Could not open the encoder.");

            // RGB24/BGRA -> YUV420P converter
            _pConvertContext = ffmpeg.sws_getContext(_frameSize.Width, _frameSize.Height, originPixelFormat,
                _frameSize.Width, _frameSize.Height, destinationPixelFormat,
                ffmpeg.SWS_FAST_BILINEAR, null, null, null);
            if (_pConvertContext == null)
                throw new ApplicationException("Could not initialize the conversion context.");
            var convertedFrameBufferSize = ffmpeg.av_image_get_buffer_size(destinationPixelFormat, _frameSize.Width, _frameSize.Height, 1);
            _convertedFrameBufferPtr = Marshal.AllocHGlobal(convertedFrameBufferSize);
            _dstData = new byte_ptrArray4();
            _dstLineSize = new int_array4();
            ffmpeg.av_image_fill_arrays(ref _dstData, ref _dstLineSize, (byte*)_convertedFrameBufferPtr, destinationPixelFormat,
                _frameSize.Width, _frameSize.Height, 1);
            _isCodecRunning = true;
        }

        /// <summary>
        /// Releases the encoder
        /// </summary>
        public void Dispose()
        {
            if (!_isCodecRunning) return;
            _isCodecRunning = false;
            // Free the encoder
            var codecContext = _pCodecContext;
            ffmpeg.avcodec_free_context(&codecContext);
            _pCodecContext = null;
            // Free the converter
            Marshal.FreeHGlobal(_convertedFrameBufferPtr);
            ffmpeg.sws_freeContext(_pConvertContext);
        }

        /// <summary>
        /// Encodes one frame
        /// </summary>
        /// <param name="frameBytes">Raw RGB24/BGRA pixels, height * width * 3 (or 4) bytes</param>
        /// <returns>The encoded packet, or null if the encoder buffered the frame without output</returns>
        public byte[] EncodeFrames(byte[] frameBytes)
        {
            if (!_isCodecRunning)
            {
                throw new InvalidOperationException("Encoder is not running!");
            }
            fixed (byte* pBitmapData = frameBytes)
            {
                var waitToYuvFrame = new AVFrame
                {
                    data = new byte_ptrArray8 { [0] = pBitmapData },
                    linesize = new int_array8 { [0] = _rowPitch },
                    height = _frameSize.Height
                };
                var rgbToYuv = ConvertToYuv(waitToYuvFrame, _frameSize.Width, _frameSize.Height);
                // Give every frame an increasing timestamp in time_base units (1/30 s)
                rgbToYuv.pts = _frameIndex++;
                var pPacket = ffmpeg.av_packet_alloc();
                try
                {
                    // Send the frame once; looping on EAGAIN would encode the same frame again
                    if (ffmpeg.avcodec_send_frame(_pCodecContext, &rgbToYuv) < 0)
                        throw new InvalidOperationException("Could not send the frame to the encoder.");
                    var error = ffmpeg.avcodec_receive_packet(_pCodecContext, pPacket);
                    if (error == ffmpeg.AVERROR(ffmpeg.EAGAIN)) return null;
                    if (error < 0) throw new InvalidOperationException("Could not receive a packet from the encoder.");
                    var buffer = new byte[pPacket->size];
                    Marshal.Copy(new IntPtr(pPacket->data), buffer, 0, pPacket->size);
                    return buffer;
                }
                finally
                {
                    ffmpeg.av_packet_free(&pPacket);
                }
            }
        }

        /// <summary>
        /// Converts an RGB24/BGRA frame to YUV420P
        /// </summary>
        /// <returns>A frame whose data points into the shared conversion buffer (overwritten by the next call)</returns>
        private AVFrame ConvertToYuv(AVFrame waitConvertYuvFrame, int width, int height)
        {
            ffmpeg.sws_scale(_pConvertContext, waitConvertYuvFrame.data, waitConvertYuvFrame.linesize, 0, waitConvertYuvFrame.height, _dstData, _dstLineSize);
            var data = new byte_ptrArray8();
            data.UpdateFrom(_dstData);
            var lineSize = new int_array8();
            lineSize.UpdateFrom(_dstLineSize);
            return new AVFrame
            {
                data = data,
                linesize = lineSize,
                width = width,
                height = height,
                format = (int)AVPixelFormat.AV_PIX_FMT_YUV420P
            };
        }

        // Encoder
        private AVCodec* _pCodec;
        private AVCodecContext* _pCodecContext;
        // Conversion buffer
        private IntPtr _convertedFrameBufferPtr;
        private byte_ptrArray4 _dstData;
        private int_array4 _dstLineSize;
        // Pixel format converter
        private SwsContext* _pConvertContext;
        private Size _frameSize;
        private readonly int _rowPitch;
        private readonly bool _isRgb;
        // pts of the next frame, in time_base units
        private long _frameIndex;
        // Whether the encoder is running
        private bool _isCodecRunning;
    }
}
```
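Both classes size their conversion buffers with av_image_get_buffer_size(..., 1). For the encoder's YUV420P target, that is a full-resolution Y plane followed by two chroma planes of ceil(width/2) x ceil(height/2), so a 1920x1080 RGB24 input shrinks to half its size before compression even starts. A plain C# sketch of that arithmetic, assuming align = 1 as in the code above; Yuv420pLayout is a hypothetical helper, and FFmpeg's own functions remain the authority:

```csharp
using System;

// Hypothetical helper mirroring the YUV420P layout FFmpeg produces with align = 1:
// Y is width x height; U and V are each ceil(width/2) x ceil(height/2).
static (int YSize, int ChromaSize, int Total, int[] Offsets) Yuv420pLayout(int width, int height)
{
    int chromaWidth = (width + 1) / 2;   // also the linesize of the U and V planes
    int chromaHeight = (height + 1) / 2;
    int ySize = width * height;
    int chromaSize = chromaWidth * chromaHeight;
    // data[0] starts the buffer, data[1] (U) follows Y, data[2] (V) follows U
    var offsets = new[] { 0, ySize, ySize + chromaSize };
    return (ySize, chromaSize, ySize + 2 * chromaSize, offsets);
}

var layout = Yuv420pLayout(1920, 1080);
Console.WriteLine($"Y={layout.YSize} U=V={layout.ChromaSize} total={layout.Total}");
// Y=2073600 U=V=518400 total=3110400
Console.WriteLine($"RGB24 input: {1920 * 1080 * 3} bytes");
// RGB24 input: 6220800 bytes
```

The rounding up matters only for odd dimensions; libx264 generally expects even width and height for 4:2:0 input, as with 1920x1080 here.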

```csharp
using System;
using System.Drawing;
using System.Runtime.InteropServices;
using FFmpeg.AutoGen;

namespace FFmpegAnalyzer
{
    public sealed unsafe class VideoFrameConverter : IDisposable
    {
        private readonly IntPtr _convertedFrameBufferPtr;
        private readonly Size _destinationSize;
        private readonly byte_ptrArray4 _dstData;
        private readonly int_array4 _dstLinesize;
        private readonly SwsContext* _pConvertContext;

        /// <summary>
        /// Frame format converter
        /// </summary>
        /// <param name="sourceSize">Size of the source frames</param>
        /// <param name="sourcePixelFormat">Pixel format of the source frames</param>
        /// <param name="destinationSize">Size of the converted frames</param>
        /// <param name="destinationPixelFormat">Pixel format of the converted frames</param>
        public VideoFrameConverter(Size sourceSize, AVPixelFormat sourcePixelFormat,
            Size destinationSize, AVPixelFormat destinationPixelFormat)
        {
            _destinationSize = destinationSize;
            // Allocate an SwsContext; sws_scale() needs it to scale/convert frames.
            // All conversion in this class goes through this context.
            _pConvertContext = ffmpeg.sws_getContext(sourceSize.Width, sourceSize.Height, sourcePixelFormat,
                destinationSize.Width, destinationSize.Height, destinationPixelFormat,
                ffmpeg.SWS_FAST_BILINEAR, // scaling algorithm; others include SWS_BILINEAR and SWS_BICUBIC
                null, null, null);        // source filter, destination filter and extra parameters;
                                          // the parameters only tune algorithms such as SWS_BICUBIC,
                                          // SWS_GAUSS and SWS_LANCZOS, and are unused here
            if (_pConvertContext == null) throw new ApplicationException("Could not initialize the conversion context.");
            // Size in bytes of one converted frame
            var convertedFrameBufferSize = ffmpeg.av_image_get_buffer_size(destinationPixelFormat, destinationSize.Width, destinationSize.Height, 1);
            // Allocate unmanaged memory for the converted frame
            _convertedFrameBufferPtr = Marshal.AllocHGlobal(convertedFrameBufferSize);
            // Plane pointers and line sizes of the converted frame
            _dstData = new byte_ptrArray4();
            _dstLinesize = new int_array4();
            // Point the plane pointers in _dstData into _convertedFrameBufferPtr
            ffmpeg.av_image_fill_arrays(ref _dstData, ref _dstLinesize, (byte*)_convertedFrameBufferPtr, destinationPixelFormat,
                destinationSize.Width, destinationSize.Height, 1);
        }

        public void Dispose()
        {
            Marshal.FreeHGlobal(_convertedFrameBufferPtr);
            ffmpeg.sws_freeContext(_pConvertContext);
        }

        /// <summary>
        /// Converts a frame. The returned frame points into this converter's buffer
        /// and is overwritten by the next call.
        /// </summary>
        public AVFrame Convert(AVFrame sourceFrame)
        {
            // Convert the pixel format (and scale if the sizes differ)
            ffmpeg.sws_scale(_pConvertContext, sourceFrame.data, sourceFrame.linesize, 0, sourceFrame.height, _dstData, _dstLinesize);
            var data = new byte_ptrArray8();
            data.UpdateFrom(_dstData);
            var linesize = new int_array8();
            linesize.UpdateFrom(_dstLinesize);
            return new AVFrame
            {
                data = data,
                linesize = linesize,
                width = _destinationSize.Width,
                height = _destinationSize.Height
            };
        }
    }
}
```

```csharp
using System;
using System.Collections.Generic;
using System.Drawing;
using System.Runtime.InteropServices;
using FFmpeg.AutoGen;

namespace FFmpegAnalyzer
{
    public sealed unsafe class VideoStreamDecoder : IDisposable
    {
        private readonly AVCodecContext* _pCodecContext;
        private readonly AVFormatContext* _pFormatContext;
        private readonly int _streamIndex;
        private readonly AVFrame* _pFrame;
        private readonly AVFrame* _receivedFrame;
        private readonly AVPacket* _pPacket;

        /// <summary>
        /// Video decoder
        /// </summary>
        /// <param name="url">URL of the video stream</param>
        /// <param name="HWDeviceType">Hardware decoder type (default AVHWDeviceType.AV_HWDEVICE_TYPE_NONE)</param>
        public VideoStreamDecoder(string url, AVHWDeviceType HWDeviceType = AVHWDeviceType.AV_HWDEVICE_TYPE_NONE)
        {
            // Allocate an AVFormatContext
            _pFormatContext = ffmpeg.avformat_alloc_context();
            // Allocate the AVFrame that receives frames copied back from the hardware decoder
            _receivedFrame = ffmpeg.av_frame_alloc();
            var pFormatContext = _pFormatContext;
            // Open the source stream
            if (ffmpeg.avformat_open_input(&pFormatContext, url, null, null) < 0)
                throw new InvalidOperationException($"Could not open {url}.");
            // Read the stream information
            ffmpeg.avformat_find_stream_info(_pFormatContext, null);
            AVCodec* codec = null;
            // Find the best video stream; since decoder_ret (&codec) is non-null, the matching decoder is returned in codec
            _streamIndex = ffmpeg.av_find_best_stream(_pFormatContext, AVMediaType.AVMEDIA_TYPE_VIDEO, -1, -1, &codec, 0);
            if (_streamIndex < 0)
                throw new InvalidOperationException("No video stream found.");
            // Allocate an AVCodecContext for the decoder; it is not initialized yet
            _pCodecContext = ffmpeg.avcodec_alloc_context3(codec);
            // Hardware decoding
            if (HWDeviceType != AVHWDeviceType.AV_HWDEVICE_TYPE_NONE)
            {
                // Create an AVHWDeviceContext for the hardware type and store it in AVCodecContext.hw_device_ctx
                ffmpeg.av_hwdevice_ctx_create(&_pCodecContext->hw_device_ctx, HWDeviceType, null, null, 0);
            }
            // Copy the chosen stream's codec parameters into the codec context
            ffmpeg.avcodec_parameters_to_context(_pCodecContext, _pFormatContext->streams[_streamIndex]->codecpar);
            // Initialize the codec context with the codec (pairs with avcodec_alloc_context3 above)
            if (ffmpeg.avcodec_open2(_pCodecContext, codec, null) < 0)
                throw new InvalidOperationException("Could not open the decoder.");
            CodecName = ffmpeg.avcodec_get_name(codec->id);
            FrameSize = new Size(_pCodecContext->width, _pCodecContext->height);
            PixelFormat = _pCodecContext->pix_fmt;
            // Allocate the AVPacket.
            /* An AVPacket stores compressed data: audio, video or subtitles. It usually holds data
               produced by demuxing that is then fed to a decoder, or data output by an encoder that
               is passed to a muxer. For video, one AVPacket usually contains one frame; for audio,
               it may contain several compressed frames. */
            _pPacket = ffmpeg.av_packet_alloc();
            // Allocate the AVFrame.
            /* An AVFrame stores decoded (raw) audio or video data. It must be allocated with
               av_frame_alloc and freed with av_frame_free. */
            _pFrame = ffmpeg.av_frame_alloc();
        }

        public string CodecName { get; }
        public Size FrameSize { get; }
        public AVPixelFormat PixelFormat { get; }

        public void Dispose()
        {
            var pFrame = _pFrame;
            ffmpeg.av_frame_free(&pFrame);
            var receivedFrame = _receivedFrame;
            ffmpeg.av_frame_free(&receivedFrame);
            var pPacket = _pPacket;
            ffmpeg.av_packet_free(&pPacket);
            var pCodecContext = _pCodecContext;
            ffmpeg.avcodec_free_context(&pCodecContext);
            var pFormatContext = _pFormatContext;
            ffmpeg.avformat_close_input(&pFormatContext);
        }

        /// <summary>
        /// Decodes the next frame
        /// </summary>
        /// <param name="frame">Receives the decoded frame</param>
        /// <returns>false at the end of the stream</returns>
        public bool TryDecodeNextFrame(out AVFrame frame)
        {
            // Drop the references held by the frames from the previous call
            ffmpeg.av_frame_unref(_pFrame);
            ffmpeg.av_frame_unref(_receivedFrame);
            int error;
            do
            {
                try
                {
                    #region Read packets, skipping other streams
                    do
                    {
                        // Release the previous packet (e.g. one from another stream) before reading the next
                        ffmpeg.av_packet_unref(_pPacket);
                        // Read the next packet from _pFormatContext into _pPacket
                        error = ffmpeg.av_read_frame(_pFormatContext, _pPacket);
                        if (error == ffmpeg.AVERROR_EOF) // end of the stream
                        {
                            frame = *_pFrame;
                            return false;
                        }
                    } while (_pPacket->stream_index != _streamIndex); // skip packets not belonging to the stream chosen in the constructor
                    #endregion
                    // Feed the compressed packet (_pPacket) to the decoder (_pCodecContext)
                    ffmpeg.avcodec_send_packet(_pCodecContext, _pPacket);
                }
                finally
                {
                    // Release the packet's data
                    ffmpeg.av_packet_unref(_pPacket);
                }
                // Fetch a decoded frame from the decoder into _pFrame
                error = ffmpeg.avcodec_receive_frame(_pCodecContext, _pFrame);
            } while (error == ffmpeg.AVERROR(ffmpeg.EAGAIN)); // EAGAIN: the decoder needs more packets

            if (_pCodecContext->hw_device_ctx != null) // hardware decoding is configured
            {
                // Copy the frame from GPU memory into _receivedFrame in system memory
                ffmpeg.av_hwframe_transfer_data(_receivedFrame, _pFrame, 0);
                frame = *_receivedFrame;
            }
            else
            {
                frame = *_pFrame;
            }
            return true;
        }

        /// <summary>
        /// Returns the media metadata tags
        /// </summary>
        public IReadOnlyDictionary<string, string> GetContextInfo()
        {
            AVDictionaryEntry* tag = null;
            var result = new Dictionary<string, string>();
            while ((tag = ffmpeg.av_dict_get(_pFormatContext->metadata, "", tag, ffmpeg.AV_DICT_IGNORE_SUFFIX)) != null)
            {
                var key = Marshal.PtrToStringAnsi((IntPtr)tag->key);
                var value = Marshal.PtrToStringAnsi((IntPtr)tag->value);
                result.Add(key, value);
            }
            return result;
        }
    }
}
```

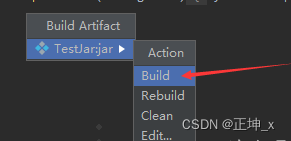

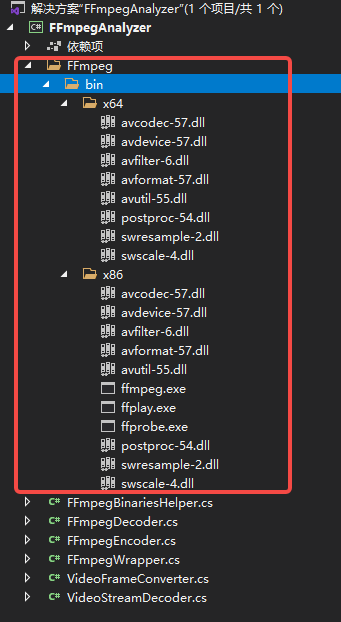

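The FFmpeg libraries must be copied into the build output directory, under the path that FFmpegBinariesHelper.RegisterFFmpegBinaries() probes (FFmpeg/bin/x64 or FFmpeg/bin/x86, searched from the output directory upward).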
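When the libraries are not found, it helps to see exactly which directories the Windows branch of RegisterFFmpegBinaries() checks. A small diagnostic sketch, assuming the same FFmpeg/bin/x64 or x86 probe path; ListFFmpegProbeCandidates is a hypothetical helper, not part of the original code:

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical diagnostic: list every directory the probe loop would check,
// from startDirectory up to the filesystem root, and whether each one exists.
static List<(string Path, bool Exists)> ListFFmpegProbeCandidates(string startDirectory, bool is64Bit)
{
    var probe = Path.Combine("FFmpeg", "bin", is64Bit ? "x64" : "x86");
    var candidates = new List<(string Path, bool Exists)>();
    for (var dir = new DirectoryInfo(startDirectory); dir != null; dir = dir.Parent)
    {
        var candidate = Path.Combine(dir.FullName, probe);
        candidates.Add((candidate, Directory.Exists(candidate)));
    }
    return candidates;
}

foreach (var (path, exists) in ListFFmpegProbeCandidates(AppDomain.CurrentDomain.BaseDirectory, Environment.Is64BitProcess))
    Console.WriteLine($"{(exists ? "found  " : "missing")} {path}");
```

The first "found" line is the directory the helper would pass to SetDllDirectory.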

Usage:

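```csharp
// Load and register the FFmpeg libraries once per process
FFmpegWrapper.RegisterFFmpeg();

// Encoder for 1920x1080 RGB24 frames
_ffMpegWrapper = new FFmpegWrapper();
_ffMpegWrapper.CreateEncoder(new System.Drawing.Size(1920, 1080), true);

// A second wrapper instance acts as the decoder
_ffMpegWrapper1 = new FFmpegWrapper();
_ffMpegWrapper1.CreateDecoder(new System.Drawing.Size(1920, 1080), true);

// Data: one raw RGB24 frame, 1920 * 1080 * 3 bytes
var encodeFrames = _ffMpegWrapper.EncodeFrames(Data);
// decodeFrames: the decoded RGB24 pixels, 1920 * 1080 * 3 bytes
var decodeFrames = _ffMpegWrapper1.DecodeFrames(encodeFrames);

// Release the native resources when finished
_ffMpegWrapper.DisposeEncoder();
_ffMpegWrapper1.DisposeDecoder();
```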
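`Data` is not defined in the usage snippet; it has to hold one raw RGB24 frame of 1920 * 1080 * 3 bytes. Because H.264 is lossy, the decoded bytes will not match the input exactly, so a round trip is better checked against a tolerance than for equality. A minimal sketch of both pieces, assuming RGB24 input; CreateGradientFrame and MeanAbsoluteError are hypothetical helpers, and what error counts as acceptable is up to the application:

```csharp
using System;

// Hypothetical test-frame generator: a packed RGB24 gradient (width * height * 3 bytes).
static byte[] CreateGradientFrame(int width, int height)
{
    var frame = new byte[width * height * 3];
    for (int y = 0; y < height; y++)
    {
        for (int x = 0; x < width; x++)
        {
            int i = (y * width + x) * 3;
            frame[i] = (byte)(x * 255 / Math.Max(1, width - 1));      // R: ramps left to right
            frame[i + 1] = (byte)(y * 255 / Math.Max(1, height - 1)); // G: ramps top to bottom
            frame[i + 2] = 128;                                        // B: constant
        }
    }
    return frame;
}

// Hypothetical check: mean absolute difference per byte between two frames of equal size.
static double MeanAbsoluteError(byte[] expected, byte[] actual)
{
    if (expected.Length != actual.Length)
        throw new ArgumentException("Frames must have the same size.");
    long sum = 0;
    for (int i = 0; i < expected.Length; i++)
        sum += Math.Abs(expected[i] - actual[i]);
    return expected.Length == 0 ? 0 : (double)sum / expected.Length;
}

var data = CreateGradientFrame(1920, 1080);
Console.WriteLine(data.Length); // 6220800
// After a round trip, MeanAbsoluteError(data, decodeFrames) is expected to be small but non-zero.
```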