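lsblk (list block devices): lists the system's block devices (disks and partitions)

df (disk free): reports overall disk usage for each file system

du (disk usage): reports the disk space used by files and directories

fdisk: partitions a disk (creates partitions)

mkfs: builds a file system on a partition (formatting)

mount: mounts a partition (attaches it to the directory tree so it can be used)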

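I. lsblk

Lists the block devices attached to the system.

Syntax: lsblk [options]

Examples:

lsblk #show disk information

#Add a 20 GB SATA disk and a 30 GB NVMe disk

#Note: shut down the virtual machine before adding disks. After adding the two disks above, boot it and check the disk information again

# sda: the first SATA (SCSI-type) disk; a second one would be sdb, a third sdc, and so on.

# sr0: the first optical drive; sr1 would be the second

# nvme0n1: the first NVMe disk; nvme0n2 is the second NVMe disk on the same controller (a disk on a second controller would appear as nvme1n1)

lsblk -m #show owner, group, and permission information for the devices

lsblk -nl #list format, without the header line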
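
The naming scheme described above can be sketched as a small shell function. This is only an illustration: the function name `describe_dev` is hypothetical, and it covers just the three name families mentioned here (sdX, srN, nvmeXnY), assuming the usual Linux convention that NVMe partitions carry a `p` suffix.

```shell
#!/bin/sh
# describe_dev NAME: classify a block device name (hypothetical helper).
# Only the sdX, srN and nvmeXnY families from the notes are recognized.
describe_dev() {
    name=${1#/dev/}                      # accept "sda1" or "/dev/sda1"
    case "$name" in
        nvme[0-9]*n[0-9]*p[0-9]*)
            # NVMe partitions are named <disk>p<number>
            echo "NVMe partition: disk ${name%p*}, partition ${name##*p}" ;;
        nvme[0-9]*n[0-9]*)
            echo "NVMe disk: $name" ;;
        sd[a-z]*[0-9])
            disk=${name%%[0-9]*}         # strip the trailing partition number
            echo "SCSI/SATA partition: disk $disk, partition ${name#"$disk"}" ;;
        sd[a-z]*)
            echo "SCSI/SATA disk: $name" ;;
        sr[0-9]*)
            echo "optical drive: $name" ;;
        *)
            echo "unrecognized: $name" ;;
    esac
}

for dev in sda sda1 sr0 nvme0n1 nvme0n1p2; do
    describe_dev "$dev"
done
```

The patterns are checked from most to least specific, so a partition name is never mistaken for a whole disk.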

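II. The df command (disk usage per file system)

Shows, for each mounted file system, how much space is used and how much is still free.

Syntax: df [options] [directory or file name]

Examples:

df #list all mounted file systems

df -h #show sizes in human-readable units

df -aT #list all file systems, including pseudo file systems, together with their types

df -h /etc #show the available space on the file system that holds /etc, in human-readable units

df -T #show the file system types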
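
A common use of df is checking whether a file system is nearly full. The sketch below assumes a POSIX-compatible df (the `-P` option) and uses a hypothetical helper name, `usage_pct`, and an arbitrary threshold of 90%.

```shell
#!/bin/sh
# usage_pct PATH: print the use percentage (without the % sign) of the
# file system that holds PATH. Hypothetical helper for illustration.
usage_pct() {
    # -P forces one line per file system; field 5 is the "Capacity" column.
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

threshold=90                  # assumed alert threshold, adjust as needed
pct=$(usage_pct /)

if [ "$pct" -ge "$threshold" ]; then
    echo "WARNING: / is ${pct}% full"
else
    echo "/ is ${pct}% full"
fi
```

The same function works for any path, for example `usage_pct /etc`, because df resolves the path to the file system that contains it.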

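III. The du command (space used by files and directories)

Reports the disk space used by files and directories.

Syntax: du [options] [file or directory]

Examples:

du #list the size of every directory under the current directory, recursively (hidden directories included)

du -h test #show the space used by the test directory in human-readable units

du -a #also list the size of individual files

du -sm /* #show the total size, in MB, of each entry directly under the root directory

du log2012.log #show the space used by the given file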
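
Combining du with sort is a quick way to find what is taking up space. The following sketch builds a throwaway directory tree first so that it runs anywhere; the directory and file names (`big`, `small`, `data.bin`, `note.txt`) are made up for the example.

```shell
#!/bin/sh
# Build a small temporary tree so the example is self-contained.
tmp=$(mktemp -d)
mkdir "$tmp/big" "$tmp/small"
dd if=/dev/urandom of="$tmp/big/data.bin" bs=1024 count=1024 2>/dev/null  # 1 MiB
echo hi > "$tmp/small/note.txt"

# -s: one total per argument, -k: sizes in KiB; sort puts the largest first.
du -sk "$tmp"/* | sort -rn

# Keep only the path of the largest entry.
largest=$(du -sk "$tmp"/* | sort -rn | head -n 1 | awk '{ print $2 }')
echo "largest: $largest"

rm -rf "$tmp"
```

On a real system the same pipeline applied to a directory such as /var (for example `du -sk /var/* | sort -rn | head`) lists its largest subdirectories.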

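IV. fdisk

A disk partitioning tool.

Syntax: fdisk [-l] device

Examples:

fdisk -lu #list the partition tables of all disks, with sizes in sectors

fdisk /dev/sda #open /dev/sda (here, the disk that holds the root file system) in interactive mode to inspect or edit its partitions

Common interactive commands:

d delete a partition

F list unpartitioned free space

l list the known partition types

n add a new partition

p print the partition table

t change a partition's type

v verify the partition table

i print information about a partition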

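Partitioning walkthrough:

1. Run the following command to list the disks and find the data disk

fdisk -lu

Locate the /dev/sda device in the output.

Next, partition this device:

fdisk -u /dev/sda

2. Enter p to view the data disk's current partitions

In this example the data disk has no partitions yet.

3. Enter n to create a new partition

4. Enter p to make it a primary partition

Note: a data disk with a single partition needs only a primary partition (an MBR disk allows at most four primary partitions). To create more than four partitions, choose e (extended) at least once to create an extended partition.

5. Enter the partition number and press Enter

In this example only one partition is created, so press Enter to accept the default, 1.

6. Enter the first sector and press Enter

In this example, press Enter to accept the default, 2048.

7. Enter the last sector and press Enter

In this example only one partition is created, so press Enter to accept the default (the end of the disk).

If you make a mistake, enter d to delete the partition.

When re-creating the partition, enter +10G as the last sector.

The partition is then 10 GB in size,

leaving 10 GB of the disk unallocated.

8. Allocate the remaining space to an extended partition (n, then e)

9. Enter p to review the partition table

10. Enter w to write the changes and exit

11. Check the result: lsblk or fdisk -l /dev/sda

On Linux an extended partition cannot be formatted directly, because it is only a container. Create one or more logical partitions inside it first, then format those logical partitions.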
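
The +10G entry used when re-creating the partition can be checked with a little arithmetic. The sketch assumes 512-byte sectors and the default first sector of 2048 from the walkthrough; fdisk interprets the G suffix as GiB (2^30 bytes).

```shell
#!/bin/sh
# Assumptions: 512-byte sectors, first sector 2048, requested size +10G.
sector_size=512
first_sector=2048
size_bytes=$((10 * 1024 * 1024 * 1024))      # +10G means 10 GiB

sectors=$((size_bytes / sector_size))        # sectors in the partition
last_sector=$((first_sector + sectors - 1))  # the range is inclusive

echo "sectors=$sectors last_sector=$last_sector"
```

With these assumptions the partition spans sectors 2048 through 20973567, which is what the p command should report in its Start and End columns.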

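V. Formatting with mkfs

Once the disk is partitioned, the next step is to create a file system on the partition. The command for this is mkfs (make filesystem).

Syntax: mkfs [-t fstype] device

Option: -t selects the file system type, for example ext2, ext3, ext4, xfs, or vfat (only types the system supports will work)

Examples:

mkfs -t ext4 /dev/sda1 #format the sda1 partition created above as ext4

Further examples. Note that they target the whole disk /dev/sda, which overwrites its partition table; point them at a partition such as /dev/sda1 to keep the partitions:

mkfs.ext4 -c /dev/sda #check the device for bad blocks, then format it as ext4

mkfs.xfs -f /dev/sda #force formatting as XFS, overwriting any existing file system

mkfs.ext4 -m 5 /dev/sda #format as ext4, reserving 5% of the blocks for the superuser

mkfs.ext4 -L 'testflag' -b 2048 /dev/sda #format with the volume label testflag and a block size of 2048 bytes

Running ls -l /dev/sda* shows that every partition is itself a block device.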

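VI. The mount command

On Linux, disks are mounted with mount and unmounted with umount.

Mount syntax:

mount [-t fstype] [-L label] [-o options] [-n] device mountpoint

Unmount command:

umount syntax: umount [-fn] device-or-mountpoint

-f: force the unmount

-n: do not update /etc/mtab

Examples:

mount /dev/sda1 /mnt/linux #mount /dev/sda1 on /mnt/linux; files written under /mnt/linux are then stored on /dev/sda1

/dev/sda1 is now mounted on /mnt/linux, with 1% of its space in use.

umount /mnt/linux #unmount /mnt/linux (after unmounting, the files disappear from the mount point, but they remain stored on the sda1 partition and reappear the next time it is mounted)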

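The mounts above last only until the next reboot. To make a mount permanent, there are several options:

1. Add the mount to /etc/fstab (not recommended)

/dev/sda1 /mnt/linux ext4 defaults 0 0

Fields: device, mount point, file system type, mount options, dump flag, fsck order

#Note: a mistake in /etc/fstab can prevent the server from booting normally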
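
Because a malformed line in /etc/fstab is risky, it can help to sanity-check an entry before saving it. The sketch below only verifies that an entry has the six whitespace-separated fields and labels them; the function name `describe_fstab_entry` is hypothetical, and the check does not validate the device, the options, or the mount point.

```shell
#!/bin/sh
# describe_fstab_entry LINE: split one fstab entry into its six fields.
# Hypothetical helper; it checks the field count only.
describe_fstab_entry() {
    set -f              # disable globbing while word-splitting the entry
    set -- $1           # the fields become $1..$6 inside this function
    set +f
    if [ "$#" -ne 6 ]; then
        echo "expected 6 fields, got $#" >&2
        return 1
    fi
    printf 'device=%s\nmountpoint=%s\ntype=%s\noptions=%s\ndump=%s\nfsck_pass=%s\n' "$@"
}

describe_fstab_entry '/dev/sda1 /mnt/linux ext4 defaults 0 0'
```

An entry with a missing field, such as `/dev/sda1 /mnt/linux ext4`, makes the function print an error and return a non-zero status.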

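2. Put the mount command in /etc/rc.local and make the file executable, so that it runs at every boot (recommended)

If the command is mistyped, the mount simply fails and the server still boots.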

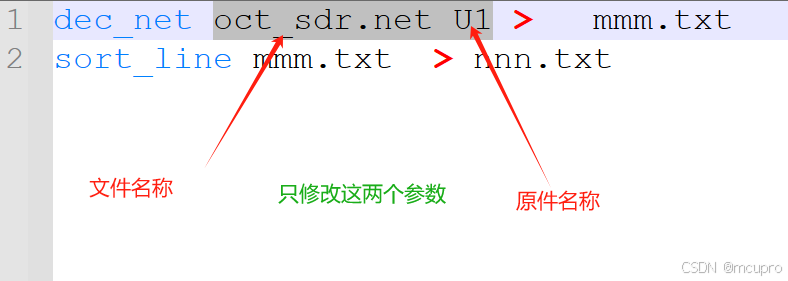

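#Edit either of these two files (on RHEL-family systems /etc/rc.local is normally a symbolic link to /etc/rc.d/rc.local):

vim /etc/rc.local

vim /etc/rc.d/rc.local

#Add the mount command

mount -t ext4 /dev/sda1 /mnt/linux

#Make /etc/rc.d/rc.local executable so that its commands run at every boot

chmod +x /etc/rc.d/rc.local

After a reboot the partition is mounted automatically.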
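
If rc.local can run more than once, it may be worth checking whether the mount point is already in use before calling mount. A minimal sketch, assuming a Linux system with /proc/mounts and using a hypothetical helper name, `is_mounted`:

```shell
#!/bin/sh
# is_mounted DIR: succeed if DIR is listed as a mount point in /proc/mounts.
# Hypothetical helper; paths containing spaces appear escaped (\040) there.
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' /proc/mounts
}

# In rc.local the check would guard the real command. It needs root, so it
# is shown here as a comment only:
#   is_mounted /mnt/linux || mount -t ext4 /dev/sda1 /mnt/linux

for dir in / /mnt/linux; do
    if is_mounted "$dir"; then
        echo "$dir: mounted"
    else
        echo "$dir: not mounted"
    fi
done
```

The util-linux `mountpoint -q DIR` command performs a similar check where it is available.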

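VII. Partitioning (background)

Partition table formats:

MBR (Master Boot Record):

Supports up to four primary partitions, or three primary partitions plus one extended partition; the largest addressable size is about 2.2 TB.

The first sector of the disk (sector 0) stores the master boot record (MBR).

It is therefore also called the MBR sector. A sector is 512 bytes, 64 of which hold the partition table. Each partition entry takes 16 bytes, so there is room for only four primary partitions.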
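
The MBR numbers above follow from simple arithmetic. The sketch assumes the classic layout (446 bytes of boot code, the 64-byte partition table, and a 2-byte signature) and 512-byte sectors addressed with 32-bit sector numbers.

```shell
#!/bin/sh
# MBR sector layout: boot code + partition table + boot signature.
boot_code_bytes=446
table_bytes=64
signature_bytes=2
entry_bytes=16

mbr_bytes=$((boot_code_bytes + table_bytes + signature_bytes))
max_primary=$((table_bytes / entry_bytes))

# Size limit: 2^32 addressable sectors of 512 bytes each (assumed sector size).
max_bytes=$(( (1 << 32) * 512 ))

echo "MBR sector size:     $mbr_bytes bytes"
echo "primary partitions:  $max_primary"
echo "addressable bytes:   $max_bytes"
```

2199023255552 bytes is 2 TiB, which is the figure usually rounded to 2.2 TB.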

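GPT (GUID Partition Table):

Supports up to 128 partitions by default and sizes well beyond 2.2 TB (18 EB is the commonly quoted upper limit). It keeps a protective MBR in sector 0 for compatibility with MBR-based tools.

It is part of the EFI (Extensible Firmware Interface) standard and replaces the MBR partition table used with BIOS.

Because an MBR partition table cannot describe partitions larger than 2.2 TB,

some BIOS systems also use GPT in place of MBR to support large disks.

EFI

The Extensible Firmware Interface is a PC system specification that defines the software interface between the operating system and the system firmware.

It is the successor to BIOS.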

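Disk partitions come in three kinds: primary, extended, and logical.

A disk can have primary partitions plus one extended partition,

or primary partitions only. Logical partitions live inside the extended partition, and there can be several of them.

Disk capacity = primary partition capacity + extended partition capacity (disk = drive C + the other drives)

Extended partition capacity = the sum of the logical partitions' capacities (other drives = D + E + ...)

Before a disk can be used it must be partitioned and then formatted; formatting applies to primary and logical partitions. Formatting is needed because it creates the file system that the operating system uses to manage files. An unformatted partition is like a blank sheet of paper: to write data on it, the sheet must first be ruled into a grid, with one block of data per cell, and the operating system only recognizes those cells.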

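VIII. Logical volumes (background)

Physical devices are the system's storage devices, for example /dev/sda1 and /dev/sda2.

Physical volume (PV): a partition or a whole disk that has been initialized for use by LVM.

Volume group (VG): a pool of one or more PVs that can be viewed as a single logical disk. A VG contains at least one PV, and more PVs can be added to it dynamically after it is created.

Logical volume (LV): the equivalent of a physical partition, created on top of a volume group.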

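1. #Create a physical volume: pvcreate followed by the device or partition to initialize

2. #List the physical volumes (for example with pvs)

#For details, use pvdisplay

3. Volume group management (VG)

#Create a volume group: vgcreate volume-group-name physical-volume...

4. Logical volume management (LV)

#Create a logical volume: lvcreate -n lv-name -L lv-size volume-group-name

Format the logical volume: mkfs.xfs /dev/vgtest/lvtest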
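
The order of the LVM steps can be summarized as a dry run that prints the commands instead of executing them, since the real commands need root and modify the disk. The names are assumptions for illustration: /dev/sda1 as the physical volume, vgtest and lvtest as the volume group and logical volume, and 5G as the size.

```shell
#!/bin/sh
# Dry-run sketch: run() only prints each command, nothing is executed.
run() { echo "+ $*"; }

# Assumed, illustrative names and size.
pv=/dev/sda1
vg=vgtest
lv=lvtest
size=5G

lvm_plan() {
    run pvcreate "$pv"                       # 1. initialize the partition as a PV
    run vgcreate "$vg" "$pv"                 # 2. create the VG from that PV
    run lvcreate -n "$lv" -L "$size" "$vg"   # 3. carve an LV out of the VG
    run mkfs.xfs "/dev/$vg/$lv"              # 4. put a file system on the LV
}

lvm_plan
```

After reviewing the printed plan, the same four commands can be run by hand as root.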