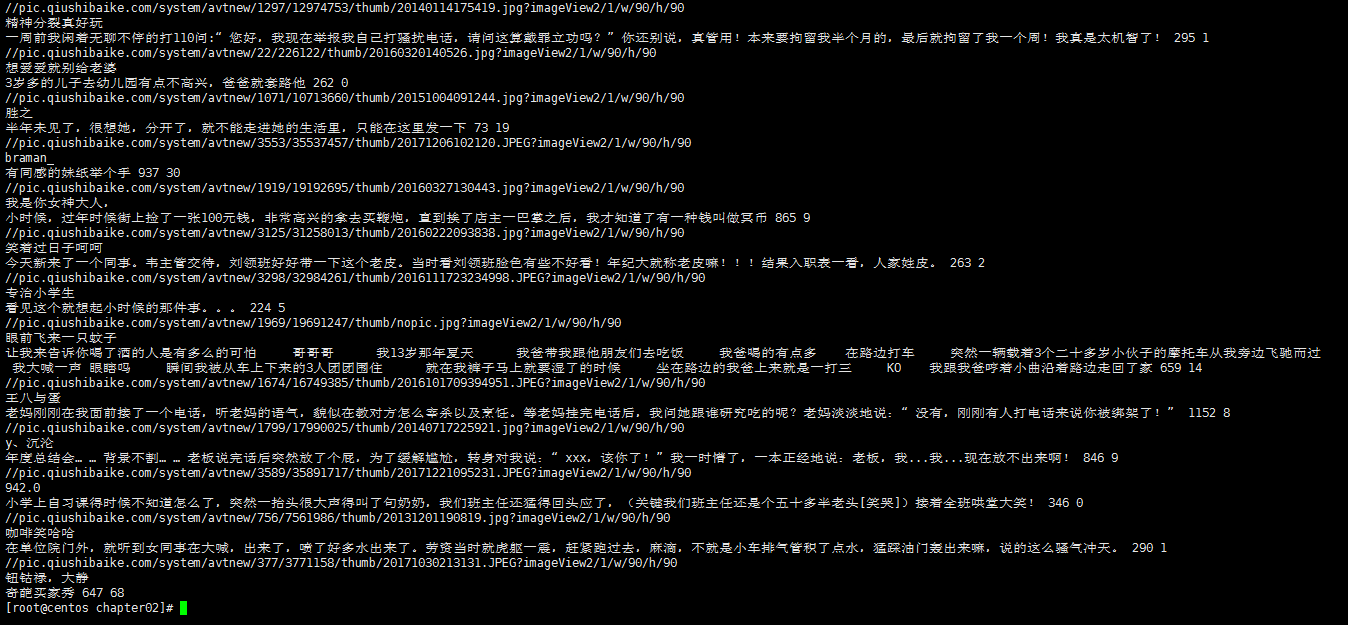

糗事百科实例

爬取糗事百科段子,假设页面的URL是: http://www.qiushibaike.com/8hr/page/1

要求:

- 使用requests获取页面信息,用XPath/re做数据提取

- 获取每个帖子里的用户头像连接、用户姓名、段子内容、点赞次数和评论次数

- 保存到json文件内

参考代码

#-*- coding:utf-8 -*-import requests

from lxml import etreepage = 1

url = 'http://www.qiushibaike.com/8hr/page/' + str(page)

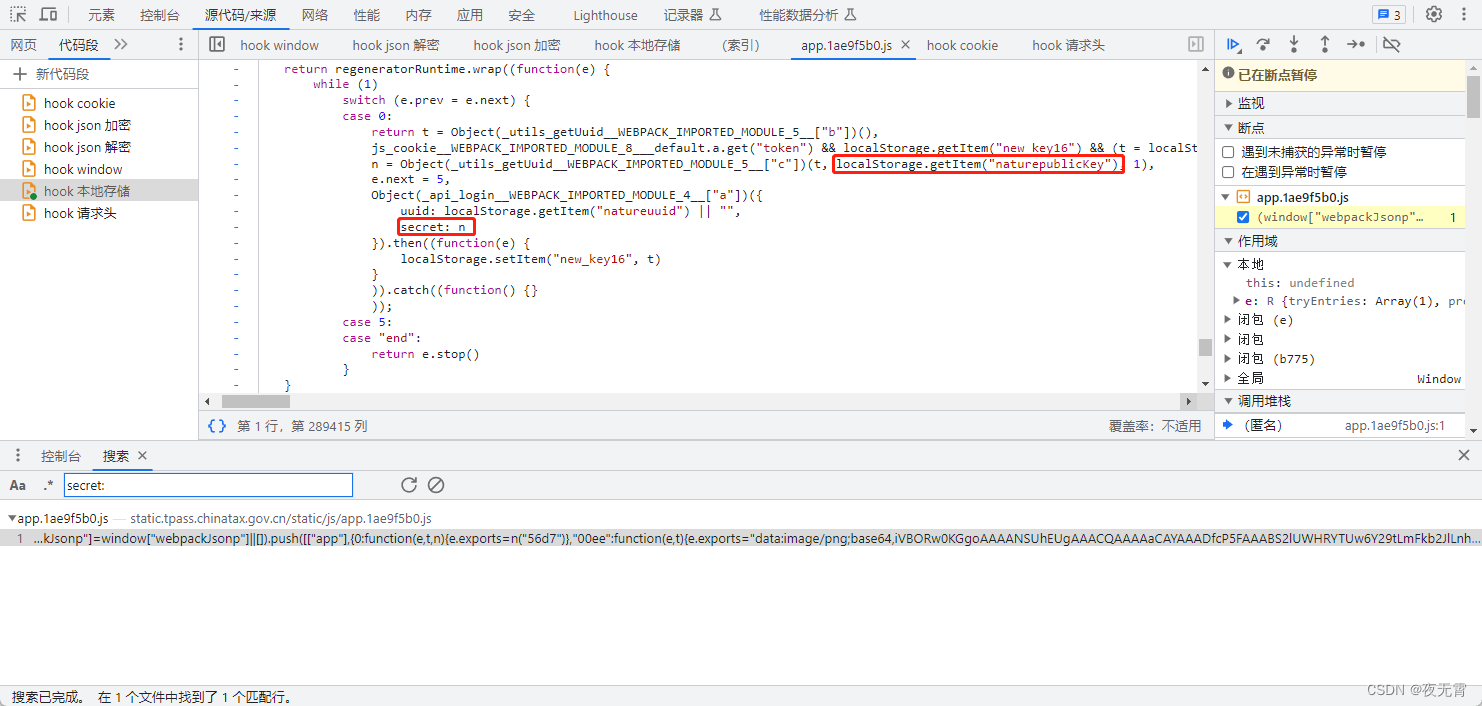

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36','Accept-Language': 'zh-CN,zh;q=0.8'}try:response = requests.get(url, headers=headers)resHtml = response.texthtml = etree.HTML(resHtml)result = html.xpath('//div[contains(@id,"qiushi_tag")]')for site in result:item = {}imgUrl = site.xpath('./div//img/@src')[0].encode('utf-8')# print(imgUrl)username = site.xpath('./div//h2')[0].text# print(username)content = site.xpath('.//div[@class="content"]/span')[0].text.strip().encode('utf-8')# print(content)# 投票次数vote = site.xpath('.//i')[0].text# print(vote)#print site.xpath('.//*[@class="number"]')[0].text# 评论信息comments = site.xpath('.//i')[1].text# print(comments)print imgUrl, username, content, vote, commentsexcept Exception, e:print e

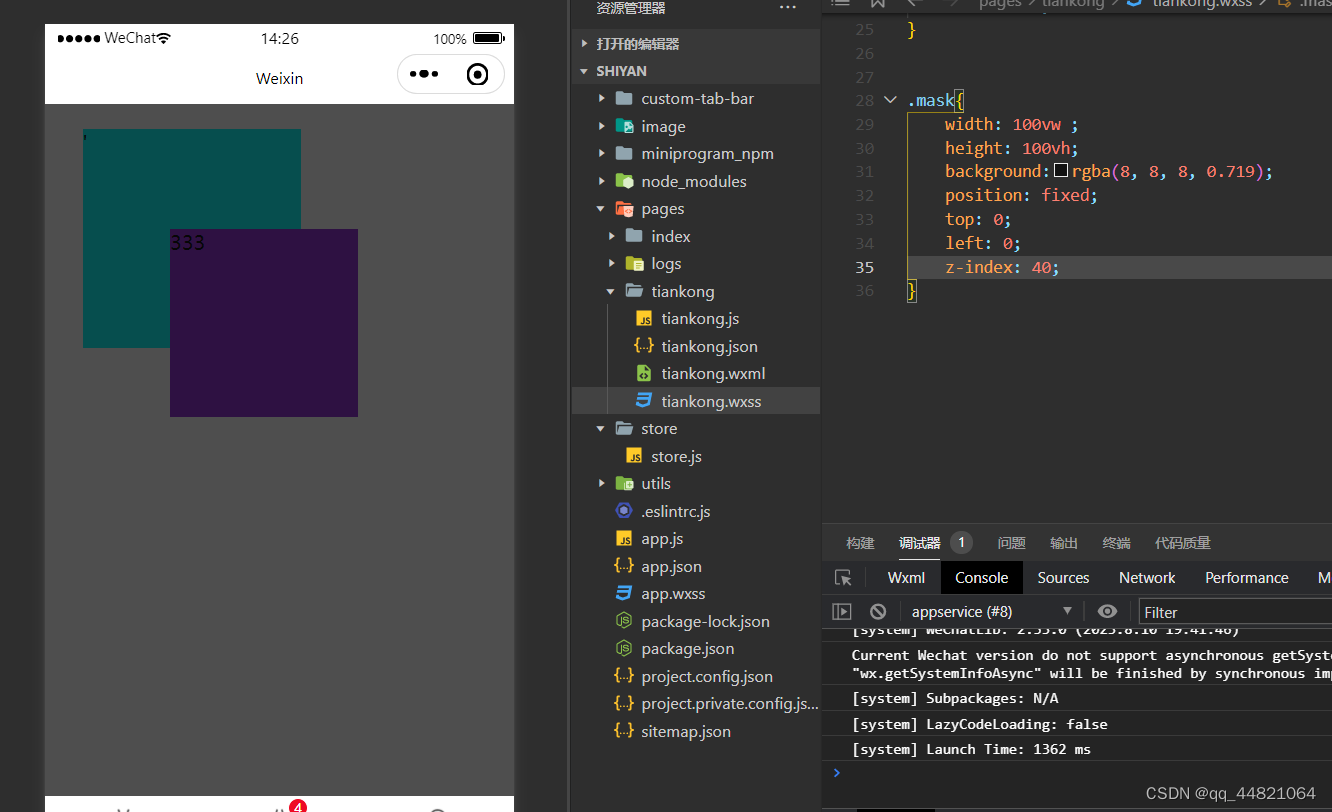

演示效果

糗事百科