Contents

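- Preface

- Latest ChatGPT GPT-4 Natural Language Understanding (NLU) in Practice: Entity Classification, Recognition and Model Fine-Tuning

- Topic Classification

- Approaches to Accurate Classification

- Fine-Tuning Steps

- Core Code

- Other NLU Applications in Practice

- Related Literature

- References

- Other Downloads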

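Preface

Imagine being able to train a large natural language model tailored to your own scenario and purpose, one that helps you interact with users faster and more accurately in real-time chat. Pretty impressive, right?

OpenAI's API, the platform behind ChatGPT, makes this quite simple. With fine-tuning, you take a pre-trained language model and continue training it on your own corpus, which can improve its accuracy and reliability for your task. The resulting model performs better in that specific setting, helping you get the best results in conversations with your customers.

Let's start from scratch and build a customized natural language model step by step.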

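Latest ChatGPT GPT-4 Natural Language Understanding (NLU) in Practice: Entity Classification, Recognition and Model Fine-Tuning

Topic Classification

In the earlier "Related APIs" section, we covered various ways to do classification and entity extraction. Here we turn to more concrete, common tasks.

The first is topic classification: given a piece of text, decide which topic it belongs to.

Let's look at a news topic classification dataset taken from CLUEbenchmark/CLUE (the TNEWS task), which has 15 categories.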

import pnlp

lines = pnlp.read_file_to_list_dict("./dataset/tnews.json")

len(lines)

10000

from collections import Counter

ct = Counter([v["label_desc"] for v in lines])

ct.most_common()

[('news_tech', 1089),('news_finance', 956),('news_entertainment', 910),('news_world', 905),('news_car', 791),('news_sports', 767),('news_culture', 736),('news_military', 716),('news_travel', 693),('news_game', 659),('news_edu', 646),('news_agriculture', 494),('news_house', 378),('news_story', 215),('news_stock', 45)]

The stock category has far too few examples (45), so we drop it first:

lines = [v for v in lines if v["label_desc"] != "news_stock"]

len(lines)

9955
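The filter above removes one label by name. A slightly more general sketch drops every label below a frequency threshold; `drop_rare_labels` and `min_count` are hypothetical names, and the threshold is an illustrative choice. On the full data, `min_count=100` would remove only news_stock (45 examples), since the next-smallest class, news_story, has 215.

```python
from collections import Counter


def drop_rare_labels(records, min_count, key="label_desc"):
    """Return only the records whose label occurs at least `min_count` times."""
    counts = Counter(r[key] for r in records)
    return [r for r in records if counts[r[key]] >= min_count]


# Toy records for illustration only (not taken from TNEWS).
sample = [
    {"label_desc": "news_tech", "sentence": "a"},
    {"label_desc": "news_tech", "sentence": "b"},
    {"label_desc": "news_stock", "sentence": "c"},
]
kept = drop_rare_labels(sample, min_count=2)
print([r["sentence"] for r in kept])  # ['a', 'b']
```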

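```python
def get_prompt(text):
    # Chinese prompt: "Classify the given text. Categories: tech, finance, entertainment, world,
    # car, culture, military, travel, game, education, agriculture, real estate, society, stock.
    # Given text: {text}  Category:"
    # Note: the list still contains 股票 (stock), dropped above, and has no 体育 (sports).
    prompt = f"""对给定文本进行分类,类别包括:科技、金融、娱乐、世界、汽车、文化、军事、旅游、游戏、教育、农业、房产、社会、股票。给定文本:

{text}

类别:
"""
    return prompt
```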
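One way to keep the category list in the prompt in sync with the dataset's labels is to generate it from a single mapping, which also lets you map the model's Chinese answer back to a `label_desc`. A minimal sketch under an assumption: the Chinese names below are our own choice (mostly the words from `get_prompt`, with 体育 added for news_sports and 故事 used for news_story), and `LABEL_ZH`, `build_prompt` and `parse_answer` are hypothetical helpers.

```python
# Illustrative mapping from TNEWS label_desc to Chinese names (names are an assumption).
LABEL_ZH = {
    "news_tech": "科技", "news_finance": "金融", "news_entertainment": "娱乐",
    "news_world": "世界", "news_car": "汽车", "news_sports": "体育",
    "news_culture": "文化", "news_military": "军事", "news_travel": "旅游",
    "news_game": "游戏", "news_edu": "教育", "news_agriculture": "农业",
    "news_house": "房产", "news_story": "故事",
}
ZH_TO_LABEL = {zh: label for label, zh in LABEL_ZH.items()}


def build_prompt(text, label_names=LABEL_ZH):
    """Build a get_prompt-style prompt whose category list comes from the mapping."""
    categories = "、".join(label_names.values())
    return f"对给定文本进行分类,类别包括:{categories}。给定文本:\n\n{text}\n\n类别:\n"


def parse_answer(answer):
    """Map the model's Chinese answer (e.g. '军事') back to a label_desc, or None if unknown."""
    return ZH_TO_LABEL.get(answer.strip())


print(parse_answer(" 军事\n"))  # news_military
```

Because both the prompt and the parser read from the same dictionary, dropping or adding a class only requires editing `LABEL_ZH`.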

lines[0]

{'label': '102','label_desc': 'news_entertainment','sentence': '江疏影甜甜圈自拍,迷之角度竟这么好看,美吸引一切事物','keywords': '江疏影,美少女,经纪人,甜甜圈'}

prompt = get_prompt(lines[0]["sentence"])

print(prompt)

对给定文本进行分类,类别包括:科技、金融、娱乐、世界、汽车、文化、军事、旅游、游戏、教育、农业、房产、社会、股票。给定文本:

江疏影甜甜圈自拍,迷之角度竟这么好看,美吸引一切事物

类别:

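```python
import openai  # legacy openai-python SDK (< 1.0): Completion / ChatCompletion interfaces

OPENAI_API_KEY = "<your API key>"
openai.api_key = OPENAI_API_KEY

def complete(prompt):
    # Completion endpoint with text-davinci-003; temperature 0 for stable labels
    response = openai.Completion.create(
        prompt=prompt, temperature=0, max_tokens=10, top_p=1,
        frequency_penalty=0, presence_penalty=0, model="text-davinci-003")
    return response["choices"][0]["text"].strip(" \n")
```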

complete(prompt)

'娱乐'

```python
def ask(content):
    # ChatCompletion endpoint with gpt-3.5-turbo (the ChatGPT model)
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo", messages=[{"role": "user", "content": content}],
        temperature=0, max_tokens=10, top_p=1, frequency_penalty=0, presence_penalty=0)
    return response["choices"][0]["message"]["content"]
```

ask(prompt)

'娱乐'

Let's try a few other categories:

lines[1]

{'label': '110','label_desc': 'news_military','sentence': '以色列大规模空袭开始!伊朗多个军事目标遭遇打击,誓言对等反击','keywords': '伊朗,圣城军,叙利亚,以色列国防军,以色列'}

prompt = get_prompt(lines[1]["sentence"])

print(prompt)

对给定文本进行分类,类别包括:科技、金融、娱乐、世界、汽车、文化、军事、旅游、游戏、教育、农业、房产、社会、股票。给定文本:

以色列大规模空袭开始!伊朗多个军事目标遭遇打击,誓言对等反击

类别:

ask(prompt)

'军事'

complete(prompt)

'军事'

lines[2]

{'label': '104','label_desc': 'news_finance','sentence': '出栏一头猪亏损300元,究竟谁能笑到最后!','keywords': '商品猪,养猪,猪价,仔猪,饲料'}

prompt = get_prompt(lines[2]["sentence"])

print(prompt)

对给定文本进行分类,类别包括:科技、金融、娱乐、世界、汽车、文化、军事、旅游、游戏、教育、农业、房产、社会、股票。给定文本:

出栏一头猪亏损300元,究竟谁能笑到最后!

类别:

ask(prompt)

'农业'

complete(prompt)

'社会'
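Spot-checking three sentences says little about overall quality. A minimal evaluation sketch, assuming `classify` is any text-to-answer function (such as `ask` or `complete` above) and `parse` maps the raw answer to a `label_desc`; `evaluate` and `fake_classify` are hypothetical, and the fake classifier exists only so the sketch runs offline.

```python
from typing import Callable, Dict, List, Optional


def evaluate(records: List[Dict[str, str]],
             classify: Callable[[str], str],
             parse: Callable[[str], Optional[str]]) -> float:
    """Accuracy of `classify` (text -> raw model answer) against each record's label_desc."""
    if not records:
        return 0.0
    correct = sum(parse(classify(r["sentence"])) == r["label_desc"] for r in records)
    return correct / len(records)


# Offline stand-in for ask()/complete() (hypothetical), so no API key is needed.
def fake_classify(text: str) -> str:
    return "军事" if "空袭" in text else "农业"


zh_to_label = {"军事": "news_military", "农业": "news_agriculture"}
records = [
    {"sentence": "以色列大规模空袭开始!伊朗多个军事目标遭遇打击,誓言对等反击", "label_desc": "news_military"},
    {"sentence": "出栏一头猪亏损300元,究竟谁能笑到最后!", "label_desc": "news_finance"},
]
acc = evaluate(records, fake_classify, lambda a: zh_to_label.get(a.strip()))
print(acc)  # 0.5
```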

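Approaches to Accurate Classification

This one is a bit ambiguous: text-davinci-003 (`complete`) answers 社会 (society), ChatGPT (`ask`) answers 农业 (agriculture), and the dataset label is news_finance. Agriculture does not seem wrong, though. Still, when the model does get things wrong, we have at least two remedies:

- Few-Shot: sample a few examples from the dataset each time and include them in the prompt.

- Fine-Tuning: prepare our own dataset in the required format and submit it to the API, which fine-tunes a model of our own that has learned from that data.

The key to Few-Shot is finding the "few": which cases do we give the model as reference examples? For multi-class problems with many labels (in practice, hundreds or thousands of labels are common), even one example per label makes the context enormous, which is not very practical. Few-Shot becomes awkward here. If we really want to use it, the most common strategy is to first recall a few similar sentences, then use their text and labels as Few-Shot examples and let the API predict the label of the given sentence.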
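A minimal sketch of that recall-then-prompt strategy. Real systems would usually recall with embeddings or BM25; here character-bigram Jaccard similarity is a swapped-in stand-in so the example runs offline, and the `pool` records with a `label_zh` field are hypothetical.

```python
def char_bigrams(text):
    """Set of overlapping two-character substrings (a crude but workable unit for Chinese)."""
    return {text[i:i + 2] for i in range(len(text) - 1)}


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def build_few_shot_prompt(query, pool, k=3):
    """Recall the k most similar labelled sentences and format them as Few-Shot examples."""
    q = char_bigrams(query)
    ranked = sorted(pool, key=lambda r: jaccard(q, char_bigrams(r["sentence"])), reverse=True)
    shots = ranked[:k]
    parts = [f"文本:{r['sentence']}\n类别:{r['label_zh']}" for r in shots]
    parts.append(f"文本:{query}\n类别:")  # the query goes last; the model fills in the label
    return "\n\n".join(parts), shots


pool = [  # hypothetical labelled pool
    {"sentence": "伊朗军事目标遭遇打击", "label_zh": "军事"},
    {"sentence": "江疏影甜甜圈自拍", "label_zh": "娱乐"},
    {"sentence": "猪价下跌养猪亏损", "label_zh": "农业"},
]
prompt, shots = build_few_shot_prompt("以色列空袭伊朗军事目标", pool, k=1)
print(prompt)
```

Only `k` examples enter the context no matter how many labels exist, which is what makes this workable for large label sets.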

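Fine-Tuning Steps

Alternatively, we can fine-tune the model on our own dataset. Put simply, this lets the model "get familiar with" our particular data so that it can recognize the categories on similar data.

Let's see how it works. There are three main steps:

- Prepare the data: format our dataset as the API requires; each example needs at least a piece of text and a label.

- Fine-tune: send the data through the fine-tuning API; the server runs the fine-tuning and returns a new model_id when it finishes. Note that this model_id belongs to you alone; do not share it with others.

- Run inference with the new model: simple; just replace the `model` parameter in the original API call with our model_id.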

Core Code

Now let's fine-tune this multi-class model. We take only the last 500 examples as the training set (to keep it fast and cheap).

train_lines = lines[-500:]

import pandas as pd

train = pd.DataFrame(train_lines)

train.shape

(500, 4)

train.head()

|   | label | label_desc | sentence | keywords |
|---|---|---|---|---|
| 0 | 103 | news_sports | 为什么斯凯奇与阿迪达斯脚感很相似,价格却差了近一倍? | 达斯勒,阿迪达斯,FOAM,BOOST,斯凯奇 |
| 1 | 100 | news_story | 女儿日渐消瘦,父母发现有怪物,每天吃女儿一遍 | 大将军,怪物 |
| 2 | 104 | news_finance | 另类逼空确认反弹,剑指3200点以上 | 股票,另类逼空,金融,创业板,快速放大 |
| 3 | 100 | news_story | 老公在聚会上让我向他的上司敬酒,现在老公哭了,我笑了 | 远走高飞 |
| 4 | 108 | news_edu | 女孩上初中之后成绩下降,如何才能提升成绩? |  |

train.label_desc.value_counts()

```
news_finance          48
news_tech             47
news_game             46
news_entertainment    46
news_travel           44
news_sports           42
news_military         40
news_world            38
news_car              36
news_culture          35
news_edu              27
news_agriculture      20
news_house            19
news_story            12
Name: label_desc, dtype: int64
```

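news_story has the fewest examples (only 12), but that's not a big problem.

Step 1: Prepare the data

The data must have two columns: `prompt` and `completion`.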
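The prepare_data tool used in this section recommends keeping a held-out set that is never used for training. Since we train on the last 500 examples, an evaluation set can be sampled from the remaining ones. A minimal sketch; `split_train_eval` is a hypothetical helper and the toy records stand in for `lines`.

```python
import random


def split_train_eval(records, n_train, n_eval, seed=0):
    """Last n_train records for training, plus a disjoint random sample for evaluation."""
    if n_train <= 0:
        raise ValueError("n_train must be positive")
    train = records[-n_train:]
    rest = records[:-n_train]  # everything not used for training
    eval_set = random.Random(seed).sample(rest, min(n_eval, len(rest)))
    return train, eval_set


records = [{"id": i} for i in range(10)]  # toy data
train, eval_set = split_train_eval(records, n_train=4, n_eval=3)
print([r["id"] for r in train])  # [6, 7, 8, 9]
```

With the real data, `lines[2]` (the pig-farming sentence used in Step 3) falls outside the last 500 and is exactly such a held-out example.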

df_train = train[["sentence", "label_desc"]]

df_train.columns = ["prompt", "completion"]

df_train.head()

|   | prompt | completion |
|---|---|---|
| 0 | 为什么斯凯奇与阿迪达斯脚感很相似,价格却差了近一倍? | news_sports |
| 1 | 女儿日渐消瘦,父母发现有怪物,每天吃女儿一遍 | news_story |
| 2 | 另类逼空确认反弹,剑指3200点以上 | news_finance |
| 3 | 老公在聚会上让我向他的上司敬酒,现在老公哭了,我笑了 | news_story |
| 4 | 女孩上初中之后成绩下降,如何才能提升成绩? | news_edu |

Save it:

df_train.to_json("dataset/tnews-finetuning.jsonl", orient='records', lines=True)

Convert it with the openai command-line tool:

!openai tools fine_tunes.prepare_data -f dataset/tnews-finetuning.jsonl -q

```
Analyzing...

- Your file contains 500 prompt-completion pairs
- Based on your data it seems like you're trying to fine-tune a model for classification
- For classification, we recommend you try one of the faster and cheaper models, such as `ada`
- For classification, you can estimate the expected model performance by keeping a held out dataset, which is not used for training
- More than a third of your `prompt` column/key is uppercase. Uppercase prompts tends to perform worse than a mixture of case encountered in normal language. We recommend to lower case the data if that makes sense in your domain. See https://platform.openai.com/docs/guides/fine-tuning/preparing-your-dataset for more details
- Your data does not contain a common separator at the end of your prompts. Having a separator string appended to the end of the prompt makes it clearer to the fine-tuned model where the completion should begin. See https://platform.openai.com/docs/guides/fine-tuning/preparing-your-dataset for more detail and examples. If you intend to do open-ended generation, then you should leave the prompts empty
- All completions start with prefix `news_`. Most of the time you should only add the output data into the completion, without any prefix
- The completion should start with a whitespace character (` `). This tends to produce better results due to the tokenization we use. See https://platform.openai.com/docs/guides/fine-tuning/preparing-your-dataset for more details

Based on the analysis we will perform the following actions:
- [Recommended] Lowercase all your data in column/key `prompt` [Y/n]: Y
- [Recommended] Add a suffix separator ` ->` to all prompts [Y/n]: Y
- [Recommended] Remove prefix `news_` from all completions [Y/n]: Y
- [Recommended] Add a whitespace character to the beginning of the completion [Y/n]: Y
- [Recommended] Would you like to split into training and validation set? [Y/n]: Y

Your data will be written to a new JSONL file. Proceed [Y/n]: Y

Wrote modified files to `dataset/tnews-finetuning_prepared_train.jsonl` and `dataset/tnews-finetuning_prepared_valid.jsonl`
Feel free to take a look!

Now use that file when fine-tuning:
> openai api fine_tunes.create -t "dataset/tnews-finetuning_prepared_train.jsonl" -v "dataset/tnews-finetuning_prepared_valid.jsonl" --compute_classification_metrics --classification_n_classes 14

After you’ve fine-tuned a model, remember that your prompt has to end with the indicator string ` ->` for the model to start generating completions, rather than continuing with the prompt.
Once your model starts training, it'll approximately take 14.33 minutes to train a `curie` model, and less for `ada` and `babbage`. Queue will approximately take half an hour per job ahead of you.
```

Let's see what the processed data looks like:

!head dataset/tnews-finetuning_prepared_train.jsonl

```
{"prompt":"cf生存特训:火箭弹狂野复仇,为兄弟报仇就要不死不休 ->","completion":" game"}
{"prompt":"哈尔滨 东北抗日联军博物馆 ->","completion":" culture"}
{"prompt":"中国股市中,庄家为何如此猖獗?一文告诉你真相 ->","completion":" finance"}
{"prompt":"天府锦绣又重来 ->","completion":" agriculture"}
{"prompt":"生活,游戏,电影中有哪些词汇稍加修改便可以成为一个非常霸气的名字? ->","completion":" game"}
{"prompt":"法庭上,生父要争夺孩子抚养权,小男孩的发言让生父当场哑口无言 ->","completion":" entertainment"}
{"prompt":"如何才能选到好的深圳大数据培训机构? ->","completion":" edu"}
{"prompt":"有哪些娱乐圈里面的明星追星? ->","completion":" entertainment"}
{"prompt":"东坞原生态野生茶 ->","completion":" culture"}
{"prompt":"亚冠:恒大不胜早有预示,全北失利命中注定 ->","completion":" sports"}
```

It's also a good idea to check how many classes each split contains:

train = pnlp.read_file_to_list_dict("./dataset/tnews-finetuning_prepared_train.jsonl")

valid = pnlp.read_file_to_list_dict("./dataset/tnews-finetuning_prepared_valid.jsonl")

len(Counter([v["completion"] for v in train]))

14

len(Counter([v["completion"] for v in valid]))

14
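Counting distinct labels confirms both splits have 14, but if the counts ever differ you will want to know *which* labels are missing. A minimal sketch; `label_coverage` is a hypothetical helper and the toy records stand in for `train` and `valid`.

```python
def label_coverage(train, valid, key="completion"):
    """Return (labels missing from valid, labels missing from train), each sorted."""
    train_labels = {r[key] for r in train}
    valid_labels = {r[key] for r in valid}
    return sorted(train_labels - valid_labels), sorted(valid_labels - train_labels)


train = [{"completion": " game"}, {"completion": " edu"}, {"completion": " sports"}]
valid = [{"completion": " game"}, {"completion": " edu"}]
print(label_coverage(train, valid))  # ([' sports'], [])
```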

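OK. The most visible change is the separator appended to every prompt. Besides that (see the log above), the tool also:

- lowercased the prompts

- removed the `news_` prefix from the labels

- prepended a space to each completion

- split the data into training and validation sets

These are all common, recommended preprocessing steps, so we'll go with them.

Step 2: Fine-tune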
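If you'd rather not depend on the interactive CLI, the same four transformations can be reproduced in plain Python. A sketch, assuming the rules are exactly those listed in the log; `to_finetune_record` and `write_jsonl` are hypothetical helpers, and unlike the CLI this does not split train and validation. Writing with `json.dumps(..., ensure_ascii=False)` keeps Chinese readable in the file, whereas pandas `to_json` escapes non-ASCII characters by default (`force_ascii=True`).

```python
import json

SEPARATOR = " ->"  # suffix that prepare_data appended to every prompt in the log above


def to_finetune_record(sentence, label_desc, prefix="news_"):
    """Lowercase the prompt, append the separator, strip the label prefix, add a leading space."""
    label = label_desc[len(prefix):] if label_desc.startswith(prefix) else label_desc
    return {"prompt": sentence.lower() + SEPARATOR, "completion": " " + label}


def write_jsonl(records, path):
    """Write one JSON object per line, keeping non-ASCII characters as-is."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


rec = to_finetune_record("亚冠:恒大不胜早有预示,全北失利命中注定", "news_sports")
print(json.dumps(rec, ensure_ascii=False))
```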

import os

os.environ.setdefault("OPENAI_API_KEY", "<your API key>")

'sk-****'

```
!openai api fine_tunes.create \
    -t "./dataset/tnews-finetuning_prepared_train.jsonl" \
    -v "./dataset/tnews-finetuning_prepared_valid.jsonl" \
    --compute_classification_metrics --classification_n_classes 14 \
    -m davinci \
    --no_check_if_files_exist
```

```
Upload progress: 100%|████████████████████| 41.2k/41.2k [00:00<00:00, 15.6Mit/s]
Uploaded file from ./dataset/tnews-finetuning_prepared_train.jsonl: file-DKjBKHqWFJwo7O8MNZGOcj3F
Upload progress: 100%|████████████████████| 10.5k/10.5k [00:00<00:00, 7.85Mit/s]
Uploaded file from ./dataset/tnews-finetuning_prepared_valid.jsonl: file-j088k3GWqGeqY0o2DDAfWfPh
Created fine-tune: ft-QOkrWkHU0aleR6f5IQw1UpVL
Streaming events until fine-tuning is complete...

(Ctrl-C will interrupt the stream, but not cancel the fine-tune)
[2023-04-04 21:16:33] Created fine-tune: ft-QOkrWkHU0aleR6f5IQw1UpVL

Stream interrupted (client disconnected).
To resume the stream, run:

  openai api fine_tunes.follow -i ft-QOkrWkHU0aleR6f5IQw1UpVL
```

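Here, -t and -v specify the training and validation sets; the next line turns on classification metrics for 14 classes; -m specifies the model to fine-tune. The last flag, --no_check_if_files_exist, skips checking whether these files were already uploaded: normally a previously uploaded file could be reused, but for this demo we skip the check and upload them again. For the models that can be fine-tuned and their prices, see: Pricing.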

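Also worth noting: at the time of writing, only the Completion interface could be fine-tuned; ChatCompletion could not. More precisely, only the original GPT-3 base models (davinci, curie, babbage, ada) were fine-tunable, not instruction-tuned InstructGPT models such as text-davinci-003, and not ChatGPT (gpt-3.5-turbo). See: https://platform.openai.com/docs/guides/chat/is-fine-tuning-available-for-gpt-3-5-turbo

We choose davinci here, the largest of those base models. It belongs to the same davinci family as the text-davinci-003 used earlier, but it is not the same model.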
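If you launch fine-tunes from a script rather than a notebook cell, building the argument list in code avoids hard-coding values such as the class count. A sketch that uses only the flags shown in the command above; `fine_tune_command` is a hypothetical helper, and actually running it requires the legacy openai CLI and `OPENAI_API_KEY`.

```python
import shlex


def fine_tune_command(train_path, valid_path, n_classes, model="davinci", skip_file_check=True):
    """Assemble argv for the legacy `openai api fine_tunes.create` call shown above."""
    cmd = [
        "openai", "api", "fine_tunes.create",
        "-t", train_path,
        "-v", valid_path,
        "--compute_classification_metrics",
        "--classification_n_classes", str(n_classes),
        "-m", model,
    ]
    if skip_file_check:
        cmd.append("--no_check_if_files_exist")  # upload again instead of reusing files
    return cmd


cmd = fine_tune_command("./dataset/tnews-finetuning_prepared_train.jsonl",
                        "./dataset/tnews-finetuning_prepared_valid.jsonl", n_classes=14)
print(shlex.join(cmd))
# To launch it: subprocess.run(cmd, check=True)
```

`n_classes` could be computed as the number of distinct completions in the prepared training file, as checked in Step 1.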

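As you can see, the stream above disconnected almost immediately. That's normal; we can check the job's progress with another API command. Note that the ID here is the one printed in the log above, and it changes with every run.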

!openai api fine_tunes.get -i ft-QOkrWkHU0aleR6f5IQw1UpVL

{"created_at": 1680614193,"events": [{"created_at": 1680614193,"level": "info","message": "Created fine-tune: ft-QOkrWkHU0aleR6f5IQw1UpVL","object": "fine-tune-event"}],"fine_tuned_model": null,"hyperparams": {"batch_size": null,"classification_n_classes": 14,"compute_classification_metrics": true,"learning_rate_multiplier": null,"n_epochs": 4,"prompt_loss_weight": 0.01},"id": "ft-QOkrWkHU0aleR6f5IQw1UpVL","model": "davinci","object": "fine-tune","organization_id": "org-bKXddeZffpMS2CUNCCXsW7m5","result_files": [],"status": "pending","training_files": [{"bytes": 41212,"created_at": 1680614191,"filename": "./dataset/tnews-finetuning_prepared_train.jsonl","id": "file-DKjBKHqWFJwo7O8MNZGOcj3F","object": "file","purpose": "fine-tune","status": "processed","status_details": null}],"updated_at": 1680614193,"validation_files": [{"bytes": 10507,"created_at": 1680614193,"filename": "./dataset/tnews-finetuning_prepared_valid.jsonl","id": "file-j088k3GWqGeqY0o2DDAfWfPh","object": "file","purpose": "fine-tune","status": "processed","status_details": null}]}

Or use the command it suggested. Remember to substitute your own ID!

!openai api fine_tunes.follow -i ft-QOkrWkHU0aleR6f5IQw1UpVL

```
[2023-04-04 14:32:14] Created fine-tune: ft-5LDv5IiFqPvLob3KkThWLTUG
Stream interrupted (client disconnected).
To resume the stream, run:

  openai api fine_tunes.follow -i ft-5LDv5IiFqPvLob3KkThWLTUG
```

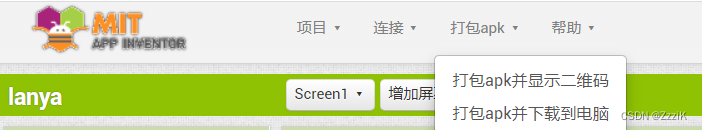

Note that this command is `follow`, whereas the one above was `get`. Run `openai api --help` to see more:

!openai api --help

```
usage: openai api [-h]
                  {engines.list,engines.get,engines.update,engines.generate,chat_completions.create,completions.create,deployments.list,deployments.get,deployments.delete,deployments.create,models.list,models.get,models.delete,files.create,files.get,files.delete,files.list,fine_tunes.list,fine_tunes.create,fine_tunes.get,fine_tunes.results,fine_tunes.events,fine_tunes.follow,fine_tunes.cancel,fine_tunes.delete,image.create,image.create_edit,image.create_variation,audio.transcribe,audio.translate}
                  ...

positional arguments:
  {engines.list,engines.get,engines.update,engines.generate,chat_completions.create,completions.create,deployments.list,deployments.get,deployments.delete,deployments.create,models.list,models.get,models.delete,files.create,files.get,files.delete,files.list,fine_tunes.list,fine_tunes.create,fine_tunes.get,fine_tunes.results,fine_tunes.events,fine_tunes.follow,fine_tunes.cancel,fine_tunes.delete,image.create,image.create_edit,image.create_variation,audio.transcribe,audio.translate}
                        All API subcommands

optional arguments:
  -h, --help            show this help message and exit
```

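We suggest running `get` every so often to check progress rather than following the stream continuously. This can take a while: the job first waits in a queue, and once it leaves the queue, training itself is fast.

The main thing to watch is the `status` field. Again, remember to use your own ID.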

!openai api fine_tunes.get -i ft-QOkrWkHU0aleR6f5IQw1UpVL

{"created_at": 1680614193,"events": [{"created_at": 1680614193,"level": "info","message": "Created fine-tune: ft-QOkrWkHU0aleR6f5IQw1UpVL","object": "fine-tune-event"},{"created_at": 1680614845,"level": "info","message": "Fine-tune costs $2.33","object": "fine-tune-event"},{"created_at": 1680614846,"level": "info","message": "Fine-tune enqueued","object": "fine-tune-event"},{"created_at": 1680617657,"level": "info","message": "Fine-tune is in the queue. Queue number: 31","object": "fine-tune-event"},{"created_at": 1680617785,"level": "info","message": "Fine-tune is in the queue. Queue number: 30","object": "fine-tune-event"},{"created_at": 1680617805,"level": "info","message": "Fine-tune is in the queue. Queue number: 29","object": "fine-tune-event"},{"created_at": 1680617809,"level": "info","message": "Fine-tune is in the queue. Queue number: 28","object": "fine-tune-event"},{"created_at": 1680617918,"level": "info","message": "Fine-tune is in the queue. Queue number: 27","object": "fine-tune-event"},{"created_at": 1680617928,"level": "info","message": "Fine-tune is in the queue. Queue number: 26","object": "fine-tune-event"},{"created_at": 1680618038,"level": "info","message": "Fine-tune is in the queue. Queue number: 25","object": "fine-tune-event"},{"created_at": 1680618050,"level": "info","message": "Fine-tune is in the queue. Queue number: 24","object": "fine-tune-event"},{"created_at": 1680618087,"level": "info","message": "Fine-tune is in the queue. Queue number: 23","object": "fine-tune-event"},{"created_at": 1680618096,"level": "info","message": "Fine-tune is in the queue. Queue number: 22","object": "fine-tune-event"},{"created_at": 1680618207,"level": "info","message": "Fine-tune is in the queue. Queue number: 21","object": "fine-tune-event"},{"created_at": 1680618256,"level": "info","message": "Fine-tune is in the queue. Queue number: 20","object": "fine-tune-event"},{"created_at": 1680618268,"level": "info","message": "Fine-tune is in the queue. 
Queue number: 19","object": "fine-tune-event"},{"created_at": 1680618336,"level": "info","message": "Fine-tune is in the queue. Queue number: 18","object": "fine-tune-event"},{"created_at": 1680618372,"level": "info","message": "Fine-tune is in the queue. Queue number: 17","object": "fine-tune-event"},{"created_at": 1680618445,"level": "info","message": "Fine-tune is in the queue. Queue number: 16","object": "fine-tune-event"},{"created_at": 1680618488,"level": "info","message": "Fine-tune is in the queue. Queue number: 15","object": "fine-tune-event"},{"created_at": 1680618582,"level": "info","message": "Fine-tune is in the queue. Queue number: 14","object": "fine-tune-event"},{"created_at": 1680618612,"level": "info","message": "Fine-tune is in the queue. Queue number: 13","object": "fine-tune-event"},{"created_at": 1680618652,"level": "info","message": "Fine-tune is in the queue. Queue number: 12","object": "fine-tune-event"},{"created_at": 1680618693,"level": "info","message": "Fine-tune is in the queue. Queue number: 11","object": "fine-tune-event"},{"created_at": 1680618717,"level": "info","message": "Fine-tune is in the queue. Queue number: 10","object": "fine-tune-event"},{"created_at": 1680618759,"level": "info","message": "Fine-tune is in the queue. Queue number: 9","object": "fine-tune-event"},{"created_at": 1680618790,"level": "info","message": "Fine-tune is in the queue. Queue number: 8","object": "fine-tune-event"},{"created_at": 1680618841,"level": "info","message": "Fine-tune is in the queue. Queue number: 7","object": "fine-tune-event"},{"created_at": 1680618886,"level": "info","message": "Fine-tune is in the queue. Queue number: 6","object": "fine-tune-event"},{"created_at": 1680618899,"level": "info","message": "Fine-tune is in the queue. Queue number: 5","object": "fine-tune-event"},{"created_at": 1680618928,"level": "info","message": "Fine-tune is in the queue. 
Queue number: 4","object": "fine-tune-event"},{"created_at": 1680618979,"level": "info","message": "Fine-tune is in the queue. Queue number: 3","object": "fine-tune-event"},{"created_at": 1680619057,"level": "info","message": "Fine-tune is in the queue. Queue number: 2","object": "fine-tune-event"},{"created_at": 1680619065,"level": "info","message": "Fine-tune is in the queue. Queue number: 1","object": "fine-tune-event"},{"created_at": 1680619127,"level": "info","message": "Fine-tune is in the queue. Queue number: 0","object": "fine-tune-event"},{"created_at": 1680619227,"level": "info","message": "Fine-tune started","object": "fine-tune-event"},{"created_at": 1680619443,"level": "info","message": "Completed epoch 1/4","object": "fine-tune-event"},{"created_at": 1680619573,"level": "info","message": "Completed epoch 2/4","object": "fine-tune-event"},{"created_at": 1680619701,"level": "info","message": "Completed epoch 3/4","object": "fine-tune-event"},{"created_at": 1680619829,"level": "info","message": "Completed epoch 4/4","object": "fine-tune-event"},{"created_at": 1680619890,"level": "info","message": "Uploaded model: davinci:ft-personal-2023-04-04-14-51-29","object": "fine-tune-event"},{"created_at": 1680619891,"level": "info","message": "Uploaded result file: file-xvIjsDl5aFEtXWOVY6nZyLcD","object": "fine-tune-event"},{"created_at": 1680619891,"level": "info","message": "Fine-tune succeeded","object": "fine-tune-event"}],"fine_tuned_model": "davinci:ft-personal-2023-04-04-14-51-29","hyperparams": {"batch_size": 1,"classification_n_classes": 14,"compute_classification_metrics": true,"learning_rate_multiplier": 0.1,"n_epochs": 4,"prompt_loss_weight": 0.01},"id": "ft-QOkrWkHU0aleR6f5IQw1UpVL","model": "davinci","object": "fine-tune","organization_id": "org-bKXddeZffpMS2CUNCCXsW7m5","result_files": [{"bytes": 82416,"created_at": 1680619891,"filename": "compiled_results.csv","id": "file-xvIjsDl5aFEtXWOVY6nZyLcD","object": "file","purpose": 
"fine-tune-results","status": "processed","status_details": null}],"status": "succeeded","training_files": [{"bytes": 41212,"created_at": 1680614191,"filename": "./dataset/tnews-finetuning_prepared_train.jsonl","id": "file-DKjBKHqWFJwo7O8MNZGOcj3F","object": "file","purpose": "fine-tune","status": "processed","status_details": null}],"updated_at": 1680619892,"validation_files": [{"bytes": 10507,"created_at": 1680614193,"filename": "./dataset/tnews-finetuning_prepared_valid.jsonl","id": "file-j088k3GWqGeqY0o2DDAfWfPh","object": "file","purpose": "fine-tune","status": "processed","status_details": null}]}
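Rather than re-running `get` by hand, the check can be scripted. A minimal polling sketch, assuming `get_status` is a callable you supply that returns the job's `status` string (for example, by running `openai api fine_tunes.get -i <id>` through `subprocess` and parsing the JSON printed above). The set of terminal states is an assumption: the log only shows `pending` and `succeeded`.

```python
import time
from typing import Callable

# Assumed terminal states of a legacy fine-tune job.
TERMINAL_STATES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tune(get_status: Callable[[], str], poll_seconds: float = 60.0,
                       max_polls: int = 180, sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll `get_status` until the job reaches a terminal state, then return that state."""
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        sleep(poll_seconds)
    raise TimeoutError("fine-tune did not reach a terminal state within the polling budget")


# Offline demo: a fake status source instead of a real API call.
statuses = iter(["pending", "pending", "running", "succeeded"])
print(wait_for_fine_tune(lambda: next(statuses), sleep=lambda s: None))  # succeeded
```

The defaults (180 polls, one per minute) allow about three hours, comfortably above the roughly 95 minutes this run took.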

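After a long wait, it finally succeeded: about 95 minutes in total here, of which roughly 73 minutes were spent in the queue and about 10 minutes on the four training epochs (the log also reports a cost of $2.33). Once fine-tuning has finished, we can view the results with the following command:

# -i takes the fine-tune job ID (the `id` field above, starting with ft-), not the fine_tuned_model name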

!openai api fine_tunes.results -i ft-QOkrWkHU0aleR6f5IQw1UpVL > metric.csv

metric = pd.read_csv('metric.csv')

metric[metric['classification/accuracy'].notnull()].tail(1)

|      | step | elapsed_tokens | elapsed_examples | training_loss | training_sequence_accuracy | training_token_accuracy | validation_loss | validation_sequence_accuracy | validation_token_accuracy | classification/accuracy | classification/weighted_f1_score |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1601 | 1602 | 83226 | 1602 | 0.008739 | 1.0 | 1.0 | NaN | NaN | NaN | 0.63 | 0.619592 |

metric[metric['classification/accuracy'].notnull()]['classification/accuracy'].plot()

<AxesSubplot:>

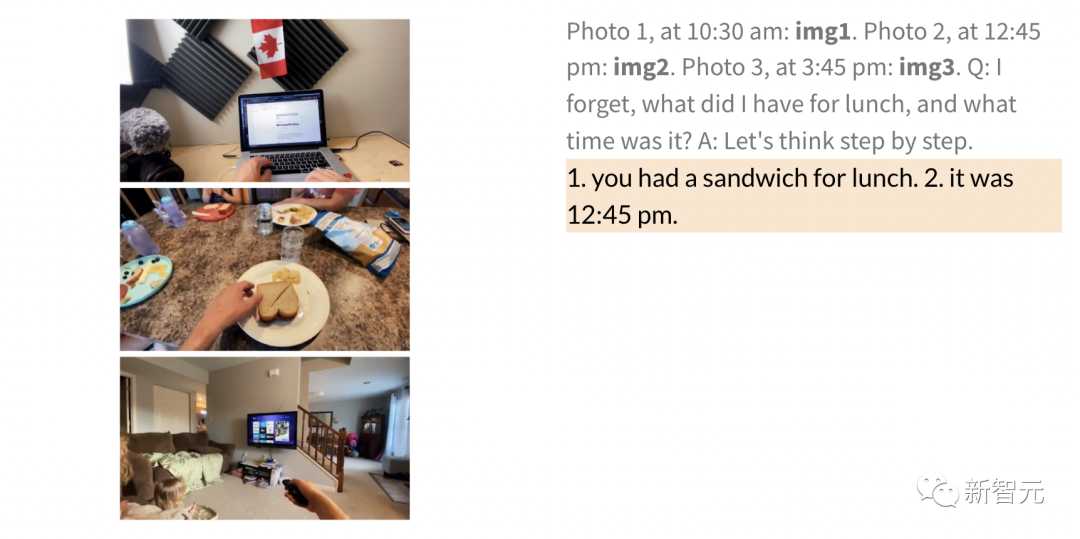


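This accuracy (0.63, with a weighted F1 of about 0.62) is quite mediocre, most likely because we gave it so little training data. Next, let's see how to use the model.

Step 3: Use the model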
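To put 0.63 in perspective, a trivial baseline always predicts the most frequent class. Using the training-set counts printed by `value_counts()` earlier as a rough proxy for the validation split (an assumption; prepare_data carved the validation set out of these 500 examples), that baseline scores 48/500 = 0.096, so the fine-tuned model is clearly learning something even though 0.63 is far from good.

```python
from collections import Counter

# Training-set label counts printed by train.label_desc.value_counts() above.
train_counts = {
    "news_finance": 48, "news_tech": 47, "news_game": 46, "news_entertainment": 46,
    "news_travel": 44, "news_sports": 42, "news_military": 40, "news_world": 38,
    "news_car": 36, "news_culture": 35, "news_edu": 27, "news_agriculture": 20,
    "news_house": 19, "news_story": 12,
}


def majority_baseline(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)


labels = [label for label, n in train_counts.items() for _ in range(n)]
print(len(labels), majority_baseline(labels))  # 500 0.096
```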

prompt = get_prompt(lines[2]["sentence"])

print(prompt)

对给定文本进行分类,类别包括:科技、金融、娱乐、世界、汽车、文化、军事、旅游、游戏、教育、农业、房产、社会、股票。给定文本:

出栏一头猪亏损300元,究竟谁能笑到最后!

类别:

```python
def complete(prompt, model, max_tokens=2):
    # Same Completion call as before, but the model and max_tokens are now parameters
    response = openai.Completion.create(
        prompt=prompt, temperature=0, max_tokens=max_tokens, top_p=1,
        frequency_penalty=0, presence_penalty=0, model=model)
    return response["choices"][0]["text"].strip(" \n")
```

# The original model, before fine-tuning

complete(prompt, "text-davinci-003", 5)

'社会'

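Note that our prompt also has to change. We now expect a single English word back; recall the completions in the training data. To make the point clear, we deliberately used Chinese labels in the earlier prompt and English labels for fine-tuning, so that getting an English label back shows the fine-tuning took effect. In real use, make sure the labels are consistent. The prompt must also end with the ` ->` separator that prepare_data appended during training.

We switch the model to the one we just fine-tuned (the `fine_tuned_model` field in the result above).

# After fine-tuning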

prompt = lines[2]["sentence"] + " ->"

complete(prompt, "davinci:ft-personal-2023-04-04-14-51-29", 1)

'agriculture'

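Interesting: it now says agriculture, the same as ChatGPT (ChatCompletion) earlier, but still not finance, the label in our dataset. This sentence does read more like an agriculture topic, so filing it under agriculture seems reasonable. Moreover, this example is not in our training set (we trained only on the last 500 examples, and this is `lines[2]`), so this isn't much of a problem.

If we really want it to say finance, we could add this example to the fine-tuning data as well; after fine-tuning again, the model would likely return the label given in our training set.

Above we covered fine-tuning for topic classification. Fine-tuning for entity extraction works similarly; the recommended input format is: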
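The preprocessing the fine-tuned model expects at inference time can be wrapped so it cannot drift from what was done at training time. A minimal sketch; `to_finetuned_prompt` and `completion_to_label` are hypothetical helpers. Note that the call above, `lines[2]["sentence"] + " ->"`, skips lowercasing, which makes no difference for this all-Chinese sentence but would for text containing Latin letters (such as the "cf" in the training data).

```python
SEPARATOR = " ->"  # must match the suffix added by prepare_data at training time


def to_finetuned_prompt(sentence):
    """Apply the training-time prompt preprocessing: lowercase, then append the separator."""
    return sentence.lower() + SEPARATOR


def completion_to_label(completion, prefix="news_"):
    """Turn a completion such as ' agriculture' back into the dataset label."""
    return prefix + completion.strip()


prompt = to_finetuned_prompt("出栏一头猪亏损300元,究竟谁能笑到最后!")
print(repr(prompt))
print(completion_to_label(" agriculture"))  # news_agriculture
```

In use this would look like `completion_to_label(complete(to_finetuned_prompt(s), "davinci:ft-personal-2023-04-04-14-51-29", 1))`.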

{"prompt":"<any text, for example news article>\n\n###\n\n", "completion":" <list of entities, separated by a newline> END"}

For example:

{"prompt":"Portugal will be removed from the UK's green travel list from Tuesday, amid rising coronavirus cases and concern over a \"Nepal mutation of the so-called Indian variant\". It will join the amber list, meaning holidaymakers should not visit and returnees must isolate for 10 days...\n\n###\n\n", "completion":" Portugal\nUK\nNepal mutation\nIndian variant END"}

This should be easy to follow, so try it yourself. In particular, fine-tune on entities from a specialized domain and compare the results before and after fine-tuning.

If you're interested in this topic, see 【11】 and 【12】 under Related Literature for further reading.
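A minimal sketch of building and parsing records in the recommended entity-extraction format above; `entity_record` and `parse_entities` are hypothetical helpers. At inference time you would typically pass `stop=[" END"]` to `openai.Completion.create` so the completion ends before the terminator; the parser also tolerates a trailing `END` in case it is present.

```python
SEP = "\n\n###\n\n"   # prompt separator from the recommended format
END = " END"          # completion terminator from the same format


def entity_record(text, entities):
    """Build one prompt/completion pair: newline-separated entities, then ' END'."""
    return {"prompt": text + SEP, "completion": " " + "\n".join(entities) + END}


def parse_entities(completion):
    """Split a completion into entities, dropping a trailing 'END' if it is there."""
    body = completion.strip()
    if body.endswith("END"):
        body = body[: -len("END")]
    return [line.strip() for line in body.split("\n") if line.strip()]


rec = entity_record("Portugal will be removed from the UK's green travel list...", ["Portugal", "UK"])
print(rec["completion"])
```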

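Other NLU Applications in Practice

- Latest ChatGPT GPT-4 NLU in Practice: ChatPDF-Style Document Question Answering

- Latest ChatGPT GPT-4 NLU in Practice: Intelligent Multi-Turn Dialogue Chatbot

Related Literature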

- 【1】GPT3 and Its In-Context Learning | Yam

- 【2】ChatGPT Prompt Engineering: Design, Practice and Reflections | Yam

- 【3】Some ChatGPT Prompt Examples | Yam

- 【4】dair-ai/Prompt-Engineering-Guide: 🐙 Guides, papers, lecture, notebooks and resources for prompt engineering

- 【5】Best practices for prompt engineering with OpenAI API | OpenAI Help Center

- 【6】ChatGPT Prompts and Products | PromptVine

- 【7】Prompt Vibes

- 【8】ShareGPT: Share your wildest ChatGPT conversations with one click.

- 【9】Awesome ChatGPT Prompts | This repo includes ChatGPT prompt curation to use ChatGPT better.

- 【10】Learn Prompting | Learn Prompting

- 【11】Fine-tuning - OpenAI API

- 【12】[PUBLIC] Best practices for fine-tuning GPT-3 to classify text - Google Docs

- 【13】hscspring/chatbot: Lab for Chatbot

memo:

- openai-cookbook/text_explanation_examples.md at main · openai/openai-cookbook

- openai-cookbook/Fine-tuned_classification.ipynb at main · openai/openai-cookbook

- openai-cookbook/Gen_QA.ipynb at main · openai/openai-cookbook

- openai-cookbook/Question_answering_using_embeddings.ipynb at main · openai/openai-cookbook


References

ChatGPT Usage Guide: Sentence and Word Classification @长琴


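Other Downloads

If you'd like to learn more about AI learning paths and the overall body of knowledge, see my other blog post 《重磅 | 完备的人工智能AI 学习——基础知识学习路线,所有资料免关注免套路直接网盘下载》 ("Major Release | A Complete AI Learning Path: Fundamentals, with All Materials Available for Direct Cloud-Drive Download").

That post draws on nearly 100 GB of related material from well-known GitHub open-source projects, AI technology platforms and domain experts, including Datawhale, ApacheCN, AI有道 and Dr. Huang Haiguang (黄海广). I hope it helps everyone.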