1. Edit /usr/local/hadoop/etc/hadoop/core-site.xml and /usr/local/hadoop/etc/hadoop/hdfs-site.xml

Contents of core-site.xml:

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

Contents of hdfs-site.xml:

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/hadoop/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/hadoop/tmp/dfs/data</value>
  </property>
</configuration>
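To double-check that the two files say what you expect, a small helper can pull a single property value out of a Hadoop-style XML file. This is only a sketch: `get_prop` is a hypothetical name, not part of Hadoop, and it assumes each `<name>` appears before its `<value>` inside the same `<property>`, as in the files above.

```shell
# get_prop FILE NAME: print the <value> of the property called NAME.
# Hypothetical helper for illustration; not a Hadoop tool.
get_prop() {
    # Flatten the file to one line, then start a new line at every "<"
    # so that each tag (and the text after it) sits on its own line.
    tr -d '\n' < "$1" | tr '<' '\n' | awk -v want="name>$2" '
        $0 == want                   { hit = 1; next }                 # found <name>NAME
        hit && !shown && /^value>/   { print substr($0, 7); shown = 1 } # text after "value>"
        /^\/property>/               { hit = 0 }                       # left that property
    '
}
```

For example, `get_prop /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS` reads the value configured above. Hadoop also ships `hdfs getconf -confKey fs.defaultFS`, which reports the value Hadoop actually resolves and is the more reliable check once the installation works.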

2. Format the NameNode

cd /usr/local/hadoop

./bin/hdfs namenode -format

3. Start Hadoop

cd /usr/local/hadoop

./sbin/start-dfs.sh

4. Check whether startup succeeded

jps
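With this setup, `start-dfs.sh` normally brings up three daemons: NameNode, DataNode, and SecondaryNameNode. Rather than reading the `jps` list by eye, the check can be scripted. The sketch below uses a hypothetical helper, `check_daemons`, which reads `jps` output on standard input and assumes the usual `<pid> <class name>` line format.

```shell
# check_daemons: read `jps` output on stdin and report each HDFS daemon.
# Returns non-zero if any of the three expected daemons is missing.
check_daemons() {
    jps_output=$(cat)
    missing=0
    for daemon in NameNode DataNode SecondaryNameNode; do
        # Compare the whole second field so that "NameNode" does not
        # accidentally match "SecondaryNameNode".
        if printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'
        then
            echo "$daemon: running"
        else
            echo "$daemon: NOT running"
            missing=1
        fi
    done
    return "$missing"
}
```

Run it as `jps | check_daemons`; a non-zero exit status means at least one daemon did not start.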

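Stop Hadoop. To start over from a clean state, also delete the tmp directory (this erases everything stored in HDFS), then reformat and restart: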

./sbin/stop-dfs.sh

rm -r ./tmp

# Reformat the NameNode

./bin/hdfs namenode -format

# Restart

./sbin/start-dfs.sh

5. View HDFS information in the web UI

Visit: http://localhost:9870

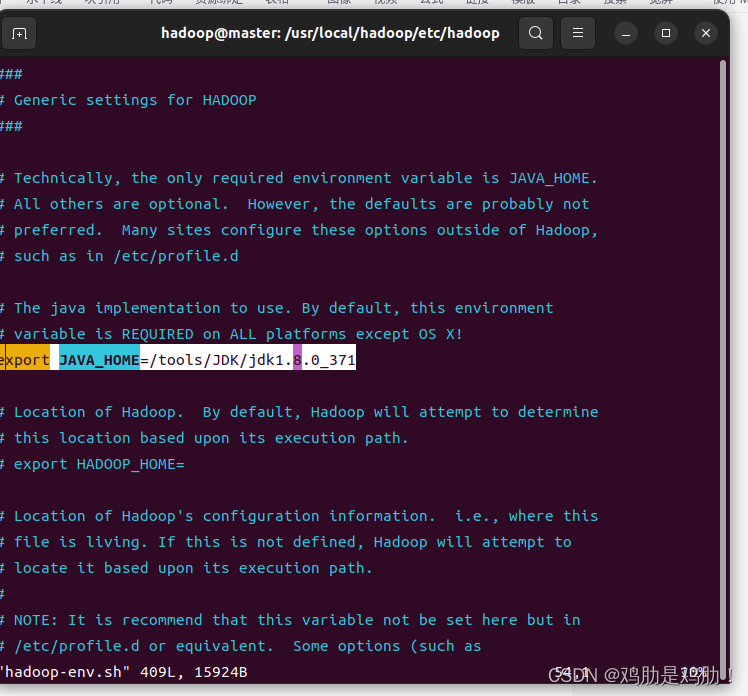

Possible error: if startup fails with a JDK-related error, edit /usr/local/hadoop/etc/hadoop/hadoop-env.sh and add the path to your JDK:

export JAVA_HOME=/tools/JDK/jdk1.8.0_371
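The path above is specific to one machine, so it is worth confirming that your own value points at a real Java installation before restarting. A minimal sketch, assuming the only requirement is an executable `bin/java` under that directory (`check_java_home` is a hypothetical helper, not something Hadoop provides):

```shell
# check_java_home DIR: succeed only if DIR contains an executable bin/java.
check_java_home() {
    dir=$1
    if [ -z "$dir" ]; then
        echo "JAVA_HOME is empty" >&2
        return 1
    fi
    if [ ! -x "$dir/bin/java" ]; then
        echo "no executable found at $dir/bin/java" >&2
        return 1
    fi
    echo "JAVA_HOME looks valid: $dir"
}
```

Call it with the same path you put in hadoop-env.sh, for example `check_java_home /tools/JDK/jdk1.8.0_371`.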
