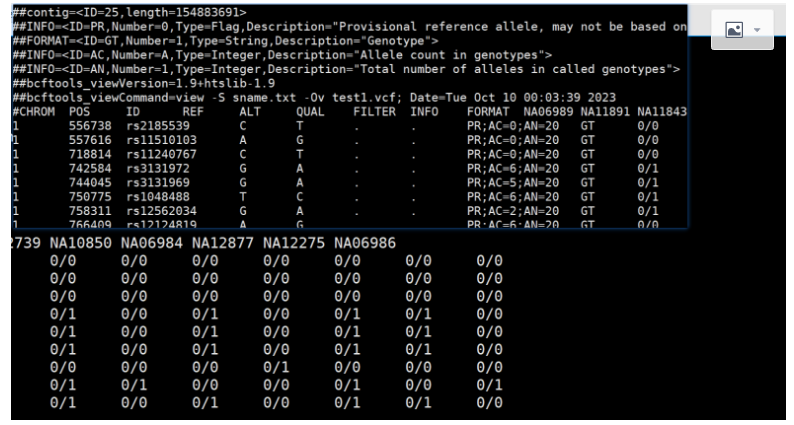

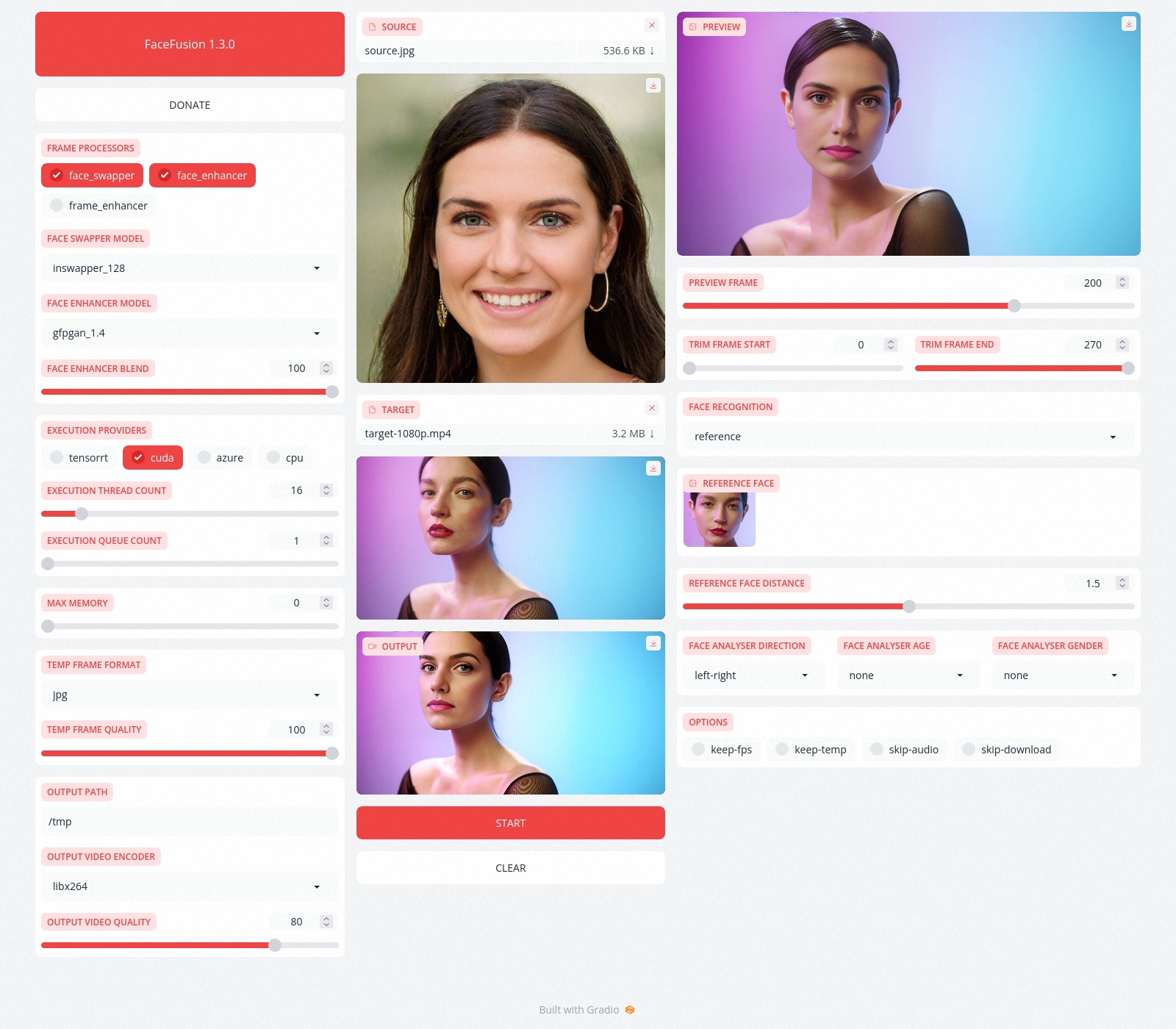

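FaceFusion: explore limitless creativity and create one-of-a-kind face fusion art!

FaceFusion uses advanced image processing techniques to let users blend different facial features together, producing fun and impressive effects. Potential applications include entertainment, virtual makeup, and artistic creation, giving users a creative tool.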

1. Effect preview

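2. Installation

Note that installation requires technical skills and is not suitable for beginners. Please do not open platform- or installation-related issues on GitHub. There is a very helpful Discord community that will guide you through installing FaceFusion.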

Read the installation now.

2.1 Usage guide

Run the command:

    python run.py [options]

    options:
      -h, --help
            show this help message and exit
      -s SOURCE_PATH, --source SOURCE_PATH
            select a source image
      -t TARGET_PATH, --target TARGET_PATH
            select a target image or video
      -o OUTPUT_PATH, --output OUTPUT_PATH
            specify the output file or directory
      -v, --version
            show program's version number and exit

    misc:
      --skip-download
            omit automate downloads and lookups
      --headless
            run the program in headless mode

    execution:
      --execution-providers {cpu} [{cpu} ...]
            choose from the available execution providers (choices: cpu, ...)
      --execution-thread-count EXECUTION_THREAD_COUNT
            specify the number of execution threads
      --execution-queue-count EXECUTION_QUEUE_COUNT
            specify the number of execution queries
      --max-memory MAX_MEMORY
            specify the maximum amount of ram to be used (in gb)

    face recognition:
      --face-recognition {reference,many}
            specify the method for face recognition
      --face-analyser-direction {left-right,right-left,top-bottom,bottom-top,small-large,large-small}
            specify the direction used for face analysis
      --face-analyser-age {child,teen,adult,senior}
            specify the age used for face analysis
      --face-analyser-gender {male,female}
            specify the gender used for face analysis
      --reference-face-position REFERENCE_FACE_POSITION
            specify the position of the reference face
      --reference-face-distance REFERENCE_FACE_DISTANCE
            specify the distance between the reference face and the target face
      --reference-frame-number REFERENCE_FRAME_NUMBER
            specify the number of the reference frame

    frame extraction:
      --trim-frame-start TRIM_FRAME_START
            specify the start frame for extraction
      --trim-frame-end TRIM_FRAME_END
            specify the end frame for extraction
      --temp-frame-format {jpg,png}
            specify the image format used for frame extraction
      --temp-frame-quality [0-100]
            specify the image quality used for frame extraction
      --keep-temp
            retain temporary frames after processing

    output creation:
      --output-image-quality [0-100]
            specify the quality used for the output image
      --output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc}
            specify the encoder used for the output video
      --output-video-quality [0-100]
            specify the quality used for the output video
      --keep-fps
            preserve the frames per second (fps) of the target
      --skip-audio
            omit audio from the target

    frame processors:
      --frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...]
            choose from the available frame processors (choices: face_enhancer, face_swapper, frame_enhancer, ...)
      --face-enhancer-model {codeformer,gfpgan_1.2,gfpgan_1.3,gfpgan_1.4,gpen_bfr_512}
            choose from the mode for the frame processor
      --face-enhancer-blend [0-100]
            specify the blend factor for the frame processor
      --face-swapper-model {inswapper_128,inswapper_128_fp16}
            choose from the mode for the frame processor
      --frame-enhancer-model {realesrgan_x2plus,realesrgan_x4plus,realesrnet_x4plus}
            choose from the mode for the frame processor
      --frame-enhancer-blend [0-100]
            specify the blend factor for the frame processor

    uis:
      --ui-layouts UI_LAYOUTS [UI_LAYOUTS ...]
            choose from the available ui layouts (choices: benchmark, webcam, default, ...)
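
For batch jobs it can help to assemble the command line programmatically rather than typing it by hand. The following is a minimal sketch that uses only flags listed in the help text above. The helper name `build_facefusion_command`, its parameters, and the file names `source.jpg`, `target.mp4`, and `output.mp4` are illustrative assumptions and not part of FaceFusion. The sketch only builds and prints the argument list; it does not run FaceFusion.

```python
import shlex


def build_facefusion_command(source, target, output,
                             frame_processors=("face_swapper",),
                             execution_providers=("cpu",),
                             headless=True, keep_fps=False,
                             output_video_quality=None):
    """Build an argument list for `python run.py` (hypothetical helper)."""
    # The help text documents a 0-100 range for --output-video-quality.
    if output_video_quality is not None and not 0 <= output_video_quality <= 100:
        raise ValueError("output_video_quality must be between 0 and 100")

    # Source, target and output paths use the short flags from the help text.
    command = ["python", "run.py", "-s", source, "-t", target, "-o", output]
    # Both flags accept one or more values separated by spaces.
    command += ["--frame-processors", *frame_processors]
    command += ["--execution-providers", *execution_providers]
    if headless:
        command.append("--headless")
    if keep_fps:
        command.append("--keep-fps")
    if output_video_quality is not None:
        command += ["--output-video-quality", str(output_video_quality)]
    return command


if __name__ == "__main__":
    cmd = build_facefusion_command(
        "source.jpg", "target.mp4", "output.mp4",  # placeholder file names
        frame_processors=("face_swapper", "face_enhancer"),
        keep_fps=True, output_video_quality=80,
    )
    # Print a shell-quoted form that can be pasted into a terminal.
    print(shlex.join(cmd))
```

Passing the returned list to `subprocess.run(cmd, check=True)` from inside the FaceFusion checkout would launch the job; building a list instead of a single string avoids shell-quoting problems with paths that contain spaces.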

3. Related documentation

Read the documentation for a deep dive.
