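Table of Contents

- I. Dataset Processing

- II. Defining the Model

- Training and Plotting

- III. Probability of Good / Probability of Bad

- IV. Generating the Report

- V. Behavior Scorecard Model Performance

- Summary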

I. Dataset Processing

import math
import random
import time

import lightgbm as lgb
import numpy as np
import pandas as pd
from sklearn import metrics
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score,roc_curve,auc
from sklearn.model_selection import train_test_split

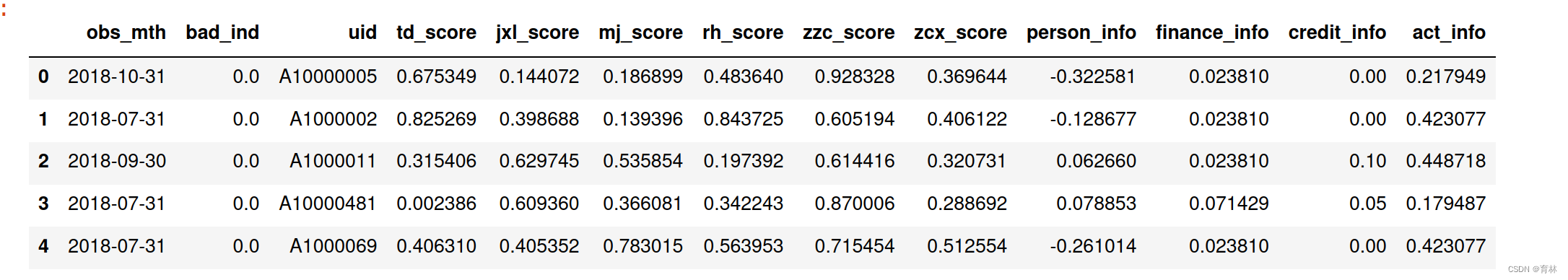

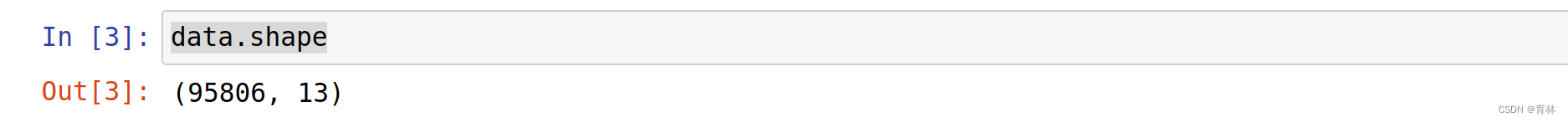

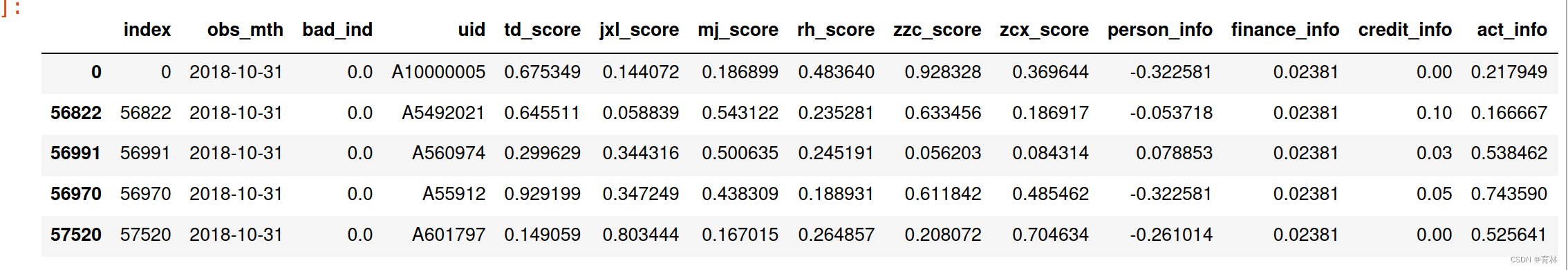

data = pd.read_csv('Bcard.txt')
data.head()

# Check the month distribution; the last month is used as the out-of-time validation set

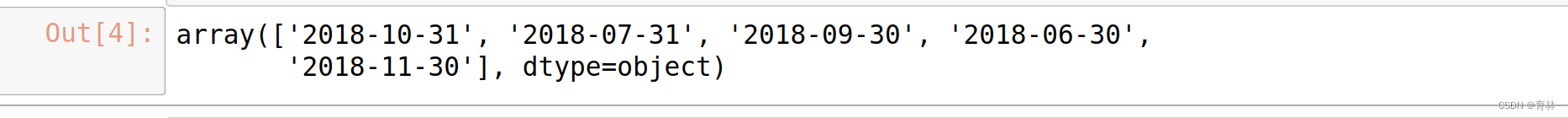

data.obs_mth.unique()

df_train = data[data.obs_mth != '2018-11-30'].reset_index().copy()
val = data[data.obs_mth == '2018-11-30'].reset_index().copy()

# These are all of our variables: those ending in "info" are individual-behavior outputs of our
# in-house unsupervised system; those ending in "score" are paid external credit-bureau data
lst = ['person_info','finance_info','credit_info','act_info','td_score','jxl_score','mj_score','rh_score']
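The hold-out month above is hard-coded as '2018-11-30'. As a minimal sketch, the same split can be derived from the data by taking the latest value of `obs_mth`. The helper name `split_out_of_time` is hypothetical, and the sketch assumes that `obs_mth` holds ISO-formatted date strings (as in '2018-11-30'), so that the maximum string is also the latest month. Unlike the code above, it uses `reset_index(drop=True)` and therefore does not keep the old index as a column.

```python
import pandas as pd

def split_out_of_time(df, month_col="obs_mth"):
    """Hold out the most recent month as an out-of-time validation set.

    Assumes month_col contains ISO-formatted date strings, so max() is the latest month.
    """
    last_month = df[month_col].max()
    train = df[df[month_col] != last_month].reset_index(drop=True)
    holdout = df[df[month_col] == last_month].reset_index(drop=True)
    return train, holdout, last_month
```

With the data set used here, `split_out_of_time(data)` would be expected to reproduce the `df_train` / `val` split, provided '2018-11-30' really is the latest month in `obs_mth`.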

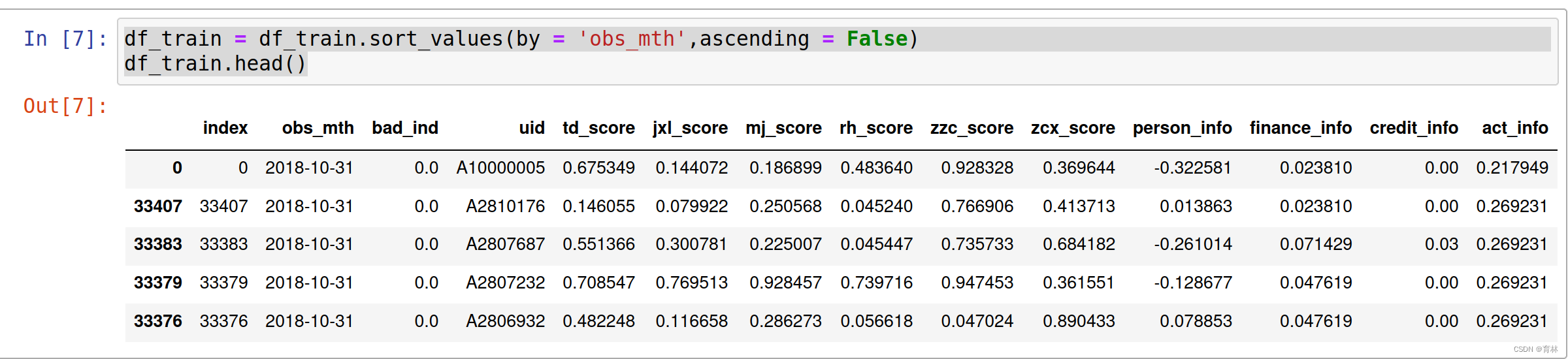

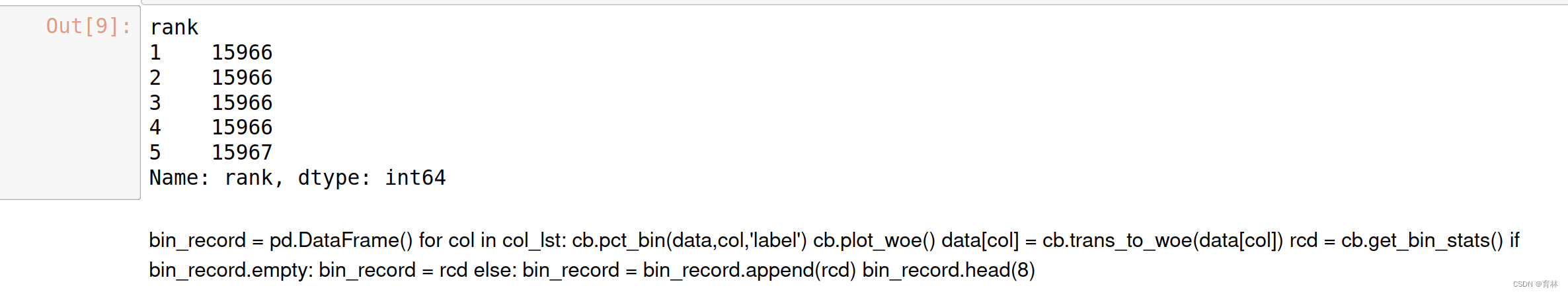

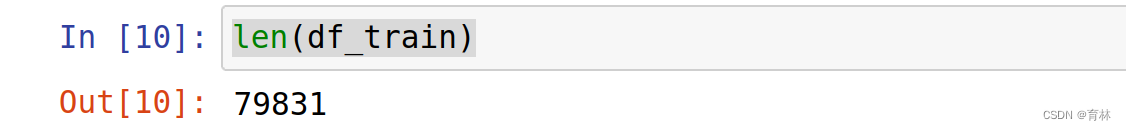

df_train = df_train.sort_values(by = 'obs_mth',ascending = False)

rank_lst = []
for i in range(1,len(df_train)+1):
    rank_lst.append(i)

df_train['rank'] = rank_lst

df_train['rank'] = df_train['rank']/len(df_train)

pct_lst = []
for x in df_train['rank']:
    if x <= 0.2:
        x = 1
    elif x <= 0.4:
        x = 2
    elif x <= 0.6:
        x = 3
    elif x <= 0.8:
        x = 4
    else:
        x = 5
    pct_lst.append(x)

df_train['rank'] = pct_lst
#train = train.drop('obs_mth',axis = 1)
df_train.head()

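1. `sort_values()` sorts `df_train` by the `obs_mth` column in descending order, so observations from the most recent months come first.

2. `rank_lst` is built as the list of integers from 1 to `len(df_train)`, one position number for each row.

3. `rank_lst` is assigned directly to the `rank` column of `df_train` (a plain column assignment, not a list comprehension), so `rank` holds each row's position in the sorted DataFrame.

4. The `rank` column is divided by `len(df_train)`, which turns each position into a fraction in (0, 1] of the way through the sorted DataFrame.

5. A new list, `pct_lst`, is created to hold one bucket label per row.

6. A `for` loop maps each fraction to a bucket from 1 to 5 in steps of 0.2 (at most 0.2 gives 1, at most 0.4 gives 2, and so on), and `pct_lst` then overwrites the `rank` column. Each row therefore ends up with a fold label from 1 to 5, with fold 1 containing the most recent 20% of rows.

7. The line that would drop the `obs_mth` column is commented out (and refers to `train` rather than `df_train`), so the column remains in `df_train`.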
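The steps above can also be written without explicit Python loops. The following is a minimal sketch rather than the author's code: the function name `assign_rank_buckets` and its parameters are hypothetical, and it uses integer arithmetic (the ceiling of position × 5 / n) in place of the floating-point comparisons against 0.2, 0.4, 0.6 and 0.8.

```python
import numpy as np
import pandas as pd

def assign_rank_buckets(df, sort_col="obs_mth", n_buckets=5):
    """Sort newest-first and label rows with equal-sized buckets 1..n_buckets."""
    out = df.sort_values(by=sort_col, ascending=False).copy()
    n = len(out)
    positions = np.arange(1, n + 1)  # 1-based position after sorting
    # Integer ceiling of positions * n_buckets / n, so position/n <= k/n_buckets maps to bucket k
    out["rank"] = (positions * n_buckets + n - 1) // n
    return out
```

Rows that share the same `obs_mth` value may land in different buckets, exactly as in the loop version, because the bucket depends only on the position after sorting.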

df_train['rank'].groupby(df_train['rank']).count()

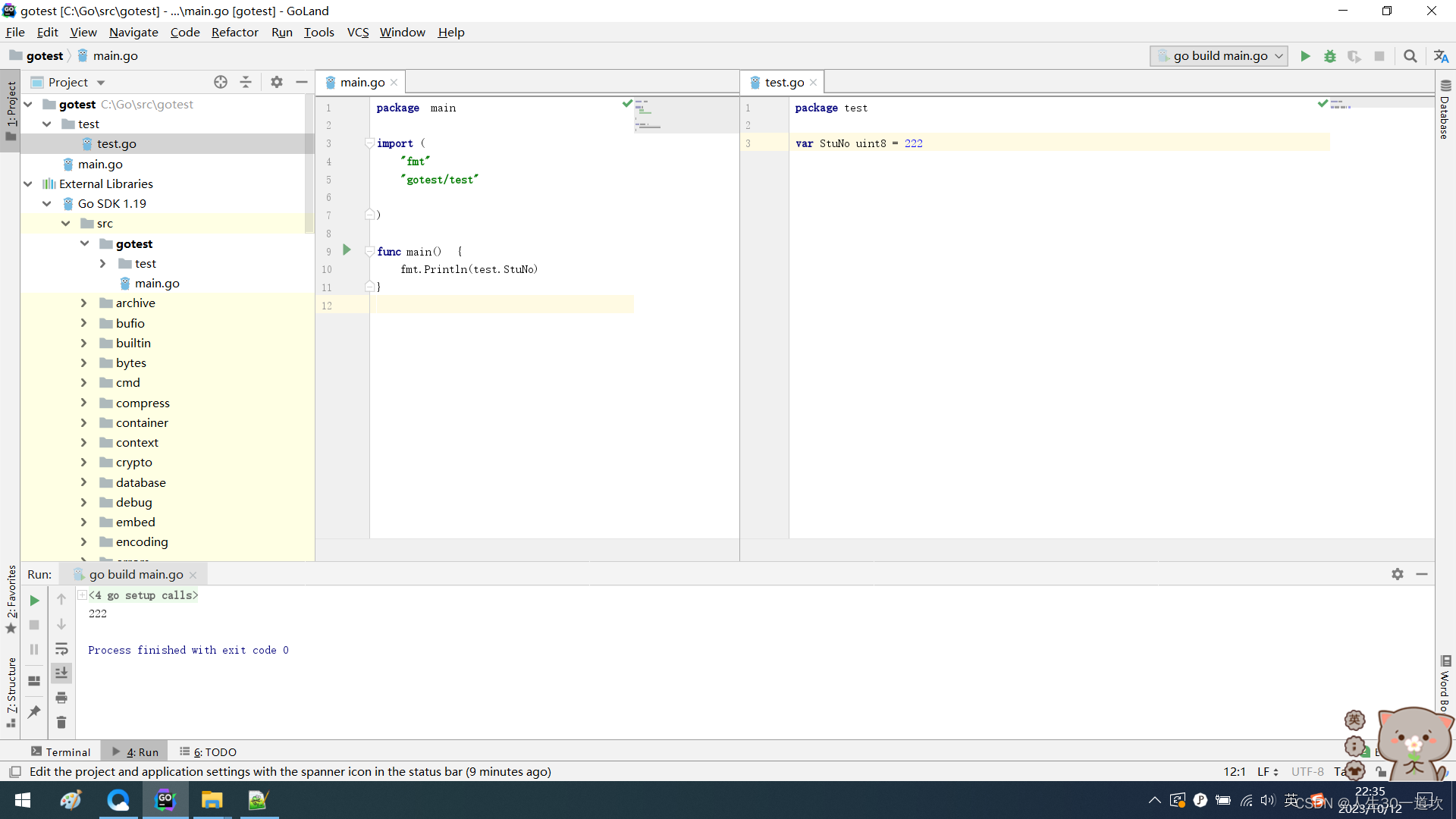

II. Defining the Model

# Define the LightGBM training function

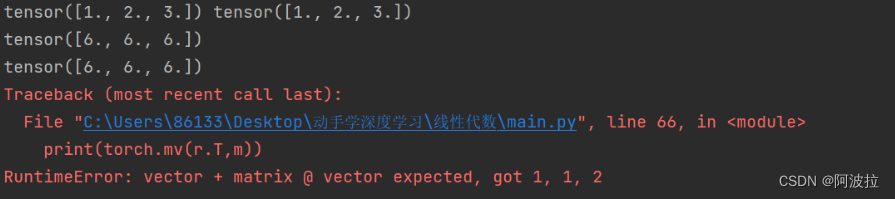

def LGB_test(train_x,train_y,test_x,test_y):
    from multiprocessing import cpu_count
    clf = lgb.LGBMClassifier(
        boosting_type='gbdt', num_leaves=31, reg_alpha=0.0, reg_lambda=1,
        max_depth=2, n_estimators=800,
        max_features = 140,  # not a documented LightGBM parameter; colsample_bytree controls feature subsampling
        objective='binary',
        subsample=0.7, colsample_bytree=0.7, subsample_freq=1,
        learning_rate=0.05, min_child_weight=50, random_state=None, n_jobs=cpu_count()-1,
        num_iterations = 800  # number of boosting iterations (a LightGBM alias of n_estimators)
    )
    # Track AUC on both the training set ('training') and the test set ('valid_1')
    clf.fit(train_x, train_y, eval_set=[(train_x, train_y),(test_x,test_y)], eval_metric='auc')
    print(clf.n_features_)

    return clf, clf.best_score_['valid_1']['auc']

feature_lst = {}

ks_train_lst = []

ks_test_lst = []

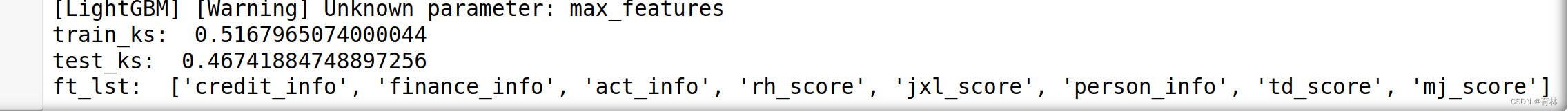

for rk in set(df_train['rank']):
    # Test set: data after 8.18 is used as the out-of-time validation set

    # Define the training and test sets for this fold
    ttest = df_train[df_train['rank'] == rk]
    ttrain = df_train[df_train['rank'] != rk]

    train = ttrain[lst]
    train_y = ttrain.bad_ind

    test = ttest[lst]
    test_y = ttest.bad_ind

    start = time.time()
    model,auc = LGB_test(train,train_y,test,test_y)
    end = time.time()

    # Store the feature importances in `feature`
    feature = pd.DataFrame(
        {'name' : model.booster_.feature_name(),
         'importance' : model.feature_importances_
        }).sort_values(by = ['importance'],ascending = False)

    # Compute KS and AUC on the training and test sets
    y_pred_train_lgb = model.predict_proba(train)[:, 1]
    y_pred_test_lgb = model.predict_proba(test)[:, 1]

    train_fpr_lgb, train_tpr_lgb, _ = roc_curve(train_y, y_pred_train_lgb)
    test_fpr_lgb, test_tpr_lgb, _ = roc_curve(test_y, y_pred_test_lgb)

    train_ks = abs(train_fpr_lgb - train_tpr_lgb).max()
    test_ks = abs(test_fpr_lgb - test_tpr_lgb).max()

    train_auc = metrics.auc(train_fpr_lgb, train_tpr_lgb)
    test_auc = metrics.auc(test_fpr_lgb, test_tpr_lgb)

    ks_train_lst.append(train_ks)
    ks_test_lst.append(test_ks)

    # Keep the features whose importance is at least 20 in this fold
    feature_lst[str(rk)] = feature[feature.importance>=20].name

train_ks = np.mean(ks_train_lst)
test_ks = np.mean(ks_test_lst)

ft_lst = {}
for i in range(1,6):
    ft_lst[str(i)] = feature_lst[str(i)]

fn_lst=list(set(ft_lst['1']) & set(ft_lst['2']) & set(ft_lst['3']) & set(ft_lst['4']) &set(ft_lst['5']))

print('train_ks: ',train_ks)
print('test_ks: ',test_ks)

print('ft_lst: ',fn_lst )

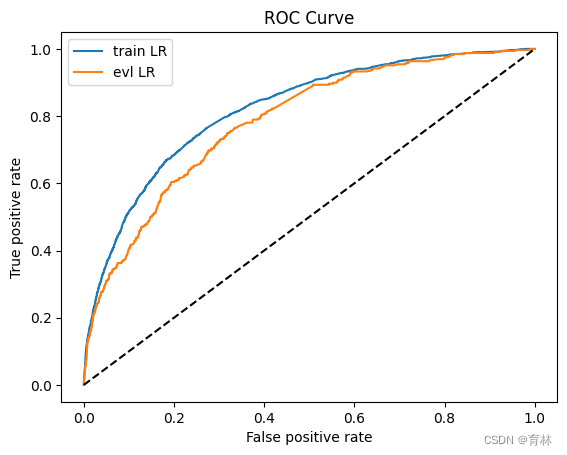
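The KS values above are computed as the largest gap between the true-positive and false-positive rates returned by `roc_curve`. As a minimal sketch of what that expression measures, the same statistic can be computed with NumPy alone: rank the samples by predicted probability and take the largest gap between the cumulative share of bads and the cumulative share of goods. The helper name `ks_statistic` is hypothetical, and the sketch assumes binary 0/1 labels with at least one sample of each class.

```python
import numpy as np

def ks_statistic(y_true, y_score):
    """Largest gap between the cumulative bad share and the cumulative good share.

    Assumes y_true contains 0/1 labels with at least one sample of each class.
    """
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score, dtype=float)

    # Rank from the highest predicted probability to the lowest
    order = np.argsort(-y_score, kind="mergesort")
    y_sorted = y_true[order]
    s_sorted = y_score[order]

    n_bad = y_sorted.sum()
    n_good = len(y_sorted) - n_bad
    cum_bad = np.cumsum(y_sorted) / n_bad        # true-positive rate at each cut-off
    cum_good = np.cumsum(1 - y_sorted) / n_good  # false-positive rate at each cut-off

    # Tied scores cannot be separated by a threshold: evaluate only after each tie group
    last_of_tie = np.r_[s_sorted[1:] != s_sorted[:-1], True]
    return float(np.max(np.abs(cum_bad - cum_good)[last_of_tie]))
```

On the same labels and scores, this should agree with `abs(fpr - tpr).max()` from `roc_curve`, since both evaluate the gap at every distinct score threshold.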

Training and Plotting

lst = ['person_info','finance_info','credit_info','act_info']

train = data[data.obs_mth != '2018-11-30'].reset_index().copy()
evl = data[data.obs_mth == '2018-11-30'].reset_index().copy()

x = train[lst]
y = train['bad_ind']

evl_x = evl[lst]
evl_y = evl['bad_ind']

model,auc = LGB_test(x,y,evl_x,evl_y)

y_pred = model.predict_proba(x)[:,1]
fpr_lgb_train,tpr_lgb_train,_ = roc_curve(y,y_pred)
train_ks = abs(fpr_lgb_train - tpr_lgb_train).max()
print('train_ks : ',train_ks)

y_pred = model.predict_proba(evl_x)[:,1]
fpr_lgb,tpr_lgb,_ = roc_curve(evl_y,y_pred)
evl_ks = abs(fpr_lgb - tpr_lgb).max()
print('evl_ks : ',evl_ks)

from matplotlib import pyplot as plt
plt.plot(fpr_lgb_train,tpr_lgb_train,label = 'train LR')
plt.plot(fpr_lgb,tpr_lgb,label = 'evl LR')
plt.plot([0,1],[0,1],'k--')
plt.xlabel('False positive rate')
plt.ylabel('True positive rate')
plt.title('ROC Curve')
plt.legend(loc = 'best')
plt.show()

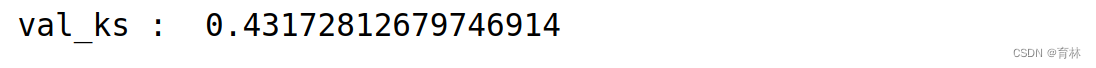

III. Probability of Good / Probability of Bad

#['person_info','finance_info','credit_info','act_info']

# Compute the score (onekey)
def score(xbeta):
    # probability of good / probability of bad
    # Note: as written, this divides log2(1 - xbeta) by xbeta; it is not log2((1 - xbeta) / xbeta)
    score = 1000-500*(math.log2(1-xbeta)/xbeta)
    return score

evl['xbeta'] = model.predict_proba(evl_x)[:,1]
evl['score'] = evl.apply(lambda x : score(x.xbeta) ,axis=1)

fpr_lr,tpr_lr,_ = roc_curve(evl_y,evl['score'])
evl_ks = abs(fpr_lr - tpr_lr).max()
print('val_ks : ',evl_ks)

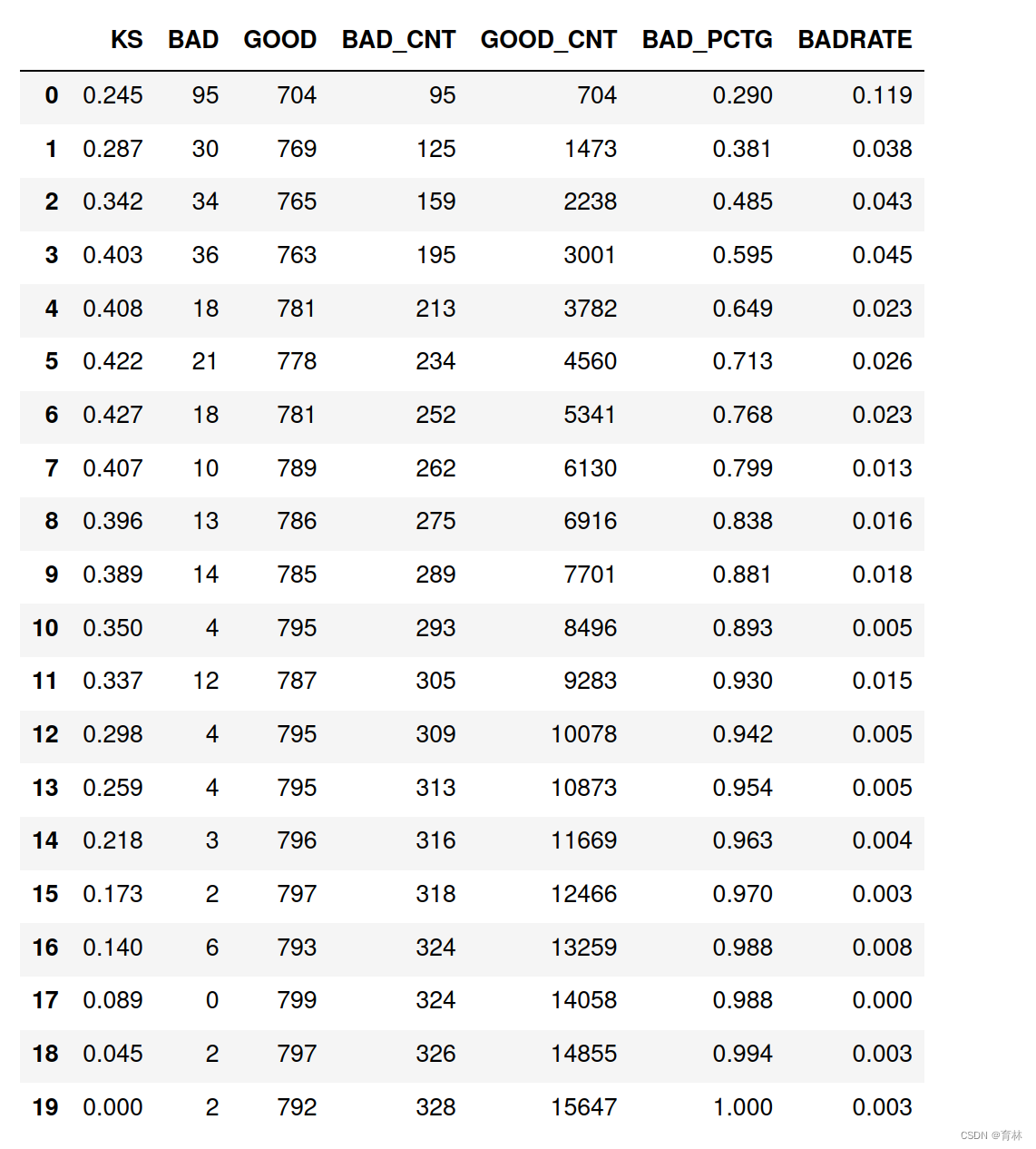
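The comment on `score` refers to the ratio of the probability of good to the probability of bad, while the expression itself divides `log2(1 - xbeta)` by `xbeta`. For comparison, here is a minimal sketch of a score built on the log of the good/bad odds. This is an illustrative alternative, not the author's formula: the function name `log_odds_score` and the parameters `base`, `step` and `eps` are hypothetical, with 1000 and 500 reused from the original expression. It keeps the original direction, so a higher bad probability still gives a higher score.

```python
import math

def log_odds_score(p_bad, base=1000.0, step=500.0, eps=1e-12):
    """Score from the log2 of the good/bad odds (hypothetical alternative).

    p_bad is clipped to [eps, 1 - eps] so that 0 and 1 do not raise errors.
    """
    p = min(max(p_bad, eps), 1.0 - eps)
    odds_good_to_bad = (1.0 - p) / p
    return base - step * math.log2(odds_good_to_bad)
```

Under this form, a bad probability of 0.5 maps to `base`, and each doubling of the bad/good odds adds `step` points. Because both this version and the original are increasing in the predicted bad probability, they rank customers identically, so the KS computed on either score should be the same.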

IV. Generating the Report

row_num, col_num = 0, 0
bins = 20
Y_predict = evl['score']
Y = evl_y
nrows = Y.shape[0]
lis = [(Y_predict[i], Y[i]) for i in range(nrows)]
ks_lis = sorted(lis, key=lambda x: x[0], reverse=True)
bin_num = int(nrows/bins+1)
bad = sum([1 for (p, y) in ks_lis if y > 0.5])
good = sum([1 for (p, y) in ks_lis if y <= 0.5])
bad_cnt, good_cnt = 0, 0
KS = []
BAD = []
GOOD = []
BAD_CNT = []
GOOD_CNT = []
BAD_PCTG = []
BADRATE = []
dct_report = {}

for j in range(bins):
    ds = ks_lis[j*bin_num: min((j+1)*bin_num, nrows)]
    bad1 = sum([1 for (p, y) in ds if y > 0.5])
    good1 = sum([1 for (p, y) in ds if y <= 0.5])
    bad_cnt += bad1
    good_cnt += good1
    bad_pctg = round(bad_cnt/sum(evl_y),3)
    badrate = round(bad1/(bad1+good1),3)
    ks = round(math.fabs((bad_cnt / bad) - (good_cnt / good)),3)
    KS.append(ks)
    BAD.append(bad1)
    GOOD.append(good1)
    BAD_CNT.append(bad_cnt)
    GOOD_CNT.append(good_cnt)
    BAD_PCTG.append(bad_pctg)
    BADRATE.append(badrate)

dct_report['KS'] = KS
dct_report['BAD'] = BAD
dct_report['GOOD'] = GOOD
dct_report['BAD_CNT'] = BAD_CNT
dct_report['GOOD_CNT'] = GOOD_CNT
dct_report['BAD_PCTG'] = BAD_PCTG
dct_report['BADRATE'] = BADRATE

val_repot = pd.DataFrame(dct_report)
val_repot

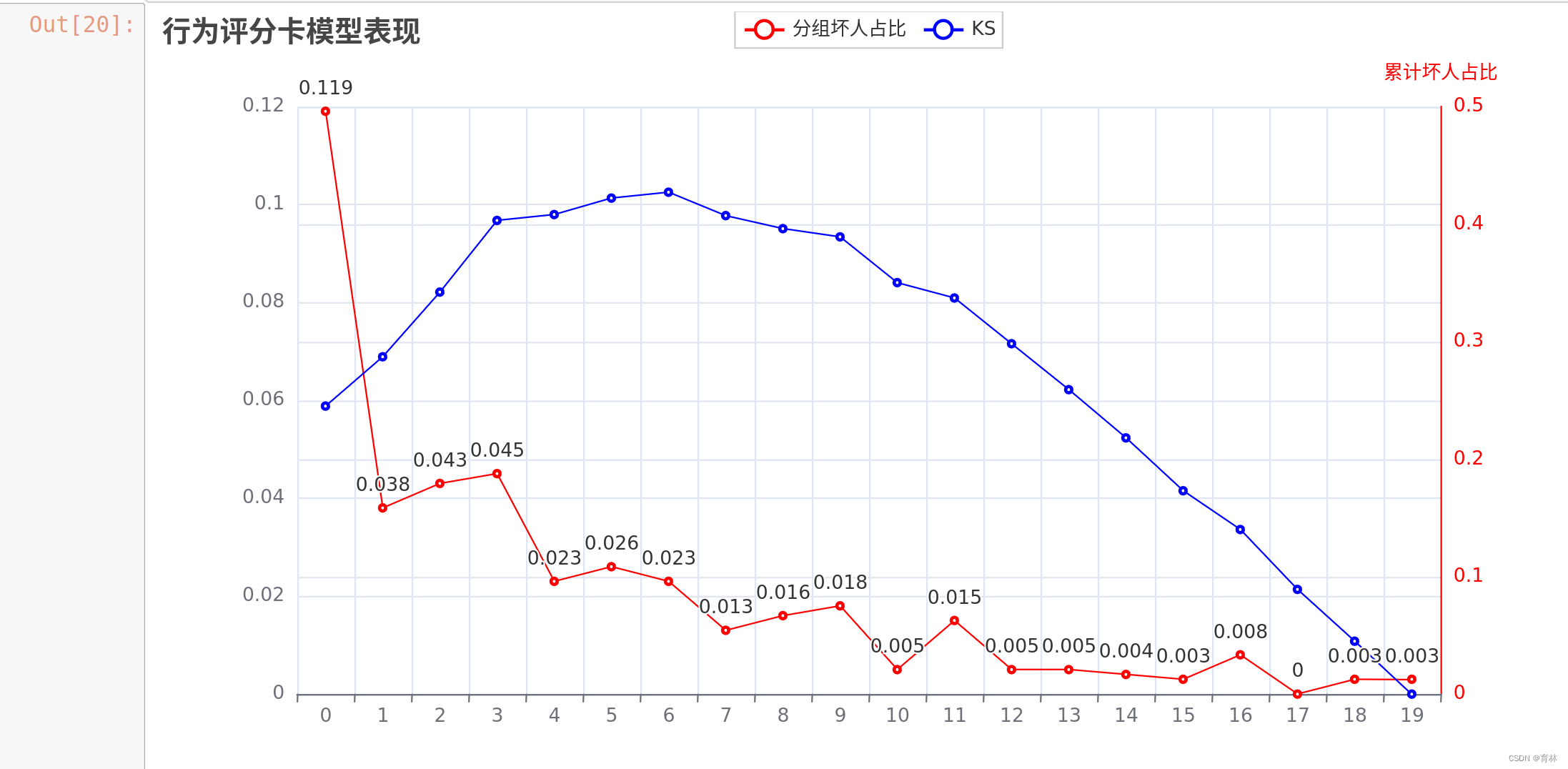
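The report loop above can also be expressed with a pandas `groupby`. The following is a minimal sketch under stated assumptions, not the author's code: the function name `ks_report` is hypothetical, labels above 0.5 count as bad, both classes are assumed to be present, and the bin width `int(n / bins + 1)` is copied from the loop. One deliberate difference is that an empty trailing bin yields `NaN` for `BADRATE` instead of raising a division-by-zero error.

```python
import numpy as np
import pandas as pd

def ks_report(scores, labels, bins=20):
    """Per-bin KS report over scores sorted from highest to lowest."""
    df = pd.DataFrame({
        "score": np.asarray(scores, dtype=float),
        "bad": (np.asarray(labels) > 0.5).astype(int),
    })
    df = df.sort_values("score", ascending=False, kind="mergesort").reset_index(drop=True)

    bin_size = int(len(df) / bins + 1)          # same bin width as the loop version
    df["bin"] = np.arange(len(df)) // bin_size

    rep = df.groupby("bin")["bad"].agg(BAD="sum", total="count")
    rep = rep.reindex(range(bins), fill_value=0)  # keep empty trailing bins as zero rows
    rep["GOOD"] = rep["total"] - rep["BAD"]
    rep["BAD_CNT"] = rep["BAD"].cumsum()
    rep["GOOD_CNT"] = rep["GOOD"].cumsum()

    total_bad = rep["BAD"].sum()
    total_good = rep["GOOD"].sum()
    rep["BAD_PCTG"] = (rep["BAD_CNT"] / total_bad).round(3)
    rep["BADRATE"] = (rep["BAD"] / rep["total"]).round(3)  # NaN where a bin is empty
    rep["KS"] = (rep["BAD_CNT"] / total_bad - rep["GOOD_CNT"] / total_good).abs().round(3)
    return rep[["KS", "BAD", "GOOD", "BAD_CNT", "GOOD_CNT", "BAD_PCTG", "BADRATE"]]
```

Calling `ks_report(evl['score'], evl_y)` would be expected to produce the same columns as `val_repot`, although rows with tied scores may be ordered differently within a bin boundary.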

V. Behavior Scorecard Model Performance

from pyecharts.charts import *

from pyecharts import options as opts

from pylab import *

mpl.rcParams['font.sans-serif'] = ['SimHei']

np.set_printoptions(suppress=True)

pd.set_option('display.unicode.ambiguous_as_wide', True)

pd.set_option('display.unicode.east_asian_width', True)

line = (
    Line()
    .add_xaxis(list(val_repot.index))
    .add_yaxis(
        "Bad rate per bin",
        list(val_repot.BADRATE),
        yaxis_index=0,
        color="red",
    )
    .set_global_opts(
        title_opts=opts.TitleOpts(title="Behavior scorecard model performance"),
    )
    .extend_axis(
        yaxis=opts.AxisOpts(
            name="Cumulative bad share",
            type_="value",
            min_=0,
            max_=0.5,
            position="right",
            axisline_opts=opts.AxisLineOpts(
                linestyle_opts=opts.LineStyleOpts(color="red")
            ),
            axislabel_opts=opts.LabelOpts(formatter="{value}"),
        )
    )
    .add_xaxis(list(val_repot.index))
    .add_yaxis(
        "KS",
        list(val_repot['KS']),
        yaxis_index=1,
        color="blue",
        label_opts=opts.LabelOpts(is_show=False),
    )
)

line.render_notebook()