1. Install the Kerberos server and clients

1.1 Planning

- Server (KDC):
  - bigdata3
- Clients (the Hadoop cluster):
  - bigdata0
  - bigdata1
  - bigdata2
- Host entries for all four machines:

192.168.50.7 bigdata0.example.com bigdata0

192.168.50.8 bigdata1.example.com bigdata1

192.168.50.9 bigdata2.example.com bigdata2

192.168.50.10 bigdata3.example.com bigdata3

1.2 Install the Kerberos server

yum install -y krb5-libs krb5-server krb5-workstation

Configuration:

/etc/krb5.conf

[logging]
 default = FILE:/var/log/krb5libs.log
 kdc = FILE:/var/log/krb5kdc.log
 admin_server = FILE:/var/log/kadmind.log
[libdefaults]
 default_realm = EXAMPLE.COM
 dns_lookup_realm = false
 dns_lookup_kdc = false
 ticket_lifetime = 24h
 renew_lifetime = 7d
 forwardable = true
[realms]
 EXAMPLE.COM = {
  kdc = bigdata3.example.com
  admin_server = bigdata3.example.com
 }
[domain_realm]
 .example.com = EXAMPLE.COM
 example.com = EXAMPLE.COM

/var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]
 kdc_ports = 88
 kdc_tcp_ports = 88
[realms]
 EXAMPLE.COM = {
  #master_key_type = aes256-cts
  acl_file = /var/kerberos/krb5kdc/kadm5.acl
  dict_file = /usr/share/dict/words
  admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
  supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
 }

/var/kerberos/krb5kdc/kadm5.acl

*/admin@EXAMPLE.COM *
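
The ACL line above grants every privilege (the trailing `*`) to any principal whose second component is `admin`. A minimal Python sketch of that component-wise wildcard match follows. It is an illustration only, not kadmind's actual implementation, and the helper names `split_principal` and `acl_matches` are hypothetical.

```python
def split_principal(name):
    """Split 'a/b@REALM' into (['a', 'b'], 'REALM')."""
    primary, _, realm = name.partition("@")
    return primary.split("/"), realm


def acl_matches(pattern, principal):
    """Return True if `principal` matches an ACL `pattern`.

    Assumption for this sketch: a '*' stands for exactly one whole
    component (or the realm), and the component counts must be equal.
    """
    p_comps, p_realm = split_principal(pattern)
    n_comps, n_realm = split_principal(principal)
    if len(p_comps) != len(n_comps):
        return False
    pairs = zip(p_comps + [p_realm], n_comps + [n_realm])
    return all(p == "*" or p == n for p, n in pairs)


if __name__ == "__main__":
    for who in ("root/admin@EXAMPLE.COM",
                "krbtest/admin@EXAMPLE.COM",
                "nn/bigdata0.example.com@EXAMPLE.COM"):
        print(who, acl_matches("*/admin@EXAMPLE.COM", who))
```

Under these assumptions, principals such as root/admin and krbtest/admin match the rule, while a service principal such as nn/bigdata0.example.com does not.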

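Initialize the Kerberos database:

kdb5_util create -s -r EXAMPLE.COM

Here EXAMPLE.COM is the realm; change it if yours differs.

The command then prompts twice for the database master password; this guide enters 123456.

This creates the database master principal K/M@EXAMPLE.COM with the password 123456.

Create the administrators of the EXAMPLE.COM realm

Run kadmin.local to enter the Kerberos admin shell:

# Enter the following to add a principal
addprinc root/admin@EXAMPLE.COM
# When prompted for a password, enter root (or choose your own)
# This principal becomes an administrator of EXAMPLE.COM: it matches the */admin@EXAMPLE.COM rule and therefore holds every privilege

# Create a second administrator for testing
# It also matches */admin@EXAMPLE.COM; set its password to krbtest
addprinc krbtest/admin@EXAMPLE.COM
# List all existing principals
listprincs

Restart the Kerberos server components and enable them at boot: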

service krb5kdc restart

service kadmin restart

chkconfig krb5kdc on

chkconfig kadmin on

1.3 Install the Kerberos clients

yum -y install krb5-libs krb5-workstation

Copy /etc/krb5.conf from the server to the same path on every client.

Test an admin login:

- Run kinit krbtest/admin@EXAMPLE.COM and enter the password. On success the command prints nothing.
- Then run klist:

Ticket cache: FILE:/tmp/krb5cc_0
Default principal: krbtest/admin@EXAMPLE.COM

Valid starting     Expires            Service principal
09/25/19 11:41:52  09/26/19 11:41:52  krbtgt/EXAMPLE.COM@EXAMPLE.COM
        renew until 09/25/19 11:41:52

- The client is now configured; use kinit to switch to whichever principal you need.

2. Configure the Kerberos principals

- On every node, run mkdir /etc/security/keytabs
- Create a Kerberos principal for each service that runs on the node

On bigdata0:

# Run kadmin and enter the password to open the Kerberos admin shell
kadmin
# Create the principals for the NameNode, the SecondaryNameNode, and the HTTPS service
addprinc -randkey nn/bigdata0.example.com@EXAMPLE.COM
addprinc -randkey sn/bigdata0.example.com@EXAMPLE.COM
addprinc -randkey HTTP/bigdata0.example.com@EXAMPLE.COM
# Create the principals for the YARN ResourceManager and the JobHistory Server
addprinc -randkey rm/bigdata0.example.com@EXAMPLE.COM
addprinc -randkey jhs/bigdata0.example.com@EXAMPLE.COM
# Export a keytab for each principal so that no password is requested at startup or during operation
ktadd -k /etc/security/keytabs/nn.service.keytab nn/bigdata0.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/sn.service.keytab sn/bigdata0.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/spnego.service.keytab HTTP/bigdata0.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/rm.service.keytab rm/bigdata0.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/jhs.service.keytab jhs/bigdata0.example.com@EXAMPLE.COM

This yields five keytab files.

For example:

[root@bigdata0 bigdata]# mkdir /etc/security/keytabs
[root@bigdata0 bigdata]# kadmin
Authenticating as principal krbtest/admin@EXAMPLE.COM with password.
Password for krbtest/admin@EXAMPLE.COM:
kadmin: addprinc -randkey nn/bigdata0.example.com@EXAMPLE.COM
WARNING: no policy specified for nn/bigdata0.example.com@EXAMPLE.COM; defaulting to no policy
Principal "nn/bigdata0.example.com@EXAMPLE.COM" created.
kadmin: addprinc -randkey sn/bigdata0.example.com@EXAMPLE.COM
WARNING: no policy specified for sn/bigdata0.example.com@EXAMPLE.COM; defaulting to no policy
Principal "sn/bigdata0.example.com@EXAMPLE.COM" created.
kadmin: addprinc -randkey HTTP/bigdata0.example.com@EXAMPLE.COM
WARNING: no policy specified for HTTP/bigdata0.example.com@EXAMPLE.COM; defaulting to no policy
Principal "HTTP/bigdata0.example.com@EXAMPLE.COM" created.
kadmin: ktadd -k /etc/security/keytabs/nn.service.keytab nn/bigdata0.example.com@EXAMPLE.COM
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-hmac-sha1 added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
Entry for principal nn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-cbc-md5 added to keytab WRFILE:/etc/security/keytabs/nn.service.keytab.
kadmin: ktadd -k /etc/security/keytabs/sn.service.keytab sn/bigdata0.example.com@EXAMPLE.COM
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-hmac-sha1 added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
Entry for principal sn/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-cbc-md5 added to keytab WRFILE:/etc/security/keytabs/sn.service.keytab.
kadmin: ktadd -k /etc/security/keytabs/spnego.service.keytab HTTP/bigdata0.example.com@EXAMPLE.COM
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-hmac-sha1 added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
Entry for principal HTTP/bigdata0.example.com@EXAMPLE.COM with kvno 2, encryption type des-cbc-md5 added to keytab WRFILE:/etc/security/keytabs/spnego.service.keytab.
kadmin: exit
[root@bigdata0 bigdata]# ll /etc/security/keytabs/
total 12
-rw-------. 1 root root 454 Oct  5 16:31 nn.service.keytab
-rw-------. 1 root root 454 Oct  5 16:31 sn.service.keytab
-rw-------. 1 root root 466 Oct  5 16:32 spnego.service.keytab
[root@bigdata0 bigdata]#

In the same way, create the principals for the DataNode, NodeManager, and HTTP services that run on bigdata1 and bigdata2.

On bigdata1:

# Run kadmin and enter the password to open the Kerberos admin shell
kadmin
# Create the principals
addprinc -randkey dn/bigdata1.example.com@EXAMPLE.COM
addprinc -randkey HTTP/bigdata1.example.com@EXAMPLE.COM
addprinc -randkey nm/bigdata1.example.com@EXAMPLE.COM
# Export the keytabs for the dn, HTTP, and nm principals so that no password is requested at startup or during operation
ktadd -k /etc/security/keytabs/dn.service.keytab dn/bigdata1.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/spnego.service.keytab HTTP/bigdata1.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/nm.service.keytab nm/bigdata1.example.com@EXAMPLE.COM

On bigdata2:

# Run kadmin and enter the password to open the Kerberos admin shell
kadmin
# Create the principals
addprinc -randkey dn/bigdata2.example.com@EXAMPLE.COM
addprinc -randkey HTTP/bigdata2.example.com@EXAMPLE.COM
addprinc -randkey nm/bigdata2.example.com@EXAMPLE.COM
# Export the keytabs for the dn, HTTP, and nm principals so that no password is requested at startup or during operation
ktadd -k /etc/security/keytabs/dn.service.keytab dn/bigdata2.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/spnego.service.keytab HTTP/bigdata2.example.com@EXAMPLE.COM
ktadd -k /etc/security/keytabs/nm.service.keytab nm/bigdata2.example.com@EXAMPLE.COM
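
Because the per-host commands follow one pattern, they can be generated rather than typed. The Python sketch below prints the addprinc and ktadd lines for each host from a role table that mirrors this guide's layout. The names `ROLES`, `keytab_name`, and `kadmin_commands` are hypothetical helpers introduced for illustration; the script only prints text and does not talk to the KDC.

```python
REALM = "EXAMPLE.COM"
KEYTAB_DIR = "/etc/security/keytabs"

# Service principals per host, as laid out in this guide.
ROLES = {
    "bigdata0.example.com": ["nn", "sn", "HTTP", "rm", "jhs"],
    "bigdata1.example.com": ["dn", "HTTP", "nm"],
    "bigdata2.example.com": ["dn", "HTTP", "nm"],
}


def keytab_name(service):
    """Keytab path for a service; the HTTP principal goes into spnego.service.keytab."""
    prefix = "spnego" if service == "HTTP" else service
    return f"{KEYTAB_DIR}/{prefix}.service.keytab"


def kadmin_commands(host):
    """Return the addprinc lines followed by the ktadd lines for one host."""
    principals = [f"{svc}/{host}@{REALM}" for svc in ROLES[host]]
    cmds = [f"addprinc -randkey {p}" for p in principals]
    cmds += [f"ktadd -k {keytab_name(svc)} {p}"
             for svc, p in zip(ROLES[host], principals)]
    return cmds


if __name__ == "__main__":
    for host in ROLES:
        print(f"# paste into kadmin on {host}")
        print("\n".join(kadmin_commands(host)))
```

The output is meant to be pasted into a kadmin session on the matching host, since ktadd writes the keytab on the machine where kadmin runs.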

3. Hadoop configuration files

3.1 Permissions

# Save the following as a .sh file and run it as root: sh xxx.sh

# Adjust the paths below to match your own directory layout

HADOOP_HOME=/bigdata/hadoop-3.3.2

DFS_NAMENODE_NAME_DIR=/data/nn

DFS_DATANODE_DATA_DIR=/data/dn

NODEMANAGER_LOCAL_DIR=/data/nm-local

NODEMANAGER_LOG_DIR=/data/nm-log

MR_HISTORY=/data/mr-history

if [ -z "$HADOOP_HOME" ]; then
  echo "Please set the HADOOP_HOME path"
  exit 1
fi

chgrp -R hadoop $HADOOP_HOME

chown -R hdfs:hadoop $HADOOP_HOME

chown root:hadoop $HADOOP_HOME

chown hdfs:hadoop $HADOOP_HOME/sbin/distribute-exclude.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/hadoop-daemon.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/hadoop-daemons.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/hdfs-config.cmd

chown hdfs:hadoop $HADOOP_HOME/sbin/hdfs-config.sh

chown mapred:hadoop $HADOOP_HOME/sbin/mr-jobhistory-daemon.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/refresh-namenodes.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/slaves.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/start-all.cmd

chown hdfs:hadoop $HADOOP_HOME/sbin/start-all.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/start-balancer.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/start-dfs.cmd

chown hdfs:hadoop $HADOOP_HOME/sbin/start-dfs.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/start-secure-dns.sh

chown yarn:hadoop $HADOOP_HOME/sbin/start-yarn.cmd

chown yarn:hadoop $HADOOP_HOME/sbin/start-yarn.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-all.cmd

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-all.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-balancer.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-dfs.cmd

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-dfs.sh

chown hdfs:hadoop $HADOOP_HOME/sbin/stop-secure-dns.sh

chown yarn:hadoop $HADOOP_HOME/sbin/stop-yarn.cmd

chown yarn:hadoop $HADOOP_HOME/sbin/stop-yarn.sh

chown yarn:hadoop $HADOOP_HOME/sbin/yarn-daemon.sh

chown yarn:hadoop $HADOOP_HOME/sbin/yarn-daemons.sh

chown mapred:hadoop $HADOOP_HOME/bin/mapred*

chown yarn:hadoop $HADOOP_HOME/bin/yarn*

chown hdfs:hadoop $HADOOP_HOME/bin/hdfs*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/capacity-scheduler.xml

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/configuration.xsl

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/core-site.xml

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/hadoop-*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/hdfs-*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/httpfs-*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/kms-*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/log4j.properties

chown mapred:hadoop $HADOOP_HOME/etc/hadoop/mapred-*

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/slaves

chown hdfs:hadoop $HADOOP_HOME/etc/hadoop/ssl-*

chown yarn:hadoop $HADOOP_HOME/etc/hadoop/yarn-*

chmod 755 -R $HADOOP_HOME/etc/hadoop/*

chown root:hadoop $HADOOP_HOME/etc

chown root:hadoop $HADOOP_HOME/etc/hadoop

chown root:hadoop $HADOOP_HOME/etc/hadoop/container-executor.cfg

chown root:hadoop $HADOOP_HOME/bin/container-executor

chown root:hadoop $HADOOP_HOME/bin/test-container-executor

chmod 6050 $HADOOP_HOME/bin/container-executor

chmod 6050 $HADOOP_HOME/bin/test-container-executor

mkdir $HADOOP_HOME/logs

mkdir $HADOOP_HOME/logs/hdfs

mkdir $HADOOP_HOME/logs/yarn

chown root:hadoop $HADOOP_HOME/logs

chmod 775 $HADOOP_HOME/logs

chown hdfs:hadoop $HADOOP_HOME/logs/hdfs

chmod 755 -R $HADOOP_HOME/logs/hdfs

chown yarn:hadoop $HADOOP_HOME/logs/yarn

chmod 755 -R $HADOOP_HOME/logs/yarn

chown -R hdfs:hadoop $DFS_DATANODE_DATA_DIR

chown -R hdfs:hadoop $DFS_NAMENODE_NAME_DIR

chmod 700 $DFS_DATANODE_DATA_DIR

chmod 700 $DFS_NAMENODE_NAME_DIR

chown -R yarn:hadoop $NODEMANAGER_LOCAL_DIR

chown -R yarn:hadoop $NODEMANAGER_LOG_DIR

chmod 770 $NODEMANAGER_LOCAL_DIR

chmod 770 $NODEMANAGER_LOG_DIR

chown -R mapred:hadoop $MR_HISTORY

chmod 770 $MR_HISTORY
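
The script sets mode 6050 on container-executor, which `ls -l` shows as `---Sr-s---`. A minimal Python sketch for checking a mode value against that target follows. The function names are hypothetical, and the sketch inspects permission bits only; ownership (root:hadoop in the script above) would need a separate check.

```python
import stat

REQUIRED_MODE = 0o6050  # setuid + setgid, group read/execute, nothing for others


def mode_string(mode):
    """Render the permission bits the way `ls -l` does for a regular file."""
    return stat.filemode(stat.S_IFREG | stat.S_IMODE(mode))


def mode_problems(mode):
    """List the ways `mode` differs from the 6050 target (empty list = OK)."""
    problems = []
    perms = stat.S_IMODE(mode)
    if not perms & stat.S_ISUID:
        problems.append("setuid bit missing")
    if not perms & stat.S_ISGID:
        problems.append("setgid bit missing")
    if perms & 0o007:
        problems.append("accessible by others")
    if perms != REQUIRED_MODE:
        problems.append(f"mode is {perms:04o}, expected {REQUIRED_MODE:04o}")
    return problems
```

To check a real file, pass `os.stat(path).st_mode` (after `import os`) to `mode_problems`; an empty list means the bits match.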

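3.2 Hadoop configuration

The environment-variable settings are optional: anything already exported in /etc/profile can be omitted from the Hadoop configuration files.

hadoop-env.sh

# Optional; omit if already set in /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_221

export HADOOP_HOME=/bigdata/hadoop-3.3.2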

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"

yarn-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_221

export HADOOP_HOME=/bigdata/hadoop-3.3.2

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn

mapred-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_221

core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://bigdata0.example.com:8020</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <!-- Kerberos-related settings -->
  <property>
    <name>hadoop.security.authorization</name>
    <value>true</value>
    <description>Enable Hadoop service-level authorization</description>
  </property>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
    <description>Use Kerberos as the Hadoop authentication mechanism</description>
  </property>
  <property>
    <name>hadoop.security.auth_to_local</name>
    <value>
      RULE:[2:$1@$0](nn@.*EXAMPLE.COM)s/.*/hdfs/
      RULE:[2:$1@$0](sn@.*EXAMPLE.COM)s/.*/hdfs/
      RULE:[2:$1@$0](dn@.*EXAMPLE.COM)s/.*/hdfs/
      RULE:[2:$1@$0](nm@.*EXAMPLE.COM)s/.*/yarn/
      RULE:[2:$1@$0](rm@.*EXAMPLE.COM)s/.*/yarn/
      RULE:[2:$1@$0](tl@.*EXAMPLE.COM)s/.*/yarn/
      RULE:[2:$1@$0](jhs@.*EXAMPLE.COM)s/.*/mapred/
      RULE:[2:$1@$0](HTTP@.*EXAMPLE.COM)s/.*/hdfs/
      DEFAULT
    </value>
    <description>Mapping rules that translate Kerberos principals to local accounts. For example, the first rule maps nn/xx@EXAMPLE.COM to the hdfs account</description>
  </property>
  <!-- HIVE KERBEROS -->
  <property>
    <name>hadoop.proxyuser.hive.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hdfs.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.HTTP.groups</name>
    <value>*</value>
  </property>
</configuration>
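
To make the hadoop.security.auth_to_local rules above concrete, here is a simplified Python model of how one principal is mapped. It is a sketch of the rule semantics as I understand them, not Hadoop's implementation: each RULE applies only to principals with the stated number of components, formats them ($0 is the realm, $1 the first component), requires the parenthesised regex to match the whole formatted string, and then applies the sed-style substitution once. `to_local` and the constants are hypothetical names, and only the rule shape used in this guide is supported.

```python
import re

DEFAULT_REALM = "EXAMPLE.COM"

RULES_TEXT = """
RULE:[2:$1@$0](nn@.*EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](sn@.*EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](dn@.*EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](nm@.*EXAMPLE.COM)s/.*/yarn/
RULE:[2:$1@$0](rm@.*EXAMPLE.COM)s/.*/yarn/
RULE:[2:$1@$0](tl@.*EXAMPLE.COM)s/.*/yarn/
RULE:[2:$1@$0](jhs@.*EXAMPLE.COM)s/.*/mapred/
RULE:[2:$1@$0](HTTP@.*EXAMPLE.COM)s/.*/hdfs/
DEFAULT
"""

# RULE:[<components>:<format>](<match regex>)s/<pattern>/<replacement>/
RULE_RE = re.compile(r"RULE:\[(\d+):([^\]]*)\]\(([^)]*)\)s/([^/]*)/([^/]*)/")


def to_local(principal, rules_text=RULES_TEXT, default_realm=DEFAULT_REALM):
    """Return the local account for `principal`, or None if no rule applies."""
    primary, _, realm = principal.partition("@")
    params = [realm] + primary.split("/")  # $0 = realm, $1.. = components
    for rule in rules_text.split():
        if rule == "DEFAULT":
            # Assumed DEFAULT behaviour: first component, default realm only.
            if realm == default_realm:
                return params[1]
            continue
        parsed = RULE_RE.fullmatch(rule)
        if parsed is None:
            raise ValueError(f"unsupported rule: {rule}")
        count, fmt, match, pattern, replacement = parsed.groups()
        if len(params) - 1 != int(count):
            continue  # rule is for a different number of components
        base = re.sub(r"\$(\d)", lambda g: params[int(g.group(1))], fmt)
        if re.fullmatch(match, base):
            # Replace the first match only, like sed without the g flag.
            return re.sub(pattern, lambda _m: replacement, base, count=1)
    return None


if __name__ == "__main__":
    for p in ("nn/bigdata0.example.com@EXAMPLE.COM",
              "jhs/bigdata0.example.com@EXAMPLE.COM",
              "krbtest/admin@EXAMPLE.COM"):
        print(p, "->", to_local(p))
```

In this model the service principals map to hdfs, yarn, or mapred, and anything else in the default realm falls through to DEFAULT and keeps its first component.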

hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/data/nn</value>
    <description>Path on the local filesystem where the NameNode stores the namespace and transactions logs persistently.</description>
  </property>
  <property>
    <name>dfs.namenode.hosts</name>
    <value>bigdata1.example.com,bigdata2.example.com</value>
    <description>List of permitted DataNodes.</description>
  </property>
  <property>
    <name>dfs.blocksize</name>
    <value>268435456</value>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>100</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/data/dn</value>
  </property>
  <property>
    <name>dfs.permissions.supergroup</name>
    <value>hdfs</value>
  </property>
  <property>
    <name>dfs.http.policy</name>
    <value>HTTPS_ONLY</value>
    <description>Serve every web UI over HTTPS only; the details are configured in ssl-server.xml and ssl-client.xml</description>
  </property>
  <property>
    <name>dfs.data.transfer.protection</name>
    <value>integrity</value>
  </property>
  <property>
    <name>dfs.https.port</name>
    <value>50470</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir.perm</name>
    <value>700</value>
  </property>
  <!-- Kerberos-related settings -->
  <!-- Must be true once Kerberos authentication is enabled; otherwise the DataNode fails to start -->
  <property>
    <name>dfs.block.access.token.enable</name>
    <value>true</value>
  </property>
  <!-- NameNode security config -->
  <property>
    <name>dfs.namenode.kerberos.principal</name>
    <value>nn/_HOST@EXAMPLE.COM</value>
    <description>The NameNode's Kerberos principal is nn/hostname@EXAMPLE.COM; _HOST is replaced with the host name automatically</description>
  </property>
  <property>
    <name>dfs.namenode.keytab.file</name>
    <!-- path to the HDFS keytab -->
    <value>/etc/security/keytabs/nn.service.keytab</value>
    <description>Keytab the NameNode uses to log in without a password</description>
  </property>
  <property>
    <name>dfs.namenode.kerberos.internal.spnego.principal</name>
    <value>HTTP/_HOST@EXAMPLE.COM</value>
    <description>Principal used for HTTPS endpoints such as the NameNode web UI</description>
  </property>
  <!-- Secondary NameNode security config -->
  <property>
    <name>dfs.secondary.namenode.kerberos.principal</name>
    <value>sn/_HOST@EXAMPLE.COM</value>
    <description>Principal used by the SecondaryNameNode</description>
  </property>
  <property>
    <name>dfs.secondary.namenode.keytab.file</name>
    <!-- path to the HDFS keytab -->
    <value>/etc/security/keytabs/sn.service.keytab</value>
    <description>Keytab for the sn principal</description>
  </property>
  <property>
    <name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>
    <value>HTTP/_HOST@EXAMPLE.COM</value>
    <description>Principal the SecondaryNameNode uses for its HTTP pages</description>
  </property>
  <!-- DataNode security config -->
  <property>
    <name>dfs.datanode.kerberos.principal</name>
    <value>dn/_HOST@EXAMPLE.COM</value>
    <description>Principal used by the DataNode</description>
  </property>
  <property>
    <name>dfs.datanode.keytab.file</name>
    <!-- path to the HDFS keytab -->
    <value>/etc/security/keytabs/dn.service.keytab</value>
    <description>Path to the keytab used by the DataNode</description>
  </property>
  <property>
    <name>dfs.web.authentication.kerberos.principal</name>
    <value>HTTP/_HOST@EXAMPLE.COM</value>
    <description>Principal used by WebHDFS</description>
  </property>
  <property>
    <name>dfs.web.authentication.kerberos.keytab</name>
    <value>/etc/security/keytabs/spnego.service.keytab</value>
    <description>The corresponding keytab file</description>
  </property>
</configuration>
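
The principals above all use the _HOST placeholder, which is why one hdfs-site.xml can be shared by every node. The Python sketch below shows the substitution for the name/_HOST@REALM form. Lower-casing the host name and falling back to `socket.getfqdn()` are assumptions of this sketch, and `resolve_principal` is a hypothetical helper, not a Hadoop API.

```python
import re
import socket


def resolve_principal(pattern, hostname=None):
    """Replace the _HOST placeholder in 'name/_HOST@REALM' with a host name.

    Patterns that do not have exactly that shape are returned unchanged.
    """
    parts = re.split(r"[/@]", pattern)
    if len(parts) != 3 or parts[1] != "_HOST":
        return pattern  # nothing to substitute
    fqdn = hostname or socket.getfqdn()
    return f"{parts[0]}/{fqdn.lower()}@{parts[2]}"
```

For example, `resolve_principal("nn/_HOST@EXAMPLE.COM", "bigdata0.example.com")` yields the nn principal created for bigdata0 earlier. The resolved name has to match a principal stored in the node's keytab, which is why the host names in /etc/hosts and the principal names must agree.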

yarn-site.xml

<?xml version="1.0"?>

<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<configuration>
  <property>
    <name>yarn.log.server.url</name>
    <value>https://bigdata0.example.com:19890/jobhistory/logs</value>
  </property>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.acl.enable</name>
    <value>false</value>
    <description>Enable ACLs? Defaults to false.</description>
  </property>
  <property>
    <name>yarn.admin.acl</name>
    <value>*</value>
    <description>ACL to set admins on the cluster. ACLs are of the form comma-separated-users space comma-separated-groups. Defaults to special value of * which means anyone. Special value of just space means no one has access.</description>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
    <description>Configuration to enable or disable log aggregation</description>
  </property>
  <property>
    <name>yarn.nodemanager.remote-app-log-dir</name>
    <value>/tmp/logs</value>
    <description>HDFS directory to which application logs are moved when log aggregation is enabled</description>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>bigdata0.example.com</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  </property>
  <property>
    <name>yarn.nodemanager.local-dirs</name>
    <value>/data/nm-local</value>
    <description>Comma-separated list of paths on the local filesystem where intermediate data is written.</description>
  </property>
  <property>
    <name>yarn.nodemanager.log-dirs</name>
    <value>/data/nm-log</value>
    <description>Comma-separated list of paths on the local filesystem where logs are written.</description>
  </property>
  <property>
    <name>yarn.nodemanager.log.retain-seconds</name>
    <value>10800</value>
    <description>Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled.</description>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
    <description>Shuffle service that needs to be set for Map Reduce applications.</description>
  </property>
  <!-- To enable SSL -->
  <property>
    <name>yarn.http.policy</name>
    <value>HTTPS_ONLY</value>
  </property>
  <property>
    <name>yarn.nodemanager.linux-container-executor.group</name>
    <value>hadoop</value>
  </property>
  <!-- Enabling the settings below may require building container-executor on the local machine -->
  <!-- The following commands check the environment: -->
  <!-- hadoop checknative -a -->
  <!-- ldd $HADOOP_HOME/bin/container-executor -->
  <!--
  <property>
    <name>yarn.nodemanager.container-executor.class</name>
    <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
  </property>
  <property>
    <name>yarn.nodemanager.linux-container-executor.path</name>
    <value>/bigdata/hadoop-3.3.2/bin/container-executor</value>
  </property>
  -->
  <!-- Kerberos-related settings -->
  <!-- ResourceManager security configs -->
  <property>
    <name>yarn.resourcemanager.principal</name>
    <value>rm/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>yarn.resourcemanager.keytab</name>
    <value>/etc/security/keytabs/rm.service.keytab</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.delegation-token-auth-filter.enabled</name>
    <value>true</value>
  </property>
  <!-- NodeManager security configs -->
  <property>
    <name>yarn.nodemanager.principal</name>
    <value>nm/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>yarn.nodemanager.keytab</name>
    <value>/etc/security/keytabs/nm.service.keytab</value>
  </property>
  <!-- TimeLine security configs -->
  <property>
    <name>yarn.timeline-service.principal</name>
    <value>tl/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>yarn.timeline-service.keytab</name>
    <value>/etc/security/keytabs/tl.service.keytab</value>
  </property>
  <property>
    <name>yarn.timeline-service.http-authentication.type</name>
    <value>kerberos</value>
  </property>
  <property>
    <name>yarn.timeline-service.http-authentication.kerberos.principal</name>
    <value>HTTP/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>yarn.timeline-service.http-authentication.kerberos.keytab</name>
    <value>/etc/security/keytabs/spnego.service.keytab</value>
  </property>
</configuration>

mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>bigdata0.example.com:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>bigdata0.example.com:19888</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>/mr-data/mr-history/tmp</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>/mr-data/mr-history/done</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.http.policy</name>
    <value>HTTPS_ONLY</value>
  </property>
  <!-- Kerberos-related settings -->
  <property>
    <name>mapreduce.jobhistory.keytab</name>
    <value>/etc/security/keytabs/jhs.service.keytab</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.principal</name>
    <value>jhs/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.spnego-principal</name>
    <value>HTTP/_HOST@EXAMPLE.COM</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.spnego-keytab-file</name>
    <value>/etc/security/keytabs/spnego.service.keytab</value>
  </property>
</configuration>

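Create the HTTPS certificates

On bigdata0, generate the CA key and certificate inside /etc/security/cdh.https, then copy that directory to the other two nodes: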

openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'

scp -r /etc/security/cdh.https bigdata1:/etc/security/

scp -r /etc/security/cdh.https bigdata2:/etc/security/

[root@bigdata0 cdh.https]# openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'

Generating a 2048 bit RSA private key

..............+++

..........................+++

writing new private key to 'bd_ca_key'

Enter PEM pass phrase:

Verifying - Enter PEM pass phrase:

-----

[root@bigdata0 cdh.https]#

[root@bigdata0 cdh.https]# ll

total 8

-rw-r--r--. 1 root root 1298 Oct  5 17:14 bd_ca_cert

-rw-r--r--. 1 root root 1834 Oct  5 17:14 bd_ca_key

[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata1:/etc/security/

bd_ca_key 100% 1834 913.9KB/s 00:00

bd_ca_cert 100% 1298 1.3MB/s 00:00

[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata2:/etc/security/

bd_ca_key 100% 1834 1.7MB/s 00:00

bd_ca_cert 100% 1298 1.3MB/s 00:00

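[root@bigdata0 cdh.https]#

Run the following on each of the three nodes in turn:

cd /etc/security/cdh.https
# Enter 123456 wherever a password is requested (chosen for convenience; use your own if you have password requirements)
# 1. Enter and confirm the password 123456; on success this creates the keystore file
keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN"
# 2. Enter and confirm the password 123456, then answer yes when asked whether to trust the certificate; on success this creates the truststore file
keytool -keystore truststore -alias CARoot -import -file bd_ca_cert
# 3. Enter and confirm the password 123456; on success this creates the cert file
keytool -certreq -alias localhost -keystore keystore -file cert
# 4. On success this creates the cert_signed file
openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:123456
# 5. Enter and confirm the password 123456, then answer yes to trust the certificate; on success this updates the keystore file
keytool -keystore keystore -alias CARoot -import -file bd_ca_cert
# 6. Enter and confirm the password 123456
keytool -keystore keystore -alias localhost -import -file cert_signed

The directory then contains: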

-rw-r--r-- 1 root root 1294 Sep 26 11:31 bd_ca_cert

-rw-r--r-- 1 root root 17 Sep 26 11:36 bd_ca_cert.srl

-rw-r--r-- 1 root root 1834 Sep 26 11:31 bd_ca_key

-rw-r--r-- 1 root root 1081 Sep 26 11:36 cert

-rw-r--r-- 1 root root 1176 Sep 26 11:36 cert_signed

-rw-r--r-- 1 root root 4055 Sep 26 11:37 keystore

-rw-r--r-- 1 root root 978 Sep 26 11:35 truststore

Configure ssl-server.xml and ssl-client.xml

ssl-server.xml

<configuration>
  <property>
    <name>ssl.server.truststore.location</name>
    <value>/etc/security/cdh.https/truststore</value>
    <description>Truststore to be used by NN and DN. Must be specified.</description>
  </property>
  <property>
    <name>ssl.server.truststore.password</name>
    <value>123456</value>
    <description>Optional. Default value is "".</description>
  </property>
  <property>
    <name>ssl.server.truststore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks".</description>
  </property>
  <property>
    <name>ssl.server.truststore.reload.interval</name>
    <value>10000</value>
    <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
  </property>
  <property>
    <name>ssl.server.keystore.location</name>
    <value>/etc/security/cdh.https/keystore</value>
    <description>Keystore to be used by NN and DN. Must be specified.</description>
  </property>
  <property>
    <name>ssl.server.keystore.password</name>
    <value>123456</value>
    <description>Must be specified.</description>
  </property>
  <property>
    <name>ssl.server.keystore.keypassword</name>
    <value>123456</value>
    <description>Must be specified.</description>
  </property>
  <property>
    <name>ssl.server.keystore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks".</description>
  </property>
  <property>
    <name>ssl.server.exclude.cipher.list</name>
    <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,SSL_RSA_WITH_RC4_128_MD5</value>
    <description>Optional. The weak security cipher suites that you want excluded from SSL communication.</description>
  </property>
</configuration>

ssl-client.xml

<configuration>
  <property>
    <name>ssl.client.truststore.location</name>
    <value>/etc/security/cdh.https/truststore</value>
    <description>Truststore to be used by clients like distcp. Must be specified.</description>
  </property>
  <property>
    <name>ssl.client.truststore.password</name>
    <value>123456</value>
    <description>Optional. Default value is "".</description>
  </property>
  <property>
    <name>ssl.client.truststore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks".</description>
  </property>
  <property>
    <name>ssl.client.truststore.reload.interval</name>
    <value>10000</value>
    <description>Truststore reload check interval, in milliseconds. Default value is 10000 (10 seconds).</description>
  </property>
  <property>
    <name>ssl.client.keystore.location</name>
    <value>/etc/security/cdh.https/keystore</value>
    <description>Keystore to be used by clients like distcp. Must be specified.</description>
  </property>
  <property>
    <name>ssl.client.keystore.password</name>
    <value>123456</value>
    <description>Optional. Default value is "".</description>
  </property>
  <property>
    <name>ssl.client.keystore.keypassword</name>
    <value>123456</value>
    <description>Optional. Default value is "".</description>
  </property>
  <property>
    <name>ssl.client.keystore.type</name>
    <value>jks</value>
    <description>Optional. The keystore file format, default value is "jks".</description>
  </property>
</configuration>

Distribute the configuration

scp $HADOOP_HOME/etc/hadoop/* bigdata1:/bigdata/hadoop-3.3.2/etc/hadoop/

scp $HADOOP_HOME/etc/hadoop/* bigdata2:/bigdata/hadoop-3.3.2/etc/hadoop/

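4. Start the services

Note: once the NameNode has been formatted, sbin/start-all.sh can be run directly.

5. Common errors

5.1 Kerberos errors

Cannot reach the realm

Cannot contact any KDC for realm

This usually means the KDC cannot be reached over the network.

1. The firewall may still be running: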

[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM

kinit: Cannot contact any KDC for realm 'EXAMPLE.COM' while getting initial credentials

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]# systemctl stop firewalld.service

[root@bigdata0 bigdata]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM

Password for krbtest/admin@EXAMPLE.COM:

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]#

[root@bigdata0 bigdata]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: krbtest/admin@EXAMPLE.COM

Valid starting       Expires              Service principal

2023-10-05T16:19:58  2023-10-06T16:19:58  krbtgt/EXAMPLE.COM@EXAMPLE.COM

[root@bigdata0 bigdata]#

2. The KDC may be missing from the hosts file:

Edit /etc/hosts and add a mapping from the KDC's IP address to its host name:

# {kdc ip} {kdc}

192.168.50.10 bigdata3.example.com

https://serverfault.com/questions/612869/kinit-cannot-contact-any-kdc-for-realm-ubuntu-while-getting-initial-credentia
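
Both causes above come down to whether the client can resolve and reach the KDC. A minimal Python probe is sketched below, assuming the KDC listens on TCP port 88 as set by kdc_tcp_ports in kdc.conf. The function name `kdc_reachable` is hypothetical, and a successful TCP connection only shows that name resolution and the firewall allow traffic; it does not prove that Kerberos itself works.

```python
import socket


def kdc_reachable(host, port=88, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    DNS failures, refused connections, and timeouts all count as
    unreachable, since each of them would also stop kinit.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `kdc_reachable("bigdata3.example.com")` on a client before running kinit separates network problems from credential problems.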

kinit loads the wrong library

kinit: relocation error: kinit: symbol krb5_get_init_creds_opt_set_pac_request, version krb5_3_MIT not defined in file libkrb5.so.3 with link time reference

Running ldd $(which kinit) shows that kinit resolves libkrb5.so.3 from a directory other than /lib64. Running export LD_LIBRARY_PATH=/lib64:${LD_LIBRARY_PATH} fixes it.

https://community.broadcom.com/communities/community-home/digestviewer/viewthread?MID=797304#:~:text=To%20solve%20this%20error%2C%20the%20only%20workaround%20found,to%20use%20system%20libraries%20%24%20export%20LD_LIBRARY_PATH%3D%2Flib64%3A%24%20%7BLD_LIBRARY_PATH%7D
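The ldd check can be scripted so that LD_LIBRARY_PATH is only changed when needed. A minimal sketch, assuming ldd prints lines of the form "libkrb5.so.3 => /path/libkrb5.so.3 (0x...)" and treating /lib64 and /usr/lib64 as the system locations; both function names are illustrative.

```shell
#!/bin/sh
# Read `ldd` output on stdin and print the path libkrb5.so.3 resolves to.
krb5_lib_path() {
    awk '$1 == "libkrb5.so.3" && $2 == "=>" { print $3; exit }'
}

# Return 0 if the path is a system copy (assumed: /lib64 or /usr/lib64).
is_system_krb5() {
    case $1 in
        /lib64/*|/usr/lib64/*) return 0 ;;
        *)                     return 1 ;;
    esac
}

# Usage:
#   path=$(ldd "$(which kinit)" | krb5_lib_path)
#   is_system_krb5 "$path" || export LD_LIBRARY_PATH=/lib64:${LD_LIBRARY_PATH}
```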

Invalid UID in the ccache name

kinit: Invalid Uid in persistent keyring name while getting default ccache

Change the value of default_ccache_name in /etc/krb5.conf, for example to a local file as the answer linked below suggests.

https://unix.stackexchange.com/questions/712857/kinit-invalid-uid-in-persistent-keyring-name-while-getting-default-ccache-while#:~:text=If%20running%20unset%20KRB5CCNAME%20did%20not%20resolve%20it%2C,of%20%22default_ccache_name%22%20in%20%2Fetc%2Fkrb5.conf%20to%20a%20local%20file%3A
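One way to make that change is sketched below. It assumes the file already contains an active default_ccache_name line, and it uses FILE:/tmp/krb5cc_%{uid} as the new value, which matches the FILE:/tmp/krb5cc_0 cache shown in the klist output earlier; the function name is illustrative. If the setting is absent the file is left unchanged and the line has to be added under [libdefaults] by hand.

```shell
#!/bin/sh
# Replace the value of an existing default_ccache_name line, keeping a
# .bak copy of the original file. The value must not contain '|', '&' or '\'.
set_default_ccache_name() {
    conf=$1
    value=$2
    cp "$conf" "$conf.bak" || return 1
    sed "s|^[[:space:]]*default_ccache_name[[:space:]]*=.*|default_ccache_name = $value|" \
        "$conf.bak" > "$conf"
}

# Usage:
#   set_default_ccache_name /etc/krb5.conf 'FILE:/tmp/krb5cc_%{uid}'
```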

KrbException: Message stream modified (41)

This is reportedly related to the JRE version. Removing the renew_lifetime = xxx line from the krb5.conf configuration file resolves it.
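Commenting the line out has the same effect as deleting it and is easier to undo. A minimal sketch, with an illustrative function name, that writes the modified file to stdout so the change can be reviewed before it replaces /etc/krb5.conf:

```shell
#!/bin/sh
# Print the given krb5.conf-style file with any active renew_lifetime
# line commented out. The input file itself is not modified.
comment_out_renew_lifetime() {
    sed 's|^\([[:space:]]*\)\(renew_lifetime[[:space:]]*=.*\)$|\1# \2|' "$1"
}

# Usage:
#   comment_out_renew_lifetime /etc/krb5.conf > /tmp/krb5.conf.new
#   diff /etc/krb5.conf /tmp/krb5.conf.new   # review, then copy into place
```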

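5.2 Hadoop-related errors

When a service on some node fails, check that service's log (or the manager log) for exceptions. For example, if certain .so files cannot be found, supplying the missing files fixes the problem.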

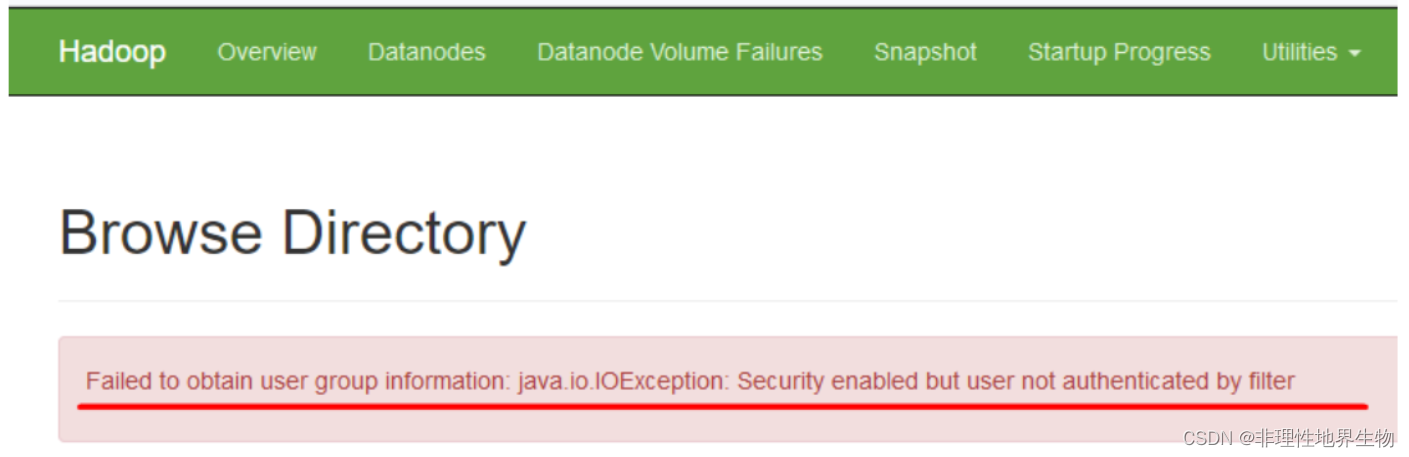
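Scanning a log for problems can be reduced to a small helper. A minimal sketch, assuming the logs live under $HADOOP_HOME/logs (the Hadoop default when HADOOP_LOG_DIR is not set); the function name recent_errors and the search pattern are illustrative.

```shell
#!/bin/sh
# Print the last N lines (default 20) of a log file that mention an
# error or exception, prefixed with their line numbers.
recent_errors() {
    log_file=$1
    count=${2:-20}
    grep -n -E 'ERROR|FATAL|Exception' "$log_file" | tail -n "$count"
}

# Usage: show the five most recent problems in every daemon log.
#   for f in "$HADOOP_HOME"/logs/*.log; do echo "== $f"; recent_errors "$f" 5; done
```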

The HDFS web UI reports the error: Failed to obtain user group information: java.io.IOException: Security enabled but user not authenticated by filter

https://issues.apache.org/jira/browse/HDFS-16441


6. Submit Spark on YARN

1. Submit directly:

Command:

bin/spark-submit \
  --master yarn \
  --deploy-mode cluster \
  --class org.apache.spark.examples.SparkPi \
  examples/jars/spark-examples_2.12-3.3.0.jar

[root@bigdata3 spark-3.3.0-bin-hadoop3]# bin/spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.12-3.3.0.jar
23/10/12 13:53:05 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
23/10/12 13:53:05 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at bigdata0.example.com/192.168.50.7:8032
23/10/12 13:53:06 WARN Client: Exception encountered while connecting to the server
org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:179)
    at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:392)
    at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:623)
    at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:414)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:843)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:839)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:839)
    at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)
    at org.apache.hadoop.ipc.Client.call(Client.java:1502)
    at org.apache.hadoop.ipc.Client.call(Client.java:1455)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)
    at com.sun.proxy.$Proxy24.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getNewApplication(ApplicationClientProtocolPBClientImpl.java:286)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy25.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.getNewApplication(YarnClientImpl.java:284)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.createApplication(YarnClientImpl.java:292)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:200)
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1327)
    at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1764)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Exception in thread "main" java.io.IOException: DestHost:destPort bigdata0.example.com:8032 , LocalHost:localPort bigdata3.example.com/192.168.50.10:0. Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:913)
    at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:888)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1616)
    at org.apache.hadoop.ipc.Client.call(Client.java:1558)
    at org.apache.hadoop.ipc.Client.call(Client.java:1455)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)
    at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)
    at com.sun.proxy.$Proxy24.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getNewApplication(ApplicationClientProtocolPBClientImpl.java:286)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy25.getNewApplication(Unknown Source)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.getNewApplication(YarnClientImpl.java:284)
    at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.createApplication(YarnClientImpl.java:292)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:200)
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1327)
    at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1764)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:798)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:752)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:856)
    at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)
    at org.apache.hadoop.ipc.Client.call(Client.java:1502)
    ... 27 more
Caused by: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
    at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:179)
    at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:392)
    at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:623)
    at org.apache.hadoop.ipc.Client$Connection.access$2300(Client.java:414)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:843)
    at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:839)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:839)
    ... 30 more
23/10/12 13:53:06 INFO ShutdownHookManager: Shutdown hook called
23/10/12 13:53:06 INFO ShutdownHookManager: Deleting directory /tmp/spark-b9b19f53-4839-4cf0-82d0-065f4956f830
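The failure above ends in "Client cannot authenticate via:[TOKEN, KERBEROS]", which is what a Hadoop client typically reports when it has no usable Kerberos credentials. A likely cause here is that no valid ticket was in the credential cache when spark-submit ran. The sketch below guards a command with klist -s, which prints nothing and only sets its exit status; the function names are illustrative.

```shell
#!/bin/sh
# Return 0 if the credential cache can be read and is not expired.
has_valid_ticket() {
    klist -s
}

# Run the given command only when a valid ticket is present, so the
# failure is reported up front rather than inside the RPC layer.
submit_if_authenticated() {
    if ! has_valid_ticket; then
        echo "No valid Kerberos ticket; run kinit first or pass a keytab." >&2
        return 1
    fi
    "$@"
}

# Usage:
#   submit_if_authenticated bin/spark-submit --master yarn --deploy-mode cluster \
#       --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.12-3.3.0.jar
```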

2. Submit with the spark.kerberos.keytab and spark.kerberos.principal parameters.

On the submitting machine, create a Kerberos principal and export its keytab to submit with:

[root@bigdata3 spark-3.3.0-bin-hadoop3]# kadmin.local
Authenticating as principal root/admin@EXAMPLE.COM with password.
kadmin.local: addprinc -randkey nn/bigdata3.example.com@EXAMPLE.COM
WARNING: no policy specified for nn/bigdata3.example.com@EXAMPLE.COM; defaulting to no policy
Principal "nn/bigdata3.example.com@EXAMPLE.COM" created.
kadmin.local: ktadd -k /etc/security/keytabs/nn.service.keytab nn/bigdata3.example.com@EXAMPLE.COM
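Before handing the keytab to Spark, it can be worth confirming that it really contains the principal just exported. A minimal sketch, assuming the usual klist -k layout (a "Keytab name" line, a column header, a dashed rule, then one "KVNO principal" row per key); the function names are illustrative.

```shell
#!/bin/sh
# Print the distinct principals stored in a keytab, one per line.
# Only rows whose first field is a number (the KVNO) are considered.
keytab_principals() {
    klist -k "$1" | awk '$1 ~ /^[0-9]+$/ { print $2 }' | sort -u
}

# Return 0 if the keytab contains exactly the given principal name.
keytab_has_principal() {
    keytab_principals "$1" | grep -qxF "$2"
}

# Usage: verify the keytab, then prove it can obtain a ticket.
#   keytab_has_principal /etc/security/keytabs/nn.service.keytab nn/bigdata3.example.com@EXAMPLE.COM \
#       && kinit -kt /etc/security/keytabs/nn.service.keytab nn/bigdata3.example.com@EXAMPLE.COM
```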

Submit command:

bin/spark-submit \
  --master yarn \
  --deploy-mode cluster \
  --conf spark.kerberos.keytab=/etc/security/keytabs/nn.service.keytab \
  --conf spark.kerberos.principal=nn/bigdata3.example.com@EXAMPLE.COM \
  --class org.apache.spark.examples.SparkPi \
  examples/jars/spark-examples_2.12-3.3.0.jar

Result:

[root@bigdata3 spark-3.3.0-bin-hadoop3]# bin/spark-submit --master yarn --deploy-mode cluster --conf spark.kerberos.keytab=/etc/security/keytabs/nn.service.keytab --conf spark.kerberos.principal=nn/bigdata3.example.com@EXAMPLE.COM --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.12-3.3.0.jar
23/10/12 13:53:30 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
23/10/12 13:53:31 INFO Client: Kerberos credentials: principal = nn/bigdata3.example.com@EXAMPLE.COM, keytab = /etc/security/keytabs/nn.service.keytab
23/10/12 13:53:31 INFO DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at bigdata0.example.com/192.168.50.7:8032
23/10/12 13:53:32 INFO Configuration: resource-types.xml not found
23/10/12 13:53:32 INFO ResourceUtils: Unable to find 'resource-types.xml'.
23/10/12 13:53:32 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
23/10/12 13:53:32 INFO Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead
23/10/12 13:53:32 INFO Client: Setting up container launch context for our AM
23/10/12 13:53:32 INFO Client: Setting up the launch environment for our AM container
23/10/12 13:53:32 INFO Client: Preparing resources for our AM container
23/10/12 13:53:32 INFO Client: To enable the AM to login from keytab, credentials are being copied over to the AM via the YARN Secure Distributed Cache.
23/10/12 13:53:32 INFO Client: Uploading resource file:/etc/security/keytabs/nn.service.keytab -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/nn.service.keytab
23/10/12 13:53:34 WARN Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
23/10/12 13:53:44 INFO Client: Uploading resource file:/tmp/spark-83cea039-6e72-4958-b097-4537a008e792/__spark_libs__1911433509069709237.zip -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/__spark_libs__1911433509069709237.zip
23/10/12 13:54:16 INFO Client: Uploading resource file:/bigdata/spark-3.3.0-bin-hadoop3/examples/jars/spark-examples_2.12-3.3.0.jar -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/spark-examples_2.12-3.3.0.jar
23/10/12 13:54:16 INFO Client: Uploading resource file:/tmp/spark-83cea039-6e72-4958-b097-4537a008e792/__spark_conf__8266642042189366964.zip -> hdfs://bigdata0.example.com:8020/user/hdfs/.sparkStaging/application_1697089814250_0001/__spark_conf__.zip
23/10/12 13:54:17 INFO SecurityManager: Changing view acls to: root,hdfs
23/10/12 13:54:17 INFO SecurityManager: Changing modify acls to: root,hdfs
23/10/12 13:54:17 INFO SecurityManager: Changing view acls groups to:
23/10/12 13:54:17 INFO SecurityManager: Changing modify acls groups to:
23/10/12 13:54:17 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root, hdfs); groups with view permissions: Set(); users with modify permissions: Set(root, hdfs); groups with modify permissions: Set()
23/10/12 13:54:17 INFO HadoopDelegationTokenManager: Attempting to login to KDC using principal: nn/bigdata3.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO HadoopDelegationTokenManager: Successfully logged into KDC.
23/10/12 13:54:17 INFO HiveConf: Found configuration file null
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1847188333_1, ugi=nn/bigdata3.example.com@EXAMPLE.COM (auth:KERBEROS)]] with renewer rm/bigdata0.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO DFSClient: Created token for hdfs: HDFS_DELEGATION_TOKEN owner=nn/bigdata3.example.com@EXAMPLE.COM, renewer=yarn, realUser=, issueDate=1697090057412, maxDate=1697694857412, sequenceNumber=7, masterKeyId=8 on 192.168.50.7:8020
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: getting token for: DFS[DFSClient[clientName=DFSClient_NONMAPREDUCE_1847188333_1, ugi=nn/bigdata3.example.com@EXAMPLE.COM (auth:KERBEROS)]] with renewer nn/bigdata3.example.com@EXAMPLE.COM
23/10/12 13:54:17 INFO DFSClient: Created token for hdfs: HDFS_DELEGATION_TOKEN owner=nn/bigdata3.example.com@EXAMPLE.COM, renewer=hdfs, realUser=, issueDate=1697090057438, maxDate=1697694857438, sequenceNumber=8, masterKeyId=8 on 192.168.50.7:8020
23/10/12 13:54:17 INFO HadoopFSDelegationTokenProvider: Renewal interval is 86400032 for token HDFS_DELEGATION_TOKEN
23/10/12 13:54:19 INFO Client: Submitting application application_1697089814250_0001 to ResourceManager
23/10/12 13:54:21 INFO YarnClientImpl: Submitted application application_1697089814250_0001
23/10/12 13:54:22 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:22 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: N/A
    ApplicationMaster host: N/A
    ApplicationMaster RPC port: -1
    queue: root.hdfs
    start time: 1697090060093
    final status: UNDEFINED
    tracking URL: https://bigdata0.example.com:8090/proxy/application_1697089814250_0001/
    user: hdfs
23/10/12 13:54:23 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:24 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:25 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:26 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:27 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:28 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:29 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:30 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:31 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:32 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:33 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:34 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:35 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:37 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:38 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:39 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:40 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:41 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:42 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:43 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:45 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:46 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:47 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:48 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:50 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:51 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:52 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:53 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:54 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:55 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:56 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:57 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:58 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:54:59 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:01 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:02 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:03 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:04 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:05 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:06 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:07 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:08 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:09 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:10 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:11 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:12 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:13 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:14 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:15 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:16 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:17 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:18 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:19 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:20 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:22 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:23 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:24 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:25 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:26 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:27 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:28 INFO Client: Application report for application_1697089814250_0001 (state: ACCEPTED)
23/10/12 13:55:29 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:29 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: N/A
    ApplicationMaster host: bigdata0.example.com
    ApplicationMaster RPC port: 35967
    queue: root.hdfs
    start time: 1697090060093
    final status: UNDEFINED
    tracking URL: https://bigdata0.example.com:8090/proxy/application_1697089814250_0001/
    user: hdfs
23/10/12 13:55:30 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:31 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:32 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:33 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:34 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:35 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:36 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:37 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:38 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:39 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:40 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:41 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:42 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:43 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:44 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:45 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:46 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:47 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:48 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:49 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:50 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:51 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:52 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:53 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:54 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:55 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:56 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:57 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:58 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:55:59 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:56:00 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:56:01 INFO Client: Application report for application_1697089814250_0001 (state: RUNNING)
23/10/12 13:56:02 INFO Client: Application report for application_1697089814250_0001 (state: FINISHED)
23/10/12 13:56:02 INFO Client:
    client token: N/A
    diagnostics: N/A
    ApplicationMaster host: bigdata0.example.com
    ApplicationMaster RPC port: 35967
    queue: root.hdfs
    start time: 1697090060093
    final status: SUCCEEDED
    tracking URL: https://bigdata0.example.com:8090/proxy/application_1697089814250_0001/
    user: hdfs
23/10/12 13:56:02 INFO ShutdownHookManager: Shutdown hook called
23/10/12 13:56:02 INFO ShutdownHookManager: Deleting directory /tmp/spark-83cea039-6e72-4958-b097-4537a008e792
23/10/12 13:56:02 INFO ShutdownHookManager: Deleting directory /tmp/spark-90b58a61-a7ef-43a9-b470-5c3fa4ea03ac