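This post locates license plates in images using traditional computer-vision methods, drawing on a few articles found online. On the web images I have tested so far, the localization works reasonably well. In testing, it performs poorly when the car body itself is blue or when there is a pronounced edge just above the plate. This is purely a practice project, for reference only. In the code, preprocessing with imagePreProcess gives a better localization rate than imagePreProcess2 on some images. A possible follow-up is to fall back to imagePreProcess when imagePreProcess2 fails to produce a valid result, or to add an adaptive-threshold scoring mechanism. For my practice project, the current version is good enough.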
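The fallback idea mentioned above could look roughly like the sketch below. It is only an illustration under assumptions: `locate_with_fallback` and `plausible_plate` are hypothetical names that do not appear in the original code, and the validity rule (a box that is wider than it is tall) is a guess at what a "valid" result might mean.

```python
def locate_with_fallback(img, preprocessors, locate, is_valid):
    """Try each preprocessing function in order and return the first valid box.

    preprocessors: list of functions img -> processed image
    locate:        function (processed, original) -> [x0, y0, x1, y1]
    is_valid:      function box -> bool (hypothetical acceptance rule)
    """
    for preprocess in preprocessors:
        try:
            box = locate(preprocess(img), img)
        except Exception:
            # Treat a crash inside the locator as "nothing found" for this pass
            continue
        if box is not None and is_valid(box):
            return box
    return None


def plausible_plate(box):
    """Hypothetical check: the box has positive size and is wider than tall."""
    x0, y0, x1, y1 = box
    width, height = x1 - x0, y1 - y0
    return width > 0 and height > 0 and width > height
```

With the functions from this post, a call might be `locate_with_fallback(imgCarPlate, [imagePreProcess2, imagePreProcess], locate_plate, plausible_plate)`.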

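Note: I wrote the code below in my own way after reading several articles online, and the localization results are acceptable. The articles I referred to are: python-opencv实战:车牌识别(一):精度还不错的车牌定位_基于阈值分割的车牌定位识别-CSDN博客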

https://www.cnblogs.com/fyunaru/p/12083856.html

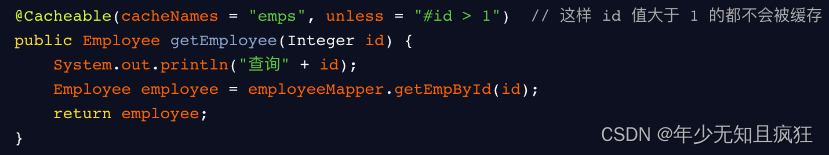

import cv2 as cv
import numpy as np
import matplotlib.pyplot as plt

# Parameters for filtering rectangles
minRectW = 100
minRectH = 50

# Parameters for judging the plate color
# The H component of a blue plate usually hovers around 115
# The S and V components vary a lot with lighting (in OpenCV, H ranges from 0 to 179 rather than the usual 0 to 360; S and V range from 0 to 255)
deltaH = 15
hsvLower = np.array([115 - deltaH,60,60])
hsvUpper = np.array([115 + deltaH,255,255])
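To make the HSV bounds concrete, here is a small NumPy-only sketch of the per-pixel test that `cv.inRange` applies with these values (every channel must lie within the bounds, inclusive). The helpers `in_hsv_range` and `blue_fraction` are hypothetical names used only for illustration, and the 2x2 patch is made-up data.

```python
import numpy as np

delta_h = 15
hsv_lower = np.array([115 - delta_h, 60, 60])
hsv_upper = np.array([115 + delta_h, 255, 255])


def in_hsv_range(hsv, lower, upper):
    """Return a uint8 mask: 255 where all three channels are within bounds, else 0."""
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


def blue_fraction(mask):
    """Share of pixels that passed the test (the mask mean divided by 255)."""
    return float(np.count_nonzero(mask)) / mask.size


# Made-up 2x2 HSV patch on the OpenCV scale (H in 0..179, S and V in 0..255)
patch = np.array([[[115, 200, 200], [100, 60, 60]],
                  [[99, 200, 200], [120, 30, 200]]], dtype=np.uint8)
mask = in_hsv_range(patch, hsv_lower, hsv_upper)
print(mask)
print(blue_fraction(mask))
```

The top row passes (the second pixel sits exactly on the lower bounds), while the bottom row fails: one pixel has H just below 100 and the other has S below 60.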

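# Gray-level stretch
def grayScaleStretch(img):
    maxGray = float(img.max())
    minGray = float(img.min())
    # A flat image has nothing to stretch; returning early also avoids dividing by zero
    if maxGray == minGray:
        return img
    for i in range(img.shape[0]):
        for j in range(img.shape[1]):
            img[i,j] = 255 / (maxGray - minGray) * (img[i,j] - minGray)
    return img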
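The per-pixel Python loop in grayScaleStretch is slow on large images. A vectorized variant might look like the sketch below. `gray_scale_stretch` is a hypothetical name, and two behaviors are assumptions that differ from the loop version: it rounds to the nearest integer instead of truncating, and it returns a new array instead of modifying the input.

```python
import numpy as np


def gray_scale_stretch(img):
    """Linearly map the range [min, max] of a uint8 image onto [0, 255]."""
    min_gray = float(img.min())
    max_gray = float(img.max())
    if max_gray == min_gray:
        # Flat image: nothing to stretch, and no division by zero
        return img.copy()
    scaled = (img.astype(np.float64) - min_gray) * 255.0 / (max_gray - min_gray)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


sample = np.array([[50, 100], [150, 200]], dtype=np.uint8)
print(gray_scale_stretch(sample))
```

For the sample above, the darkest value maps to 0, the brightest to 255, and the two values in between land proportionally at 85 and 170.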

# Binarize the image
def image2Binary(img):
    # Use the midpoint between the minimum and maximum gray levels as the threshold
    maxGray = float(img.max())
    minGray = float(img.min())
    threshold = (minGray + maxGray) / 2
    ret, binary = cv.threshold(img, threshold, 255, cv.THRESH_BINARY)
    return binary

# Image preprocessing
def imagePreProcess(img):
    # Convert to grayscale
    imgGray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
    # Gray-level stretch
    imgGray = grayScaleStretch(imgGray)
    #plt.imshow(imgGray, cmap='gray')
    kernel = cv.getStructuringElement(cv.MORPH_ELLIPSE, (3,3))
    # Morphological opening
    imgOpen = cv.morphologyEx(imgGray, cv.MORPH_OPEN, kernel)
    #plt.imshow(imgOpen, cmap='gray')
    # Difference image between the grayscale image and its opening
    imgDiff = cv.absdiff(imgGray, imgOpen)
    #plt.imshow(imgDiff, cmap='gray')
    imgDiff = cv.GaussianBlur(imgDiff, (3,3), 5)
    #plt.imshow(imgDiff, cmap='gray')
    # Binarize the image
    imgBinary = image2Binary(imgDiff)
    #plt.imshow(imgBinary, cmap='gray')
    cannyEdges = cv.Canny(imgBinary, 127, 200)
    #plt.imshow(cannyEdges, cmap='gray')
    # Clean up the Canny edge result
    kernel = np.ones((3,3), np.uint8)
    imgOut = cv.morphologyEx(cannyEdges, cv.MORPH_CLOSE, kernel)
    imgOut = cv.dilate(imgOut, kernel, iterations=1)
    imgOut = cv.morphologyEx(imgOut, cv.MORPH_OPEN, kernel)
    #imgOut = cv.erode(imgOut, kernel, iterations=1)
    imgOut = cv.morphologyEx(imgOut, cv.MORPH_CLOSE, kernel)
    #plt.imshow(imgOut, cmap='gray')
    return imgOut
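The opening followed by `cv.absdiff` in imagePreProcess amounts to a white top-hat transform: small bright details that the opening removes are what survive in the difference image. OpenCV also exposes this directly as `cv.MORPH_TOPHAT`. The NumPy-only sketch below illustrates the effect on made-up data. All helper names are hypothetical, and for brevity it assumes a full 3x3 square window with edge padding, whereas the post uses a `cv.MORPH_ELLIPSE` kernel.

```python
import numpy as np


def _windows3x3(img):
    """Stack the nine shifted copies of img that make up each 3x3 neighborhood."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def erode3x3(img):
    return _windows3x3(img).min(axis=0)


def dilate3x3(img):
    return _windows3x3(img).max(axis=0)


def open3x3(img):
    # Opening = erosion followed by dilation
    return dilate3x3(erode3x3(img))


def top_hat3x3(img):
    # The opening never exceeds the input, so this cannot underflow on uint8
    return img - open3x3(img)


img = np.zeros((9, 9), dtype=np.uint8)
img[1, 1] = 200          # isolated bright pixel: removed by the opening
img[4:8, 4:8] = 100      # 4x4 bright block: survives the opening
top = top_hat3x3(img)
print(top)
```

Only the isolated pixel remains in `top`; the large block cancels out because the opening reproduces it exactly.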

# Image preprocessing 2 - for some ...
def imagePreProcess2(img):
    imgGray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
    # Gray-level stretch
    imgGray = grayScaleStretch(imgGray)
    imgGray = cv.GaussianBlur(imgGray, (3,3), 5)
    # Edge detection
    cannyEdges = cv.Canny(imgGray, 180, 230)
    # Binarize
    imgBinary = image2Binary(cannyEdges)
    #plt.imshow(imgBinary, cmap='gray')
    # Closing first, then opening
    kernel = np.ones((3,3), np.uint8)
    imgOut = cv.morphologyEx(imgBinary, cv.MORPH_CLOSE, kernel)
    imgOut = cv.morphologyEx(imgOut, cv.MORPH_OPEN, kernel)
    imgOut = cv.absdiff(imgBinary, imgOut)
    imgOut = cv.morphologyEx(imgOut, cv.MORPH_CLOSE, kernel)
    imgOut = cv.dilate(imgOut, kernel, iterations=1)
    plt.imshow(imgOut, cmap='gray')
    return imgOut

# debug
def printHSV(hsvSrc):
    for i in range(hsvSrc.shape[0]):
        for j in range(hsvSrc.shape[1]):
            (h,s,v) = hsvSrc[i][j]
            print(h,s,v)

# Locate the license plate
def locate_plate(imgProcessing, imgOriginal):
    contours,hierarchy = cv.findContours(imgProcessing, cv.RETR_EXTERNAL, cv.CHAIN_APPROX_SIMPLE)
    carPlateCandidates = []
    for contour in contours:
        (x,y,w,h) = cv.boundingRect(contour)
        # Filter out small rectangles
        if (w < minRectW or h < minRectH):
            continue
        #cv.rectangle(imgOriginal, (int(x), int(y)), (int(x + w),int(y + h)), (0,255,0), 2)
        carPlateCandidates.append([int(x),int(y),int(x + w),int(y + h)])
    #plt.imshow(imgOriginal[:,:,::-1])
    maxMean = 0
    target = []
    target_mask = []
    # Check each candidate in turn, using the HSV color space to decide whether it is a plate
    for candidate in carPlateCandidates:
        (x0,y0,x1,y1) = candidate
        candidateROI = imgOriginal[y0:y1,x0:x1]
        hsvROI = cv.cvtColor(candidateROI, cv.COLOR_BGR2HSV)
        mask = cv.inRange(hsvROI, hsvLower, hsvUpper)
        #plt.imshow(mask, cmap='gray')
        # Use the mask mean to find the region with the most blue
        mean = cv.mean(mask)
        if mean[0] > maxMean:
            maxMean = mean[0]
            target = candidate
            target_mask = mask
    # No candidate contains any blue: stop with a clear message
    if len(target) == 0:
        raise ValueError("no blue plate candidate found")
    # Shrink target to the coordinates where blue first and last appears
    nonZeroPoints = cv.findNonZero(target_mask)  # shape (N, 1, 2), each point is (x, y)
    # Take the min/max of each coordinate directly
    xMin = int(nonZeroPoints[:,0,0].min())
    xMax = int(nonZeroPoints[:,0,0].max())
    yMin = int(nonZeroPoints[:,0,1].min())
    yMax = int(nonZeroPoints[:,0,1].max())
    print("X min:" + str(xMin) + " X max:" + str(xMax) + " Y min:" + str(yMin) + " Y max:" + str(yMax))
    (x0,y0,x1,y1) = target
    print("Original:" + str(x0) + "," + str(y0) + "," + str(x1) + "," + str(y1))
    target = [x0 + xMin, y0 + yMin, x0 + xMax, y0 + yMax]
    return target
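The last step of locate_plate, shrinking the candidate box to the extent of the blue pixels, can be seen in isolation in the NumPy-only sketch below. `tight_bounds` is a hypothetical helper, and the mask and the ROI offset `(100, 50)` are made-up values for illustration.

```python
import numpy as np


def tight_bounds(mask):
    """Return (x_min, y_min, x_max, y_max) of the non-zero pixels, or None if there are none."""
    ys, xs = np.nonzero(mask)  # row indices are y, column indices are x
    if xs.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Made-up mask of a candidate ROI: blue occupies rows 2..3 and columns 3..6
mask = np.zeros((6, 8), dtype=np.uint8)
mask[2:4, 3:7] = 255
x_min, y_min, x_max, y_max = tight_bounds(mask)

# Map the ROI-relative bounds back to full-image coordinates
roi_x0, roi_y0 = 100, 50
plate_box = [roi_x0 + x_min, roi_y0 + y_min, roi_x0 + x_max, roi_y0 + y_max]
print(plate_box)
```

Returning plain Python `int` values keeps the box safe to pass to drawing functions that may reject NumPy integer types.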

# Read the image
imgCarPlate = cv.imread("../../SampleImages/carplate/carplate_chongqing.jpg", cv.IMREAD_COLOR)

#plt.imshow(imgCarPlate[:,:,::-1])

img4locate = imagePreProcess2(imgCarPlate)

target = locate_plate(img4locate, imgCarPlate)

(x0,y0,x1,y1) = target

cv.rectangle(imgCarPlate, (x0,y0), (x1,y1), (0,255,0), 2)

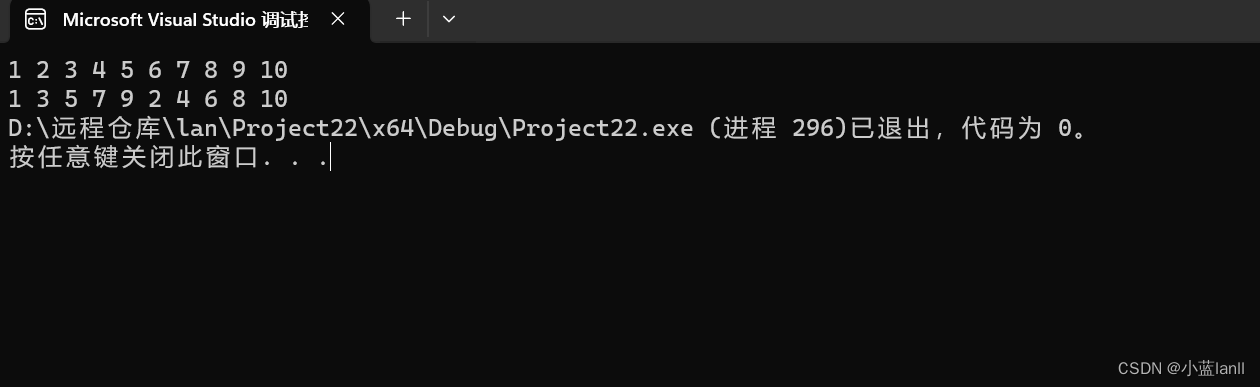

plt.imshow(imgCarPlate[:,:,::-1])

A successful example:

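A less successful example (the contour detection is poor, and blue values appear too early inside the contour; this could be improved by requiring a continuous run of blue rather than scattered blue pixels)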
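One way to prefer continuous blue over scattered blue is sketched below: mark a column (or row) as blue only if enough of its pixels are blue, then keep the longest unbroken run of such columns and rows. This is an illustration, not the method used in the post. The names `longest_run` and `continuous_blue_bounds` and the `min_fraction=0.5` threshold are all assumptions that would need tuning on real images.

```python
import numpy as np


def longest_run(flags):
    """Return (start, end), inclusive, of the longest run of True values, or None."""
    best = None
    start = None
    # The trailing False closes a run that reaches the end of the sequence
    for i, flag in enumerate(list(flags) + [False]):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)
            start = None
    return best


def continuous_blue_bounds(mask, min_fraction=0.5):
    """Bounds (x_min, y_min, x_max, y_max) of the longest run of mostly-blue columns and rows."""
    blue = mask > 0
    xs = longest_run(blue.mean(axis=0) >= min_fraction)  # per-column blue share
    ys = longest_run(blue.mean(axis=1) >= min_fraction)  # per-row blue share
    if xs is None or ys is None:
        return None
    return xs[0], ys[0], xs[1], ys[1]


# Made-up mask: a solid blue block plus two stray blue pixels
mask = np.zeros((6, 12), dtype=np.uint8)
mask[1:5, 4:11] = 255
mask[0, 0] = 255
mask[5, 1] = 255
print(continuous_blue_bounds(mask))
```

A plain min/max over all non-zero pixels would stretch the box to the stray pixels at the corners, while the run-based bounds stay on the solid block.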

A failed example (the small contour was not detected because the car body itself is blue; switching to imagePreProcess makes it succeed):