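Literature Digest: Applications of Generative Adversarial Networks in Medical Imaging. CG-3DSRGAN: A Classification-Guided 3D Generative Adversarial Network for Image Quality Recovery from Low-Dose PET Images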

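This week's literature digest covers applications of generative adversarial networks (GANs) in medical imaging. The selected papers address same-modality image generation, cross-modality image generation, and the use of GANs in classification and segmentation. Compared with other methods, GANs have shown superior data generation capability, which has made them popular in medical imaging applications. These properties have attracted strong interest from medical imaging researchers and led to rapid adoption of the techniques in a range of traditional and novel applications, such as image reconstruction, segmentation, detection, classification, and cross-modality synthesis.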

01

Introduction

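Positron emission tomography (PET) is an ultra-sensitive, non-invasive molecular imaging technique that has become a major tool in oncology and neurology. Compared with other imaging modalities such as magnetic resonance (MR) and computed tomography (CT), PET can image the functional properties of living tissue and detect disease-related functional activity within organs by means of a radioactive tracer injected into the body. Unfortunately, the ionizing radiation dose that the injected tracer delivers to the patient limits its use. Depending on the tracer dose level, reconstructed PET images can be classified as standard-dose PET (sPET) or low-dose PET (lPET) images. Compared with lPET, sPET images have a higher signal-to-noise ratio (SNR), more structural detail, and better overall image quality. However, sPET inevitably leads to high cumulative radiation exposure. Motivated by these challenges, image analysis methods have been developed that aim to recover sPET images from lPET images while keeping the injected dose low.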
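To give a rough sense of why lowering the dose lowers the SNR, the sketch below uses an idealized assumption that is not stated in the paper: detected counts follow Poisson statistics and scale linearly with the injected dose. Under that assumption the SNR of a count is the square root of its mean, so dividing the dose by a dose reduction factor (DRF) divides the SNR by the square root of the DRF. The function names and the count value are illustrative only, and real reconstructed PET images deviate from this simple model.

```python
import math


def poisson_snr(mean_counts: float) -> float:
    """SNR of a Poisson-distributed count: mean / std = sqrt(mean)."""
    return mean_counts / math.sqrt(mean_counts)


def snr_after_dose_reduction(full_dose_counts: float, drf: float) -> float:
    """SNR when the expected counts are divided by a dose reduction factor."""
    return poisson_snr(full_dose_counts / drf)


full = poisson_snr(10_000)                    # hypothetical full-dose count level
low = snr_after_dose_reduction(10_000, 100)   # same voxel at DRF 100
print(full, low, full / low)                  # ratio equals sqrt(100)
```

In this toy setting a DRF of 100 costs a factor of 10 in SNR, which is the gap a recovery network is asked to close.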

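Deep learning methods based on convolutional neural networks (CNNs) have achieved great success in medical image analysis tasks such as automatic tumor segmentation and classification. Researchers have also used deep learning to recover sPET images from lPET images. Xiang et al. combined multiple CNN modules with an auto-context strategy to estimate sPET over several iterations; they adopted a U-Net architecture with dilated kernels to enlarge the receptive field. Unfortunately, the pooling layers in CNNs reduce the spatial resolution of feature maps, which can cause loss of information and of details such as edges and texture. To address these challenges, researchers have turned to generative adversarial networks (GANs), which extend the network with a discriminator that distinguishes real from synthetic images so that structural details are preserved. Bi et al. developed a multi-channel GAN to synthesize high-quality PET from the corresponding CT scans and tumor labels. Using a conditional GAN model and an adversarial training strategy, Wang et al. were able to recover full-dose PET images from low-dose PET. However, GAN-based methods have difficulty recovering high-dimensional details such as contextual information and clinically meaningful texture features, for example image intensity values. This is mainly because these methods do not explore the spatial correlation between sPET and lPET images. To overcome GAN-induced artifacts, a second-stage refinement is an ideal way to correct the mapping errors introduced in the previous stage. Motivated by this unmet need, we designed a super-resolution network, Contextual-Net, which aims to reconstruct more contextual detail so that the refined synthetic PET is spatially aligned with the real sPET.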
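The remark that dilated kernels enlarge the receptive field can be checked with a little arithmetic. The sketch below is a generic calculation, not code from any of the cited papers: a kernel of size k with dilation d spans k + (k - 1)(d - 1) positions, and stacked layers accumulate receptive field according to their strides. The layer configurations are hypothetical examples.

```python
def effective_kernel(kernel_size: int, dilation: int) -> int:
    """Span covered by one dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def receptive_field(layers) -> int:
    """Receptive field along one axis of stacked convolution layers.

    `layers` is a list of (kernel_size, dilation, stride) tuples in order.
    """
    rf, jump = 1, 1  # jump: distance between adjacent outputs, in input pixels
    for kernel_size, dilation, stride in layers:
        rf += (effective_kernel(kernel_size, dilation) - 1) * jump
        jump *= stride
    return rf


# Three ordinary 3-wide convolutions versus the same stack with dilations 1, 2, 4.
plain = receptive_field([(3, 1, 1)] * 3)
dilated = receptive_field([(3, 1, 1), (3, 2, 1), (3, 4, 1)])
print(plain, dilated)  # 7 15
```

With the same number of weights, the dilated stack sees roughly twice as much context per axis, and it does so without the pooling that discards spatial resolution.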

Task-driven strategies have been actively studied in medical image analysis. Essentially, task-driven approaches introduce ...

Title

CG-3DSRGAN: A classification guided 3D generative adversarial network for image quality recovery from low-dose PET images

Abstract

Positron emission tomography (PET) is the most sensitive molecular imaging modality routinely applied in modern healthcare. High radioactivity caused by the injected tracer dose is a major concern in PET imaging and limits its clinical applications. However, reducing the dose leads to inadequate image quality for diagnostic practice. Motivated by the need to produce high-quality images with a minimal dose, convolutional neural network (CNN) based methods have been developed for synthesizing high-quality PET from its low-dose counterparts. Previous CNN-based studies usually map low-dose PET directly into feature space without considering the different dose reduction levels. In this study, a novel approach named CG-3DSRGAN (Classification-Guided Generative Adversarial Network with Super Resolution Refinement) is presented. Specifically, a multi-tasking coarse generator, guided by a classification head, allows for a more comprehensive understanding of the noise-level features present in the low-dose data, resulting in improved image synthesis. Moreover, to recover spatial details of standard PET, an auxiliary super resolution network, Contextual-Net, is proposed as a second training stage to narrow the gap between the coarse prediction and standard PET. We compared our method to state-of-the-art methods on whole-body PET with different dose reduction factors (DRFs). Experiments demonstrate that our method outperforms the others on all DRFs.
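The abstract does not spell out the training objective, so the following is only a sketch of how a classification-guided, multi-task generator loss might be assembled. It assumes an L1 voxel loss against the standard-dose target, a cross-entropy term for predicting the dose reduction level, and a non-saturating adversarial term. The function names, the weights `lambda_cls` and `lambda_adv`, and the toy data are all hypothetical and are not taken from the paper.

```python
import numpy as np


def softmax_cross_entropy(logits, label):
    """Cross-entropy for one sample; `logits` has shape (num_classes,)."""
    shifted = logits - logits.max()  # subtract the max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[label])


def coarse_generator_loss(pred, target, drf_logits, drf_label,
                          disc_score_fake, lambda_cls=0.1, lambda_adv=0.01):
    """Hypothetical combined objective; the weights are illustrative assumptions."""
    recon = float(np.abs(pred - target).mean())          # L1 voxel-wise loss
    cls = softmax_cross_entropy(drf_logits, drf_label)   # dose-level classification
    adv = float(-np.log(disc_score_fake + 1e-8))         # non-saturating GAN term
    total = recon + lambda_cls * cls + lambda_adv * adv
    return total, (recon, cls, adv)


rng = np.random.default_rng(0)
target = rng.random((4, 8, 8))        # toy 3D "standard-dose" patch
pred = target + 0.05                  # prediction off by a constant 0.05
logits = np.array([2.0, 0.0, 0.0])    # classification head favours class 0
total, (recon, cls, adv) = coarse_generator_loss(pred, target, logits, 0, 0.5)
print(total, recon, cls, adv)
```

The point of the auxiliary classification term is that the shared encoder is pushed to represent how noisy the input is, which is the "noise-level features" idea described in the abstract. How the actual model weights or schedules these terms is not stated here.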

Methods

In total, 90 patients from three centers (30 CT-MR prostate pairs/center) underwent treatment using volumetric modulated arc therapy for prostate cancer (PCa) (60 Gy in 20 fractions). T2 MRI images were acquired in addition to computed tomography (CT) images for treatment planning. The DL model was a 2D supervised conditional generative adversarial network (Pix2Pix). Patient images underwent preprocessing steps, including nonrigid registration. Seven different supervised models were trained, incorporating patients from one, two, or three centers. Each model was trained on 24 CT-MR prostate pairs. A generic model was trained using patients from all three centers. To compare sCT and CT, the mean absolute error in Hounsfield units was calculated for the entire pelvis, prostate, bladder, rectum, and bones. For dose analysis, mean dose differences of D99% for CTV, V95% for PTV, Dmax for rectum and bladder, and 3D gamma analysis (local, 1%/1mm) were calculated from CT and sCT. Furthermore, Wilcoxon tests were performed to compare the image and dose results obtained with the generic model to those with the other trained models.
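The image comparison described above, mean absolute error in Hounsfield units over the whole pelvis and over individual structures, can be sketched as follows. This is a minimal illustration on toy arrays: the function name `mae_hu`, the array shapes, and the mask are assumptions, and it presumes that the CT and sCT volumes are already registered on the same voxel grid.

```python
import numpy as np


def mae_hu(ct, sct, mask=None):
    """Mean absolute error in HU between CT and sCT, optionally inside a boolean mask."""
    diff = np.abs(np.asarray(sct, dtype=float) - np.asarray(ct, dtype=float))
    if mask is None:
        return float(diff.mean())
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        raise ValueError("mask selects no voxels")
    return float(diff[mask].mean())


ct = np.zeros((2, 4, 4))      # toy CT volume, all 0 HU
sct = ct.copy()
sct[:, :2, :] += 10.0         # sCT is 10 HU off in half of the volume
roi = np.zeros(ct.shape, dtype=bool)
roi[:, :2, :] = True          # hypothetical organ mask covering that half

whole = mae_hu(ct, sct)       # 5.0 over the whole volume
organ = mae_hu(ct, sct, roi)  # 10.0 inside the mask
print(whole, organ)
```

Reporting the error per structure matters because a small whole-volume average can hide a large error concentrated in one organ, as the toy example shows.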

Conclusions

In this work, we proposed a novel high-quality PET synthesis model, a classification-guided 3D super resolution GAN. Our results demonstrated that the proposed method outperforms existing state-of-the-art methods on standard PET synthesis from lPET, both quantitatively and qualitatively. As future work, we will investigate the use of residual estimation, which can further improve synthesized sPET quality by minimizing the distribution gap between prediction and ground truth. A self-supervised pre-training strategy may also further improve the model representation.

Figure

Figure 1. The workflow of the proposed CG-3DSRGAN.

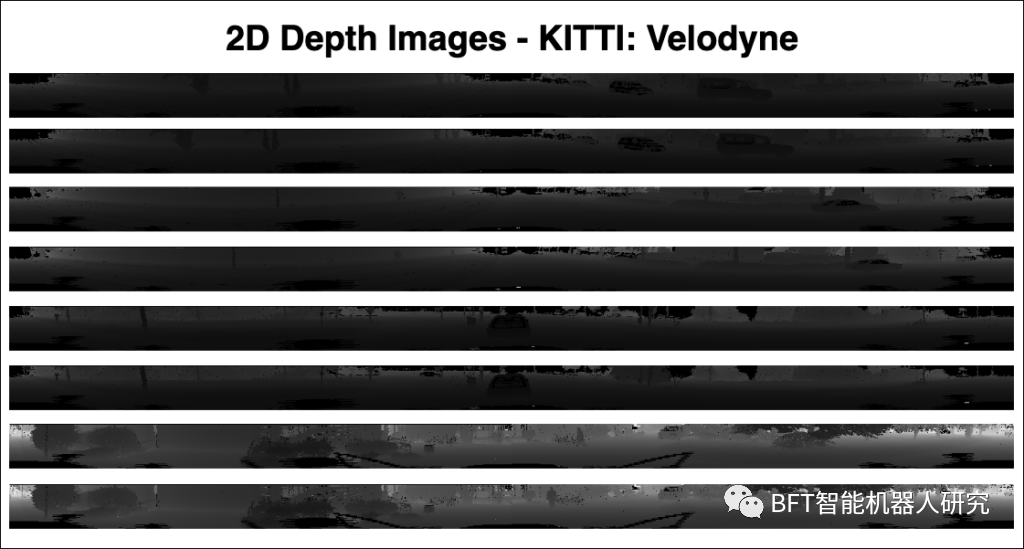

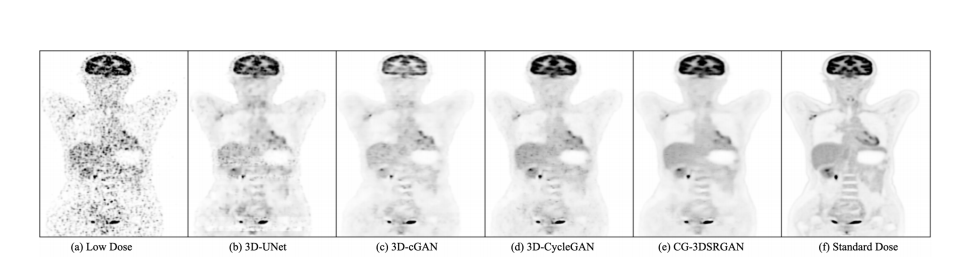

Figure 2. Visualization results of synthetic sPET from four methods on the DRF 100 dataset.

Table

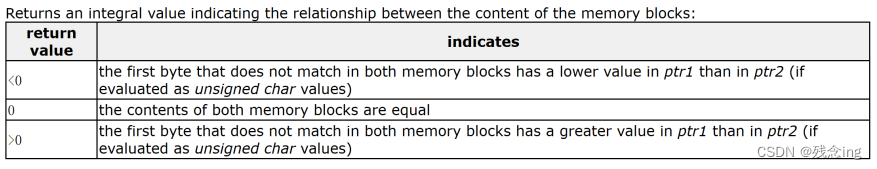

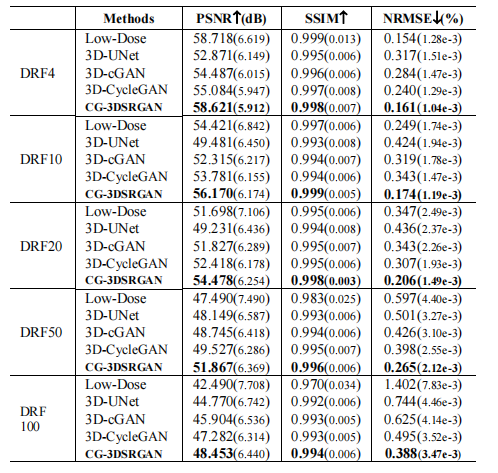

TABLE I. Quantitative comparison results (mean with standard deviation) of the images synthesized by different methods for different DRFs

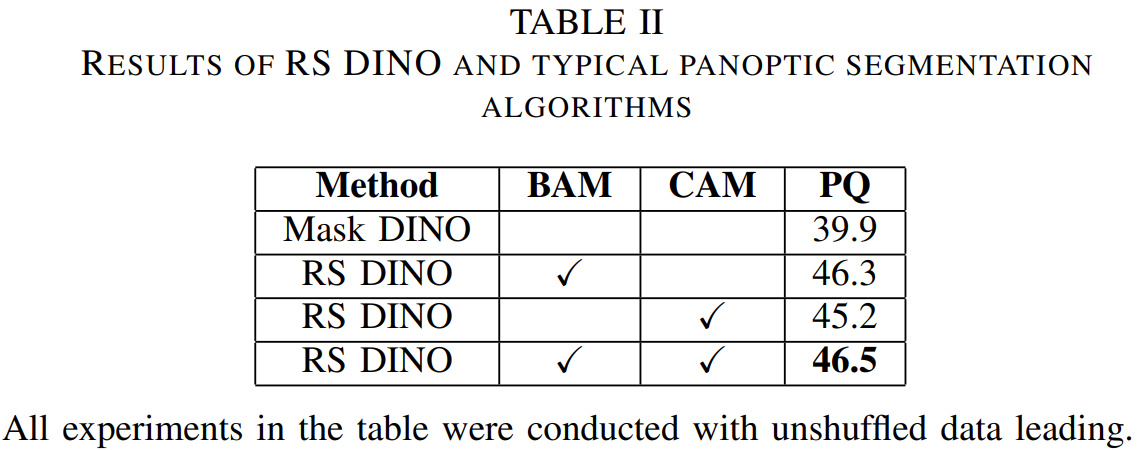

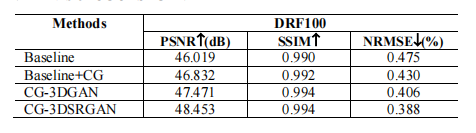

TABLE II. Quantitative comparison results of different variants of CG-3DSRGAN

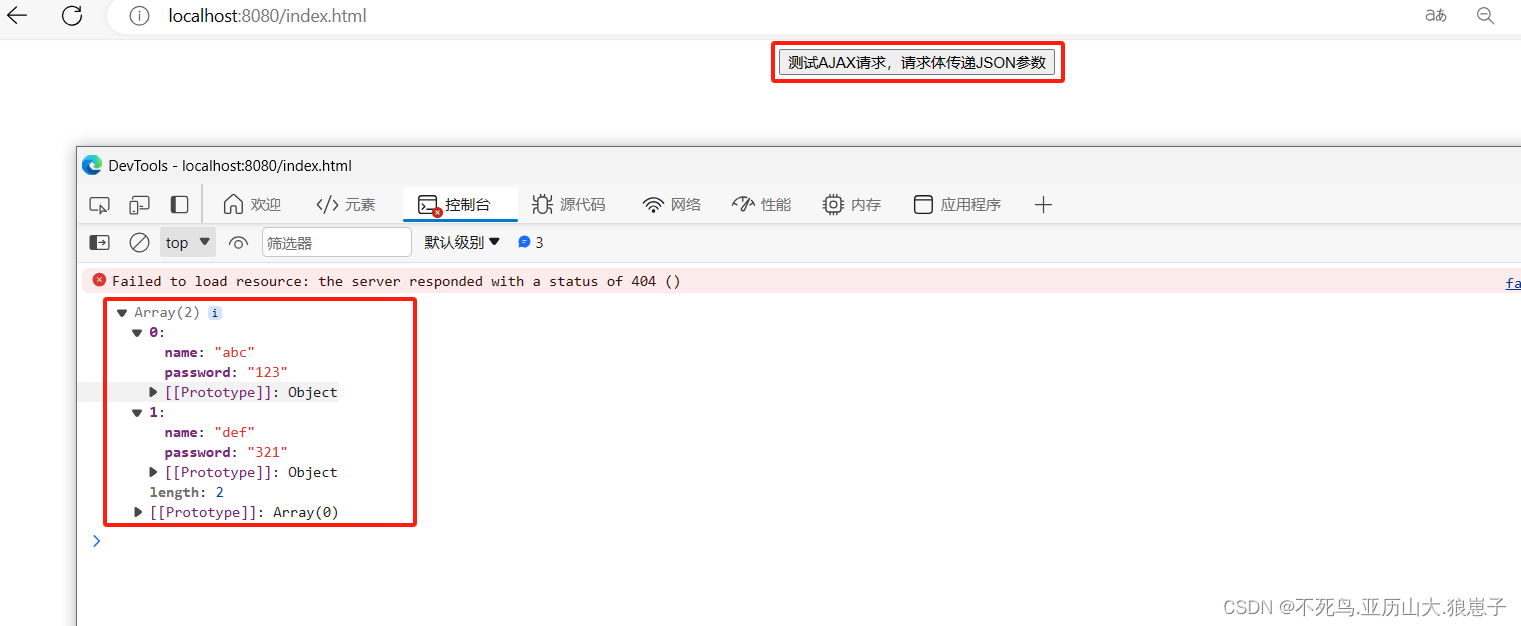
