爬取页面展示

- 热门榜单——酷狗top500:

https://www.kugou.com/yy/rank/home/1-8888.html?from=rank

- 特色榜单——影视金曲榜:

https://www.kugou.com/yy/rank/home/1-33163.html?from=rank

项目分析

对于酷狗top500:

- 打开network发现酷狗并没有将榜单封装在json里面,所以还是使用BeautifulSoup爬取

- 观察页面发现,并没有下一页的选项,只有下载客户端

- 再观察url发现 :https://www.kugou.com/yy/rank/home/1-8888.html?from=rank,出现了1-8888,故猜测下一个页面是2-8888吗,推测正确,按这个规律,应该有23个页面

对于酷狗影视金曲榜(100首):

同理:

综上:对于酷狗的整个榜单模块只需要替换页数跟榜单id值即可随便爬取

项目结构

词云轮廓图

- wc.jpg

- kugoutop500.py

import time

import requests

from bs4 import BeautifulSoup

import pandas as pd

from matplotlib import pyplot as plt

from wordcloud import WordCloud

from PIL import Image

import numpy as np

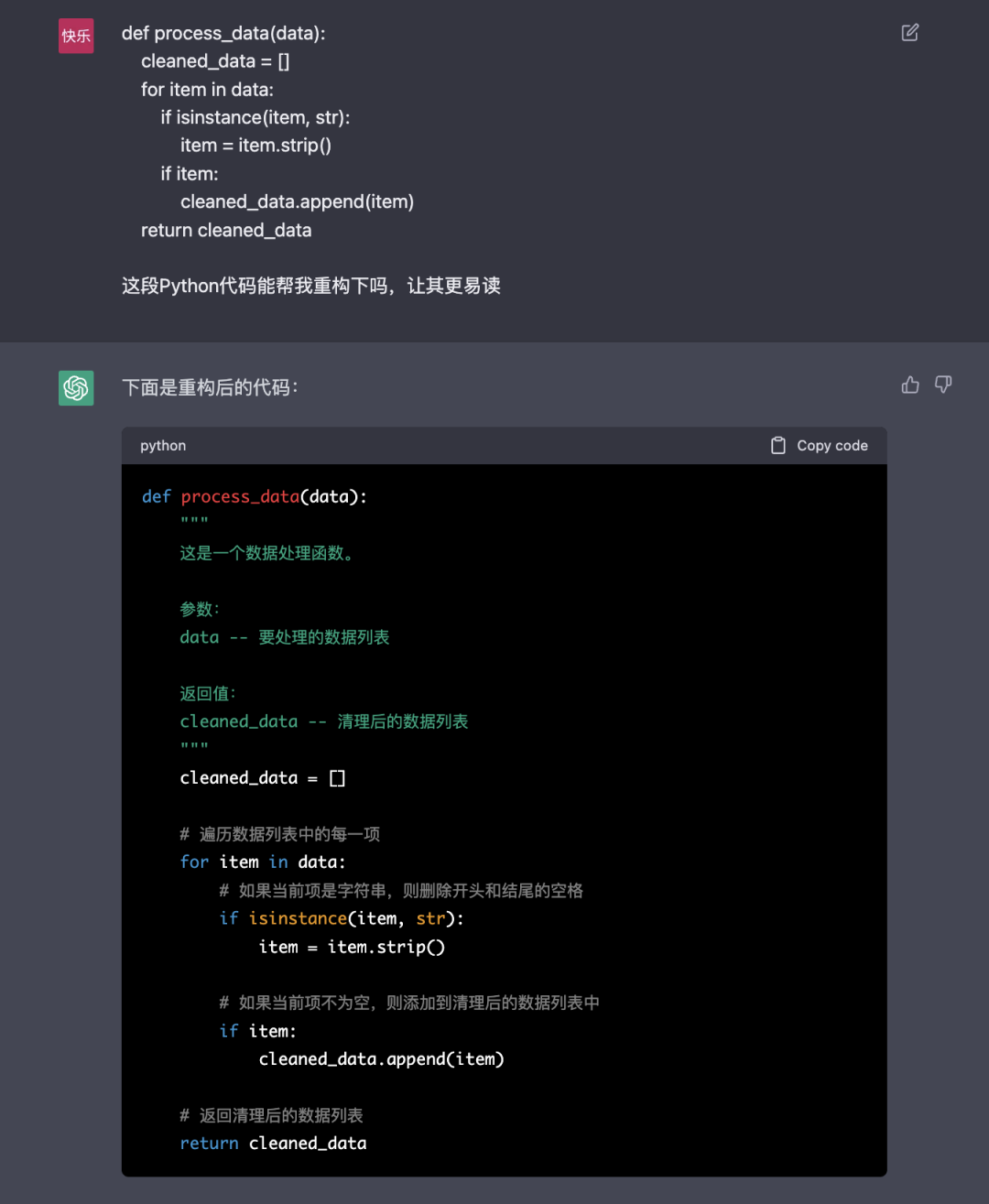

def main():dic = get_data()items = process_data(dic)print(len(items), items)word_cloud(items)

def get_data():dic = {}for i in range(1, 24):urls = 'https://www.kugou.com/yy/rank/home/%d-8888.html?from=rank' % ihead = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) ""Chrome/92.0.4577.63 Safari/537.36}"}html = requests.get(urls, headers=head)soup = BeautifulSoup(html.text, 'lxml')titles = soup.select('.pc_temp_songname')href = soup.select('.pc_temp_songname')times = soup.select('.pc_temp_time')data_all = []for titles, times, href in zip(titles, times, href):data = {'歌名': titles.get_text().replace('\n', '').replace('\t', '').replace('\r', '').split('-')[0],'歌手': titles.get_text().replace('\n', '').replace('\t', '').replace('\r', '').split('-')[1],'时长': times.get_text().strip().replace('\n', '').replace('\t', '').replace('\r', ''),'链接': href.get('href')}print(data)cnt_songer(data['歌手'], dic)data_all.append(data)time.sleep(2)return dic

def cnt_songer(songer, dic):if songer not in dic:dic[songer] = 1else:dic[songer] = dic[songer] + 1

def process_data(dic):items = dict(sorted(dic.items(), key=lambda x: x[1], reverse=True))items = {key: value for key, value in items.items() if value > 1}print(items)return items

def word_cloud(items):img = Image.open(r'wc.jpg')imgarr = np.array(img)wc = WordCloud(background_color='black',mask=imgarr,font_path='C:/Windows/Fonts/msyh.ttc',scale=20,prefer_horizontal=0.5,# 表示在水平如果不合适,就旋转为垂直方向random_state=55)wc.generate_from_frequencies(items)plt.figure(5)plt.imshow(wc)plt.axis('off')plt.show()wc.to_file("酷狗TOP500词云1.png")

if __name__ == '__main__':main()

- 运行截图:

56 {' 周杰伦': 37, ' 林俊杰': 18, ' 王靖雯': 6, ' 张杰': 6, ' 陈奕迅': 4, ' 任然': 4, ' 海来阿木': 4, ' 蓝心羽': 4, ' 王杰': 4, ' 莫叫姐姐': 3, ' 毛不易': 3, ' 王菲': 3, ' 海伦': 3, ' 阿YueYue': 3, ' 郁可唯': 3, ' 半吨兄弟': 3, ' 许巍': 3, ' 张信哲': 3, ' 李荣浩': 3, ' F.I.R.飞儿乐团': 3, ' 不是花火呀': 3, ' 张碧晨': 2, ' 队长': 2, ' 程jiajia': 2, ' BEYOND': 2, ' 蔡健雅': 2, ' 洛先生': 2, ' 陈慧娴': 2, ' 程响': 2, ' 王小帅': 2, ' 徐佳莹': 2, ' Charlie Puth': 2, ' 小蓝背心': 2, ' IN': 2, ' Taylor Swift': 2, ' 七叔(叶泽浩)': 2, ' 周深': 2, ' 大欢': 2, ' 戴羽彤': 2, ' 小阿枫': 2, ' 苏星婕': 2, ' 刘德华': 2, ' 周传雄': 2, ' 苏谭谭': 2, ' 孙燕姿': 2, ' 王忻辰、苏星婕': 2, ' 李克勤': 2, ' 林子祥': 2, ' 庄心妍': 2, ' 闻人听書_': 2, ' 朱添泽': 2, ' 陈子晴': 2, ' 胡66': 2, ' aespa (에스파)': 2, ' OneRepublic': 2, ' 凤凰传奇': 2}

酷狗top500词云:(这才是真正的“华语乐坛”)

- 酷狗影视金曲.py

import time

import requests

from bs4 import BeautifulSoup

import pandas as pd

from matplotlib import pyplot as plt

from wordcloud import WordCloud

from PIL import Image

import numpy as np

def main():dic = get_data()items = process_data(dic)print(len(items), items)word_cloud(items)

def get_data():dic = {}for i in range(1, 5):urls = 'https://www.kugou.com/yy/rank/home/%d-33163.html?from=rank' % ihead = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) ""Chrome/92.0.4577.63 Safari/537.36}"}html = requests.get(urls, headers=head)soup = BeautifulSoup(html.text, 'lxml')titles = soup.select('.pc_temp_songname')href = soup.select('.pc_temp_songname')times = soup.select('.pc_temp_time')data_all = []for titles, times, href in zip(titles, times, href):data = {'歌名': titles.get_text().replace('\n', '').replace('\t', '').replace('\r', '').split('-')[0],'歌手': titles.get_text().replace('\n', '').replace('\t', '').replace('\r', '').split('-')[1],'时长': times.get_text().strip().replace('\n', '').replace('\t', '').replace('\r', ''),'链接': href.get('href')}print(data)cnt_songer(data['歌手'], dic)data_all.append(data)time.sleep(2)return dic

def cnt_songer(songer, dic):if songer not in dic:dic[songer] = 1else:dic[songer] = dic[songer] + 1

def process_data(dic):items = dict(sorted(dic.items(), key=lambda x: x[1], reverse=True))items = {key: value for key, value in items.items() if value > 1}print(items)return itemsdef word_cloud(items):img = Image.open(r'wc.jpg')imgarr = np.array(img)wc = WordCloud(background_color='black',mask=imgarr,font_path='C:/Windows/Fonts/msyh.ttc',scale=20,prefer_horizontal=0.5,# 表示在水平如果不合适,就旋转为垂直方向random_state=55)wc.generate_from_frequencies(items)plt.figure(5)plt.imshow(wc)plt.axis('off')plt.show()wc.to_file("酷狗影视金曲词云.png")

if __name__ == '__main__':main()

- 运行截图:

15 {' BEYOND': 5, ' 陈奕迅': 4, ' 李克勤': 3, ' 林俊杰': 3, ' 王菲': 2, ' 莫文蔚': 2, ' 周杰伦': 2, ' 郁可唯': 2, ' 刘若英': 2, ' 王杰': 2, ' 毛不易': 2, ' 朴树': 2, ' 薛之谦': 2, ' 杨丞琳': 2, ' 刘德华': 2}

- 影视金曲榜词云:(梅开二度)