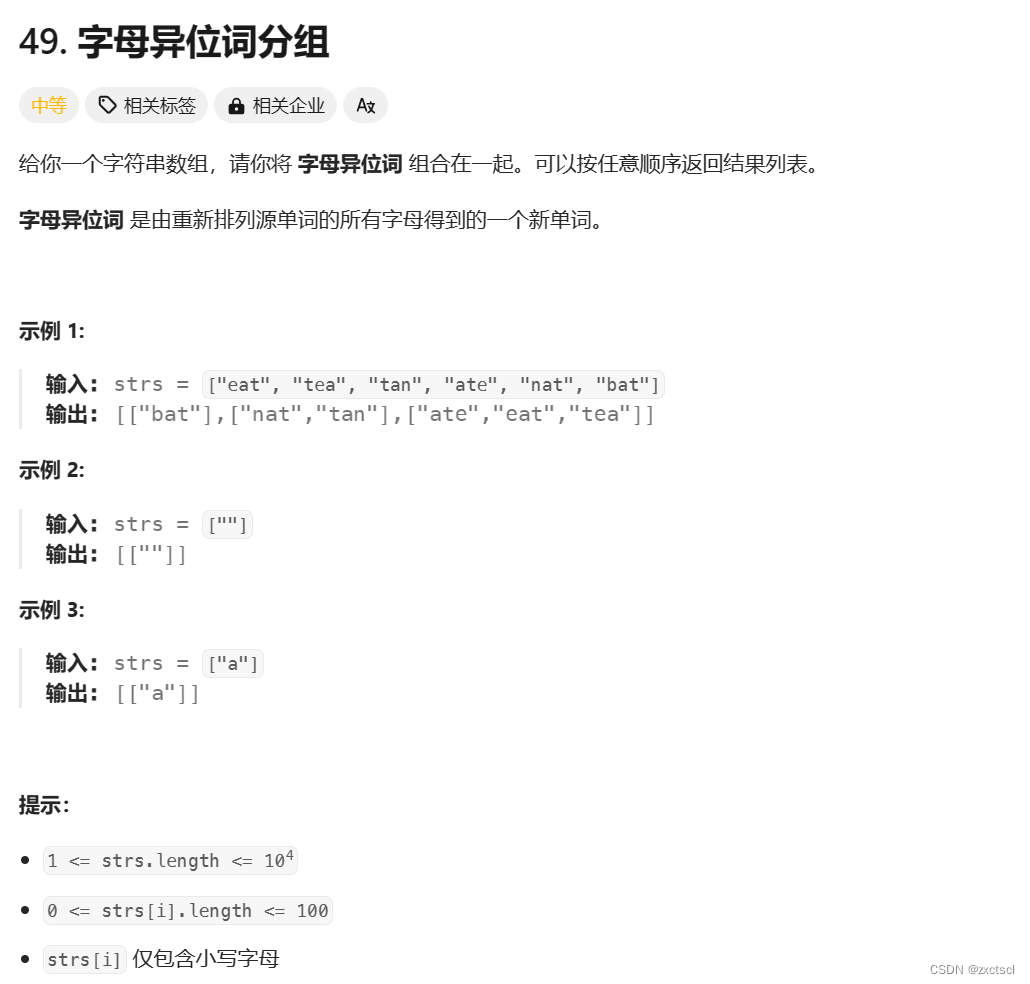

Let's start with the final result:

1. Create the ES index

    PUT administrative_division
    {
      "mappings": {
        "properties": {
          "province": {"type": "keyword"},
          "province_code": {"type": "keyword"},
          "city": {"type": "keyword"},
          "city_code": {"type": "keyword"},
          "district": {"type": "keyword"},
          "district_code": {"type": "keyword"},
          "town": {"type": "keyword"},
          "town_code": {"type": "keyword"},
          "committee": {"type": "keyword"},
          "committee_code": {"type": "keyword"},
          "type_code": {"type": "keyword"}
        }
      },
      "settings": {
        "number_of_replicas": 0,
        "number_of_shards": 1
      }
    }
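If you would rather create the index from Python than from a console, the same request can be sketched as below. This is a minimal sketch, not the author's code: the helper names (`build_mappings`, `build_settings`, `create_index`) are made up for illustration, and it assumes a version of the `elasticsearch` Python client whose `indices.create` accepts `mappings` and `settings` as keyword arguments (recent 7.x and 8.x releases do).

```python
INDEX_NAME = "administrative_division"

# All eleven fields in the mapping above are plain keyword fields.
KEYWORD_FIELDS = [
    "province", "province_code",
    "city", "city_code",
    "district", "district_code",
    "town", "town_code",
    "committee", "committee_code",
    "type_code",
]


def build_mappings():
    """Return the same mappings as the PUT request above."""
    return {"properties": {name: {"type": "keyword"} for name in KEYWORD_FIELDS}}


def build_settings():
    """Single shard, no replicas: enough for a local, single-node setup."""
    return {"number_of_replicas": 0, "number_of_shards": 1}


def create_index(es_client):
    """Create the index unless it already exists.

    es_client is assumed to be an elasticsearch.Elasticsearch instance.
    Returns True if the index was created, False if it was already there.
    """
    if es_client.indices.exists(index=INDEX_NAME):
        return False
    es_client.indices.create(
        index=INDEX_NAME,
        mappings=build_mappings(),
        settings=build_settings(),
    )
    return True
```

Calling `create_index(Elasticsearch(hosts=["http://127.0.0.1:9200"]))` before the crawl makes the script safe to rerun, since an existing index is left untouched.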

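2. Write the code

The code below is adapted from a version posted online that I found fairly concise. I use it as-is and only change the storage step so that the results go into Elasticsearch.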

    """
    Fetch administrative division codes from the National Bureau of Statistics.
    """
    import random
    import time

    import requests
    from elasticsearch import Elasticsearch, helpers
    from lxml import etree


    def init_es_client(es_host):
        es = Elasticsearch(hosts=[es_host], verify_certs=False)
        return es


    es_client = init_es_client('http://127.0.0.1:9200')
    actions = list()
    count = 0


    def get_html(url):
        # A timeout keeps one stalled request from hanging the whole crawl.
        response = requests.get(url, timeout=10)
        response.encoding = "utf8"
        res = response.text
        html = etree.HTML(res)
        return html


    base_url = "http://www.stats.gov.cn/sj/tjbz/tjyqhdmhcxhfdm/2023/"
    url = base_url + "index.html"
    province_html = get_html(url)
    province_code = province_html.xpath('//tr[@class="provincetr"]/td/a/@href')
    province_name = province_html.xpath('//tr[@class="provincetr"]/td/a/text()')
    # Map the 2-digit province code (href without ".html") to the province name.
    province = dict(zip([p.split(".")[0] for p in province_code], province_name))

    for p_key in province.keys():
        url_city = base_url + p_key + ".html"
        time.sleep(random.randint(0, 3))
        city_html = get_html(url_city)
        if city_html is None:
            print("city_html is None", url_city)
            continue
        city_code = city_html.xpath('//tr[@class="citytr"]/td[1]/a/text()')
        city_name = city_html.xpath('//tr[@class="citytr"]/td[2]/a/text()')
        city_hrefs = city_html.xpath('//tr[@class="citytr"]/td[1]/a/@href')
        for c_num in range(len(city_hrefs)):
            county_url = base_url + city_hrefs[c_num]
            time.sleep(random.randint(0, 3))
            county_html = get_html(county_url)
            if county_html is None:
                print("county_html is None", county_url)
                continue
            county_code = county_html.xpath('//tr[@class="countytr"]/td[1]/a/text()')
            county_name = county_html.xpath('//tr[@class="countytr"]/td[2]/a/text()')
            county_hrefs = county_html.xpath('//tr[@class="countytr"]/td[1]/a/@href')
            for t_num in range(len(county_hrefs)):
                # base_url already ends with "/", so no extra separator is needed.
                town_url = base_url + city_hrefs[c_num].split('/')[0] + "/" + county_hrefs[t_num]
                time.sleep(random.randint(0, 3))
                town_html = get_html(town_url)
                if town_html is None:
                    print("town_html is None", town_url)
                    continue
                town_code = town_html.xpath('//tr[@class="towntr"]/td[1]/a/text()')
                town_name = town_html.xpath('//tr[@class="towntr"]/td[2]/a/text()')
                town_hrefs = town_html.xpath('//tr[@class="towntr"]/td[1]/a/@href')
                for v_num in range(len(town_hrefs)):
                    # split(".")[0] drops the extension; rstrip(".html") would strip
                    # a set of characters rather than the suffix.
                    code_ = town_hrefs[v_num].split("/")[1].split(".")[0]
                    village_url = base_url + code_[0:2] + "/" + code_[2:4] + "/" + town_hrefs[v_num]
                    time.sleep(random.randint(0, 3))
                    village_html = get_html(village_url)
                    if village_html is None:
                        print("village_html is None", village_url)
                        continue
                    # Residents'/villagers' committee code
                    village_code = village_html.xpath('//tr[@class="villagetr"]/td[1]/text()')
                    # Urban-rural classification code of the committee
                    village_type_code = village_html.xpath('//tr[@class="villagetr"]/td[2]/text()')
                    # Residents'/villagers' committee name
                    village_name = village_html.xpath('//tr[@class="villagetr"]/td[3]/text()')
                    for num in range(len(village_code)):
                        v_name = village_name[num]
                        v_code = village_code[num]
                        type_code = village_type_code[num]
                        info = dict()
                        # Name goes in 'province'; the code, padded to 12 digits,
                        # goes in 'province_code' (the two were swapped before).
                        info['province'] = province[p_key]
                        info['province_code'] = str(p_key).ljust(12, '0')
                        info['city_code'] = city_code[c_num]
                        info['city'] = city_name[c_num]
                        info['district_code'] = county_code[t_num]
                        info['district'] = county_name[t_num]
                        info['town_code'] = town_code[v_num]
                        info['town'] = town_name[v_num]
                        info['type_code'] = type_code
                        info['committee_code'] = v_code
                        info['committee'] = v_name
                        action = {
                            "_op_type": "index",
                            "_index": "administrative_division",
                            "_id": v_code,
                            "_source": info,
                        }
                        actions.append(action)
                        # Flush to Elasticsearch in small batches.
                        if len(actions) == 10:
                            helpers.bulk(es_client, actions)
                            count += len(actions)
                            print(count)
                            actions.clear()

    # Write whatever is left after the last full batch.
    if len(actions) > 0:
        helpers.bulk(es_client, actions)
        count += len(actions)
        print(count)
        actions.clear()
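The crawler builds each child URL by slicing codes and concatenating path segments by hand, which is easy to get wrong (a stray `/`, or `str.rstrip(".html")`, which removes a set of characters rather than a suffix). As an alternative, here is a minimal sketch that resolves each `href` against the page it was found on with the standard library's `urljoin`. The helper names and the sample hrefs are assumptions for illustration; the samples follow the relative-link layout that the crawler's own string handling implies.

```python
from urllib.parse import urljoin

BASE_URL = "http://www.stats.gov.cn/sj/tjbz/tjyqhdmhcxhfdm/2023/"


def child_url(page_url, href):
    """Resolve a relative href against the URL of the page that contains it."""
    return urljoin(page_url, href)


def code_from_href(href):
    """Extract the numeric code from an href such as '01/110101001.html'."""
    filename = href.rsplit("/", 1)[-1]
    if filename.endswith(".html"):
        filename = filename[: -len(".html")]
    return filename


# Walk one hypothetical chain of links from the index page down to a town page.
index_page = BASE_URL + "index.html"
province_page = child_url(index_page, "11.html")
city_page = child_url(province_page, "11/1101.html")
county_page = child_url(city_page, "01/110101.html")
town_page = child_url(county_page, "01/110101001.html")
```

With this approach each level only needs the URL of its parent page and the raw `href`, so the code no longer has to know how many directory levels separate it from the base URL.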

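That's it. The data is updated once a year, so just let the crawler run at its own pace. Note that we have not taken historical changes to the divisions into account. Follow the WeChat official account 算法小生 for the latest updates.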
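One simple way to keep earlier years around, rather than overwriting them on each annual run, is to write every year's crawl into its own index. The sketch below is only an illustration of that idea: the helper names are hypothetical, and it assumes that other years are published under the same path with only the year segment changed, which this post confirms for 2023 only.

```python
# Assumption: only the year segment of the URL changes between releases.
URL_TEMPLATE = "http://www.stats.gov.cn/sj/tjbz/tjyqhdmhcxhfdm/{year}/"
INDEX_PREFIX = "administrative_division"


def base_url_for(year):
    """Return the base URL for a given release year."""
    return URL_TEMPLATE.format(year=year)


def index_name_for(year):
    """Return a per-year index name, e.g. 'administrative_division_2023'."""
    return f"{INDEX_PREFIX}_{year}"
```

Each yearly index would use the same mappings, and a query against `administrative_division_*` would then span all the years that have been crawled.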