Transformer


1. What

The Transformer is a new, simple network architecture based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.

2. Why

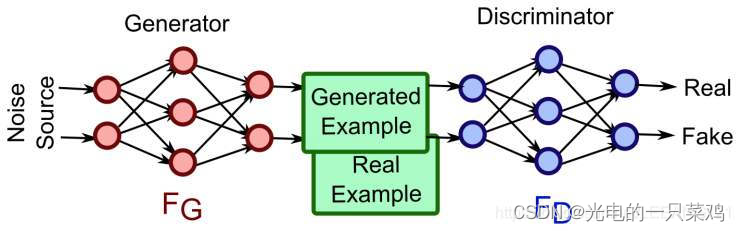

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks that include an encoder and a decoder.

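Recurrent neural networks have been the state of the art in most sequence modeling tasks, but their computation is inherently sequential: the hidden state $h_t$ depends on $h_{t-1}$, which precludes parallelization within a training example, and memory constraints limit batching across examples.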

The goal of reducing sequential computation also motivated the use of convolutional neural networks. However, convolutional models struggle to relate distant positions, because a convolution only sees a small window of the input at a time. To connect far-apart positions, several convolution layers have to be stacked. In the Transformer, this is reduced to a constant number of operations, because self-attention sees the whole sequence at once.
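To make the path-length argument concrete, here is a small Python sketch that counts how many layers are needed before two tokens `distance` positions apart can interact. It assumes stride-1 convolutions without dilation (dilated convolutions would need on the order of $\log_k n$ layers instead); the function names are hypothetical.

```python
import math


def conv_layers_needed(distance: int, kernel_size: int) -> int:
    """Stacked stride-1, undilated convolutions with kernel size k.

    Each layer widens the receptive field by (k - 1) positions, so L layers
    see 1 + L * (k - 1) positions. Two tokens `distance` apart interact once
    L * (k - 1) >= distance.
    """
    if distance <= 0:
        return 0
    return math.ceil(distance / (kernel_size - 1))


def attention_layers_needed(distance: int) -> int:
    """A single self-attention layer connects every pair of positions."""
    return 1 if distance > 0 else 0


for d in (2, 10, 100):
    print(d, conv_layers_needed(d, kernel_size=3), attention_layers_needed(d))
```

With a kernel of size 3, the number of convolution layers grows linearly with the distance, while self-attention always needs one layer.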

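Self-attention, however, computes a weighted average over all positions, which reduces the effective resolution. Similar to how a CNN uses many convolution kernels (output channels) to capture different patterns, we introduce Multi-Head Attention to make up for this.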

3. How

3.1 Encoder

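The encoder is the block on the left of the figure, a stack of 6 identical layers. Each layer has two sub-layers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network. With a residual connection around each sub-layer, the output of each sub-layer can be represented as: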

$$\text{LayerNorm}(x + \text{Sublayer}(x))$$

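where $\text{Sublayer}(x)$ is the function implemented by the sub-layer itself. In other words, each sub-layer is followed by layer normalization, which is explained in detail below.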
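A minimal pure-Python sketch of this residual-plus-normalization wrapper for a single token vector. It assumes the layer norm has no learned gain and bias (the real layer has both), and the doubling "sublayer" is a hypothetical stand-in for attention or the feed-forward network.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    # Normalize one token's vector over its own features (no learned gain/bias).
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def sublayer_connection(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    # Residual connection followed by normalization: LayerNorm(x + Sublayer(x)).
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])


# Hypothetical stand-in sublayer that just doubles its input.
out = sublayer_connection([1.0, 2.0, 3.0, 4.0], lambda v: [2.0 * a for a in v])
print([round(v, 3) for v in out])  # → [-1.342, -0.447, 0.447, 1.342]
```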

First, let us compare batch normalization and layer normalization, shown below as the blue and yellow squares.

In the 2D case, the data can be arranged as a feature × batch matrix. Batch normalization normalizes each feature across all examples in the batch. Layer normalization is the transposed operation: it normalizes each example across all of its own features.
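The difference is only the axis over which the mean and variance are taken. This pure-Python sketch stores the batch with rows as examples and columns as features (the transpose of the feature × batch layout above, which does not change which values are grouped together); learned scale and shift parameters are omitted, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = examples in the batch, columns = features


def _normalize(values: List[float], eps: float = 1e-5) -> List[float]:
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def batch_norm(x: Matrix) -> Matrix:
    # Normalize each feature (column) across all examples in the batch.
    cols = [_normalize(list(col)) for col in zip(*x)]
    return [list(row) for row in zip(*cols)]


def layer_norm(x: Matrix) -> Matrix:
    # Normalize each example (row) across its own features.
    return [_normalize(row) for row in x]


x = [[1.0, 10.0, 100.0],
     [3.0, 30.0, 300.0]]
bn = batch_norm(x)  # every column becomes roughly [-1, 1]
ln = layer_norm(x)  # every row has mean roughly 0
print(bn)
print(ln)
```

Because the second example is just three times the first, layer normalization maps both rows to the same vector, while batch normalization compares them against each other.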

In the 3D case, the data has the shape batch × sequence length × feature: every sentence is a sequence and each word is a vector. So we can visualize it as below:

The blue and yellow squares represent batch normalization and layer normalization on 3D data. When sentences have different lengths (shorter ones are padded), the two normalizations behave differently:

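Batch normalization computes its statistics over the whole batch, and at inference time it relies on a global mean and variance accumulated during training. If a new sequence is much longer (or shorter) than those seen in training, these stored statistics may not fit it, so the normalization becomes inaccurate. Layer normalization, in contrast, computes its statistics within each sample, independently of the rest of the batch, so it is not affected by global data. This is why the Transformer uses layer normalization.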

3.2 Decoder

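In addition to the two sub-layers of each encoder layer, the decoder inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. The decoder's self-attention sub-layer is also masked to prevent positions from attending to subsequent positions, so the prediction for position $i$ can depend only on the known outputs at positions less than $i$.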
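A small sketch of such a mask, assuming the convention that `True` means "this query position may attend to this key position" (some libraries use the opposite convention). The function name is hypothetical.

```python
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    # mask[i][j] is True when query position i may attend to key position j,
    # i.e. only to itself and earlier positions (j <= i).
    return [[j <= i for j in range(n)] for i in range(n)]


for row in causal_mask(4):
    print("".join("1" if allowed else "0" for allowed in row))
# 1000
# 1100
# 1110
# 1111
```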

3.3 Attention

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors.

The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key. In other words, keys and values come in pairs, and the weight of each value depends on how compatible (similar) the query is with that value's key.

Mathematically,

$$\text{Attention}(Q,K,V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V.$$

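where the queries are packed together into a matrix $Q$ (and likewise the keys into $K$ and the values into $V$), and $\text{softmax}$ turns each row of scores into non-negative relative weights that sum to 1. The scaling factor $\frac{1}{\sqrt{d_k}}$ keeps the dot products from becoming too large: for large $d_k$ the dot products grow large in magnitude, pushing the softmax into regions where it has extremely small gradients.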
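The formula can be sketched directly in pure Python (lists of lists instead of a tensor library, to keep the example self-contained). The helper names and the toy inputs are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # one row of compatibility scores per query
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # weighted sum of the value rows


q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
print(attention(q, k, v))
```

The query matches the first key better, so the first value row receives the larger weight, but the second one still contributes because softmax never assigns exactly zero.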

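In terms of matrix shapes: if $Q$ is $n \times d_k$, $K$ is $m \times d_k$ and $V$ is $m \times d_v$, then $QK^T$ is an $n \times m$ matrix of scores and the output is $n \times d_v$, one row per query.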

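Masks are also used in this block (in the decoder's self-attention): for the query $q_t$, the scores against the keys after $k_t$ are set to a large negative number, so their weights become nearly zero after softmax and the values after $v_t$ contribute nothing to the output.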
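A minimal sketch of this masking step for one query, assuming $-10^9$ as the "large negative number" (implementations also use $-\infty$); the function name is hypothetical.

```python
import math
from typing import List

NEG_LARGE = -1e9  # the "large negative number" placed at masked positions


def masked_softmax(scores: List[float], allowed: List[bool]) -> List[float]:
    # Replace the scores of disallowed (future) positions, then apply softmax.
    masked = [s if ok else NEG_LARGE for s, ok in zip(scores, allowed)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


# Query at position t = 1 (0-based) over 4 keys: keys 2 and 3 are in the future.
weights = masked_softmax([0.5, 1.0, 3.0, 2.0], [True, True, False, False])
print([round(w, 3) for w in weights])  # → [0.378, 0.622, 0.0, 0.0]
```

Even though the future keys have the highest raw scores, they receive zero weight after masking.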

For multi-head attention, instead of performing a single attention function with $d_{model}$-dimensional queries, keys and values, we project $Q, K, V$ $h$ times with different, learned linear projections, compressing their dimension from $d_{model}$ to $d_k$, $d_k$ and $d_v$, respectively. It is shown below:

And mathematically,

$$\begin{aligned}\mathrm{MultiHead}(Q,K,V) &= \mathrm{Concat}(\mathrm{head}_1,\dots,\mathrm{head}_h)W^O\\ \text{where}~\mathrm{head}_i &= \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)\end{aligned}$$

where the projections are parameter matrices $W_i^Q \in \mathbb{R}^{d_{model}\times d_k}$, $W_i^K \in \mathbb{R}^{d_{model}\times d_k}$, $W_i^V \in \mathbb{R}^{d_{model}\times d_v}$ and $W^O \in \mathbb{R}^{hd_v\times d_{model}}$.
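The two equations can be sketched in pure Python as below. This is an illustration under stated assumptions, not a reference implementation: the projection matrices are random placeholders standing in for learned weights, the sizes are toy values ($d_{model} = 8$, $h = 2$), biases are omitted, and all helper names are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in matmul(q, k_t)]
    return matmul(weights, v)


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def init_params(h: int, d_model: int, rng: random.Random):
    # Random placeholders for the learned W_i^Q, W_i^K, W_i^V and W^O.
    d_k = d_v = d_model // h
    heads = [
        (random_matrix(d_model, d_k, rng),   # W_i^Q: d_model x d_k
         random_matrix(d_model, d_k, rng),   # W_i^K: d_model x d_k
         random_matrix(d_model, d_v, rng))   # W_i^V: d_model x d_v
        for _ in range(h)
    ]
    w_o = random_matrix(h * d_v, d_model, rng)  # W^O: h*d_v x d_model
    return heads, w_o


def multi_head(q: Matrix, k: Matrix, v: Matrix, heads, w_o: Matrix) -> Matrix:
    outs = [attention(matmul(q, wq), matmul(k, wk), matmul(v, wv)) for wq, wk, wv in heads]
    # Concat(head_1, ..., head_h): join the heads feature-wise -> n x (h * d_v)
    concat = [[x for out in outs for x in out[i]] for i in range(len(q))]
    return matmul(concat, w_o)  # n x d_model


rng = random.Random(0)
heads, w_o = init_params(h=2, d_model=8, rng=rng)
x = random_matrix(3, 8, rng)  # 3 tokens; for self-attention Q = K = V = x
out = multi_head(x, x, x, heads, w_o)
print(len(out), len(out[0]))  # 3 8
```

The output keeps the shape $n \times d_{model}$, which is what allows the residual connection $x + \text{Sublayer}(x)$.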

In practice, $h = 8$ and $d_k = d_v = d_{model}/h = 64$.

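Because each head works in a reduced dimension, the total computational cost is similar to that of single-head attention with full dimensionality. In addition, scaled dot-product attention itself has no learnable parameters, while the linear projections give the model parameters to learn, so each head can learn a different way of measuring similarity.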
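A quick arithmetic check of these sizes, using the values $d_{model} = 512$ and $h = 8$ from above and ignoring bias terms (an assumption of this sketch):

```python
d_model, h = 512, 8
d_k = d_v = d_model // h  # 64

# Weights of the per-head projections W_i^Q, W_i^K (d_model x d_k) and W_i^V (d_model x d_v),
# plus the output projection W^O (h * d_v x d_model); biases are ignored here.
per_head = d_model * d_k + d_model * d_k + d_model * d_v
total = h * per_head + h * d_v * d_model

print(d_k, total)  # 64 1048576
print(total == 4 * d_model * d_model)  # True
```

The projection weights add up to exactly four $d_{model} \times d_{model}$ matrices.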

3.4 Application

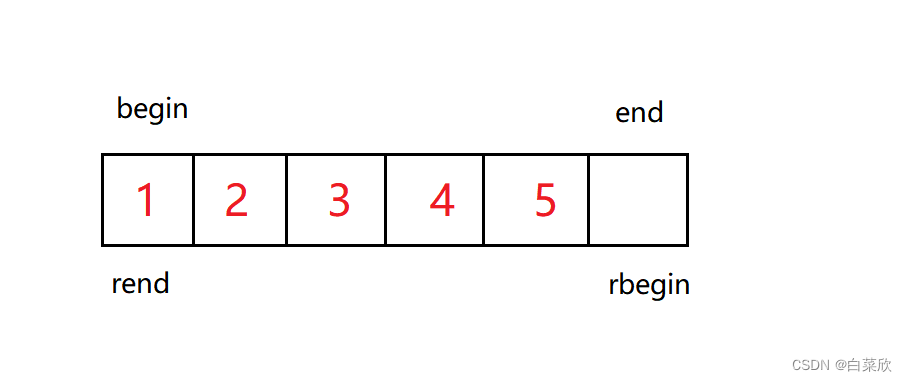

The model uses multi-head attention in three different ways. The first two are the self-attention layers in the encoder and the masked self-attention layers in the decoder, as shown below:

In these, the keys, values and queries all come from the same place (the output of the previous layer) and have the same size, so the output size is $n \times d$.

As for the third one (encoder-decoder attention), the queries come from the previous decoder layer, and the memory keys and values come from the output of the encoder.

$K$ and $V$ have size $n \times d$ and $Q$ has size $m \times d$, so the final output has size $m \times d$. From a semantic point of view, for each word in the output sequence, this layer picks out the input words whose encoder representations are most similar to it and combines their information.
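The shape bookkeeping can be sketched as follows. This assumes the encoder output is used directly as keys and values; the real layer first applies the learned projections, which this sketch omits. The data and the function name are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def cross_attention(queries: Matrix, memory: Matrix) -> Matrix:
    # queries: m x d (from the previous decoder layer)
    # memory:  n x d (encoder output, used as both keys and values here)
    d = len(memory[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in memory]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Each output row is a weighted sum of the n encoder rows.
        out.append([sum(w * row[j] for w, row in zip(weights, memory)) for j in range(d)])
    return out


encoder_out = [[1.0, 0.0, 0.0, 0.0],
               [0.0, 1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0, 0.0]]  # n = 3 input positions
decoder_q = [[5.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 5.0, 0.0]]    # m = 2 output positions
out = cross_attention(decoder_q, encoder_out)
print(len(out), len(out[0]))  # 2 4
```

Each of the $m$ decoder positions gets one $d$-dimensional row, dominated by the encoder position it is most similar to.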

3.5 Position-wise Feed-Forward Networks (The second sublayer)

It is essentially an MLP with one hidden layer and a ReLU activation:

$$\mathrm{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2.$$

The input $x$ has dimension $d_{model} = 512$, $W_1 \in \mathbb{R}^{512\times 2048}$ and $W_2 \in \mathbb{R}^{2048\times 512}$.

Position-wise means the MLP is applied to every word (position) in the sequence separately and identically: all positions share the same weights, although different layers use different weights.

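This is also how it differs from an RNN. The attention layer has already gathered information from the whole sequence into each position, so the MLP can process every position independently and in parallel, whereas an RNN needs the output of the previous time step as part of its next input.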
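A pure-Python sketch of the position-wise FFN, using toy sizes ($d_{model} = 2$, inner dimension 3) and hand-picked hypothetical weights instead of the 512/2048 learned ones:

```python
from typing import List

Matrix = List[List[float]]


def linear(x: List[float], w: Matrix, b: List[float]) -> List[float]:
    # x (d_in) @ w (d_in x d_out) + b (d_out)
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def ffn(x: List[float], w1: Matrix, b1: List[float], w2: Matrix, b2: List[float]) -> List[float]:
    hidden = [max(0.0, v) for v in linear(x, w1, b1)]  # ReLU: max(0, x W1 + b1)
    return linear(hidden, w2, b2)


def position_wise_ffn(seq: Matrix, w1, b1, w2, b2) -> Matrix:
    # The same weights are applied independently to every position.
    return [ffn(x, w1, b1, w2, b2) for x in seq]


w1 = [[1.0, -1.0, 0.5],
      [0.0, 1.0, -0.5]]   # d_model x d_ff = 2 x 3
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]         # d_ff x d_model = 3 x 2
b2 = [0.0, 0.0]
seq = [[1.0, 2.0], [3.0, -1.0]]  # two positions
result = position_wise_ffn(seq, w1, b1, w2, b2)
print(result)  # → [[1.0, 1.0], [5.0, 2.0]]
```

Running the second position alone gives the same output as running it inside the sequence, which is exactly what "position-wise" means.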

3.6 Embeddings and Softmax

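Embeddings map word tokens to vectors of dimension $d_{model}$. A learned linear transformation followed by a softmax function converts the decoder output into predicted next-token probabilities. The two embedding layers and the pre-softmax linear transformation share the same weight matrix, and in the embedding layers those weights are multiplied by $\sqrt{d_{model}}$.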
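A minimal sketch of this weight sharing: one table is used both for the embedding lookup (scaled by $\sqrt{d_{model}}$) and, transposed, as the pre-softmax linear layer. The 3-token vocabulary and the decoder vector are hypothetical, and the output layer's bias is omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def embed(token_id: int, table: Matrix) -> List[float]:
    # Embedding lookup, with the weights multiplied by sqrt(d_model).
    d_model = len(table[token_id])
    return [v * math.sqrt(d_model) for v in table[token_id]]


def output_logits(h: List[float], table: Matrix) -> List[float]:
    # Pre-softmax linear layer reusing the same table: logits = h @ table^T.
    return [sum(a * b for a, b in zip(h, row)) for row in table]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


vocab_table = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # hypothetical vocabulary, d_model = 2
decoder_out = [0.9, 0.1]                           # hypothetical final decoder vector
probs = softmax(output_logits(decoder_out, vocab_table))
print(embed(1, vocab_table))
print(probs)
```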

3.7 Positional Encoding

Since the model contains no recurrence and no convolution, in order for the model to make use of the order of the sequence, we must inject some information about the relative or absolute position of the tokens in the sequence.

Each positional encoding (PE) has dimension $d_{model}$, the same as the embeddings, so it can be added to the input embedding. The formula is:

$$\begin{aligned}PE_{(pos,2i)} &= \sin\left(pos/10000^{2i/d_{model}}\right)\\ PE_{(pos,2i+1)} &= \cos\left(pos/10000^{2i/d_{model}}\right),\end{aligned}$$

where $pos$ is the position and $i$ is the dimension index: each pair of dimensions $2i$ and $2i+1$ uses a sine and a cosine of the same frequency.
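The formula translates directly into a short pure-Python function. This sketch assumes $d_{model}$ is even; the function name and the constant embedding are hypothetical.

```python
import math
from typing import List


def positional_encoding(max_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal positional encodings; row `pos` is added to the embedding at that position."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(d_model // 2):
            angle = pos / (10000 ** (2 * i / d_model))
            pe[pos][2 * i] = math.sin(angle)      # even dimension 2i: sine
            pe[pos][2 * i + 1] = math.cos(angle)  # odd dimension 2i + 1: cosine
    return pe


pe = positional_encoding(max_len=4, d_model=8)
embedding = [0.1] * 8  # hypothetical embedding of the token at position 2
x = [e + p for e, p in zip(embedding, pe[2])]
print(pe[0])  # position 0: sin(0) = 0 and cos(0) = 1 in every pair
print(x)
```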

3.8 Why Self-Attention

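The paper compares self-attention with recurrent and convolutional layers in a table, using three metrics: the computational complexity per layer, the minimum number of sequential operations, and the maximum path length between any two positions in the network.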

For self-attention, the complexity per layer is $O(n^2 \cdot d)$, which comes from multiplying $Q$ ($n \times d$) by $K^T$ ($d \times n$). Self-attention (restricted) means each query only attends to a neighborhood of size $r$ around its own position, which reduces the complexity to $O(r \cdot n \cdot d)$.
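To get a feel for these orders of growth, the sketch below plugs illustrative values into the per-layer complexities, dropping constant factors. The values $n = 50$, $d = 512$ and the defaults for the kernel size $k$ and neighborhood size $r$ are hypothetical choices, not numbers from the paper's experiments.

```python
def ops_per_layer(n: int, d: int, k: int = 3, r: int = 16) -> dict:
    """Per-layer complexity from the comparison table, without constant factors.

    n: sequence length, d: representation dimension,
    k: convolution kernel size, r: neighborhood size of restricted self-attention.
    """
    return {
        "self-attention": n * n * d,               # O(n^2 * d)
        "recurrent": n * d * d,                    # O(n * d^2)
        "convolutional": k * n * d * d,            # O(k * n * d^2)
        "self-attention (restricted)": r * n * d,  # O(r * n * d)
    }


costs = ops_per_layer(n=50, d=512)
for name, cost in costs.items():
    print(f"{name:30s} {cost:>12,d}")
```

When the sequence length $n$ is smaller than the representation dimension $d$, as is typical for sentences, self-attention is cheaper per layer than a recurrent layer.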
