Contents

- 1. avcodec_send_frame

- 1.1 Storing the input frame in the internal buffer (encode_send_frame_internal)

- 1.1.1 Referencing a frame (av_frame_ref)

- 1.1.1.1 Copying frame properties (frame_copy_props)

- 1.1.1.2 Referencing a buffer (av_buffer_ref)

- 1.2 Passing the frame to the encoder (encode_receive_packet_internal)

- 1.2.1 Software encoding entry point (encode_simple_receive_packet)

- 1.2.1.1 The actual encoding (codec->cb.encode)

- 1.2.1.2 Creating a ref-counted buffer for the packet (encode_make_refcounted)

- 2. Summary

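FFmpeg-related posts:

Example projects:

【FFmpeg】H.264 software encoding with the FFmpeg libraries

【FFmpeg】H.264 software decoding with the FFmpeg libraries

【FFmpeg】RTMP push and pull streaming with the FFmpeg libraries

【FFmpeg】Decoding with the FFmpeg libraries and rendering with SDL2

Pipeline analysis:

【FFmpeg】A brief analysis of the main functions in the encoding pipeline

【FFmpeg】A brief analysis of the main functions in the decoding pipeline

Struct analysis:

【FFmpeg】The AVCodec struct

【FFmpeg】The AVCodecContext struct

【FFmpeg】The AVStream struct

【FFmpeg】The AVFormatContext struct

【FFmpeg】The AVIOContext struct

【FFmpeg】The AVPacket struct

Function analysis:

【General】

【FFmpeg】avcodec_find_encoder and avcodec_find_decoder

【FFmpeg】Initialization and release of key structs (AVFormatContext, AVIOContext, etc.)

【FFmpeg】The avcodec_open2 function

【Streaming】

【FFmpeg】The avformat_open_input function

【FFmpeg】The avformat_find_stream_info function

【FFmpeg】The avformat_alloc_output_context2 function

【FFmpeg】The avio_open2 function

【FFmpeg】The avformat_write_header function

【FFmpeg】The av_write_frame function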

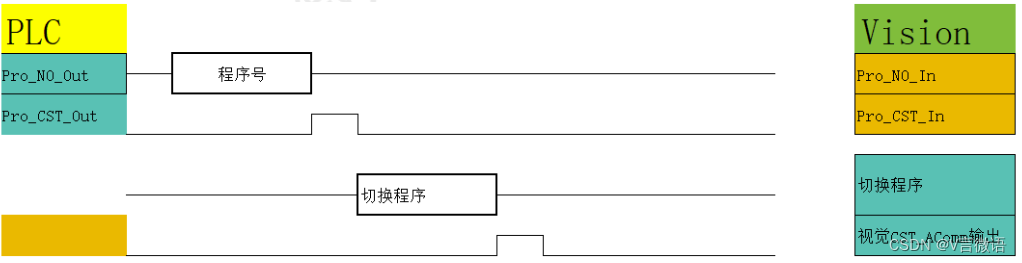

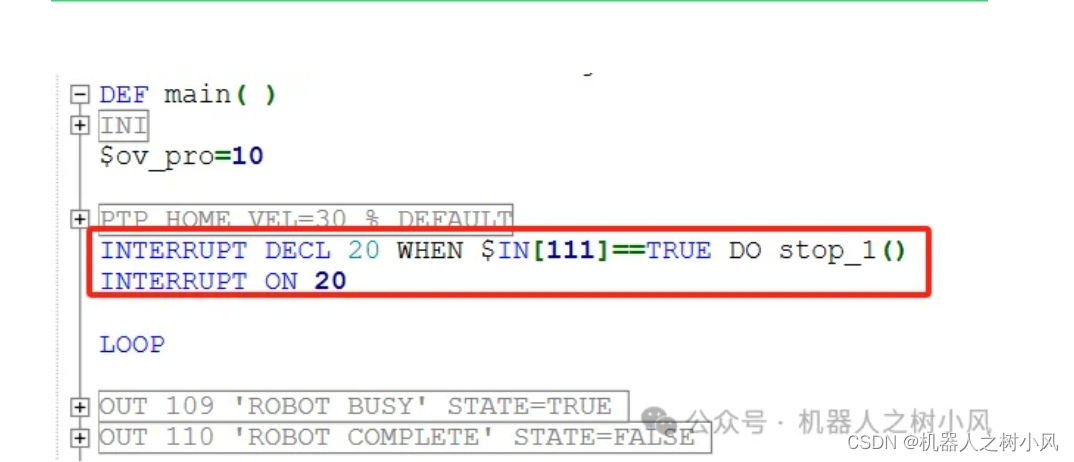

The call hierarchy of avcodec_send_frame is as follows:

1. avcodec_send_frame

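avcodec_send_frame hands a frame supplied by the caller to the encoder for encoding. It is implemented in libavcodec\encode.c. The code performs these steps:

(1) Validate the state of avctx. The codec must be open and must be an encoder, the context must not already be draining, and the internal frame slot must be empty.

(2) If frame is NULL, set draining to 1, which enters flush mode. Otherwise, store the input frame in avctx's internal buffer with encode_send_frame_internal.

(3) Pass the buffered frame to the encoder with encode_receive_packet_internal.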

```c
/**
 * Supply a raw video or audio frame to the encoder. Use avcodec_receive_packet()
 * to retrieve buffered output packets.
 *
 * @param avctx     codec context
 * @param[in] frame AVFrame containing the raw audio or video frame to be encoded.
 *                  Ownership of the frame remains with the caller, and the
 *                  encoder will not write to the frame. The encoder may create
 *                  a reference to the frame data (or copy it if the frame is
 *                  not reference-counted).
 *                  It can be NULL, in which case it is considered a flush
 *                  packet. This signals the end of the stream. If the encoder
 *                  still has packets buffered, it will return them after this
 *                  call. Once flushing mode has been entered, additional flush
 *                  packets are ignored, and sending frames will return
 *                  AVERROR_EOF.
 *
 *                  For audio:
 *                  If AV_CODEC_CAP_VARIABLE_FRAME_SIZE is set, then each frame
 *                  can have any number of samples.
 *                  If it is not set, frame->nb_samples must be equal to
 *                  avctx->frame_size for all frames except the last.
 *                  The final frame may be smaller than avctx->frame_size.
 * @retval 0                 success
 * @retval AVERROR(EAGAIN)   input is not accepted in the current state - user must
 *                           read output with avcodec_receive_packet() (once all
 *                           output is read, the packet should be resent, and the
 *                           call will not fail with EAGAIN).
 * @retval AVERROR_EOF       the encoder has been flushed, and no new frames can
 *                           be sent to it
 * @retval AVERROR(EINVAL)   codec not opened, it is a decoder, or requires flush
 * @retval AVERROR(ENOMEM)   failed to add packet to internal queue, or similar
 * @retval "another negative error code" legitimate encoding errors
 */
int attribute_align_arg avcodec_send_frame(AVCodecContext *avctx, const AVFrame *frame)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;

    // 1. Check the input and the encoder state
    if (!avcodec_is_open(avctx) || !av_codec_is_encoder(avctx->codec))
        return AVERROR(EINVAL);

    if (avci->draining)
        return AVERROR_EOF;

    if (avci->buffer_frame->buf[0])
        return AVERROR(EAGAIN);

    // A NULL input frame starts draining (flush mode)
    if (!frame) {
        avci->draining = 1;
    } else {
        // 2. Store the input frame in avctx's internal buffer
        ret = encode_send_frame_internal(avctx, frame);
        if (ret < 0)
            return ret;
    }

    // 3. Pass the frame to the encoder
    if (!avci->buffer_pkt->data && !avci->buffer_pkt->side_data) {
        ret = encode_receive_packet_internal(avctx, avci->buffer_pkt);
        if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
            return ret;
    }

    avctx->frame_num++;

    return 0;
}
```
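The three early returns above form a small state machine. The sketch below is a toy model of it, not FFmpeg code. `ToyEncoder`, `toy_send_frame`, `toy_receive_packet`, `TOY_EAGAIN` and `TOY_EOF` are hypothetical names, and `int` values stand in for frames and packets. The model assumes a delay-free encoder that turns each buffered frame into exactly one packet. It also leaves out step (3): the real function pushes the frame into the encoder immediately through encode_receive_packet_internal.

```c
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-ins for AVERROR(EAGAIN) and AVERROR_EOF. */
#define TOY_EAGAIN (-EAGAIN)
#define TOY_EOF    (-1000)

/* Much-simplified model of the state avcodec_send_frame() checks. */
typedef struct ToyEncoder {
    bool has_frame;   /* models avci->buffer_frame->buf[0] != NULL */
    int  frame_value; /* payload of the buffered frame */
    bool draining;    /* models avci->draining */
    long frame_num;   /* models avctx->frame_num */
} ToyEncoder;

/* Same order of checks as avcodec_send_frame(); frame == NULL means "flush". */
int toy_send_frame(ToyEncoder *enc, const int *frame)
{
    if (enc->draining)
        return TOY_EOF;            /* flushing already started */
    if (enc->has_frame)
        return TOY_EAGAIN;         /* slot occupied: caller must receive first */

    if (!frame) {
        enc->draining = true;      /* a NULL frame switches to draining mode */
    } else {
        enc->has_frame   = true;   /* keep the frame in the one-slot buffer */
        enc->frame_value = *frame;
    }
    enc->frame_num++;              /* counted for NULL frames too, as in the source */
    return 0;
}

/* Turns the buffered frame into one "packet" (frame value * 10). */
int toy_receive_packet(ToyEncoder *enc, int *pkt)
{
    if (enc->has_frame) {
        *pkt = enc->frame_value * 10;
        enc->has_frame = false;
        return 0;
    }
    return enc->draining ? TOY_EOF : TOY_EAGAIN;
}
```

A caller that gets TOY_EAGAIN from toy_send_frame first drains output with toy_receive_packet and then resends the same frame. The doxygen comment describes the same contract for AVERROR(EAGAIN).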

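1.1 Storing the input frame in the internal buffer (encode_send_frame_internal)

This function stores the input frame in avctx's internal buffer (avci->buffer_frame). For audio, it first validates the frame size and pads an undersized last frame if needed. It then uses av_frame_ref to make buffer_frame a new reference to the input frame. For video, it finally calls encode_generate_icc_profile. That function does real work only when FFmpeg is built with lcms2 support (CONFIG_LCMS2); otherwise it is a stub that returns 0.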

```c
static int encode_send_frame_internal(AVCodecContext *avctx, const AVFrame *src)
{
    AVCodecInternal *avci = avctx->internal;
    EncodeContext     *ec = encode_ctx(avci);
    AVFrame *dst = avci->buffer_frame;
    int ret;

    // Audio-only checks
    if (avctx->codec->type == AVMEDIA_TYPE_AUDIO) {
        /* extract audio service type metadata */
        AVFrameSideData *sd = av_frame_get_side_data(src, AV_FRAME_DATA_AUDIO_SERVICE_TYPE);
        if (sd && sd->size >= sizeof(enum AVAudioServiceType))
            avctx->audio_service_type = *(enum AVAudioServiceType*)sd->data;

        /* check for valid frame size */
        if (!(avctx->codec->capabilities & AV_CODEC_CAP_VARIABLE_FRAME_SIZE)) {
            /* if we already got an undersized frame, that must have been the last */
            if (ec->last_audio_frame) {
                av_log(avctx, AV_LOG_ERROR, "frame_size (%d) was not respected for a non-last frame\n", avctx->frame_size);
                return AVERROR(EINVAL);
            }
            if (src->nb_samples > avctx->frame_size) {
                av_log(avctx, AV_LOG_ERROR, "nb_samples (%d) > frame_size (%d)\n", src->nb_samples, avctx->frame_size);
                return AVERROR(EINVAL);
            }
            if (src->nb_samples < avctx->frame_size) {
                ec->last_audio_frame = 1;
                if (!(avctx->codec->capabilities & AV_CODEC_CAP_SMALL_LAST_FRAME)) {
                    int pad_samples = avci->pad_samples ? avci->pad_samples : avctx->frame_size;
                    int out_samples = (src->nb_samples + pad_samples - 1) / pad_samples * pad_samples;

                    if (out_samples != src->nb_samples) {
                        ret = pad_last_frame(avctx, dst, src, out_samples);
                        if (ret < 0)
                            return ret;
                        goto finish;
                    }
                }
            }
        }
    }

    // Make dst a new reference to src (no data copy if src is ref-counted)
    ret = av_frame_ref(dst, src);
    if (ret < 0)
        return ret;

finish:

    if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {
        // A stub returning 0 unless FFmpeg is built with lcms2 (CONFIG_LCMS2)
        ret = encode_generate_icc_profile(avctx, dst);
        if (ret < 0)
            return ret;
    }

    // unset frame duration unless AV_CODEC_FLAG_FRAME_DURATION is set,
    // since otherwise we cannot be sure that whatever value it has is in the
    // right timebase, so we would produce an incorrect value, which is worse
    // than none at all
    if (!(avctx->flags & AV_CODEC_FLAG_FRAME_DURATION))
        dst->duration = 0;

    return 0;
}
```
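The padding rule for an undersized last audio frame comes down to rounding `nb_samples` up to a multiple of `pad_samples`, or of `frame_size` when `pad_samples` is 0. Below is a minimal sketch of just those checks, assuming an encoder without AV_CODEC_CAP_VARIABLE_FRAME_SIZE. `toy_audio_out_samples` and `TOY_EINVAL` are hypothetical. The function only computes the resulting sample count; it does not build the padded frame the way pad_last_frame does.

```c
#include <stdbool.h>

/* Hypothetical stand-in for AVERROR(EINVAL). */
#define TOY_EINVAL (-22)

/*
 * Frame-size rules of encode_send_frame_internal() for a fixed-frame-size
 * audio encoder. Returns the sample count of the stored frame (after any
 * padding) or TOY_EINVAL. *last_frame models ec->last_audio_frame.
 */
int toy_audio_out_samples(int nb_samples, int frame_size, int pad_samples,
                          bool small_last_frame_ok, bool *last_frame)
{
    if (*last_frame)
        return TOY_EINVAL;          /* an undersized frame was already sent */
    if (nb_samples > frame_size)
        return TOY_EINVAL;          /* too many samples in one frame */
    if (nb_samples == frame_size)
        return nb_samples;          /* regular frame: stored as-is */

    *last_frame = true;             /* an undersized frame must be the last one */
    if (small_last_frame_ok)
        return nb_samples;          /* models AV_CODEC_CAP_SMALL_LAST_FRAME */

    int pad = pad_samples ? pad_samples : frame_size;
    /* round nb_samples up to the next multiple of pad */
    return (nb_samples + pad - 1) / pad * pad;
}
```

For example, with frame_size = 1024, a final frame of 1000 samples is padded to 1024, and any frame sent after it is rejected.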

Next, let's look at how av_frame_ref is implemented.

1.1.1 Referencing a frame (av_frame_ref)

```c
int av_frame_ref(AVFrame *dst, const AVFrame *src)
{
    int ret = 0;

    av_assert1(dst->width == 0 && dst->height == 0);
    av_assert1(dst->ch_layout.nb_channels == 0 &&
               dst->ch_layout.order == AV_CHANNEL_ORDER_UNSPEC);

    // Format and picture dimensions
    dst->format         = src->format;
    dst->width          = src->width;
    dst->height         = src->height;
    // Number of audio samples (per channel) described by this frame
    dst->nb_samples     = src->nb_samples;

    // Copy the frame properties (timestamps, color info, side data, ...)
    ret = frame_copy_props(dst, src, 0);
    if (ret < 0)
        goto fail;

    // Copy the audio channel layout
    ret = av_channel_layout_copy(&dst->ch_layout, &src->ch_layout);
    if (ret < 0)
        goto fail;

    /* duplicate the frame data if it's not refcounted */
    // src->buf[0] == NULL means src is not reference-counted, so no reference can be
    // taken: allocate new buffers for dst and deep-copy the data instead.
    // Reference counting is a pattern for managing dynamically allocated resources
    // (memory, handles, file descriptors, ...). A counter tracks how many users hold
    // the resource. It starts at 1 when the resource is created; each new user
    // increments it, and each user that is done decrements it and checks the result.
    // When the counter reaches 0, nobody uses the resource any more and it can be
    // freed safely.
    if (!src->buf[0]) {
        // Allocate new buffers
        ret = av_frame_get_buffer(dst, 0);
        if (ret < 0)
            goto fail;

        // Copy the frame data from src to dst
        ret = av_frame_copy(dst, src);
        if (ret < 0)
            goto fail;

        return 0;
    }

    /* ref the buffers */
    for (int i = 0; i < FF_ARRAY_ELEMS(src->buf); i++) {
        if (!src->buf[i])
            continue;
        // Take a new reference to the buffer (no data copy)
        dst->buf[i] = av_buffer_ref(src->buf[i]);
        if (!dst->buf[i]) {
            ret = AVERROR(ENOMEM);
            goto fail;
        }
    }

    // extended_buf holds the buffer references that do not fit into buf[]
    // (AV_NUM_DATA_POINTERS entries), e.g. planar audio with more channels than that
    if (src->extended_buf) {
        dst->extended_buf = av_calloc(src->nb_extended_buf,
                                      sizeof(*dst->extended_buf));
        if (!dst->extended_buf) {
            ret = AVERROR(ENOMEM);
            goto fail;
        }
        dst->nb_extended_buf = src->nb_extended_buf;

        for (int i = 0; i < src->nb_extended_buf; i++) {
            dst->extended_buf[i] = av_buffer_ref(src->extended_buf[i]);
            if (!dst->extended_buf[i]) {
                ret = AVERROR(ENOMEM);
                goto fail;
            }
        }
    }

    // Hardware frames context (set for hardware frames)
    if (src->hw_frames_ctx) {
        dst->hw_frames_ctx = av_buffer_ref(src->hw_frames_ctx);
        if (!dst->hw_frames_ctx) {
            ret = AVERROR(ENOMEM);
            goto fail;
        }
    }

    /* duplicate extended data */
    if (src->extended_data != src->data) {
        int ch = dst->ch_layout.nb_channels;

        if (!ch) {
            ret = AVERROR(EINVAL);
            goto fail;
        }

        dst->extended_data = av_malloc_array(sizeof(*dst->extended_data), ch);
        if (!dst->extended_data) {
            ret = AVERROR(ENOMEM);
            goto fail;
        }
        memcpy(dst->extended_data, src->extended_data, sizeof(*src->extended_data) * ch);
    } else
        dst->extended_data = dst->data;

    memcpy(dst->data,     src->data,     sizeof(src->data));
    memcpy(dst->linesize, src->linesize, sizeof(src->linesize));

    return 0;

fail:
    // On failure, release everything dst has acquired so far
    av_frame_unref(dst);
    return ret;
}
```
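The central decision in av_frame_ref is to share the data if it is reference-counted and to deep-copy it otherwise. The self-contained sketch below models only that decision. `ToyBuffer`, `ToyFrame`, `toy_frame_ref` and `toy_frame_unref` are hypothetical: each frame has one buffer, the counter is a plain non-atomic `int`, and `-1` is the error code. It is not the real AVFrame layout.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical, heavily simplified stand-ins for AVBuffer / AVFrame. */
typedef struct ToyBuffer {
    int refcount;
    size_t size;
    unsigned char *data;
} ToyBuffer;

typedef struct ToyFrame {
    ToyBuffer *buf;        /* NULL: the frame is not reference-counted */
    unsigned char *data;   /* points at the actual bytes */
    size_t size;
} ToyFrame;

/* Same decision as av_frame_ref(): share if ref-counted, otherwise deep copy. */
int toy_frame_ref(ToyFrame *dst, const ToyFrame *src)
{
    if (!src->buf) {
        /* not ref-counted: allocate + copy (av_frame_get_buffer + av_frame_copy) */
        ToyBuffer *b = malloc(sizeof(*b));
        if (!b)
            return -1;
        b->data = malloc(src->size);
        if (!b->data) {
            free(b);
            return -1;
        }
        memcpy(b->data, src->data, src->size);
        b->size     = src->size;
        b->refcount = 1;
        dst->buf  = b;
        dst->data = b->data;
    } else {
        /* ref-counted: share the buffer, no data copy (av_buffer_ref) */
        src->buf->refcount++;
        dst->buf  = src->buf;
        dst->data = src->data;
    }
    dst->size = src->size;
    return 0;
}

/* Like av_frame_unref(): drop this owner, free the buffer at refcount 0. */
void toy_frame_unref(ToyFrame *f)
{
    if (f->buf && --f->buf->refcount == 0) {
        free(f->buf->data);
        free(f->buf);
    }
    f->buf  = NULL;
    f->data = NULL;
    f->size = 0;
}
```

The first reference to a plain, non-ref-counted frame pays for one copy. Every later reference to that copy is just a counter increment.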

1.1.1.1 Copying frame properties (frame_copy_props)

```c
static int frame_copy_props(AVFrame *dst, const AVFrame *src, int force_copy)
{
    int ret;

#if FF_API_FRAME_KEY
FF_DISABLE_DEPRECATION_WARNINGS
    dst->key_frame              = src->key_frame;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
    dst->pict_type              = src->pict_type;
    dst->sample_aspect_ratio    = src->sample_aspect_ratio;
    dst->crop_top               = src->crop_top;
    dst->crop_bottom            = src->crop_bottom;
    dst->crop_left              = src->crop_left;
    dst->crop_right             = src->crop_right;
    dst->pts                    = src->pts;
    dst->duration               = src->duration;
    dst->repeat_pict            = src->repeat_pict;
#if FF_API_INTERLACED_FRAME
FF_DISABLE_DEPRECATION_WARNINGS
    dst->interlaced_frame       = src->interlaced_frame;
    dst->top_field_first        = src->top_field_first;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
#if FF_API_PALETTE_HAS_CHANGED
FF_DISABLE_DEPRECATION_WARNINGS
    dst->palette_has_changed    = src->palette_has_changed;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
    dst->sample_rate            = src->sample_rate;
    dst->opaque                 = src->opaque;
    dst->pkt_dts                = src->pkt_dts;
#if FF_API_FRAME_PKT
FF_DISABLE_DEPRECATION_WARNINGS
    dst->pkt_pos                = src->pkt_pos;
    dst->pkt_size               = src->pkt_size;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
    dst->time_base              = src->time_base;
    dst->quality                = src->quality;
    dst->best_effort_timestamp  = src->best_effort_timestamp;
    dst->flags                  = src->flags;
    dst->decode_error_flags     = src->decode_error_flags;
    dst->color_primaries        = src->color_primaries;
    dst->color_trc              = src->color_trc;
    dst->colorspace             = src->colorspace;
    dst->color_range            = src->color_range;
    dst->chroma_location        = src->chroma_location;

    av_dict_copy(&dst->metadata, src->metadata, 0);

    for (int i = 0; i < src->nb_side_data; i++) {
        const AVFrameSideData *sd_src = src->side_data[i];
        AVFrameSideData *sd_dst;
        if (   sd_src->type == AV_FRAME_DATA_PANSCAN
            && (src->width != dst->width || src->height != dst->height))
            continue;
        if (force_copy) {
            sd_dst = av_frame_new_side_data(dst, sd_src->type,
                                            sd_src->size);
            if (!sd_dst) {
                frame_side_data_wipe(dst);
                return AVERROR(ENOMEM);
            }
            memcpy(sd_dst->data, sd_src->data, sd_src->size);
        } else {
            AVBufferRef *ref = av_buffer_ref(sd_src->buf);
            sd_dst = av_frame_new_side_data_from_buf(dst, sd_src->type, ref);
            if (!sd_dst) {
                av_buffer_unref(&ref);
                frame_side_data_wipe(dst);
                return AVERROR(ENOMEM);
            }
        }
        av_dict_copy(&sd_dst->metadata, sd_src->metadata, 0);
    }

    ret = av_buffer_replace(&dst->opaque_ref, src->opaque_ref);
    ret |= av_buffer_replace(&dst->private_ref, src->private_ref);
    return ret;
}
```

1.1.1.2 Referencing a buffer (av_buffer_ref)

```c
AVBufferRef *av_buffer_ref(const AVBufferRef *buf)
{
    AVBufferRef *ret = av_mallocz(sizeof(*ret));

    if (!ret)
        return NULL;

    // The new AVBufferRef points at the same underlying AVBuffer
    *ret = *buf;

    // Taking a reference increments the shared refcount by 1
    atomic_fetch_add_explicit(&buf->buffer->refcount, 1, memory_order_relaxed);

    return ret;
}
```
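av_buffer_ref only allocates a new AVBufferRef and increments the shared counter. The matching release (av_buffer_unref) decrements the counter and frees the underlying buffer once it reaches zero. The sketch below mimics that pair with C11 atomics. The `Mini*` names and the counting free callback are hypothetical. The acquire/release ordering on the decrement is the usual choice, so that the thread doing the free sees every write made by the other owners; treat it as an assumption about the real unref path.

```c
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical miniature of AVBuffer / AVBufferRef. */
typedef struct MiniBuffer {
    atomic_uint refcount;
    void (*free_cb)(void *opaque);   /* called once, when the last ref goes away */
    void *opaque;
} MiniBuffer;

typedef struct MiniBufferRef {
    MiniBuffer *buffer;
} MiniBufferRef;

/* Creates a buffer with refcount 1 and returns the first reference. */
MiniBufferRef *mini_buffer_create(void (*free_cb)(void *), void *opaque)
{
    MiniBuffer *b = malloc(sizeof(*b));
    MiniBufferRef *ref = malloc(sizeof(*ref));
    if (!b || !ref) {
        free(b);
        free(ref);
        return NULL;
    }
    atomic_init(&b->refcount, 1);
    b->free_cb = free_cb;
    b->opaque  = opaque;
    ref->buffer = b;
    return ref;
}

/* Same idea as av_buffer_ref(): new ref object, shared counter + 1. */
MiniBufferRef *mini_buffer_ref(const MiniBufferRef *buf)
{
    MiniBufferRef *ret = calloc(1, sizeof(*ret));
    if (!ret)
        return NULL;
    *ret = *buf;   /* points at the same MiniBuffer */
    atomic_fetch_add_explicit(&buf->buffer->refcount, 1, memory_order_relaxed);
    return ret;
}

/* Drops one reference; the last owner frees the shared buffer. */
void mini_buffer_unref(MiniBufferRef **pref)
{
    MiniBufferRef *ref = *pref;
    if (!ref)
        return;
    MiniBuffer *b = ref->buffer;
    free(ref);
    *pref = NULL;
    /* fetch_sub returns the previous value: 1 means we were the last owner */
    if (atomic_fetch_sub_explicit(&b->refcount, 1, memory_order_acq_rel) == 1) {
        if (b->free_cb)
            b->free_cb(b->opaque);
        free(b);
    }
}

/* Demo free callback: counts how many times the buffer was really freed. */
void mini_count_free(void *opaque)
{
    (*(int *)opaque)++;
}
```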

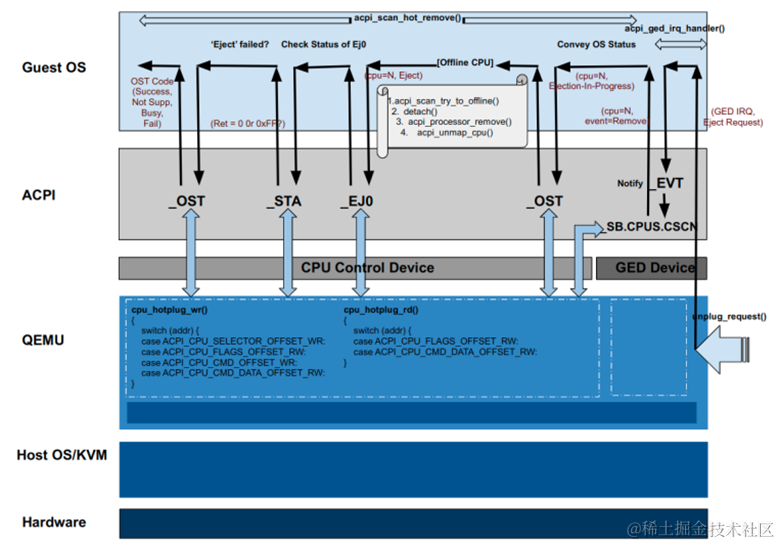

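1.2 Passing the frame to the encoder (encode_receive_packet_internal)

This function hands the frame to the encoder. For video, it first checks that the picture dimensions are valid. It then dispatches on the codec's callback type. Encoders that implement the receive_packet callback are asked for a packet directly. This is mostly hardware encoders, although some software wrappers such as libsvtav1 use it too. All other encoders go through encode_simple_receive_packet, which is the path software encoders such as libx264 take.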
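The size check can be approximated as below. This is a sketch based on my reading of av_image_check_size2() for the AV_PIX_FMT_NONE case used here. Without a pixel format, the line size is assumed to be a worst case of 8 bytes per pixel plus 128*8 bytes of slack, and 128 extra rows are allowed for. Treat these constants as assumptions and check libavutil/imgutils.c for your FFmpeg version. `toy_image_size_ok` is a hypothetical name.

```c
#include <limits.h>
#include <stdbool.h>
#include <stdint.h>

/* Approximation of av_image_check_size2(w, h, max_pixels, AV_PIX_FMT_NONE, ...). */
bool toy_image_size_ok(unsigned w, unsigned h, int64_t max_pixels)
{
    /* no pixel format known: assume 8 bytes per pixel plus some slack */
    int64_t stride = 8LL * w + 128 * 8;

    if ((int)w <= 0 || (int)h <= 0)
        return false;
    /* every byte of a plane (plus margins) must be addressable with an int */
    if (stride >= INT_MAX || (uint64_t)stride * (h + 128) >= INT_MAX)
        return false;
    /* optional user limit, AVCodecContext.max_pixels */
    if (max_pixels < INT64_MAX && (int64_t)w * h > max_pixels)
        return false;
    return true;
}
```

Under these assumptions, 1920x1080 passes easily, while 100000x100000 fails because a single plane would need more than INT_MAX bytes of addressing.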

```c
static int encode_receive_packet_internal(AVCodecContext *avctx, AVPacket *avpkt)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;

    if (avci->draining_done)
        return AVERROR_EOF;

    av_assert0(!avpkt->data && !avpkt->side_data);

    if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {
        if ((avctx->flags & AV_CODEC_FLAG_PASS1) && avctx->stats_out)
            avctx->stats_out[0] = '\0';
        // 1. Check that the picture dimensions are valid, i.e. that every byte of
        //    an image plane of this size can be addressed with a signed int
        if (av_image_check_size2(avctx->width, avctx->height, avctx->max_pixels, AV_PIX_FMT_NONE, 0, avctx))
            return AVERROR(EINVAL);
    }

    // FF_CODEC_CB_TYPE_RECEIVE_PACKET: the encoder implements the receive_packet
    // callback (audio and video codecs only).
    // 2. Mostly hardware encoders (e.g. AMF, NVENC, VAAPI) use this callback type
    if (ffcodec(avctx->codec)->cb_type == FF_CODEC_CB_TYPE_RECEIVE_PACKET) {
        ret = ffcodec(avctx->codec)->cb.receive_packet(avctx, avpkt);
        if (ret < 0)
            av_packet_unref(avpkt);
        else
            // Encoders must always return ref-counted buffers.
            // Side-data only packets have no data and can be not ref-counted.
            av_assert0(!avpkt->data || avpkt->buf);
    } else
        ret = encode_simple_receive_packet(avctx, avpkt); // 3. Simple encode-callback path (e.g. software encoders)

    if (ret >= 0)
        avpkt->flags |= encode_ctx(avci)->intra_only_flag; // AV_PKT_FLAG_KEY for intra-only codecs, else 0

    if (ret == AVERROR_EOF)
        avci->draining_done = 1;

    return ret;
}
```
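FFCodec stores a `cb_type` tag next to a union of callback pointers, and the branch above is a tagged-union dispatch on that tag. The sketch below reproduces only that shape. `ToyCodec`, `toy_dispatch`, `demo_encode`, `demo_receive_packet` and `TOY_EAGAIN` are hypothetical, and the `int` frames and packets are placeholders for AVFrame and AVPacket.

```c
/* Hypothetical stand-in for AVERROR(EAGAIN). */
#define TOY_EAGAIN (-11)

/* Mirrors the idea of FFCodec.cb_type + FFCodec.cb (a tagged union). */
typedef enum ToyCbType { TOY_CB_ENCODE, TOY_CB_RECEIVE_PACKET } ToyCbType;

typedef struct ToyCodec {
    const char *name;
    ToyCbType cb_type;
    union {
        int (*encode)(int frame, int *pkt, int *got_packet); /* one frame in */
        int (*receive_packet)(int *pkt);                     /* codec pulls input itself */
    } cb;
} ToyCodec;

/* Which path encode_receive_packet_internal() would take. */
int toy_dispatch(const ToyCodec *c, int frame, int *pkt)
{
    if (c->cb_type == TOY_CB_RECEIVE_PACKET)
        return c->cb.receive_packet(pkt);

    int got_packet = 0;
    int ret = c->cb.encode(frame, pkt, &got_packet);
    if (ret < 0)
        return ret;
    return got_packet ? 0 : TOY_EAGAIN;   /* no packet produced yet */
}

/* Demo callbacks: a "software" encoder and a "hardware-style" one. */
static int demo_encode(int frame, int *pkt, int *got_packet)
{
    *pkt = frame + 1;
    *got_packet = 1;
    return 0;
}

static int demo_receive_packet(int *pkt)
{
    *pkt = -7;   /* pretend a packet was pulled from the device */
    return 0;
}
```

Reading the tag once and calling through the matching union member keeps FFCodec small. It also lets the generic layer (encode_simple_internal) take over input fetching for the simpler encode-callback encoders.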

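1.2.1 Software encoding entry point (encode_simple_receive_packet)

The function is simple. It keeps calling encode_simple_internal until the packet contains data or side data, and returns as soon as a call fails (including with AVERROR(EAGAIN) or AVERROR_EOF).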

```c
static int encode_simple_receive_packet(AVCodecContext *avctx, AVPacket *avpkt)
{
    int ret;

    while (!avpkt->data && !avpkt->side_data) {
        ret = encode_simple_internal(avctx, avpkt);
        if (ret < 0)
            return ret;
    }

    return 0;
}
```

encode_simple_internal is defined as follows:

```c
static int encode_simple_internal(AVCodecContext *avctx, AVPacket *avpkt)
{
    AVCodecInternal   *avci = avctx->internal;
    AVFrame          *frame = avci->in_frame;
    const FFCodec *const codec = ffcodec(avctx->codec);
    int got_packet;
    int ret;

    if (avci->draining_done)
        return AVERROR_EOF;

    // in_frame holds no data and we are not draining: fetch the next frame first
    if (!frame->buf[0] && !avci->draining) {
        av_frame_unref(frame);
        // Called by encoders to get the next frame to encode
        ret = ff_encode_get_frame(avctx, frame);
        if (ret < 0 && ret != AVERROR_EOF)
            return ret;
    }

    if (!frame->buf[0]) {
        if (!(avctx->codec->capabilities & AV_CODEC_CAP_DELAY ||
              avci->frame_thread_encoder))
            return AVERROR_EOF;

        // Flushing is signaled with a NULL frame
        frame = NULL;
    }

    got_packet = 0;

    av_assert0(codec->cb_type == FF_CODEC_CB_TYPE_ENCODE);

    // Frame-threaded encoding
    if (CONFIG_FRAME_THREAD_ENCODER && avci->frame_thread_encoder)
        /* This will unref frame. */
        ret = ff_thread_video_encode_frame(avctx, avpkt, frame, &got_packet);
    else {
        // Invoke the codec's encode callback
        ret = ff_encode_encode_cb(avctx, avpkt, frame, &got_packet);
    }

    if (avci->draining && !got_packet)
        avci->draining_done = 1;

    return ret;
}
```
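The loop in encode_simple_receive_packet exists because an encoder with AV_CODEC_CAP_DELAY may swallow several frames before it emits the first packet, and then needs NULL frames to flush the rest. The toy model below shows both effects. `DelayEncoder`, `toy_receive_packet`, `TOY_DELAY` and `TOY_EOF` are hypothetical. Running out of pre-supplied input plays the role of the user sending a NULL frame, and the two-frame delay is an arbitrary assumption, not libx264's real lookahead.

```c
#include <stdbool.h>
#include <stddef.h>

#define TOY_EOF   (-1000) /* hypothetical stand-in for AVERROR_EOF */
#define TOY_DELAY 2       /* frames the toy encoder holds back */

typedef struct DelayEncoder {
    const int *input;          /* frames "sent" by the user, consumed in order */
    int n_input, next_input;
    int queue[TOY_DELAY + 1];  /* frames buffered inside the encoder */
    int queued;
    bool draining, draining_done;
    int calls;                 /* number of encode-callback invocations */
} DelayEncoder;

/* One call models one pass through encode_simple_internal(). */
static int toy_encode_simple_internal(DelayEncoder *e, int *pkt, bool *got_packet)
{
    const int *frame = NULL;

    if (e->draining_done)
        return TOY_EOF;

    if (e->next_input < e->n_input)
        frame = &e->input[e->next_input++];   /* like ff_encode_get_frame() */
    else
        e->draining = true;                   /* no input left: flush with NULL */

    e->calls++;
    *got_packet = false;
    if (frame)
        e->queue[e->queued++] = *frame;
    if (e->queued > TOY_DELAY || (!frame && e->queued > 0)) {
        *pkt = e->queue[0];                   /* oldest frame comes out first */
        for (int i = 1; i < e->queued; i++)
            e->queue[i - 1] = e->queue[i];
        e->queued--;
        *got_packet = true;
    }

    if (e->draining && !*got_packet)
        e->draining_done = true;              /* same rule as the source */
    return 0;
}

/* Like encode_simple_receive_packet(): repeat until a packet or an error. */
int toy_receive_packet(DelayEncoder *e, int *pkt)
{
    bool got_packet = false;
    while (!got_packet) {
        int ret = toy_encode_simple_internal(e, pkt, &got_packet);
        if (ret < 0)
            return ret;
    }
    return 0;
}
```

With four input frames, the first packet needs three encode calls. The last two packets come out only during draining, and the call after that returns TOY_EOF.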

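ff_encode_encode_cb is defined below. It calls the codec's encode callback to do the actual encoding; for the libx264 encoder, this is X264_frame(). It then calls encode_make_refcounted so that the packet data lives in a reference-counted buffer.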

```c
int ff_encode_encode_cb(AVCodecContext *avctx, AVPacket *avpkt,
                        AVFrame *frame, int *got_packet)
{
    const FFCodec *const codec = ffcodec(avctx->codec);
    int ret;

    // Run the encoder; for libx264 this calls X264_frame().
    // The compressed data ends up in avpkt->data
    ret = codec->cb.encode(avctx, avpkt, frame, got_packet);
    emms_c();
    av_assert0(ret <= 0);

    if (!ret && *got_packet) {
        if (avpkt->data) {
            // Make sure avpkt's data lives in a ref-counted buffer
            ret = encode_make_refcounted(avctx, avpkt);
            if (ret < 0)
                goto unref;
            // Data returned by encoders must always be ref-counted
            av_assert0(avpkt->buf);
        }

        // set the timestamps for the simple no-delay case
        // encoders with delay have to set the timestamps themselves
        if (!(avctx->codec->capabilities & AV_CODEC_CAP_DELAY) ||
            (frame && (codec->caps_internal & FF_CODEC_CAP_EOF_FLUSH))) {
            if (avpkt->pts == AV_NOPTS_VALUE)
                avpkt->pts = frame->pts;

            if (!avpkt->duration) {
                if (frame->duration)
                    avpkt->duration = frame->duration;
                else if (avctx->codec->type == AVMEDIA_TYPE_AUDIO) {
                    avpkt->duration = ff_samples_to_time_base(avctx,
                                                              frame->nb_samples);
                }
            }

            // "Propagate user opaque values from the frame to avctx/pkt as needed".
            // With AV_CODEC_FLAG_COPY_OPAQUE this copies frame->opaque and
            // frame->opaque_ref to the packet, so callers can match packets to frames
            ret = ff_encode_reordered_opaque(avctx, avpkt, frame);
            if (ret < 0)
                goto unref;
        }

        // dts equals pts unless there is reordering
        // there can be no reordering if there is no encoder delay
        if (!(avctx->codec_descriptor->props & AV_CODEC_PROP_REORDER) ||
            !(avctx->codec->capabilities & AV_CODEC_CAP_DELAY)        ||
            (codec->caps_internal & FF_CODEC_CAP_EOF_FLUSH))
            avpkt->dts = avpkt->pts;
    } else {
unref:
        av_packet_unref(avpkt);
    }

    if (frame)
        av_frame_unref(frame); // The frame is no longer needed: drop our reference

    return ret;
}
```
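For an encoder without delay, the timestamp rules above are mechanical. The packet inherits the frame's pts. An audio packet with no frame duration gets `nb_samples` converted to the codec time base, and dts simply equals pts. A minimal sketch of these rules follows. `Rational`, `ToyPacket`, `rescale_q` and `toy_fill_timestamps` are hypothetical. The conversion assumes ff_samples_to_time_base rescales from 1/sample_rate to avctx->time_base with round-to-nearest, and this simplified rescale handles only non-negative values without overflow protection.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct { int num, den; } Rational;   /* stand-in for AVRational */

#define TOY_NOPTS INT64_MIN                   /* stand-in for AV_NOPTS_VALUE */

/* a * bq / cq, rounded to nearest; non-negative inputs only, no overflow checks. */
static int64_t rescale_q(int64_t a, Rational bq, Rational cq)
{
    int64_t num = a * bq.num * cq.den;
    int64_t den = (int64_t)bq.den * cq.num;
    return (num + den / 2) / den;
}

typedef struct { int64_t pts, dts, duration; } ToyPacket;

/* What ff_encode_encode_cb() does for an encoder without delay or reordering. */
void toy_fill_timestamps(ToyPacket *pkt, int64_t frame_pts, int64_t frame_duration,
                         bool is_audio, int nb_samples, int sample_rate,
                         Rational time_base)
{
    if (pkt->pts == TOY_NOPTS)
        pkt->pts = frame_pts;
    if (!pkt->duration) {
        if (frame_duration)
            pkt->duration = frame_duration;
        else if (is_audio)   /* samples -> time base, like ff_samples_to_time_base() */
            pkt->duration = rescale_q(nb_samples, (Rational){1, sample_rate}, time_base);
    }
    pkt->dts = pkt->pts;     /* no reordering, so dts equals pts */
}
```

For example, a 1024-sample AAC-style frame at 48 kHz lasts 1024 ticks in a 1/48000 time base, but only about 21 ticks in a 1/1000 time base. This rounding is why audio timestamps are usually kept in a sample-rate-based time base.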

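1.2.1.1 The actual encoding (codec->cb.encode)

This callback performs the actual encoding. For the libx264 encoder it is X264_frame(), as the codec definition shows (FF_CODEC_ENCODE_CB(X264_frame)):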

```c
FFCodec ff_libx264_encoder = {
    .p.name           = "libx264",
    CODEC_LONG_NAME("libx264 H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),
    .p.type           = AVMEDIA_TYPE_VIDEO,
    .p.id             = AV_CODEC_ID_H264,
    .p.capabilities   = AV_CODEC_CAP_DR1 | AV_CODEC_CAP_DELAY |
                        AV_CODEC_CAP_OTHER_THREADS |
                        AV_CODEC_CAP_ENCODER_REORDERED_OPAQUE |
                        AV_CODEC_CAP_ENCODER_FLUSH |
                        AV_CODEC_CAP_ENCODER_RECON_FRAME,
    .p.priv_class     = &x264_class,
    .p.wrapper_name   = "libx264",
    .priv_data_size   = sizeof(X264Context),
    .init             = X264_init,
    FF_CODEC_ENCODE_CB(X264_frame),
    .flush            = X264_flush,
    .close            = X264_close,
    .defaults         = x264_defaults,
#if X264_BUILD < 153
    .init_static_data = X264_init_static,
#else
    .p.pix_fmts       = pix_fmts_all,
#endif
    .caps_internal    = FF_CODEC_CAP_INIT_CLEANUP | FF_CODEC_CAP_AUTO_THREADS
#if X264_BUILD < 158
                      | FF_CODEC_CAP_NOT_INIT_THREADSAFE
#endif
                      ,
};
```

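X264_frame() is defined below. Its main steps are:

(1) Build the x264 input picture pic_in from the incoming frame (setup_frame). pic_in is what gets passed to the x264 encoder.

(2) Run the H.264 encoder (x264_encoder_encode).

(3) Post-process the encoder output: the optional reconstructed frame and the NAL units written into the packet.

(4) Handle the private (opaque) data.

(5) Determine the picture type.

(6) Attach encoder statistics to the packet: the QP and, when AV_CODEC_FLAG_PSNR is set, per-plane errors derived from x264's PSNR.

Comments mark what each major call does. The lower-level functions are not analyzed further here; the implementation of x264_encoder_encode is covered in the 【x264】 series.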

```c
static int X264_frame(AVCodecContext *ctx, AVPacket *pkt, const AVFrame *frame,
                      int *got_packet)
{
    X264Context *x4 = ctx->priv_data;
    x264_nal_t *nal;
    int nnal, ret;
    x264_picture_t pic_out = {0}, *pic_in;
    int pict_type;
    int64_t wallclock = 0;
    X264Opaque *out_opaque;

    // 1. Build the x264 input picture pic_in from the incoming frame
    ret = setup_frame(ctx, frame, &pic_in);
    if (ret < 0)
        return ret;

    do {
        // 2. x264 API call that performs the actual H.264 encoding
        if (x264_encoder_encode(x4->enc, &nal, &nnal, pic_in, &pic_out) < 0)
            return AVERROR_EXTERNAL;

        // 3. Post-processing of the encoder output
        if (nnal && (ctx->flags & AV_CODEC_FLAG_RECON_FRAME)) {
            AVCodecInternal *avci = ctx->internal;

            av_frame_unref(avci->recon_frame);

            // csp: colorspace
            avci->recon_frame->format = csp_to_pixfmt(pic_out.img.i_csp);
            if (avci->recon_frame->format == AV_PIX_FMT_NONE) {
                av_log(ctx, AV_LOG_ERROR,
                       "Unhandled reconstructed frame colorspace: %d\n",
                       pic_out.img.i_csp);
                return AVERROR(ENOSYS);
            }

            avci->recon_frame->width  = ctx->width;
            avci->recon_frame->height = ctx->height;
            // Point recon_frame at the reconstructed picture (img) returned by x264
            for (int i = 0; i < pic_out.img.i_plane; i++) {
                avci->recon_frame->data[i]     = pic_out.img.plane[i];
                avci->recon_frame->linesize[i] = pic_out.img.i_stride[i];
            }

            // recon_frame has no AVBufferRef yet (its data belongs to x264), so it is
            // not writable: av_frame_make_writable() allocates FFmpeg-owned buffers
            // and copies the reconstructed picture into them
            ret = av_frame_make_writable(avci->recon_frame);
            if (ret < 0) {
                av_frame_unref(avci->recon_frame);
                return ret;
            }
        }

        // Write the encoded NAL units into pkt. They come from the nal/nnal output
        // parameters of x264_encoder_encode(), not from pic_out.img
        ret = encode_nals(ctx, pkt, nal, nnal);
        if (ret < 0)
            return ret;
        // x264_encoder_delayed_frames() returns the number of frames still buffered
        // (delayed) in the encoder; at the end of the stream it tells us whether
        // all encoded frames have been retrieved
    } while (!ret && !frame && x264_encoder_delayed_frames(x4->enc));

    if (!ret)
        return 0;

    pkt->pts = pic_out.i_pts;
    pkt->dts = pic_out.i_dts;

    // 4. Private data: "opaque" is general-purpose data with no predefined meaning,
    //    usually attached to a frame or codec context (custom data, statistics, ...)
    out_opaque = pic_out.opaque;
    if (out_opaque >= x4->reordered_opaque &&
        out_opaque < &x4->reordered_opaque[x4->nb_reordered_opaque]) {
        wallclock = out_opaque->wallclock;
        pkt->duration = out_opaque->duration;

        if (ctx->flags & AV_CODEC_FLAG_COPY_OPAQUE) {
            pkt->opaque                  = out_opaque->frame_opaque;
            pkt->opaque_ref              = out_opaque->frame_opaque_ref;
            out_opaque->frame_opaque_ref = NULL;
        }

        opaque_uninit(out_opaque);
    } else {
        // Unexpected opaque pointer on picture output
        av_log(ctx, AV_LOG_ERROR, "Unexpected opaque pointer; "
               "this is a bug, please report it.\n");
    }

    // 5. Map the x264 picture type to an FFmpeg picture type
    switch (pic_out.i_type) {
    case X264_TYPE_IDR:
    case X264_TYPE_I:
        pict_type = AV_PICTURE_TYPE_I;
        break;
    case X264_TYPE_P:
        pict_type = AV_PICTURE_TYPE_P;
        break;
    case X264_TYPE_B:
    case X264_TYPE_BREF:
        pict_type = AV_PICTURE_TYPE_B;
        break;
    default:
        av_log(ctx, AV_LOG_ERROR, "Unknown picture type encountered.\n");
        return AVERROR_EXTERNAL;
    }

    pkt->flags |= AV_PKT_FLAG_KEY*pic_out.b_keyframe;
    // 6. Encoder statistics: QP and, with AV_CODEC_FLAG_PSNR, per-plane errors
    if (ret) {
        int error_count = 0;
        int64_t *errors = NULL;
        int64_t sse[3] = {0};

        if (ctx->flags & AV_CODEC_FLAG_PSNR) {
            const AVPixFmtDescriptor *pix_desc = av_pix_fmt_desc_get(ctx->pix_fmt);
            double scale[3] = { 1,
                (double)(1 << pix_desc->log2_chroma_h) * (1 << pix_desc->log2_chroma_w),
                (double)(1 << pix_desc->log2_chroma_h) * (1 << pix_desc->log2_chroma_w),
            };

            error_count = pix_desc->nb_components;

            for (int i = 0; i < pix_desc->nb_components; ++i) {
                double max_value = (double)(1 << pix_desc->comp[i].depth) - 1.0;
                double plane_size = ctx->width * (double)ctx->height / scale[i];

                /* psnr = 10 * log10(max_value * max_value / mse) */
                double mse = (max_value * max_value) / pow(10, pic_out.prop.f_psnr[i] / 10.0);

                /* SSE = MSE * width * height / scale -> because of possible chroma downsampling */
                sse[i] = (int64_t)floor(mse * plane_size + .5);
            };

            errors = sse;
        }

        ff_side_data_set_encoder_stats(pkt, (pic_out.i_qpplus1 - 1) * FF_QP2LAMBDA,
                                       errors, error_count, pict_type);

        if (wallclock)
            ff_side_data_set_prft(pkt, wallclock);
    }

    *got_packet = ret;
    return 0;
}
```

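1.2.1.2 Creating a ref-counted buffer for the packet (encode_make_refcounted)

This function makes sure avpkt's data lives in a reference-counted buffer. If the encoder returned data without an AVBufferRef (for example, data pointing into the encoder's own memory), a new buffer is allocated through ff_get_encode_buffer and the data is copied into it.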

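```c
static int encode_make_refcounted(AVCodecContext *avctx, AVPacket *avpkt)
{
    uint8_t *data = avpkt->data;
    int ret;

    // The packet already owns a ref-counted buffer: nothing to do
    if (avpkt->buf)
        return 0;

    // ff_get_encode_buffer() requires avpkt->data == NULL; the old pointer is kept in data
    avpkt->data = NULL;
    // Allocate a ref-counted buffer of the same size
    ret = ff_get_encode_buffer(avctx, avpkt, avpkt->size, 0);
    if (ret < 0)
        return ret;
    // Copy the encoder's output into the new buffer
    memcpy(avpkt->data, data, avpkt->size);

    return 0;
}
```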
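What encode_make_refcounted achieves can be modeled without FFmpeg. If the packet does not own its data, allocate an owned buffer with trailing padding, copy the payload and zero the padding. After that, the packet no longer depends on encoder-private memory. `ToyPacket`, `toy_make_refcounted` and `TOY_PADDING` are hypothetical. The padding of 64 bytes is assumed to match AV_INPUT_BUFFER_PADDING_SIZE in current FFmpeg, and a plain `malloc` stands in for the ref-counted allocation.

```c
#include <stdlib.h>
#include <string.h>

#define TOY_PADDING 64  /* assumed value of AV_INPUT_BUFFER_PADDING_SIZE */

typedef struct ToyPacket {
    unsigned char *buf;    /* owned allocation ("ref-counted"); NULL if none */
    unsigned char *data;   /* may point into encoder-private memory */
    int size;
} ToyPacket;

/* Like encode_make_refcounted(): give the packet its own padded copy. */
int toy_make_refcounted(ToyPacket *pkt)
{
    if (pkt->buf)
        return 0;                              /* already owns its data */
    unsigned char *owned = malloc((size_t)pkt->size + TOY_PADDING);
    if (!owned)
        return -1;
    memcpy(owned, pkt->data, pkt->size);
    memset(owned + pkt->size, 0, TOY_PADDING); /* as ff_get_encode_buffer() does */
    pkt->buf  = owned;
    pkt->data = owned;
    return 0;
}
```

Once the copy exists, the encoder is free to overwrite its scratch memory on the next call without corrupting packets the caller still holds.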

ff_get_encode_buffer is defined below. Its core is the call to avctx->get_encode_buffer, which allocates the packet buffer. By default this callback is avcodec_default_get_encode_buffer.

```c
int ff_get_encode_buffer(AVCodecContext *avctx, AVPacket *avpkt, int64_t size, int flags)
{
    int ret;

    if (size < 0 || size > INT_MAX - AV_INPUT_BUFFER_PADDING_SIZE)
        return AVERROR(EINVAL);

    av_assert0(!avpkt->data && !avpkt->buf);

    avpkt->size = size;
    // avctx->get_encode_buffer defaults to avcodec_default_get_encode_buffer
    ret = avctx->get_encode_buffer(avctx, avpkt, flags);
    if (ret < 0)
        goto fail;

    if (!avpkt->data || !avpkt->buf) {
        av_log(avctx, AV_LOG_ERROR, "No buffer returned by get_encode_buffer()\n");
        ret = AVERROR(EINVAL);
        goto fail;
    }
    // Zero the padding that follows the payload
    memset(avpkt->data + avpkt->size, 0, AV_INPUT_BUFFER_PADDING_SIZE);

    ret = 0;
fail:
    if (ret < 0) {
        av_log(avctx, AV_LOG_ERROR, "get_encode_buffer() failed\n");
        av_packet_unref(avpkt);
    }

    return ret;
}
```
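The first check is written as `size > INT_MAX - AV_INPUT_BUFFER_PADDING_SIZE` rather than `size + padding > INT_MAX`. With this form, the later `avpkt->size + AV_INPUT_BUFFER_PADDING_SIZE`, computed in `int` when the buffer is allocated, cannot overflow. A minimal sketch of the same guard follows. `toy_encode_size_ok` is hypothetical, and `TOY_PADDING` assumes the current padding of 64 bytes.

```c
#include <limits.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_PADDING 64  /* assumed AV_INPUT_BUFFER_PADDING_SIZE */

/* Same guard as ff_get_encode_buffer(): size + padding must still fit in an int. */
bool toy_encode_size_ok(int64_t size)
{
    return !(size < 0 || size > INT_MAX - TOY_PADDING);
}
```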

avcodec_default_get_encode_buffer is defined below. Its key step is av_buffer_realloc, which allocates the packet's buf with room for the payload plus padding.

```c
int avcodec_default_get_encode_buffer(AVCodecContext *avctx, AVPacket *avpkt, int flags)
{
    int ret;

    if (avpkt->size < 0 || avpkt->size > INT_MAX - AV_INPUT_BUFFER_PADDING_SIZE)
        return AVERROR(EINVAL);

    if (avpkt->data || avpkt->buf) {
        av_log(avctx, AV_LOG_ERROR, "avpkt->{data,buf} != NULL in avcodec_default_get_encode_buffer()\n");
        return AVERROR(EINVAL);
    }

    // avpkt->buf is NULL here, so av_buffer_realloc() allocates a new
    // ref-counted buffer of size payload + padding
    ret = av_buffer_realloc(&avpkt->buf, avpkt->size + AV_INPUT_BUFFER_PADDING_SIZE);
    if (ret < 0) {
        av_log(avctx, AV_LOG_ERROR, "Failed to allocate packet of size %d\n", avpkt->size);
        return ret;
    }
    avpkt->data = avpkt->buf->data;

    return 0;
}
```

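2. Summary

avcodec_send_frame hands a frame supplied by the caller to the encoder. Before encoding, the input is converted into the encoder's own format, for example an x264_picture_t for libx264. After encoding, the NAL units are written into the packet. When requested, the reconstructed frame and encoder statistics such as PSNR are also retrieved. Reference counting (refcount) plays a central role throughout, because it manages the lifetime of FFmpeg resources such as AVFrame and AVPacket data. Every new user of a resource increments its refcount, and every user that releases it decrements the count.

Roughly speaking, reference counting brings the following benefits:

(1) Memory usage

Many parts of the program do not need their own copy of the data. With reference counting they share the same resource through a shallow copy, which saves allocations and copies.

(2) Memory management

The same memory is often accessed from many places, and careless handling can free it too early or free it twice. A refcount prevents this: the resource is released only when the counter drops to zero.

(3) Clearer code

Lifetime management is encapsulated in the buffer API, which makes the code easier to maintain.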

CSDN : https://blog.csdn.net/weixin_42877471

Github : https://github.com/DoFulangChen