BEVWorld: A Multimodal World Model for Autonomous Driving via Unified BEV Latent Space

Abstract

World models are receiving increasing attention in autonomous driving for their ability to predict potential future scenarios. In this paper, we present BEVWorld, a novel approach that tokenizes multimodal sensor inputs into a unified and compact Bird’s Eye View (BEV) latent space for environment modeling. The world model consists of two parts: the multi-modal tokenizer and the latent BEV sequence diffusion model. The multi-modal tokenizer first encodes multi-modality information and the decoder is able to reconstruct the latent BEV tokens into LiDAR and image observations by ray-casting rendering in a self-supervised manner. Then the latent BEV sequence diffusion model predicts future scenarios given action tokens as conditions. Experiments demonstrate the effectiveness of BEVWorld in autonomous driving tasks, showcasing its capability in generating future scenes and benefiting downstream tasks such as perception and motion prediction. Code will be available at https://github.com/zympsyche/BevWorld.

1 Introduction

Autonomous driving has made significant progress in recent years, but it still faces several challenges. First, training a reliable autonomous driving system requires a large amount of precisely annotated data, which is resource-intensive and time-consuming. Thus, exploring how to utilize unlabeled multimodal sensor data within a self-supervised learning paradigm is crucial. Moreover, a reliable autonomous driving system requires not only the ability to perceive the environment but also a comprehensive understanding of environmental information for decision-making.

We claim that the key to addressing these challenges is to construct a multimodal world model for autonomous driving. By modeling the environment, the world model predicts future states and behaviors, empowering the autonomous agent to make more sophisticated decisions. Recently, some world models have demonstrated their practical significance in autonomous driving [12, 42, 40]. However, most methods are based on a single modality, which cannot adapt to current multisensor, multimodal autonomous driving systems. Due to the heterogeneous nature of multimodal data, integrating them into a unified generative model and seamlessly adapting to downstream tasks remains an unresolved issue.

In this paper, we introduce BEVWorld, a multimodal world model that transforms diverse multimodal data into a unified bird’s-eye-view (BEV) representation and performs action-conditioned future prediction within this unified space. Our BEVWorld consists of two parts: a multimodal tokenizer network and a latent BEV sequence diffusion network.

The core capability of the multimodal tokenizer lies in compressing original multimodal sensor data into a unified BEV latent space. This is achieved by transforming visual information into 3D space and aligning visual semantic information with Lidar geometric information in a self-supervised manner using an auto-encoder structure. To reverse this process and reconstruct the multimodal data, a 3D volume representation is constructed from the BEV latent to predict high-resolution images and point clouds using a ray-based rendering technique [39].

The Latent BEV Sequence Diffusion network is designed to predict future frames of images and point clouds. With the help of a multimodal tokenizer, this task is made easier, allowing for accurate future BEV predictions. Specifically, we use a diffusion-based method with a spatial-temporal transformer, which converts sequential noisy BEV latents into clean future BEV predictions based on the action condition.

To summarize, the main contributions of this paper are:

• We introduced a novel multimodal tokenizer that integrates visual semantics and 3D geometry into a unified BEV representation. The quality of the BEV representation is ensured by innovatively applying a rendering-based method to restore multi-sensor data from BEV. The effectiveness of the BEV representation is validated through ablation studies, visualizations, and downstream task experiments.

• We designed a latent diffusion-based world model that enables the synchronous generation of future multi-view images and point clouds. Extensive experiments on the nuScenes and Carla datasets showcase the leading future prediction performance of multimodal data.

2 Related Works

2.1 World Model

This part reviews the application of world models in autonomous driving, focusing on scenario generation as well as planning and control. We group recent world model works into two categories according to their key applications.

(1) Driving Scene Generation. Data collection and annotation for autonomous driving are costly and sometimes risky. In contrast, world models offer another way to produce unlimited, varied driving data thanks to their intrinsically self-supervised learning paradigm. GAIA-1 [12] takes multi-modality inputs collected in the real world and generates diverse driving scenarios from different prompts (e.g., changing weather, scenes, traffic participants, vehicle actions) in an autoregressive manner, which shows its ability to understand the world. ADriver-I [13] combines a multimodal large language model and a video latent diffusion model to predict future scenes and control signals, which significantly improves the interpretability of decision-making and indicates the feasibility of the world model as a foundation model. MUVO [3] integrates LiDAR point clouds in addition to videos to predict future driving scenes as images, point clouds, and 3D occupancy. Further, Copilot4D [42] leverages a discrete diffusion model that operates on BEV tokens to perform 3D point cloud forecasting, and OccWorld [45] adopts a GPT-like generative architecture for 3D semantic occupancy forecasting and motion planning. DriveWorld [27] and UniWorld [26] treat the world model as a 4D scene understanding task used to pre-train for downstream tasks.

(2) Planning and Control. MILE [11] is the pioneering work that adopts a model-based imitation learning approach to jointly learn the dynamics of the future environment and the driving policy. DriveDreamer [33] offers a comprehensive framework that uses 3D structural information such as HD maps and 3D boxes to predict future driving videos and driving actions. Beyond single front-view generation, DriveDreamer-2 [44] further produces multi-view driving videos based on user descriptions. TrafficBots [43] develops a world model for multimodal motion prediction and end-to-end driving by facilitating action prediction from a BEV perspective. Drive-WM [34] generates controllable multi-view videos and applies the world model to safe driving planning, determining the optimal trajectory according to image-based rewards.

2.2 Video Diffusion Model

A world model can be regarded as a sequence-data generation task, which belongs to the realm of video prediction. Many early methods [11, 12] adopt a VAE [17] and auto-regression [6] to generate future predictions. However, VAEs suffer from unsatisfactory generation quality, and auto-regressive methods suffer from cumulative error. Thus, many researchers have turned to diffusion-based future prediction methods [44, 20], which have recently achieved success in video generation and can predict multiple future frames simultaneously. This part mainly reviews methods related to video diffusion models.

The standard video diffusion model [10] takes temporal noise as input and adopts a UNet [29] with temporal attention to obtain denoised videos. However, this method has a high training cost, and its generation quality needs further improvement. Subsequent methods mainly improve along these two directions. To reduce the training cost, LVDM [9] and Open-Sora [18] compress the video into a latent space through schemes such as a VAE or VideoGPT [37], which reduces the size of the video along the spatial and temporal dimensions. To improve generation quality, Stable Video Diffusion [2] proposes a multi-stage training strategy, which adopts image and low-resolution video pre-training to accelerate model convergence and improve generation quality. GenAD [38] introduces a causal mask module into the UNet to predict plausible futures that follow temporal causality. VDT [24] and Sora [4] replace the traditional UNet with a spatial-temporal transformer structure. The strong scaling capability of the transformer enables the model to fit the data better and generate more reasonable videos.

3 Method

In this section, we describe the model structure of BEVWorld. The overall architecture is illustrated in Figure 1. Given a sequence of multi-view image and Lidar observations $\{o_{t-P}, \cdots, o_{t-1}, o_t, o_{t+1}, \cdots, o_{t+N}\}$, where $o_t$ is the current observation, $+/-$ denote future/past observations, and $P$/$N$ is the number of past/future observations, we aim to predict $\{o_{t+1}, \cdots, o_{t+N}\}$ conditioned on $\{o_{t-P}, \cdots, o_{t-1}, o_t\}$. In view of the high computing cost of learning a world model in the original observation space, a multi-modal tokenizer is proposed to compress the multi-view images and Lidar information into a unified BEV space frame by frame. The encoder-decoder structure and the self-supervised reconstruction loss ensure that geometric and semantic information is well preserved in the BEV representation. This design provides a sufficiently concise representation for the world model and other downstream tasks. Our world model is designed as a diffusion-based network to avoid the error accumulation of auto-regressive approaches. It takes the ego motion and $\{x_{t-P}, \cdots, x_{t-1}, x_t\}$, i.e. the BEV representation of $\{o_{t-P}, \cdots, o_{t-1}, o_t\}$, as conditions to learn the noise $\{\epsilon_{t+1}, \cdots, \epsilon_{t+N}\}$ added to $\{x_{t+1}, \cdots, x_{t+N}\}$ during training. At test time, a DDIM [32] scheduler is applied to restore the future BEV tokens from pure noise. Finally, we use the decoder of the multi-modal tokenizer to render the future multi-view images and Lidar frames.

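Figure 1: Overview of our method BEVWorld. BEVWorld consists of the multi-modal tokenizer and the latent BEV sequence diffusion model. The tokenizer first encodes the image and Lidar observations into BEV tokens, then decodes the unified BEV tokens back into reconstructed observations via a NeRF rendering strategy. The latent BEV sequence diffusion model predicts future BEV tokens from the corresponding action conditions with a spatial-temporal transformer. Multi-frame future BEV tokens are obtained in a single inference pass, which avoids the cumulative error of auto-regressive methods.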

3.1 Multi-Modal Tokenizer

Our multi-modal tokenizer contains three parts: a BEV encoder network, a BEV decoder network and a multi-modal rendering network. The structure of the BEV encoder network is illustrated in Figure 2. To make the multi-modal network as homogeneous as possible, we adopt the Swin-Transformer [22] network as the image backbone to extract multi-view image features. For Lidar feature extraction, we first split the point cloud into pillars [19] in the BEV space. Then we use a Swin-Transformer network as the Lidar backbone to extract Lidar BEV features. We fuse the Lidar BEV features and the multi-view image features with a deformable-attention-based transformer [46]. Specifically, we sample $K$ ($K = 4$) points along the height dimension of each pillar and project these points onto the images to sample the corresponding image features. The sampled image features are treated as values and the Lidar BEV features serve as queries in the deformable attention calculation. Since the future prediction task requires low-dimensional inputs, we further compress the fused BEV feature into a low-dimensional ($C' = 4$) BEV feature.
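
The height sampling and projection step can be illustrated with a minimal sketch. It is not the authors' implementation: the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the `ego_to_front_camera` extrinsic and the function names are hypothetical, and the height range of ±4.5 m is borrowed from the BEV range quoted later in the experiments.

```python
def sample_pillar_points(x, y, z_min, z_max, k=4):
    """Return k points spread evenly over the height of the pillar at (x, y)."""
    step = (z_max - z_min) / k
    # Bin centres, so that no sample sits exactly on the pillar boundary.
    return [(x, y, z_min + (i + 0.5) * step) for i in range(k)]


def ego_to_front_camera(point):
    """Hypothetical extrinsic: ego frame (x forward, y left, z up) to a
    forward-facing camera frame (x right, y down, z forward), same origin."""
    x, y, z = point
    return (-y, -z, x)


def project_to_image(point_cam, fx, fy, cx, cy):
    """Pinhole projection; returns (u, v), or None if the point is behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Pixel locations at which image features would be sampled for one pillar.
pillar = sample_pillar_points(10.0, 0.0, z_min=-4.5, z_max=4.5)
pixels = [project_to_image(ego_to_front_camera(p), 1000.0, 1000.0, 800.0, 450.0)
          for p in pillar]
```

In a full model, the image features read at these pixel locations would act as the values of the deformable attention, with the Lidar BEV feature of the pillar as the query.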

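Figure 2: Detailed structure of the BEV encoder. The encoder takes multi-view, multi-modal sensor data as input. The multi-modal information is fused with deformable attention, and the BEV feature is compressed along the channel dimension to be compatible with the diffusion model.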

For the BEV decoder, directly using a decoder to restore the images and Lidar is ambiguous, since the fused BEV feature lacks height information. To address this problem, we first convert the BEV tokens into 3D voxel features through stacked layers of upsampling and Swin blocks. We then use voxelized NeRF-based ray rendering to restore the multi-view images and the Lidar point cloud.

The multi-modal rendering network consists of two components: an image reconstruction network and a Lidar reconstruction network. For the image reconstruction network, we first obtain the ray $r(t) = o + td$, which is shot from the camera center $o$ through the pixel center in direction $d$. Then we uniformly sample a set of points $\{(x_i, y_i, z_i)\}_{i=1}^{N_r}$ along the ray, where $N_r$ ($N_r = 150$) is the total number of points sampled along a ray. Given a sampled point $(x_i, y_i, z_i)$, the corresponding feature $v_i$ is obtained from the voxel feature according to its position. Then, all the sampled features along a ray are aggregated into a pixel-wise feature descriptor (Eq. 1).
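
The sampling and aggregation along one ray can be sketched as follows. Eq. 1 is not reproduced in this text, so the weights below assume the standard NeRF-style alpha compositing form $w_i = \alpha_i \prod_{j<i} (1 - \alpha_j)$, which may differ from the authors' exact definition. The `near` and `far` bounds and all function names are hypothetical.

```python
def sample_along_ray(origin, direction, near, far, n_samples=150):
    """Uniformly sample points r(t) = o + t * d for t in [near, far]."""
    ts = [near + (far - near) * i / (n_samples - 1) for i in range(n_samples)]
    points = [tuple(o + t * d for o, d in zip(origin, direction)) for t in ts]
    return ts, points


def rendering_weights(alphas):
    """Assumed weights: w_i = alpha_i * prod_{j<i} (1 - alpha_j)."""
    weights, transmittance = [], 1.0
    for a in alphas:
        weights.append(a * transmittance)
        transmittance *= 1.0 - a
    return weights


def aggregate(weights, features):
    """Weighted sum of per-sample feature vectors: v(r) = sum_i w_i * v_i."""
    dim = len(features[0])
    return [sum(w * f[c] for w, f in zip(weights, features)) for c in range(dim)]


# Three samples with made-up opacities and 2-channel features.
w = rendering_weights([0.5, 0.5, 1.0])
pixel_feature = aggregate(w, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

In the actual pipeline, the opacities and features would come from interpolating the 3D voxel features at each sampled point rather than from hand-written lists.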

We traverse all pixels and obtain the 2D feature map $V \in \mathbb{R}^{H_f \times W_f \times C_f}$ of the image. The 2D feature map is converted into the RGB image $I_g \in \mathbb{R}^{H \times W \times 3}$ through a CNN decoder. Three common losses are added to improve the quality of the generated images: a perceptual loss [14], a GAN loss [8] and an L1 loss. Our full objective for image reconstruction is:

where $I_t$ is the ground truth of $I_g$, $\phi_j$ represents the $j$-th layer of the pretrained VGG [31] model, and the definition of $L_{\text{gan}}(I_g, I_t)$ can be found in [8].

The perceptual loss uses VGG features to measure the difference between the generated image $I_g$ and the ground-truth image $I_t$. It accounts for differences in high-level features at several layers as well as pixel-level differences, which helps produce visually more realistic images.

The GAN loss $L_{\text{gan}}(I_g, I_t)$ typically involves a discriminator network trained to distinguish generated images from real ones. The generator is trained to minimize this loss so that its outputs can fool the discriminator.

The full image reconstruction objective combines these losses so that the generated images are as visually close to the real images as possible.

For the Lidar reconstruction network, the ray is defined in the spherical coordinate system with inclination $\theta$ and azimuth $\phi$. $\theta$ and $\phi$ are obtained by shooting a ray from the Lidar center to each point of the current Lidar frame. We sample points and obtain the corresponding features in the same way as in image reconstruction. Since Lidar encodes depth information, the expected depth $D_g(r)$ of the sampled points is calculated for Lidar simulation. The depth simulation process and loss function are shown in Eq. 3.

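Depth is central to Lidar reconstruction because it lets the point cloud represent the 3D structure of the scene accurately. Computing the expected depth $D_g(r)$ typically involves reading depth-related information from the voxel features and associating it with the sampled points to simulate the Lidar point cloud.

The loss function may include the following parts:

- Reconstruction loss: measures the difference between the simulated and the observed Lidar point clouds.

- Depth loss: measures the difference between the computed expected depth and the actual depth.

- Regularization loss: may include constraints that encourage a smooth and consistent Lidar point cloud.

The exact form depends on the model and the training strategy. In practice, these terms are weighted and combined according to the needs of the problem.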

where $t_i$ denotes the depth of the $i$-th sampled point from the Lidar center and $D_t(r)$ is the depth ground truth calculated from the Lidar observation.
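
As a rough illustration, the expected depth can be computed as a weighted sum of the sample depths, which matches the description of Lidar rendering in the Figure 3 caption. Eq. 3 is not reproduced in this text, so the L1 form of the loss below is an assumption, and the function names are hypothetical.

```python
def expected_depth(weights, depths):
    """Expected depth along one ray: D_g(r) = sum_i w_i * t_i."""
    return sum(w * t for w, t in zip(weights, depths))


def l1_depth_loss(pred_depths, gt_depths):
    """Mean absolute error between rendered and observed depths (assumed loss form)."""
    return sum(abs(p - g) for p, g in zip(pred_depths, gt_depths)) / len(pred_depths)


# One ray with three samples at depths 1 m, 2 m and 4 m.
d = expected_depth([0.5, 0.25, 0.25], [1.0, 2.0, 4.0])
loss = l1_depth_loss([d, 3.0], [2.5, 3.0])
```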

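The Cartesian coordinates of the point cloud can be calculated as follows.

Suppose a point is given in spherical coordinates by three parameters: the radius $r$, the inclination $\theta$ (measured down from the vertical axis), and the azimuth $\phi$ (measured from a reference direction in the horizontal plane). The Cartesian coordinates $x$, $y$ and $z$ are then:

$$x = r \sin\theta \cos\phi$$

$$y = r \sin\theta \sin\phi$$

$$z = r \cos\theta$$

Here:

- $r$ is the radial distance from the Lidar center to the point.

- $\theta$ is the angle between the point and the positive $z$ axis, i.e. the polar (inclination) angle. The elevation above the horizontal plane is $\pi/2 - \theta$.

- $\phi$ is the horizontal angle of the point in the $xy$ plane, usually called the azimuth.

These formulas assume that the spherical and Cartesian systems share the same origin and that $\theta$ and $\phi$ follow the standard mathematical convention. In practice, the specific geometry of the Lidar sensor and additional coordinate transforms may also need to be taken into account.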
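
The conversion can be written directly in code. This is a minimal sketch under the convention stated above (inclination measured from the positive $z$ axis); the function name is hypothetical.

```python
import math


def spherical_to_cartesian(r, theta, phi):
    """Convert (radius, inclination from +z, azimuth) to Cartesian (x, y, z)."""
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return x, y, z


# A return at 2 m range in the horizontal plane, straight along the x axis.
point = spherical_to_cartesian(2.0, math.pi / 2, 0.0)
```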

Overall, the multi-modal tokenizer is trained end-to-end with the total loss in Eq. 5:

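Figure 3: Left: details of multi-view image rendering. Trilinear interpolation is applied to a series of sampled points along the ray to obtain the weights $w_i$ and features $v_i$. The features $v_i$ are weighted by $w_i$ and summed to obtain the rendered image features, which are concatenated and fed into the decoder for 8× upsampling, producing the multi-view RGB images. Right: details of Lidar rendering. Trilinear interpolation is likewise applied to obtain the weights $w_i$ and depths $t_i$. The depths $t_i$ are weighted by $w_i$ and summed to obtain the final depth of a point. The points are then converted from spherical to Cartesian coordinates to obtain the original Lidar point coordinates.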

3.2 Latent BEV Sequence Diffusion

Most existing world models [42, 12] adopt an autoregressive strategy to obtain longer future predictions, but this approach is easily affected by cumulative errors. Instead, we propose a latent sequence diffusion framework, which takes multiple frames of noisy BEV tokens as input and obtains all future BEV tokens simultaneously.

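The advantages of this approach are:

- Parallel processing: compared with auto-regressive methods, the latent sequence diffusion framework processes multiple time steps in parallel, which improves efficiency.

- Less cumulative error: because the frames are not predicted one after another, errors do not accumulate step by step.

- One-shot generation: the whole future sequence is generated at once, which helps keep the predictions consistent and coherent.

A latent sequence diffusion framework typically involves the following steps:

- Noise injection: noise is introduced into the latent BEV representation.

- Forward diffusion: noise is added progressively, taking the data from its original state to a highly noisy state.

- Reverse diffusion: the model learns the inverse process, recovering the clean state from the noisy one, which here means predicting the future states.

Such frameworks are increasingly popular in generative modeling, especially for tasks that require predicting future scenes, such as autonomous driving and video prediction. They let the model capture long-range dependencies in sequential data while avoiding some limitations of auto-regressive methods.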

The structure of the latent sequence diffusion model is illustrated in Figure 1. In the training process, the low-dimensional BEV tokens $(x_{t-P}, \cdots, x_{t-1}, x_t, x_{t+1}, \cdots, x_{t+N})$ are first obtained from the sensor data. Only the BEV encoder of the multi-modal tokenizer is involved in this process, and the parameters of the multi-modal tokenizer are frozen. To make the BEV token features easier for the world model to learn, we standardize the input BEV features along the channel dimension, obtaining $(\bar{x}_{t-P}, \cdots, \bar{x}_{t-1}, \bar{x}_t, \bar{x}_{t+1}, \cdots, \bar{x}_{t+N})$. The latest history BEV tokens and the current-frame BEV token $(\bar{x}_{t-P}, \cdots, \bar{x}_{t-1}, \bar{x}_t)$ serve as condition tokens, while $(\bar{x}_{t+1}, \cdots, \bar{x}_{t+N})$ are diffused into noisy BEV tokens $(\bar{x}^{\epsilon}_{t+1}, \cdots, \bar{x}^{\epsilon}_{t+N})$ with noise $\{\epsilon^i_{\hat{t}}\}_{i=t+1}^{t+N}$, where $\hat{t}$ is the timestamp of the diffusion process.
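
The two preprocessing steps, standardization and forward diffusion, can be sketched as follows. This is an illustration under assumptions, not the authors' code: the per-channel statistics, the DDPM-style noising rule $\bar{x}^{\epsilon} = \sqrt{\bar{\alpha}}\,\bar{x} + \sqrt{1 - \bar{\alpha}}\,\epsilon$, the linear beta schedule and its values, and all function names are hypothetical choices.

```python
import math
import random


def channel_stats(tokens):
    """Per-channel mean and standard deviation over a list of C-dimensional tokens."""
    n, c = len(tokens), len(tokens[0])
    mean = [sum(t[k] for t in tokens) / n for k in range(c)]
    # Fall back to 1.0 when a channel is constant, to avoid dividing by zero.
    std = [math.sqrt(sum((t[k] - mean[k]) ** 2 for t in tokens) / n) or 1.0
           for k in range(c)]
    return mean, std


def standardize(tokens, mean, std):
    """Standardize every token channel by channel."""
    return [[(t[k] - mean[k]) / std[k] for k in range(len(t))] for t in tokens]


def alpha_bar(step, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta) for an assumed linear beta schedule."""
    prod = 1.0
    for s in range(step + 1):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def add_noise(x, step, rng):
    """Diffuse one token: x_eps = sqrt(a_bar) * x + sqrt(1 - a_bar) * eps."""
    a = alpha_bar(step)
    eps = [rng.gauss(0.0, 1.0) for _ in x]
    x_eps = [math.sqrt(a) * xi + math.sqrt(1.0 - a) * e for xi, e in zip(x, eps)]
    return x_eps, eps
```

Only the future tokens would be passed through `add_noise`; the history and current tokens stay clean and act as conditions.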

The denoising process is carried out by a spatial-temporal transformer containing a sequence of transformer blocks, whose architecture is shown in Figure 4. The input of the spatial-temporal transformer is the concatenation of the condition BEV tokens and the noisy BEV tokens $(\bar{x}_{t-P}, \cdots, \bar{x}_{t-1}, \bar{x}_t, \bar{x}^{\epsilon}_{t+1}, \cdots, \bar{x}^{\epsilon}_{t+N})$. These tokens are modulated with the action tokens $\{a_i\}_{i=T-P}^{T+N}$ of vehicle movement and steering, which together form the inputs to the spatial-temporal transformer. More specifically, the input tokens are first passed to a temporal attention block to enhance temporal smoothness. To avoid temporal confusion, we add a causal mask to the temporal attention. Then, the output of the temporal attention block is sent to a spatial attention block to recover accurate details. The design of the spatial attention block follows the standard transformer block [24]. The action tokens and the diffusion timestamps $\{\hat{t}^d_i\}_{i=T-P}^{T+N}$ are concatenated as the condition $\{c_i\}_{i=T-P}^{T+N}$ of the diffusion model and then sent to AdaLN [28] (Eq. 6) to modulate the token features.

where $\hat{x}$ is the input feature of one transformer block, and $\gamma$ and $\beta$ are the scale and shift derived from the condition $c$.
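
Eq. 6 is not reproduced in this text. A minimal sketch of adaptive layer normalization, assuming the commonly used form $\text{LayerNorm}(\hat{x}) \cdot (1 + \gamma) + \beta$, looks as follows. Here `gamma` and `beta` stand in for the outputs of a small network applied to the condition $c$, which is omitted, and the function names are hypothetical.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize a feature vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def ada_ln(x, gamma, beta):
    """Assumed AdaLN form: LayerNorm(x) * (1 + gamma) + beta."""
    return [n * (1.0 + g) + b for n, g, b in zip(layer_norm(x), gamma, beta)]


# With gamma = beta = 0 the block reduces to plain layer normalization.
plain = ada_ln([1.0, 3.0], [0.0, 0.0], [0.0, 0.0])
modulated = ada_ln([1.0, 3.0], [1.0, 1.0], [0.5, 0.5])
```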

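Within a transformer block, $\hat{x}$ denotes the input features, and $\gamma$ and $\beta$ are the parameters with which the condition $c$ scales and shifts those features. This kind of modulation is usually applied around the self-attention and feed-forward sub-layers so that the model can take the condition into account.

The key points of this process are:

- Temporal attention block: strengthens the smoothness of the sequence over time, with a causal mask to avoid temporal confusion.

- Spatial attention block: captures spatial detail and follows the standard transformer block design.

- Action token modulation: action tokens are combined with the diffusion timestamps to form the condition of the diffusion model, which modulates the input tokens.

- AdaLN: adaptive layer normalization applies the condition to the token features.

With this design, the model accounts for both temporal dynamics and spatial detail while recovering clean future BEV tokens from noisy data, which provides the environment understanding needed for decision-making and planning in autonomous driving.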
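
The effect of the causal mask in temporal attention can be shown with a small sketch: each frame attends only to itself and to earlier frames. The text does not give the authors' attention implementation, so this is a generic masked softmax over a square matrix of attention scores, and the function names are hypothetical.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when frame i may attend to frame j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def causal_attention_weights(scores):
    """Row-wise softmax over attention scores with future frames masked out."""
    n = len(scores)
    mask = causal_mask(n)
    out = []
    for i in range(n):
        allowed = [scores[i][j] for j in range(n) if mask[i][j]]
        m = max(allowed)  # subtract the row maximum for numerical stability
        exps = [math.exp(s - m) for s in allowed]
        total = sum(exps)
        # Allowed frames are the first i + 1 entries; the rest get zero weight.
        out.append([e / total for e in exps] + [0.0] * (n - len(allowed)))
    return out


attn = causal_attention_weights([[0.0] * 3 for _ in range(3)])
```

With uniform scores, the first frame attends only to itself, the second splits its attention over two frames, and the third over all three.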

The output of the spatial-temporal transformer is the noise prediction $\{\epsilon^i_{\hat{t}}(x)\}_{i=1}^{N}$, and the loss is shown in Eq. 7.

The loss quantifies the difference between the predicted noise and the noise that was actually added to the BEV tokens, and it is used to optimize the model parameters during training.
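
Eq. 7 is not reproduced in this text. A common choice for a noise-prediction objective is the mean squared error between predicted and true noise, sketched below as an assumption rather than as the authors' exact loss; the function name is hypothetical.

```python
def noise_mse(pred, target):
    """Mean squared error between predicted and true noise, over frames and channels."""
    total, count = 0.0, 0
    for p_frame, t_frame in zip(pred, target):
        for p, t in zip(p_frame, t_frame):
            total += (p - t) ** 2
            count += 1
    return total / count


# Two future frames with two channels each.
example_loss = noise_mse([[0.0, 0.0], [1.0, 1.0]], [[0.0, 2.0], [1.0, 1.0]])
```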

At test time, the normalized history-frame and current-frame BEV tokens $(\bar{x}_{t-P}, \cdots, \bar{x}_{t-1}, \bar{x}_t)$ and pure noise tokens $(\epsilon_{t+1}, \epsilon_{t+2}, \cdots, \epsilon_{t+N})$ are concatenated as input to the world model. The ego motion tokens $\{a_i\}_{i=T-P}^{T+N}$, spanning from moment $T-P$ to $T+N$, serve as the conditional inputs. We employ the DDIM [32] scheduler to predict the future BEV tokens. The predicted BEV tokens are then denormalized and fed into the BEV decoder and rendering network, yielding the full set of predicted multi-sensor data.
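
The sampling loop can be sketched with the deterministic DDIM update (the $\eta = 0$ case). This is an illustration under assumptions: the linear beta schedule, the timestep subsequence and the `predict_noise` callback are hypothetical stand-ins for the trained spatial-temporal transformer and its scheduler settings, and a single flat token is denoised for brevity.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta) for an assumed linear beta schedule."""
    bars, prod = [], 1.0
    for s in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
        bars.append(prod)
    return bars


def ddim_step(x_t, eps_hat, a_t, a_prev):
    """Deterministic DDIM update from noise level a_t to a_prev."""
    x0 = [(x - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for x, e in zip(x_t, eps_hat)]
    return [math.sqrt(a_prev) * x0_i + math.sqrt(1.0 - a_prev) * e
            for x0_i, e in zip(x0, eps_hat)]


def ddim_sample(predict_noise, size, timesteps, alpha_bars, rng):
    """Start from pure noise and denoise along a decreasing list of timesteps."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for idx, t in enumerate(timesteps):
        a_t = alpha_bars[t]
        # After the last step the sample is treated as noise-free (a_prev = 1).
        a_prev = alpha_bars[timesteps[idx + 1]] if idx + 1 < len(timesteps) else 1.0
        x = ddim_step(x, predict_noise(x, t), a_t, a_prev)
    return x


def denormalize(x, mean, std):
    """Undo the channel-wise standardization before decoding."""
    return [xi * s + m for xi, m, s in zip(x, mean, std)]
```

In the full pipeline, `predict_noise` would also receive the condition tokens and the action tokens, and the denormalized output would be passed to the BEV decoder and rendering network.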

4 Experiments

4.1 Dataset

NuScenes [5] NuScenes is a widely used autonomous driving dataset, which comprises multi-modal data such as multi-view images from 6 cameras and Lidar scans. It includes a total of 700 training videos and 150 validation videos. Each video includes 20 seconds at a frame rate of 12Hz.

Carla [7] The training data is collected in the open-source CARLA simulator at 2Hz, including 8 towns and 14 kinds of weather. We collect 3M frames with four cameras (1600 × 900) and one Lidar (32p) for training, and evaluate on the Carla Town05 benchmark, which is the same setting of [30].

4.2 Multi-modal Tokenizer

In this section, we explore the impact of different design decisions in the proposed multi-modal tokenizer and demonstrate its effectiveness in the downstream tasks. For multi-modal reconstruction visualization results, please refer to Figure 7 and Figure 8.

4.2.1 Ablation Studies

Various input modalities and output modalities. The proposed multi-modal tokenizer supports various choices of input and output modalities. We test the influence of different modalities, and the results are shown in Table 1, where L indicates the Lidar modality, C indicates the multi-view camera modality, and L&C indicates both modalities. The combination of Lidar and cameras achieves the best reconstruction performance, which demonstrates that using multiple modalities generates better BEV features. We find that the PSNR metric is somewhat distorted when comparing ground-truth images and predicted images. This is caused by the averaging nature of the PSNR metric, which does not capture sharpening and blurring well. As shown in Figure 12, although the PSNR with both modalities is slightly lower than with the camera-only modality, the visual quality with both modalities is better, as the FID metric indicates.

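Key points in this passage:

FID: the Fréchet Inception Distance (FID) measures image quality by comparing feature distributions. Here, FID indicates that the multi-modal method is visually better than the camera-only method even though its PSNR is slightly lower.

Limitations of PSNR: the peak signal-to-noise ratio (PSNR) may not reflect image quality accurately, particularly when comparing real images with model predictions.

Chamfer distance: a metric widely used in computer graphics and machine learning, especially for 3D modeling and point cloud processing. It measures the similarity between two point sets and is typically used to compare predicted 3D models or point clouds with real data.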
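
Two of these metrics are simple enough to sketch directly. The PSNR below follows its standard definition from the mean squared error. The Chamfer distance has several conventions (squared or unsquared distances, sums or means), and the text does not say which one the evaluation uses, so the symmetric mean of unsquared nearest-neighbour distances here is an assumption. Function names are hypothetical.

```python
import math


def psnr(img_a, img_b, max_val=255.0):
    """Peak signal-to-noise ratio between two flattened images of equal length."""
    mse = sum((a - b) ** 2 for a, b in zip(img_a, img_b)) / len(img_a)
    return float("inf") if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


def chamfer_distance(pts_a, pts_b):
    """Symmetric Chamfer distance: mean nearest-neighbour distance in both directions."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(pts_a, pts_b) + one_way(pts_b, pts_a)


cd = chamfer_distance([(0.0, 0.0, 0.0)], [(3.0, 4.0, 0.0)])
```

Because PSNR depends only on the mean squared error, a slightly blurred image can score higher than a sharper one with small local misalignments, which is consistent with the mismatch between PSNR and FID noted above.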

Rendering approaches. When converting BEV features into multiple kinds of sensor data, the main challenge lies in the varying positions and orientations of the sensors, as well as the differences in imaging (points and pixels). We compare two types of rendering methods: a) an attention-based method, which implicitly encodes the geometric projection in the model parameters via a global attention mechanism; b) a ray-based sampling method, which explicitly uses the sensor's pose information and imaging geometry. The results of methods (a) and (b) are presented in Table 2. Method (a) suffers a significant performance drop in multi-view reconstruction, indicating that our ray-based sampling approach reduces the difficulty of view transformation and makes training convergence easier to achieve. We therefore adopt the ray-based sampling method for generating multiple sensor data.

4.2.2 Benefit for Downstream Tasks

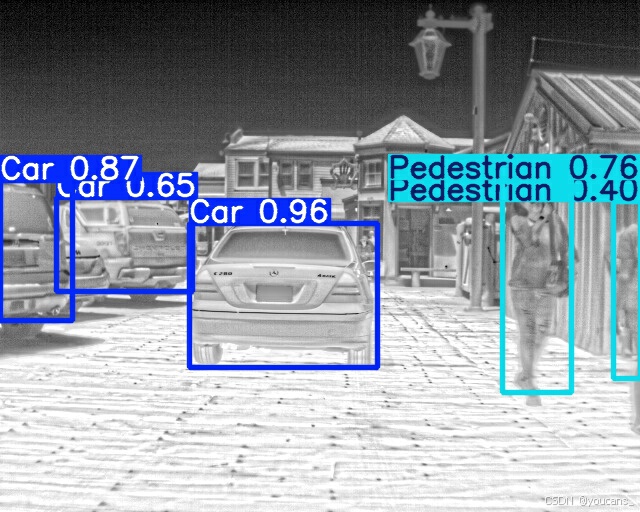

3D Detection. To verify that our method is effective for downstream tasks when used in the pre-training stage, we conduct experiments on the nuScenes 3D detection benchmark. To maximize reuse of the multi-modal tokenizer structure, the encoder in the downstream 3D detection task is kept the same as the tokenizer encoder described in Section 3. We attach a BEV encoder to the tokenizer encoder to further extract BEV features; it is a UNet-style network built from Swin-Transformer [22] layers. For the detection head, we adopt a query-based head [21] with 500 object queries that search the whole BEV feature space, and use the Hungarian algorithm to match the predicted boxes with the ground-truth boxes. We report both single-frame and two-frame results. In the two-frame setting, we warp the BEV features from 0.5 s in the past to the current frame for better velocity estimation. Note that we do not tune the setup specifically for the detection task, in the interest of keeping it simple and clear. For example, the regular detection range on the nuScenes dataset is [-60.0m, -60.0m, -5.0m, 60.0m, 60.0m, 3.0m], while we follow the BEV range of [-80.0m, -80.0m, -4.5m, 80.0m, 80.0m, 4.5m] used in the multi-modal reconstruction task, which results in coarser BEV grids and lower accuracy. Our experimental design also eschews data augmentation and the stacking of multiple point cloud frames. We train for 30 epochs on 8 A100 GPUs with an initial learning rate of 5e−4, decayed with a cosine annealing policy. We mainly focus on the relative performance gap between training from scratch and using our self-supervised tokenizer as the pre-trained model. As shown in Table 3, using our multi-modal tokenizer for pre-training significantly improves performance in both the single-frame and two-frame settings. Specifically, in the two-frame configuration, pre-training with our multi-modal tokenizer yields an 8.4% improvement in NDS and a 13.4% improvement in mAP.

3D 检测。为了验证我们提出的方法在预训练阶段对下游任务的有效性,我们在 nuScenes 3D 检测基准上进行了实验。对于模型结构,为了最大化我们多模态分词器结构的重用,下游3D检测任务的编码器与3.3节中描述的分词器编码器保持相同。我们使用一个附加在分词器编码器上的 BEV 编码器来进一步提取 BEV 特征。我们设计了一个使用 Swin-transformer [22] 层作为 BEV 编码器的 UNet 风格的网络。至于检测头,我们采用了基于查询的头部 [21],其中包含 500 个对象查询,这些查询搜索整个 BEV 特征空间,并使用匈牙利算法匹配预测框和真实框。我们报告了单帧和双帧的结果。在双帧设置中,我们将历史 0.5 秒的 BEV 未来数据映射到当前帧,以更好地估计速度。请注意,我们没有专门为检测任务进行微调,以保持我们设置的简单性和清晰性。例如,在 nuScenes 数据集中,常规的检测范围是 [-60.0m, -60.0m, -5.0m, 60.0m, 60.0m, 3.0m],而我们遵循多模态重建任务中的 BEV 范围 [-80.0m, -80.0m, -4.5m, 80.0m, 80.0m, 4.5m],这将导致更粗糙的 BEV 网格和较低的准确性。同时,我们的实验设计避免了使用数据增强技术和点云帧的分层。我们在 8 个 A100 GPU 上训练了 30 个周期,起始学习率为 5e−4,并采用余弦退火策略进行衰减。我们主要关注从头开始训练与使用我们提出的自监督分词器作为预训练模型之间的相对性能差距。正如表 3 所示,显然,使用我们的多模态分词器作为预训练模型,在单帧和多帧场景中都显著提高了性能。具体来说,在双帧配置中,我们在 NDS 指标上取得了令人印象深刻的 8.4% 的提升,在 mAP 指标上取得了显著的 13.4% 的提升,这归功于我们的多模态分词器预训练方法。
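The effect of the larger BEV range on grid resolution can be illustrated with simple arithmetic. The sketch below assumes a hypothetical 200 × 200 BEV grid (the grid size is not stated in this section, and `bev_cell_size` is an illustrative helper, not part of the authors' code) and compares the cell size along one axis for the two ranges.

```python
def bev_cell_size(axis_min: float, axis_max: float, num_cells: int) -> float:
    """Edge length (in metres) of one BEV cell along a single axis."""
    return (axis_max - axis_min) / num_cells


# Hypothetical grid resolution; the section does not state the real value.
GRID_CELLS = 200

# Regular nuScenes detection range along x (or y): -60 m to 60 m.
regular_cell = bev_cell_size(-60.0, 60.0, GRID_CELLS)
# BEV range inherited from the reconstruction task: -80 m to 80 m.
wide_cell = bev_cell_size(-80.0, 80.0, GRID_CELLS)

print(f"regular range: {regular_cell:.2f} m per cell")
print(f"wide range:    {wide_cell:.2f} m per cell")
```

With the same number of cells, the wider range spreads each cell over a larger area, which is the sense in which the BEV grid becomes coarser.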

Motion Prediction. We further validate our method as a pre-trained model on the motion prediction task. We attach a motion prediction head to the 3D detection head. The motion prediction head is a stack of 6 layers, each consisting of cross-attention (CA) and a feed-forward network (FFN).

For the first layer, the trajectory queries are initialized from the 200 highest-scoring object queries selected from the 3D detection head. In each layer, the trajectory queries first interact with the temporal BEV features in CA and are then updated by the FFN. We reuse the Hungarian matching results from the 3D detection head to pair predicted and ground-truth trajectories. We predict five possible trajectory modes and select the one closest to the ground truth for evaluation. We train for 24 epochs on 8 A100 GPUs with an initial learning rate of 1e-4. Other settings are the same as in the detection configuration. The motion prediction results are reported in Table 3. In the two-frame setting, using the tokenizer for pre-training reduces minADE (minimum average displacement error) by 0.455 meters and minFDE (minimum final displacement error) by 0.749 meters. This confirms the efficacy of self-supervised multi-modal tokenizer pre-training.
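To make the multi-mode evaluation concrete, here is a minimal sketch of minADE and minFDE over a set of predicted trajectory modes. It assumes 2D waypoints and takes the minimum over modes independently for each metric, which is a common convention; the exact protocol used for Table 3 is not spelled out in this section, and the function names are illustrative.

```python
import math

Point = tuple[float, float]
Trajectory = list[Point]


def displacement_errors(pred: Trajectory, gt: Trajectory) -> list[float]:
    """Per-timestep Euclidean distance between prediction and ground truth."""
    return [math.dist(p, g) for p, g in zip(pred, gt)]


def min_ade_fde(modes: list[Trajectory], gt: Trajectory) -> tuple[float, float]:
    """Return (minADE, minFDE) over all predicted modes."""
    ades, fdes = [], []
    for mode in modes:
        errors = displacement_errors(mode, gt)
        ades.append(sum(errors) / len(errors))  # average over timesteps
        fdes.append(errors[-1])                 # error at the final timestep
    return min(ades), min(fdes)


# Toy example with two modes instead of five.
ground_truth = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
mode_a = [(1.0, 0.0), (2.0, 0.0), (3.0, 1.0)]  # good early, off at the end
mode_b = [(1.0, 1.0), (2.0, 1.0), (3.0, 0.0)]  # off early, exact at the end

min_ade, min_fde = min_ade_fde([mode_a, mode_b], ground_truth)
print(f"minADE = {min_ade:.3f} m, minFDE = {min_fde:.3f} m")
```

In this toy case the best ADE comes from `mode_a` and the best FDE from `mode_b`, which shows why the two metrics are reported separately.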

4.3 Latent BEV Sequence Diffusion

In this section, we describe the training details of the latent BEV sequence diffusion model and compare it with related methods.

4.3.1 Training Details

NuScenes. We adopt a three-stage training scheme for future BEV prediction. 1) Next BEV pre-training. The model predicts the next frame conditioned on $\{x_{t-1}, x_t\}$. In practice, we use the sweep data of nuScenes to reduce the difficulty of temporal feature learning. The model is trained for 20,000 iterations with a batch size of 128. 2) Short sequence training. The model predicts the $N$ ($N = 5$) future frames of sweep data. At this stage, the network learns to perform short-term (0.5s) feature reasoning. The model is trained for 20,000 iterations with a batch size of 128. 3) Long sequence fine-tuning. The model predicts the $N$ ($N = 6$) future frames (3s) of key-frame data conditioned on $\{x_{t-2}, x_{t-1}, x_t\}$. The model is trained for 30,000 iterations with a batch size of 128.

The learning rate in all three stages is 5e-4 and the optimizer is AdamW [23]. Note that our method does not use the classifier-free guidance (CFG) strategy during training, for better integration with downstream tasks, as CFG requires an additional network inference, which doubles the computational cost.

Carla. The model is fine-tuned from a nuScenes-pretrained model for 30,000 iterations with a batch size of 32. The initial learning rate is 5e-4 and the optimizer is AdamW [23]. As on nuScenes, the CFG strategy is not used during training.

4.3.2 Lidar Prediction Quality

NuScenes. We compare Lidar prediction quality with existing SOTA methods. We follow the evaluation protocol of [42] and report the Chamfer distance at 1s and 3s in Table 5, where the metric is computed within the region of interest: -70m to +70m along the x- and y-axes and -4.5m to +4.5m along the z-axis. Our method outperforms SPFNet, S2Net and 4D-Occ on the Chamfer metric by a large margin. Compared with Copilot4D [42], our approach uses fewer history condition frames and no CFG schedule, given the large memory cost of multi-modal inputs. BEVWorld requires only 3 past frames for 3-second predictions, whereas Copilot4D uses 6 frames for the same duration. For a fair comparison under the no-CFG setting, our method achieves a Chamfer distance of 0.73 versus 1.40 for Copilot4D.
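As a rough illustration of the metric, the sketch below computes a symmetric Chamfer distance after cropping both point clouds to the region of interest given above. It assumes the mean of unsquared nearest-neighbour distances, averaged over the two directions; the exact variant used in the evaluation protocol of [42] is not stated here and may differ. The brute-force nearest-neighbour search is only suitable for tiny inputs.

```python
import math

Point3D = tuple[float, float, float]


def in_roi(p: Point3D, xy_limit: float = 70.0, z_limit: float = 4.5) -> bool:
    """Keep points with |x|, |y| <= 70 m and |z| <= 4.5 m."""
    x, y, z = p
    return abs(x) <= xy_limit and abs(y) <= xy_limit and abs(z) <= z_limit


def one_sided(src: list[Point3D], dst: list[Point3D]) -> float:
    """Mean distance from each source point to its nearest destination point."""
    return sum(min(math.dist(s, d) for d in dst) for s in src) / len(src)


def chamfer_distance(pred: list[Point3D], gt: list[Point3D]) -> float:
    """Symmetric Chamfer distance restricted to the region of interest."""
    pred = [p for p in pred if in_roi(p)]
    gt = [p for p in gt if in_roi(p)]
    if not pred or not gt:
        raise ValueError("both point clouds need at least one point inside the ROI")
    return 0.5 * (one_sided(pred, gt) + one_sided(gt, pred))


predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (100.0, 0.0, 0.0)]  # last point is outside the ROI
observed = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
cd = chamfer_distance(predicted, observed)
print(f"Chamfer distance: {cd:.2f} m")
```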

Carla. We also conduct experiments on the Carla dataset to verify the scalability of our method. The quantitative results are shown in Table 5. We reproduce the results of 4D-Occ on Carla and compare them with our method, reaching conclusions similar to those on the nuScenes dataset. Our method significantly outperforms 4D-Occ in both the 1-second and 3-second predictions.

4.3.3 Video Generation Quality

NuScenes. We compare video generation quality with previous single-view and multi-view generation methods. Most existing methods use manually labeled conditions, such as layouts or object labels, to improve generation quality. However, relying on annotations reduces the scalability of the world model, making it difficult to train on large amounts of unlabeled data. We therefore do not use manual annotations as model conditions. The results are shown in Table 4. The proposed method achieves the best FID and FVD among methods that do not use manually labeled conditions and is comparable to methods that use extra conditions. Visual results of Lidar and video prediction are shown in Figure 5. Furthermore, generation can be controlled by the action conditions. We set the action tokens to left turn, right turn, speed up and slow down, and the images and Lidar are generated according to these instructions. Visualizations of controllability are shown in Figure 6.

Carla. The generation quality on Carla is similar to that on the nuScenes dataset, which demonstrates the scalability of our method across datasets. The quantitative results of video prediction are shown in Table 4, with an FID of 36.80 at 1s and 43.12 at 3s. Qualitative results of video prediction are shown in the appendix.

4.3.4 Benefit for Planning Tasks

We further validate the effectiveness of the future BEV features predicted by the latent diffusion network on a toy downstream open-loop planning task [41] on the nuScenes dataset. Note that we do not use the future actions of the ego car here, and we adopt $x_0$-parameterization [1] for fast inference. The planning head takes four vectors as input: history trajectory, command, perception, and an optional future BEV vector. The history trajectory vector encodes the ego movement from the last frame to the current frame. The command vector refers to the routing command, such as turning left or right. The perception vector is extracted from the object query in the detection head that has interacted with all detection queries. The future BEV vector is obtained by pooling the BEV features from the fixed diffusion model. With future BEV vectors, the PNC L2 3s metric decreases from 1.030m to 0.977m, which validates that the BEV predicted by the world model benefits planning tasks.

5 Conclusion

We present BEVWorld, an innovative autonomous driving framework that leverages a unified Bird’s Eye View latent space to construct a multi-modal world model. BEVWorld’s self-supervised learning paradigm allows it to efficiently process extensive unlabeled multimodal sensor data, leading to a holistic comprehension of the driving environment. We validate the effectiveness of BEVWorld on downstream autonomous driving tasks. Furthermore, BEVWorld achieves satisfactory results in multi-modal future prediction with a latent diffusion network, as shown by experiments on both real-world (nuScenes) and simulated (Carla) datasets. We hope that the work presented in this paper will stimulate and foster future developments in the domain of world models for autonomous driving.

Appendix

A Qualitative Results

In this section, we present qualitative results to demonstrate the performance of the proposed method.

A.1 Tokenizer Reconstructions

Visualizations of tokenizer reconstructions are shown in Figure 7 and Figure 8. The proposed tokenizer can recover the images and Lidar from the unified BEV features.

A.2 Multi-modal Future Predictions

Diverse generation. The proposed diffusion-based world model can produce high-quality future predictions under different driving conditions, and both dynamic and static objects are generated properly. Qualitative results are illustrated in Figure 9 and Figure 10.

Controllability. We present more visual results of controllability in Figure 11. The generated images and Lidar exhibit a high degree of consistency with the action, which demonstrates that our world model has the potential to serve as a simulator.

PSNR metric. The PSNR metric cannot differentiate between blurring and sharpening. As shown in Figure 12, the image quality of L & C is better than that of C, while the PSNR of L & C is worse than that of C.
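The weakness of PSNR noted above can be illustrated with a toy one-dimensional signal (a hypothetical example, not the images of Figure 12). PSNR is computed as $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, so a washed-out prediction that averages the signal can score higher than a crisp prediction that is slightly misaligned.

```python
import math


def psnr(pred: list[float], target: list[float], max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized signals."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)


# A high-contrast striped target.
target = [0.0, 255.0] * 4
# A fully blurred prediction: every pixel is the mean intensity.
blurry = [127.5] * 8
# A sharp prediction with the right contrast, shifted by one pixel.
sharp_shifted = [255.0, 0.0] * 4

psnr_blurry = psnr(blurry, target)
psnr_sharp = psnr(sharp_shifted, target)
print(f"blurry: {psnr_blurry:.2f} dB, sharp but shifted: {psnr_sharp:.2f} dB")
```

Here the blurry signal receives the higher PSNR even though it contains none of the target's structure, which is why PSNR alone can rank a sharper reconstruction below a blurrier one.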

B Implementation Details

Training details of tokenizer. We train our model on 32 GPUs with a batch size of 1 per GPU. We use the AdamW optimizer with a learning rate of 5e-4, beta1=0.5, and beta2=0.9, following a cosine learning rate decay strategy. The multi-task loss function uses a perceptual loss weight of 0.1, a Lidar loss weight of 1.0, and an RGB L1 reconstruction loss weight of 1.0. For GAN training, we employ a warm-up strategy and introduce the GAN loss after 30,000 iterations. The discriminator loss weight is set to 1.0 and the generator loss weight to 0.1.
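The loss weighting and GAN warm-up described above can be sketched as below. This is a minimal illustration that assumes the weighted terms are simply summed and that the generator's GAN term is switched on from iteration 30,000 onward; the class and function names are hypothetical, and the individual loss values are passed in as plain floats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenizerLossWeights:
    perceptual: float = 0.1
    lidar: float = 1.0
    rgb_l1: float = 1.0
    gan_generator: float = 0.1
    gan_warmup_iters: int = 30_000  # GAN loss is introduced after this many iterations


def generator_total_loss(
    step: int,
    perceptual: float,
    lidar: float,
    rgb_l1: float,
    gan_generator: float,
    weights: TokenizerLossWeights = TokenizerLossWeights(),
) -> float:
    """Weighted sum of the tokenizer losses, with the GAN term gated by warm-up."""
    total = (
        weights.perceptual * perceptual
        + weights.lidar * lidar
        + weights.rgb_l1 * rgb_l1
    )
    # Assumption: the boundary iteration itself already includes the GAN term.
    if step >= weights.gan_warmup_iters:
        total += weights.gan_generator * gan_generator
    return total


before = generator_total_loss(0, 1.0, 1.0, 1.0, 1.0)
after = generator_total_loss(30_000, 1.0, 1.0, 1.0, 1.0)
print(f"before warm-up: {before:.1f}, after warm-up: {after:.1f}")
```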

C Broader Impacts

The concept of a world model holds significant relevance and diverse applications within the realm of autonomous driving. It serves as a versatile tool, functioning as a simulator, a generator of long-tail data, and a pre-trained model for subsequent tasks. Our proposed method introduces a multi-modal BEV world model framework, designed to align seamlessly with the multi-sensor configurations inherent in existing autonomous driving models. Consequently, integrating our approach into current autonomous driving methodologies stands to yield substantial benefits.

D Limitations

It is widely acknowledged that inference with diffusion models typically requires around 50 steps to obtain denoised results, which is slow and computationally expensive. Our method shares this limitation. As an early exploration of constructing a multi-modal world model, our primary emphasis is on generation quality in driving scenes, which we prioritize over computational overhead. Recognizing the importance of efficiency, we see the adoption of one-step diffusion as a crucial direction for future improvement. Regarding the quality of the generated images, we have noticed that dynamic objects are sometimes blurry. Addressing this and further improving their clarity and consistency may require a dedicated module for dynamic objects in the future.