note

Table of Contents

- note

- 1. GRPO

- Reference

1. GRPO

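Paper: DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models (https://arxiv.org/pdf/2402.03300). GRPO (Group Relative Policy Optimization) is also used in DeepSeek-V2. Unlike PPO, GRPO needs no value (critic) model during training. For each question it samples a group of G outputs and uses the group's own reward statistics as the baseline, which reduces the memory and compute cost of RL training.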
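With outcome supervision, DeepSeekMath normalizes the G rewards of one group by subtracting the group mean and dividing by the group standard deviation. Every token of output o_i then receives that normalized value as its advantage. The sketch below is minimal. Two details are assumptions and are not taken from this note: it uses the population standard deviation, and it adds a small `eps` to avoid division by zero. `group_relative_advantages` is a hypothetical helper name.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Group-normalized advantages for the G outputs sampled for one question.

    Each output o_i gets A_i = (r_i - mean(r)) / (std(r) + eps), and the same A_i
    is used for every token of o_i (outcome supervision).
    Assumption: population standard deviation (pstdev) plus a small eps.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

Consider a group in which every output gets the same reward, for example all answers are correct or all are wrong. Every advantage in that group is then zero, so the group contributes no policy-gradient signal. Only groups with mixed rewards move the policy.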

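The GRPO objective, which is maximized during training, is given below. Here $G$ is the group size, $\varepsilon$ the clipping range, $\beta$ the KL-penalty coefficient, $\pi_{ref}$ the reference model, and $\hat{A}_{i,t}$ the advantage of token $t$ in output $o_i$: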

$$
\begin{aligned}
\mathcal{J}_{GRPO}(\theta) = {}& \mathbb{E}\left[q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{old}}(O \mid q)\right] \\
& \frac{1}{G} \sum_{i=1}^{G} \frac{1}{|o_i|} \sum_{t=1}^{|o_i|} \Bigg\{ \min\left[ \frac{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}{\pi_{\theta_{old}}(o_{i,t} \mid q, o_{i,<t})} \hat{A}_{i,t},\ \operatorname{clip}\left( \frac{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}{\pi_{\theta_{old}}(o_{i,t} \mid q, o_{i,<t})}, 1-\varepsilon, 1+\varepsilon \right) \hat{A}_{i,t} \right] - \beta\, \mathbb{D}_{KL}\left[\pi_\theta \,\|\, \pi_{ref}\right] \Bigg\}
\end{aligned}
$$
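The objective can be evaluated term by term from per-token log-probabilities. The sketch below is plain Python and makes several assumptions:

- Log-probs under $\pi_\theta$, $\pi_{\theta_{old}}$, and $\pi_{ref}$ are already available as nested lists, one inner list per output $o_i$.
- The per-token KL term uses the unbiased estimator from the DeepSeekMath paper, $\frac{\pi_{ref}}{\pi_\theta} - \log\frac{\pi_{ref}}{\pi_\theta} - 1$.
- `clip_eps=0.2` and `beta=0.04` are illustrative defaults only.
- `grpo_objective` is a hypothetical helper name.

In practice a framework would compute this on tensors and minimize $-\mathcal{J}$.

```python
import math
from typing import Sequence


def grpo_objective(
    logp: Sequence[Sequence[float]],        # log pi_theta(o_{i,t} | q, o_{i,<t})
    logp_old: Sequence[Sequence[float]],    # log pi_theta_old(o_{i,t} | q, o_{i,<t})
    logp_ref: Sequence[Sequence[float]],    # log pi_ref(o_{i,t} | q, o_{i,<t})
    advantages: Sequence[Sequence[float]],  # A_hat_{i,t}
    clip_eps: float = 0.2,                  # illustrative default (assumption)
    beta: float = 0.04,                     # illustrative default (assumption)
) -> float:
    """Value of J_GRPO for one group of G outputs (to be maximized)."""
    G = len(logp)
    total = 0.0
    for i in range(G):
        n = len(logp[i])  # |o_i|
        seq_sum = 0.0
        for t in range(n):
            # Importance ratio pi_theta / pi_theta_old for token t of output i.
            ratio = math.exp(logp[i][t] - logp_old[i][t])
            a = advantages[i][t]
            clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
            surrogate = min(ratio * a, clipped * a)
            # Per-token KL estimate: pi_ref/pi_theta - log(pi_ref/pi_theta) - 1 (>= 0).
            log_r = logp_ref[i][t] - logp[i][t]
            kl = math.exp(log_r) - log_r - 1.0
            seq_sum += surrogate - beta * kl
        total += seq_sum / n  # 1/|o_i| length normalization
    return total / G          # 1/G average over the group
```

When $\pi_\theta = \pi_{\theta_{old}} = \pi_{ref}$, every ratio is 1 and every KL term is 0. The objective then reduces to the average advantage.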

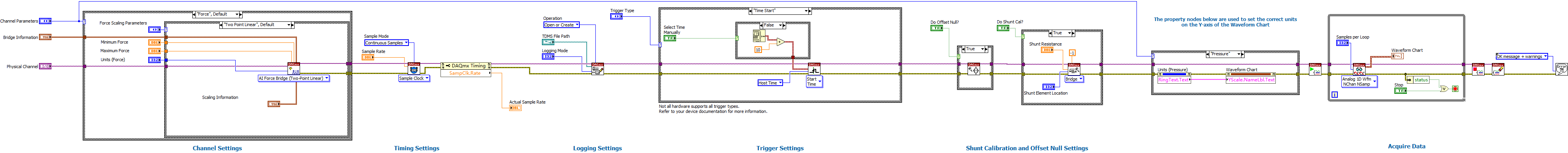

Procedure:
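The core GRPO cycle can be illustrated with a toy. For one question, it samples a group of G outputs, scores them with a rule-based reward, normalizes the rewards within the group, and takes a policy-gradient step. The sketch runs this cycle on a hypothetical one-token policy (a softmax over a few candidate answers). It is an illustration only, under these stated assumptions:

- Each output is a single token ($|o_i| = 1$).
- Only the first inner update per group is taken. There $\pi_\theta = \pi_{\theta_{old}}$, so the ratio is 1, clipping is inactive, and the gradient of the surrogate is $\hat{A}_i \nabla \log \pi_\theta(o_i)$.
- $\beta = 0$, so the KL term is omitted.

`softmax`, `grpo_update`, and `train` are hypothetical helpers, not from any library.

```python
import math
import random
import statistics
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def grpo_update(logits: List[float], samples: List[int], rewards: List[float],
                lr: float = 1.0, eps: float = 1e-8) -> List[float]:
    """One gradient-ascent step on the toy policy for a single sampled group.

    Assumptions: |o_i| = 1, first inner iteration (ratio = 1, no clipping), beta = 0.
    """
    # Group-relative advantages (population std + eps is an assumption).
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    advantages = [(r - mean) / (std + eps) for r in rewards]
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for a, adv in zip(samples, advantages):
        # d log softmax(z)[a] / d z_k = 1[k == a] - p_k
        for k, p in enumerate(probs):
            grad[k] += adv * ((1.0 if k == a else 0.0) - p)
    G = len(samples)
    return [z + lr * g / G for z, g in zip(logits, grad)]


def train(num_answers: int = 3, correct: int = 0, G: int = 8,
          steps: int = 50, lr: float = 0.5, seed: int = 0) -> List[float]:
    """Hypothetical toy loop: sample a group, score it, update; returns final probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * num_answers
    for _ in range(steps):
        probs = softmax(logits)
        samples = rng.choices(range(num_answers), weights=probs, k=G)
        # Rule-based outcome reward: 1 for the correct answer, 0 otherwise.
        rewards = [1.0 if s == correct else 0.0 for s in samples]
        logits = grpo_update(logits, samples, rewards, lr=lr)
    return softmax(logits)
```

Take `grpo_update([0.0, 0.0, 0.0], [0, 1, 0, 2], [1.0, 0.0, 1.0, 0.0])`. It raises the logit of answer 0 and lowers the other two, because the group's correct samples get positive advantages and the wrong ones get negative advantages. Note what the toy leaves out: the full algorithm in the paper also performs several inner updates per group (where clipping becomes active), includes the KL penalty, and periodically resets the reference model.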

Reference

https://github.com/huggingface/alignment-handbook

https://github.com/OpenRLHF/OpenRLHF

https://github.com/hiyouga/LLaMA-Factory

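AI LLM Paper Reading: DeepSeek Math Reading Notes on the GRPO Algorithm

Speed-Reading DeepSeek V2 (Part 3): Understanding GRPO (DeepSeekMath and DeepSeek Coder)

Interpreting the RL Strategy in DeepSeekMath: GRPO, Improving PPO to Strengthen Reasoning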