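A fully connected neural network, also known as a multilayer perceptron (MLP), is the most basic neural network in deep learning. It consists mainly of an input layer, one or more hidden layers, and an output layer. This post implements a general-purpose MLP network with the following features:

- It builds an MLP from the number of input features, the number of classes, and the number of neurons in each hidden layer;

- The hidden-layer activation function can be specified (default: F.relu);

- The output-layer activation function can be specified (by default, no activation for regression and F.softmax for classification).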
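
How the layer widths chain together can be sketched in plain Python before any PyTorch is involved. The helpers below, `layer_shapes` and `parameter_count`, are hypothetical names introduced only for this illustration; they are not part of the implementation in this post. They list the `(in_features, out_features)` pair each linear layer would need and count the resulting weights and biases. The sizes used are the ones from the spam example later in the post.

```python
from typing import List, Tuple


def layer_shapes(feature_num: int, class_num: int, *hidden_nums: int) -> List[Tuple[int, int]]:
    """Return (in_features, out_features) for every linear layer (illustrative helper)."""
    shapes = []
    input_num = feature_num
    for hidden_num in hidden_nums:
        shapes.append((input_num, hidden_num))
        input_num = hidden_num  # the next layer consumes this layer's output
    shapes.append((input_num, class_num))  # output layer
    return shapes


def parameter_count(shapes: List[Tuple[int, int]]) -> int:
    """Weights (in * out) plus biases (out) summed over all layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in shapes)


shapes = layer_shapes(57, 2, 30, 10)
print(shapes)                   # [(57, 30), (30, 10), (10, 2)]
print(parameter_count(shapes))  # 2072
```

With no hidden sizes at all, the same rule degenerates to a single linear layer from the inputs straight to the outputs.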

The code is as follows:

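    from typing import Callable, Optional

    import numpy as np
    from sklearn.preprocessing import StandardScaler, MinMaxScaler
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.optim import SGD, Adam

    from process import classify, regress
    # Code for process: https://blog.csdn.net/moyao_miao/article/details/141466047


    # https://blog.csdn.net/moyao_miao/article/details/141497342
    class MLP(nn.Module):
        """General-purpose MLP network."""

        def __init__(self, feature_num: int, class_num: int, *hidden_nums: int,
                     fc_activation: Callable[[torch.Tensor], torch.Tensor] = F.relu,
                     output_activation: Optional[Callable[[torch.Tensor], torch.Tensor]] = None):
            """
            Initialize the MLP network.

            :param feature_num: number of input features
            :param class_num: number of output classes
            :param hidden_nums: number of neurons in each hidden layer
            :param fc_activation: hidden-layer activation function, F.relu by default
            :param output_activation: output-layer activation function; by default
                none for regression and F.softmax for classification
            """
            super().__init__()
            self.feature_num = feature_num
            self.class_num = class_num
            self.hidden_nums = hidden_nums
            self.fc_activation = fc_activation
            self.output_activation = output_activation
            input_num = feature_num
            # Define the hidden layers. Each layer is registered under the name
            # fc{i} so that its parameters are tracked by the module;
            # self.add_module('fc' + str(i), ...) is the public-API way to do the same.
            for i, hidden_num in enumerate(hidden_nums):
                self.__dict__['_modules']['fc' + str(i)] = nn.Linear(input_num, hidden_num)
                input_num = hidden_num
            self.output = nn.Linear(input_num, class_num)

        def forward(self, x):
            # Define the forward pass of the network
            for i in range(len(self.hidden_nums)):
                x = self.fc_activation(self.__dict__['_modules']['fc' + str(i)](x))
            if self.output_activation is not None:
                x = self.output_activation(self.output(x))
            else:
                # Regression (class_num == 1): drop the trailing dimension.
                # Classification: convert the outputs to probabilities.
                x = self.output(x)[..., 0] if self.class_num == 1 else F.softmax(self.output(x), dim=-1)
            return x

For a detailed explanation of how the hidden layers are defined, see the CSDN post "【求助帖(已解决)】用PyTorch搭建MLP网络时遇到奇怪的问题" (Help request, solved: a strange problem when building an MLP network with PyTorch).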
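
The output rule in `forward` can be illustrated without PyTorch. The sketch below is a plain-Python stand-in for a single sample; the names `softmax` and `output_head` are hypothetical and exist only for this illustration. An explicit `output_activation` takes priority. Otherwise a single output unit is returned as a bare number (regression), and several output units are turned into probabilities with softmax (classification).

```python
import math
from typing import Callable, List, Optional, Union


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over a list of raw scores."""
    shift = max(values)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def output_head(
    raw: List[float],
    output_activation: Optional[Callable[[List[float]], List[float]]] = None,
) -> Union[float, List[float]]:
    """Mimic the branching at the end of forward() for one sample (illustrative only)."""
    if output_activation is not None:
        return output_activation(raw)  # user-supplied activation wins
    if len(raw) == 1:
        return raw[0]                  # regression: unwrap the single output
    return softmax(raw)                # classification: probabilities


print(output_head([3.2]))        # 3.2
print(output_head([1.0, 1.0]))   # [0.5, 0.5]
```

The real model does the same thing on whole batches: `[..., 0]` removes the last dimension for the single-output case, and `dim=-1` applies softmax across the class dimension.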

Here are two examples to test how it works:

I. Spam Email Classification

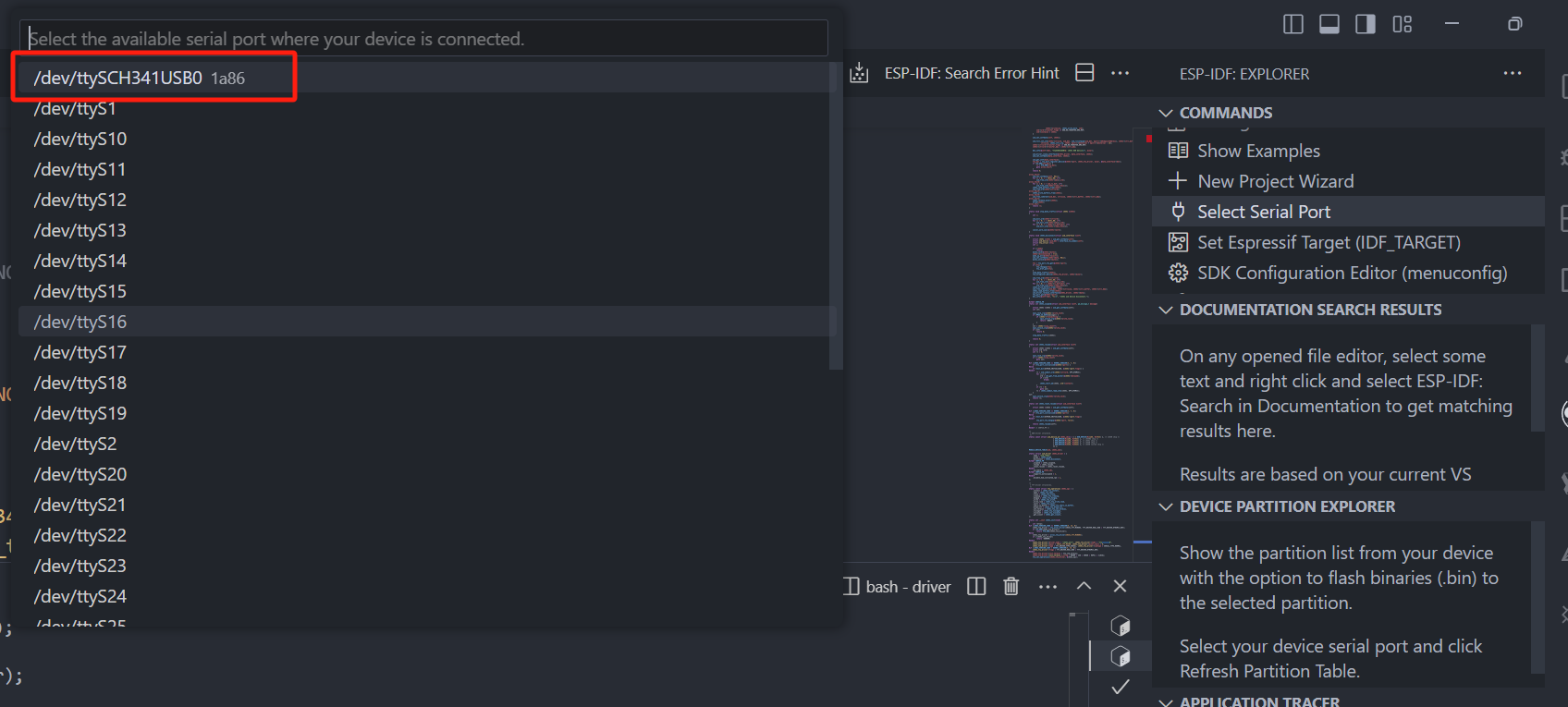

from ucimlrepo import fetch_ucirepospambase = fetch_ucirepo(id=94)X = np.array(spambase.data.features)y = np.array(spambase.data.targets.iloc[:, 0])model = MLP(57, 2, 30, 10)optimizer = Adam(model.parameters(), lr=0.01)criterion = nn.CrossEntropyLoss()device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')classify((X, y),model,optimizer,criterion,scaler=MinMaxScaler(feature_range=(0, 1)),batch_size=64,epochs=10,device=device,)分类效果:
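
One detail of this setup is worth knowing. `nn.CrossEntropyLoss` expects raw logits and applies log-softmax itself, while the `MLP` above already returns softmax probabilities when `class_num` is greater than 1, so softmax is effectively applied twice during training. Training still works, but the loss values are compressed. The plain-Python sketch below shows the effect on one sample; the helper names are hypothetical, and the loss formula is written by hand rather than calling PyTorch.

```python
import math
from typing import List


def softmax(values: List[float]) -> List[float]:
    """Numerically stable softmax over a list of raw scores."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(inputs: List[float], target: int) -> float:
    """-log(softmax(inputs)[target]): the per-sample cross entropy on logits."""
    return -math.log(softmax(inputs)[target])


logits = [4.0, -4.0]  # a confident, correct prediction for class 0
target = 0

# Loss when the criterion receives logits, as it expects.
loss_from_logits = cross_entropy(logits, target)

# Loss when the criterion receives probabilities (softmax applied twice).
loss_from_probs = cross_entropy(softmax(logits), target)

# With two classes, probabilities differ by at most 1, so the doubled
# softmax can never push the loss below log(1 + e^-1).
two_class_floor = math.log(1.0 + math.exp(-1.0))

print(f"logits: {loss_from_logits:.4f}")  # roughly 0.0003
print(f"probs:  {loss_from_probs:.4f}")   # roughly 0.313
print(f"floor:  {two_class_floor:.4f}")   # roughly 0.313
```

If logits are preferred during training, passing `output_activation=lambda x: x` makes `forward` return `self.output(x)` unchanged. Whether `classify` relies on receiving probabilities is not shown in this post, so that would need checking first.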

II. California Housing Price Prediction

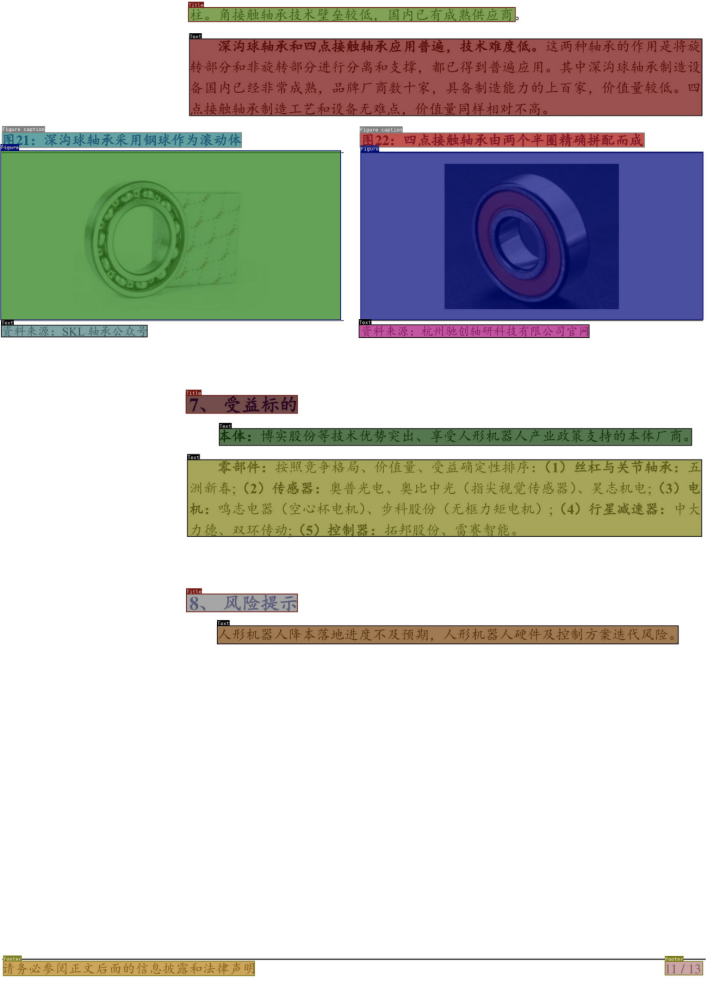

from sklearn.datasets import fetch_california_housinghouse_data = fetch_california_housing()model = MLP(8, 1, 100, 100, 50)optimizer = SGD(model.parameters(), lr=0.01)criterion = nn.MSELoss()device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')regress((house_data.data, house_data.target),model,optimizer,criterion,scaler=StandardScaler(),batch_size=64,epochs=30,device=device,)预测效果:
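
Both examples pass a scikit-learn scaler to the training helper. How `classify` and `regress` apply it is defined in the `process` module and is not shown here. The sketch below only illustrates, in plain Python on a single feature column, the per-feature transforms that `StandardScaler()` and `MinMaxScaler(feature_range=(0, 1))` compute. The function names are hypothetical stand-ins, not scikit-learn APIs, and the sketch assumes the column is not constant.

```python
import math
from typing import List, Tuple


def standardize(column: List[float]) -> List[float]:
    """z = (x - mean) / std, with the population standard deviation (ddof = 0)."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((v - mean) ** 2 for v in column) / len(column))
    return [(v - mean) / std for v in column]


def min_max_scale(column: List[float],
                  feature_range: Tuple[float, float] = (0.0, 1.0)) -> List[float]:
    """Map the column linearly so its min and max land on feature_range."""
    low, high = feature_range
    c_min, c_max = min(column), max(column)
    return [low + (v - c_min) / (c_max - c_min) * (high - low) for v in column]


column = [1.0, 2.0, 3.0]
print(min_max_scale(column))  # [0.0, 0.5, 1.0]
print(standardize(column))    # zero mean, unit variance
```

Min-max scaling keeps every feature inside a fixed interval, while standardization centers each feature and gives it unit variance without bounding it.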