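1. Introduction to openEuler

openEuler is an open-source project incubated and operated by the OpenAtom Foundation.

As an operating-system distribution platform, openEuler publishes an LTS release every two years, giving enterprise users a secure, stable, and reliable operating system. For details, see the official site: https://www.openeuler.org/zh/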

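2. Kubernetes 1.24 release and changes

2.1 Kubernetes 1.24 release

Kubernetes 1.24 was officially released on May 3, 2022. As the de facto standard for container orchestration, Kubernetes keeps maturing: 12 features graduated to stable in this release, and it also introduced a number of practical features, such as batched rolling updates for StatefulSets and a new NetworkPolicyStatus field on NetworkPolicy to help with troubleshooting.

2.2 Kubernetes 1.24 changes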

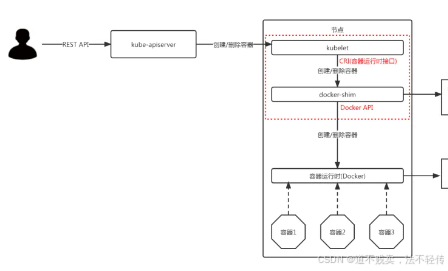

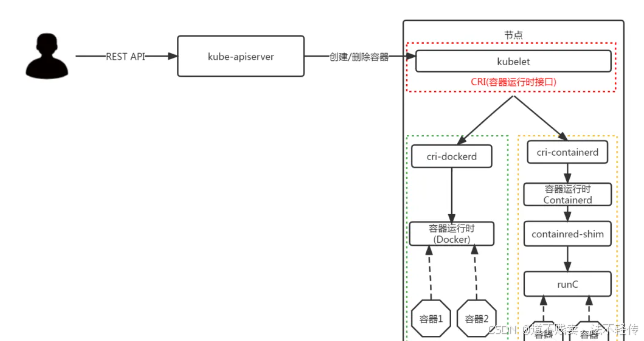

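Kubernetes has officially removed dockershim, which finally closes the long-running "dockershim deprecation" discussion.

For a clear picture of how Docker and Kubernetes relate, see this article: https://i4t.com/5435.html

Before Kubernetes 1.24:

From Kubernetes 1.24 onward:

To keep using Docker with Kubernetes, you have to install the cri-dockerd component yourself; otherwise, use containerd.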
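The runtime you choose determines the CRI socket that kubeadm is pointed at later in this guide. A minimal sketch of that mapping follows. It assumes the default socket paths of cri-dockerd and containerd, and `cri_socket_for` is a hypothetical helper name, not part of any tool.

```shell
#!/usr/bin/env bash
# cri_socket_for: hypothetical helper that maps a runtime choice to the
# value passed to kubeadm's --cri-socket flag.
# Assumption: both runtimes listen on their default socket paths.
cri_socket_for() {
  case "$1" in
    docker)     echo "unix:///var/run/cri-dockerd.sock" ;;
    containerd) echo "unix:///var/run/containerd/containerd.sock" ;;
    *)          echo "unknown runtime: $1" >&2; return 1 ;;
  esac
}

# Print the socket for each supported choice.
cri_socket_for docker
cri_socket_for containerd
```

If a node has a non-default socket location, pass that path instead of relying on the mapping.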

3. Deploying a Kubernetes 1.24 cluster on openEuler

3.1 Preparing the environment for the Kubernetes 1.24 cluster

3.1.1 Host operating system

| No. | Operating system and version | Notes |
|---|---|---|
| 1 | openEuler 22.03 (LTS-SP1) | |

3.1.2 Host hardware configuration

| CPU | Memory | Disk | Role | IP address | Hostname |
|---|---|---|---|---|---|
| 4C | 8G | 100GB | master | 172.16.200.90 | k8s-master01 |
| 8C | 16G | 100GB | node | 172.16.200.91 | k8s-node1 |
| 8C | 16G | 100GB | node | 172.16.200.92 | k8s-node2 |

3.1.3 Host configuration

3.1.3.1 Hostname configuration

This deployment uses three hosts: one master node named k8s-master01 and two worker nodes named k8s-node1 and k8s-node2.

Master node:

# hostnamectl set-hostname k8s-master01 && bash

node1:

# hostnamectl set-hostname k8s-node1 && bash

node2:

# hostnamectl set-hostname k8s-node2 && bash

3.1.3.2 Hostname and IP address resolution

Configure this on every host in the cluster.

cat >> /etc/hosts <<EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.200.90 k8s-master01

172.16.200.91 k8s-node1

172.16.200.92 k8s-node2

EOF
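Because `cat >>` appends every time it runs, repeating the step above duplicates the entries. The sketch below shows one way to make the step repeatable. `ensure_host_entry` is a hypothetical helper, and the demo writes to a scratch file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# ensure_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to FILE only when NAME is not
# already present as a whole word on a non-comment line.
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file; on a real node pass /etc/hosts instead.
hosts_file="$(mktemp)"
while read -r ip name; do
  ensure_host_entry "$hosts_file" "$ip" "$name"
done <<'EOF'
172.16.200.90 k8s-master01
172.16.200.91 k8s-node1
172.16.200.92 k8s-node2
EOF

# A second call for an existing name adds nothing.
ensure_host_entry "$hosts_file" 172.16.200.90 k8s-master01
cat "$hosts_file"
```

The whole-word match keeps k8s-node1 from being mistaken for a longer name such as k8s-node10.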

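3.1.3.3 Disable swap

The permanent change only takes effect after a reboot. To avoid rebooting, also turn swap off for the running system with swapoff -a.

Disable temporarily:

# swapoff -a

Disable permanently (takes effect after a reboot):

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

3.1.3.4 Firewall configuration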

Required on all hosts.

Disable the firewalld service and confirm that it is stopped:

systemctl disable firewalld
systemctl stop firewalld
firewall-cmd --state
not running

3.1.3.5 SELinux configuration

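Required on all hosts. Changes to the SELinux configuration file take effect after a reboot.

Disable temporarily:

setenforce 0

Disable permanently:

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

3.1.3.6 Time synchronization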

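Required on all hosts. A minimal installation does not include ntpdate, so install it first.

# crontab -l
0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com

Set the time zone to Asia/Shanghai (UTC+8):

# timedatectl set-timezone Asia/Shanghai

3.1.3.7 Kernel forwarding and bridge filtering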

Required on all hosts.

Enable kernel IP forwarding:

sed -i 's/net.ipv4.ip_forward=0/net.ipv4.ip_forward=1/g' /etc/sysctl.conf

Add a sysctl configuration file for bridge filtering:

cat <<EOF >/etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
EOF

Configure the overlay and br_netfilter modules to load at boot:

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

Load the br_netfilter and overlay modules now:

modprobe br_netfilter
modprobe overlay

Check that br_netfilter is loaded:

lsmod | grep br_netfilter
br_netfilter 22256 0

Apply the default configuration file:

sysctl -p

Apply the newly added configuration file:

sysctl -p /etc/sysctl.d/k8s.conf

3.1.3.8 Install ipset and ipvsadm

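Required on all hosts.

Install ipset and ipvsadm:

yum -y install ipset ipvsadm

Configure how the IPVS modules are loaded by listing the required modules in a script:

cat > /etc/sysconfig/modules/ipvs.module <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_sh
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- nf_conntrack
EOF

Make the script executable and run it:

chmod 755 /etc/sysconfig/modules/ipvs.module && /etc/sysconfig/modules/ipvs.module

Check that the modules are loaded (on current kernels the connection-tracking module is named nf_conntrack, not nf_conntrack_ipv4):

# lsmod | grep -e ip_vs -e nf_conntrack

kube-proxy uses iptables mode by default. If the cluster is already running in iptables mode after deployment, it can be switched to ipvs mode as follows.

1. On the master node, edit the kube-proxy ConfigMap:

# kubectl edit cm kube-proxy -n kube-system
...
kind: KubeProxyConfiguration
metricsBindAddress: ""
mode: "ipvs" # change this to ipvs; it is empty by default
nodePortAddresses: null
...

2. List the current kube-proxy pods: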

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-84c476996d-8kz5d 1/1 Running 0 62m

calico-node-8tb29 1/1 Running 0 62m

calico-node-9dkpd 1/1 Running 0 62m

calico-node-wnlgv 1/1 Running 0 62m

coredns-74586cf9b6-jgtlq 1/1 Running 0 84m

coredns-74586cf9b6-nvkz4 1/1 Running 0 84m

etcd-k8s-master01 1/1 Running 2 84m

kube-apiserver-k8s-master01 1/1 Running 0 84m

kube-controller-manager-k8s-master01 1/1 Running 1 (69m ago) 84m

kube-proxy-l2vfq 1/1 Running 0 45m

kube-proxy-v4drh 1/1 Running 0 45m

kube-proxy-xvtnh 1/1 Running 0 45m

kube-scheduler-k8s-master01 1/1 Running 1 (69m ago) 84m

3. Delete the current kube-proxy pods (use the pod names from your own listing):

# kubectl delete pod kube-proxy-f7rcx kube-proxy-ggchx kube-proxy-hbt94 -n kube-system
pod "kube-proxy-f7rcx" deleted
pod "kube-proxy-ggchx" deleted
pod "kube-proxy-hbt94" deleted

4. Check the kube-proxy pods that were recreated automatically:

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-74586cf9b6-5bfk7 1/1 Running 0 77m

coredns-74586cf9b6-d29mj 1/1 Running 0 77m

etcd-master-140 1/1 Running 0 78m

kube-apiserver-master-140 1/1 Running 0 78m

kube-controller-manager-master-140 1/1 Running 0 78m

kube-proxy-7859q 1/1 Running 0 44s

kube-proxy-l4gqx 1/1 Running 0 43s

kube-proxy-nnjr2 1/1 Running 0 43s

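kube-scheduler-master-140 1/1 Running 0 78m

3.2 Docker environment preparation (read first: the CSDN blog post "Installing Docker on Huawei openEuler")

3.2.1 Preparing for Docker installation

Prepare a dedicated disk and mount /var/lib/docker on it. Required on all hosts.

Format the disk:

$ mkfs.ext4 /dev/sdb

Create the Docker working directory:

$ mkdir /var/lib/docker

Add the mount to /etc/fstab so that it persists:

$ echo "/dev/sdb /var/lib/docker ext4 defaults 0 0" >> /etc/fstab

Apply the fstab entry:

$ mount -a

Check the mount:

$ df -h /dev/sdb

3.2.2 Option 1: Docker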

Install Docker from the static binaries. Download and unpack the archive, then copy the binaries into place:

wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.9.tgz
tar -xf docker-20.10.9.tgz
cp docker/* /usr/bin
which docker

3.2.3 Write the docker.service file

To use a custom Docker working directory, set ExecStart=/usr/bin/dockerd --data-root=/home/application/docker (the older --graph flag is deprecated in favor of --data-root).

vim /etc/systemd/system/docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

Add execute permission:

chmod +x /etc/systemd/system/docker.service

Create the docker group:

groupadd docker

3.2.4 Configure a Docker registry mirror and change the cgroup driver

/etc/docker/daemon.json does not exist by default and has to be created.

Write the following content to /etc/docker/daemon.json:

tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

Start Docker:

systemctl enable docker && systemctl restart docker

Check the Docker version:

[root@k8s-master ~]# docker version
Client:
 Version: 20.10.9
 API version: 1.41
 Go version: go1.16.8
 Git commit: c2ea9bc
 Built: Mon Oct 4 16:03:22 2021
 OS/Arch: linux/amd64
 Context: default
 Experimental: true

Server: Docker Engine - Community
 Engine:
  Version: 20.10.9
  API version: 1.41 (minimum version 1.12)
  Go version: go1.16.8
  Git commit: 79ea9d3
  Built: Mon Oct 4 16:07:30 2021
  OS/Arch: linux/amd64
  Experimental: false
 containerd:
  Version: v1.4.11
  GitCommit: 5b46e404f6b9f661a205e28d59c982d3634148f8
 runc:
  Version: 1.0.2
  GitCommit: v1.0.2-0-g52b36a2d
 docker-init:
  Version: 0.19.0
  GitCommit: de40ad0

3.2.5 Option 2: containerd

1. To use containerd as the container runtime, download the containerd bundle:

# wget https://github.com/containerd/containerd/releases/download/v1.6.6/cri-containerd-cni-1.6.6-linux-amd64.tar.gz

Extract it to /, because the archive already contains the full directory layout:

# tar zxvf cri-containerd-cni-1.6.6-linux-amd64.tar.gz -C /

2. Create the configuration directory:

# mkdir /etc/containerd

3. Generate the default configuration file:

# containerd config default > /etc/containerd/config.toml

4. Enable the systemd cgroup driver:

# vim /etc/containerd/config.toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
...
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
SystemdCgroup = true

5. Change the sandbox (pause) image address:

# vim /etc/containerd/config.toml
[plugins."io.containerd.grpc.v1.cri"]
sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"

6. Replace runc. The runc binary shipped in cri-containerd-cni-1.6.6-linux-amd64.tar.gz is faulty, so this step is essential.

# wget https://github.com/opencontainers/runc/releases/download/v1.1.3/runc.amd64

# mv runc.amd64 /usr/local/sbin/runc

mv: overwrite '/usr/local/sbin/runc'? y

# chmod +x /usr/local/sbin/runc

7. Start the containerd service:

# systemctl start containerd

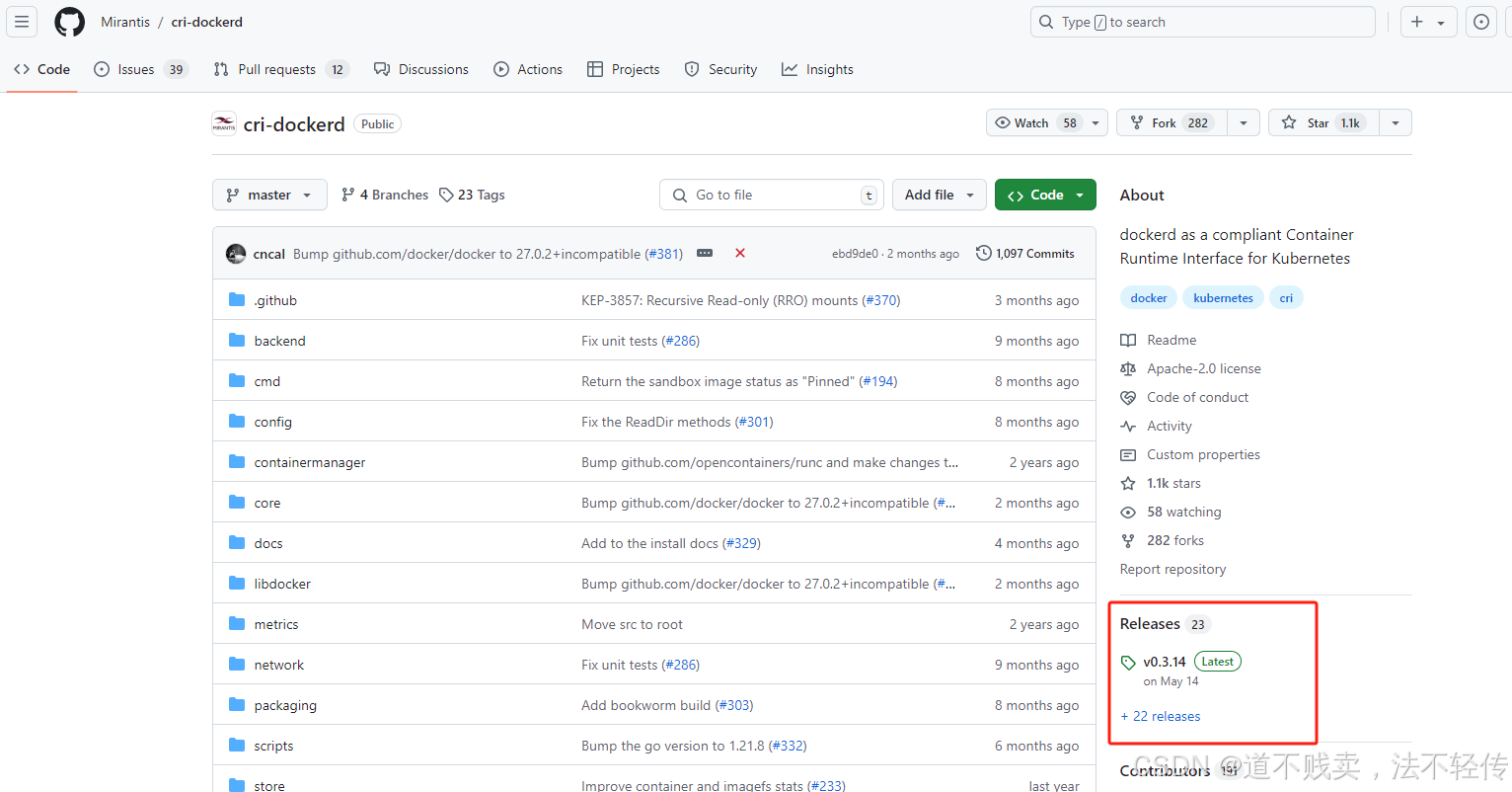

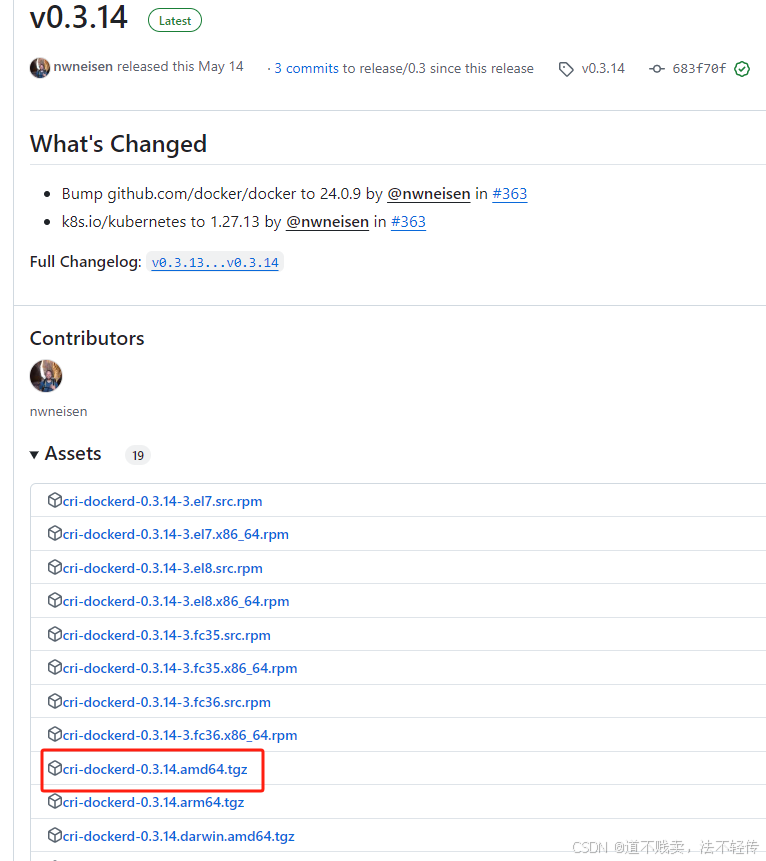

# systemctl enable containerd

3.2.6 cri-dockerd

Project page: https://github.com/Mirantis/cri-dockerd

Install cri-dockerd on all nodes.

Download and install:

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.3/cri-dockerd-0.3.3.amd64.tgz
tar -xf cri-dockerd-0.3.3.amd64.tgz
cp cri-dockerd/cri-dockerd /usr/bin/
chmod +x /usr/bin/cri-dockerd

Create the service unit file:

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service
[Unit]
Description=CRI Interface for Docker Application Container Engine
Documentation=https://docs.mirantis.com
After=network-online.target firewalld.service docker.service
Wants=network-online.target
Requires=cri-docker.socket

[Service]
Type=notify
ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
EOF

Create the socket unit file:

cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket
[Unit]
Description=CRI Docker Socket for the API
PartOf=cri-docker.service

[Socket]
ListenStream=%t/cri-dockerd.sock
SocketMode=0660
SocketUser=root
SocketGroup=docker

[Install]
WantedBy=sockets.target
EOF

Start cri-docker:

systemctl daemon-reload
systemctl start cri-docker
systemctl enable cri-docker
systemctl is-active cri-docker

3.3 Kubernetes 1.24.2 cluster deployment

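3.3.1 Cluster software and versions

| | kubeadm | kubelet | kubectl |
|---|---|---|---|
| Version | 1.24.2 | 1.24.2 | 1.24.2 |
| Installed on | All cluster hosts | All cluster hosts | All cluster hosts |
| Purpose | Initializes and manages the cluster | Receives instructions from the api-server and manages the pod lifecycle | Command-line tool for managing cluster applications |

3.3.2 Kubernetes YUM repository

3.3.2.1 Alibaba Cloud YUM repository (for hosts in mainland China)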

cat >/etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum makecache

3.3.3 Install the cluster software

Install on all nodes.

List all available versions:

yum list kubeadm kubelet kubectl --showduplicates | sort -r

Install the pinned versions:

yum install kubelet-1.24.2 kubeadm-1.24.2 kubectl-1.24.2

Check the version after installation:

kubeadm version
kubeadm version: &version.Info{Major:"1", Minor:"24", GitVersion:"v1.24.2", GitCommit:"f66044f4361b9f1f96f0053dd46cb7dce5e990a8", GitTreeState:"clean", BuildDate:"2022-06-15T14:20:54Z", GoVersion:"go1.18.3", Compiler:"gc", Platform:"linux/amd64"}

Enable kubelet at boot. No configuration file has been generated yet, so kubelet only starts successfully once the cluster has been initialized.

systemctl enable kubelet --now

At this point kubelet reports activating rather than active:

systemctl is-active kubelet

3.3.4 Configure kubelet

To keep the cgroup driver used by kubelet consistent with the one used by Docker, it is recommended to set the following file content.

cat <<EOF > /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
EOF

3.3.5 Initialize the cluster

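Run this only on the master node (k8s-master01):

kubeadm init \
--apiserver-advertise-address=172.16.200.90 \
--image-repository=registry.aliyuncs.com/google_containers \
--kubernetes-version=1.24.2 \
--pod-network-cidr=10.244.0.0/16 \
--service-cidr=10.96.0.0/12 \
--cri-socket unix:///var/run/cri-dockerd.sock

Sample initialization output (it appears to have been captured in a different environment, so the version, hostname, and IP address differ from the values used above):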

I0604 10:03:37.133902 2673 initconfiguration.go:255] loading configuration from "init.default.yaml"

I0604 10:03:37.136354 2673 kubelet.go:214] the value of KubeletConfiguration.cgroupDriver is empty; setting it to "systemd"

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.1

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.24.1

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.7

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

[init] Using Kubernetes version: v1.24.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master-1] and IPs [10.96.0.1 172.16.200.30]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master-1] and IPs [172.16.200.30 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master-1] and IPs [172.16.200.30 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.503761 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master-1 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.200.90:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:8a55d1074d4d74804ee493119a94902d816e2b185444b19398353585a1588120
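The join command at the end of this output is what the worker nodes need. If the output was saved to a file, the token and CA certificate hash can be pulled back out of it, as in the sketch below. `extract_token` and `extract_ca_hash` are hypothetical helper names, and the sample text is copied from the output above. On a live control plane, `kubeadm token create --print-join-command` prints a fresh join command directly.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: read saved `kubeadm init` output on stdin and
# print the bootstrap token or the CA certificate hash of the join command.
extract_token() {
  grep -oE -- '--token [a-z0-9]{6}\.[a-z0-9]{16}' | head -n 1 | cut -d' ' -f2
}

extract_ca_hash() {
  grep -oE 'sha256:[0-9a-f]{64}' | head -n 1
}

# Sample text copied from the init output shown in this guide.
init_log='kubeadm join 172.16.200.90:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:8a55d1074d4d74804ee493119a94902d816e2b185444b19398353585a1588120'

token="$(printf '%s\n' "$init_log" | extract_token)"
ca_hash="$(printf '%s\n' "$init_log" | extract_ca_hash)"

# Rebuild the join command, adding the cri-dockerd socket used in this guide.
echo "kubeadm join 172.16.200.90:6443 --token ${token}" \
     "--discovery-token-ca-cert-hash ${ca_hash}" \
     "--cri-socket unix:///var/run/cri-dockerd.sock"
```

Bootstrap tokens expire, so a command rebuilt from an old log may be rejected; in that case generate a new one on the master.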

3.3.6 Prepare the kubeconfig file for the cluster client

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master01 ~]# ls /root/.kube/

config

[root@k8s-master01 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

3.3.7 Add the worker nodes to the cluster

[root@k8s-node1 ~]# kubeadm join 172.16.200.90:6443 --token 4e2uez.vzy37zl8btnd6fif \
> --discovery-token-ca-cert-hash sha256:3cf872560fe7f67fcc3f28fdbe3ffb84fae6348cf56898ed7e7a164cf562948e --cri-socket unix:///var/run/cri-dockerd.sock

[root@k8s-node2 ~]# kubeadm join 172.16.200.90:6443 --token 4e2uez.vzy37zl8btnd6fif \
> --discovery-token-ca-cert-hash sha256:3cf872560fe7f67fcc3f28fdbe3ffb84fae6348cf56898ed7e7a164cf562948e --cri-socket unix:///var/run/cri-dockerd.sock

[root@k8s-master01 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready control-plane 76m v1.24.2
k8s-node01 Ready <none> 75m v1.24.2
k8s-node02 Ready <none> 75m v1.24.2

3.3.8 Cluster network setup

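3.3.8.1 Install Calico

wget https://docs.projectcalico.org/v3.16/manifests/calico.yaml

Edit the manifest. If CALICO_IPV4POOL_CIDR is set, its value should match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 in this guide):

vim calico.yaml
................
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
................

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

Watch the pods in the kube-system namespace:

[root@k8s-master01 ~]# watch kubectl get pods -n kube-system

Once everything is running: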

[root@k8s-master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-56cdb7c587-szkjr 1/1 Running 0 11m

calico-node-6xzg7 1/1 Running 0 11m

coredns-74586cf9b6-bbhq6 1/1 Running 2 35m

coredns-74586cf9b6-g6shr 1/1 Running 2 35m

etcd-master-1 1/1 Running 3 35m

kube-apiserver-master-1 1/1 Running 3 35m

kube-controller-manager-master-1 1/1 Running 2 35m

kube-proxy-bbb2t 1/1 Running 2 35m

kube-scheduler-master-1 1/1 Running 2 35m

3.3.8.2 Optional: install the Calico client (calicoctl)

Download the binary:

# curl -L https://github.com/projectcalico/calico/releases/download/v3.23.1/calicoctl-linux-amd64 -o calicoctl

Install calicoctl:

mv calicoctl /usr/bin/

Make calicoctl executable:

chmod +x /usr/bin/calicoctl

Check the file after changing its permissions:

ls /usr/bin/calicoctl
/usr/bin/calicoctl

Check the calicoctl version:

calicoctl version

Client Version: v3.23.1

Git commit: 967e24543

Cluster Version: v3.23.1

Cluster Type: k8s,bgp,kubeadm,kdd

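Connect to the Kubernetes cluster through ~/.kube/config and list the running nodes. The two variables must be exported so that calicoctl can see them:

$ export DATASTORE_TYPE=kubernetes
$ export KUBECONFIG=~/.kube/config
$ calicoctl get nodes
NAME
k8s-master01
k8s-node1
k8s-node2

3.3.9 Verify that the cluster is usable

List all nodes:

[root@k8s-master01 ~]# kubectl get nodes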

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane 76m v1.24.2

k8s-node01 Ready <none> 75m v1.24.2

k8s-node02 Ready <none> 75m v1.24.2
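Reading the STATUS column by eye works for three nodes; for scripts, the same check can be done on the command output. The sketch below counts nodes whose status is anything other than exactly Ready. `count_not_ready` is a hypothetical helper, and the sample input deliberately marks one node NotReady to show a non-zero result.

```shell
#!/usr/bin/env bash
# count_not_ready: hypothetical helper. Reads the output of
# `kubectl get nodes --no-headers` on stdin and prints how many nodes
# have a STATUS (column 2) other than exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is counted too.
count_not_ready() {
  awk 'NF >= 2 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Sample input shaped like the listing above, with one node changed to
# NotReady for illustration. On the master you would run:
#   kubectl get nodes --no-headers | count_not_ready
sample='k8s-master01 Ready control-plane 76m v1.24.2
k8s-node01 Ready <none> 75m v1.24.2
k8s-node02 NotReady <none> 75m v1.24.2'

printf '%s\n' "$sample" | count_not_ready   # prints 1
```

A result of 0 means every listed node reports Ready.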

Check cluster health:

[root@k8s-master01 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

1. Deploy an application

apiVersion: apps/v1 # depends on the cluster version; run kubectl api-versions to list what the cluster supports
kind: Deployment # the resource type, here a Deployment
metadata: # basic attributes and information about the Deployment
  name: nginx-deployment # name of the Deployment
  labels: # labels locate one or more resources; keys and values are free-form and several can be defined
    app: nginx # give this Deployment a label with key app and value nginx
spec: # the desired state of the Deployment
  replicas: 1 # run one instance of the application
  selector: # label selector, works together with the labels above
    matchLabels: # select resources labeled app: nginx
      app: nginx
  template: # template for the Pods to select or create
    metadata: # Pod metadata
      labels: # Pod labels; the selector above matches Pods labeled app: nginx
        app: nginx
    spec: # what the Pod should run
      containers: # containers to create, the same concept as a Docker container
      - name: nginx # container name
        image: nginx:1.7.9 # create the container from nginx:1.7.9, which serves on port 80 by default

kubectl apply -f nginx.yaml

2. Expose the application

apiVersion: v1
kind: Service
metadata:
  name: nginx-service # name of the Service
  labels: # labels of the Service itself
    app: nginx # give this Service a label with key app and value nginx
spec: # defines how the Service selects Pods and how it is accessed
  selector: # label selector
    app: nginx # select Pods labeled app: nginx
  ports:
  - name: nginx-port # port name
    protocol: TCP # protocol, TCP or UDP
    port: 80 # other Pods in the cluster reach the Service on port 80
    nodePort: 32600 # reach the Service on port 32600 of any node
    targetPort: 80 # forward requests to port 80 of the matching Pods
  type: NodePort # Service type: ClusterIP, NodePort, or LoadBalancer

kubectl apply -f service.yaml

3. Test

[root@k8s-master01 ~]# kubectl get pods,svc
NAME READY STATUS RESTARTS AGE
pod/nginx-deployment-7ddbb5f97-h9dhs 1/1 Running 0 49m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 90m
service/nginx-service NodePort 10.111.132.6 <none> 80:32600/TCP 48m

[root@k8s-master01 ~]# curl -I 10.111.132.6

HTTP/1.1 200 OK

Server: nginx/1.7.9

Date: Wed, 16 Nov 2022 07:47:36 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 23 Dec 2014 16:25:09 GMT

Connection: keep-alive

ETag: "54999765-264"

Accept-Ranges: bytes

3.3.10 Other Kubernetes settings

kubectl command auto-completion:

yum install bash-completion -y
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
kubectl completion bash >/etc/bash_completion.d/kubectl

4. References

- https://www.bilibili.com/video/BV1uY411c7qU?p=3&spm_id_from=333.880.my_history.page.click
- https://www.jianshu.com/p/a613f64ccab6
- https://i4t.com/5435.html
- https://www.bilibili.com/video/BV1gS4y1B7Ut?spm_id_from=333.880.my_history.page.click
