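A byte is a unit of measurement for storage and transmission capacity in computing, and it is also the basic unit of data processing. The CPUs of computers and digital devices read and write data, perform calculations, and execute instructions in units of bytes. Documents, images, and programs are all stored as bytes. The capacity of storage devices (for example hard disks, SSDs, and USB drives) is usually expressed in bytes. Bytes are the bridge between human-readable data and machine-readable (binary) data, so they play a very important role in computer applications.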

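ByteConverter is a byte conversion object. It provides simple, efficient conversion between byte data and other data types. Its main functions are:

1. Converting between arrays of primitive types and byte arrays (Byte()), using a selectable byte order;

2. Copying part or all of one array into another array of the same type;

3. Converting between byte arrays and hexadecimal strings;

4. Converting between strings and byte arrays using a specified encoding.

The properties and methods of ByteConverter, with detailed examples, are as follows: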

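1. ArrayCopy

Syntax: ArrayCopy (src As Object, srcOffset As Int, dest As Object, destOffset As Int, count As Int)

Copies count elements from the source array src, starting at index srcOffset, into the destination array dest. Writing starts at index destOffset of dest and overwrites count elements.

Example: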

Dim a1() As Int=Array As Int(1,2,3,4,5)

Dim c1() As Int=Array As Int(10,20,30,40,50)

Dim bc As ByteConverter

bc.ArrayCopy(a1,0,c1,1,2)

Dim s As String=""

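For i=0 To c1.Length-1

s=s&c1(i)&" "

Next

Log(s) 'Output: 10 1 2 40 50

Notes:

① Both arrays must be of the same type; otherwise an array store exception (ArrayStoreException) is thrown.

② srcOffset + count must be less than or equal to src.Length; otherwise an out-of-range error is thrown.

③ destOffset + count must be less than or equal to dest.Length; otherwise an out-of-range error is thrown.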
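The copy semantics and the two range conditions above can be sketched outside B4X. The following Python snippet is only an illustration: the function name array_copy and its IndexError messages are assumptions made for this sketch and are not part of ByteConverter.

```python
def array_copy(src, src_offset, dest, dest_offset, count):
    """Copy count elements from src[src_offset:] into dest[dest_offset:] in place."""
    # Mirror the range conditions listed in the notes above.
    if src_offset < 0 or dest_offset < 0 or count < 0:
        raise IndexError("offsets and count must be non-negative")
    if src_offset + count > len(src):
        raise IndexError("srcOffset + count exceeds src.Length")
    if dest_offset + count > len(dest):
        raise IndexError("destOffset + count exceeds dest.Length")
    # Overwrite exactly count elements of dest; the rest stays untouched.
    dest[dest_offset:dest_offset + count] = src[src_offset:src_offset + count]


a1 = [1, 2, 3, 4, 5]
c1 = [10, 20, 30, 40, 50]
array_copy(a1, 0, c1, 1, 2)
print(c1)  # [10, 1, 2, 40, 50]
```

The result matches the B4X example: only the two elements at indexes 1 and 2 of the destination are overwritten.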

2. CharsToBytes

Syntax: CharsToBytes (values As Char()) As Byte()

Returns a byte array converted from the given character array values. Return type: Byte().

Example:

Dim bc As ByteConverter

Dim C() As Char=Array As Char("a","1",",","。","的")

Dim s As StringBuilder

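For i=0 To C.Length-1

Dim b() As Byte=bc.CharsToBytes(Array As Char(C(i)))

s.Initialize

s.Append(C(i)&" as bytes: ")

For j=0 To b.Length-1

s.Append(b(j)).Append(IIf(j=b.Length-1,"",","))

Next

Log(s)

Next

The output is as follows:

a as bytes: 0,97

1 as bytes: 0,49

, as bytes: 0,44

。 as bytes: 48,2

的 as bytes: 118,-124

From this we can see that, in B4X (with the default big-endian byte order):

① A Char occupies 2 bytes.

② For English letters, ASCII punctuation, and digits, the first byte is 0 and the second byte holds the character code.

③ For Chinese characters (Hanzi and Chinese punctuation), both bytes carry part of the character code, so the first byte is not 0.

④ A byte has 8 bits. Byte is a signed type (the highest bit is the sign bit), so every element of a byte array lies in the range -128 to 127 and stands for the corresponding 8-bit two's-complement pattern. For example, 97 stands for 01100001 and -124 stands for 10000100.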
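The values above can be cross-checked in Python. This is a minimal sketch that assumes the two bytes of each Char are its big-endian UTF-16 code unit, which is consistent with the logged output; the helper name char_to_signed_bytes is made up for the illustration.

```python
def char_to_signed_bytes(ch):
    """Return the big-endian UTF-16 bytes of ch as signed values (-128..127)."""
    raw = ch.encode("utf-16-be")
    # Python bytes are 0..255; subtract 256 to get the signed view used by B4X.
    return [b - 256 if b > 127 else b for b in raw]


for ch in ["a", "1", ",", "。", "的"]:
    print(ch, char_to_signed_bytes(ch))

# The 8-bit two's-complement patterns mentioned in note ④.
print(format(97, "08b"))           # 01100001
print(format(-124 & 0xFF, "08b"))  # 10000100
```

Masking with 0xFF turns the signed value -124 back into the unsigned byte 132, whose bit pattern is the one shown in note ④.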

3. CharsFromBytes

Syntax: CharsFromBytes (bytes As Byte()) As Char()

Returns a character array converted from the given byte array bytes. Return type: Char().

Example:

Dim bc As ByteConverter

Dim a1() As Byte=Array As Byte(0,65,0,66)

Dim c() As Char=bc.CharsFromBytes(a1)

Dim s As String=""

For i=0 To c.Length-1

s=s&c(i)&" "

Next

Log(s) 'Output: A B

Note:

The number of elements in the byte array bytes must be even (because each character occupies 2 bytes); otherwise an error occurs.
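The reverse direction, including the even-length requirement, can be sketched in Python as well. The sketch again assumes big-endian UTF-16 code units; chars_from_bytes is an illustrative name, and the error Python raises for an odd-length input is Python's own, not the one B4X reports.

```python
def chars_from_bytes(values):
    """Decode signed byte values (-128..127) as big-endian 2-byte characters."""
    raw = bytes(v & 0xFF for v in values)  # map signed values to 0..255
    return list(raw.decode("utf-16-be"))


print(chars_from_bytes([0, 65, 0, 66]))  # ['A', 'B']
print(chars_from_bytes([118, -124]))     # ['的']

try:
    chars_from_bytes([0, 65, 0])         # odd number of bytes
except UnicodeDecodeError as err:
    print("rejected:", err.reason)
```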

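4. FloatsToBytes

Syntax: FloatsToBytes (values As Float()) As Byte()

Returns a byte array converted from the single-precision array values. Return type: Byte().

Note: Float is a 32-bit data type that occupies 4 bytes, so each Float value contributes 4 elements to the returned array.

Example: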

Dim bc As ByteConverter

Dim f() As Float=Array As Float(2,13.5)

Dim b() As Byte=bc.FloatsToBytes(f)

Dim s As StringBuilder

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(" ")

Next

Log(s) 'Output: 64 0 0 0 65 88 0 0

5. FloatsFromBytes

Syntax: FloatsFromBytes (bytes As Byte()) As Float()

Returns a single-precision array converted from the byte array bytes. Return type: Float().

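Note: Float is a floating-point type. Computers and digital devices store and compute floating-point numbers in a special binary format defined by the international standard IEEE 754. Following the IEEE 754 rules, we can write a pair of custom functions that convert between a Float and its binary string. The second function assumes that an XUI object named xui has been declared. The code is as follows:

Sub FloatToBinary(n As Float) As String

Dim bc As ByteConverter

Dim b() As Byte=bc.FloatsToBytes(Array As Float(n))

Dim s As StringBuilder

s.Initialize

For i=0 To b.Length-1

Dim c As String=Bit.ToBinaryString(b(i))

'A negative byte is returned as 32 bits; keep only the last 8

c=IIf(b(i)<0,c.SubString(24),c)

'Pad a non-negative byte with leading zeros to 8 bits

For j=1 To 8-c.Length

s.Append("0")

Next

s.Append(c)

Next

Dim s1 As String=s.ToString

'If every bit is 0, return the short form "0"

If s1.Contains("1")=False Then s1="0"

Return s1

End Sub

Sub BinaryTOFloat(str As String) As Float

If str="0" Then

Return 0

End If

'Exactly 32 binary digits are required

If Regex.IsMatch("^[01]{32}$",str)=False Then

xui.MsgboxAsync("Invalid binary string!","Notice")

Return 0/0

End If

Dim bc As ByteConverter

Dim b(4) As Byte

For i=0 To 3

b(i)=Bit.ParseInt(str.SubString2(i*8,(i+1)*8),2)

Next

Dim f() As Float= bc.FloatsFromBytes(b)

Return (f(0))

End Sub

Example: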

Dim bc As ByteConverter

Dim b() As Byte=bc.FloatsToBytes(Array As Float(-23.5))

For i=0 To b.Length-1

Log(b(i)&":"&Bit.ToBinaryString(b(i)))

Next

Dim f1 As String=FloatToBinary(-23.5)

Log(f1) 'Output: 11000001101111000000000000000000

Dim f2 As Float=BinaryTOFloat(f1)

Log(f2) 'Output: -23.5

6. DoublesToBytes

Syntax: DoublesToBytes (values As Double()) As Byte()

Returns a byte array converted from the double-precision array values. Return type: Byte().

Example:

Dim bc As ByteConverter

Dim d() As Double=Array As Double(2,13.5)

Dim b() As Byte=bc.DoublesToBytes(d)

Dim s As StringBuilder

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(" ")

Next

Log(s) 'Output: 64 0 0 0 0 0 0 0 64 43 0 0 0 0 0 0

7. DoublesFromBytes

Syntax: DoublesFromBytes (bytes As Byte()) As Double()

Returns a double-precision array converted from the byte array bytes. Return type: Double().

Continuing the previous example:

Dim d1() As Double=bc.DoublesFromBytes(b)

s.Initialize

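For i=0 To d1.Length-1

s.Append(d1(i)).Append(" ")

Next

Log(s) 'Output: 2 13.5

Remarks:

Float: single-precision floating point, occupying 4 bytes (32 bits). It suits scenarios where memory and speed are tight, such as embedded systems and mobile devices. The byte array returned by FloatsToBytes is 4 times the size of the given Float array values. The byte array bytes passed to FloatsFromBytes must have a size that is a multiple of 4; otherwise an error is thrown.

Double: double-precision floating point, occupying 8 bytes (64 bits). It takes twice the storage of a Float but offers higher precision and a larger value range. The byte array returned by DoublesToBytes is 8 times the size of the given Double array values. The byte array bytes passed to DoublesFromBytes must have a size that is a multiple of 8; otherwise an error is thrown.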
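The byte values and the bit string shown in the Float and Double examples follow directly from IEEE 754, so they can be reproduced with Python's struct module. This is a sketch for cross-checking only; the helper names signed and float_to_bits are illustrative, and ">" selects big-endian packing to match the default used in the examples.

```python
import struct


def signed(raw):
    """View each byte (0..255) as a signed value (-128..127)."""
    return [b - 256 if b > 127 else b for b in raw]


def float_to_bits(x):
    """Return the 32-bit IEEE 754 pattern of x as a string of 0s and 1s."""
    (as_int,) = struct.unpack(">I", struct.pack(">f", x))
    return format(as_int, "032b")


floats = struct.pack(">2f", 2.0, 13.5)    # 4 bytes per value
doubles = struct.pack(">2d", 2.0, 13.5)   # 8 bytes per value

print(signed(floats))    # [64, 0, 0, 0, 65, 88, 0, 0]
print(signed(doubles))   # [64, 0, 0, 0, 0, 0, 0, 0, 64, 43, 0, 0, 0, 0, 0, 0]
print(float_to_bits(-23.5))               # 11000001101111000000000000000000
print(struct.unpack(">2d", doubles))      # (2.0, 13.5)
```

The array sizes are 4 and 8 times the number of values, as stated in the remarks; struct.unpack likewise rejects a buffer whose length does not match the format.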

8. FromChars

Syntax: FromChars (chars As Char()) As String

Returns a string built from the given character array. Return type: String.

Example:

Dim bc As ByteConverter

Dim c() As Char=Array As Char("S","t","u","d","e","n","t")

Dim s As String=bc.FromChars(c)

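Log(s) 'Output: Student

9. HexFromBytes

Syntax: HexFromBytes (bytes As Byte()) As String

Returns a string containing the hexadecimal representation of each byte in the byte array bytes. Return type: String.

10. HexToBytes

Syntax: HexToBytes (hex As String) As Byte()

Returns a byte array containing the data represented by the hexadecimal string hex. Return type: Byte().

The string hex may be uppercase or lowercase.

Example: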

Dim bc As ByteConverter

Dim b() As Byte=Array As Byte(8,10,28,30)

Dim s As String=bc.HexFromBytes(b)

Log(s) 'Output: 080A1C1E

Dim b1() As Byte=bc.HexToBytes(s)

Dim s1 As StringBuilder

s1.Initialize

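For i=0 To b1.Length-1

s1.Append(b1(i)).Append(" ")

Next

Log(s1) 'Output: 8 10 28 30

Remarks:

① One hexadecimal digit represents 4 binary bits, so one byte is written as 2 hexadecimal digits.

② The string returned by HexFromBytes is twice as long as the number of elements in the byte array bytes.

③ The string hex passed to HexToBytes must have an even length; otherwise an error is thrown.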
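The three remarks above can be checked with Python's built-in bytes.hex and bytes.fromhex. This is only a cross-check of the same values; the exception Python raises for an odd-length string is Python's own and says nothing about the exact error B4X reports.

```python
data = bytes([8, 10, 28, 30])

hex_text = data.hex().upper()
print(hex_text)                          # 080A1C1E
print(len(hex_text) == 2 * len(data))    # True: two hex digits per byte

print(list(bytes.fromhex(hex_text)))     # [8, 10, 28, 30]
print(list(bytes.fromhex("080a1c1e")))   # lowercase is accepted too

try:
    bytes.fromhex("080")                 # odd number of hex digits
except ValueError:
    print("odd-length hex string rejected")
```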

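11. IntsFromBytes

Syntax: IntsFromBytes (bytes As Byte()) As Int()

Returns an Int array converted from the given byte array bytes. Return type: Int().

12. IntsToBytes

Syntax: IntsToBytes (values As Int()) As Byte()

Returns a byte array converted from the given Int array values. Return type: Byte().

Example: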

Dim bc As ByteConverter

Dim i() As Int=Array As Int(5,6,7,8212)

Dim b() As Byte=bc.IntsToBytes(i)

Dim s As StringBuilder

s.Initialize

For k=0 To b.Length-1

s.Append(b(k)).Append(" ")

Next

Log(s) 'Output: 0 0 0 5 0 0 0 6 0 0 0 7 0 0 32 20

Dim i1() As Int=bc.IntsFromBytes(b)

s.Initialize

For k=0 To i1.Length-1

s.Append(i1(k)).Append(" ")

Next

Log(s) 'Output: 5 6 7 8212

Remarks:

① Int is a 32-bit type; one Int value occupies 4 bytes.

② The byte array returned by IntsToBytes is 4 times the size of the given Int array values.

③ The byte array bytes passed to IntsFromBytes must have a size that is a multiple of 4; otherwise an error is thrown.
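As a cross-check of the Int example, the same four values can be packed as big-endian 32-bit integers in Python. The sketch uses the standard struct module; the variable names are illustrative.

```python
import struct

values = [5, 6, 7, 8212]

# ">4i" means: big-endian, four signed 32-bit integers.
raw = struct.pack(">4i", *values)
print(list(raw))                          # [0, 0, 0, 5, 0, 0, 0, 6, 0, 0, 0, 7, 0, 0, 32, 20]
print(len(raw) == 4 * len(values))        # True: 4 bytes per Int

restored = list(struct.unpack(">4i", raw))
print(restored)                           # [5, 6, 7, 8212]
```

The last four bytes show how 8212 (hexadecimal 2014) splits into the bytes 32 and 20.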

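13. LittleEndian

Gets or sets whether conversions between bytes and other primitive values use little-endian byte order. The property is True for little-endian; when it is set to False, ByteConverter uses big-endian.

Notes:

Byte order is the order in which the bytes of a value larger than one byte are laid out in memory. There are two common orders: little-endian and big-endian.

Little-endian: the least significant byte is stored at the starting address (the lowest memory address).

Big-endian: the most significant byte is stored at the starting address (the lowest memory address).

Note that when we write a multi-byte value, we go from the most significant byte to the least significant byte (left to right).

Memory addresses are drawn growing from left to right, from low to high (this does not change).

In general, for host byte order, Intel and AMD CPUs are little-endian, while PowerPC, SPARC, and Motorola processors are traditionally big-endian.

The network byte order used on the Internet (for network transmission) is big-endian. Java also defaults to big-endian.

The default byte order of ByteConverter in B4X is big-endian. Use the LittleEndian property of ByteConverter to select the byte order you need.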
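To make the two byte orders concrete, the following Python sketch lays out one 32-bit integer and one Float both ways. It is an illustration only; the sample value 0x12345678 and the helper name signed are assumptions chosen for the sketch.

```python
import struct


def signed(raw):
    """View each byte (0..255) as a signed value (-128..127)."""
    return [b - 256 if b > 127 else b for b in raw]


value = 0x12345678
print(value.to_bytes(4, "big").hex())     # 12345678: most significant byte first
print(value.to_bytes(4, "little").hex())  # 78563412: least significant byte first

big = struct.pack(">f", 5666.0)     # big-endian Float
little = struct.pack("<f", 5666.0)  # little-endian Float
print(signed(big))                  # [69, -79, 16, 0]
print(signed(little))               # [0, 16, -79, 69]
print(little == big[::-1])          # True: same bytes, reversed order
```

Switching the byte order never changes the bytes of a single value, only the order in which they are stored.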

Example:

Dim bc As ByteConverter

Log(bc.LittleEndian) 'Output: false

Dim b() As Byte=bc.FloatsToBytes(Array As Float(5666))

Dim s As StringBuilder

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(IIf(i=b.Length-1,"",","))

Next

Log(s) 'Output: 69,-79,16,0

bc.LittleEndian=True 'Switch to little-endian byte order

b=bc.FloatsToBytes(Array As Float(5666))

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(IIf(i=b.Length-1,"",","))

Next

Log(s) 'Output: 0,16,-79,69

14. LongsFromBytes

Syntax: LongsFromBytes (bytes As Byte()) As Long()

Returns a Long array converted from the given byte array bytes. Return type: Long().

15. LongsToBytes

Syntax: LongsToBytes (values As Long()) As Byte()

Returns a byte array converted from the given Long array values. Return type: Byte().

Example:

Dim bc As ByteConverter

bc.LittleEndian=True

DateTime.DateFormat="yyyy.MM.dd"

Dim t1 As Long=DateTime.DateParse("2024.02.01")

Dim t2 As Long=DateTime.DateParse("2025.02.01")

Dim l() As Long=Array As Long(t1,t2)

Dim b() As Byte=bc.LongsToBytes(l)

Dim s As StringBuilder

s.Initialize

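For i=0 To b.Length-1

s.Append(b(i)).Append(" ")

Next

Log(s) 'Output: 0 -92 63 96 -115 1 0 0 0 44 23 -67 -108 1 0 0 (the values depend on the device time zone; these correspond to UTC+8)

Dim l1() As Long=bc.LongsFromBytes(b)

s.Initialize

For i=0 To l1.Length-1

s.Append(DateTime.Date(l1(i))).Append(" ")

Next

Log(s) 'Output: 2024.02.01 2025.02.01

Remarks:

① Long is a 64-bit type; one Long value occupies 8 bytes.

② The byte array returned by LongsToBytes is 8 times the size of the given Long array values.

③ The byte array bytes passed to LongsFromBytes must have a size that is a multiple of 8; otherwise an error is thrown.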
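The Long example stores two dates as millisecond timestamps in little-endian order. The Python sketch below reproduces the logged bytes under one explicit assumption: that the dates were parsed in a UTC+8 time zone, which is what the logged values correspond to. The helper name signed is illustrative.

```python
import struct
from datetime import datetime, timedelta, timezone


def signed(raw):
    """View each byte (0..255) as a signed value (-128..127)."""
    return [b - 256 if b > 127 else b for b in raw]


tz = timezone(timedelta(hours=8))  # assumption: device time zone is UTC+8
t1 = int(datetime(2024, 2, 1, tzinfo=tz).timestamp() * 1000)
t2 = int(datetime(2025, 2, 1, tzinfo=tz).timestamp() * 1000)
print(t1, t2)  # 1706716800000 1738339200000

# "<2q" means: little-endian, two signed 64-bit integers.
raw = struct.pack("<2q", t1, t2)
print(signed(raw))
# [0, -92, 63, 96, -115, 1, 0, 0, 0, 44, 23, -67, -108, 1, 0, 0]

print(struct.unpack("<2q", raw) == (t1, t2))  # True
```

In a different time zone the timestamps, and therefore the bytes, will differ, while the round trip back to the same dates still holds.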

16. ShortsFromBytes

Syntax: ShortsFromBytes (bytes As Byte()) As Short()

Returns a Short array converted from the given byte array bytes. Return type: Short().

17. ShortsToBytes

Syntax: ShortsToBytes (values As Short()) As Byte()

Returns a byte array converted from the given Short array values. Return type: Byte().

Example:

Dim bc As ByteConverter

bc.LittleEndian=False

Dim sh() As Short=Array As Short(1,2,3,4)

Dim b() As Byte=bc.ShortsToBytes(sh)

Dim s As StringBuilder

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(" ")

Next

Log(s) 'Output: 0 1 0 2 0 3 0 4

Dim sh1() As Short=bc.ShortsFromBytes(b)

s.Initialize

For i=0 To sh1.Length-1

s.Append(sh1(i)).Append(" ")

Next

Log(s) 'Output: 1 2 3 4

Remarks:

① Short is a 16-bit type; one Short value occupies 2 bytes.

② The byte array returned by ShortsToBytes is 2 times the size of the given Short array values.

③ The byte array bytes passed to ShortsFromBytes must have a size that is a multiple of 2; otherwise an error is thrown.
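The Short example can be cross-checked the same way. This Python sketch packs the four values as big-endian 16-bit integers and, for contrast, as little-endian ones; it is an illustration and the variable names are assumptions.

```python
import struct

values = [1, 2, 3, 4]

# ">4h" means: big-endian, four signed 16-bit integers.
big = struct.pack(">4h", *values)
print(list(big))                     # [0, 1, 0, 2, 0, 3, 0, 4]
print(len(big) == 2 * len(values))   # True: 2 bytes per Short

# The same values in little-endian order swap the two bytes of each Short.
little = struct.pack("<4h", *values)
print(list(little))                  # [1, 0, 2, 0, 3, 0, 4, 0]

print(list(struct.unpack(">4h", big)))  # [1, 2, 3, 4]
```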

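18. StringFromBytes

Syntax: StringFromBytes (bytes As Byte(), encoding As String) As String

Returns a string converted from the given byte array bytes, decoding the bytes with the character encoding encoding. Return type: String.

Remarks:

Commonly used encoding names include UTF-8, UTF-16, GBK, ASCII, ISO-8859-1, and Unicode. Each has its own characteristics and suits different scenarios.

① UTF-8

UTF-8 is a variable-length encoding that can represent characters from all over the world. Each character takes 1 to 4 bytes. It is one of the most widely used encodings, common on the Internet and in many programming languages.

② UTF-16

UTF-16 is a variable-length encoding of the Unicode character set in which each character takes 2 or 4 bytes. In Java, strings are represented internally as UTF-16. It suits scenarios that need efficient processing of Unicode text.

③ GBK

GBK is an extension of GB2312 that supports more Chinese characters and symbols. It is compatible with ASCII: characters that ASCII can represent, such as English letters, are encoded exactly as in ASCII. Characters that ASCII cannot represent, such as Chinese characters, take two bytes, and the highest bit of the first byte is set so that it cannot be confused with an ASCII character.

④ ASCII

ASCII is an encoding based on the Latin alphabet and designed mainly for English. It uses 7 bits and can represent 128 characters, including control characters and printable characters; 8-bit extensions were introduced later to cover more characters. ASCII is the foundation for exchanging information between computers and communication devices and is widely used in programming, data storage, and transmission.

⑤ ISO-8859-1

ISO-8859-1 is the encoding standard for Western European languages. Each character takes one byte. It does not support Chinese or other non-Western-European characters, but it is compact and efficient for Western European text.

⑥ Unicode

Unicode is an international character standard that aims to include every symbol in the world and gives each one a unique code. Strictly speaking it is a character set rather than a byte encoding; UTF-8 and UTF-16 are encodings of it. When "Unicode" is used as an encoding name in the StringToBytes example, the result is identical to UTF-16.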
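The size differences between these encodings can be seen by encoding a few sample characters in Python. This is a sketch for illustration; the sample characters and the helper name sizes are assumptions, and Python's error handling for unsupported characters may differ from what B4X does.

```python
def sizes(ch):
    """Return (UTF-8 size, UTF-16 size) in bytes for one character."""
    # "utf-16-be" is used so that no byte-order mark is added to the count.
    return len(ch.encode("utf-8")), len(ch.encode("utf-16-be"))


for ch in ["A", "é", "学", "😀"]:
    print(repr(ch), sizes(ch))
# 'A' (1, 2)   'é' (2, 2)   '学' (3, 2)   '😀' (4, 4)

gbk = "学".encode("gbk")
print(gbk.hex())                                  # d1a7: two bytes
print(gbk[0] >= 0x80)                             # True: high bit of first byte set
print("A".encode("gbk") == "A".encode("ascii"))   # True: GBK is ASCII-compatible

print(len("é".encode("iso-8859-1")))              # 1: one byte per character
try:
    "学".encode("ascii")
except UnicodeEncodeError:
    print("ASCII cannot represent Chinese characters")
```

The emoji needs 4 bytes in UTF-16, which is why UTF-16 counts as a variable-length encoding.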

19. StringToBytes

Syntax: StringToBytes (str As String, encoding As String) As Byte()

Returns a byte array converted from the given string str, encoded with the character encoding encoding. Return type: Byte().

Example:

Dim bc As ByteConverter

bc.LittleEndian=False

Dim s As StringBuilder

Dim unicode() As String=Array As String("UTF-8","UTF-16","GBK","ASCII","ISO-8859-1","Unicode")

Dim str() As String=Array As String("Student","学生")

For j=0 To str.Length-1

Log($"Byte array of string '${str(j)}':"$)

For k=0 To unicode.Length-1

Dim b() As Byte=bc.StringToBytes(str(j),unicode(k))

Dim size As Int=b.Length

s.Initialize

For i=0 To b.Length-1

s.Append(b(i)).Append(" ")

Next

Dim s1 As String

If str(j)="学生" And (unicode(k)="ASCII" Or unicode(k)="ISO-8859-1") Then

s1=$"${unicode(k)}: Chinese is not supported!"$

Else

s1=$"${unicode(k)}: ${s.ToString}, array size: ${size}"$

End If

Log(s1)

Next

Next

The log output is as follows:

Byte array of string 'Student':

UTF-8: 83 116 117 100 101 110 116 , array size: 7

UTF-16: -2 -1 0 83 0 116 0 117 0 100 0 101 0 110 0 116 , array size: 16

GBK: 83 116 117 100 101 110 116 , array size: 7

ASCII: 83 116 117 100 101 110 116 , array size: 7

ISO-8859-1: 83 116 117 100 101 110 116 , array size: 7

Unicode: -2 -1 0 83 0 116 0 117 0 100 0 101 0 110 0 116 , array size: 16

Byte array of string '学生':

UTF-8: -27 -83 -90 -25 -108 -97 , array size: 6

UTF-16: -2 -1 91 102 117 31 , array size: 6

GBK: -47 -89 -55 -6 , array size: 4

ASCII: Chinese is not supported!

ISO-8859-1: Chinese is not supported!

Unicode: -2 -1 91 102 117 31 , array size: 6

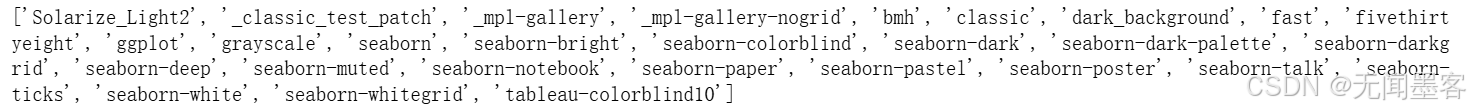
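The logged values for '学生' can be reproduced in Python as a cross-check. One assumption is made explicit in the sketch: the UTF-16 output in the log starts with the bytes -2 -1 (hexadecimal FE FF, a byte-order mark) followed by big-endian data, so the sketch builds exactly that rather than relying on Python's own "utf-16" codec, which may use a different byte order. The helper names signed and to_bytes are illustrative.

```python
def signed(raw):
    """View each byte (0..255) as a signed value (-128..127)."""
    return [b - 256 if b > 127 else b for b in raw]


def to_bytes(text, encoding):
    """Encode text; UTF-16 is built as BOM (FE FF) + big-endian data."""
    if encoding in ("UTF-16", "Unicode"):
        # Assumption: matches the layout seen in the log output above.
        return b"\xfe\xff" + text.encode("utf-16-be")
    return text.encode(encoding)


for enc in ["UTF-8", "UTF-16", "GBK"]:
    data = to_bytes("学生", enc)
    print(enc, signed(data), "size:", len(data))
# UTF-8 [-27, -83, -90, -25, -108, -97] size: 6
# UTF-16 [-2, -1, 91, 102, 117, 31] size: 6
# GBK [-47, -89, -55, -6] size: 4
```

The two extra bytes at the start of every UTF-16 result explain why 'Student' takes 16 bytes rather than 14.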

20. SupportedEncodings

Syntax: SupportedEncodings As String()

Returns a string array containing the names of all encodings supported on this system. Return type: String().

Example:

Dim bc As ByteConverter

bc.LittleEndian=False

Dim str() As String=bc.SupportedEncodings

For i=0 To str.Length-1

Log(str(i))

Next

21. ToChars

Syntax: ToChars (str As String) As Char()

Returns a character array made up of the characters of the given string str. Return type: Char().

Example:

Dim bc As ByteConverter

Dim str As String="Student"

Dim c() As Char=bc.ToChars(str)

Dim s As StringBuilder

s.Initialize

For i=0 To c.Length-1

Dim s1 As String=IIf(i=c.Length-1,"",",")

s.Append(c(i)).Append(s1)

Next

Log(s) 'Output: S,t,u,d,e,n,t

22. Version

Returns the version number of the current ByteConverter library. Return type: Double.

Example:

Dim bc As ByteConverter

Log(bc.Version) 'Output: 1.1