1、insatll environment|安装环境

!pip install torchtoolbox

!pip install torchsummary

2、import package|导入三方库

# 基础库

import os

import time

import random

import shutil

import pandas as pd

import csv

from math import exp

from PIL import Image

import numpy as np

import math

import cv2

from tqdm import tqdm

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

from mpl_toolkits.axes_grid1 import ImageGrid

%matplotlib inline

# torch

import torch

import torch.nn as nn

import torch.optim as optim

from torch.optim import lr_scheduler

from torch.autograd import Variable

import torchvision

from torchvision import datasets, models, transforms

import torch.nn.functional as F

from torchsummary import summary

# 混合精度

from torch.optim.lr_scheduler import CosineAnnealingLR

scaler = torch.cuda.amp.GradScaler()

# Kaggle文件下载

from IPython.display import FileLink

# 其他数据增强

from torchtoolbox.tools import mixup_data, mixup_criterion

from torchtoolbox.transform import Cutout

3、set random seed|固定随机种子

The random seed is the initial value used in the pseudo-random number generator to generate pseudo-random numbers. For a pseudo-random number generator, starting from the same random number seed, the same random number sequence can be obtained. Set a random seed so that the same training effect can be produced later.

随机数种子(random seed)是在伪随机数生成器中用于生成伪随机数的初始数值。对于一个伪随机数生成器,从相同的随机数种子出发,可以得到相同的随机数序列。设置随机种子,便于后期产生相同的训练效果。

def seed_torch(seed=3407):random.seed(seed)os.environ['PYTHONHASHSEED'] = str(seed)np.random.seed(seed)torch.manual_seed(seed)torch.cuda.manual_seed(seed)torch.cuda.manual_seed_all(seed) # if you are using multi-GPU.torch.backends.cudnn.benchmark = Falsetorch.backends.cudnn.deterministic = True

seed_torch()

4、split datastes|划分数据集

The dataset is divided into training set, validation set and test set. The training set is used for model training, the validation set is used to verify the training effect of the model, used for parameter adjustment, etc., and the test set is used to test the prediction ability of the final model.This part divides the training and validation ratio as 8:2.

数据集分为训练集、验证集和测试集。训练集用于模型训练,验证集用于验证模型的训练效果,用于参数调整等,测试集用于测试最终模型的预测能力。本部分划分训练、验证比例为8:2。

# 指定数据集路径

ori_dataset_path = '../input/comp6101-assignment2-group2/train'

dataset_path = './'

# 验证集比例

test_frac = 0.2

classes = os.listdir(ori_dataset_path)

# 创建 train 文件夹

if not os.path.exists(os.path.join(dataset_path, 'train')):os.makedirs(os.path.join(dataset_path, 'train'))

# 创建 val 文件夹

if not os.path.exists(os.path.join(dataset_path, 'val')):os.makedirs(os.path.join(dataset_path, 'val'))

# 在 train 和 val 文件夹中创建各类别子文件夹

for label in classes:if not os.path.exists(os.path.join(dataset_path, 'train', label)):os.makedirs(os.path.join(dataset_path, 'train', label))if not os.path.exists(os.path.join(dataset_path, 'val', label)):os.makedirs(os.path.join(dataset_path, 'val', label))

df = pd.DataFrame()

print('{:^18} {:^18} {:^18}'.format('类别', '训练集数据个数', '测试集数据个数'))

for sigle_label in classes: # 遍历每个类别# 读取该类别的所有图像文件名old_dir = os.path.join(ori_dataset_path, sigle_label)images_filename = os.listdir(old_dir)random.shuffle(images_filename) # 随机打乱# 划分训练集和验证集testset_numer = int(len(images_filename) * test_frac) # 验证集图像个数testset_images = images_filename[:testset_numer] # 获取拟移动至 val 目录的测试集图像文件名trainset_images = images_filename[testset_numer:] # 获取拟移动至 train 目录的训练集图像文件名# 移动图像至 val 目录for image in testset_images:old_img_path = os.path.join(ori_dataset_path, sigle_label, image) # 获取原始文件路径new_test_path = os.path.join(dataset_path, 'val', sigle_label, image) # 获取 val 目录的新文件路径shutil.copy(old_img_path, new_test_path) # 移动文件# 移动图像至 train 目录for image in trainset_images:old_img_path = os.path.join(ori_dataset_path, sigle_label, image) # 获取原始文件路径new_train_path = os.path.join(dataset_path, 'train', sigle_label, image) # 获取 train 目录的新文件路径shutil.copy(old_img_path, new_train_path) # 移动文件# 工整地输出每一类别的数据个数print('{:^18} {:^18} {:^18}'.format(sigle_label, len(trainset_images), len(testset_images)))

5、visual some datasets|可视化部分数据

folder_path = './train/cat/'

# 可视化图像的个数

N = 36

# n 行 n 列

n = math.floor(np.sqrt(N))

# 获取数据

images = []

for each_img in os.listdir(folder_path)[:N]:img_path = os.path.join(folder_path, each_img)img_bgr = cv2.imread(img_path)img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)images.append(img_rgb)

# 可视化

fig = plt.figure(figsize=(10, 10))

grid = ImageGrid(fig, 111, # 类似绘制子图 subplot(111)nrows_ncols=(n, n), # 创建 n 行 m 列的 axes 网格axes_pad=0.02, # 网格间距share_all=True)

# 遍历每张图像

for ax, im in zip(grid, images):ax.imshow(im)ax.axis('off')

plt.tight_layout()

plt.show()

6、data preprocessing|数据预处理

It mainly includes data enhancement and DataLoader loading.

主要包括数据增强和DataLoader装载。

# 基于imagenet上的std和mean

std_nums = [0.229, 0.224, 0.225]

mean_nums = [0.485, 0.456, 0.406]

# 训练集上进行的数据增强

train_dataTrans = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),#Cutout(),#transforms.RandomHorizontalFlip(), # 水平翻转#transforms.RandomVerticalFlip(), # 垂直翻转#transforms.RandomRotation(5),#transforms.GaussianBlur(kernel_size=(5, 5), sigma=(0.1, 1)),#transforms.ColorJitter(brightness=0.5, contrast=0.5, hue=0.5),#transforms.RandomHorizontalFlip(0.5),transforms.ToTensor(),transforms.Normalize(mean_nums, std_nums)

])

# 验证集上进行的数据增强

val_dataTrans = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),transforms.ToTensor(),transforms.Normalize(mean_nums, std_nums)

])# 数据装载

train_data_dir = './train'

val_data_dir = './val'

train_dataset = datasets.ImageFolder(train_data_dir, train_dataTrans)

val_dataset = datasets.ImageFolder(val_data_dir, val_dataTrans)image_datasets = {'train':train_dataset,'val':val_dataset}

shuff = {'train':True,'val':False}dataloders = {x: torch.utils.data.DataLoader(image_datasets[x],batch_size=32,num_workers=2,shuffle=shuff[x]) for x in ['train', 'val']}train_loader = torch.utils.data.DataLoader(train_dataset,batch_size=32,num_workers=2,shuffle=True)val_loader = torch.utils.data.DataLoader(val_dataset,batch_size=32,num_workers=2,shuffle=True)dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}print(dataset_sizes)

7、trian model|训练模型

7.1、自设计模型

class CNN(nn.Module):def __init__(self):super(CNN, self).__init__()self.relu = nn.ReLU()self.sigmoid = nn.Sigmoid()self.conv1 = nn.Sequential(nn.Conv2d(in_channels=3,out_channels=16,kernel_size=3,stride=2,),nn.BatchNorm2d(16),nn.ReLU(),nn.MaxPool2d(kernel_size=2),)self.conv2 = nn.Sequential(nn.Conv2d(in_channels=16,out_channels=32,kernel_size=3,stride=2,),nn.BatchNorm2d(32),nn.ReLU(),nn.MaxPool2d(kernel_size=2),)self.conv3 = nn.Sequential(nn.Conv2d(in_channels=32,out_channels=64,kernel_size=3,stride=2,),nn.BatchNorm2d(64),nn.ReLU(),nn.MaxPool2d(kernel_size=2),)self.fc1 = nn.Linear(3 * 3 * 64, 64)self.fc2 = nn.Linear(64, 10)self.out = nn.Linear(10, 2)def forward(self, x):x = self.conv1(x)x = self.conv2(x)x = self.conv3(x)# print(x.size())x = x.view(x.shape[0], -1)x = self.relu(self.fc1(x))x = self.relu(self.fc2(x))x = self.out(x)x = F.log_softmax(x, dim=1)return x

# 打印网络

CNN().to('cuda')

7.2、迁移学习

# 迁移学习

DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model_ft = models.resnet50(pretrained=True)

num_ftrs = model_ft.fc.in_features

model_ft.fc = nn.Linear(num_ftrs, 2)

model_ft.to(DEVICE)

Downloading: "https://download.pytorch.org/models/resnet50-0676ba61.pth" to /root/.cache/torch/hub/checkpoints/resnet50-0676ba61.pth0%| | 0.00/97.8M [00:00<?, ?B/s]ResNet((conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)(layer1): Sequential((0): Bottleneck((conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(downsample): Sequential((0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): Bottleneck((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(2): Bottleneck((conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)))(layer2): Sequential((0): Bottleneck((conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(downsample): Sequential((0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): Bottleneck((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(2): Bottleneck((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(3): Bottleneck((conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)))(layer3): Sequential((0): Bottleneck((conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(downsample): Sequential((0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): Bottleneck((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(2): Bottleneck((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(3): Bottleneck((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(4): Bottleneck((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(5): Bottleneck((conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)))(layer4): Sequential((0): Bottleneck((conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)(downsample): Sequential((0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)))(1): Bottleneck((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True))(2): Bottleneck((conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(relu): ReLU(inplace=True)))(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))(fc): Linear(in_features=2048, out_features=2, bias=True)

)

# 设置全局参数

modellr = 1e-4

BATCH_SIZE = 32

EPOCHS = 6

DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

alpha=0.2# 实例化模型并且移动到GPU

criterion = nn.CrossEntropyLoss()

# 自定义模型

#model_ft = CNN()

#model_ft.to(DEVICE)

optimizer = optim.Adam(model_ft.parameters(), lr=modellr)

cosine_schedule = optim.lr_scheduler.CosineAnnealingLR(optimizer=optimizer,T_max=2,eta_min=1e-9)

train_loss_list = []

val_loss_list = []

val_acc_list = []# 定义训练过程

def train(model, device, train_loader, optimizer, epoch):print('=====train====')model.train()sum_loss = 0total_num = len(train_loader.dataset)for batch_idx, (data, target) in enumerate(train_loader):data, target = data.to(device, non_blocking=True), target.to(device, non_blocking=True)data, labels_a, labels_b, lam = mixup_data(data, target, alpha)optimizer.zero_grad()output = model(data)loss = mixup_criterion(criterion, output, labels_a, labels_b, lam)# 普通训练loss.backward()optimizer.step()# 混合精度训练#scaler.scale(loss).backward()#scaler.step(optimizer)#scaler.update()lr = optimizer.state_dict()['param_groups'][0]['lr']print_loss = loss.data.item()sum_loss += print_lossif (batch_idx + 1) % 100 == 0:print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}\tLR:{:.9f}'.format(epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),100. * (batch_idx + 1) / len(train_loader), loss.item(),lr))ave_loss = sum_loss / len(train_loader)print('epoch:{},loss:{}'.format(epoch, ave_loss))train_loss_list.append(ave_loss)ACC=0

# 验证过程

def val(model, device, test_loader):global ACCprint('=====valid====')model.eval()test_loss = 0correct = 0total_num = len(test_loader.dataset)with torch.no_grad():for data, target in test_loader:data, target = Variable(data).to(device), Variable(target).to(device)output = model(data)loss = criterion(output, target)_, pred = torch.max(output.data, 1)correct += torch.sum(pred == target)print_loss = loss.data.item()test_loss += print_losscorrect = correct.data.item()acc = correct / total_numavgloss = test_loss / len(test_loader)val_loss_list.append(avgloss)print('\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(avgloss, correct, len(test_loader.dataset), 100 * acc))val_acc_list.append(acc)if acc > ACC:torch.save(model_ft.state_dict(), '66model_' + str(epoch) + '_' + str(round(acc, 3)) + '.pth')ACC = accprint('当前最好模型精度:{}%'.format(ACC*100))# 迭代训练

for epoch in range(1, EPOCHS + 1):train(model_ft, DEVICE, train_loader, optimizer, epoch)cosine_schedule.step()val(model_ft, DEVICE, val_loader)plt.plot(val_loss_list, label='loss')

plt.plot(val_acc_list, label='accuracy')

plt.legend()

plt.title('Valid loss and accuracy')

plt.show()

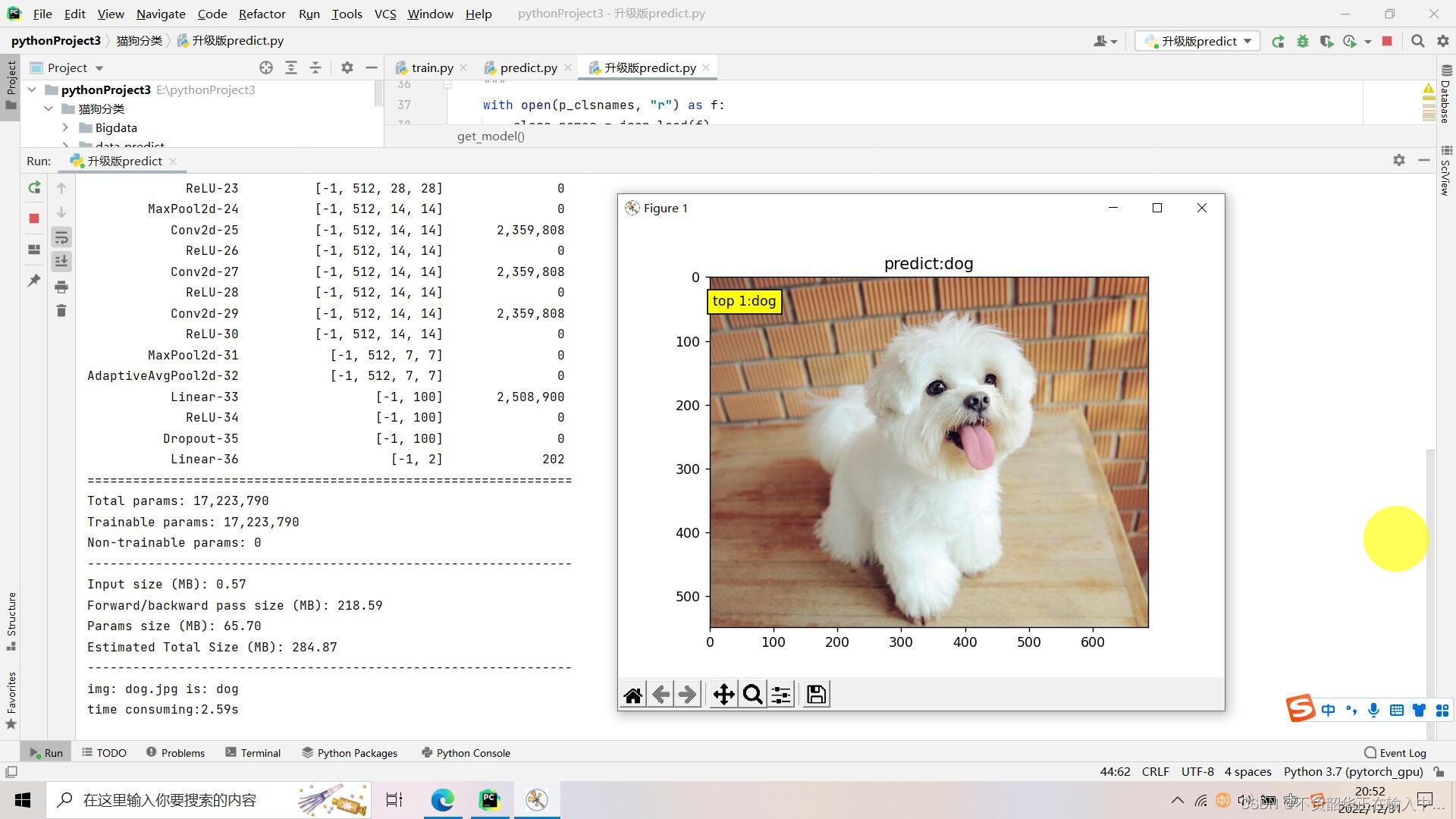

8、visual inference|可视化推理

infer_transformation = transforms.Compose([transforms.Resize(256),transforms.CenterCrop(224),transforms.ToTensor(),transforms.Normalize(mean_nums, std_nums)

])IMAGES_KEY = 'images'

MODEL_INPUT_KEY = 'images'

LABEL_OUTPUT_KEY = 'predicted_label'

MODEL_OUTPUT_KEY = 'scores'def decode_image(file_content):image = Image.open(file_content)image = image.convert('RGB')return imagedef cnn(model_path):model = CNN()model.load_state_dict(torch.load(model_path,map_location ='cuda:0'))return modeldef trans_learning(model_path):model = models.resnet50(pretrained=False)num_ftrs = model.fc.in_featuresmodel.fc = nn.Linear(num_ftrs, 2)model.load_state_dict(torch.load(model_path,map_location ='cuda:0'))model.eval()return modeldef predict(file_name):LABEL_LIST = ['cat', 'dog']# 迁移学习模型model = trans_learning('../input/weightsresnet/model_2_0.992.pth')# 自定义模型#model = cnn('./55model_9_0.837.pth')image1 = decode_image(file_name)input_img = infer_transformation(image1)input_img = torch.autograd.Variable(torch.unsqueeze(input_img, dim=0).float(), requires_grad=False)logits_list = model(input_img)[0].detach().numpy().tolist()maxlist=max(logits_list)z_exp = [exp(i-maxlist) for i in logits_list]sum_z_exp = sum(z_exp)softmax = [round(i / sum_z_exp, 3) for i in z_exp]labels_to_logits = {LABEL_LIST[i]: s for i, s in enumerate(softmax)}predict_result = {LABEL_OUTPUT_KEY: max(labels_to_logits, key=labels_to_logits.get),MODEL_OUTPUT_KEY: labels_to_logits}return predict_resultfile_name = '../input/comp6101-assignment2-group2/test/test/7.jpg'

result = predict(file_name)

import matplotlib.pyplot as pltplt.figure(figsize=(10,10))

img = decode_image(file_name)

plt.imshow(img)

plt.show()print('结果:',result)

结果: {'predicted_label': 'cat', 'scores': {'cat': 0.987, 'dog': 0.013}}

9、inference and submission|推理和提交

import csv

from tqdm import tqdmfor i in range(2):headers = ['id','category']f = open('results.csv','w', newline='')writer = csv.writer(f)writer.writerow(headers)for i in tqdm(range(5000)):file_name='../input/comp6101-assignment2-group2/test/test/'+str(i)+'.jpg'result = predict(file_name)if result['predicted_label']=='cat':value=0if result['predicted_label']=='dog':value=1writer.writerow([str(i),int(value)])

100%|██████████| 5000/5000 [52:24<00:00, 1.59it/s]

100%|██████████| 5000/5000 [51:26<00:00, 1.62it/s]

FileLink('results.csv')

results.csv

FileLink('./66model_2_0.992.pth')

| 模型 | 预训练 | lr优化器 | 损失函数 | 学习率 | 训轮次 | 验证集ACC | 线上提交ACC | 权重下载 | CSV结果 | ACC-Loss图 |

|---|---|---|---|---|---|---|---|---|---|---|

自设计CNN | - | CosineAnnealingLR | CrossEntropyLoss | 1e-3 | 10 | 0.83675 | 0.7336 | 【点击查看】 | 【点击查看】 | 【点击查看】 |

Resnet50 | 是 | CosineAnnealingLR | CrossEntropyLoss | 1e-4 | 6 | 0.992 | 0.98880 | 【点击查看】 | 【点击查看】 | 【点击查看】 |

10、Summarize|总结

本次作业,该.ipynb实现了以下内容:

- 构建了一个简单的

CNN模型,在训练ACC上上达到0.83准确率,线上提交分数在0.73; - 引入:

Resize、CenterCrop、Cutout、RandomHorizontalFlip、RandomVerticalFlip等众多增强方案; - 引入

mixup_data、mixup_criterion等方法,提升训练精度; - 内嵌

torch.cuda.amp.GradScaler混合精度训练,不影响精度下加速模型训练过程; - 引入迁移学习,多种

torchvision.models模型任意调用,并在Resnet50迁移学习上达到0.988的成绩; - 任意模型结构可视化,任意模型权重保存与加载;

- 训练和验证

ACC与loss图输出对比,模型效果简单直观; - 完整流程:

数据划分->部分数据可视化->数据预处理->模型构建->模型训练->可视化推理->批量推理;