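We have previously covered models for both image-to-anime and video-to-anime conversion, but the anime videos they produced either contained visible artifacts or simply did not look good. This post introduces Diffutoon, an open-source anime style transfer model from the ModelScope community.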

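Diffutoon takes a video as input, extracts the character outlines and the colors from it, and synthesizes an anime-style video from those outlines and colors. The model also supports editing, so you can add the effects you want to the output video.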

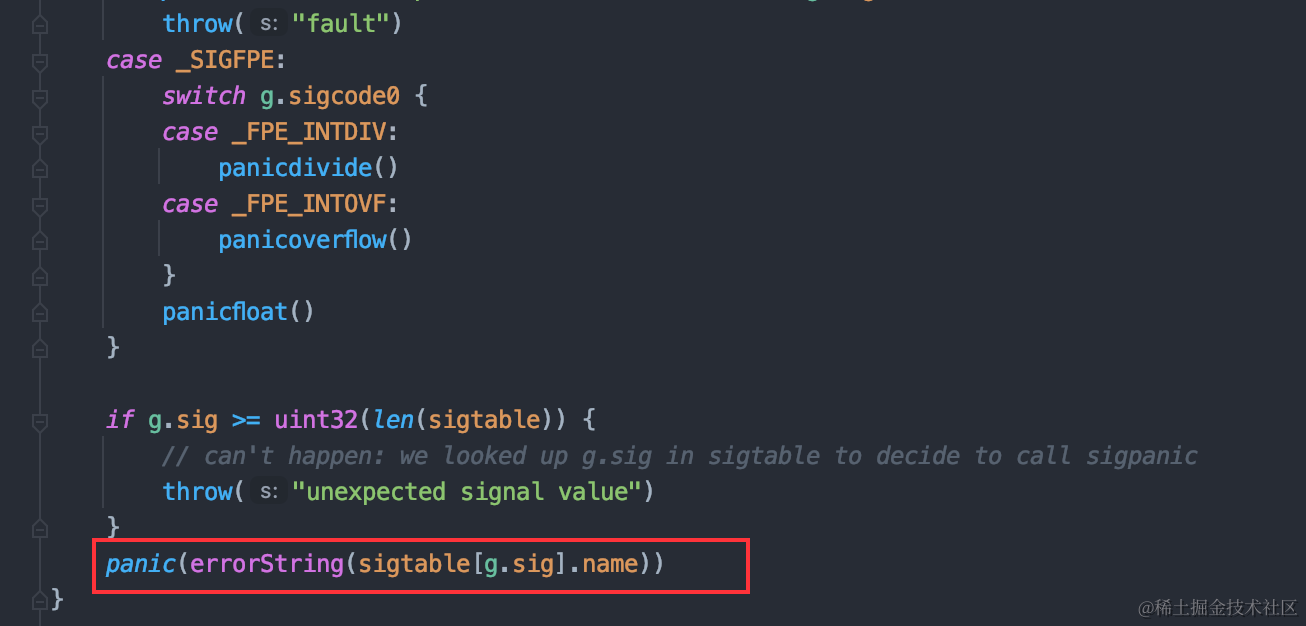

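Diffutoon uses a personalized Stable Diffusion model to stylize each frame. To improve temporal consistency, it adds several motion modules, which are based on AnimateDiff and are combined with the UNet to keep the content consistent across the output video. Character outlines are extracted with a ControlNet model, which preserves the character's pose by feeding the outline to the model as a structural input. The later rendering stage uses this outline to render the anime-style video.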

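The most important step in Diffutoon is coloring: the colors of the source video have to be combined with the rendered outlines to produce suitable output colors. A second ControlNet model handles this step. That model was trained for super-resolution, so even when the input video has a low resolution, it can process the video directly and output a high-resolution anime video.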

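Compared with other models, Diffutoon produces noticeably better results. It has been tuned in particular for frame-to-frame coherence and visual appeal, and it was trained with special attention to faces, which makes the generated characters look more vivid.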

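To deal with blurred outlines, blurred faces, and partially blurred poses, Diffutoon uses FastBlend and AnimateDiff to control the output, and adds ControlNet and editing prompts to fine-tune the result, which makes the generated video sharper and more coherent.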

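The model is open source on GitHub and can also be run directly in Google Colab. It works out of the box: there is no production environment to deploy, and you only need to upload your own video and run the notebook.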

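You can also run the code locally. Before doing so, install the required third-party libraries, set up the environment, and download the models. The commands below are written as notebook cells (note the leading ! and %cd), and the /content path is the Colab working directory, so adjust it when running elsewhere.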

!git clone https://github.com/Artiprocher/DiffSynth-Studio.git

!pip install -q transformers controlnet-aux==0.0.7 streamlit streamlit-drawable-canvas imageio imageio[ffmpeg] safetensors einops cupy-cuda12x

%cd /content/DiffSynth-Studio

import requests

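def download_model(url, file_path):
    # Download the file at url (following redirects) and write it to file_path.
    model_file = requests.get(url, allow_redirects=True)
    model_file.raise_for_status()  # stop here instead of saving an HTTP error page as a model
    with open(file_path, "wb") as f:
        f.write(model_file.content)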

download_model("https://civitai.com/api/download/models/229575", "models/stable_diffusion/aingdiffusion_v12.safetensors")

download_model("https://huggingface.co/guoyww/animatediff/resolve/main/mm_sd_v15_v2.ckpt", "models/AnimateDiff/mm_sd_v15_v2.ckpt")

download_model("https://huggingface.co/lllyasviel/ControlNet-v1-1/resolve/main/control_v11p_sd15_lineart.pth", "models/ControlNet/control_v11p_sd15_lineart.pth")

download_model("https://huggingface.co/lllyasviel/ControlNet-v1-1/resolve/main/control_v11f1e_sd15_tile.pth", "models/ControlNet/control_v11f1e_sd15_tile.pth")

download_model("https://huggingface.co/lllyasviel/ControlNet-v1-1/resolve/main/control_v11f1p_sd15_depth.pth", "models/ControlNet/control_v11f1p_sd15_depth.pth")

download_model("https://huggingface.co/lllyasviel/ControlNet-v1-1/resolve/main/control_v11p_sd15_softedge.pth", "models/ControlNet/control_v11p_sd15_softedge.pth")

download_model("https://huggingface.co/lllyasviel/Annotators/resolve/main/dpt_hybrid-midas-501f0c75.pt", "models/Annotators/dpt_hybrid-midas-501f0c75.pt")

download_model("https://huggingface.co/lllyasviel/Annotators/resolve/main/ControlNetHED.pth", "models/Annotators/ControlNetHED.pth")

download_model("https://huggingface.co/lllyasviel/Annotators/resolve/main/sk_model.pth", "models/Annotators/sk_model.pth")

download_model("https://huggingface.co/lllyasviel/Annotators/resolve/main/sk_model2.pth", "models/Annotators/sk_model2.pth")

download_model("https://civitai.com/api/download/models/25820?type=Model&format=PickleTensor&size=full&fp=fp16", "models/textual_inversion/verybadimagenegative_v1.3.pt")
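The download_model helper above reads each response fully into memory through .content before writing it, which can be uncomfortable for large checkpoints. The following is a minimal sketch of a streaming alternative, not the notebook's own code. It swaps requests for the standard library's urllib.request, and the name download_model_streaming, the chunk_size parameter, and the .part temporary suffix are all hypothetical choices made for illustration. It also assumes the host needs no extra headers or authentication.

```python
import os
import shutil
import urllib.request


def download_model_streaming(url, file_path, chunk_size=1024 * 1024):
    """Stream url to file_path in chunks (hypothetical helper, not part of DiffSynth-Studio)."""
    # Skip the download when the target file is already present.
    if os.path.exists(file_path):
        return file_path
    # Create the target directory if it does not exist yet.
    os.makedirs(os.path.dirname(file_path) or ".", exist_ok=True)
    # Write to a temporary name first so an interrupted download
    # never leaves a truncated file under the final name.
    tmp_path = file_path + ".part"
    with urllib.request.urlopen(url) as response, open(tmp_path, "wb") as f:
        shutil.copyfileobj(response, f, chunk_size)
    os.replace(tmp_path, file_path)
    return file_path


# Example call, using one of the URLs listed above (not executed here):
# download_model_streaming(
#     "https://huggingface.co/guoyww/animatediff/resolve/main/mm_sd_v15_v2.ckpt",
#     "models/AnimateDiff/mm_sd_v15_v2.ckpt",
# )
```

Because existing files are skipped, the cell can be re-run after a failure without downloading every model again.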

With the models in place, you can convert your own video to anime. The code is as follows:

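from diffsynth import SDVideoPipelineRunner

# config_stage_2_template is the template dict defined in the Diffutoon notebook (linked at the end of this post).
config = config_stage_2_template.copy()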

config["data"]["input_frames"] = {
    "video_file": "/content/input_video.mp4",
    "image_folder": None,
    "height": 1024,
    "width": 1024,
    "start_frame_id": 0,
    "end_frame_id": 30
}

config["data"]["controlnet_frames"] = [config["data"]["input_frames"], config["data"]["input_frames"]]

config["data"]["output_folder"] = "/content/toon_video"

config["data"]["fps"] = 25

runner = SDVideoPipelineRunner()

runner.run(config)
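The snippet above copies the template with .copy(), which in Python is a shallow copy: the nested dicts are still shared with the template, so assignments such as config["data"]["fps"] = 25 also change the template. The sketch below shows one way to wrap the same edits in a helper that uses copy.deepcopy instead. The build_config function and the tiny template are hypothetical stand-ins for illustration; the real templates in the notebook contain many more keys. Assuming end_frame_id is exclusive, 30 frames at 25 fps correspond to about 1.2 seconds of video.

```python
import copy

# Hypothetical stand-in for the notebook's config_stage_2_template;
# the real template has many more keys.
template = {
    "data": {
        "input_frames": None,
        "controlnet_frames": [],
        "output_folder": None,
        "fps": None,
    },
    "pipeline": {"pipeline_inputs": {"prompt": ""}},
}


def build_config(template, video_file, size, num_frames, fps, output_folder, prompt=None):
    """Return a filled-in config without modifying the template (hypothetical helper)."""
    # deepcopy duplicates the nested dicts; dict.copy() would share them.
    config = copy.deepcopy(template)
    frames = {
        "video_file": video_file,
        "image_folder": None,
        "height": size,
        "width": size,
        "start_frame_id": 0,
        "end_frame_id": num_frames,
    }
    data = config["data"]
    data["input_frames"] = frames
    # The same frames are passed twice, as in the snippet above.
    data["controlnet_frames"] = [frames, frames]
    data["output_folder"] = output_folder
    data["fps"] = fps
    if prompt is not None:
        config["pipeline"]["pipeline_inputs"]["prompt"] = prompt
    return config


config = build_config(
    template, "/content/input_video.mp4", 1024, 30, 25, "/content/toon_video"
)
# Clip length in seconds, under the exclusive end_frame_id assumption.
clip_seconds = config["data"]["input_frames"]["end_frame_id"] / config["data"]["fps"]
print(clip_seconds)
```

The resulting dict has the same shape as the one built by hand above, so it could be passed to runner.run in the same way once the real template is used.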

The model also accepts a text prompt for fine-tuning the output video; you can write your own prompt in the configuration:

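from diffsynth import SDVideoPipelineRunner

# config_stage_1_template is the template dict defined in the Diffutoon notebook (linked at the end of this post).
config_stage_1 = config_stage_1_template.copy()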

config_stage_1["data"]["input_frames"] = {
    "video_file": "/content/input_video.mp4",
    "image_folder": None,
    "height": 512,
    "width": 512,
    "start_frame_id": 0,
    "end_frame_id": 30
}

config_stage_1["data"]["controlnet_frames"] = [config_stage_1["data"]["input_frames"], config_stage_1["data"]["input_frames"]]

config_stage_1["data"]["output_folder"] = "/content/color_video"

config_stage_1["data"]["fps"] = 25

config_stage_1["pipeline"]["pipeline_inputs"]["prompt"] = "best quality, perfect anime illustration, orange clothes, night, a girl is dancing, smile, solo, black silk stockings"

runner = SDVideoPipelineRunner()

runner.run(config_stage_1)

Colab notebook: https://colab.research.google.com/github/Artiprocher/DiffSynth-Studio/blob/main/examples/Diffutoon.ipynb

Notebook source: https://github.com/modelscope/DiffSynth-Studio/blob/main/examples/Diffutoon/Diffutoon.ipynb

Project repository: https://github.com/modelscope/DiffSynth-Studio?tab=readme-ov-file

Source: Toutiao account 人工智能研究所
