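Table of Contents

- Preface
- I. LoRA Training and Resume Demo
  - 1. Introduction to the LoraConfig Configuration
  - 2. Complete Demo of LoRA Training with PEFT
  - 3. LoRA Training and LoRA Resume Training
    - 1. LoRA Training
    - 2. LoRA Resume Training
  - 4. How to Train with LoRA in PEFT
- II. Weight Loading
  - 1. Parameters
  - 2. Getting the File Paths
  - 3. Loading and Checking the Config
  - 4. Loading the Weight Files
    - 1. Loading Weights Under Different Conditions
    - 2. Loading Weights When os.path.isfile(weights_file) or os.path.isfile(safe_weights_file)
    - 3. Loading Weights When is_peft_available() and isinstance(model, PeftModel)
    - 4. Loading Weights in the else Branch
  - 5. Notes on Specific Files
- III. Loading LoRA Weights (def load_adapter(self, model_id: str, adapter_name: str, is_trainable: bool = False, **kwargs: Any))
  - 1. Full Source of load_adapter
  - 2. Handling the Adapter Config
  - 3. Locating the LoRA Weight File (filename)
  - 4. Loading the LoRA Weight File
  - 5. Loading the LoRA Weights
    - 1. Full Source (set_peft_model_state_dict)
    - 2. Getting the Config
    - 3. Getting the LoRA Weights
    - 4. Renaming the Weights into Keys the Model Can Load
    - 5. Loading the LoRA Weights into the Model
    - 6. Return Value of set_peft_model_state_dict
  - 6. Return Value of load_adapter
- IV. Loading the Optimizer, Scheduler and RNG State (_load_rng_state)
- V. Merging LoRA Weights into the Model for Inference
  - 1. LoRA Weight Merging Demo
  - 2. How the Model's Parameters Change When Merging LoRA Weights
- VI. Saving the Model with Merged LoRA Weights
  - 1. Demo: Saving the Merged LoRA Weights
  - 2. Notes on Saving the Merged Weights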

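Preface

LoRA is one of the most common ways to train models with Hugging Face. However, I have not found a good article explaining how LoRA training produces and loads its weights, or how to resume a LoRA training run. This series covers these topics in detail:

- The principle of LoRA (Low-Rank Adaptation) and related background.
- How the different training artifacts are saved: model weights, LoRA weights, config files, optimizer, scheduler, trainer state and RNG state.
- Demos of LoRA training and resuming, including an introduction to LoraConfig and to LoRA training with PEFT.
- A walk-through of the Hugging Face source that loads the LoRA weights and related state during training.
- A demo of merging the trained LoRA weights into the original model for inference.

This article covers the LoRA training demo, the LoRA resume demo, and merging the LoRA weights for inference and saving.

Tip: this article explains how LoRA training and resume training work in Hugging Face, and how to use them.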

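I. LoRA Training and Resume Demo

1. Introduction to the LoraConfig Configuration

Before training, it is worth going through the LoRA configuration. Its source code (from an earlier peft release) is as follows: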

```python
# LoraConfig as defined in an earlier peft release (excerpt)
from dataclasses import dataclass, field
from typing import List, Optional, Union

from peft import PeftConfig, PeftType


@dataclass
class LoraConfig(PeftConfig):
    """
    This is the configuration class to store the configuration of a [`LoraModel`].

    Args:
        r (`int`): Lora attention dimension.
        target_modules (`Union[List[str],str]`): The names of the modules to apply Lora to.
        lora_alpha (`int`): The alpha parameter for Lora scaling.
        lora_dropout (`float`): The dropout probability for Lora layers.
        fan_in_fan_out (`bool`): Set this to True if the layer to replace stores weight like (fan_in, fan_out).
        For example, gpt-2 uses `Conv1D` which stores weights like (fan_in, fan_out) and hence this should be set to `True`.:
        bias (`str`): Bias type for Lora. Can be 'none', 'all' or 'lora_only'
        modules_to_save (`List[str]`):List of modules apart from LoRA layers to be set as trainable
            and saved in the final checkpoint.
        layers_to_transform (`Union[List[int],int]`):
            The layer indexes to transform, if this argument is specified, it will apply the LoRA transformations on
            the layer indexes that are specified in this list. If a single integer is passed, it will apply the LoRA
            transformations on the layer at this index.
        layers_pattern (`str`):
            The layer pattern name, used only if `layers_to_transform` is different from `None` and if the layer
            pattern is not in the common layers pattern.
    """

    r: int = field(default=8, metadata={"help": "Lora attention dimension"})
    target_modules: Optional[Union[List[str], str]] = field(
        default=None,
        metadata={
            "help": "List of module names or regex expression of the module names to replace with Lora."
            "For example, ['q', 'v'] or '.*decoder.*(SelfAttention|EncDecAttention).*(q|v)$' "
        },
    )
    lora_alpha: int = field(default=8, metadata={"help": "Lora alpha"})
    lora_dropout: float = field(default=0.0, metadata={"help": "Lora dropout"})
    fan_in_fan_out: bool = field(
        default=False,
        metadata={"help": "Set this to True if the layer to replace stores weight like (fan_in, fan_out)"},
    )
    bias: str = field(default="none", metadata={"help": "Bias type for Lora. Can be 'none', 'all' or 'lora_only'"})
    modules_to_save: Optional[List[str]] = field(
        default=None,
        metadata={
            "help": "List of modules apart from LoRA layers to be set as trainable and saved in the final checkpoint. "
            "For example, in Sequence Classification or Token Classification tasks, "
            "the final layer `classifier/score` are randomly initialized and as such need to be trainable and saved."
        },
    )
    init_lora_weights: bool = field(
        default=True,
        metadata={
            "help": (
                "Whether to initialize the weights of the Lora layers with their default initialization. Don't change "
                "this setting, except if you know exactly what you're doing."
            ),
        },
    )
    layers_to_transform: Optional[Union[List, int]] = field(
        default=None,
        metadata={
            "help": "The layer indexes to transform, is this argument is specified, PEFT will transform only the layers indexes that are specified inside this list. If a single integer is passed, PEFT will transform only the layer at this index."
        },
    )
    layers_pattern: Optional[str] = field(
        default=None,
        metadata={
            "help": "The layer pattern name, used only if `layers_to_transform` is different to None and if the layer pattern is not in the common layers pattern."
        },
    )

    def __post_init__(self):
        self.peft_type = PeftType.LORA
```

The main parameters in this source are explained below.

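This code defines a class named LoraConfig. It inherits from PeftConfig and stores the configuration of a LoraModel. Its attributes include:

- r: the LoRA attention dimension, i.e. the rank of the update matrices
- target_modules: a list of module names, or a regular expression, selecting the modules LoRA is applied to
- lora_alpha: the alpha parameter used for LoRA scaling
- lora_dropout: the dropout probability of the LoRA layers
- fan_in_fan_out: set this to True if the layer being replaced stores its weight as (fan_in, fan_out)
- bias: the LoRA bias type, one of 'none', 'all' or 'lora_only'
- modules_to_save: modules other than the LoRA layers to set as trainable and save in the final checkpoint
- layers_to_transform: the indexes of the layers to transform
- layers_pattern: the layer pattern name, used only when layers_to_transform is not None and the layer pattern is not one of the common patterns

The class also defines a __post_init__ method, which sets the peft_type attribute to PeftType.LORA after initialization. In short, this class configures a LoraModel and exposes parameters that control the behavior of the LoRA model.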
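The sketch below makes r and lora_alpha concrete. It counts the trainable parameters LoRA adds to a single linear layer, where A has shape r × in_features and B has shape out_features × r. It also computes the default scaling lora_alpha / r that multiplies the update. The 768 × 768 shape is taken as BERT-base's query projection. lora_param_count and lora_scaling are hypothetical helper names, not peft APIs.

```python
def lora_param_count(in_features: int, out_features: int, r: int) -> int:
    """Parameters added by one LoRA pair: A is (r, in_features), B is (out_features, r)."""
    return r * in_features + out_features * r


def lora_scaling(lora_alpha: float, r: int) -> float:
    """Default (non-rsLoRA) factor multiplying the update B @ A."""
    return lora_alpha / r


# Assumed example: BERT-base query projection (768 -> 768) with r=8, lora_alpha=16
full = 768 * 768
added = lora_param_count(768, 768, r=8)
print(full, added, f"{added / full:.2%}")  # 589824 12288 2.08%
print(lora_scaling(lora_alpha=16, r=8))  # 2.0
```

The demo later in this article uses r=8 and lora_alpha=16. With those settings, the LoRA update is multiplied by 2, and each adapted 768 × 768 layer gains about 2% extra trainable parameters.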

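As of 2024, the LoraConfig parameters of Hugging Face's peft library are documented as follows:

- r (int): LoRA attention dimension (the "rank").
- target_modules (Optional[Union[List[str], str]]): the names of the modules to apply the adapter to.
  - If specified, only the modules with the specified names are replaced.
  - A string triggers a regex match. A list of strings triggers an exact match, or a check whether the module name ends with any of the passed strings.
  - If set to "all-linear", all linear/Conv1D modules are chosen, excluding the output layer.
  - If not specified, modules are chosen according to the model architecture. If the architecture is unknown, an error is raised, and you should specify the target modules manually.
- lora_alpha (int): the alpha parameter for LoRA scaling.
- lora_dropout (float): the dropout probability for LoRA layers.
- fan_in_fan_out (bool): set this to True if the layer to replace stores its weight as (fan_in, fan_out). For example, gpt-2 uses Conv1D, which stores weights as (fan_in, fan_out), so this should be set to True.
- bias (str): bias type for LoRA; can be "none", "all" or "lora_only". With "all" or "lora_only", the corresponding biases are updated during training. This means that even when the adapter is disabled, the model will not produce the same output as the base model without adaptation.
- use_rslora (bool): when set to True, uses Rank-Stabilized LoRA, which sets the adapter scaling factor to lora_alpha/math.sqrt(r), since it was shown to work better. Otherwise, the original default of lora_alpha/r is used.
- modules_to_save (List[str]): modules apart from the adapter layers to be set as trainable and saved in the final checkpoint.
- init_lora_weights (bool | Literal["gaussian", "pissa", "pissa_niter_[number of iters]", "loftq"]): how to initialize the weights of the adapter layers.
  - True (default): the default initialization from Microsoft's reference implementation.
  - "gaussian": Gaussian initialization scaled by the LoRA rank, for linear layers.
  - False: completely random initialization; this is discouraged.
  - "loftq": LoftQ initialization.
  - "pissa": PiSSA initialization, which converges faster than LoRA and ultimately achieves better performance. PiSSA also reduces the quantization error compared to QLoRA, giving further improvements.
  - "pissa_niter_[number of iters]": Fast-SVD-based PiSSA initialization, where [number of iters] is the number of subspace iterations used for FSVD and must be a non-negative integer. With [number of iters] set to 16, a 7B model can be initialized within seconds, with a training effect roughly equivalent to full SVD. For more information, see Principal Singular values and Singular vectors Adaptation (PiSSA).
- layers_to_transform (Union[List[int], int]): the layer indices to transform. If a list of integers is passed, the adapter is applied to the layer indices in this list. If a single integer is passed, the transformation is applied to the layer at that index.
- layers_pattern (str): the layer pattern name, used only if layers_to_transform is not None.
- rank_pattern (dict): a mapping from layer names or regular expressions to ranks that differ from the default rank specified by r.
- alpha_pattern (dict): a mapping from layer names or regular expressions to alphas that differ from the default alpha specified by lora_alpha.
- megatron_config (Optional[dict]): the TransformerConfig arguments for Megatron, used to create LoRA's parallel linear layers.
  - You can obtain it with core_transformer_config_from_args(get_args()); both functions come from Megatron.
  - These arguments are used to initialize Megatron's TransformerConfig.
  - Specify this parameter when you want to apply LoRA to Megatron's ColumnParallelLinear and RowParallelLinear layers.
- megatron_core (Optional[str]): the Megatron core module to use; defaults to "megatron.core".
- loftq_config (Optional[LoftQConfig]): the LoftQ configuration. If this is not None, LoftQ is used to quantize the backbone weights and initialize the LoRA layers; also pass init_lora_weights='loftq'. Do not pass a quantized model in this case, because LoftQ quantizes the model itself.
- use_dora (bool): enable "Weight-Decomposed Low-Rank Adaptation" (DoRA).
  - DoRA decomposes the weight update into two parts, magnitude and direction. Direction is handled by normal LoRA, while magnitude is handled by a separate learnable parameter.
  - This can improve the performance of LoRA, especially at low ranks.
  - DoRA currently supports only linear and Conv2D layers.
  - DoRA has more overhead than pure LoRA, so merging the weights for inference is recommended.
  - For more information, see https://arxiv.org/abs/2402.09353.
- layer_replication (List[Tuple[int, int]]): build a new stack of layers by stacking the original model layers according to the specified ranges. This allows expanding (or shrinking) the model without duplicating the base model weights. Each new layer gets its own LoRA adapter.

2. Complete Demo of LoRA Training with PEFT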

Here is the complete source of the PEFT LoRA training demo:

```python
import os

os.environ["CUDA_VISIBLE_DEVICES"] = "1"  # use GPU 1 only; set before CUDA is initialized

import random

import numpy as np
import torch
from torch.utils.data import Dataset
from transformers import BertTokenizer, BertForSequenceClassification, Trainer, TrainingArguments
from peft import LoraConfig, get_peft_model

# Random seeds (use the same seed everywhere)
seed = 42
random.seed(seed)
np.random.seed(seed)
torch.manual_seed(seed)

# Sample data; more samples make it easier to check that data iteration continues correctly after resuming
train_data = [
    ["Work is my source of happiness", 1], ["I am full of joy in my work", 1], ["Work makes me feel satisfied and happy", 1],
    ["I feel pain in my work", 0], ["I detest work pressure", 0],
    ["I love every workday", 1], ["Work makes me feel utterly exhausted", 0], ["I am not interested in work", 0], ["Work makes me feel tired", 0],
    ["I am full of passion and enthusiasm for my work", 1], ["I like my job", 1], ["I really like my job", 1],
    ["I feel disappointed and discouraged about work", 0], ["I hate the repetitiveness of work", 0],
    ["Work leaves me physically and mentally exhausted", 0], ["Work makes me feel fulfilled and happy", 1], ["I have no passion for work", 0], ["Work is my motivation", 1],
    ["Work makes me feel stressed", 0], ["I feel tired and negative about work", 0], ["I despise the daily repetitive tasks at work", 0], ["I am full of love for my work", 1], ["Work is my life", 1], ["I am passionate about my work", 1],
    ["Work makes me feel depressed and hopeless", 0], ["I have lost interest and motivation in work", 0],
    ["I feel tired and resistant towards work", 0], ["Work makes me feel unbearable and intolerable exhaustion", 0], ["I find work monotonous and dull", 0], ["Work leaves me physically and mentally exhausted and helpless", 0],
    ["Work makes me feel satisfied", 1], ["I enjoy the work process", 1], ["Work makes me feel excited", 1], ["I feel I have lost enthusiasm for work", 0], ["Work makes me feel utterly tired and bored", 0],
    ["Work makes me feel valuable", 1], ["I love my job", 1], ["Work makes me feel happy", 1], ["I am immersed in my work", 1], ["I am full of passion for my work", 1],
    ["Work makes me feel happy", 1], ["I do not like work", 0], ["Work makes me feel proud", 1], ["Work is my passion", 1], ["I am full of enthusiasm for my work", 1],
    ["I am tired of work life", 0], ["I do not like going to work", 0], ["I am full of zeal for my work", 1],
]
train_texts = [d[0] for d in train_data]
train_labels = [d[1] for d in train_data]  # 1 = positive, 0 = negative

# Load the pretrained BERT tokenizer
tokenizer = BertTokenizer.from_pretrained("./bert-base-uncased")

# Encode the data
train_encodings = tokenizer(train_texts, truncation=True, padding=True)


class SentimentDataset(Dataset):
    def __init__(self, encodings, labels):
        self.encodings = encodings
        self.labels = labels

    def __getitem__(self, idx):
        item = {key: torch.tensor(val[idx]) for key, val in self.encodings.items()}
        item["labels"] = torch.tensor(self.labels[idx])
        return item

    def __len__(self):
        return len(self.labels)


# Create the dataset
train_dataset = SentimentDataset(train_encodings, train_labels)

# Load the pretrained BERT model
model = BertForSequenceClassification.from_pretrained("./bert-base-uncased", num_labels=2)

lora_config = LoraConfig(
    r=8,
    lora_alpha=16,
    # target_modules=['query_key_value'],  # if omitted, peft uses its default for BERT (query, value)
    lora_dropout=0.01,
    bias="none",
    # SEQ_CLS (not CAUSAL_LM) for a sequence-classification model: peft then also trains and
    # saves the randomly initialized classifier head through modules_to_save
    task_type="SEQ_CLS",
)
model = get_peft_model(model, lora_config)  # wrap the model for LoRA training

# Training arguments
training_args = TrainingArguments(
    output_dir="./out_dirs",
    per_device_train_batch_size=3,
    per_device_eval_batch_size=3,
    num_train_epochs=20,
    logging_dir="./logs",
    report_to="none",
    gradient_accumulation_steps=2,  # gradient accumulation
    save_strategy="steps",  # checkpoint saving strategy (transformers' default is "steps")
    save_steps=2,
    # save_total_limit=10,
    learning_rate=2e-4,
    # ignore_data_skip=True,
)

# Define the Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
)

for name, param in model.named_parameters():
    print("requires_grad: {}:\t{}".format(name, param.requires_grad))

# Start training
# trainer.train()  # first run: train from scratch
trainer.train(resume_from_checkpoint=True)  # resume: needs an existing checkpoint in output_dir
```

3. LoRA Training and LoRA Resume Training

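LoRA training and LoRA resume training work just like ordinary training. The only difference is that the model is wrapped with LoRA before the Trainer is instantiated. There are two ways to start training.

1. LoRA Training

```python
trainer.train()
```

2. LoRA Resume Training

```python
trainer.train(resume_from_checkpoint=True)
```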
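When resume_from_checkpoint=True, Trainer looks in output_dir for the checkpoint-<step> folder with the highest step. transformers does this with get_last_checkpoint from transformers.trainer_utils. If no such folder exists, Trainer raises an error, which is why the very first run has to use trainer.train(). The standalone sketch below mirrors that lookup. find_last_checkpoint is a hypothetical name, not the library's implementation.

```python
import os
import re
import tempfile
from typing import Optional

# Matches folder names such as "checkpoint-10" and captures the step number.
_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def find_last_checkpoint(output_dir: str) -> Optional[str]:
    """Return the checkpoint folder with the highest step in output_dir, or None."""
    steps = {}
    for name in os.listdir(output_dir):
        match = _CHECKPOINT_RE.match(name)
        if match and os.path.isdir(os.path.join(output_dir, name)):
            steps[name] = int(match.group(1))
    if not steps:
        return None  # Trainer raises an error in this case when resume_from_checkpoint=True
    # Compare numerically so that checkpoint-100 beats checkpoint-20.
    latest = max(steps, key=steps.get)
    return os.path.join(output_dir, latest)


with tempfile.TemporaryDirectory() as out_dir:
    print(find_last_checkpoint(out_dir))  # None
    for step in (2, 20, 100):
        os.makedirs(os.path.join(out_dir, f"checkpoint-{step}"))
    print(os.path.basename(find_last_checkpoint(out_dir)))  # checkpoint-100
```

You can also pass a path instead, e.g. trainer.train(resume_from_checkpoint="./out_dirs/checkpoint-10"). This skips the lookup and resumes from that folder.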

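4. How to Train with LoRA in PEFT

First build the model and configure the peft LoRA parameters. Then call model = get_peft_model(model, lora_config), and that model will be trained with LoRA.

```python
from peft import LoraConfig, get_peft_model

lora_config = LoraConfig(
    r=8,
    lora_alpha=16,
    # target_modules=['query_key_value'],
    lora_dropout=0.01,
    bias="none",
    task_type="SEQ_CLS",  # sequence classification; CAUSAL_LM is meant for decoder language models
)
model = get_peft_model(model, lora_config)  # wrap the model for LoRA training
```

Note: the order matters. Build the model first, then wrap it with LoRA, and only then pass it to Trainer.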

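II. Weight Loading

1. Parameters

Trainer._load_from_checkpoint takes the path of a checkpoint folder and a model. If model is not passed, self.model is used:

```python
def _load_from_checkpoint(self, resume_from_checkpoint, model=None):
    if model is None:
        model = self.model
```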

2. Getting the File Paths

The file paths are built as follows. The comments show the resulting paths in this run.

```python
config_file = os.path.join(resume_from_checkpoint, CONFIG_NAME)  # ./out_dirs/checkpoint-10/config.json
adapter_weights_file = os.path.join(resume_from_checkpoint, ADAPTER_WEIGHTS_NAME)  # ./out_dirs/checkpoint-10/adapter_model.bin
adapter_safe_weights_file = os.path.join(resume_from_checkpoint, ADAPTER_SAFE_WEIGHTS_NAME)  # ./out_dirs/checkpoint-10/adapter_model.safetensors
weights_file = os.path.join(resume_from_checkpoint, WEIGHTS_NAME)  # ./out_dirs/checkpoint-10/pytorch_model.bin
weights_index_file = os.path.join(resume_from_checkpoint, WEIGHTS_INDEX_NAME)  # ./out_dirs/checkpoint-10/pytorch_model.bin.index.json
safe_weights_file = os.path.join(resume_from_checkpoint, SAFE_WEIGHTS_NAME)  # ./out_dirs/checkpoint-10/model.safetensors
safe_weights_index_file = os.path.join(resume_from_checkpoint, SAFE_WEIGHTS_INDEX_NAME)  # ./out_dirs/checkpoint-10/model.safetensors.index.json
```

The saved files are as follows:

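3. Loading and Checking the Config

If config.json exists in the checkpoint, it is read. Its transformers_version is then compared with the installed version, and a mismatch only triggers a warning. The code is self-explanatory:

```python
if os.path.isfile(config_file):
    config = PretrainedConfig.from_json_file(config_file)
    checkpoint_version = config.transformers_version
    if checkpoint_version is not None and checkpoint_version != __version__:
        logger.warning(
            f"You are resuming training from a checkpoint trained with {checkpoint_version} of "
            f"Transformers but your current version is {__version__}. This is not recommended and could "
            "yield to errors or unwanted behaviors."
        )
```

PEFT does not save config.json into the checkpoint, so this check is skipped. If the file does exist, it is handled the same way.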
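The check above only compares version strings and logs a warning; nothing is reloaded or replaced. Below is a standalone sketch of the same logic. It reads config.json with the json module instead of PretrainedConfig.from_json_file. checkpoint_version_warning is a hypothetical helper, and the version strings are made up.

```python
import json
import os
import tempfile
from typing import Optional


def checkpoint_version_warning(checkpoint_dir: str, current_version: str) -> Optional[str]:
    """Return a warning message if config.json records a different transformers version."""
    config_file = os.path.join(checkpoint_dir, "config.json")
    if not os.path.isfile(config_file):
        return None  # e.g. a PEFT checkpoint: no config.json, nothing to compare
    with open(config_file, encoding="utf-8") as f:
        checkpoint_version = json.load(f).get("transformers_version")
    if checkpoint_version is not None and checkpoint_version != current_version:
        return f"checkpoint saved with transformers {checkpoint_version}, running {current_version}"
    return None


with tempfile.TemporaryDirectory() as ckpt:
    print(checkpoint_version_warning(ckpt, "4.35.0"))  # None: no config.json
    with open(os.path.join(ckpt, "config.json"), "w", encoding="utf-8") as f:
        json.dump({"transformers_version": "4.30.0"}, f)
    print(checkpoint_version_warning(ckpt, "4.35.0"))
```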

4. Loading the Weight Files

1. Loading Weights Under Different Conditions

Which weights are loaded depends on what the checkpoint contains. There are three branches:

- Branch one handles a full model checkpoint, i.e. when pytorch_model.bin or model.safetensors exists. Its condition is if os.path.isfile(weights_file) or os.path.isfile(safe_weights_file):.
- Branch two handles PEFT. Its condition is elif is_peft_available() and isinstance(model, PeftModel):.
- Branch three, else, covers everything else (sharded checkpoints).

```python
if os.path.isfile(weights_file) or os.path.isfile(safe_weights_file):  # branch one: full state dict
    if is_sagemaker_mp_enabled():
        if os.path.isfile(os.path.join(resume_from_checkpoint, "user_content.pt")):
            smp.resume_from_checkpoint(
                path=resume_from_checkpoint, tag=WEIGHTS_NAME, partial=False, load_optimizer=False
            )
        else:
            state_dict = torch.load(weights_file, map_location="cpu")
            state_dict["_smp_is_partial"] = False
            load_result = model.load_state_dict(state_dict, strict=True)
    elif self.is_fsdp_enabled:
        load_fsdp_model(self.accelerator.state.fsdp_plugin, self.accelerator, model, resume_from_checkpoint)
    else:
        # Load on CPU; prefer safetensors when enabled and present, otherwise the .bin file
        if self.args.save_safetensors and os.path.isfile(safe_weights_file):
            state_dict = safetensors.torch.load_file(safe_weights_file, device="cpu")
        else:
            state_dict = torch.load(weights_file, map_location="cpu")
        load_result = model.load_state_dict(state_dict, False)
        self._issue_warnings_after_load(load_result)

elif is_peft_available() and isinstance(model, PeftModel):  # branch two: LoRA / PEFT
    # If train a model using PEFT & LoRA, assume that adapter have been saved properly.
    if hasattr(model, "active_adapter") and hasattr(model, "load_adapter"):
        if os.path.exists(resume_from_checkpoint):
            model.load_adapter(resume_from_checkpoint, model.active_adapter, is_trainable=True)

else:  # branch three: other (sharded) checkpoints
    load_result = load_sharded_checkpoint(
        model, resume_from_checkpoint, strict=is_sagemaker_mp_enabled(), prefer_safe=self.args.save_safetensors
    )
    if not is_sagemaker_mp_enabled():
        self._issue_warnings_after_load(load_result)
```
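A LoRA run saves adapter_model.bin or adapter_model.safetensors into the checkpoint, but not pytorch_model.bin or model.safetensors. So the first branch is skipped and the PEFT branch is taken. The simplified sketch below reproduces only the branch order and ignores the SageMaker and FSDP sub-cases. load_branch is a hypothetical name, and its return strings are labels, not library values.

```python
import os
import tempfile


def load_branch(checkpoint_dir: str, is_peft_model: bool) -> str:
    """Return which loading branch Trainer._load_from_checkpoint would take (simplified)."""
    weights_file = os.path.join(checkpoint_dir, "pytorch_model.bin")
    safe_weights_file = os.path.join(checkpoint_dir, "model.safetensors")
    if os.path.isfile(weights_file) or os.path.isfile(safe_weights_file):
        return "full state dict"  # branch one: model.load_state_dict(...)
    if is_peft_model:
        return "peft adapter"  # branch two: model.load_adapter(...)
    return "sharded checkpoint"  # branch three: load_sharded_checkpoint(...)


with tempfile.TemporaryDirectory() as ckpt:
    open(os.path.join(ckpt, "adapter_model.bin"), "wb").close()  # what a LoRA checkpoint contains
    print(load_branch(ckpt, is_peft_model=True))  # peft adapter
```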

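2. Loading Weights When os.path.isfile(weights_file) or os.path.isfile(safe_weights_file)

This branch has further sub-cases for other training setups (SageMaker model parallelism, FSDP), which I skip here. In the plain case, loading works like ordinary PyTorch: read the state dict, then call load_state_dict.

```python
# Load on CPU; prefer safetensors when enabled and present, otherwise the .bin file
if self.args.save_safetensors and os.path.isfile(safe_weights_file):
    state_dict = safetensors.torch.load_file(safe_weights_file, device="cpu")
else:
    state_dict = torch.load(weights_file, map_location="cpu")
load_result = model.load_state_dict(state_dict, False)  # strict=False
```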

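3. Loading Weights When is_peft_available() and isinstance(model, PeftModel)

This is the PEFT branch. It loads the adapter weights saved as ADAPTER_WEIGHTS_NAME = "adapter_model.bin" (or ADAPTER_SAFE_WEIGHTS_NAME = "adapter_model.safetensors"). The loading code is:

```python
model.load_adapter(resume_from_checkpoint, model.active_adapter, is_trainable=True)
```

This is the part I focus on. The model.load_adapter method gets its own section later.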

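4. Loading Weights in the else Branch

I will not go through this branch in detail. It loads a sharded checkpoint:

```python
load_result = load_sharded_checkpoint(
    model, resume_from_checkpoint, strict=is_sagemaker_mp_enabled(), prefer_safe=self.args.save_safetensors
)
```

5. Notes on Specific Files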

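adapter_model.bin: in Hugging Face, this file stores the weights of an adapter. Adapters are a lightweight way to fine-tune a pretrained model for a specific task without changing its architecture. The original weights stay frozen, and small trainable layers are added; for LoRA, these are the low-rank matrices lora_A and lora_B attached to the target modules. This keeps the extra parameters and compute small. adapter_model.bin contains only these adapter weights (plus any modules_to_save), saved in PyTorch's pickle-based format.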

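adapter_model.safetensors: the same adapter weights saved in the safetensors format. Despite the name, safetensors has nothing to do with encryption or privacy. It is a serialization format for tensors that, unlike pickle-based .bin files, cannot execute arbitrary code when loaded, and it supports fast, zero-copy loading. Which of the two files the Trainer writes depends on the save_safetensors option. When both exist, peft's load_adapter prefers the safetensors file.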
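A .safetensors file has three parts: an 8-byte little-endian header length, a JSON header (dtype, shape and byte offsets of each tensor), and the raw tensor bytes. Because of this layout, the file can be inspected without unpickling anything. The standard-library sketch below writes and reads such a file to show the layout. write_tiny_safetensors, read_safetensors_header and the tensor name are hypothetical. Real code should load these files with the safetensors package, for example safetensors.torch.load_file as the Trainer does.

```python
import json
import os
import struct
import tempfile


def write_tiny_safetensors(path: str) -> None:
    """Write a minimal file with one float32 tensor of shape [2] (illustration only)."""
    data = struct.pack("<2f", 1.0, 2.0)  # 8 bytes of raw little-endian float32
    header = {"lora_A.weight": {"dtype": "F32", "shape": [2], "data_offsets": [0, len(data)]}}
    header_bytes = json.dumps(header).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))  # u64 little-endian header size
        f.write(header_bytes)
        f.write(data)


def read_safetensors_header(path: str) -> dict:
    """Parse only the JSON header; no Python objects are unpickled or executed."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    header.pop("__metadata__", None)  # optional string-to-string metadata
    return header


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "adapter_model.safetensors")
    write_tiny_safetensors(path)
    print(read_safetensors_header(path))  # {'lora_A.weight': {'dtype': 'F32', 'shape': [2], 'data_offsets': [0, 8]}}
```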

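III. Loading LoRA Weights (def load_adapter(self, model_id: str, adapter_name: str, is_trainable: bool = False, **kwargs: Any))

Note that load_adapter is a method of PeftModel, not of Trainer; the Trainer only calls model.load_adapter(...). So whenever self is passed along in the calls below (for example set_peft_model_state_dict(self, ...)), it is the model itself.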

1. Full Source of load_adapter

```python
def load_adapter(self, model_id: str, adapter_name: str, is_trainable: bool = False, **kwargs: Any):
    from .mapping import PEFT_TYPE_TO_CONFIG_MAPPING

    hf_hub_download_kwargs, kwargs = self._split_kwargs(kwargs)

    if adapter_name not in self.peft_config:
        # load the config
        peft_config = PEFT_TYPE_TO_CONFIG_MAPPING[
            PeftConfig._get_peft_type(
                model_id,
                **hf_hub_download_kwargs,
            )
        ].from_pretrained(
            model_id,
            **hf_hub_download_kwargs,
        )
        if isinstance(peft_config, PromptLearningConfig) and is_trainable:
            raise ValueError("Cannot set a prompt learning adapter to trainable when loading pretrained adapter.")
        else:
            peft_config.inference_mode = not is_trainable
        self.add_adapter(adapter_name, peft_config)

    # load weights if any
    path = os.path.join(model_id, kwargs["subfolder"]) if kwargs.get("subfolder", None) is not None else model_id

    if os.path.exists(os.path.join(path, SAFETENSORS_WEIGHTS_NAME)):
        filename = os.path.join(path, SAFETENSORS_WEIGHTS_NAME)
        use_safetensors = True
    elif os.path.exists(os.path.join(path, WEIGHTS_NAME)):
        filename = os.path.join(path, WEIGHTS_NAME)
        use_safetensors = False
    else:
        has_remote_safetensors_file = hub_file_exists(
            model_id,
            SAFETENSORS_WEIGHTS_NAME,
            revision=hf_hub_download_kwargs.get("revision", None),
            repo_type=hf_hub_download_kwargs.get("repo_type", None),
        )
        use_safetensors = has_remote_safetensors_file

        if has_remote_safetensors_file:
            # Priority 1: load safetensors weights
            filename = hf_hub_download(
                model_id,
                SAFETENSORS_WEIGHTS_NAME,
                **hf_hub_download_kwargs,
            )
        else:
            try:
                filename = hf_hub_download(model_id, WEIGHTS_NAME, **hf_hub_download_kwargs)
            except EntryNotFoundError:
                raise ValueError(
                    f"Can't find weights for {model_id} in {model_id} or in the Hugging Face Hub. "
                    f"Please check that the file {WEIGHTS_NAME} or {SAFETENSORS_WEIGHTS_NAME} is present at {model_id}."
                )

    if use_safetensors:
        adapters_weights = safe_load_file(filename, device="cuda" if torch.cuda.is_available() else "cpu")
    else:
        adapters_weights = torch.load(
            filename, map_location=torch.device("cuda" if torch.cuda.is_available() else "cpu")
        )

    # load the weights into the model
    load_result = set_peft_model_state_dict(self, adapters_weights, adapter_name=adapter_name)
    if (
        (getattr(self, "hf_device_map", None) is not None)
        and (len(set(self.hf_device_map.values()).intersection({"cpu", "disk"})) > 0)
        and len(self.peft_config) == 1
    ):
        device_map = kwargs.get("device_map", "auto")
        max_memory = kwargs.get("max_memory", None)
        offload_dir = kwargs.get("offload_folder", None)
        offload_index = kwargs.get("offload_index", None)

        dispatch_model_kwargs = {}
        # Safety checker for previous `accelerate` versions
        # `offload_index` was introduced in https://github.com/huggingface/accelerate/pull/873/
        if "offload_index" in inspect.signature(dispatch_model).parameters:
            dispatch_model_kwargs["offload_index"] = offload_index

        no_split_module_classes = self._no_split_modules

        if device_map != "sequential":
            max_memory = get_balanced_memory(
                self,
                max_memory=max_memory,
                no_split_module_classes=no_split_module_classes,
                low_zero=(device_map == "balanced_low_0"),
            )
        if isinstance(device_map, str):
            device_map = infer_auto_device_map(
                self, max_memory=max_memory, no_split_module_classes=no_split_module_classes
            )
        dispatch_model(
            self,
            device_map=device_map,
            offload_dir=offload_dir,
            **dispatch_model_kwargs,
        )
        hook = AlignDevicesHook(io_same_device=True)
        if isinstance(self.peft_config[adapter_name], PromptLearningConfig):
            remove_hook_from_submodules(self.prompt_encoder)
        add_hook_to_module(self.get_base_model(), hook)

    # Set model in evaluation mode to deactivate Dropout modules by default
    if not is_trainable:
        self.eval()
    return load_result
```

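2. Handling the Adapter Config

If adapter_name is not yet a key of self.peft_config, the adapter config is loaded from model_id and registered. When the Trainer resumes, adapter_name is model.active_adapter (usually "default"), which is already registered, so this branch is skipped.

```python
if adapter_name not in self.peft_config:
    # load the config
    peft_config = PEFT_TYPE_TO_CONFIG_MAPPING[
        PeftConfig._get_peft_type(
            model_id,
            **hf_hub_download_kwargs,
        )
    ].from_pretrained(
        model_id,
        **hf_hub_download_kwargs,
    )
    if isinstance(peft_config, PromptLearningConfig) and is_trainable:
        raise ValueError("Cannot set a prompt learning adapter to trainable when loading pretrained adapter.")
    else:
        peft_config.inference_mode = not is_trainable
    self.add_adapter(adapter_name, peft_config)
```

Its parameters are shown in the figure below: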

If the adapter does not exist yet, it is added with add_adapter. Here is its code, together with set_additional_trainable_modules:

```python
def add_adapter(self, adapter_name: str, peft_config: PeftConfig):
    if peft_config.peft_type != self.peft_type:
        raise ValueError(
            f"Cannot combine adapters with different peft types. "
            f"Found {self.peft_type} and {peft_config.peft_type}."
        )
    self.peft_config[adapter_name] = peft_config
    if isinstance(peft_config, PromptLearningConfig):
        if hasattr(self.config, "to_dict"):
            dict_config = self.config.to_dict()
        else:
            dict_config = self.config

        peft_config = _prepare_prompt_learning_config(peft_config, dict_config)
        self._setup_prompt_encoder(adapter_name)
    else:
        self.base_model.add_adapter(adapter_name, peft_config)

    self.set_additional_trainable_modules(peft_config, adapter_name)


def set_additional_trainable_modules(self, peft_config, adapter_name):
    if getattr(peft_config, "modules_to_save", None) is not None:
        if self.modules_to_save is None:
            self.modules_to_save = set(peft_config.modules_to_save)
        else:
            self.modules_to_save.update(peft_config.modules_to_save)
        _set_trainable(self, adapter_name)
```

3. Locating the LoRA Weight File (filename)

这里,我们将获取权重文件,也就是得到adapter_model.safetensors或adapter_model.bin的文件路径,并给出到底是安全保存lora权重还是正常保存lora权重。当然,我也说明下2个变量,SAFETENSORS_WEIGHTS_NAME=adapter_model.safetensors,WEIGHTS_NAME=adapter_model.bin。其代码如下:

# load weights if any

path = os.path.join(model_id, kwargs["subfolder"]) if kwargs.get("subfolder", None) is not None else model_idif os.path.exists(os.path.join(path, SAFETENSORS_WEIGHTS_NAME)):filename = os.path.join(path, SAFETENSORS_WEIGHTS_NAME)use_safetensors = True

elif os.path.exists(os.path.join(path, WEIGHTS_NAME)):filename = os.path.join(path, WEIGHTS_NAME)use_safetensors = False

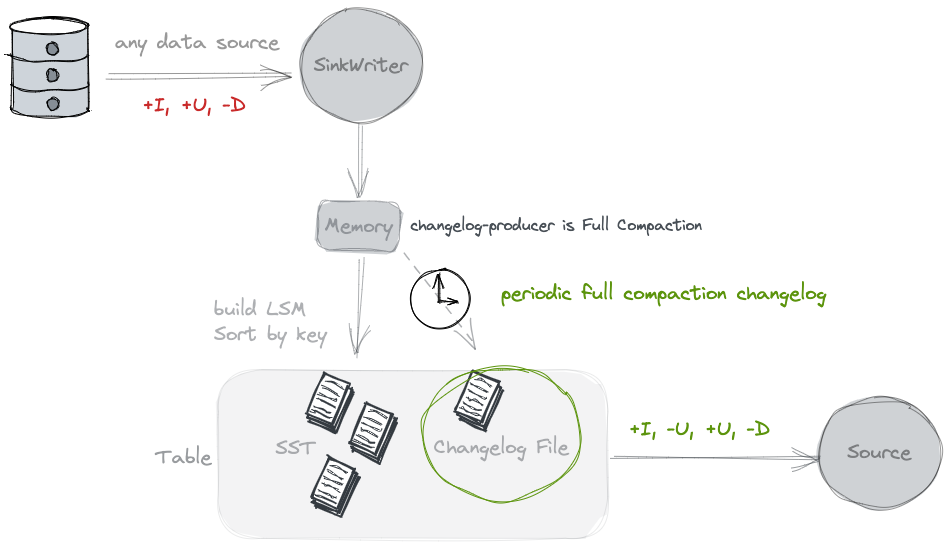

else:has_remote_safetensors_file = hub_file_exists(model_id,SAFETENSORS_WEIGHTS_NAME,revision=hf_hub_download_kwargs.get("revision", None),repo_type=hf_hub_download_kwargs.get("repo_type", None),)use_safetensors = has_remote_safetensors_fileif has_remote_safetensors_file:# Priority 1: load safetensors weightsfilename = hf_hub_download(model_id,SAFETENSORS_WEIGHTS_NAME,**hf_hub_download_kwargs,)else:try:filename = hf_hub_download(model_id, WEIGHTS_NAME, **hf_hub_download_kwargs)except EntryNotFoundError:raise ValueError(f"Can't find weights for {model_id} in {model_id} or in the Hugging Face Hub. "f"Please check that the file {WEIGHTS_NAME} or {SAFETENSORS_WEIGHTS_NAME} is present at {model_id}.")4、LoRA权重文件加载

This step loads the LoRA weight dictionary into the variable adapters_weights. Only one of the two files is loaded, because a given checkpoint is saved either in safetensors format or as a regular .bin file.

```python
if use_safetensors:
    adapters_weights = safe_load_file(filename, device="cuda" if torch.cuda.is_available() else "cpu")
else:
    adapters_weights = torch.load(
        filename, map_location=torch.device("cuda" if torch.cuda.is_available() else "cpu")
    )
```

In this example, filename is ./out_dirs/checkpoint-122/adapter_model.bin.
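The local part of the lookup above boils down to "safetensors first, then .bin". Below is a minimal sketch with a hypothetical pick_adapter_file helper; the Hub download is not modeled. It also shows why this run ends up with use_safetensors=False: the checkpoint only contains adapter_model.bin.

```python
import os
import tempfile
from typing import Optional, Tuple

# peft's file names for adapter weights
SAFETENSORS_WEIGHTS_NAME = "adapter_model.safetensors"
WEIGHTS_NAME = "adapter_model.bin"


def pick_adapter_file(path: str) -> Optional[Tuple[str, bool]]:
    """Return (filename, use_safetensors) for a local adapter folder, or None if neither file exists."""
    safe_file = os.path.join(path, SAFETENSORS_WEIGHTS_NAME)
    if os.path.exists(safe_file):
        return safe_file, True  # priority 1: safetensors
    bin_file = os.path.join(path, WEIGHTS_NAME)
    if os.path.exists(bin_file):
        return bin_file, False  # priority 2: pickle-based .bin
    return None  # load_adapter would now try the Hugging Face Hub; not modeled here


with tempfile.TemporaryDirectory() as ckpt:
    open(os.path.join(ckpt, WEIGHTS_NAME), "wb").close()
    print(pick_adapter_file(ckpt)[1])  # False: only the .bin file exists
```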

5. Loading the LoRA Weights

```python
# load the weights into the model
load_result = set_peft_model_state_dict(self, adapters_weights, adapter_name=adapter_name)
```

1. Full Source (set_peft_model_state_dict)

This function loads the weights into the model. Its full source is:

```python
def set_peft_model_state_dict(model, peft_model_state_dict, adapter_name="default"):
    """
    Set the state dict of the Peft model.

    Args:
        model ([`PeftModel`]): The Peft model.
        peft_model_state_dict (`dict`): The state dict of the Peft model.
    """
    config = model.peft_config[adapter_name]
    state_dict = {}
    if model.modules_to_save is not None:
        for key, value in peft_model_state_dict.items():
            if any(module_name in key for module_name in model.modules_to_save):
                for module_name in model.modules_to_save:
                    if module_name in key:
                        key = key.replace(module_name, f"{module_name}.modules_to_save.{adapter_name}")
                        break
            state_dict[key] = value
    else:
        state_dict = peft_model_state_dict

    if config.peft_type in (PeftType.LORA, PeftType.ADALORA, PeftType.IA3):
        peft_model_state_dict = {}
        parameter_prefix = "ia3_" if config.peft_type == PeftType.IA3 else "lora_"
        for k, v in state_dict.items():
            if parameter_prefix in k:
                suffix = k.split(parameter_prefix)[1]
                if "." in suffix:
                    suffix_to_replace = ".".join(suffix.split(".")[1:])
                    k = k.replace(suffix_to_replace, f"{adapter_name}.{suffix_to_replace}")
                else:
                    k = f"{k}.{adapter_name}"
                peft_model_state_dict[k] = v
            else:
                peft_model_state_dict[k] = v
        if config.peft_type == PeftType.ADALORA:
            rank_pattern = config.rank_pattern
            if rank_pattern is not None:
                model.resize_modules_by_rank_pattern(rank_pattern, adapter_name)
    elif isinstance(config, PromptLearningConfig) or config.peft_type == PeftType.ADAPTION_PROMPT:
        peft_model_state_dict = state_dict
    else:
        raise NotImplementedError

    load_result = model.load_state_dict(peft_model_state_dict, strict=False)
    if isinstance(config, PromptLearningConfig):
        model.prompt_encoder[adapter_name].embedding.load_state_dict(
            {"weight": peft_model_state_dict["prompt_embeddings"]}, strict=True
        )
    return load_result
```

2. Getting the Config

The adapter's config is read into the variable config:

```python
config = model.peft_config[adapter_name]
```

In this example, its value is shown in the figure below:

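3. Getting the LoRA Weights

The LoRA weights are collected into the variable state_dict. If the model has modules_to_save (for example a classification head), their keys are first renamed to point at the per-adapter copies, module_name.modules_to_save.<adapter_name>:

```python
state_dict = {}
if model.modules_to_save is not None:
    for key, value in peft_model_state_dict.items():
        if any(module_name in key for module_name in model.modules_to_save):
            for module_name in model.modules_to_save:
                if module_name in key:
                    key = key.replace(module_name, f"{module_name}.modules_to_save.{adapter_name}")
                    break
        state_dict[key] = value
else:
    state_dict = peft_model_state_dict
```

The value of state_dict is shown in the following figure.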
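The renaming for modules_to_save can be reproduced on its own. The sketch below assumes a SEQ_CLS setup in which modules_to_save contains "classifier". The keys and values are illustrative, not taken from this run, and rename_modules_to_save is a hypothetical helper. It drops the original's outer any(...) pre-check, which does not change the result.

```python
from typing import Any, Dict, Iterable


def rename_modules_to_save(
    state_dict: Dict[str, Any], modules_to_save: Iterable[str], adapter_name: str = "default"
) -> Dict[str, Any]:
    """Redirect keys of modules_to_save to their per-adapter copies, as in the loop above."""
    modules = list(modules_to_save)
    renamed = {}
    for key, value in state_dict.items():
        for module_name in modules:
            if module_name in key:
                key = key.replace(module_name, f"{module_name}.modules_to_save.{adapter_name}")
                break  # rename at most once, like the original code
        renamed[key] = value
    return renamed


# Illustrative keys (assumed SEQ_CLS setup where "classifier" is in modules_to_save)
saved = {
    "base_model.model.classifier.weight": "W_cls",
    "base_model.model.bert.encoder.layer.0.attention.self.query.lora_A.weight": "A0",
}
for key in rename_modules_to_save(saved, {"classifier"}):
    print(key)
```

Only the classifier key changes, to base_model.model.classifier.modules_to_save.default.weight. The LoRA key is left for the next step.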

4. Renaming the Weights into Keys the Model Can Load

When config.peft_type is one of (PeftType.LORA, PeftType.ADALORA, PeftType.IA3), the weights in state_dict are converted into peft_model_state_dict, which the model can load. The main change is to the key names. The adapter name is inserted after lora_A/lora_B (or the ia3_ parameter) so that each key matches the model's parameter names. The code is:

```python
if config.peft_type in (PeftType.LORA, PeftType.ADALORA, PeftType.IA3):
    peft_model_state_dict = {}
    parameter_prefix = "ia3_" if config.peft_type == PeftType.IA3 else "lora_"
    for k, v in state_dict.items():
        if parameter_prefix in k:
            suffix = k.split(parameter_prefix)[1]
            if "." in suffix:
                suffix_to_replace = ".".join(suffix.split(".")[1:])
                k = k.replace(suffix_to_replace, f"{adapter_name}.{suffix_to_replace}")
            else:
                k = f"{k}.{adapter_name}"
            peft_model_state_dict[k] = v
        else:
            peft_model_state_dict[k] = v
    if config.peft_type == PeftType.ADALORA:
        rank_pattern = config.rank_pattern
        if rank_pattern is not None:
            model.resize_modules_by_rank_pattern(rank_pattern, adapter_name)
elif isinstance(config, PromptLearningConfig) or config.peft_type == PeftType.ADAPTION_PROMPT:
    peft_model_state_dict = state_dict
else:
    raise NotImplementedError
```
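In the saved file, LoRA keys do not contain the adapter name (for example ...lora_A.weight). Inside the model, lora_A and lora_B hold one sub-module per adapter, so the parameter is named ...lora_A.default.weight. The sketch below reproduces the renaming on illustrative keys; insert_adapter_name is a hypothetical helper. Note that str.replace rewrites every occurrence of the suffix in the key, exactly as the original code does.

```python
from typing import Any, Dict


def insert_adapter_name(
    state_dict: Dict[str, Any], adapter_name: str = "default", prefix: str = "lora_"
) -> Dict[str, Any]:
    """Insert the adapter name into LoRA keys, following set_peft_model_state_dict."""
    renamed = {}
    for k, v in state_dict.items():
        if prefix in k:
            suffix = k.split(prefix)[1]  # e.g. "A.weight"
            if "." in suffix:
                suffix_to_replace = ".".join(suffix.split(".")[1:])  # e.g. "weight"
                k = k.replace(suffix_to_replace, f"{adapter_name}.{suffix_to_replace}")
            else:
                k = f"{k}.{adapter_name}"  # keys such as "...lora_embedding_A" have no trailing part
        renamed[k] = v
    return renamed


saved = {"base_model.model.bert.encoder.layer.0.attention.self.query.lora_A.weight": "A0"}
print(insert_adapter_name(saved))
```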

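5. Loading the LoRA Weights into the Model

This single line loads the weights into the model, using ordinary PyTorch load_state_dict. strict=False is required because the adapter state dict contains only the LoRA parameters (plus modules_to_save), not the frozen base-model weights.

```python
load_result = model.load_state_dict(peft_model_state_dict, strict=False)
```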
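With strict=False, PyTorch's load_state_dict does not raise on mismatched keys. Instead it returns a named tuple with missing_keys and unexpected_keys, which is the load_result passed back up the call chain. The adapter file holds only LoRA (and modules_to_save) weights, so every frozen base weight appears in missing_keys; this is expected here. Below is a pure-Python sketch of that bookkeeping. compare_keys and LoadResult are hypothetical names, and the keys are illustrative.

```python
from typing import Iterable, List, NamedTuple


class LoadResult(NamedTuple):
    missing_keys: List[str]
    unexpected_keys: List[str]


def compare_keys(model_keys: Iterable[str], loaded_keys: Iterable[str]) -> LoadResult:
    """Report key mismatches like load_state_dict(strict=False) instead of raising."""
    model_set, loaded_set = set(model_keys), set(loaded_keys)
    return LoadResult(
        missing_keys=sorted(model_set - loaded_set),  # in the model, absent from the file
        unexpected_keys=sorted(loaded_set - model_set),  # in the file, absent from the model
    )


model_keys = ["query.weight", "query.lora_A.default.weight", "query.lora_B.default.weight"]
adapter_keys = ["query.lora_A.default.weight", "query.lora_B.default.weight"]
print(compare_keys(model_keys, adapter_keys))
```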

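For prompt-learning methods, the prompt embeddings are loaded separately:

```python
if isinstance(config, PromptLearningConfig):
    model.prompt_encoder[adapter_name].embedding.load_state_dict(
        {"weight": peft_model_state_dict["prompt_embeddings"]}, strict=True
    )
```

This branch is not used in this article.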

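6. Return Value of set_peft_model_state_dict

```python
return load_result
```

6. Return Value of load_adapter

I will not go through the rest of load_adapter, which only dispatches the model across devices when parts of it are offloaded to CPU or disk. load_adapter returns the value from set_peft_model_state_dict, described in "6. Return Value of set_peft_model_state_dict":

```python
return load_result
```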

IV. Loading the Optimizer, Scheduler and RNG State (_load_rng_state)

See this blog post: https://blog.csdn.net/weixin_38252409/article/details/138808649

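V. Merging LoRA Weights into the Model for Inference

1. LoRA Weight Merging Demo

Here is the complete source for merging the LoRA weights into the model:

```python
# **************************************** Inference ****************************************
from transformers import BertForSequenceClassification
from peft import PeftModel

model_path = "/extend/disk/out_dirs/checkpoint-160"  # LoRA checkpoint saved during training
model_base = "/huggingface_demo/bert-base-uncased"  # original model downloaded from Hugging Face

# Load the pretrained BERT model
model = BertForSequenceClassification.from_pretrained(model_base, num_labels=2)

# print('Loading LoRA weights...')
model = PeftModel.from_pretrained(model, model_path)

# print('Merging LoRA weights...')
model = model.merge_and_unload()
print('ok')
```

The figure below shows the contents of model_base (downloaded from Hugging Face) and model_path (saved during LoRA training):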

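2. How the Model's Parameters Change When Merging LoRA Weights

Next, I describe how the model's parameters change at each step. This is important.

First, load the original BERT with model = BertForSequenceClassification.from_pretrained(model_base, num_labels=2). The model contains only BERT's own parameters, all with requires_grad=True.

Next, call model = PeftModel.from_pretrained(model, model_path). The model now also contains the LoRA parameters, and every parameter has requires_grad=False, because PeftModel.from_pretrained loads the adapter for inference by default (is_trainable=False). At this point the LoRA weights are loaded.

Finally, call model = model.merge_and_unload(). The LoRA parameters are gone and only the original parameter names remain, now holding the merged weights; requires_grad is still False. At this point the LoRA weights have been merged.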
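merge_and_unload folds every LoRA pair into its base weight as W' = W + (lora_alpha / r) · B·A. This assumes the default scaling and fan_in_fan_out=False. That is why the lora_ parameters disappear while the outputs stay the same. The pure-Python sketch below checks this on made-up 2 × 2 numbers. merge_lora and the helpers are hypothetical names, and dropout is ignored because it is inactive at inference.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product x @ y."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, lora_alpha: float, r: int) -> Matrix:
    """W' = W + (lora_alpha / r) * B @ A, with W: (out, in), A: (r, in), B: (out, r)."""
    scaling = lora_alpha / r
    delta = matmul(b, a)
    return [[wij + scaling * dij for wij, dij in zip(w_row, d_row)] for w_row, d_row in zip(w, delta)]


# Made-up numbers: a 2x2 layer with a rank-1 adapter
lora_alpha, r = 2, 1
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 2.0]]
B = [[0.5], [1.0]]
x = [[1.0, 1.0]]

# Unmerged (what PeftModel computes): x @ W^T + scaling * (x @ A^T) @ B^T
base = matmul(x, transpose(W))
lora = matmul(matmul(x, transpose(A)), transpose(B))
unmerged = [[bv + (lora_alpha / r) * lv for bv, lv in zip(base[0], lora[0])]]

# Merged (what merge_and_unload leaves behind): x @ W'^T
merged = matmul(x, transpose(merge_lora(W, A, B, lora_alpha, r)))
print(unmerged, merged)  # [[4.0, 7.0]] [[4.0, 7.0]]
```

Because the merged weight gives the same outputs, the model after merge_and_unload no longer needs peft at inference time.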

VI. Saving the Model with Merged LoRA Weights

1. Demo: Saving the Merged LoRA Weights

The following code saves the merged weights directly:

```python
from transformers import BertForSequenceClassification, Trainer, TrainingArguments
from peft import PeftModel

# Load the pretrained BERT model
model_base = "./bert-base-uncased"
model_path = "./out_dirs/checkpoint-160"
save_path = "./out_dirs/bb"
model = BertForSequenceClassification.from_pretrained(model_base, num_labels=2)

# print('Loading LoRA weights...')
model = PeftModel.from_pretrained(model, model_path)

# print('Merging LoRA weights...')
model = model.merge_and_unload()

training_args = TrainingArguments(
    output_dir="./out_dirs",
    per_device_train_batch_size=3,
    per_device_eval_batch_size=3,
    num_train_epochs=20,
    logging_dir="./out_dirs",
    log_level="info",
    logging_strategy="steps",
    logging_steps=4,
    report_to="none",
    gradient_accumulation_steps=2,  # gradient accumulation
    save_strategy="steps",  # checkpoint saving strategy (transformers' default is "steps")
    save_steps=2,
    save_total_limit=10,
    learning_rate=2e-4,
    # ignore_data_skip=True,
)

# Define the Trainer (used here only as a wrapper for saving)
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=None,
)
trainer.model.save_pretrained(save_path)
```

2. Notes on Saving the Merged Weights

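In short, after merging, call Hugging Face's save_pretrained method. There are two ways to do it.

Method 1:

```python
# Define the Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=None,
)
trainer.model.save_pretrained(save_path)
```

Method 2, which skips the Trainer entirely:

```python
model = PeftModel.from_pretrained(model, model_path)
model = model.merge_and_unload()
model.save_pretrained(save_path)
```

The saved weight files are as follows:

These files can directly replace the original weights. The saved folder is an ordinary transformers checkpoint that loads with BertForSequenceClassification.from_pretrained(save_path), without peft. The tokenizer is not saved, so it still comes from the base model folder.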
