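To make the NF4 algorithm easier to follow, this post provides PyTorch versions of the quantization and dequantization functions that are numerically aligned with the CUDA NF4 kernels. They were tested on the llama-3.1-8b model. Their precision closely matches the CUDA operators, and only dequantization shows a small error. In an end-to-end test, the model's first 64 output tokens were identical to those produced with the CUDA implementation.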

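On CUDA, the dQuantizeNF4 function compares the float input x against the midpoints between adjacent entries of the float NF4 table. This yields the index of the table element closest to x.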

```cuda
__device__ unsigned char dQuantizeNF4(float x)
{
  // the values for this tree was generated by test_normal_map_tree
  // in the file tests/test_functional.py
  if(x > 0.03979014977812767f)
    if(x > 0.3893125355243683f) // 1
      if(x > 0.6427869200706482f) // 11
        if(x > 0.8614784181118011f) // 111
          return 0b1111;
        else
          return 0b1110;
      else
        if(x > 0.5016634166240692f) // 110
          return 0b1101;
        else
          return 0b1100;
    else
      if(x > 0.2035212516784668f) // 10
        if(x > 0.2920137718319893f) // 101
          return 0b1011;
        else
          return 0b1010;
      else
        if(x > 0.1202552504837513f) // 100
          return 0b1001;
        else
          return 0b1000;
  else
    if(x > -0.33967943489551544f) // 0
      if(x > -0.13791173323988914f) // 01
        if(x > -0.045525018125772476f) // 011
          return 0b0111;
        else
          return 0b0110;
      else
        if(x > -0.23460740596055984f) // 010
          return 0b0101;
        else
          return 0b0100;
    else
      if(x > -0.6106329262256622f) // 00
        if(x > -0.4599952697753906f) // 001
          return 0b0011;
        else
          return 0b0010;
      else
        if(x > -0.8480964004993439f) // 000
          return 0b0001;
        else
          return 0b0000;
}
```
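Each threshold in this tree is the midpoint between two neighbouring entries of the NF4 table. For example, (0.0 + 0.07958029955625534) / 2 = 0.03979014977812767. Walking the tree therefore amounts to counting how many midpoints lie strictly below x. The minimal CPU sketch below checks this against the BNB_MAP table used later in this post, assuming only PyTorch.

The helper names quantize_by_midpoints and quantize_by_argmin are hypothetical. torch.bucketize stands in for the hand-written tree. The argmin form mirrors the nearest-entry search in the PyTorch implementation.

```python
import torch

# NF4 table values, copied from the BNB_MAP list in this post
BNB_MAP = [
    -1.0, -0.6961928009986877, -0.5250730514526367, -0.39491748809814453,
    -0.28444138169288635, -0.18477343022823334, -0.09105003625154495, 0.0,
    0.07958029955625534, 0.16093020141124725, 0.24611230194568634, 0.33791524171829224,
    0.44070982933044434, 0.5626170039176941, 0.7229568362236023, 1.0,
]

table = torch.tensor(BNB_MAP, dtype=torch.float32)
# 15 midpoints between neighbouring entries: the thresholds used by the tree
midpoints = (table[:-1] + table[1:]) / 2


def quantize_by_midpoints(x: torch.Tensor) -> torch.Tensor:
    # right=False returns i with midpoints[i-1] < x <= midpoints[i],
    # i.e. the number of midpoints m with x > m (the tree's strict ">")
    return torch.bucketize(x.float(), midpoints, right=False)


def quantize_by_argmin(x: torch.Tensor) -> torch.Tensor:
    # Nearest table entry by brute force, as in the PyTorch implementation
    return torch.argmin((x.float().unsqueeze(-1) - table).abs(), dim=-1)


x = torch.tensor([-1.0, 0.0, 0.05, 0.9, 1.0])
print(quantize_by_midpoints(x).tolist())  # [0, 7, 8, 15, 15]
print(quantize_by_argmin(x).tolist())     # [0, 7, 8, 15, 15]
```

Both versions send an exact tie at a midpoint to the lower index. The tree uses a strict x > threshold, and torch.argmin returns the first minimal index. However, float32 rounding of |x - table| can still disagree with the tree for inputs within rounding distance of a threshold.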

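Accordingly, the PyTorch implementation must also use float32 for both MAPPING and absmax. Tested on real llama3 weights:

- Quantization: the PyTorch implementation matches the CUDA implementation exactly, with no numerical error;

- Dequantization: the mean absolute error is about 1.5e-6, which does not affect model output (tested on llama-3.1-8b only).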
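The effect of dtype on quantization shows up with the table alone. The CPU-only sketch below uses a hypothetical nearest_index helper and rounds the NF4 table to fp16 and back.

After rounding, the entry 0.07958029955625534 becomes 0.07958984375. That moves the boundary between indices 7 and 8 upward, so a value just above the float32 midpoint (about 0.039790) maps to a different code. The same consideration plausibly applies to the normalized input x / absmax, which the PyTorch code in this post computes in float32 via absmax.float().

```python
import torch

# NF4 table values, copied from the BNB_MAP list in this post
BNB_MAP = [
    -1.0, -0.6961928009986877, -0.5250730514526367, -0.39491748809814453,
    -0.28444138169288635, -0.18477343022823334, -0.09105003625154495, 0.0,
    0.07958029955625534, 0.16093020141124725, 0.24611230194568634, 0.33791524171829224,
    0.44070982933044434, 0.5626170039176941, 0.7229568362236023, 1.0,
]


def nearest_index(x: torch.Tensor, table: torch.Tensor) -> torch.Tensor:
    """Index of the closest table entry, with the distance computed in float32."""
    return torch.argmin((x.float().unsqueeze(-1) - table.float()).abs(), dim=-1)


table_fp32 = torch.tensor(BNB_MAP, dtype=torch.float32)
# The same table after a round trip through fp16: some entries shift slightly
table_fp16 = table_fp32.half().float()

# Slightly above the float32 midpoint between 0.0 and 0.0796 (about 0.039790)
x = torch.tensor([0.039792])
print(nearest_index(x, table_fp32).item())  # 8
print(nearest_index(x, table_fp16).item())  # 7
```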

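```python
import torch

# NF4 lookup table used by bitsandbytes (16 values in [-1, 1])
BNB_MAP = [
    -1.0, -0.6961928009986877, -0.5250730514526367, -0.39491748809814453,
    -0.28444138169288635, -0.18477343022823334, -0.09105003625154495, 0.0,
    0.07958029955625534, 0.16093020141124725, 0.24611230194568634, 0.33791524171829224,
    0.44070982933044434, 0.5626170039176941, 0.7229568362236023, 1.0,
]

# Keep the table in float32 to match the float comparisons in the CUDA kernel
MAPPING = torch.tensor(BNB_MAP, device="cuda", dtype=torch.float32).view(1, -1)


def py_quantize_nf4_fp16(A, blocksize=64):
    shape = A.shape
    # Per-block absolute maximum (same dtype as A)
    absmax = A.view(-1, blocksize).abs().max(dim=1, keepdim=True).values
    # Normalize each block to [-1, 1] in float32
    a = A.view(-1, blocksize) / absmax.float()
    # Index of the nearest NF4 table entry for every element
    diff = torch.abs(a.unsqueeze(-1) - MAPPING)
    out = torch.argmin(diff, dim=-1)
    # Pack two 4-bit indices per byte, first element in the high nibble
    out = out.reshape(-1, 2)
    out = (out[:, 0] * 16 + out[:, 1]).to(torch.uint8)
    return out, absmax, shape


def py_dequantize_nf4_fp16(A, absmax, shape, blocksize=64):
    A = A.view(-1)
    # Unpack high and low nibbles back into table indices
    A = torch.stack([A // 16, A % 16], dim=1).to(torch.int32)
    # Look up table values and rescale each block by its absmax
    # (the result is float32 because MAPPING is float32)
    out = MAPPING.reshape(-1)[A]
    out = out.view(-1, blocksize) * absmax.reshape(-1, 1)
    out = out.reshape(*shape)
    return out
```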
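The packing step stores two 4-bit table indices in each uint8, with the first element of each pair in the high nibble (out[:, 0] * 16 + out[:, 1]). Dequantization undoes this with // 16 and % 16. The CPU sketch below isolates just that byte layout. pack_nibbles and unpack_nibbles are hypothetical names, not bitsandbytes functions.

```python
import torch


def pack_nibbles(idx: torch.Tensor) -> torch.Tensor:
    """Pack pairs of 4-bit indices (0..15) into uint8, first index in the high nibble."""
    pairs = idx.reshape(-1, 2).to(torch.int64)
    return (pairs[:, 0] * 16 + pairs[:, 1]).to(torch.uint8)


def unpack_nibbles(packed: torch.Tensor) -> torch.Tensor:
    """Inverse of pack_nibbles: split each byte into (high, low) indices."""
    packed = packed.view(-1)
    return torch.stack([packed // 16, packed % 16], dim=1).reshape(-1).to(torch.int64)


idx = torch.tensor([15, 0, 7, 8, 1, 14])
packed = pack_nibbles(idx)
print(packed.tolist())  # [240, 120, 30]
print(torch.equal(unpack_nibbles(packed), idx))  # True
```

Reversing the nibble order on only one side would still round-trip within PyTorch. It would no longer match the layout that the CUDA kernels read, so both functions must agree on "first element high".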

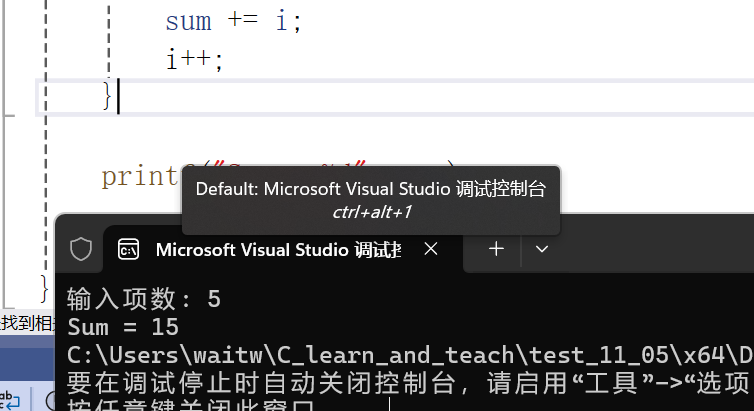

Substituting these two functions for the CUDA implementations inside bitsandbytes produces exactly the same 64 output tokens:

<|begin_of_text|>Once upon a time, 20 years ago, I was a young, idealistic, and naive college student. I was also a young, idealistic, and naive college student who was a member of the Young Republicans Club. I was also a young, idealistic, and naive college student who was a member of the Young Republicans Club who was

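The PyTorch implementation does cost some performance, though:

- Quantizing the 8B model takes 10 s with PyTorch versus 3 s with CUDA.
- Generating 64 tokens takes 28.012 s with the PyTorch version versus 3.65512 s with CUDA. Only the dequantization function affects this figure.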
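The post does not say how these timings were collected. CUDA kernels launch asynchronously, so a fair wall-clock measurement should synchronize the device before reading the clock. The sketch below is a minimal timing helper of that kind. time_fn is a hypothetical name, and taking the median of a few repeats is an arbitrary choice.

```python
import time

import torch


def time_fn(fn, *args, repeats: int = 5) -> float:
    """Median wall-clock seconds of fn(*args), syncing CUDA so queued kernels are counted."""
    times = []
    for _ in range(repeats):
        if torch.cuda.is_available():
            torch.cuda.synchronize()
        start = time.perf_counter()
        fn(*args)
        if torch.cuda.is_available():
            torch.cuda.synchronize()
        times.append(time.perf_counter() - start)
    times.sort()
    return times[len(times) // 2]


# Example: time a dense matmul on CPU
a = torch.randn(256, 256)
print(f"{time_fn(torch.matmul, a, a):.6f} s")
```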