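I. Background

Same theme as before: using open-source software to build a safety management platform for the company, starting with a vision model that recognizes safety helmets. I mainly studied the open-source project https://github.com/jomarkow/Safety-Helmet-Detection, working through it in reverse order: running first, then training, then annotation. Because of my day job, I studied in my spare time: how to use VS Code, Python syntax, and the basics of PyTorch, YOLO, Ultralytics, CUDA, CUDA Toolkit, cuDNN, the categories of AI, common AI terminology, and so on. At this point I can basically handle the whole object detection workflow in an engineering way: environment setup, annotation, training, and running.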

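II. Sorting out the ideas

1. Today's AI is essentially a fortune-teller without wisdom, fed on a pile of data

Given a large amount of data, AI finds, as far as it can (say 95%), the cause-and-effect relationship between input data and results. Is it not intelligent? A human may not predict as accurately as it does. Is it intelligent? It is limited by its finite input data: nobody can enumerate every possible input, and the data humans hold is both limited and not necessarily objective. Just my humble opinion; please don't flame me if you disagree.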

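2. Image object detection is a process of drawing boxes, looking a lot, and trying it out

Drawing boxes is data annotation: you draw a box on the image. Looking a lot means letting the algorithm look at the images by itself, much like pointing at yourself and repeating "Daddy" to a small child until the child gradually learns to say "Daddy". Trying it out means running the training result (the AI model, or algorithm model), like having the child address a different man: he may call that man "Daddy" too. Then you have to correct him and tell him that only you are "Daddy" and other men should be called "Uncle". Image object detection is just this process; there is nothing mysterious about it. Of course, we stand on the shoulders of our predecessors, and the algorithms they wrote back in their day truly deserve respect.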

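3. Environment and tools

Windows 11 Home; NVIDIA GeForce GT 730 graphics card (I did not train on the GPU: the card is too old and the version compatibility problems drove me to tears, so CPU it is, slow as it may be); VS Code 1.83.0; conda 24.9.2; Python 3.12.7; PyTorch 2.5.0; YOLOv8 (I will try the latest YOLO11 later).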
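Before going further, it can help to confirm that the packages listed above are actually visible to the active conda environment. The sketch below uses only the standard library. The helper names `check_packages` and `report` are made up for illustration, and the default import names (`torch`, `ultralytics`, `cv2`) are an assumption about how the packages were installed.

```python
import importlib.util


def check_packages(names):
    """Return {import_name: True/False} depending on whether each package can be found."""
    found = {}
    for name in names:
        # find_spec locates a top-level module without importing it
        found[name] = importlib.util.find_spec(name) is not None
    return found


def report(names=("torch", "ultralytics", "cv2")):
    """Print one line per package; the default names are assumed import names."""
    for name, ok in check_packages(names).items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

Calling `report()` in the environment used for training prints one line per package, which is usually quicker than waiting for an ImportError halfway through a script.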

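III. Building it by hand

1. Create an empty project structure

1) Create the folder object-detection-hello in a directory whose path contains no Chinese characters or spaces

2) Open the folder in VS Code

3) Create the directories and files in VS Code

The folders shown in the figure below are not mandatory, but this layout is recommended.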
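If you prefer to create the layout from a script rather than by hand, a minimal sketch follows. The folder list is inferred from the paths that appear later in this article (config, datas/images, datas/labels, models, scripts, utils, test, test_out), so treat it as an assumption rather than the exact layout in the figure; `create_skeleton` is a hypothetical helper name.

```python
from pathlib import Path

# Folder names inferred from paths used later in this article (assumption)
FOLDERS = [
    "config",
    "datas/images",
    "datas/labels",
    "models",
    "scripts",
    "utils",
    "test",
    "test_out",
]


def create_skeleton(root):
    """Create the project folders under root and return the created paths."""
    root = Path(root)
    created = []
    for rel in FOLDERS:
        folder = root / rel
        folder.mkdir(parents=True, exist_ok=True)  # safe to run repeatedly
        created.append(folder)
    return created
```

For example, `create_skeleton("object-detection-hello")` run from the parent directory creates any folders that do not exist yet and leaves existing ones alone.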

4) Write the training parameter file train_config.yaml

    train: ../../datas/images
    val: ../../datas/images
    # class count
    nc: 1
    # names: ['helmet']
    names: ['helmet']
    labels: ../../datas/labels

5) Download yolov8n.pt into the models directory

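It is also among the files I uploaded earlier; see the resources attached to this article. You can also download it from the open-source helmet project (GitHub - jomarkow/Safety-Helmet-Detection: YoloV8 model, trained for recognizing if construction workers are wearing their protection helmets in mandatory areas), where it sits in the repository root.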

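2. Annotating helmets in the images

1) Prepare the images

Download the open-source helmet project; it contains the images. I selected only images 0-999, 1,000 in total. I am training on a CPU, which is slow, and 1,000 images should be enough to see an effect.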
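Picking out images 0-999 can also be scripted. The sketch below assumes the files are named like hard_hat_workers0.png, hard_hat_workers1.png and so on (the pattern visible in the file names quoted later in this article); the function name and its parameters are hypothetical, and the source and destination folders are whatever you pass in.

```python
import shutil
from pathlib import Path


def copy_first_images(src_dir, dst_dir, count=1000,
                      prefix="hard_hat_workers", ext=".png"):
    """Copy prefix0.ext .. prefix{count-1}.ext from src_dir to dst_dir.

    Returns the number of files actually copied; missing indices are skipped.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    copied = 0
    for i in range(count):
        src = src_dir / f"{prefix}{i}{ext}"
        if src.is_file():
            shutil.copy2(src, dst_dir / src.name)
            copied += 1
    return copied
```

The return value makes it easy to notice when fewer than 1,000 files were found, for instance because the source path or the extension was wrong.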

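2) Annotate the images

See my earlier article "Annotating images with labelimg on Windows: tool installation and basic usage" (CSDN blog).

3) Process the annotation data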

My annotation files are XML and need to be converted to txt files. The XML content and the txt content are, respectively:

    <annotation>
      <folder>images</folder>
      <filename>hard_hat_workers2.png</filename>
      <path>D:\zsp\works\temp\20241119-zsp-helmet\Safety-Helmet-Detection-main\data\images\hard_hat_workers2.png</path>
      <source>
        <database>Unknown</database>
      </source>
      <size>
        <width>416</width>
        <height>415</height>
        <depth>3</depth>
      </size>
      <segmented>0</segmented>
      <object>
        <name>helmet</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
          <xmin>295</xmin>
          <ymin>219</ymin>
          <xmax>326</xmax>
          <ymax>249</ymax>
        </bndbox>
      </object>
      <object>
        <name>helmet</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
          <xmin>321</xmin>
          <ymin>212</ymin>
          <xmax>365</xmax>
          <ymax>244</ymax>
        </bndbox>
      </object>
    </annotation>

    0 0.745192 0.565060 0.072115 0.060241
    0 0.826923 0.549398 0.081731 0.072289

Sometimes the annotation tool already saves in the text format by default. If not, create converter.py to convert directly:

    from xml.dom import minidom
    import os

    # Map each class name used in the XML to its YOLO class id
    classes = {"helmet": 0}


    def convert_coordinates(size, box):
        """Convert a pixel box (xmin, xmax, ymin, ymax) to normalized (x, y, w, h)."""
        dw = 1.0 / size[0]
        dh = 1.0 / size[1]
        x = (box[0] + box[1]) / 2.0  # box center x
        y = (box[2] + box[3]) / 2.0  # box center y
        w = box[1] - box[0]
        h = box[3] - box[2]
        return (x * dw, y * dh, w * dw, h * dh)


    def text_of(node, tag):
        """Return the text of the first <tag> element under node."""
        return node.getElementsByTagName(tag)[0].firstChild.data


    def converter(classes):
        old_labels_path = "datas/images/raw_data/"
        new_labels_path = "datas/images/raw_data/"
        # Print the current working directory; the paths above are relative to it
        print("Current path:", os.getcwd())
        for file_name in os.listdir(old_labels_path):
            if not file_name.endswith(".xml"):
                continue
            old_file = minidom.parse(os.path.join(old_labels_path, file_name))
            name_out = file_name[:-4] + ".txt"
            with open(os.path.join(new_labels_path, name_out), "w") as new_file:
                size = old_file.getElementsByTagName("size")[0]
                width = int(text_of(size, "width"))
                height = int(text_of(size, "height"))
                for item in old_file.getElementsByTagName("object"):
                    # get class label
                    class_name = text_of(item, "name")
                    if class_name not in classes:
                        # skip unknown classes instead of writing an invalid id of -1
                        print(f"{class_name} not in function classes")
                        continue
                    label_str = str(classes[class_name])
                    # get bbox coordinates
                    bndbox = item.getElementsByTagName("bndbox")[0]
                    b = tuple(float(text_of(bndbox, k)) for k in ("xmin", "xmax", "ymin", "ymax"))
                    bb = convert_coordinates((width, height), b)
                    new_file.write(f"{label_str} {' '.join(f'{a:.6f}' for a in bb)}\n")
            print(f"wrote {name_out}")


    def main():
        converter(classes)


    if __name__ == "__main__":
        main()

4) Taking a shortcut: reuse the labels from the open-source project

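Of course, you can also take a shortcut and copy txt files 0-999 from the open-source project's labels folder into our labels directory. Those files, however, are for multi-class detection, and we only want to keep the helmet annotations, that is, the lines in each txt file that start with 0. So, with the help of AI, I wrote the program utils/deleteOtherclass.py, with the following content: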

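    import os

    PROY_FOLDER = os.getcwd().replace("\\", "/")
    INPUT_FOLDER = f"{PROY_FOLDER}/datas/labels/"


    def process_file(file_path):
        """Rewrite a label file in place, keeping only the class-0 lines."""
        processed_lines = []  # lines that survive the filter
        try:
            with open(file_path, "r") as file:
                for line in file:
                    parts = line.split()
                    # keep the line only if its class id (first field) is 0
                    if parts and parts[0] == "0":
                        processed_lines.append(line)
        except FileNotFoundError:
            print(f"File {file_path} not found")
            return
        try:
            # opening in write mode truncates the original file
            with open(file_path, "w") as file:
                file.writelines(processed_lines)
        except Exception as e:
            print(f"Error while writing file: {e}")


    for file_name in os.listdir(INPUT_FOLDER):
        if not file_name.endswith(".txt"):
            continue  # leave anything that is not a label file untouched
        file_path = INPUT_FOLDER + file_name
        print(file_path)
        process_file(file_path)

After running this program, only the lines starting with 0 remain in the txt files, that is, our helmet annotations.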
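Because the script overwrites the label files in place, it can be reassuring to count the class ids in the folder before and after running it. The following is a small read-only sketch using only the standard library; `count_class_ids` is a hypothetical helper, not part of the open-source project.

```python
from collections import Counter
from pathlib import Path


def count_class_ids(labels_dir):
    """Count how many label lines each class id has across all .txt files."""
    counts = Counter()
    for txt in Path(labels_dir).glob("*.txt"):
        for line in txt.read_text().splitlines():
            parts = line.split()
            if parts:  # ignore blank lines
                counts[parts[0]] += 1
    return counts
```

Running `count_class_ids("datas/labels")` after the filter should report only the key "0"; any other key means some lines were not removed.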

For example, the content of hard_hat_workers0.txt before and after processing is as follows.

Before:

    0 0.914663 0.349760 0.112981 0.141827
    0 0.051683 0.396635 0.084135 0.091346
    0 0.634615 0.379808 0.052885 0.091346
    0 0.748798 0.391827 0.055288 0.086538
    0 0.305288 0.397837 0.052885 0.069712
    0 0.216346 0.397837 0.048077 0.069712
    1 0.174279 0.379808 0.050481 0.067308
    1 0.801683 0.383413 0.055288 0.088942
    1 0.443510 0.411058 0.045673 0.072115
    1 0.555288 0.400240 0.043269 0.074519
    1 0.500000 0.383413 0.038462 0.064904
    0 0.252404 0.360577 0.033654 0.048077
    1 0.399038 0.393029 0.043269 0.064904

After:

    0 0.914663 0.349760 0.112981 0.141827
    0 0.051683 0.396635 0.084135 0.091346
    0 0.634615 0.379808 0.052885 0.091346
    0 0.748798 0.391827 0.055288 0.086538
    0 0.305288 0.397837 0.052885 0.069712
    0 0.216346 0.397837 0.048077 0.069712
    0 0.252404 0.360577 0.033654 0.048077

3. Write the training program

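The Ultralytics settings file is usually stored at C:\Users\Dell\AppData\Roaming\Ultralytics\settings.json; replace Dell in the path with your own user name. It is enough just to know that it exists. Its content looks like this: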

    {
      "settings_version": "0.0.6",
      "datasets_dir": "D:\\zsp\\works\\temp\\20241218-zsp-pinwei\\object-detection-hello",
      "weights_dir": "weights",
      "runs_dir": "runs",
      "uuid": "09253350c3bd45fd265c2e8346acaaa599711c1c3ef91e7e78ceff31d4132a83",
      "sync": true,
      "api_key": "",
      "openai_api_key": "",
      "clearml": true,
      "comet": true,
      "dvc": true,
      "hub": true,
      "mlflow": true,
      "neptune": true,
      "raytune": true,
      "tensorboard": true,
      "wandb": false,
      "vscode_msg": true
    }

Judging from the content, the setting we may need to change is datasets_dir. To do it more elegantly, I wrote code to modify it.
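To simply look at the current value without importing ultralytics, the file can be read as ordinary JSON. This is a minimal sketch, assuming the Windows location described above (the APPDATA environment variable pointing at ...\AppData\Roaming); both function names are hypothetical.

```python
import json
import os
from pathlib import Path


def default_settings_path():
    """Settings location described above; assumes Windows, where APPDATA is set."""
    appdata = os.environ.get("APPDATA", "")
    return Path(appdata) / "Ultralytics" / "settings.json"


def read_setting(settings_file, key="datasets_dir"):
    """Return one value from a settings JSON file, or None if the key is absent."""
    with open(settings_file, "r", encoding="utf-8") as f:
        return json.load(f).get(key)
```

For example, `read_setting(default_settings_path())` would return the datasets_dir value shown in the JSON above. Reading is harmless; for changing values it seems safer to go through the library, since editing the file by hand bypasses whatever checks it applies.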

1) scripts/ultralytics_init.py, the code that modifies the settings file programmatically

    from ultralytics import settings
    import os


    def update_ultralytics_settings(key, value):
        """Set one key in the Ultralytics settings."""
        try:
            settings[key] = value
            print(f"Updated {key} to {value} in ultralytics settings.")
        except AttributeError:
            print(f"Failed to update {key}, the update method may not exist in the settings module.")


    def init():
        # Use the current working directory as datasets_dir
        current_path = os.getcwd()
        print(current_path)
        update_ultralytics_settings("datasets_dir", current_path)
        print(settings)
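Note that init() takes the project root from os.getcwd(), so it gives the intended result only when the script is launched from the project folder. A hedged alternative is to derive the root from the script's own location; the helper below is a sketch with a hypothetical name, assuming the script sits one level below the project root (as scripts/train.py does here).

```python
from pathlib import Path


def project_root(script_path):
    """Return the folder two levels above a script: <root>/scripts/x.py -> <root>."""
    return Path(script_path).resolve().parent.parent
```

Inside scripts/ultralytics_init.py this would be called as `project_root(__file__)`, and the result could be passed to update_ultralytics_settings in place of the working directory.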

2) Create the main training program scripts/train.py

    import ultralytics_init as uinit

    # Point datasets_dir at the project folder before loading the model
    uinit.init()

    from ultralytics import YOLO

    model = YOLO("models/yolov8n.pt")
    model.train(data="config/train_config.yaml", epochs=10)
    result = model.val()
    path = model.export(format="onnx")

I don't think the code needs explaining; it is clear at a glance.

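3) Settings in the config file config/train_config.yaml

    # image set used for training
    train: ../../datas/images
    # image set used for validation during training
    val: ../../datas/images
    # number of object classes
    nc: 1
    # English names of the labels
    names: ['helmet']

4. Run the training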

In the top-right corner, click the triangle to run.

I trained for 10 epochs, which took about 1 hour. The log is as follows:

PS D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello> & C:/Users/Dell/.conda/envs/myenv/python.exe d:/zsp/works/temp/20241218-zsp-pinwei/object-detection-hello/scripts/train.py

D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello

Updated datasets_dir to D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello in ultralytics settings.

JSONDict("C:\Users\Dell\AppData\Roaming\Ultralytics\settings.json"):

{"settings_version": "0.0.6","datasets_dir": "D:\\zsp\\works\\temp\\20241218-zsp-pinwei\\object-detection-hello","weights_dir": "weights","runs_dir": "runs","uuid": "09253350c3bd45fd265c2e8346acaaa599711c1c3ef91e7e78ceff31d4132a83","sync": true,"api_key": "","openai_api_key": "","clearml": true,"comet": true,"dvc": true,"hub": true,"mlflow": true,"neptune": true,"raytune": true,"tensorboard": true,"wandb": false,"vscode_msg": true

}

New https://pypi.org/project/ultralytics/8.3.55 available 😃 Update with 'pip install -U ultralytics'

Ultralytics 8.3.49 🚀 Python-3.12.7 torch-2.5.0 CPU (12th Gen Intel Core(TM) i7-12700)

engine\trainer: task=detect, mode=train, model=models/yolov8n.pt, data=config/train_config.yaml, epochs=10, time=None, patience=100, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=train, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, multi_scale=False, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, vid_stride=1, stream_buffer=False, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, embed=None, show=False, save_frames=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, show_boxes=True, line_width=None, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=True, opset=None, workspace=None, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, bgr=0.0, mosaic=1.0, mixup=0.0, copy_paste=0.0, copy_paste_mode=flip, auto_augment=randaugment, erasing=0.4, crop_fraction=1.0, cfg=None, tracker=botsort.yaml, save_dir=runs\detect\train

Overriding model.yaml nc=80 with nc=1

     from           n  params  module                                 arguments
 0   -1             1     464  ultralytics.nn.modules.conv.Conv       [3, 16, 3, 2]
 1   -1             1    4672  ultralytics.nn.modules.conv.Conv       [16, 32, 3, 2]
 2   -1             1    7360  ultralytics.nn.modules.block.C2f       [32, 32, 1, True]
 3   -1             1   18560  ultralytics.nn.modules.conv.Conv       [32, 64, 3, 2]
 4   -1             2   49664  ultralytics.nn.modules.block.C2f       [64, 64, 2, True]
 5   -1             1   73984  ultralytics.nn.modules.conv.Conv       [64, 128, 3, 2]
 6   -1             2  197632  ultralytics.nn.modules.block.C2f       [128, 128, 2, True]
 7   -1             1  295424  ultralytics.nn.modules.conv.Conv       [128, 256, 3, 2]
 8   -1             1  460288  ultralytics.nn.modules.block.C2f       [256, 256, 1, True]
 9   -1             1  164608  ultralytics.nn.modules.block.SPPF      [256, 256, 5]
10   -1             1       0  torch.nn.modules.upsampling.Upsample   [None, 2, 'nearest']
11   [-1, 6]        1       0  ultralytics.nn.modules.conv.Concat     [1]
12   -1             1  148224  ultralytics.nn.modules.block.C2f       [384, 128, 1]
13   -1             1       0  torch.nn.modules.upsampling.Upsample   [None, 2, 'nearest']
14   [-1, 4]        1       0  ultralytics.nn.modules.conv.Concat     [1]
15   -1             1   37248  ultralytics.nn.modules.block.C2f       [192, 64, 1]
16   -1             1   36992  ultralytics.nn.modules.conv.Conv       [64, 64, 3, 2]
17   [-1, 12]       1       0  ultralytics.nn.modules.conv.Concat     [1]
18   -1             1  123648  ultralytics.nn.modules.block.C2f       [192, 128, 1]
19   -1             1  147712  ultralytics.nn.modules.conv.Conv       [128, 128, 3, 2]
20   [-1, 9]        1       0  ultralytics.nn.modules.conv.Concat     [1]
21   -1             1  493056  ultralytics.nn.modules.block.C2f       [384, 256, 1]
22   [15, 18, 21]   1  751507  ultralytics.nn.modules.head.Detect     [1, [64, 128, 256]]

Model summary: 225 layers, 3,011,043 parameters, 3,011,027 gradients, 8.2 GFLOPs

train: Scanning D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello\datas\labels.cache.

val: Scanning D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello\datas\labels.cache...

Plotting labels to runs\detect\train\labels.jpg...

optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...

optimizer: AdamW(lr=0.002, momentum=0.9) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.0005), 63 bias(decay=0.0)

Image sizes 640 train, 640 val

Using 0 dataloader workers

Logging results to runs\detect\train

Starting training for 10 epochs...

Closing dataloader mosaic

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
1/10 0G 1.592 2.148 1.278 40 640: 100%|██████████|
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [02:58<00:00, 5.57s/it]
all 1000 3792 0.977 0.033 0.423 0.229

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
2/10 0G 1.483 1.464 1.215 23 640: 100%|██████████| 63/63 [07:53<00:00, 7.51s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:20<00:00, 2.51s/it]
all 1000 3792 0.697 0.647 0.687 0.398

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
3/10 0G 1.489 1.318 1.242 15 640: 100%|██████████| 63/63 [02:52<00:00, 2.74s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:13<00:00, 2.30s/it]
all 1000 3792 0.783 0.662 0.744 0.401

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
4/10 0G 1.47 1.183 1.22 19 640: 100%|██████████| 63/63 [02:50<00:00, 2.71s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:12<00:00, 2.25s/it]
all 1000 3792 0.837 0.749 0.832 0.496

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
5/10 0G 1.42 1.041 1.196 31 640: 100%|██████████| 63/63 [02:51<00:00, 2.72s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:11<00:00, 2.22s/it]
all 1000 3792 0.867 0.776 0.87 0.537

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
6/10 0G 1.4 0.9758 1.196 30 640: 100%|██████████| 63/63 [02:51<00:00, 2.72s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:11<00:00, 2.24s/it]
all 1000 3792 0.898 0.818 0.902 0.565

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
7/10 0G 1.352 0.8787 1.156 37 640: 100%|██████████| 63/63 [02:52<00:00, 2.74s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:20<00:00, 2.51s/it]
all 1000 3792 0.921 0.843 0.922 0.576

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
8/10 0G 1.307 0.825 1.13 17 640: 100%|██████████| 63/63 [06:18<00:00, 6.01s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:11<00:00, 2.22s/it]
all 1000 3792 0.906 0.845 0.924 0.58

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
9/10 0G 1.294 0.7867 1.133 29 640: 100%|██████████| 63/63 [02:51<00:00, 2.72s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:10<00:00, 2.21s/it]
all 1000 3792 0.922 0.87 0.938 0.611

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
10/10 0G 1.257 0.7387 1.119 57 640: 100%|██████████| 63/63 [02:51<00:00, 2.72s/it]
Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [01:18<00:00, 2.47s/it]
all 1000 3792 0.933 0.884 0.95 0.62

10 epochs completed in 0.934 hours.

Optimizer stripped from runs\detect\train\weights\last.pt, 6.2MB

Optimizer stripped from runs\detect\train\weights\best.pt, 6.2MB

Validating runs\detect\train\weights\best.pt...

Ultralytics 8.3.49 🚀 Python-3.12.7 torch-2.5.0 CPU (12th Gen Intel Core(TM) i7-12700)

Model summary (fused): 168 layers, 3,005,843 parameters, 0 gradients, 8.1 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 32/32 [00:59<00:00, 1.86s/it]

all 1000 3792 0.933 0.884 0.95 0.62

Speed: 1.4ms preprocess, 51.6ms inference, 0.0ms loss, 0.4ms postprocess per image

Results saved to runs\detect\train

Ultralytics 8.3.49 🚀 Python-3.12.7 torch-2.5.0 CPU (12th Gen Intel Core(TM) i7-12700)

Model summary (fused): 168 layers, 3,005,843 parameters, 0 gradients, 8.1 GFLOPs

val: Scanning D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello\datas\labels.cache... 1000 images, 76 backgrounds, 0 corrupt: 100%|██████████| 1000/1000 [00:00<?, ?it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 63/63 [00:54<00:00, 1.17it/s]

all 1000 3792 0.933 0.884 0.95 0.62

Speed: 1.1ms preprocess, 46.3ms inference, 0.0ms loss, 0.4ms postprocess per image

Results saved to runs\detect\train2

Ultralytics 8.3.49 🚀 Python-3.12.7 torch-2.5.0 CPU (12th Gen Intel Core(TM) i7-12700)

PyTorch: starting from 'runs\detect\train\weights\best.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 5, 8400) (6.0 MB)

ONNX: starting export with onnx 1.17.0 opset 19...

ONNX: slimming with onnxslim 0.1.43...

ONNX: export success ✅ 1.0s, saved as 'runs\detect\train\weights\best.onnx' (11.7 MB)

Export complete (1.1s)

Results saved to D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello\runs\detect\train\weights

Predict: yolo predict task=detect model=runs\detect\train\weights\best.onnx imgsz=640

Validate: yolo val task=detect model=runs\detect\train\weights\best.onnx imgsz=640 data=config/train_config.yaml

Visualize: https://netron.app

5. Run the trained model and check the results

1) Get the trained model best.pt ready

Where is the trained model? Look at the log: Results saved to D:\zsp\works\temp\20241218-zsp-pinwei\object-detection-hello\runs\detect\train\weights. I copied best.pt from there into the test folder.
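The copy step can be scripted as well, which helps once there are several runs (train, train2, and so on). This is a minimal sketch assuming the runs\detect\train\weights layout shown in the log; `copy_best_weights` is a hypothetical helper name.

```python
import shutil
from pathlib import Path


def copy_best_weights(run_dir, dest_dir, name="best.pt"):
    """Copy <run_dir>/weights/<name> into dest_dir and return the new path."""
    src = Path(run_dir) / "weights" / name
    if not src.is_file():
        raise FileNotFoundError(f"no weights found at {src}")
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(src, dest_dir / name))
```

From the project root, `copy_best_weights("runs/detect/train", "test")` would place best.pt in the test folder; pointing run_dir at a folder without weights raises an error instead of failing silently.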

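2) Copy the test image 1.jpg into the test directory

Note: the mosaic in the image was added to protect my colleagues' privacy; it is not an effect of the program.

3) Write the verification code scripts/test.py

The code needs no explanation either; it is clear at a glance.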

import os

from ultralytics import YOLO

import cv2PROY_FOLDER = os.getcwd().replace("\\","/")INPUT_FOLDER = f"{PROY_FOLDER}/test/"

OUTPUT_FOLDER = f"{PROY_FOLDER}/test_out/"

MODEL_PATH = f"{PROY_FOLDER}/test/best.pt"if not os.path.exists(OUTPUT_FOLDER):os.mkdir(OUTPUT_FOLDER)model = YOLO(MODEL_PATH)

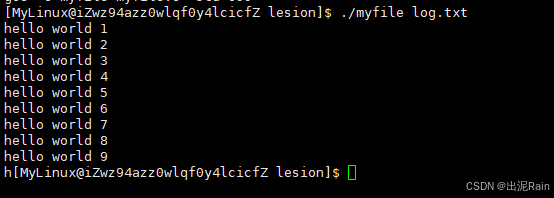

files = os.listdir(INPUT_FOLDER)def draw_box(params, frame, threshold = 0.2):x1, y1, x2, y2, score, class_id = paramsif score > threshold:cv2.rectangle(frame, (int(x1), int(y1)), (int(x2), int(y2)), (0, 255, 0), 4)textValue=results.names[int(class_id)].upper()if "HELMET" in textValue :textValue="yes"cv2.putText(frame, textValue, (int(x1), int(y1 - 10)),cv2.FONT_HERSHEY_SIMPLEX, 1.3, (0, 255, 0), 3, cv2.LINE_AA) elif "HEAD" in textValue :textValue="no!!!"cv2.putText(frame, textValue, (int(x1), int(y1 - 10)),cv2.FONT_HERSHEY_SIMPLEX, 1.3, (0, 255, 0), 3, cv2.LINE_AA) else: cv2.putText(frame, textValue, (int(x1), int(y1 - 10)),cv2.FONT_HERSHEY_SIMPLEX, 1.3, (0, 255, 0), 3, cv2.LINE_AA) return framefor file_name in files:file_path = INPUT_FOLDER + file_nameif ".jpg" in file_name:image_path_out = OUTPUT_FOLDER + file_name[:-4] + "_out.jpg"image = cv2.imread(file_path,cv2.IMREAD_COLOR) results = model(image)[0]for result in results.boxes.data.tolist():image = draw_box(result, image) cv2.imwrite(image_path_out, image) cv2.destroyAllWindows()4)运行结果查看

Note: the mosaic in the image was added to protect my colleagues' privacy; it is not an effect of the program.

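IV. Summary

At this point we have completed the whole process: annotation, writing code to train, and verifying the training result. This lays the groundwork for building a helmet detection service later. Tuning the training result is still our weak spot, of course; we are beginners after all, and I expect it will not be a problem in the future. I have a programming background and still ran into quite a few problems along the way, but they could all be solved by making the effort to read the output logs. Failing that, taking the log to an AI was always enough to find a way to solve the problem.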
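One easy first step toward tuning: the config above points both train and val at the same folder, so the mAP values in the log are measured on images the model was trained on and are likely optimistic. A minimal sketch of a reproducible hold-out split follows. The function names are hypothetical, and the idea of pointing train and val in the YAML at the two list files relies on Ultralytics accepting a txt file of image paths there, which is worth confirming against the version you use.

```python
import random
from pathlib import Path


def split_train_val(images_dir, val_fraction=0.2, seed=0,
                    exts=(".png", ".jpg", ".jpeg")):
    """Shuffle image paths reproducibly and return (train_paths, val_paths)."""
    images = sorted(p for p in Path(images_dir).iterdir()
                    if p.suffix.lower() in exts)
    random.Random(seed).shuffle(images)  # same seed gives the same split
    n_val = int(len(images) * val_fraction)
    return images[n_val:], images[:n_val]


def write_list(paths, list_file):
    """Write one image path per line."""
    Path(list_file).write_text("\n".join(str(p) for p in paths) + "\n")
```

With 1,000 images and the default fraction this yields 800 training and 200 validation paths, which could be written out with `write_list(train, "datas/train.txt")` and `write_list(val, "datas/val.txt")`.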