Setting up the service

Notes before you start

- Docker with NVIDIA GPU support must be installed beforehand, along with docker compose >= 1.28.

- setup.sh cannot be run inside a container; otherwise it fails with the following error:

root@c536ca0dbd64:/test/fauxpilot-main# ./setup.sh

Checking for curl ...

/usr/bin/curl

Checking for zstd ...

/opt/conda/bin/zstd

Checking for docker ...

Please install docker.

- My computer sits behind two layers of proxy, and the build failed with the following error (the proxy has to be turned off): failed to solve: python:3.10-slim-buster: failed to copy: httpReadSeeker: failed open: unexpected status code https://XXX.mirror.aliyuncs.com/v2/library/python/blobs/sha256:0f2ede477f4434d54e251ae6b932ccb7a652ebf891e097ccc09dca155622cc50?ns=docker.io: 500 Internal Server Error - Server message: unknown: unknown error

- Related Stack Overflow question: https://stackoverflow.com/questions/76499172/in-docker-fails-to-do-request-to-a-python-official-docker-image

- During the build I entered an IP address at the "Address for Triton" prompt. Normally the default (triton) is fine: Address for Triton [triton]: 192.168.1.15
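The first two notes above can be checked before running ./setup.sh. They are docker compose >= 1.28 and not running inside a container. Below is a minimal Python sketch that is not part of FauxPilot. It parses the output of `docker compose version` (the legacy `docker-compose version` format is also accepted) and compares it with 1.28. It treats the presence of `/.dockerenv` as a sign of running inside a Docker container. The version-string formats and the `/.dockerenv` heuristic are assumptions, and other container runtimes may not create that file.

```python
import os
import re
import shutil
import subprocess
from typing import Optional, Tuple

MIN_COMPOSE = (1, 28)  # minimum docker compose version from the note above


def parse_compose_version(text: str) -> Optional[Tuple[int, ...]]:
    """Extract (major, minor, patch) from output such as
    'Docker Compose version v2.20.2' or 'docker-compose version 1.29.2, build 5becea4c'."""
    m = re.search(r"version\s+v?(\d+)\.(\d+)\.(\d+)", text, re.IGNORECASE)
    if m is None:
        return None
    return tuple(int(g) for g in m.groups())


def compose_ok(text: str) -> bool:
    # Compare only (major, minor) against the required minimum.
    version = parse_compose_version(text)
    return version is not None and version[:2] >= MIN_COMPOSE


def probably_in_container(marker: str = "/.dockerenv") -> bool:
    # Heuristic (assumption): Docker creates /.dockerenv inside its containers.
    return os.path.exists(marker)


def preflight() -> None:
    if probably_in_container():
        print("Looks like this is running inside a container; run setup.sh on the host.")
    if shutil.which("docker") is None:
        print("Please install docker.")
        return
    out = subprocess.run(["docker", "compose", "version"],
                         capture_output=True, text=True).stdout
    if not compose_ok(out):
        print(f"docker compose >= {MIN_COMPOSE[0]}.{MIN_COMPOSE[1]} required, got: {out.strip()!r}")


if __name__ == "__main__":
    preflight()
```

Run it on the host before ./setup.sh. It only prints warnings and changes nothing.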

Starting the build

(base) $:~/imgup/fauxpilot-main$ ./setup.sh

.env already exists, do you want to delete .env and recreate it? [y/n] y

Deleting .env

Checking for curl ...

/home/pdd/anaconda3/bin/curl

Checking for zstd ...

/home/pdd/anaconda3/bin/zstd

Checking for docker ...

/usr/bin/docker

Enter number of GPUs [1]:

External port for the API [5000]:

Address for Triton [triton]:

Port of Triton host [8001]:

Where do you want to save your models [/home/pdd/imgup/fauxpilot-main/models]?

Choose your backend:

[1] FasterTransformer backend (faster, but limited models)

[2] Python backend (slower, but more models, and allows loading with int8)

Enter your choice [1]: 2

Models available:

[1] codegen-350M-mono (1GB total VRAM required; Python-only)

[2] codegen-350M-multi (1GB total VRAM required; multi-language)

[3] codegen-2B-mono (4GB total VRAM required; Python-only)

[4] codegen-2B-multi (4GB total VRAM required; multi-language)

Enter your choice [4]: 2

Do you want to share your huggingface cache between host and docker container? y/n [n]:

Do you want to use int8? y/n [n]: y

Config written to /home/pdd/imgup/fauxpilot-main/models/py-Salesforce-codegen-350M-multi/py-model/config.pbtxt
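The answers above decide which model directory setup.sh writes. This session used the Python backend and model choice 2, and the config was written to models/py-Salesforce-codegen-350M-multi/py-model/config.pbtxt. Judging only from that, the Python-backend directory name appears to be py-Salesforce-<model>. The sketch below encodes the menu as printed and that inferred path pattern. It is an illustration, not the code in setup.sh. FasterTransformer-backend paths are not covered because they do not appear here.

```python
from pathlib import Path
from typing import Dict, Tuple

# Menu as printed by setup.sh in the session above: choice -> (model name, total VRAM in GB)
MODELS: Dict[int, Tuple[str, int]] = {
    1: ("codegen-350M-mono", 1),
    2: ("codegen-350M-multi", 1),
    3: ("codegen-2B-mono", 4),
    4: ("codegen-2B-multi", 4),
}


def python_backend_config_path(models_dir: str, choice: int) -> Path:
    """Config path for the Python backend, following the pattern seen in the log (inferred)."""
    name, _ = MODELS[choice]
    return Path(models_dir) / f"py-Salesforce-{name}" / "py-model" / "config.pbtxt"


def fits(choice: int, available_vram_gb: float) -> bool:
    # Compare the menu's stated VRAM requirement with the memory available on the GPU(s).
    return MODELS[choice][1] <= available_vram_gb


if __name__ == "__main__":
    print(python_backend_config_path("/home/pdd/imgup/fauxpilot-main/models", 2))
```

With the answers from this session, it prints the same path as the "Config written to" line above.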

[+] Building 4.0s (10/10) FINISHED

=> [internal] load build definition from proxy.Dockerfile 0.0s

=> => transferring dockerfile: 307B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/python:3.10-slim-buster 3.9s

=> [internal] load build context 0.0s

=> => transferring context: 1.10kB 0.0s

=> [1/5] FROM docker.io/library/python:3.10-slim-buster@sha256:d4354e51d606b0cf335fca22714bd599eef74ddc5778de31c64f1f73941008a4 0.0s

=> CACHED [2/5] WORKDIR /python-docker 0.0s

=> CACHED [3/5] COPY copilot_proxy/requirements.txt requirements.txt 0.0s

[+] Building 7301.3s (5/6)

=> [internal] load build definition from triton.Dockerfile 0.0s

=> => transferring dockerfile: 325B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/moyix/triton_with_ft:22.09 3.9s

=> [1/3] FROM docker.io/moyix/triton_with_ft:22.09@sha256:5a15c1f29c6b018967b49c588eb0ea67acbf897abb7f26e509ec21844574c9b1 6225.3s

=> => resolve docker.io/moyix/triton_with_ft:22.09@sha256:5a15c1f29c6b018967b49c588eb0ea67acbf897abb7f26e509ec21844574c9b1 0.0s

=> => sha256:61ad5a82b2aa4c888a15ab025783a869ba7fcc4afebc298ae6c1db6d8c88d245 30.90kB / 30.90kB 0.0s

=> => sha256:d7bfe07ed8476565a440c2113cc64d7c0409dba8ef761fb3ec019d7e6b5952df 28.57MB / 28.57MB 24.5s

=> => sha256:5a15c1f29c6b018967b49c588eb0ea67acbf897abb7f26e509ec21844574c9b1 6.63kB / 6.63kB 0.0s

=> => sha256:4256836e525fb6be06e949690b3678503e5634576ac06bf47d3f77b817014690 109.46MB / 109.46MB 591.2s

=> => sha256:19f0d80655c9ce2e0b0f4c988a6129d02a80851532ac431639803b35ac9388fe 116.11MB / 116.11MB 1182.0s

=> => sha256:58206f9095ade1626a6a3631f360506c2e8a2d2db992f336f8403e7ac6616de9 182.12kB / 182.12kB 41.5s

=> => extracting sha256:d7bfe07ed8476565a440c2113cc64d7c0409dba8ef761fb3ec019d7e6b5952df 0.3s

=> => sha256:d2a30fa05b2604a68a60e2d7ca7717a195811af7788717291ae097d3b508bb1c 2.19GB / 2.19GB 877.3s

=> => sha256:fc77cb5b926d5dded1900a15b90cbde73a8ef5c6332d5caa59e6f86fc796aebb 11.54kB / 11.54kB 596.0s

=> => extracting sha256:4256836e525fb6be06e949690b3678503e5634576ac06bf47d3f77b817014690 1.5s

=> => sha256:ef352a62ee1ad6992927650cbad5087f7a9c49f137d4cfaf3f1fda62205ecc83 187B / 187B 597.0s

=> => sha256:a3bd3aeb5effd188cf6588b7b81ebe60c7206075131c78ce15343a34ce8282e2 5.11kB / 5.11kB 597.7s

=> => sha256:1e4275fc348ac7f7e78cebd123240879b138a18d28f0beb2e7eb81fc0d793e89 139.30kB / 139.30kB 599.0s

=> => sha256:c4ee4e3a08c4254f6a6606f6b48816f9cbee18a06932149806b33dfb71c725f5 40.73MB / 40.73MB 759.8s

=> => sha256:cec49411216ff5119f78d701933b1d9a67332b262a4864984cbc14591576fc02 89.19MB / 89.19MB 1170.1s

=> => sha256:ba316fa6251763af102cd3986c3c816d3875ab38aeab678e982774c5ba4fece6 510B / 510B 894.8s

=> => sha256:96c199ab7e47e7cf2c7904b658e5b0998e9a8bbe16fe65c09364813c8ad4d508 343.52MB / 343.52MB 1038.4s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 1039.9s

=> => sha256:9117ffb2d6c9c80f3d960b83180d957850c21589b071f26bce291912554161d9 1.85kB / 1.85kB 1040.5s

=> => sha256:f3c931f6ec51555073fc0e6e774bb2818f144695e1278da53726c3d97ce462f3 33.49MB / 33.49MB 1055.4s

=> => sha256:4f9f9e6af713c19ab3b3f98908a8bd3d84309d6bc267cf833bd19b96fe7736ce 223.12MB / 223.12MB 1137.0s

=> => sha256:c02344e4bf2050ab3bc22c54f27818329425989133b6cb478455ab9af25cfeda 11.70kB / 11.70kB 1139.1s

=> => sha256:1d64355c71c116b7921e8f99d2f7aa9546f9c3f23a5c2e42caec6dcd641e99ab 57.57MB / 57.57MB 1161.4s

=> => sha256:0b73bcf5d4b7466ae8886232d1fd1dc46797fd356d7df48b6e62f0eca3f1c8fa 154B / 154B 1162.7s

=> => sha256:4de67e4565c24fe85120e66fb98d2f959f9ed2c533366b3c79dadd9017b55003 759B / 759B 1163.5s

=> => sha256:72c1f1a85bcc2f724643cf77e7cfa2a6fdc924b3f3413fc4a8966b7f65d59e5b 2.05GB / 2.05GB 1961.1s

=> => sha256:89674754f7108241283a5471fceabe0cb61fdc0096696e7e60c53ac56cbbe6ce 2.80MB / 2.80MB 1176.8s

=> => sha256:6c30ef9533b48071705d32c5542c9a26648da014e5d9f70a55db72ffb8b21fb9 1.96kB / 1.96kB 1178.1s

=> => sha256:ee2229eb7915a6a32446ea1c6a1789ad6af05fb210493316ba0dae80fb026add 106.69MB / 106.69MB 1367.8s

=> => extracting sha256:19f0d80655c9ce2e0b0f4c988a6129d02a80851532ac431639803b35ac9388fe 1.2s

=> => sha256:f36b1c387933572355aa844233ecf033e2afa1edc6d5d3db6b37c4b14dbb2f52 4.15GB / 4.15GB 5556.8s

=> => extracting sha256:58206f9095ade1626a6a3631f360506c2e8a2d2db992f336f8403e7ac6616de9 0.0s

=> => extracting sha256:d2a30fa05b2604a68a60e2d7ca7717a195811af7788717291ae097d3b508bb1c 130.2s

=> => extracting sha256:fc77cb5b926d5dded1900a15b90cbde73a8ef5c6332d5caa59e6f86fc796aebb 0.3s

=> => extracting sha256:ef352a62ee1ad6992927650cbad5087f7a9c49f137d4cfaf3f1fda62205ecc83 0.0s

=> => extracting sha256:a3bd3aeb5effd188cf6588b7b81ebe60c7206075131c78ce15343a34ce8282e2 0.0s

=> => extracting sha256:1e4275fc348ac7f7e78cebd123240879b138a18d28f0beb2e7eb81fc0d793e89 0.0s

=> => extracting sha256:c4ee4e3a08c4254f6a6606f6b48816f9cbee18a06932149806b33dfb71c725f5 12.9s

=> => extracting sha256:cec49411216ff5119f78d701933b1d9a67332b262a4864984cbc14591576fc02 13.1s

=> => sha256:ea77bc02b274ed2e356491fef5e519978292b079f876556336561974ea0ea90f 133.88MB / 133.88MB 1639.3s

=> => extracting sha256:ba316fa6251763af102cd3986c3c816d3875ab38aeab678e982774c5ba4fece6 0.0s

=> => extracting sha256:96c199ab7e47e7cf2c7904b658e5b0998e9a8bbe16fe65c09364813c8ad4d508 10.4s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> => extracting sha256:9117ffb2d6c9c80f3d960b83180d957850c21589b071f26bce291912554161d9 0.0s

=> => extracting sha256:f3c931f6ec51555073fc0e6e774bb2818f144695e1278da53726c3d97ce462f3 7.3s

=> => extracting sha256:4f9f9e6af713c19ab3b3f98908a8bd3d84309d6bc267cf833bd19b96fe7736ce 47.7s

=> => extracting sha256:c02344e4bf2050ab3bc22c54f27818329425989133b6cb478455ab9af25cfeda 0.0s

=> => extracting sha256:1d64355c71c116b7921e8f99d2f7aa9546f9c3f23a5c2e42caec6dcd641e99ab 4.6s

=> => extracting sha256:0b73bcf5d4b7466ae8886232d1fd1dc46797fd356d7df48b6e62f0eca3f1c8fa 0.0s

=> => extracting sha256:4de67e4565c24fe85120e66fb98d2f959f9ed2c533366b3c79dadd9017b55003 0.0s

=> => sha256:9fa86b1e8da475c00ba976b7037722c63d33fedeaa30f1c649451780c00fff7c 1.65kB / 1.65kB 1657.7s

=> => sha256:5982b80a971a9924c1538c296da667072f834e34d2319aee5a142431e995c201 111B / 111B 1674.1s

=> => sha256:344ef016a1aed27cd5162fca0023020e219805b15e327eafc6bf5ba04e30f0ea 468.35kB / 468.35kB 1691.3s

=> => extracting sha256:72c1f1a85bcc2f724643cf77e7cfa2a6fdc924b3f3413fc4a8966b7f65d59e5b 182.5s

=> => extracting sha256:89674754f7108241283a5471fceabe0cb61fdc0096696e7e60c53ac56cbbe6ce 2.8s

=> => extracting sha256:6c30ef9533b48071705d32c5542c9a26648da014e5d9f70a55db72ffb8b21fb9 0.0s

=> => extracting sha256:ee2229eb7915a6a32446ea1c6a1789ad6af05fb210493316ba0dae80fb026add 45.1s

=> => extracting sha256:f36b1c387933572355aa844233ecf033e2afa1edc6d5d3db6b37c4b14dbb2f52 488.4s

=> => extracting sha256:ea77bc02b274ed2e356491fef5e519978292b079f876556336561974ea0ea90f 99.1s

=> => extracting sha256:9fa86b1e8da475c00ba976b7037722c63d33fedeaa30f1c649451780c00fff7c 0.0s

=> => extracting sha256:5982b80a971a9924c1538c296da667072f834e34d2319aee5a142431e995c201 0.0s

=> => extracting sha256:344ef016a1aed27cd5162fca0023020e219805b15e327eafc6bf5ba04e30f0ea 0.1s

=> ERROR [2/3] RUN python3 -m pip install --disable-pip-version-check -U torch --extra-index-url https://download.pytorch.org/whl/cu116 1071.1s

------> [2/3] RUN python3 -m pip install --disable-pip-version-check -U torch --extra-index-url https://download.pytorch.org/whl/cu116:

#0 17.89 Looking in indexes: https://pypi.org/simple, https://download.pytorch.org/whl/cu116

#0 17.89 Requirement already satisfied: torch in /usr/local/lib/python3.8/dist-packages (1.9.1+cu111)

#0 20.92 Collecting torch

#0 21.17 Downloading torch-2.0.1-cp38-cp38-manylinux1_x86_64.whl (619.9 MB)

#0 119.3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 619.9/619.9 MB ? eta 0:00:00

#0 139.0 Collecting sympy

#0 139.1 Downloading sympy-1.12-py3-none-any.whl (5.7 MB)

#0 139.4 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 5.7/5.7 MB 15.8 MB/s eta 0:00:00

#0 140.3 Collecting triton==2.0.0

#0 140.4 Downloading https://download.pytorch.org/whl/triton-2.0.0-1-cp38-cp38-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (63.2 MB)

#0 145.6 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 63.2/63.2 MB 5.4 MB/s eta 0:00:00

#0 146.5 Collecting nvidia-cusparse-cu11==11.7.4.91

#0 146.5 Downloading nvidia_cusparse_cu11-11.7.4.91-py3-none-manylinux1_x86_64.whl (173.2 MB)

#0 162.9 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 173.2/173.2 MB 1.1 MB/s eta 0:00:00

#0 165.2 Collecting filelock

#0 165.3 Downloading filelock-3.12.2-py3-none-any.whl (10 kB)

#0 167.6 Collecting nvidia-cudnn-cu11==8.5.0.96

#0 167.6 Downloading nvidia_cudnn_cu11-8.5.0.96-2-py3-none-manylinux1_x86_64.whl (557.1 MB)

#0 242.3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 557.1/557.1 MB ? eta 0:00:00

#0 243.7 Collecting nvidia-cufft-cu11==10.9.0.58

#0 243.7 Downloading nvidia_cufft_cu11-10.9.0.58-py3-none-manylinux1_x86_64.whl (168.4 MB)

#0 274.2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 168.4/168.4 MB 422.9 kB/s eta 0:00:00

#0 275.4 Collecting nvidia-cuda-cupti-cu11==11.7.101

#0 275.5 Downloading nvidia_cuda_cupti_cu11-11.7.101-py3-none-manylinux1_x86_64.whl (11.8 MB)

#0 277.3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 11.8/11.8 MB 5.7 MB/s eta 0:00:00

#0 278.7 Collecting nvidia-cuda-nvrtc-cu11==11.7.99

#0 278.9 Downloading nvidia_cuda_nvrtc_cu11-11.7.99-2-py3-none-manylinux1_x86_64.whl (21.0 MB)

#0 283.2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 21.0/21.0 MB 2.6 MB/s eta 0:00:00

#0 284.1 Collecting jinja2

#0 284.2 Downloading https://download.pytorch.org/whl/Jinja2-3.1.2-py3-none-any.whl (133 kB)

#0 284.3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 133.1/133.1 kB 2.1 MB/s eta 0:00:00

#0 285.1 Collecting networkx

#0 285.2 Downloading networkx-3.1-py3-none-any.whl (2.1 MB)

#0 285.5 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.1/2.1 MB 8.4 MB/s eta 0:00:00

#0 286.4 Collecting nvidia-curand-cu11==10.2.10.91

#0 286.4 Downloading nvidia_curand_cu11-10.2.10.91-py3-none-manylinux1_x86_64.whl (54.6 MB)

#0 296.3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 54.6/54.6 MB 1.3 MB/s eta 0:00:00

#0 297.6 Collecting nvidia-nvtx-cu11==11.7.91

#0 297.8 Downloading nvidia_nvtx_cu11-11.7.91-py3-none-manylinux1_x86_64.whl (98 kB)

#0 297.9 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 98.6/98.6 kB 633.8 kB/s eta 0:00:00

#0 298.8 Collecting nvidia-cusolver-cu11==11.4.0.1

#0 298.8 Downloading nvidia_cusolver_cu11-11.4.0.1-2-py3-none-manylinux1_x86_64.whl (102.6 MB)

#0 310.2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 102.6/102.6 MB 1.3 MB/s eta 0:00:00

#0 310.3 Requirement already satisfied: typing-extensions in /usr/local/lib/python3.8/dist-packages (from torch) (4.3.0)

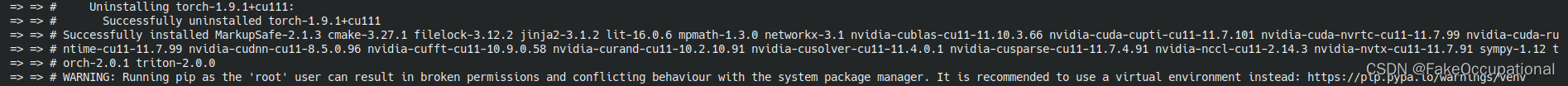

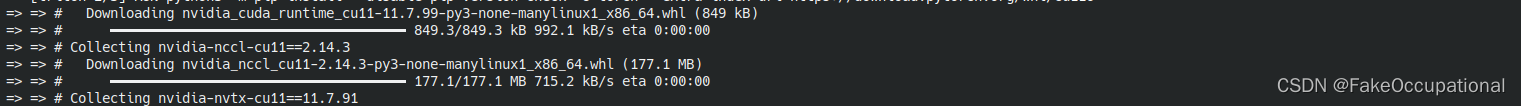

#0 311.0 Collecting nvidia-nccl-cu11==2.14.3

#0 311.1 Downloading nvidia_nccl_cu11-2.14.3-py3-none-manylinux1_x86_64.whl (177.1 MB)

#0 349.2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 177.1/177.1 MB ? eta 0:00:00

#0 350.3 Collecting nvidia-cublas-cu11==11.10.3.66

#0 350.3 Downloading nvidia_cublas_cu11-11.10.3.66-py3-none-manylinux1_x86_64.whl (317.1 MB)

#0 457.1 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 317.1/317.1 MB ? eta 0:00:00

#0 458.4 Collecting nvidia-cuda-runtime-cu11==11.7.99

#0 458.5 Downloading nvidia_cuda_runtime_cu11-11.7.99-py3-none-manylinux1_x86_64.whl (849 kB)

#0 459.0 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 849.3/849.3 kB 1.4 MB/s eta 0:00:00

#0 459.1 Requirement already satisfied: wheel in /usr/local/lib/python3.8/dist-packages (from nvidia-cublas-cu11==11.10.3.66->torch) (0.37.1)

#0 459.1 Requirement already satisfied: setuptools in /usr/local/lib/python3.8/dist-packages (from nvidia-cublas-cu11==11.10.3.66->torch) (62.6.0)

#0 460.4 Collecting lit

#0 460.8 Downloading lit-16.0.6.tar.gz (153 kB)

#0 461.2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 153.7/153.7 kB 385.8 kB/s eta 0:00:00

#0 484.1 Installing build dependencies: started

#0 495.5 Installing build dependencies: finished with status 'done'

#0 495.5 Getting requirements to build wheel: started

#0 501.3 Getting requirements to build wheel: finished with status 'done'

#0 501.4 Installing backend dependencies: started

#0 504.4 Installing backend dependencies: finished with status 'done'

#0 504.4 Preparing metadata (pyproject.toml): started

#0 506.2 Preparing metadata (pyproject.toml): finished with status 'done'

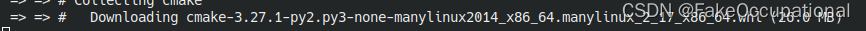

#0 507.4 Collecting cmake

#0 507.5 Downloading cmake-3.27.1-py2.py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (26.0 MB)

#0 1070.8 ━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 17.4/26.0 MB 10.4 kB/s eta 0:13:51

#0 1070.9 ERROR: Exception:

#0 1070.9 Traceback (most recent call last):

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/urllib3/response.py", line 435, in _error_catcher

#0 1070.9 yield

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/urllib3/response.py", line 516, in read

#0 1070.9 data = self._fp.read(amt) if not fp_closed else b""

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/cachecontrol/filewrapper.py", line 90, in read

#0 1070.9 data = self.__fp.read(amt)

#0 1070.9 File "/usr/lib/python3.8/http/client.py", line 459, in read

#0 1070.9 n = self.readinto(b)

#0 1070.9 File "/usr/lib/python3.8/http/client.py", line 503, in readinto

#0 1070.9 n = self.fp.readinto(b)

#0 1070.9 File "/usr/lib/python3.8/socket.py", line 669, in readinto

#0 1070.9 return self._sock.recv_into(b)

#0 1070.9 File "/usr/lib/python3.8/ssl.py", line 1241, in recv_into

#0 1070.9 return self.read(nbytes, buffer)

#0 1070.9 File "/usr/lib/python3.8/ssl.py", line 1099, in read

#0 1070.9 return self._sslobj.read(len, buffer)

#0 1070.9 socket.timeout: The read operation timed out

#0 1070.9

#0 1070.9 During handling of the above exception, another exception occurred:

#0 1070.9

#0 1070.9 Traceback (most recent call last):

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/cli/base_command.py", line 167, in exc_logging_wrapper

#0 1070.9 status = run_func(*args)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/cli/req_command.py", line 205, in wrapper

#0 1070.9 return func(self, options, args)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/commands/install.py", line 341, in run

#0 1070.9 requirement_set = resolver.resolve(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/resolver.py", line 94, in resolve

#0 1070.9 result = self._result = resolver.resolve(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/resolvers.py", line 481, in resolve

#0 1070.9 state = resolution.resolve(requirements, max_rounds=max_rounds)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/resolvers.py", line 373, in resolve

#0 1070.9 failure_causes = self._attempt_to_pin_criterion(name)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/resolvers.py", line 213, in _attempt_to_pin_criterion

#0 1070.9 criteria = self._get_updated_criteria(candidate)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/resolvers.py", line 204, in _get_updated_criteria

#0 1070.9 self._add_to_criteria(criteria, requirement, parent=candidate)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/resolvers.py", line 172, in _add_to_criteria

#0 1070.9 if not criterion.candidates:

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/resolvelib/structs.py", line 151, in __bool__

#0 1070.9 return bool(self._sequence)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/found_candidates.py", line 155, in __bool__

#0 1070.9 return any(self)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/found_candidates.py", line 143, in <genexpr>

#0 1070.9 return (c for c in iterator if id(c) not in self._incompatible_ids)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/found_candidates.py", line 47, in _iter_built

#0 1070.9 candidate = func()

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/factory.py", line 215, in _make_candidate_from_link

#0 1070.9 self._link_candidate_cache[link] = LinkCandidate(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/candidates.py", line 291, in __init__

#0 1070.9 super().__init__(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/candidates.py", line 161, in __init__

#0 1070.9 self.dist = self._prepare()

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/candidates.py", line 230, in _prepare

#0 1070.9 dist = self._prepare_distribution()

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/resolution/resolvelib/candidates.py", line 302, in _prepare_distribution

#0 1070.9 return preparer.prepare_linked_requirement(self._ireq, parallel_builds=True)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/operations/prepare.py", line 428, in prepare_linked_requirement

#0 1070.9 return self._prepare_linked_requirement(req, parallel_builds)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/operations/prepare.py", line 473, in _prepare_linked_requirement

#0 1070.9 local_file = unpack_url(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/operations/prepare.py", line 155, in unpack_url

#0 1070.9 file = get_http_url(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/operations/prepare.py", line 96, in get_http_url

#0 1070.9 from_path, content_type = download(link, temp_dir.path)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/network/download.py", line 146, in __call__

#0 1070.9 for chunk in chunks:

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/cli/progress_bars.py", line 53, in _rich_progress_bar

#0 1070.9 for chunk in iterable:

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_internal/network/utils.py", line 63, in response_chunks

#0 1070.9 for chunk in response.raw.stream(

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/urllib3/response.py", line 573, in stream

#0 1070.9 data = self.read(amt=amt, decode_content=decode_content)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/urllib3/response.py", line 538, in read

#0 1070.9 raise IncompleteRead(self._fp_bytes_read, self.length_remaining)

#0 1070.9 File "/usr/lib/python3.8/contextlib.py", line 131, in __exit__

#0 1070.9 self.gen.throw(type, value, traceback)

#0 1070.9 File "/usr/local/lib/python3.8/dist-packages/pip/_vendor/urllib3/response.py", line 440, in _error_catcher

#0 1070.9 raise ReadTimeoutError(self._pool, None, "Read timed out.")

#0 1070.9 pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out.

------

failed to solve: process "/bin/sh -c python3 -m pip install --disable-pip-version-check -U torch --extra-index-url https://download.pytorch.org/whl/cu116" did not complete successfully: exit code: 2
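This failure is a network problem, not a dependency conflict. pip's download of cmake from files.pythonhosted.org stalled at about 10 kB/s and hit a read timeout. The build output that follows shows the same RUN step completing on a later attempt, so on a flaky connection simply retrying is often enough. pip itself accepts `--timeout` and `--retries` options, which could be added to the `RUN python3 -m pip install ...` line in triton.Dockerfile (an untested suggestion). The sketch below instead retries a whole command with exponential backoff. The `docker compose build` command and the retry counts are illustrative assumptions.

```python
import subprocess
import time
from typing import Callable, Optional, Sequence


def run_with_retries(
    cmd: Sequence[str],
    attempts: int = 3,
    base_delay: float = 30.0,
    runner: Optional[Callable[[Sequence[str]], int]] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run cmd until it exits with status 0, waiting base_delay * 2**i seconds
    after the i-th failure. Returns the last exit status."""
    if runner is None:
        # Default: actually execute the command and return its exit status.
        runner = lambda c: subprocess.run(list(c)).returncode
    code = 1
    for i in range(attempts):
        code = runner(cmd)
        if code == 0:
            return 0
        if i < attempts - 1:  # no point sleeping after the last attempt
            delay = base_delay * (2 ** i)
            print(f"attempt {i + 1}/{attempts} failed (exit {code}); retrying in {delay:.0f}s")
            sleep(delay)
    return code


if __name__ == "__main__":
    # Hypothetical usage: rebuild the images from the fauxpilot-main directory.
    raise SystemExit(run_with_retries(["docker", "compose", "build"]))
```

Because BuildKit caches completed layers, each retry only repeats the step that failed, not the 4 GB base-image pull.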

[+] Building 1686.7s (16/16) FINISHED

=> [triton internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [copilot_proxy internal] load build definition from proxy.Dockerfile 0.1s

=> => transferring dockerfile: 307B 0.0s

=> [copilot_proxy internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [triton internal] load build definition from triton.Dockerfile 0.1s

=> => transferring dockerfile: 325B 0.0s

=> [triton internal] load metadata for docker.io/moyix/triton_with_ft:22.09 17.2s

=> [copilot_proxy internal] load metadata for docker.io/library/python:3.10-slim-buster 67.1s

=> CACHED [triton 1/3] FROM docker.io/moyix/triton_with_ft:22.09@sha256:5a15c1f29c6b018967b49c588eb0ea67acbf897abb7f26e509ec21844574c9b1 0.0s

=> [triton 2/3] RUN python3 -m pip install --disable-pip-version-check -U torch --extra-index-url https://download.pytorch.org/whl/cu116 1566.6s

=> [copilot_proxy 1/5] FROM docker.io/library/python:3.10-slim-buster@sha256:d4354e51d606b0cf335fca22714bd599eef74ddc5778de31c64f1f73941008a4 0.0s

=> [copilot_proxy internal] load build context 22.7s

=> => transferring context: 1.10kB 3.6s

=> CACHED [copilot_proxy 2/5] WORKDIR /python-docker 0.0s

=> CACHED [copilot_proxy 3/5] COPY copilot_proxy/requirements.txt requirements.txt 0.0s

=> CACHED [copilot_proxy 4/5] RUN pip3 install --no-cache-dir -r requirements.txt 0.0s

=> CACHED [copilot_proxy 5/5] COPY copilot_proxy . 0.0s

=> [triton] exporting to image 72.4s

=> => exporting layers 72.0s

=> => writing image sha256:7234f617bc0cebdbb97fe7eeaf981b0330f43ee40c6b13e32804ff0e14e2ee1c 0.1s

=> => naming to docker.io/library/fauxpilot-main-copilot_proxy 0.3s

=> => writing image sha256:c94abc71a90518de183514419befb156155fef5d631ede01932229c527c5dc4f 0.0s

=> => naming to docker.io/library/fauxpilot-main-triton 0.0s

=> [triton 3/3] RUN python3 -m pip install --disable-pip-version-check -U transformers bitsandbytes accelerate 30.7s

Config complete, do you want to run FauxPilot? [y/n] y

[+] Building 16.2s (16/16) FINISHED

=> [fauxpilot-main-copilot_proxy internal] load .dockerignore 0.3s

=> => transferring context: 2B 0.0s

=> [fauxpilot-main-copilot_proxy internal] load build definition from proxy.Dockerfile 0.2s

=> => transferring dockerfile: 307B 0.0s

=> [fauxpilot-main-triton internal] load build definition from triton.Dockerfile 0.2s

=> => transferring dockerfile: 325B 0.0s

=> [fauxpilot-main-triton internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [fauxpilot-main-triton internal] load metadata for docker.io/moyix/triton_with_ft:22.09 2.7s

=> [fauxpilot-main-copilot_proxy internal] load metadata for docker.io/library/python:3.10-slim-buster 15.7s

=> [fauxpilot-main-triton 1/3] FROM docker.io/moyix/triton_with_ft:22.09@sha256:5a15c1f29c6b018967b49c588eb0ea67acbf897abb7f26e509ec21844574c9b1 0.0s

=> CACHED [fauxpilot-main-triton 2/3] RUN python3 -m pip install --disable-pip-version-check -U torch --extra-index-url https://download.pytorch.org/whl/cu116 0.0s

=> CACHED [fauxpilot-main-triton 3/3] RUN python3 -m pip install --disable-pip-version-check -U transformers bitsandbytes accelerate 0.0s

=> [fauxpilot-main-copilot_proxy] exporting to image 0.1s

=> => exporting layers 0.0s

=> => writing image sha256:377f69e79f0e876632e4d35aa41aab4e2b294ce5a57711999e420a86a4584675 0.0s

=> => naming to docker.io/library/fauxpilot-main-triton 0.0s

=> => writing image sha256:84091f46edce3ef3c44a1dc47ce651c0fed7c3d70054136ada7154f5c31083c8 0.0s

=> => naming to docker.io/library/fauxpilot-main-copilot_proxy 0.0s

=> [fauxpilot-main-copilot_proxy internal] load build context 0.1s

=> => transferring context: 1.10kB 0.0s

=> [fauxpilot-main-copilot_proxy 1/5] FROM docker.io/library/python:3.10-slim-buster@sha256:d4354e51d606b0cf335fca22714bd599eef74ddc5778de31c64f1f73941008a4 0.0s

=> CACHED [fauxpilot-main-copilot_proxy 2/5] WORKDIR /python-docker 0.0s

=> CACHED [fauxpilot-main-copilot_proxy 3/5] COPY copilot_proxy/requirements.txt requirements.txt 0.0s

=> CACHED [fauxpilot-main-copilot_proxy 4/5] RUN pip3 install --no-cache-dir -r requirements.txt 0.0s

=> CACHED [fauxpilot-main-copilot_proxy 5/5] COPY copilot_proxy . 0.0s

[+] Running 3/3

⠿ Network fauxpilot-main_default Created 0.2s

⠿ Container fauxpilot-main-copilot_proxy-1 Created 0.6s

⠿ Container fauxpilot-main-triton-1 Created 0.6s

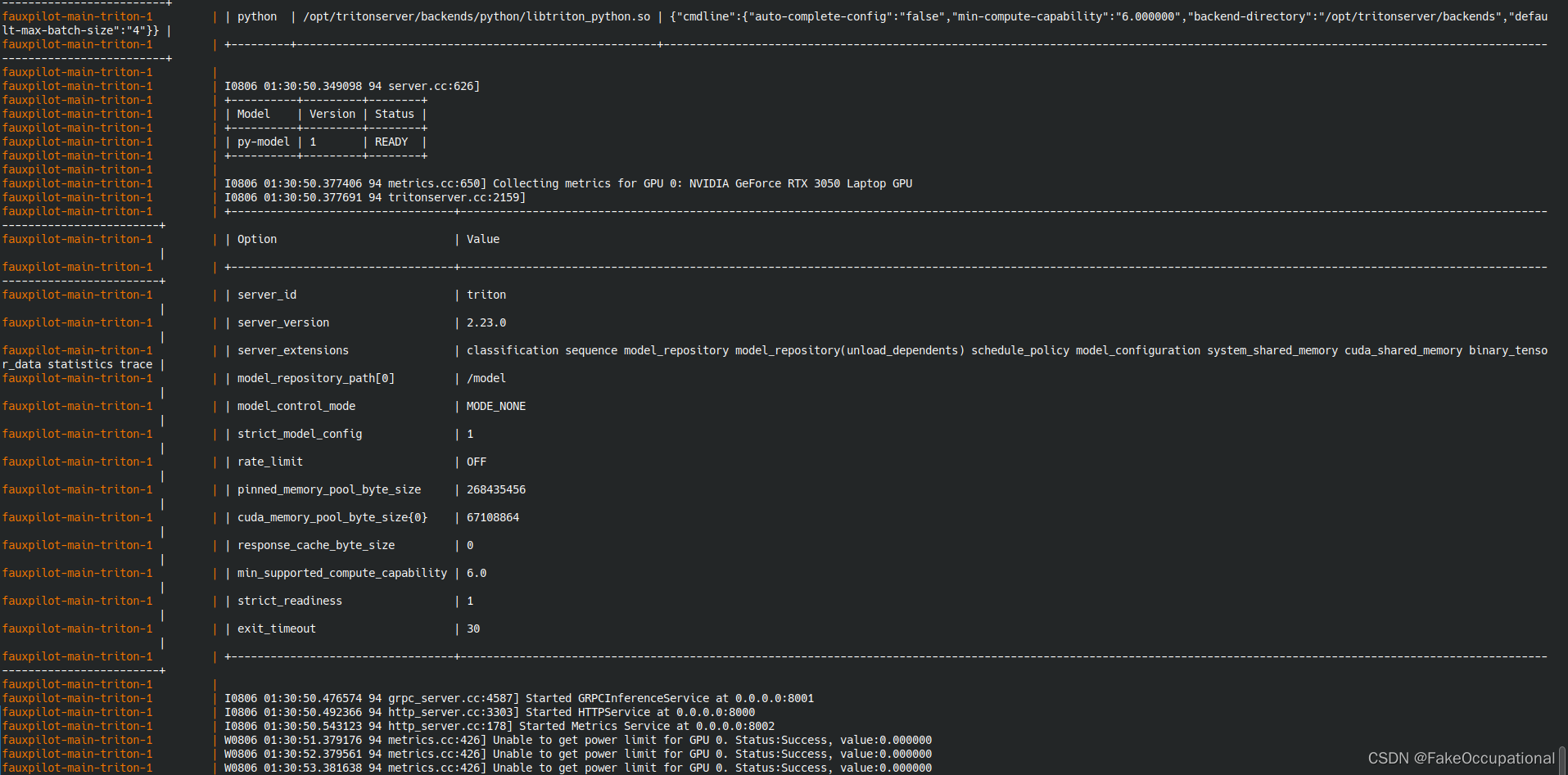

Attaching to fauxpilot-main-copilot_proxy-1, fauxpilot-main-triton-1

fauxpilot-main-copilot_proxy-1 | INFO: Started server process [1]

fauxpilot-main-copilot_proxy-1 | INFO: Waiting for application startup.

fauxpilot-main-copilot_proxy-1 | INFO: Application startup complete.

fauxpilot-main-copilot_proxy-1 | INFO: Uvicorn running on http://0.0.0.0:5000 (Press CTRL+C to quit)
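With both containers up, copilot_proxy serves the API on the external port chosen during setup (5000 here). Below is a minimal sketch of a completion request using only the Python standard library. The endpoint path /v1/engines/codegen/completions and the request fields are modeled on the curl example in the FauxPilot README and on the OpenAI completions format it imitates, so treat them as assumptions to verify against your checkout. In this particular run the request would still fail, because the Triton model did not load (see the scipy error further down).

```python
import json
import urllib.request

# Assumed OpenAI-style endpoint exposed by copilot_proxy; port 5000 matches the setup above.
URL = "http://localhost:5000/v1/engines/codegen/completions"


def build_request(prompt: str, max_tokens: int = 100, temperature: float = 0.1,
                  url: str = URL) -> urllib.request.Request:
    payload = {
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "stop": ["\n\n"],  # stop generating at the first blank line
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def complete(prompt: str) -> str:
    with urllib.request.urlopen(build_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style response (assumed): generated text is in choices[0]["text"].
    return body["choices"][0]["text"]


if __name__ == "__main__":
    print(complete("def hello_world():"))
```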

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | =============================

fauxpilot-main-triton-1 | == Triton Inference Server ==

fauxpilot-main-triton-1 | =============================

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | NVIDIA Release 22.06 (build 39726160)

fauxpilot-main-triton-1 | Triton Server Version 2.23.0

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | Copyright (c) 2018-2022, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | Various files include modifications (c) NVIDIA CORPORATION & AFFILIATES. All rights reserved.

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | This container image and its contents are governed by the NVIDIA Deep Learning Container License.

fauxpilot-main-triton-1 | By pulling and using the container, you accept the terms and conditions of this license:

fauxpilot-main-triton-1 | https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | WARNING: CUDA Minor Version Compatibility mode ENABLED.

fauxpilot-main-triton-1 | Using driver version 470.161.03 which has support for CUDA 11.4. This container

fauxpilot-main-triton-1 | was built with CUDA 11.7 and will be run in Minor Version Compatibility mode.

fauxpilot-main-triton-1 | CUDA Forward Compatibility is preferred over Minor Version Compatibility for use

fauxpilot-main-triton-1 | with this container but was unavailable:

fauxpilot-main-triton-1 | [[Forward compatibility was attempted on non supported HW (CUDA_ERROR_COMPAT_NOT_SUPPORTED_ON_DEVICE) cuInit()=804]]

fauxpilot-main-triton-1 | See https://docs.nvidia.com/deploy/cuda-compatibility/ for details.

fauxpilot-main-triton-1 |
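The warning above is expected on this machine. Driver 470.161.03 natively supports CUDA 11.4, while the image was built with CUDA 11.7, so the container falls back to Minor Version Compatibility mode. According to NVIDIA's CUDA compatibility documentation (linked in the log), CUDA 11.x applications can run under minor version compatibility on Linux drivers >= 450.80.02. Below is a small sketch of that check. The threshold is the only hard-coded fact and is taken from that NVIDIA page.

```python
from typing import Tuple

# Minimum Linux driver for CUDA 11.x minor version compatibility (NVIDIA CUDA compatibility docs).
CUDA11_MIN_DRIVER = (450, 80, 2)


def parse_driver(version: str) -> Tuple[int, ...]:
    """'470.161.03' -> (470, 161, 3)"""
    return tuple(int(part) for part in version.strip().split("."))


def cuda11_minor_compat_ok(driver_version: str) -> bool:
    # Tuple comparison orders versions component by component.
    return parse_driver(driver_version) >= CUDA11_MIN_DRIVER


if __name__ == "__main__":
    # Driver version reported in the Triton banner above.
    print(cuda11_minor_compat_ok("470.161.03"))  # True
```

This matches the later "Cuda available? True" line: the warning is informational and does not stop the GPU from being used.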

fauxpilot-main-triton-1 | I0805 18:07:02.998320 94 pinned_memory_manager.cc:240] Pinned memory pool is created at '0x7f3414000000' with size 268435456

fauxpilot-main-triton-1 | I0805 18:07:02.999815 94 cuda_memory_manager.cc:105] CUDA memory pool is created on device 0 with size 67108864

fauxpilot-main-triton-1 | I0805 18:07:03.012595 94 model_repository_manager.cc:1191] loading: py-model:1

fauxpilot-main-triton-1 | I0805 18:07:03.149906 94 python_be.cc:1774] TRITONBACKEND_ModelInstanceInitialize: py-model_0 (CPU device 0)

fauxpilot-main-triton-1 | Cuda available? True

fauxpilot-main-triton-1 | is_half: True, int8: True, auto_device_map: True

Downloading (…)lve/main/config.json: 100%|##########| 1.00k/1.00k [00:00<00:00, 101kB/s]

0805 18:07:07.986441 100 pb_stub.cc:309] Failed to initialize Python stub: RuntimeError: Failed to import transformers.models.codegen.modeling_codegen because of the following error (look up to see its traceback):

fauxpilot-main-triton-1 | No module named 'scipy'

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | At:

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1101): _get_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1089): __getattr__

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(625): getattribute_from_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(680): _load_attr_from_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(666): __getitem__

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(376): _get_model_class

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(492): from_pretrained

fauxpilot-main-triton-1 | /model/py-model/1/model.py(40): initialize

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | E0805 18:07:08.413723 94 model_repository_manager.cc:1348] failed to load 'py-model' version 1: Internal: RuntimeError: Failed to import transformers.models.codegen.modeling_codegen because of the following error (look up to see its traceback):

fauxpilot-main-triton-1 | No module named 'scipy'

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | At:

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1101): _get_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1089): __getattr__

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(625): getattribute_from_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(680): _load_attr_from_module

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(666): __getitem__

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(376): _get_model_class

fauxpilot-main-triton-1 | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(492): from_pretrained

fauxpilot-main-triton-1 | /model/py-model/1/model.py(40): initialize

fauxpilot-main-triton-1 |
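The model fails to load because something in the import chain of transformers.models.codegen.modeling_codegen requires scipy, and scipy is not installed in the Triton image. The two RUN steps of triton.Dockerfile shown in the build log only install torch, transformers, bitsandbytes and accelerate on top of the base image. A plausible fix, not verified here, is to add scipy to that pip install line and rebuild. To list missing packages before restarting, a small check like the following could be run with the image's python3. The module list is an assumption based on the packages named in this log.

```python
import importlib.util
from typing import Iterable, List

# Modules the Python backend is assumed to need; scipy is the one reported missing above.
REQUIRED = ["torch", "transformers", "accelerate", "bitsandbytes", "scipy"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level modules that cannot be found on sys.path (nothing is imported)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("missing:", ", ".join(missing))
        print("e.g. python3 -m pip install " + " ".join(missing))
    else:
        print("all required modules found")
```

find_spec only locates a module without importing it. It therefore reports absent packages, not ones that are installed but fail at import time.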

fauxpilot-main-triton-1 | I0805 18:07:08.413880 94 server.cc:556]

fauxpilot-main-triton-1 | +------------------+------+

fauxpilot-main-triton-1 | | Repository Agent | Path |

fauxpilot-main-triton-1 | +------------------+------+

fauxpilot-main-triton-1 | +------------------+------+

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | I0805 18:07:08.413913 94 server.cc:583]

fauxpilot-main-triton-1 | +---------+-------------------------------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | Backend | Path | Config |

fauxpilot-main-triton-1 | +---------+-------------------------------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | python | /opt/tritonserver/backends/python/libtriton_python.so | {"cmdline":{"auto-complete-config":"false","min-compute-capability":"6.000000","backend-directory":"/opt/tritonserver/backends","default-max-batch-size":"4"}} |

fauxpilot-main-triton-1 | +---------+-------------------------------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | I0805 18:07:08.419905 94 server.cc:626]

fauxpilot-main-triton-1 | +----------+---------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | Model | Version | Status |

fauxpilot-main-triton-1 | +----------+---------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | py-model | 1 | UNAVAILABLE: Internal: RuntimeError: Failed to import transformers.models.codegen.modeling_codegen because of the following error (look up to see its traceback): |

fauxpilot-main-triton-1 | | | | No module named 'scipy' |

fauxpilot-main-triton-1 | | | | |

fauxpilot-main-triton-1 | | | | At: |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1101): _get_module |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/utils/import_utils.py(1089): __getattr__ |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(625): getattribute_from_module |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(680): _load_attr_from_module |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(666): __getitem__ |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(376): _get_model_class |

fauxpilot-main-triton-1 | | | | /usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py(492): from_pretrained |

fauxpilot-main-triton-1 | | | | /model/py-model/1/model.py(40): initialize |

fauxpilot-main-triton-1 | +----------+---------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | I0805 18:07:08.526629 94 metrics.cc:650] Collecting metrics for GPU 0: NVIDIA GeForce RTX 3050 Laptop GPU

fauxpilot-main-triton-1 | I0805 18:07:08.526812 94 tritonserver.cc:2159]

fauxpilot-main-triton-1 | +----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | Option | Value |

fauxpilot-main-triton-1 | +----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 | | server_id | triton |

fauxpilot-main-triton-1 | | server_version | 2.23.0 |

fauxpilot-main-triton-1 | | server_extensions | classification sequence model_repository model_repository(unload_dependents) schedule_policy model_configuration system_shared_memory cuda_shared_memory binary_tensor_data statistics trace |

fauxpilot-main-triton-1 | | model_repository_path[0] | /model |

fauxpilot-main-triton-1 | | model_control_mode | MODE_NONE |

fauxpilot-main-triton-1 | | strict_model_config | 1 |

fauxpilot-main-triton-1 | | rate_limit | OFF |

fauxpilot-main-triton-1 | | pinned_memory_pool_byte_size | 268435456 |

fauxpilot-main-triton-1 | | cuda_memory_pool_byte_size{0} | 67108864 |

fauxpilot-main-triton-1 | | response_cache_byte_size | 0 |

fauxpilot-main-triton-1 | | min_supported_compute_capability | 6.0 |

fauxpilot-main-triton-1 | | strict_readiness | 1 |

fauxpilot-main-triton-1 | | exit_timeout | 30 |

fauxpilot-main-triton-1 | +----------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | I0805 18:07:08.526819 94 server.cc:257] Waiting for in-flight requests to complete.

fauxpilot-main-triton-1 | I0805 18:07:08.526824 94 server.cc:273] Timeout 30: Found 0 model versions that have in-flight inferences

fauxpilot-main-triton-1 | I0805 18:07:08.526829 94 server.cc:288] All models are stopped, unloading models

fauxpilot-main-triton-1 | I0805 18:07:08.526831 94 server.cc:295] Timeout 30: Found 0 live models and 0 in-flight non-inference requests

fauxpilot-main-triton-1 | error: creating server: Internal - failed to load all models

fauxpilot-main-triton-1 | W0805 18:07:09.532102 94 metrics.cc:426] Unable to get power limit for GPU 0. Status:Success, value:0.000000

fauxpilot-main-triton-1 | --------------------------------------------------------------------------

fauxpilot-main-triton-1 | Primary job terminated normally, but 1 process returned

fauxpilot-main-triton-1 | a non-zero exit code. Per user-direction, the job has been aborted.

fauxpilot-main-triton-1 | --------------------------------------------------------------------------

fauxpilot-main-triton-1 | --------------------------------------------------------------------------

fauxpilot-main-triton-1 | mpirun detected that one or more processes exited with non-zero status, thus causing

fauxpilot-main-triton-1 | the job to be terminated. The first process to do so was:

fauxpilot-main-triton-1 |

fauxpilot-main-triton-1 | Process name: [[30509,1],0]

fauxpilot-main-triton-1 | Exit code: 1

fauxpilot-main-triton-1 | --------------------------------------------------------------------------

fauxpilot-main-triton-1 exited with code 1

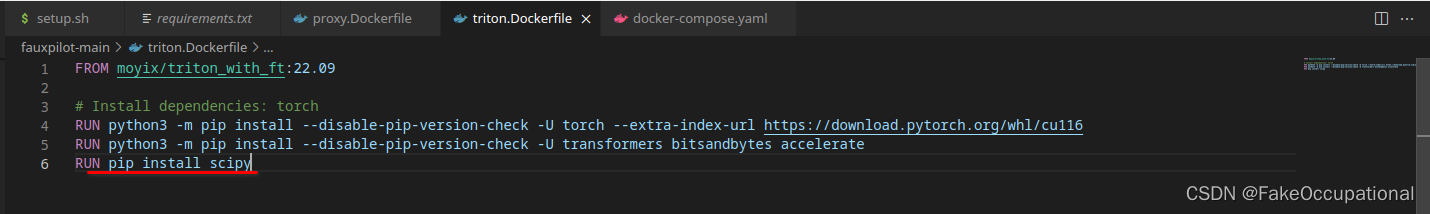

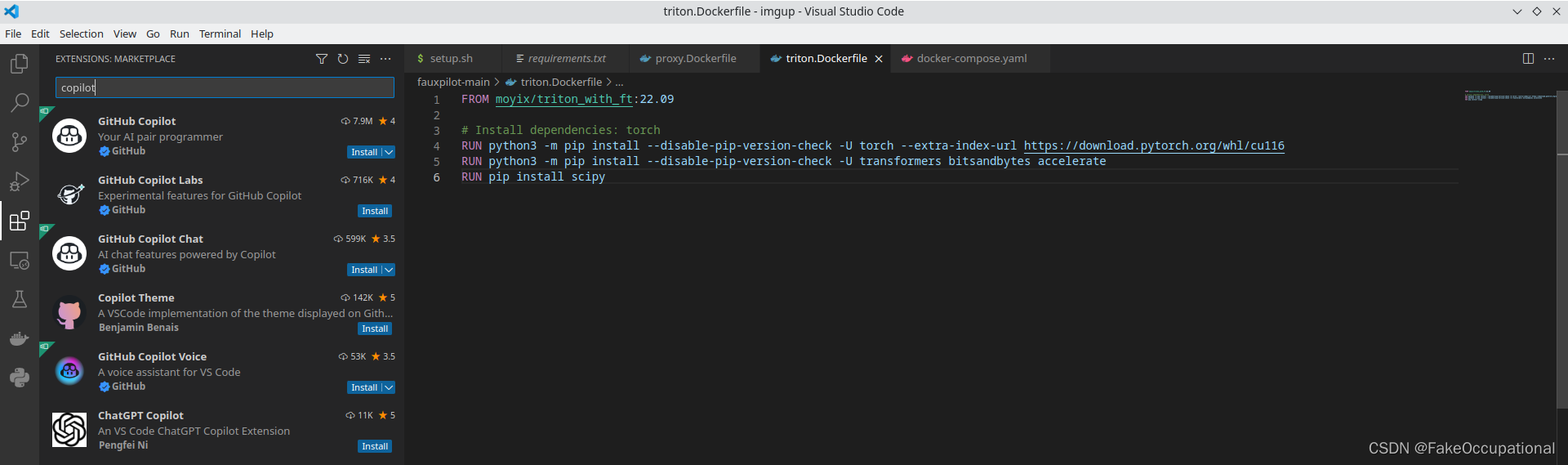

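- The log shows the error No module named 'scipy'. To fix it, edit the Dockerfile and rebuild the image.

- After the rebuild, the server finally starts successfully.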
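Finding the root cause meant scanning the long Triton log above for the one line that matters. Below is a minimal Python sketch that pulls out the models that failed to load and any Python modules reported missing. It assumes the log has been saved to a text file first, for example with docker compose logs triton > triton.log (or docker-compose logs triton with Compose v1). The file name and the helper name are hypothetical.

```python
import re

# Patterns taken from the Triton log lines shown above.
FAILED_MODEL_RE = re.compile(r"failed to load '([^']+)' version (\d+)")
MISSING_MODULE_RE = re.compile(r"No module named '([^']+)'")


def summarize_triton_failures(log_text: str) -> dict:
    """Collect (model, version) pairs that failed to load and missing Python modules."""
    failed = sorted({(name, int(ver)) for name, ver in FAILED_MODEL_RE.findall(log_text)})
    missing = sorted(set(MISSING_MODULE_RE.findall(log_text)))
    return {"failed_models": failed, "missing_modules": missing}


if __name__ == "__main__":
    sample = (
        "fauxpilot-main-triton-1 | E0805 18:07:08.413723 94 model_repository_manager.cc:1348] "
        "failed to load 'py-model' version 1: Internal: RuntimeError: Failed to import "
        "transformers.models.codegen.modeling_codegen\n"
        "fauxpilot-main-triton-1 | No module named 'scipy'\n"
    )
    print(summarize_triton_failures(sample))
    # {'failed_models': [('py-model', 1)], 'missing_modules': ['scipy']}
```

Add every module reported here (in this case scipy) to the pip install step of the Dockerfile used to build the triton image. Then rebuild, for example with docker compose build triton.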

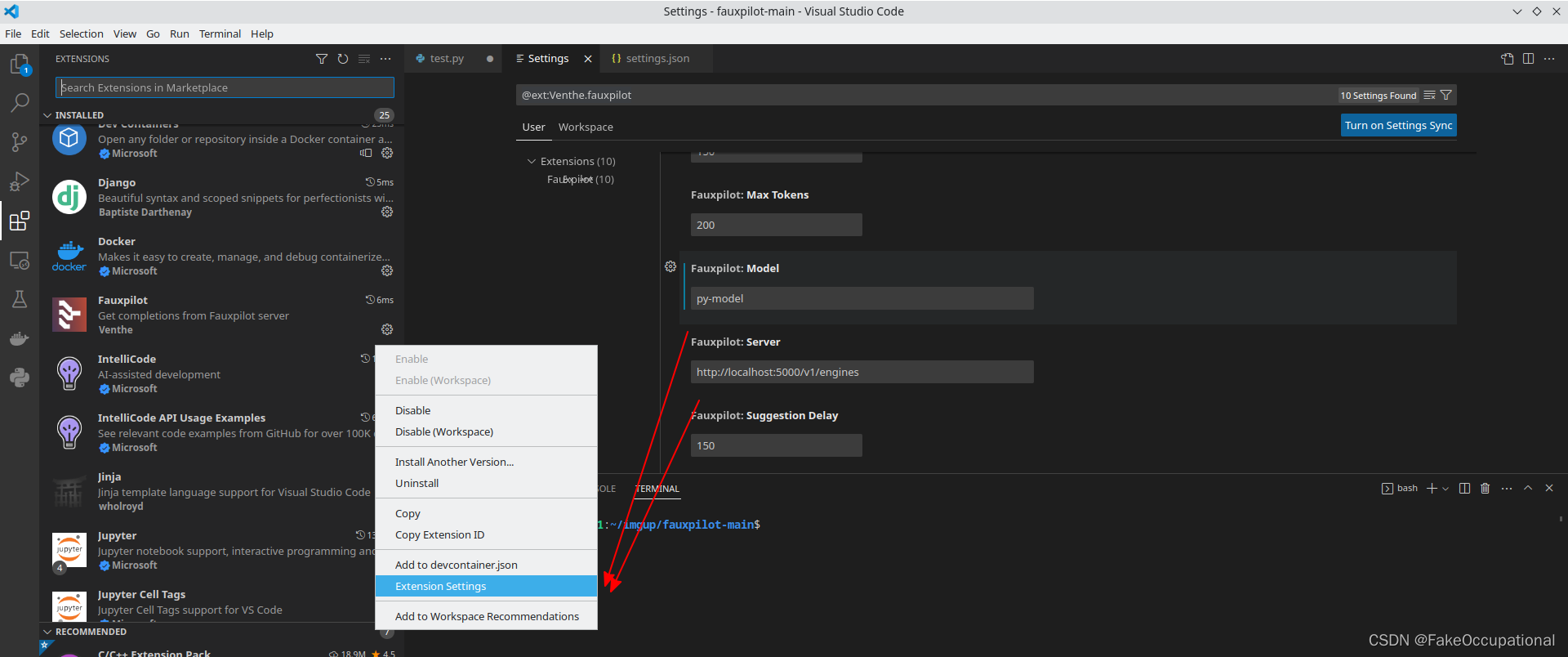

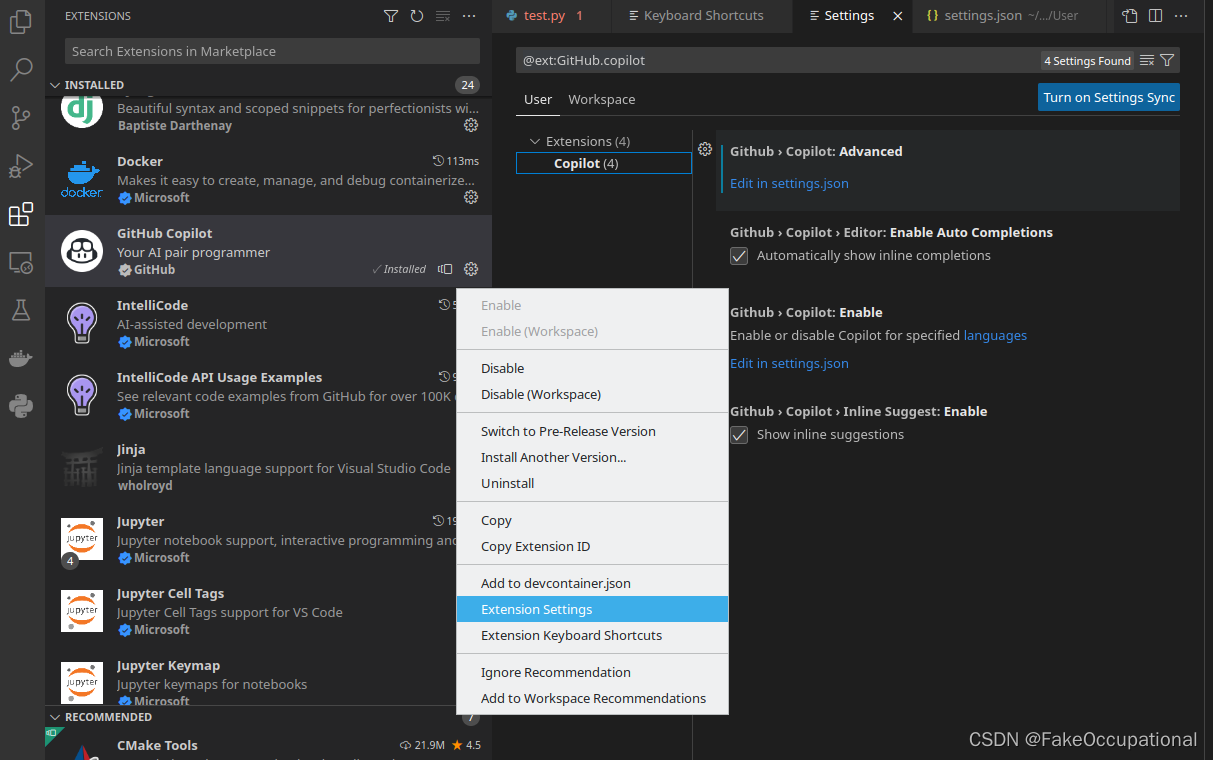

Plugin

-

https://github.com/Venthe/vscode-fauxpilot

-

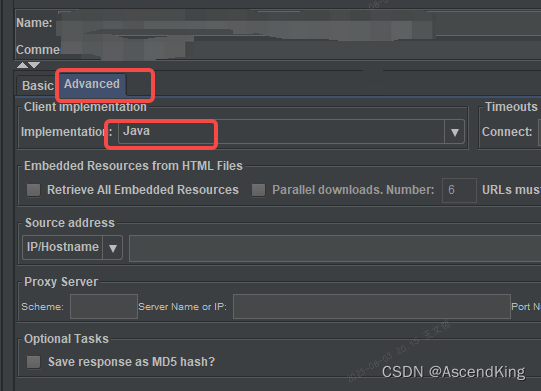

设置对应的模型类型,‘fastertransformer’ or ‘py-model’ :否则会报错fauxpilot-main-copilot_proxy-1 | WARNING: Model ‘fastertransformer’ is not available. Please ensure that

modelis set to either ‘fastertransformer’ or ‘py-model’ depending on your installation

-

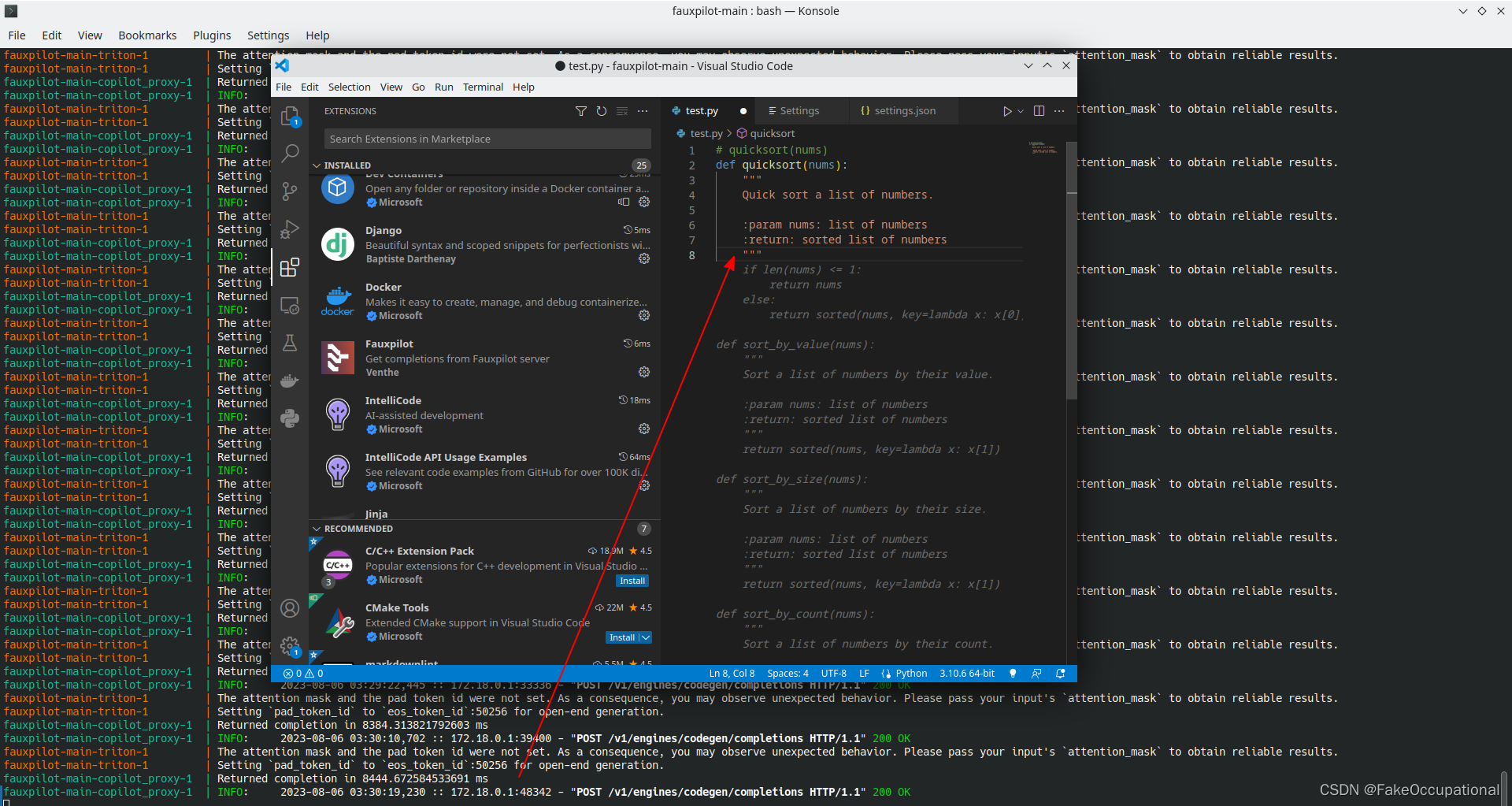

可能会有警告:The attention mask and the pad token id were not set. As a consequence, you may observe unexpected behavior. Please pass your input’s

attention_maskto obtain reliable results.

fauxpilot-main-triton-1 | Settingpad_token_idtoeos_token_id:50256 for open-end generation.本文说不用管

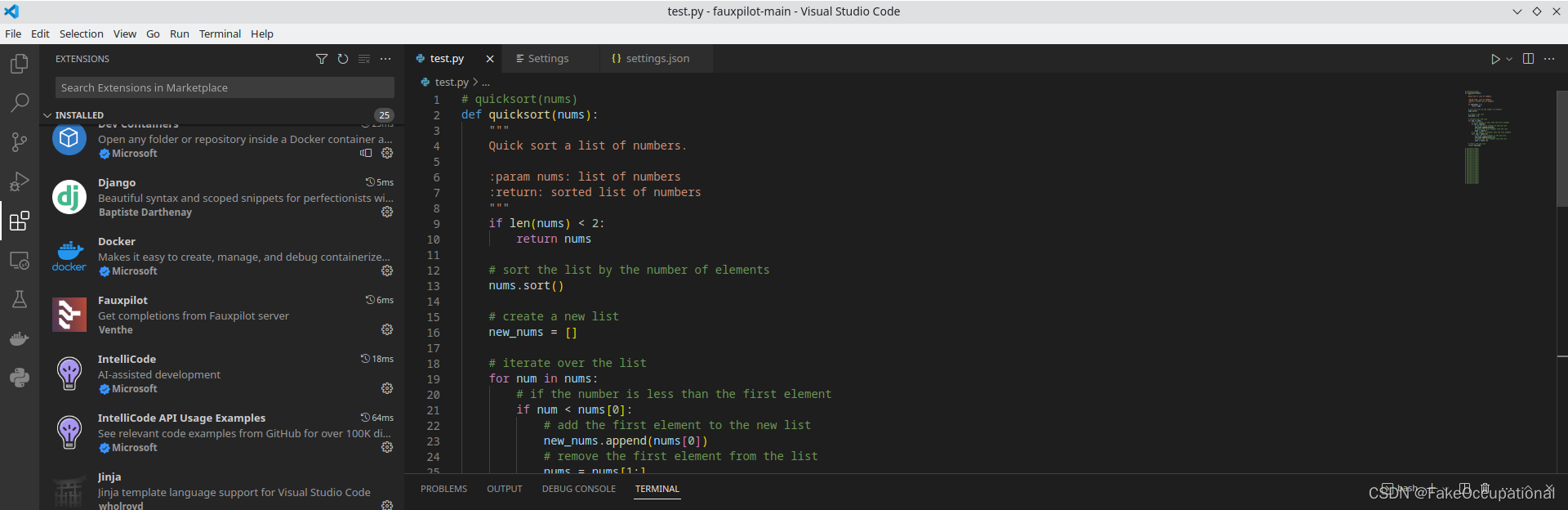
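The model-type setting above matters because the copilot_proxy container appears to route each request to the Triton model named in it. The Python sketch below builds a request that explicitly targets the Python backend. It rests on three assumptions. First, the proxy listens on the default port 5000 chosen in setup.sh. Second, it accepts OpenAI-style requests at /v1/engines/codegen/completions, the path used in the FauxPilot README. Third, it reads the model name from the model field of the request body, which is inferred from the warning text. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: proxy on the default port from setup.sh and the
# OpenAI-style completions path from the FauxPilot README.
BASE_URL = "http://localhost:5000"
COMPLETIONS_PATH = "/v1/engines/codegen/completions"
VALID_MODELS = {"fastertransformer", "py-model"}


def build_completion_request(prompt: str, model: str = "py-model",
                             max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a POST request for the FauxPilot proxy."""
    if model not in VALID_MODELS:
        raise ValueError(f"model must be one of {sorted(VALID_MODELS)}, got {model!r}")
    body = {"model": model, "prompt": prompt, "max_tokens": max_tokens,
            "temperature": 0.1, "stop": ["\n\n"]}
    return urllib.request.Request(
        BASE_URL + COMPLETIONS_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def send_completion(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request; assumes an OpenAI-style response with choices[0].text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["choices"][0]["text"]


if __name__ == "__main__":
    req = build_completion_request("def hello_world():")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # With the containers running: print(send_completion(req))
```

The model name is validated before sending, so a mismatch such as 'fastertransformer' on a Python-backend install fails locally instead of producing the proxy warning.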

Usage

- Suggestions appear only after inference finishes, so expect a short delay.

- Press Tab to accept a suggestion.

CG

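- FauxPilot on Windows: https://fma.wiki/d/155-aifauxpilot

- https://github.com/TritonDataCenter/triton (note: this is Joyent's Triton DataCenter; the NVIDIA Triton Inference Server that FauxPilot runs on is https://github.com/triton-inference-server/server)

- It turns out that cmake and cuBLAS are also available as Python (pip) packages.

- https://github.com/fauxpilot/fauxpilot/blob/main/documentation/server.md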

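Find a port that is not in use:

$:~/imgup$ sudo netstat -anp | grep 8000

$:~/imgup$

No output means nothing is using port 8000. The options used:

- -a: show all sockets, both listening and non-listening.

- -n: show numeric IP addresses and port numbers instead of attempting reverse DNS resolution.

- -p: show the PID and name of the program that owns each connection or listening port.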
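The same check can be done without sudo by trying to bind the port. The Python sketch below treats a port as free if a TCP socket can be bound to it on 0.0.0.0, which is the address Docker publishes ports on by default. Unlike netstat -p, it cannot say which process holds the port. The helper names are hypothetical.

```python
import socket


def is_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can be bound to host:port right now.

    Without SO_REUSEADDR, a port still in TIME_WAIT also reports as busy,
    which errs on the safe side.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            return False
    return True


def first_free_port(candidates, host: str = "0.0.0.0"):
    """Return the first free port among candidates, or None if all are taken."""
    for port in candidates:
        if is_port_free(port, host):
            return port
    return None


if __name__ == "__main__":
    # Ports used in this setup: API (5000) and Triton (8000/8001).
    for port in (5000, 8000, 8001):
        print(port, "free" if is_port_free(port) else "in use")
```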

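To point the official GitHub Copilot extension at FauxPilot instead, add the following to VS Code's settings.json:

"github.copilot.advanced": {"debug.overrideEngine": "codegen","debug.testOverrideProxyUrl": "http://localhost:5000","debug.overrideProxyUrl": "http://localhost:5000"}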
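In the settings line above, only the port needs to change if a different "External port for the API" was chosen in setup.sh. The Python sketch below builds that block for a given port and merges it into an existing settings.json without dropping other settings. The helper names are hypothetical. On Linux, the user settings file is usually ~/.config/Code/User/settings.json, but treat that path as an assumption for your install.

```python
import json
from pathlib import Path


def copilot_override(port: int = 5000) -> dict:
    """Return the contents of the github.copilot.advanced block for an API port."""
    url = f"http://localhost:{port}"
    return {
        "debug.overrideEngine": "codegen",
        "debug.testOverrideProxyUrl": url,
        "debug.overrideProxyUrl": url,
    }


def merge_into_settings(path: Path, port: int = 5000) -> dict:
    """Merge the override into a settings.json file and write it back.

    Assumes plain JSON: VS Code also accepts comments and trailing commas
    (JSONC), which json.loads rejects, so back the file up first.
    """
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    settings = json.loads(text) if text.strip() else {}
    # Keep any other keys already under github.copilot.advanced.
    advanced = settings.setdefault("github.copilot.advanced", {})
    advanced.update(copilot_override(port))
    path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings


if __name__ == "__main__":
    # Print the block instead of touching a real settings file.
    print(json.dumps({"github.copilot.advanced": copilot_override()}, indent=2))
```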

Related software

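- opensourcealternative.to | find open-source alternatives: https://www.opensourcealternative.to/

- Open-source Copilot alternative (Triton-based): https://github.com/moyix/fauxpilot

- LanguageTool | open-source Grammarly alternative: https://languagetool.org/dev

- [Anxious about writing English papers? You might want to try this AI tool] (Bilibili): https://b23.tv/5iInW18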