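Image Processing in OpenCV-Python: Image Features

- Image features

- Harris corner detection

- Corner detection with sub-pixel accuracy

- Shi-Tomasi corner detection

- SIFT (Scale-Invariant Feature Transform)

- SURF (Speeded-Up Robust Features)

- FAST algorithm

- BRIEF (Binary Robust Independent Elementary Features) algorithm

- ORB (Oriented FAST and Rotated BRIEF) algorithm

- Feature matching

- Brute-force matching

- Brute-force matching with ORB descriptors

- Brute-force matching with SIFT descriptors and the ratio test

- FLANN matching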

Image features

- Understanding features

- Feature detection

- Feature description

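Harris corner detection

- cv2.cornerHarris(img, blockSize, ksize, k, borderType=…)

- img: input image, a single-channel (grayscale) image of type float32

- blockSize: size of the neighborhood considered for corner detection

- ksize: aperture (window) size of the Sobel derivative

- k: free parameter in the Harris detector equation, typically in the range [0.04, 0.06]

- borderType: pixel extrapolation method at the image border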
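To make the role of `k` concrete: the Harris detector scores each pixel with R = det(M) - k * trace(M)^2, where M is the 2x2 structure tensor built from the image gradients summed over the `blockSize` neighborhood. The sketch below evaluates that formula on hand-picked tensor entries. The function name `harris_response` and the sample values are illustrative assumptions, not part of OpenCV.

```python
def harris_response(sxx, syy, sxy, k=0.04):
    """Harris score R = det(M) - k * trace(M)**2 for M = [[sxx, sxy], [sxy, syy]].

    sxx, syy and sxy are the windowed sums of Ix*Ix, Iy*Iy and Ix*Iy.
    """
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


# Hypothetical tensor entries for three kinds of neighborhood.
flat = harris_response(0.0, 0.0, 0.0)        # no gradient anywhere
edge = harris_response(100.0, 0.0, 0.0)      # gradient in one direction only
corner = harris_response(100.0, 100.0, 0.0)  # strong gradient in both directions

print("flat:", flat)
print("edge:", edge)      # negative
print("corner:", corner)  # positive
```

R is positive at corners, negative along edges, and close to zero in flat regions, which is why corners are found by keeping only pixels whose response exceeds a fraction of the maximum.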

import numpy as np
import cv2

# img = cv2.imread('./resource/opencv/image/chessboard.png', cv2.IMREAD_COLOR)

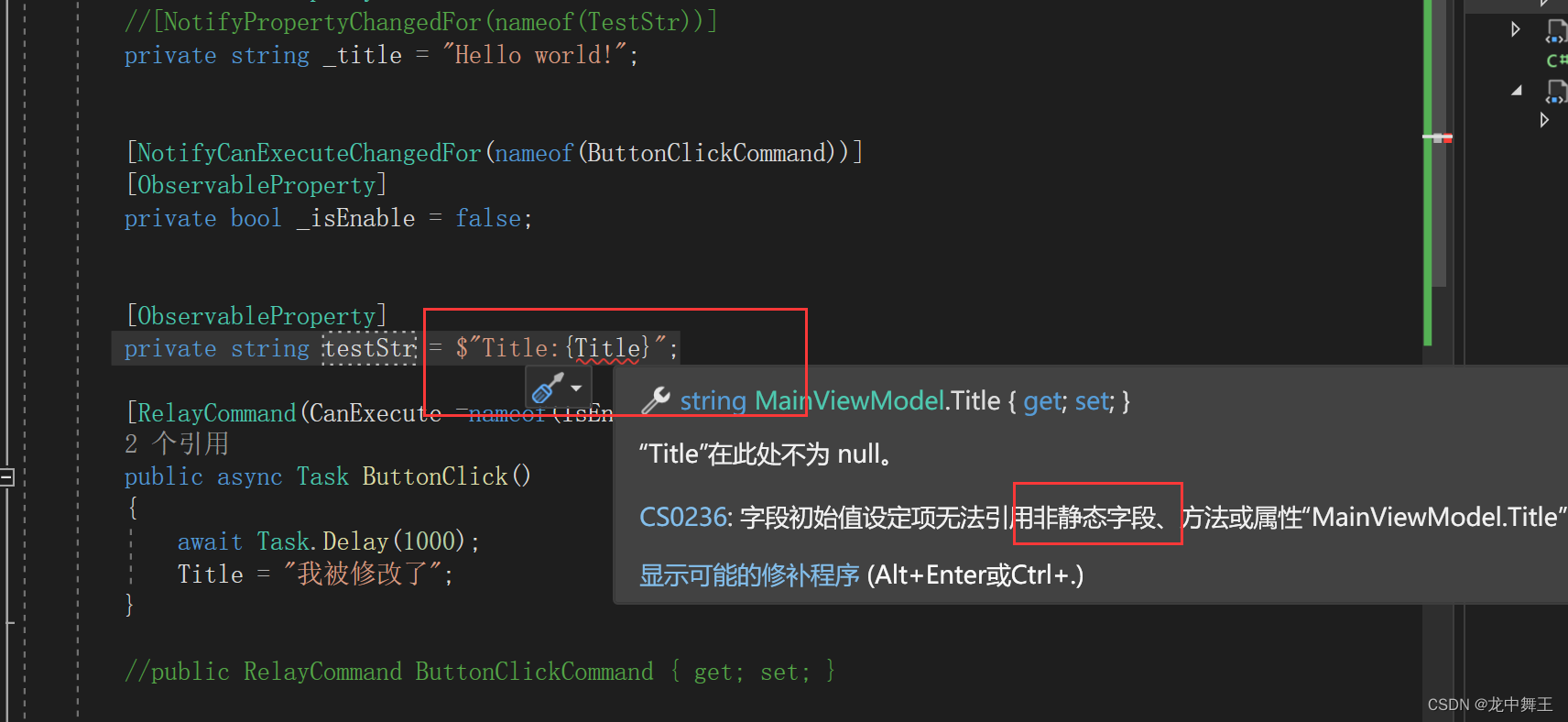

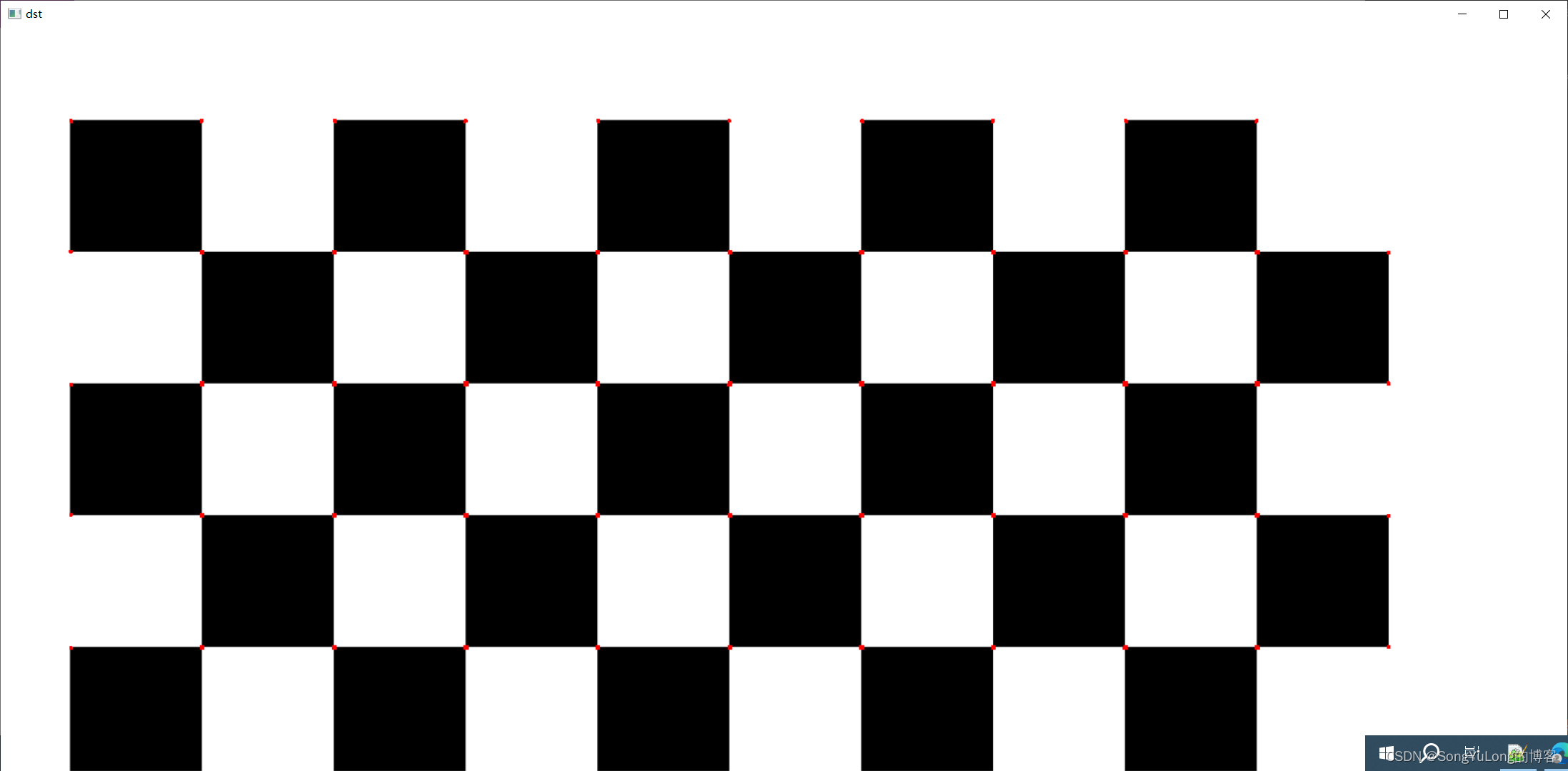

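img = cv2.imread('./resource/opencv/image/pattern.png', cv2.IMREAD_COLOR)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
gray = np.float32(gray)

# The input image must be float32; the last argument k is usually between 0.04 and 0.06
dst = cv2.cornerHarris(gray, 2, 3, 0.05)

# Dilate the response so the marked corners are easier to see
dst = cv2.dilate(dst, None)

# Mark in red (BGR) every pixel whose response exceeds 1% of the maximum
img[dst > 0.01 * dst.max()] = [0, 0, 255]

cv2.imshow('dst', img)
cv2.waitKey(0)
cv2.destroyAllWindows()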
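The marking step `img[dst > 0.01 * dst.max()] = [0, 0, 255]` is NumPy boolean-mask indexing. A minimal sketch on a made-up 3x3 response array (the values are assumptions chosen for illustration) shows which pixels pass the 1% threshold:

```python
import numpy as np

# Made-up corner responses; the maximum is 10.0, so the threshold is about 0.1.
response = np.array([[0.0, 0.2, 0.0],
                     [0.0, 10.0, 0.05],
                     [0.3, 0.0, 0.0]], dtype=np.float32)
image = np.zeros((3, 3, 3), dtype=np.uint8)  # black BGR image

# True wherever the response exceeds 1% of the strongest response.
mask = response > 0.01 * response.max()

# A 2-D boolean mask selects whole pixels; each one is set to red (BGR).
image[mask] = [0, 0, 255]

# (row, column) positions of the marked pixels, in row-major order.
marked = [tuple(int(v) for v in rc) for rc in np.argwhere(mask)]
print(marked)
```

The fraction 0.01 is relative to the strongest response in the image, so the number of marked pixels depends on the image content rather than on an absolute score.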

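Corner detection with sub-pixel accuracy

- cv2.cornerSubPix(img, corners, winSize, zeroZone, criteria)

For maximum accuracy, first find the Harris corners, then pass the centroids of those corners to this function, which refines them.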

import numpy as np
import cv2

img = cv2.imread('./resource/opencv/image/subpixel.png', cv2.IMREAD_COLOR)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
gray = np.float32(gray)

# Find the Harris corners, dilate the response, and binarize it
dst = cv2.cornerHarris(gray, 2, 3, 0.04)
dst = cv2.dilate(dst, None)
ret, dst = cv2.threshold(dst, 0.01 * dst.max(), 255, 0)

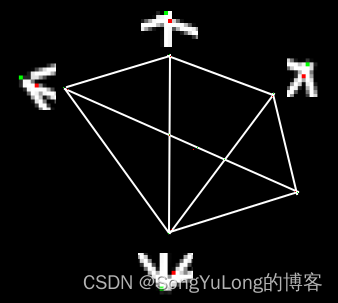

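dst = np.uint8(dst)

# The centroid of each connected blob serves as the initial corner estimate
ret, labels, stats, centroids = cv2.connectedComponentsWithStats(dst)

# Stop after 100 iterations or once the corner moves by less than 0.001
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 100, 0.001)
corners = cv2.cornerSubPix(gray, np.float32(centroids), (5, 5), (-1, -1), criteria)

# Columns 0-1 hold the centroids, columns 2-3 the refined corners.
# np.int0 was removed in NumPy 2.0, so convert with np.intp instead.
res = np.hstack((centroids, corners))
res = res.astype(np.intp)
img[res[:, 1], res[:, 0]] = [0, 0, 255]
img[res[:, 3], res[:, 2]] = [0, 255, 0]

cv2.imshow('img', img)
cv2.waitKey(0)
cv2.destroyAllWindows()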

The Harris corners are marked with red pixels; the green pixels are the refined corners.

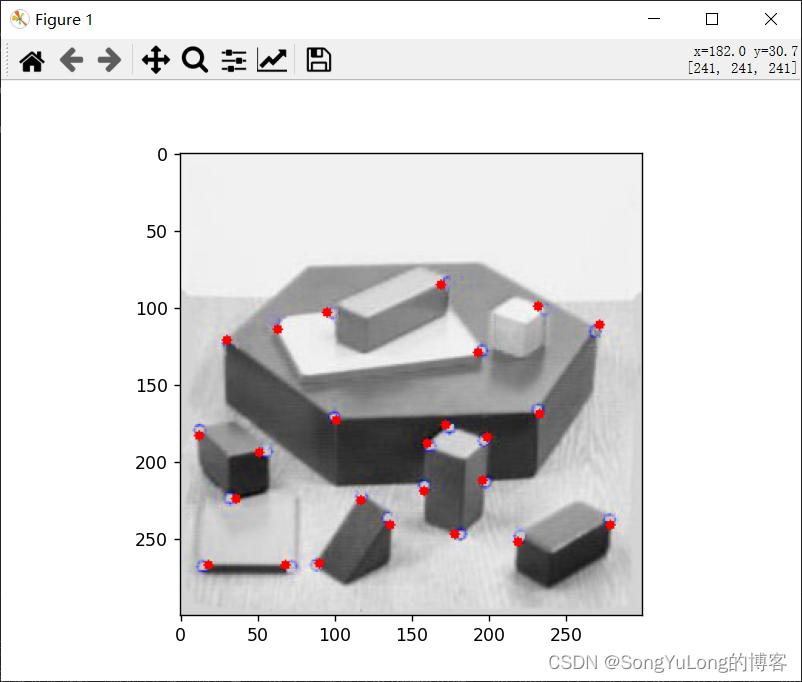

Shi-Tomasi corner detection

- cv2.goodFeaturesToTrack(image, maxCorners, qualityLevel, minDistance)

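import numpy as np
import cv2
from matplotlib import pyplot as plt

img = cv2.imread('./resource/opencv/image/shitomasi_block.jpg', cv2.IMREAD_COLOR)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Keep at most 25 corners, with quality at least 1% of the best and at least 10 pixels apart
corners = cv2.goodFeaturesToTrack(gray, 25, 0.01, 10)
corners = corners.astype(np.intp)  # np.int0 was removed in NumPy 2.0

for corner in corners:
    x, y = corner.ravel()
    cv2.circle(img, (int(x), int(y)), 3, 255, -1)

# plt.imshow interprets the array as RGB, so the BGR image appears with red and blue swapped
plt.imshow(img)
plt.show()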

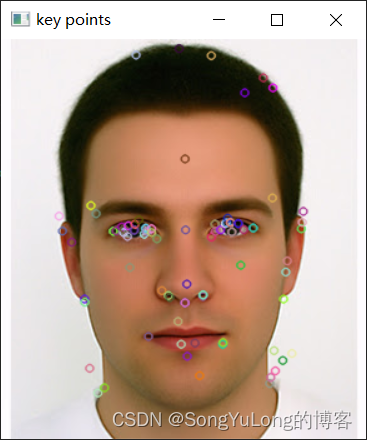
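Shi-Tomasi uses the same 2x2 structure tensor as Harris but scores a neighborhood by its smaller eigenvalue, R = min(λ1, λ2). The `qualityLevel` argument (0.01 in the call above) then rejects candidates whose score is below that fraction of the best score. A minimal sketch of both steps, assuming hand-picked tensor entries; the helper names are hypothetical, and the real function additionally applies non-maximum suppression and the `minDistance` constraint.

```python
import math


def min_eigenvalue(sxx, syy, sxy):
    """Smaller eigenvalue of the symmetric matrix [[sxx, sxy], [sxy, syy]]."""
    mean = (sxx + syy) / 2.0
    spread = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy * sxy)
    return mean - spread


def select_corners(scores, quality_level):
    """Indices of the candidates scoring at least quality_level * best score."""
    cutoff = quality_level * max(scores)
    return [i for i, s in enumerate(scores) if s >= cutoff]


# Hypothetical candidates: strong corner, pure edge, weaker corner, very weak corner.
scores = [
    min_eigenvalue(100.0, 100.0, 0.0),
    min_eigenvalue(100.0, 0.0, 0.0),
    min_eigenvalue(4.0, 4.0, 1.0),
    min_eigenvalue(0.5, 0.5, 0.0),
]
kept = select_corners(scores, quality_level=0.01)
print(scores)
print(kept)
```

An edge has one large and one near-zero eigenvalue, so its score is near zero and it is rejected without needing the free parameter `k` that Harris requires.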

SIFT (Scale-Invariant Feature Transform)

- SIFT, the scale-invariant feature transform, is a local feature descriptor used in image processing. It detects keypoints in an image and describes them in a way that is invariant to scale.

- cv2.SIFT_create()

  - kp = sift.detect(img, None): find the keypoints

  - kp, des = sift.compute(img, kp): compute the descriptors for the given keypoints

  - kp, des = sift.detectAndCompute(img, None): find the keypoints and compute their descriptors in one call

- cv2.drawKeypoints(img, kp, out_img, flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS): draw the keypoints

  - img: input image

  - kp: keypoints of the image

  - out_img: output image

  - flags: one of

    - cv2.DRAW_MATCHES_FLAGS_DEFAULT

    - cv2.DRAW_MATCHES_FLAGS_DRAW_OVER_OUTIMG

    - cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

    - cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS

import cv2

# Read the image
# img = cv2.imread('./resource/opencv/image/home.jpg')
img = cv2.imread('./resource/opencv/image/AverageMaleFace.jpg')
key_points = img.copy()

# Create the SIFT detector
sift = cv2.SIFT_create()

# Detect the keypoints
kp = sift.detect(img, None)
print(len(kp))

# Draw the keypoints
cv2.drawKeypoints(img, kp, key_points, flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Show the image
cv2.imshow("key points", key_points)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Save the image
# cv2.imwrite("key_points.jpg", key_points)

# Compute the descriptors
kp, des = sift.compute(img, kp)

# Debug output
print(des.shape)
print(des[0])

cv2.imshow('kp', key_points)
cv2.waitKey(0)
cv2.destroyAllWindows()

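SURF (Speeded-Up Robust Features)

- The previous section used SIFT for keypoint detection and description, but SIFT is comparatively slow and a faster algorithm was needed. In 2006, H. Bay, T. Tuytelaars, and L. Van Gool proposed SURF (Speeded-Up Robust Features). As the name suggests, it is a sped-up version of SIFT.

- As with SIFT, OpenCV provides SURF functionality. First initialize a SURF object, setting optional parameters such as 64- or 128-dimensional descriptors and Upright/Normal mode. The details are explained in the documentation. Then, just as with SIFT, use SURF.detect(), SURF.compute(), and so on to detect and describe keypoints.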

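import cv2

img = cv2.imread('fly.png', 0)

# cv2.SURF(400) is the OpenCV 2.x API. In OpenCV 3 and later, SURF lives in the
# opencv-contrib xfeatures2d module and needs a build with non-free algorithms enabled.
surf = cv2.xfeatures2d.SURF_create(400)  # Hessian threshold = 400
kp, des = surf.detectAndCompute(img, None)
print(len(kp))  # 699

# Raise the Hessian threshold to keep fewer, stronger keypoints
print(surf.getHessianThreshold())
surf.setHessianThreshold(50000)
kp, des = surf.detectAndCompute(img, None)
print(len(kp))  # 47

# Upright mode: do not compute the keypoint orientation
print(surf.getUpright())  # False
surf.setUpright(True)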

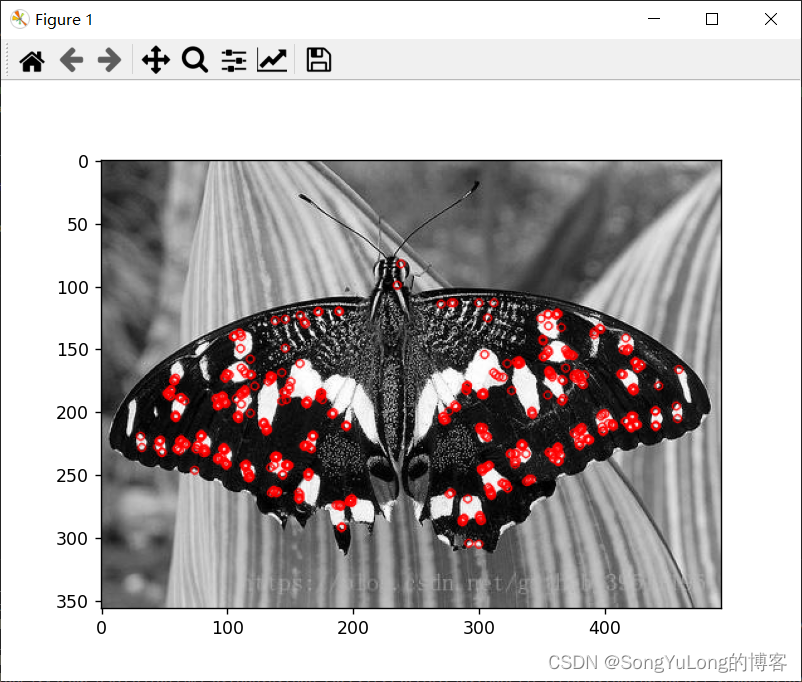

FAST algorithm

import cv2

img = cv2.imread('./resource/opencv/image/fly.jpg', cv2.IMREAD_GRAYSCALE)

# fast = cv2.FastFeatureDetector_create(threshold=100, nonmaxSuppression=False, type=cv2.FAST_FEATURE_DETECTOR_TYPE_5_8)
# The threshold is an intensity difference. On an 8-bit image no difference can
# exceed 255, so a larger value (such as 400) would never produce a keypoint.
fast = cv2.FastFeatureDetector_create(threshold=100)
kp = fast.detect(img, None)

img2 = cv2.drawKeypoints(img, kp, img.copy(), color=(0, 0, 255), flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
cv2.imshow('fast', img2)
cv2.waitKey(0)
cv2.destroyAllWindows()
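The core of FAST is the segment test: a pixel is a corner candidate if, on the 16-pixel circle of radius 3 around it, at least n contiguous pixels are all brighter than the center by more than the threshold, or all darker by more than the threshold. The sketch below is a simplified pure-Python version assuming n = 9; it omits the high-speed pre-test and non-maximum suppression, and `is_fast_corner` and `make_patch` are hypothetical helper names.

```python
# Offsets (dx, dy) of the 16-pixel circle of radius 3, in clockwise order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, threshold, n=9):
    """Segment test on a 2-D list of intensities, indexed as img[row][col]."""
    center = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    for sign in (1, -1):  # 1: look for brighter arcs, -1: look for darker arcs
        flags = [sign * (p - center) > threshold for p in ring]
        run = 0
        # Walk the ring twice so arcs that wrap around the start are counted.
        for flag in flags + flags:
            run = run + 1 if flag else 0
            if run >= n:
                return True
    return False


def make_patch(bright_indices, base=100, bright=200):
    """7x7 test patch with the chosen circle pixels set to a brighter value."""
    patch = [[base] * 7 for _ in range(7)]
    for i in bright_indices:
        dx, dy = CIRCLE[i]
        patch[3 + dy][3 + dx] = bright
    return patch


print(is_fast_corner(make_patch(range(9)), 3, 3, threshold=50))         # True
print(is_fast_corner(make_patch(range(8)), 3, 3, threshold=50))         # False
print(is_fast_corner(make_patch(range(0, 16, 2)), 3, 3, threshold=50))  # False
```

The third case has eight bright pixels but no two of them adjacent, which shows that contiguity matters and not just the count.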

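BRIEF (Binary Robust Independent Elementary Features) algorithm

- BRIEF (Binary Robust Independent Elementary Features) is a descriptor only: it does not detect keypoints, so it is paired here with the STAR detector.

import cv2

img = cv2.imread('./resource/opencv/image/fly.jpg', cv2.IMREAD_GRAYSCALE)

# cv2.FeatureDetector_create("STAR") and cv2.DescriptorExtractor_create("BRIEF") are the
# OpenCV 2.x API; in OpenCV 3 and later both classes live in the opencv-contrib xfeatures2d module.

# Initiate the STAR detector
star = cv2.xfeatures2d.StarDetector_create()

# Initiate the BRIEF extractor
brief = cv2.xfeatures2d.BriefDescriptorExtractor_create()

# Find the keypoints with STAR
kp = star.detect(img, None)

# Compute the descriptors with BRIEF
kp, des = brief.compute(img, kp)

print(brief.descriptorSize())  # descriptor length in bytes
print(des.shape)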
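The idea behind a BRIEF descriptor can be sketched without OpenCV: pick a fixed set of pixel pairs inside the patch around a keypoint, compare the two intensities of each pair, and pack the results into a bit string that is later compared with the Hamming distance. The version below is a simplified illustration, assuming uniformly random pairs and no smoothing (the real algorithm smooths the image first); all function names are hypothetical.

```python
import random


def make_test_pairs(n_bits, patch_size, seed=0):
    """Pick n_bits random pairs of (dx, dy) offsets inside a square patch."""
    rng = random.Random(seed)
    half = patch_size // 2

    def offset():
        return rng.randint(-half, half), rng.randint(-half, half)

    return [(offset(), offset()) for _ in range(n_bits)]


def brief_descriptor(img, x, y, pairs):
    """Pack one intensity comparison per pair into the bits of an integer."""
    bits = 0
    for i, ((ax, ay), (bx, by)) in enumerate(pairs):
        if img[y + ay][x + ax] < img[y + by][x + bx]:
            bits |= 1 << i
    return bits


def hamming(a, b):
    """Number of differing bits between two descriptors."""
    return bin(a ^ b).count("1")


# Synthetic 9x9 image and a uniformly brighter copy of it.
img = [[(7 * r + 13 * c) % 50 for c in range(9)] for r in range(9)]
brighter = [[v + 10 for v in row] for row in img]

pairs = make_test_pairs(n_bits=32, patch_size=9)
d1 = brief_descriptor(img, 4, 4, pairs)
d2 = brief_descriptor(brighter, 4, 4, pairs)
print(hamming(d1, d2))  # 0: a uniform brightness shift changes no comparison
```

Because only the order of two intensities matters, the descriptor is unaffected by a uniform brightness change, and comparing two descriptors reduces to an XOR and a bit count.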

ORB (Oriented FAST and Rotated BRIEF) algorithm

import cv2
from matplotlib import pyplot as plt

img = cv2.imread('./resource/opencv/image/fly.jpg', cv2.IMREAD_GRAYSCALE)

# ORB_create(nfeatures=..., scaleFactor=..., nlevels=..., edgeThreshold=..., firstLevel=..., WTA_K=..., scoreType=..., patchSize=..., fastThreshold=...)
orb = cv2.ORB_create()

# Find the keypoints, then compute their descriptors
kp = orb.detect(img, None)
kp, des = orb.compute(img, kp)

# flags=0 draws only the keypoint locations, not their size and orientation
img2 = cv2.drawKeypoints(img, kp, img.copy(), color=(255, 0, 0), flags=0)
plt.imshow(img2)
plt.show()

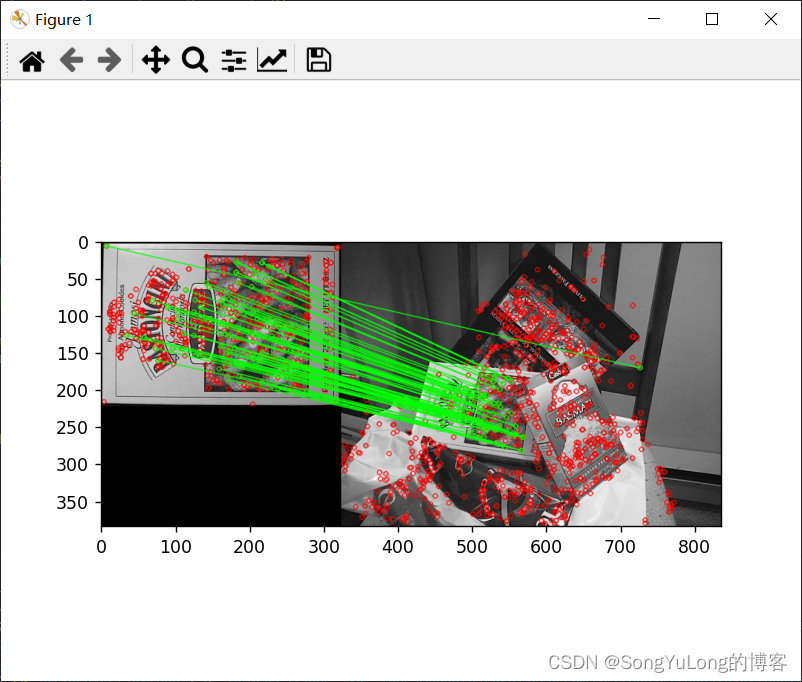

Feature matching

Feature matching in OpenCV

- Brute-force matching

- FLANN matching

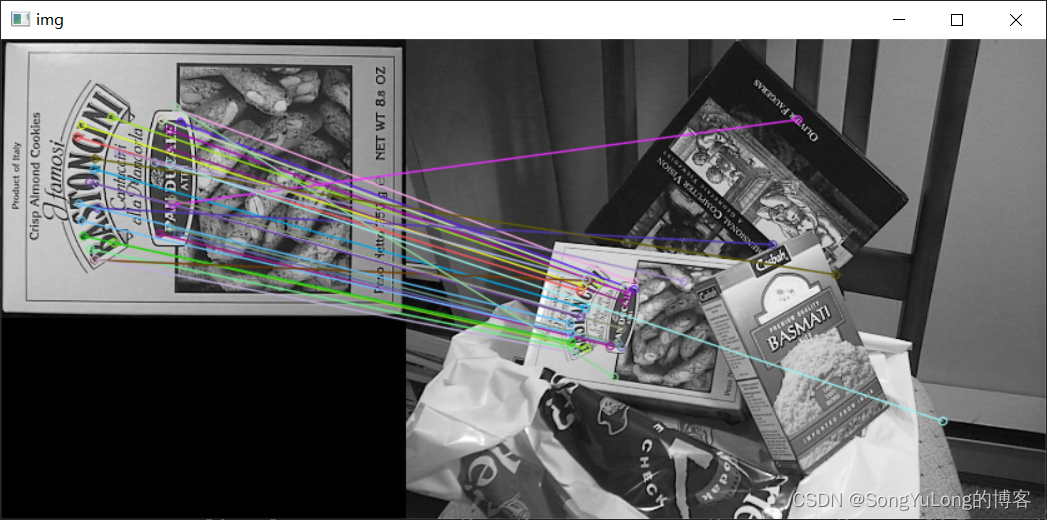

Brute-force matching

Brute-force matching with ORB descriptors

import cv2

img1 = cv2.imread('./resource/opencv/image/box.png', 0)           # query image
img2 = cv2.imread('./resource/opencv/image/box_in_scene.png', 0)  # train image

orb = cv2.ORB_create()
kp1, des1 = orb.detectAndCompute(img1, None)
kp2, des2 = orb.detectAndCompute(img2, None)

# ORB descriptors are binary, so compare them with the Hamming distance
bf = cv2.BFMatcher_create(cv2.NORM_HAMMING, crossCheck=True)
matches = bf.match(des1, des2)

# bf.match(des1, des2) returns a list of DMatch objects with these attributes:
# - DMatch.distance: distance between the descriptors; the smaller, the better
# - DMatch.trainIdx: index of the descriptor in the train descriptors
# - DMatch.queryIdx: index of the descriptor in the query descriptors
# - DMatch.imgIdx: index of the train image

# Sort by distance
matches = sorted(matches, key=lambda x: x.distance)

# Draw the 30 best matches (flags=2 skips keypoints that have no match)
img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:30], None, flags=2)
cv2.imshow('img', img3)
cv2.waitKey(0)
cv2.destroyAllWindows()
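What `crossCheck=True` does can be sketched in plain Python: a pair (i, j) is kept only if train descriptor j is the nearest neighbor of query descriptor i and query descriptor i is also the nearest neighbor of train descriptor j. The example below uses made-up 8-bit descriptors written as integers; the function names and the (queryIdx, trainIdx, distance) tuples are illustrative stand-ins for OpenCV's DMatch objects, not its API.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def bf_match_cross_check(query, train):
    """Brute-force matching that keeps only mutual nearest neighbors."""
    # Nearest train descriptor for every query descriptor.
    best_for_query = [min(range(len(train)), key=lambda j: hamming(q, train[j]))
                      for q in query]
    # Nearest query descriptor for every train descriptor.
    best_for_train = [min(range(len(query)), key=lambda i: hamming(query[i], t))
                      for t in train]
    matches = [(i, j, hamming(query[i], train[j]))
               for i, j in enumerate(best_for_query)
               if best_for_train[j] == i]
    # Smallest distance first, like sorting DMatch objects by distance.
    return sorted(matches, key=lambda m: m[2])


# Made-up 8-bit descriptors.
query = [0b11110000, 0b00001111, 0b10101010]
train = [0b00001110, 0b11110001, 0b11111111]

matches = bf_match_cross_check(query, train)
print(matches)
```

Query descriptor 2 is dropped: its nearest train descriptor is index 0, but that descriptor is closer to query descriptor 1, so the pair is not mutual.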

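Brute-force matching with SIFT descriptors and the ratio test

Now we use BFMatcher.knnMatch() to get the k best matches for each descriptor. In this example we set k = 2 so that we can apply the ratio test described in D. Lowe's paper.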

import cv2
from matplotlib import pyplot as plt

img1 = cv2.imread('./resource/opencv/image/box.png', 0)
img2 = cv2.imread('./resource/opencv/image/box_in_scene.png', 0)

sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# The default norm (L2) suits SIFT's floating-point descriptors
bf = cv2.BFMatcher_create()
matches = bf.knnMatch(des1, des2, k=2)

# Ratio test: keep a match only if it is clearly better than the runner-up
good = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])

# drawMatchesKnn(img1, keypoints1, img2, keypoints2, matches1to2, outImg, matchColor=..., singlePointColor=..., matchesMask=..., flags: int = ...)
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good[:100], None, flags=2)
plt.imshow(img3)
plt.show()

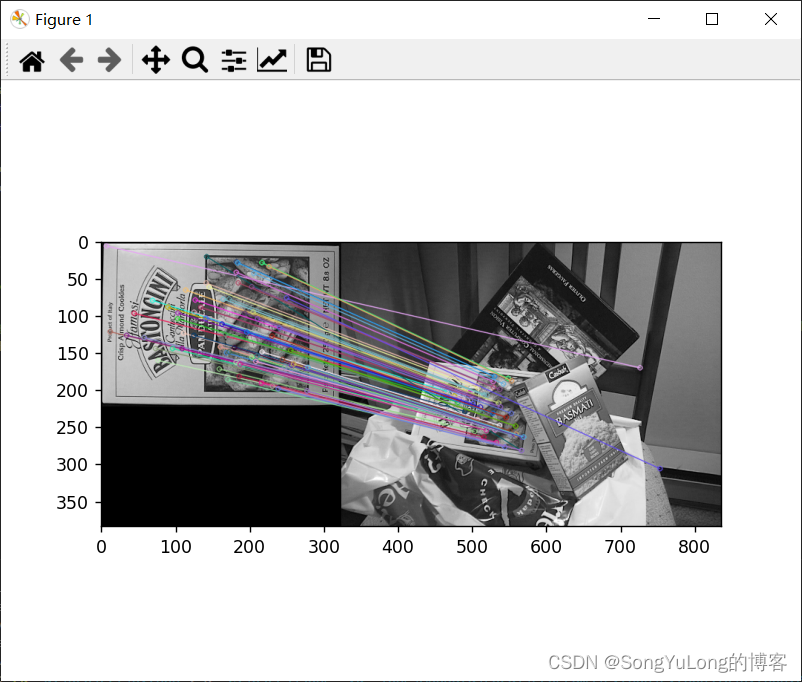
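The ratio test used above can be isolated into a few lines. For each query descriptor, the best match is accepted only when its distance is smaller than a fixed fraction of the second-best distance, which discards ambiguous matches. The distances below are made-up numbers and `ratio_test` is a hypothetical helper name.

```python
def ratio_test(knn_distances, ratio=0.75):
    """Indices of queries whose best match passes the ratio test.

    knn_distances holds one (best, second_best) distance pair per query.
    """
    return [i for i, (best, second) in enumerate(knn_distances)
            if best < ratio * second]


# Made-up (best, second-best) distances for four query descriptors.
pairs = [
    (10.0, 100.0),  # distinctive: best is far ahead of the runner-up
    (80.0, 90.0),   # ambiguous: two candidates are almost equally close
    (30.0, 40.0),   # exactly on the boundary, rejected by the strict "<"
    (30.0, 41.0),   # just inside the boundary
]
accepted = ratio_test(pairs)
print(accepted)
```

Lowering the ratio (the FLANN example in this article uses 0.7) keeps fewer but more reliable matches.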

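FLANN matching

FLANN stands for Fast Library for Approximate Nearest Neighbors. It is a collection of algorithms optimized for fast nearest-neighbor search in large datasets and for high-dimensional features. On large datasets it works faster than BFMatcher. Let's apply FLANN matching to the second example and see how it performs.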

import cv2
from matplotlib import pyplot as plt

img1 = cv2.imread('./resource/opencv/image/box.png', 0)
img2 = cv2.imread('./resource/opencv/image/box_in_scene.png', 0)

sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# Created without arguments, the matcher uses its default index and search parameters
flann = cv2.FlannBasedMatcher_create()
matches = flann.knnMatch(des1, des2, k=2)

# Mask that selects which matches to draw; start with none selected
matchesMask = [[0, 0] for _ in range(len(matches))]

# Ratio test: draw only the best match of each pair that passes
for i, (m, n) in enumerate(matches):
    if m.distance < 0.7 * n.distance:
        matchesMask[i] = [1, 0]

draw_params = dict(matchColor=(0, 255, 0),
                   singlePointColor=(255, 0, 0),
                   matchesMask=matchesMask,
                   flags=0)
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)
plt.imshow(img3)
plt.show()
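The FLANN example above relies on the matcher's default parameters. The matcher can also be configured with two dictionaries: index parameters that choose the search structure, and search parameters that trade speed for accuracy. The sketch below shows one possible configuration. The specific values (5 trees, 50 checks, the LSH settings) follow those commonly shown in the OpenCV tutorials and are starting points, not tuned recommendations. The algorithm IDs are defined by hand, as the tutorials do, and `make_flann_matcher` is a hypothetical helper.

```python
# Algorithm identifiers from FLANN's enumeration, defined by hand.
FLANN_INDEX_KDTREE = 1
FLANN_INDEX_LSH = 6

# Floating-point descriptors (SIFT, SURF): randomized k-d trees.
sift_index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)

# Binary descriptors (ORB, BRIEF): locality-sensitive hashing.
orb_index_params = dict(algorithm=FLANN_INDEX_LSH,
                        table_number=6,
                        key_size=12,
                        multi_probe_level=1)

# Number of times the index is traversed; higher is more precise but slower.
search_params = dict(checks=50)


def make_flann_matcher(index_params, search_params):
    """Build a configured matcher; cv2 is imported only when this is called."""
    import cv2
    return cv2.FlannBasedMatcher(index_params, search_params)


print(sift_index_params)
print(orb_index_params)
print(search_params)
```

With SIFT descriptors, `make_flann_matcher(sift_index_params, search_params)` could replace the `cv2.FlannBasedMatcher_create()` call in the example; the `knnMatch` and drawing code would stay the same.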