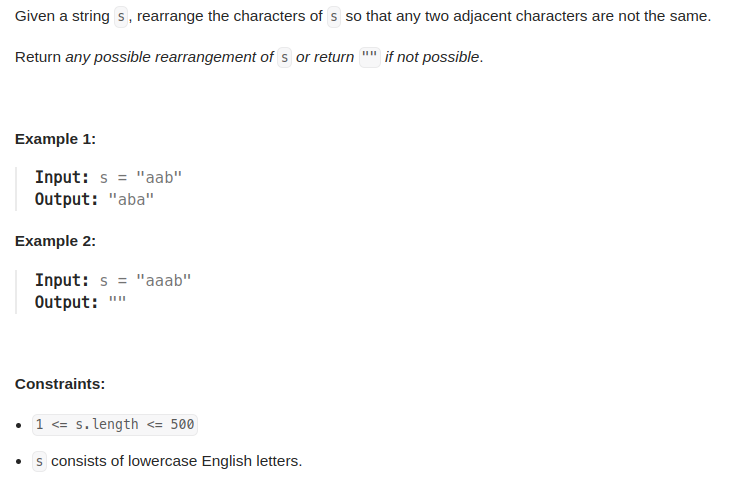

2023.8.19

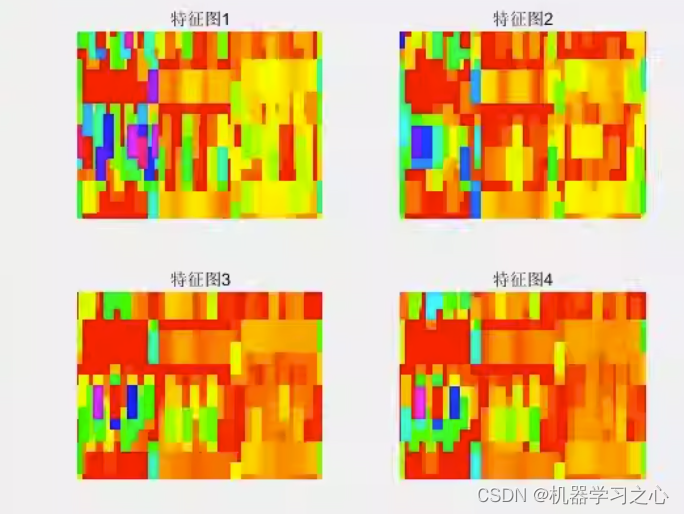

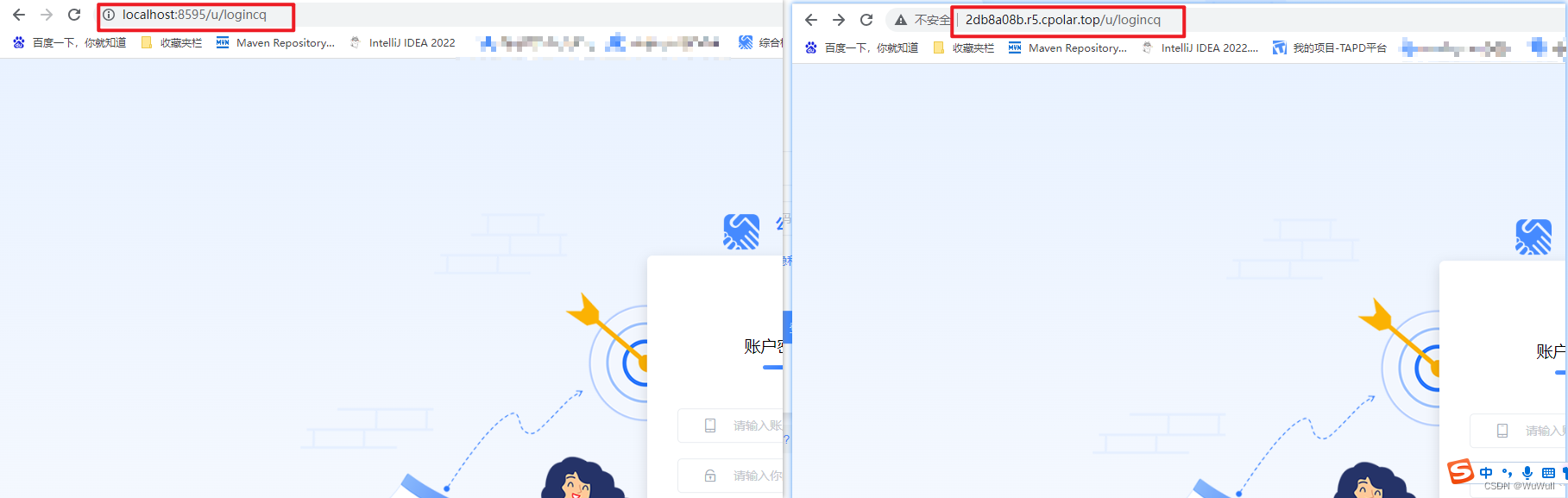

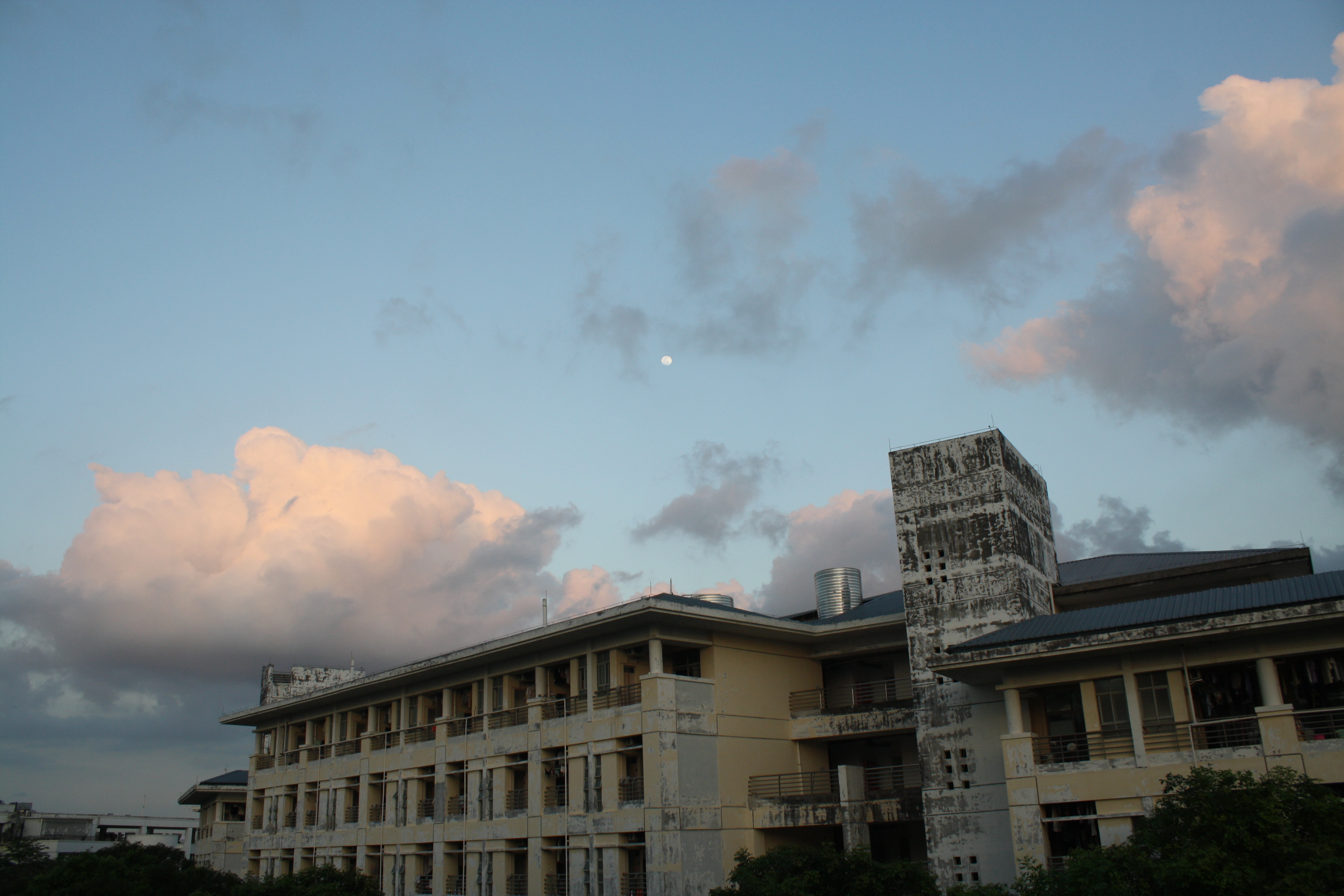

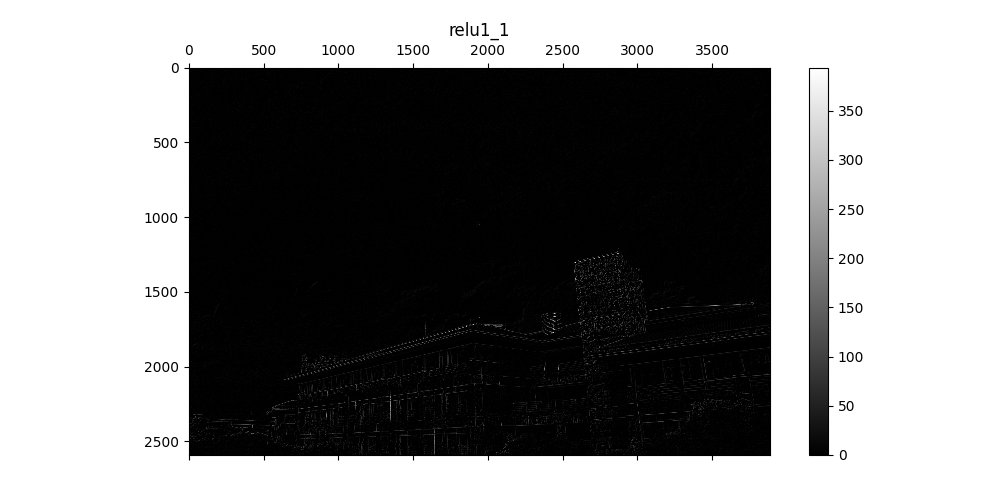

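Convolution in deep learning is very abstract for beginners. When I was first learning it, it scared off a whole crowd of people on the spot; luckily, I stuck with it. The figures here show the image used for the visualization (a corner of our school!!!) and how it changes after one convolution and one ReLU.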
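To make "one convolution and one ReLU" less abstract, here is a minimal NumPy sketch on a toy 5x5 image. It is only an illustration, not the VGG code from this post: the helper names `conv2d_same` and `relu` and the hand-written edge kernel are assumptions made for the example, whereas a real network learns its kernels.

```python
import numpy as np

def conv2d_same(image, kernel):
    """Naive 2-D cross-correlation with zero padding (output size = input size).

    Assumes an odd-sized kernel, e.g. 3x3.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="constant")
    out = np.zeros(image.shape, dtype=np.float64)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            # Multiply the window by the kernel element-wise and sum it up.
            out[r, c] = np.sum(padded[r:r + kh, c:c + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, set negative ones to zero."""
    return np.maximum(x, 0.0)

# Toy image: dark on the left, bright on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=np.float64)

# Hypothetical hand-written kernel that responds to left-to-right brightening.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=np.float64)

conv_out = conv2d_same(image, kernel)
relu_out = relu(conv_out)

print(conv_out[2])  # middle row after the convolution
print(relu_out[2])  # same row after ReLU: negative responses are gone
```

The convolution gives a strong positive response where the image goes from dark to bright and a negative response at the right border, where the zero padding makes it look like the image goes dark again. ReLU then throws the negative part away, which is the kind of change the feature-map figures show.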

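My GPU is an RTX 3050 Ti, and this image already triggered a CUDA out of memory error on it. In case other images cause the same problem, I have attached cat.jpg, which is small enough to complete the experiment.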
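A rough back-of-the-envelope estimate shows why a large photo can run out of memory. The sketch below only counts the size of a single float32 feature map tensor in each of the five VGG-19 blocks (64, 128, 256, 512, 512 output channels), halving the resolution after each pooling step. The function name `block_activation_bytes` and the 3000x4000 image size are assumptions for illustration; the real memory use of TensorFlow will be different and is not measured here.

```python
import math

# Output channels of the five VGG-19 convolution blocks.
VGG19_BLOCK_CHANNELS = (64, 128, 256, 512, 512)

def block_activation_bytes(height, width, bytes_per_value=4):
    """Size in bytes of ONE feature map tensor in each VGG-19 block."""
    sizes = []
    h, w = height, width
    for channels in VGG19_BLOCK_CHANNELS:
        sizes.append(h * w * channels * bytes_per_value)
        # 2x2 max pooling with stride 2 and 'SAME' padding rounds up.
        h, w = math.ceil(h / 2), math.ceil(w / 2)
    return sizes

# Hypothetical large photo versus a small 224x224 image.
for h, w in [(3000, 4000), (224, 224)]:
    mib = [b / 2**20 for b in block_activation_bytes(h, w)]
    print((h, w), ["%.1f MiB" % m for m in mib])
```

Under these assumptions, a single first-block feature map for the large photo already takes about 2.9 GiB, while the 224x224 image needs roughly 12 MiB, so shrinking the input image is the simplest way around the error.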

The code is copied from an expert and was written for TensorFlow 1. If you are using TensorFlow 2, remember to add:

import tensorflow.compat.v1 as tf

Code:

```python
# coding: utf-8

# # Using the pretrained VGG network

# In[1]:
import os

import imageio
import matplotlib.pyplot as plt
import numpy as np
import scipy.io
import tensorflow.compat.v1 as tf  # TF1-style API, also available in TensorFlow 2

# get_ipython().magic(u'matplotlib inline')

print("All packages loaded")

# In[2]:
# Download the pretrained VGG-19 model in MATLAB .mat format; it is read with scipy later.
# (Note: this version of the model differs from the latest one at
# http://www.vlfeat.org/matconvnet/pretrained/)
if not os.path.isfile('./data/imagenet-vgg-verydeep-19.mat'):
    os.makedirs('data', exist_ok=True)
    os.system('wget -O data/imagenet-vgg-verydeep-19.mat '
              'http://www.vlfeat.org/matconvnet/models/beta16/imagenet-vgg-verydeep-19.mat')


# # Define the network

# In[3]:
def net(data_path, input_image):
    layers = (
        'conv1_1', 'relu1_1', 'conv1_2', 'relu1_2', 'pool1',
        'conv2_1', 'relu2_1', 'conv2_2', 'relu2_2', 'pool2',
        'conv3_1', 'relu3_1', 'conv3_2', 'relu3_2', 'conv3_3',
        'relu3_3', 'conv3_4', 'relu3_4', 'pool3',
        'conv4_1', 'relu4_1', 'conv4_2', 'relu4_2', 'conv4_3',
        'relu4_3', 'conv4_4', 'relu4_4', 'pool4',
        'conv5_1', 'relu5_1', 'conv5_2', 'relu5_2', 'conv5_3',
        'relu5_3', 'conv5_4', 'relu5_4'
    )
    data = scipy.io.loadmat(data_path)
    mean_pixel = [103.939, 116.779, 123.68]
    weights = data['layers'][0]
    net = {}
    current = input_image
    for i, name in enumerate(layers):
        kind = name[:4]
        if kind == 'conv':
            kernels, bias = weights[i][0][0][0][0]
            # matconvnet: weights are [width, height, in_channels, out_channels]
            # tensorflow: weights are [height, width, in_channels, out_channels]
            kernels = np.transpose(kernels, (1, 0, 2, 3))
            bias = bias.reshape(-1)
            current = _conv_layer(current, kernels, bias)
        elif kind == 'relu':
            current = tf.nn.relu(current)
        elif kind == 'pool':
            current = _pool_layer(current)
        net[name] = current  # keep the output of every layer
    assert len(net) == len(layers)
    return net, mean_pixel, layers


print("Network for VGG ready")


# # Define the model

# In[4]:
def _conv_layer(input, weights, bias):
    conv = tf.nn.conv2d(input, tf.constant(weights), strides=(1, 1, 1, 1),
                        padding='SAME')
    return tf.nn.bias_add(conv, bias)


def _pool_layer(input):
    return tf.nn.max_pool(input, ksize=(1, 2, 2, 1), strides=(1, 2, 2, 1),
                          padding='SAME')


def preprocess(image, mean_pixel):
    return image - mean_pixel


def unprocess(image, mean_pixel):
    return image + mean_pixel


def imread(path):
    # scipy.misc.imread is gone from current SciPy releases, so use imageio.
    return imageio.imread(path).astype(np.float32)


def imsave(path, img):
    # scipy.misc.imsave is gone from current SciPy releases, so use imageio.
    img = np.clip(img, 0, 255).astype(np.uint8)
    imageio.imwrite(path, img)


print("Functions for VGG ready")

# # Run

# In[5]:
cwd = os.getcwd()
VGG_PATH = os.path.join(cwd, "data", "imagenet-vgg-verydeep-19.mat")
IMG_PATH = os.path.join(cwd, "images", "cat.jpg")
input_image = imread(IMG_PATH)
shape = (1,) + input_image.shape  # (h, w, nch) => (1, h, w, nch)

with tf.Graph().as_default(), tf.Session() as sess:
    image = tf.placeholder('float', shape=shape)
    nets, mean_pixel, all_layers = net(VGG_PATH, image)
    input_image_pre = np.array([preprocess(input_image, mean_pixel)])
    layers = all_layers  # For all layers
    # layers = ('relu2_1', 'relu3_1', 'relu4_1')
    for i, layer in enumerate(layers):
        print("[%d/%d] %s" % (i + 1, len(layers), layer))
        features = nets[layer].eval(feed_dict={image: input_image_pre})
        print(" Type of 'features' is ", type(features))
        print(" Shape of 'features' is %s" % (features.shape,))
        # Plot the response of the first channel of this layer
        plt.figure(i + 1, figsize=(10, 5))
        plt.matshow(features[0, :, :, 0], cmap=plt.cm.gray, fignum=i + 1)
        plt.title(layer)
        plt.colorbar()
        plt.show()
```
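The loop above only plots channel 0 of each layer, while `features` has shape `(1, h, w, channels)`. As an optional extra, here is a small NumPy sketch that tiles the first few channels into one picture. The helper `tile_channels` and its `rows`/`cols` parameters are made up for this example and are not part of the original code; the random array just stands in for a real `features` result.

```python
import numpy as np

def tile_channels(features, rows=4, cols=8):
    """Tile the first rows*cols channels of a (1, h, w, c) array into one 2-D image."""
    _, h, w, c = features.shape
    n = min(rows * cols, c)
    grid = np.zeros((rows * h, cols * w), dtype=np.float32)
    for k in range(n):
        fmap = features[0, :, :, k].astype(np.float32)
        span = fmap.max() - fmap.min()
        # Rescale each channel to [0, 1] so that weak channels stay visible.
        if span > 0:
            fmap = (fmap - fmap.min()) / span
        else:
            fmap = np.zeros_like(fmap)
        r, col = divmod(k, cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = fmap
    return grid

# Random stand-in for a real `features` array with 64 channels.
rng = np.random.default_rng(0)
demo = rng.normal(size=(1, 6, 5, 64)).astype(np.float32)
grid = tile_channels(demo)
print(grid.shape)  # (24, 40): 4 rows of 6-pixel maps, 8 columns of 5-pixel maps
```

Inside the loop, something like `plt.matshow(tile_channels(features), cmap=plt.cm.gray)` would then show 32 channels at once. Because every channel is rescaled separately, brightness can no longer be compared across channels, so the colorbar loses its meaning in this view.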