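Contents: emptyDir, hostPath, introduction to PV and PVC, NFS as a static PV, NFS as a dynamic PV, using a local folder as a PV, changing the default storage class and reclaim policy, references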

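Files in an emptyDir volume survive a container restart. If the redis process is killed, the container restarts and the file created below is still there. The data is lost only when the Pod is removed from its node.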
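The walk-through below installs procps only so that ps can be used to find the redis PID. Where installing packages is not an option, the same information can be read from /proc. The bash sketch below assumes a Linux /proc filesystem; find_pids is a hypothetical helper name. The kernel truncates /proc/<pid>/comm to 15 characters, so longer process names have to be shortened to match.

```shell
#!/usr/bin/env bash
# find_pids NAME (hypothetical helper): print the PID of every process whose
# command name, as stored in /proc/<pid>/comm, equals NAME exactly.
# Note: the kernel truncates comm to 15 characters.
find_pids() {
  local name=$1 comm_file pid comm
  for comm_file in /proc/[0-9]*/comm; do
    # /proc/1234/comm -> 1234
    pid=${comm_file#/proc/}
    pid=${pid%/comm}
    # A process may exit between the glob and the read; skip it if so.
    comm=$(cat "$comm_file" 2>/dev/null) || continue
    if [ "$comm" = "$name" ]; then
      echo "$pid"
    fi
  done
}
```

Inside the redis container, assuming a single process named redis-server, `kill "$(find_pids redis-server)"` would replace the `ps aux` and `kill <pid>` pair.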

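Create the Pod:

# kubectl apply -f redis.yaml

Check that the Pod is running and watch it for changes:

# kubectl get pod redis --watch

In another terminal, enter the container:

# kubectl exec -it redis -- /bin/bash

In the shell, go to /data/redis and create a file:

# cd /data/redis/

# echo Hello > test-file

Find the redis process (if ps is not available in the image, install procps first):

# apt-get update

# apt-get install procps

# ps aux

Kill the redis process and watch how the redis Pod changes:

# kill <pid>

Enter the redis container again and check whether the file still exists:

# kubectl exec -it redis -- /bin/bash

[root@k8s-01 chapter07]# cat redis.yaml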

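apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
  - name: redis
    image: redis
    volumeMounts:
    - name: redis-storage
      mountPath: /data/redis
  volumes:
  - name: redis-storage
    emptyDir: {}

With hostPath, the files stay on the node: as long as the Pod is scheduled onto the same node, it finds the same files.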

[root@k8s-01 chapter07]# cat hostpath.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - image: nginx
    name: test-container
    volumeMounts:
    - name: test-volume
      mountPath: /usr/share/nginx
  volumes:
  - name: test-volume
    hostPath:
      path: /data

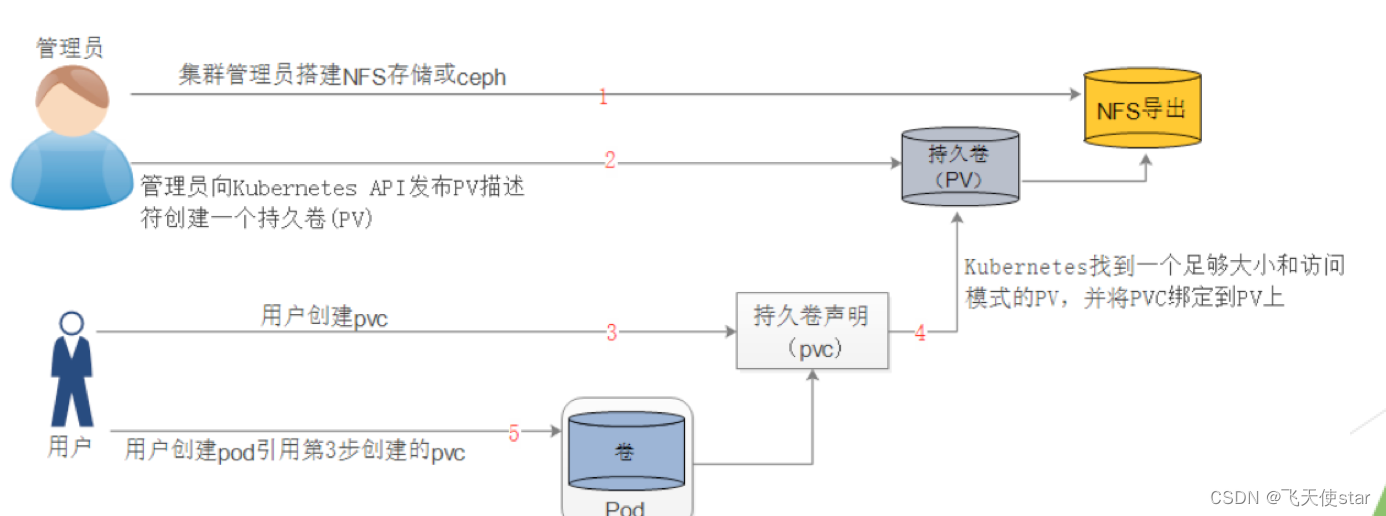

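PV (PersistentVolume): a piece of storage in the cluster, provisioned by an administrator or dynamically through a StorageClass.

PVC (PersistentVolumeClaim): a user's request for storage, stating the size and access modes needed; Kubernetes binds it to a PV that satisfies the request.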
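To make the matching between a PVC's request and the available PVs concrete, here is a deliberately simplified bash sketch. It compares capacities only (in whole Gi) and, as far as I know mirroring the binder's preference, picks the smallest PV that is at least as large as the request. The real controller also checks access modes, the storage class and label selectors; among equally sized PVs this sketch simply keeps the first one listed. The function pick_pv and its name:size argument format are hypothetical.

```shell
#!/usr/bin/env bash
# pick_pv REQUEST_GI NAME:SIZE_GI... (hypothetical helper)
# Prints the name of the smallest PV whose size is >= the request.
# Returns 1 if no PV is large enough. Ties keep the first PV given.
pick_pv() {
  local request=$1
  shift
  local best="" best_size=0 entry name size
  for entry in "$@"; do
    name=${entry%%:*}
    size=${entry#*:}
    if [ "$size" -ge "$request" ]; then
      if [ -z "$best" ] || [ "$size" -lt "$best_size" ]; then
        best=$name
        best_size=$size
      fi
    fi
  done
  if [ -z "$best" ]; then
    return 1
  fi
  echo "$best"
}
```

With the five NFS PVs from the static example in this post, `pick_pv 2 pv001:1 pv002:1 pv003:1 pv004:2 pv005:2` prints pv004, the same PV that mypvc ends up bound to in the kubectl get pvc output.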

NFS as a static PV: first add the NFS exports.

[root@k8s-01 data]# vim /etc/exports

[root@k8s-01 data]# exportfs -rv

exporting 192.168.100.0/24:/data/nfs5

exporting 192.168.100.0/24:/data/nfs4

exporting 192.168.100.0/24:/data/nfs3

exporting 192.168.100.0/24:/data/nfs2

exporting 192.168.100.0/24:/data/nfs1

[root@k8s-01 data]# cat /etc/exports

/data/nfs1 192.168.100.0/24(rw,async,insecure,no_root_squash)

/data/nfs2 192.168.100.0/24(rw,async,insecure,no_root_squash)

/data/nfs3 192.168.100.0/24(rw,async,insecure,no_root_squash)

/data/nfs4 192.168.100.0/24(rw,async,insecure,no_root_squash)

/data/nfs5 192.168.100.0/24(rw,async,insecure,no_root_squash)
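The five export entries above differ only in the directory number, so they can also be generated. The bash sketch below reuses the same export options; make_export_dirs and gen_exports are hypothetical helper names, and the directories must exist before exportfs -rv is run.

```shell
#!/usr/bin/env bash
# make_export_dirs BASE (hypothetical helper): create BASE/nfs1 .. BASE/nfs5.
make_export_dirs() {
  local base=$1 i
  for i in 1 2 3 4 5; do
    mkdir -p "$base/nfs$i"
  done
}

# gen_exports BASE CIDR (hypothetical helper): print one /etc/exports line per
# directory, with the same options as the listing above.
gen_exports() {
  local base=$1 cidr=$2 i
  for i in 1 2 3 4 5; do
    printf '%s/nfs%d %s(rw,async,insecure,no_root_squash)\n' "$base" "$i" "$cidr"
  done
}
```

On the NFS server this would be used as `make_export_dirs /data && gen_exports /data 192.168.100.0/24 > /etc/exports && exportfs -rv`; note that the redirection overwrites any existing /etc/exports.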

[root@k8s-01 data]# yum install -y nfs-utils rpcbind

On the client nodes, install the NFS client:

# yum install -y nfs-utils

Create the PVs and list them:

# showmount -e 192.168.20.88

# kubectl create -f nfs-pv.yaml

# kubectl get pv

Create the PVC:

# kubectl create -f nfs-pvc.yml

Check whether the PV and the PVC are bound:

# kubectl get pvc

Create a Pod that uses the PVC created above:

# kubectl create -f nginx-pvc.yml

# kubectl get pod nginx-vol-pvc -o yaml

[root@k8s-01 chapter07]# cat nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv001
  labels:
    name: pv001
spec:
  nfs:
    path: /data/nfs1
    server: 192.168.20.111
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv002
  labels:
    name: pv002
spec:
  nfs:
    path: /data/nfs2
    server: 192.168.20.111
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv003
  labels:
    name: pv003
spec:
  nfs:
    path: /data/nfs3
    server: 192.168.20.111
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv004
  labels:
    name: pv004
spec:
  nfs:
    path: /data/nfs4
    server: 192.168.20.111
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv005
  labels:
    name: pv005
spec:
  nfs:
    path: /data/nfs5
    server: 192.168.20.111
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 2Gi
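nfs-pv.yaml repeats the same PersistentVolume five times, with only the name, path and size changing. The bash sketch below prints an equivalent multi-document manifest. gen_nfs_pvs is a hypothetical helper name; the server address and base path are parameters (pass whichever address your NFS server actually listens on), and the 1Gi/2Gi split simply reproduces the file above.

```shell
#!/usr/bin/env bash
# gen_nfs_pvs SERVER BASE (hypothetical helper): print five NFS-backed
# PersistentVolumes pv001..pv005 that use BASE/nfs1..BASE/nfs5.
# pv001-pv003 get 1Gi and pv004-pv005 get 2Gi, as in nfs-pv.yaml.
gen_nfs_pvs() {
  local server=$1 base=$2 i size
  for i in 1 2 3 4 5; do
    if [ "$i" -le 3 ]; then size=1Gi; else size=2Gi; fi
    # Separate the YAML documents with "---".
    if [ "$i" -gt 1 ]; then echo '---'; fi
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv00$i
  labels:
    name: pv00$i
spec:
  nfs:
    path: $base/nfs$i
    server: $server
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: $size
EOF
  done
}
```

The output can be piped straight to kubectl, for example `gen_nfs_pvs 192.168.20.111 /data | kubectl create -f -`.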

[root@k8s-01 chapter07]# cat nfs-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 2Gi

[root@k8s-01 chapter07]# cat nginx-pvc.yml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-vol-pvc
  namespace: default
spec:
  containers:
  - name: mywww
    image: nginx
    volumeMounts:
    - name: www
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www
    persistentVolumeClaim:
      claimName: mypvc

[root@k8s-01 chapter07]# kubectl get pv

NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM           STORAGECLASS   REASON   AGE
pv001   1Gi        RWO,RWX        Retain           Available                                           2m46s
pv002   1Gi        RWO,RWX        Retain           Available                                           2m46s
pv003   1Gi        RWO,RWX        Retain           Available                                           2m46s
pv004   2Gi        RWO,RWX        Retain           Bound       default/mypvc                           2m46s
pv005   2Gi        RWO,RWX        Retain           Available                                           2m46s

[root@k8s-01 chapter07]# kubectl get pvc

NAME    STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mypvc   Bound    pv004    2Gi        RWO,RWX                       2m8s

Enter the Pod and check the mount:

[root@k8s-01 chapter07]# kubectl exec -it nginx-vol-pvc -- bash

root@nginx-vol-pvc:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 50G 11G 40G 21% /

tmpfs 64M 0 64M 0% /dev

tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda2 50G 11G 40G 21% /etc/hosts

shm 64M 0 64M 0% /dev/shm

192.168.100.30:/data/nfs4 50G 8.9G 41G 18% /usr/share/nginx/html

tmpfs 1.9G 12K 1.9G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 1.9G 0 1.9G 0% /proc/acpi

tmpfs 1.9G 0 1.9G 0% /proc/scsi

tmpfs 1.9G 0 1.9G 0% /sys/firmware

NFS as a dynamic PV: install and deploy the storage provisioner.

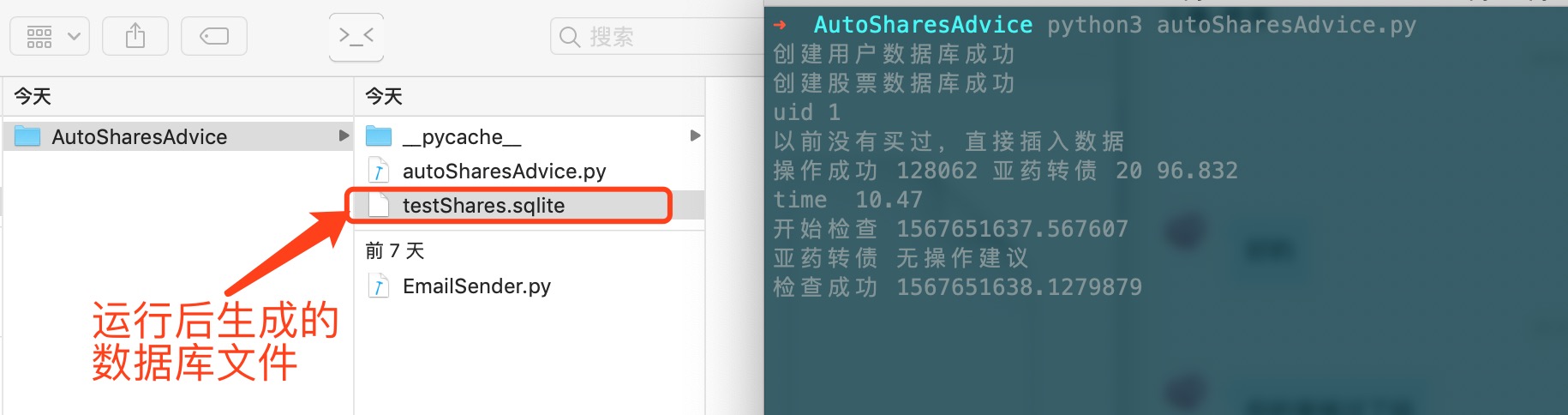

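Create the service account:

# kubectl create -f serviceaccount.yaml

Create the cluster role and bind it to the service account:

# kubectl create -f clusterrole.yaml

# kubectl create -f clusterrolebinding.yaml

Create the role and bind it to the service account:

# kubectl create -f role.yaml

# kubectl create -f rolebinding.yaml

Create the dynamic StorageClass:

# kubectl create -f class.yaml

Deploy the provisioner:

# kubectl create -f deployment.yaml

Note: all of the above can also be applied in one step with kubectl apply -f ./nfs-de

Create the PVC and the Pod, then check whether the StorageClass created a PV for the PVC and bound it:

# kubectl create -f test-claim.yaml

# kubectl create -f test-pod.yaml

# kubectl get pvc -n aishangwei

File contents: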

[root@k8s-01 nfs-de]# ll

total 28

-rw-r--r--. 1 root root 247 Aug 22 10:32 class.yaml

-rw-r--r--. 1 root root 306 Aug 22 10:32 clusterrolebinding.yaml

-rw-r--r--. 1 root root 525 Aug 22 10:32 clusterrole.yaml

-rw-r--r--. 1 root root 901 Aug 24 13:58 deployment.yaml

-rw-r--r--. 1 root root 311 Aug 22 10:32 rolebinding.yaml

-rw-r--r--. 1 root root 228 Aug 22 10:32 role.yaml

-rw-r--r--. 1 root root 76 Aug 22 10:32 serviceaccount.yaml

[root@k8s-01 nfs-de]# cat class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
reclaimPolicy: Retain
parameters:
  archiveOnDelete: "false"

[root@k8s-01 nfs-de]# cat clusterrolebinding.yaml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

[root@k8s-01 nfs-de]# cat clusterrole.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]

[root@k8s-01 nfs-de]# cat deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: quay.io/external_storage/nfs-client-provisioner:latest
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs
        - name: NFS_SERVER
          value: 192.168.100.30
        - name: NFS_PATH
          value: /data/nfs1
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.100.30
          path: /data/nfs1
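The comment in class.yaml says the StorageClass provisioner must match the PROVISIONER_NAME environment variable in deployment.yaml; if no running provisioner uses that name, PVCs of this class stay Pending. A rough bash check is sketched below. It is a text match, not a YAML parser: it assumes the files are laid out as shown here (provisioner: on one line, value: on the line directly after - name: PROVISIONER_NAME), and check_provisioner_name is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_provisioner_name CLASS_FILE DEPLOYMENT_FILE (hypothetical helper)
# Compares "provisioner:" in the StorageClass with the value that follows
# "name: PROVISIONER_NAME" in the Deployment. Returns 1 on mismatch.
check_provisioner_name() {
  local class_file=$1 deploy_file=$2 in_class in_deploy
  # "provisioner: fuseim.pri/ifs # comment" -> "fuseim.pri/ifs"
  in_class=$(awk '$1 == "provisioner:" { print $2; exit }' "$class_file")
  # The "value:" line directly after "- name: PROVISIONER_NAME"
  in_deploy=$(awk '/name: PROVISIONER_NAME/ { getline; print $2; exit }' "$deploy_file")
  if [ -n "$in_class" ] && [ "$in_class" = "$in_deploy" ]; then
    echo "match: $in_class"
  else
    echo "mismatch: class=\"$in_class\" deployment=\"$in_deploy\"" >&2
    return 1
  fi
}
```

Run as `check_provisioner_name nfs-de/class.yaml nfs-de/deployment.yaml` before kubectl apply; for the two files in this post both sides are fuseim.pri/ifs.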

[root@k8s-01 nfs-de]# cat rolebinding.yaml

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

[root@k8s-01 nfs-de]# cat role.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]

[root@k8s-01 nfs-de]# cat serviceaccount.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner

[root@k8s-01 chapter07]# cat test-claim.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: aishangwei
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  namespace: aishangwei
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Mi

[root@k8s-01 chapter07]# cat test-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
  namespace: aishangwei
spec:
  containers:
  - name: test-pod
    image: busybox
    command:
    - "/bin/sh"
    args:
    - "-c"
    - "touch /mnt/aishangwei-SUCCESS && exit 0 || exit 1"
    volumeMounts:
    - name: nfs-pvc
      mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
  - name: nfs-pvc
    persistentVolumeClaim:
      claimName: test-claim
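Once test-pod has completed, the file it touched should be visible on the NFS server. As far as I know, nfs-client-provisioner creates one directory per volume under NFS_PATH, named ${namespace}-${pvcName}-${pvName}, and dynamically provisioned PV names start with pvc-. The bash sketch below relies on that naming convention to locate the directory for a claim; find_claim_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# find_claim_dir NFS_PATH NAMESPACE PVC_NAME (hypothetical helper)
# Prints each directory named NAMESPACE-PVC_NAME-pvc-* under NFS_PATH,
# assuming the ${namespace}-${pvcName}-${pvName} naming convention.
# Returns 1 if no such directory exists.
find_claim_dir() {
  local base=$1 ns=$2 pvc=$3 dir found=1
  for dir in "$base/$ns-$pvc-"pvc-*; do
    # If nothing matches, the glob stays literal; -d filters that out.
    if [ -d "$dir" ]; then
      echo "$dir"
      found=0
    fi
  done
  return "$found"
}
```

On the NFS server, with NFS_PATH=/data/nfs1 as in deployment.yaml and a single matching directory, `ls "$(find_claim_dir /data/nfs1 aishangwei test-claim)"` should list aishangwei-SUCCESS.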

Using a local folder as a PV: on the node, create the folder and a file in it.

# mkdir /mnt/data

# echo 'Hello from Kubernetes storage' > /mnt/data/index.html

Create the PV and view its details:

# kubectl create -f pv-volume.yaml

# kubectl get pv task-pv-volume

Create the PVC and check the PV and PVC:

# kubectl create -f pv-claim.yaml

# kubectl get pv task-pv-volume

# kubectl get pvc task-pv-claim

Create a Pod that uses the PVC:

# kubectl create -f pv-pod.yaml

# kubectl get pod task-pv-pod

[root@k8s-01 chapter07]# cat pv-volume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"

[root@k8s-01 chapter07]# cat pv-claim.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi

[root@k8s-01 chapter07]# cat pv-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: nginx
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

Changing the default storage class and the reclaim policy:

1. View the storage classes:

# kubectl get storageclass

2. Mark the storage class as non-default:

# kubectl patch storageclass <your-class-name> -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'

3. Mark the storage class as default:

# kubectl patch storageclass <your-class-name> -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

To change a reclaim policy, list the persistent volumes:

# kubectl get pv

Choose a persistent volume and change its reclaim policy:

# kubectl patch pv <your-pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

Check that the setting is correct:

# kubectl get pv
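The JSON passed to kubectl patch -p is easy to break, for example when curly quotes from a copied command end up in the shell instead of plain ones. A small bash sketch that builds the same patch bodies is below. The function names are hypothetical; the sketch accepts only true/false for the default-class annotation and only Retain/Delete for the reclaim policy (Recycle is deprecated, so it is left out on purpose).

```shell
#!/usr/bin/env bash
# default_class_patch true|false (hypothetical helper): patch body that sets
# the storageclass.kubernetes.io/is-default-class annotation.
default_class_patch() {
  case $1 in
    true|false) ;;
    *) echo "expected true or false, got: $1" >&2; return 1 ;;
  esac
  printf '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"%s"}}}\n' "$1"
}

# reclaim_policy_patch Retain|Delete (hypothetical helper): patch body for a PV.
reclaim_policy_patch() {
  case $1 in
    Retain|Delete) ;;
    *) echo "expected Retain or Delete, got: $1" >&2; return 1 ;;
  esac
  printf '{"spec":{"persistentVolumeReclaimPolicy":"%s"}}\n' "$1"
}
```

For example, to make the NFS class from this post the default: `kubectl patch storageclass managed-nfs-storage -p "$(default_class_patch true)"`.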

https://edu.csdn.net/course/detail/27762?spm=1003.2449.3001.8295.3