Recognizing CAPTCHAs with Deep Convolutional Neural Networks

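Contents

- Recognizing CAPTCHAs with Deep Convolutional Neural Networks

- I. Introduction

- II. Importing the required libraries

- III. Preventing TensorFlow from occupying all GPU memory

- IV. Defining and testing the data generator

- V. Defining the network

- VI. Training the model

- VII. Testing the model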

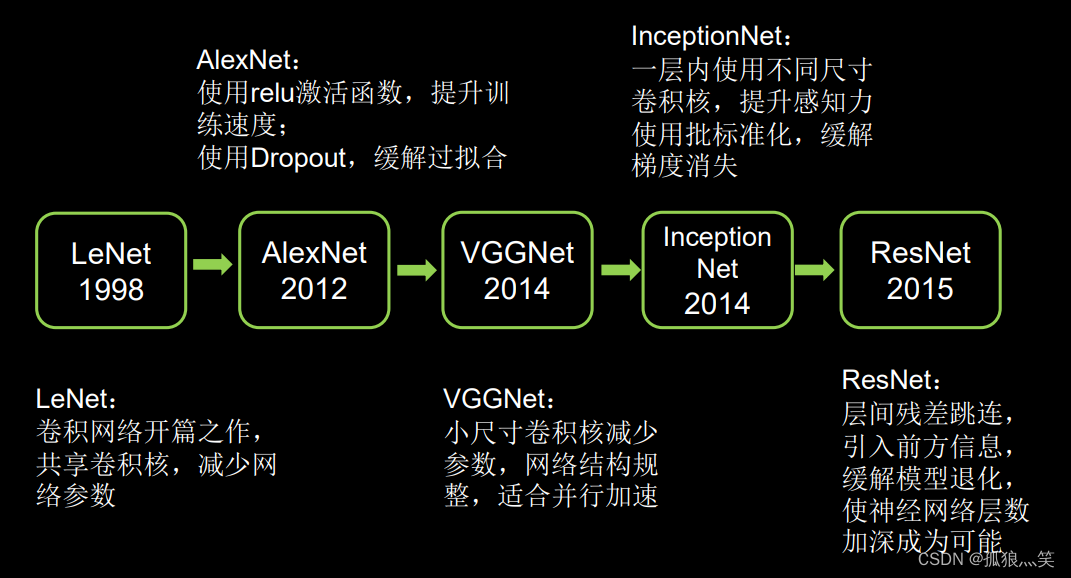

I. Introduction

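CAPTCHAs are meant to decide whether a visitor to a website is a real person. With the progress of artificial intelligence, and of deep convolutional neural networks in particular, the line that CAPTCHAs draw between machines and humans is being breached step by step. The captcha library used in this article can generate both audio and image CAPTCHAs automatically; the CAPTCHA text can be made up of digits, uppercase letters and lowercase letters.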

![image](https://img-blog.csdnimg.cn/4ebe735645b94aafbe43623396912b3d.png)

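Helpful prerequisites:

- Basic Python scripting;

- Basic theory of image processing and computer vision;

- Some familiarity with OpenCV;

- Hands-on experience with image processing.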

![image](https://img-blog.csdnimg.cn/a8196896615543bb8342ac94df18d4aa.png)

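Goal: build the whole pipeline in Python with libraries such as numpy, pillow, tensorflow and keras, and get it working end to end. Along the way we learn how to generate CAPTCHA training data automatically with captcha, and how to write and train the recognition network with TensorFlow, using its high-level Keras API for the main body of the network.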

II. Importing the required libraries

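We need a library called captcha to generate the CAPTCHAs; it can be installed with `pip install captcha`.

The CAPTCHAs we generate consist of digits and uppercase letters.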
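The choice of alphabet fixes the size of the label space. The short calculation below, assuming the 4-character length (`n_len = 4`) used later in the article, shows why it is far more practical to predict each character position separately than to treat every possible string as its own class.

```python
import string

# Same alphabet as the article: 10 digits + 26 uppercase letters
characters = string.digits + string.ascii_uppercase
n_len = 4                   # characters per CAPTCHA (assumed, as used later)
n_class = len(characters)   # classes per character position

# Treating each whole string as one class would need n_class ** n_len classes ...
whole_string_classes = n_class ** n_len
# ... whereas one softmax per position needs only n_len * n_class outputs
per_position_outputs = n_len * n_class

print(n_class, whole_string_classes, per_position_outputs)  # 36 1679616 144
```

This is the reason the network defined later ends in four separate 36-way softmax layers rather than one enormous output layer.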

![image](https://img-blog.csdnimg.cn/371473cab8a64029b0c4aa47dec24c8c.png)

Once the installation succeeds, we write the code in Jupyter Notebook, using Python 3.6.9 and TensorFlow 2.0+:

```python
from captcha.image import ImageCaptcha
import matplotlib.pyplot as plt
import numpy as np
import random
import string

import tensorflow as tf

%matplotlib inline
%config InlineBackend.figure_format = 'retina'

# Characters used to generate the CAPTCHAs: all digits and uppercase ASCII letters
characters = string.digits + string.ascii_uppercase
print(characters)

# CAPTCHA width, height, number of characters, and number of classes
width, height, n_len, n_class = 128, 64, 4, len(characters)
```

Output:

0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ
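Each character is mapped to a class index by its position in `characters`, and the generator below stores the labels as one one-hot vector per character position. The sketch that follows reproduces that encoding for a single string with NumPy only; the helper name `encode` is hypothetical and not part of the article's code. It deliberately uses `str.index` rather than the generator's `str.find`: `find` returns -1 for a character outside the alphabet, and writing to index -1 would silently mark the last class ('Z').

```python
import string
import numpy as np

characters = string.digits + string.ascii_uppercase
n_len, n_class = 4, len(characters)

def encode(text):
    """Hypothetical helper: one one-hot vector of length n_class per character position."""
    labels = [np.zeros(n_class, dtype=np.uint8) for _ in range(n_len)]
    for j, ch in enumerate(text):
        # str.index raises ValueError for characters outside the alphabet,
        # whereas str.find would return -1 and silently mark the last class ('Z')
        labels[j][characters.index(ch)] = 1
    return labels

labels = encode('A7Z0')
print([int(np.argmax(v)) for v in labels])  # [10, 7, 35, 0]
```

The generator itself only draws characters from `characters`, so `find` is safe there; the difference matters when encoding labels that come from elsewhere, such as hand-labelled real CAPTCHAs.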

III. Preventing TensorFlow from occupying all GPU memory

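```python
# Allocate GPU memory on demand instead of reserving all of it up front
gpus = tf.config.experimental.list_physical_devices('GPU')
if gpus:
    try:
        # Only request GPU memory when it is actually needed
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
        logical_gpus = tf.config.experimental.list_logical_devices('GPU')
        print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")
    except RuntimeError as e:
        # Memory growth must be set before the GPUs have been initialized
        print(e)
```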
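If you prefer not to call the `tf.config` API, TensorFlow also honours the `TF_FORCE_GPU_ALLOW_GROWTH` environment variable, which enables memory growth on all GPUs. This is a minimal sketch; it assumes the variable is set before TensorFlow initializes its GPUs, so it has to come before `import tensorflow` (or be exported in the shell that launches Jupyter).

```python
import os

# TensorFlow checks this variable when it sets up GPU memory, so it must be set
# before TensorFlow is imported (e.g. in the very first notebook cell)
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = 'true'

# import tensorflow as tf  # import TensorFlow only after the variable is set
```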

IV. Defining and testing the data generator

```python
from tensorflow.keras.utils import Sequence

class CaptchaSequence(Sequence):
    # The arguments correspond to the constants defined above
    def __init__(self, characters, batch_size, steps, n_len=4, width=128, height=64):
        self.characters = characters
        self.batch_size = batch_size
        self.steps = steps
        self.n_len = n_len
        self.width = width
        self.height = height
        self.n_class = len(characters)
        self.generator = ImageCaptcha(width=width, height=height)

    def __len__(self):
        # Number of batches per epoch
        return self.steps

    def __getitem__(self, idx):
        X = np.zeros((self.batch_size, self.height, self.width, 3), dtype=np.float32)
        # One one-hot label array of shape (batch_size, n_class) per character position
        y = [np.zeros((self.batch_size, self.n_class), dtype=np.uint8) for i in range(self.n_len)]
        for i in range(self.batch_size):
            random_str = ''.join([random.choice(self.characters) for j in range(self.n_len)])
            # Render the CAPTCHA as an RGB image and scale pixel values to [0, 1]
            X[i] = np.array(self.generator.generate_image(random_str)) / 255.0
            for j, ch in enumerate(random_str):
                y[j][i, self.characters.find(ch)] = 1
        return X, y
```
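Inside `__getitem__` the labels are filled in one character at a time. The same per-position layout (a list of `n_len` arrays of shape `(batch_size, n_class)`) can also be built in a vectorized way by indexing an identity matrix. The NumPy-only sketch below, with an assumed batch size of 8, is an optional alternative to that inner loop rather than the article's own code.

```python
import random
import string
import numpy as np

characters = string.digits + string.ascii_uppercase
n_len, n_class, batch_size = 4, len(characters), 8   # batch_size assumed for illustration

# Random CAPTCHA strings, drawn the same way as in __getitem__
texts = [''.join(random.choice(characters) for _ in range(n_len)) for _ in range(batch_size)]

# (batch_size, n_len) integer class indices
idx = np.array([[characters.index(ch) for ch in t] for t in texts])

# Indexing the identity matrix turns every index into a one-hot row:
# the result has shape (batch_size, n_len, n_class)
one_hot = np.eye(n_class, dtype=np.uint8)[idx]

# Split into the per-position list the model expects: n_len arrays of (batch_size, n_class)
y = [one_hot[:, j, :] for j in range(n_len)]
print(len(y), y[0].shape)  # 4 (8, 36)
```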

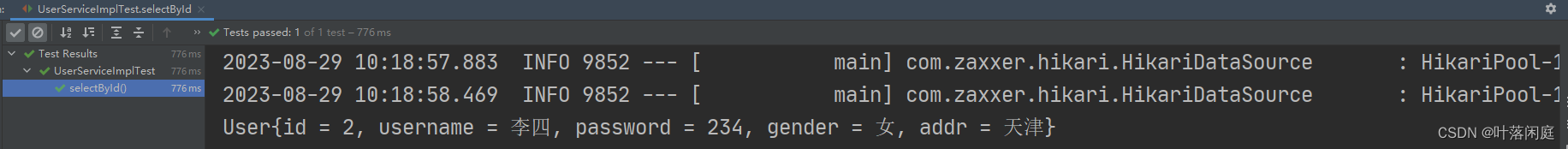

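Test code:

```python
def decode(y):
    # y is a list of n_len arrays of shape (batch, n_class); take the highest-scoring
    # class at each position, for the first sample in the batch only
    y = np.argmax(np.array(y), axis=2)[:, 0]
    return ''.join([characters[x] for x in y])

# The output may differ on every run, because the characters are chosen at random
data = CaptchaSequence(characters, batch_size=1, steps=1)
X, y = data[0]
plt.imshow(X[0])
plt.title(decode(y))
```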

Result:

![image](https://img-blog.csdnimg.cn/37483d2e93a641fbbafd62a8fb2f731b.png)
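`decode` only reads the first sample of a batch (the `[:, 0]`). When checking a larger batch it is handy to decode every sample at once. The hypothetical `decode_batch` below is a sketch of that idea, exercised here on hand-built one-hot labels rather than on model output.

```python
import string
import numpy as np

characters = string.digits + string.ascii_uppercase

def decode_batch(y):
    """Hypothetical helper: decode every sample in a batch, not just the first."""
    # np.array(y) has shape (n_len, batch, n_class); argmax over classes gives
    # (n_len, batch), and transposing gives one row of class indices per sample
    idx = np.argmax(np.array(y), axis=2).T
    return [''.join(characters[i] for i in row) for row in idx]

# Build one-hot labels for a two-sample batch: 'AB12' and 'Z9X0'
texts = ['AB12', 'Z9X0']
y = [np.eye(len(characters))[[characters.index(t[j]) for t in texts]] for j in range(4)]
print(decode_batch(y))  # ['AB12', 'Z9X0']
```

The same function works on the list returned by `model.predict`, since softmax outputs have the same shape as the one-hot labels.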

Note: the characters in a generated CAPTCHA are sometimes hard to read.

For example:

![image](https://img-blog.csdnimg.cn/cda4a6c200ef494aaa047b4ec8b6f1c4.png)

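Possible causes include:

- Image size too small: if the CAPTCHA width and height are set to 64 and 32, the image, and with it the characters, ends up small and hard to read. Try a larger size, for example a width of 128 and a height of 64 (the values used in this article).

- Distortion from scaling: the generator converts each image to a NumPy array and normalizes it with `np.array(self.generator.generate_image(random_str)) / 255.0`. Dividing by 255.0 only rescales the pixel values to the range [0, 1]; it does not resize the image, so the normalization itself does not blur the characters. Blur would only come from an actual resize of the image somewhere in this step, and that resize is what should be removed or adjusted.

- Font type and size: `ImageCaptcha` renders text with its default fonts and font sizes. If these do not produce clear characters, try other fonts or sizes; the constructor accepts `fonts` and `font_sizes` arguments (see the `ImageCaptcha` documentation).

- Other image parameters: besides the factors above, you can also tune generation parameters such as the width of the noise curves and the number of noise dots to make the CAPTCHA clearer.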
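To check the point about normalization, the NumPy sketch below applies the same `/ 255.0` step to a random stand-in image (random pixels, not a real CAPTCHA) and verifies that the shape is unchanged and that every original pixel value can be recovered from the normalized array.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for a rendered 64x128 RGB CAPTCHA (random pixels, for illustration only)
img = rng.integers(0, 256, size=(64, 128, 3)).astype(np.uint8)

# Same normalization as in __getitem__, stored as float32 like the batch array X
x = (img / 255.0).astype(np.float32)

print(x.shape == img.shape)            # True: dividing by 255 does not resize anything
restored = np.round(x * 255).astype(np.uint8)
print(np.array_equal(restored, img))   # True: no pixel information is lost
```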

V. Defining the network

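```python
# Training and validation generators
train_data = CaptchaSequence(characters, batch_size=512, steps=1000)
valid_data = CaptchaSequence(characters, batch_size=128, steps=100)

# Indexing a Sequence returns a single batch: 512 training images and
# 128 validation images, drawn once and reused in every epoch
x_train, y_train = train_data[0]
x_val, y_val = valid_data[0]

from tensorflow.keras.models import Model
from tensorflow.keras.layers import (Input, Conv2D, BatchNormalization,
                                     Activation, MaxPooling2D, Flatten, Dense)

input_tensor = Input((height, width, 3))
x = input_tensor
# Three blocks of Conv -> Conv -> BatchNorm -> ReLU -> MaxPool with 16, 8 and 8
# filters, which is the structure shown in the model summary below
for num_kernel in [16, 8, 8]:
    x = Conv2D(num_kernel, kernel_size=(3, 3), padding='same', kernel_initializer='he_uniform')(x)
    x = Conv2D(num_kernel, kernel_size=(3, 3), padding='same')(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)
x = Flatten()(x)

# One softmax classifier per character position, named c1 ... c4
x = [Dense(n_class, activation='softmax', name='c%d' % (i + 1))(x) for i in range(n_len)]

model = Model(inputs=input_tensor, outputs=x)
model.summary()
```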
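The spatial size that reaches `Flatten` follows from two rules: 3×3 convolutions with `padding='same'` keep the height and width, and each 2×2 max pooling halves them (rounding down). The sketch below traces this for the 64×128 input, assuming the filter counts (16, 8, 8) that appear in the model summary; the function name `feature_shape` is hypothetical.

```python
def feature_shape(height=64, width=128, filters=(16, 8, 8)):
    """Hypothetical helper: trace (H, W, C) through the Conv-Conv-BN-ReLU-MaxPool blocks."""
    h, w, c = height, width, 3
    for f in filters:
        # 3x3 convolutions with padding='same' keep H and W and set the channels to f
        c = f
        # 2x2 max pooling with the default stride of 2 halves H and W
        h, w = h // 2, w // 2
    return h, w, c

h, w, c = feature_shape()
print((h, w, c), h * w * c)  # (8, 16, 8) 1024
```

The result, 8 × 16 × 8 = 1024 features, is the size each of the four Dense heads receives.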

`model.summary()` prints the following network structure:

```
Model: "model"
__________________________________________________________________________________________________
Layer (type)                    Output Shape          Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 64, 128, 3)]  0
__________________________________________________________________________________________________
conv2d (Conv2D)                 (None, 64, 128, 16)   448         input_1[0][0]
__________________________________________________________________________________________________
conv2d_1 (Conv2D)               (None, 64, 128, 16)   2320        conv2d[0][0]
__________________________________________________________________________________________________
batch_normalization (BatchNorma (None, 64, 128, 16)   64          conv2d_1[0][0]
__________________________________________________________________________________________________
activation (Activation)         (None, 64, 128, 16)   0           batch_normalization[0][0]
__________________________________________________________________________________________________
max_pooling2d (MaxPooling2D)    (None, 32, 64, 16)    0           activation[0][0]
__________________________________________________________________________________________________
conv2d_2 (Conv2D)               (None, 32, 64, 8)     1160        max_pooling2d[0][0]
__________________________________________________________________________________________________
conv2d_3 (Conv2D)               (None, 32, 64, 8)     584         conv2d_2[0][0]
__________________________________________________________________________________________________
batch_normalization_1 (BatchNor (None, 32, 64, 8)     32          conv2d_3[0][0]
__________________________________________________________________________________________________
activation_1 (Activation)       (None, 32, 64, 8)     0           batch_normalization_1[0][0]
__________________________________________________________________________________________________
max_pooling2d_1 (MaxPooling2D)  (None, 16, 32, 8)     0           activation_1[0][0]
__________________________________________________________________________________________________
conv2d_4 (Conv2D)               (None, 16, 32, 8)     584         max_pooling2d_1[0][0]
__________________________________________________________________________________________________
conv2d_5 (Conv2D)               (None, 16, 32, 8)     584         conv2d_4[0][0]
__________________________________________________________________________________________________
batch_normalization_2 (BatchNor (None, 16, 32, 8)     32          conv2d_5[0][0]
__________________________________________________________________________________________________
activation_2 (Activation)       (None, 16, 32, 8)     0           batch_normalization_2[0][0]
__________________________________________________________________________________________________
max_pooling2d_2 (MaxPooling2D)  (None, 8, 16, 8)      0           activation_2[0][0]
__________________________________________________________________________________________________
flatten (Flatten)               (None, 1024)          0           max_pooling2d_2[0][0]
__________________________________________________________________________________________________
c1 (Dense)                      (None, 36)            36900       flatten[0][0]
__________________________________________________________________________________________________
c2 (Dense)                      (None, 36)            36900       flatten[0][0]
__________________________________________________________________________________________________
c3 (Dense)                      (None, 36)            36900       flatten[0][0]
__________________________________________________________________________________________________
c4 (Dense)                      (None, 36)            36900       flatten[0][0]
==================================================================================================
Total params: 153,408
Trainable params: 153,344
Non-trainable params: 64
```
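The parameter counts in the summary can be reproduced by hand: a convolution has `k*k*c_in*c_out` weights plus `c_out` biases, a batch normalization layer has two trainable parameters per channel (gamma and beta) and two non-trainable ones (moving mean and moving variance), and each Dense head has `1024*36` weights plus 36 biases. The sketch below redoes that arithmetic for the layers listed above; the helper names are illustrative.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per filter, plus one bias per filter
    return k * k * c_in * c_out + c_out

def bn_params(c):
    # (trainable gamma + beta, non-trainable moving mean + moving variance)
    return 2 * c, 2 * c

trainable = non_trainable = 0
c_in = 3
for f in (16, 8, 8):                  # filter counts from the summary
    trainable += conv_params(3, c_in, f) + conv_params(3, f, f)
    t, nt = bn_params(f)
    trainable += t
    non_trainable += nt
    c_in = f

flat = 8 * 16 * 8                     # flattened feature size (1024)
trainable += 4 * (flat * 36 + 36)     # four Dense(36) heads c1 ... c4

print(trainable + non_trainable, trainable, non_trainable)  # 153408 153344 64
```

Almost all of the parameters (147,600 of 153,408) sit in the four Dense heads, so the convolutional part of this network is very small.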

VI. Training the model

Start training:

```python
from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint
from tensorflow.keras.optimizers import Adam

# Stop once val_loss has not improved for 3 epochs, and write the model to
# cnn_best.h5 only on epochs where val_loss improves
callbacks = [EarlyStopping(patience=3), ModelCheckpoint('cnn_best.h5', save_best_only=True)]
model.compile(loss='categorical_crossentropy', optimizer=Adam(1e-3), metrics=['accuracy'])
history = model.fit(x_train, y_train, epochs=100, validation_data=(x_val, y_val),
                    callbacks=callbacks, verbose=1)
```
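Because the model has four outputs (`c1` to `c4`) but a single loss string, Keras applies `categorical_crossentropy` to each output and, with the default `loss_weights`, adds the four values to obtain the reported `loss`. The NumPy re-implementation below is an illustrative sketch, not Keras's own code. It checks that a model predicting a uniform distribution over the 36 classes would have a total loss of `4 * ln(36)`, about 14.33, which is a useful reference point when reading the log of the first epochs.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Mean over the batch of -sum(y_true * log(y_pred)), with predictions clipped away from 0
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

n_len, n_class, batch = 4, 36, 2
rng = np.random.default_rng(0)
# Random one-hot targets and a maximally uncertain (uniform) prediction for each head
y_true = [np.eye(n_class)[rng.integers(0, n_class, size=batch)] for _ in range(n_len)]
y_pred = [np.full((batch, n_class), 1.0 / n_class) for _ in range(n_len)]

# With default loss weights the total loss is c1_loss + c2_loss + c3_loss + c4_loss
total = sum(categorical_crossentropy(t, p) for t, p in zip(y_true, y_pred))
print(total, n_len * np.log(n_class))  # both equal 4 * ln(36), about 14.334
```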

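Load the best model and continue training for a while with a lower learning rate:

```python
# Reload the best checkpoint from the first run
model.load_weights('cnn_best.h5')
callbacks = [EarlyStopping(patience=3), ModelCheckpoint('cnn_best.h5', save_best_only=True)]

# Lower the learning rate from 1e-3 to 1e-4
model.compile(loss='categorical_crossentropy', optimizer=Adam(1e-4), metrics=['accuracy'])
model.fit(x_train, y_train, epochs=100, validation_data=(x_val, y_val),
          callbacks=callbacks, verbose=1)
```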
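With `EarlyStopping(patience=3)`, training stops once `val_loss` has failed to improve for three consecutive epochs, while `ModelCheckpoint(save_best_only=True)` writes the file only on epochs that do improve it. The pure-Python sketch below mimics that rule on made-up loss values (ignoring `min_delta` and the other options of the real callbacks) to show that the epoch where training stops is not the epoch whose weights were saved. The function name is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Hypothetical re-implementation of the EarlyStopping(patience) rule on val_loss.

    Returns (epoch at which training stops, best epoch), both 0-based.
    """
    best, best_epoch, wait = float('inf'), None, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # Improvement: this is also when ModelCheckpoint(save_best_only=True) writes the file
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Made-up validation losses, for illustration only
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.71]))  # (5, 2)
```

So after `fit` returns, the weights in memory come from the last epoch, which may be worse than the checkpoint on disk. `EarlyStopping` also accepts a `restore_best_weights` argument for this situation.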

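Save the best model:

```python
# The in-memory model holds the weights of the last epoch, which may not be the
# best one, so reload the best checkpoint before saving
model.load_weights('cnn_best.h5')
model.save('../cnn_best.h5')
```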

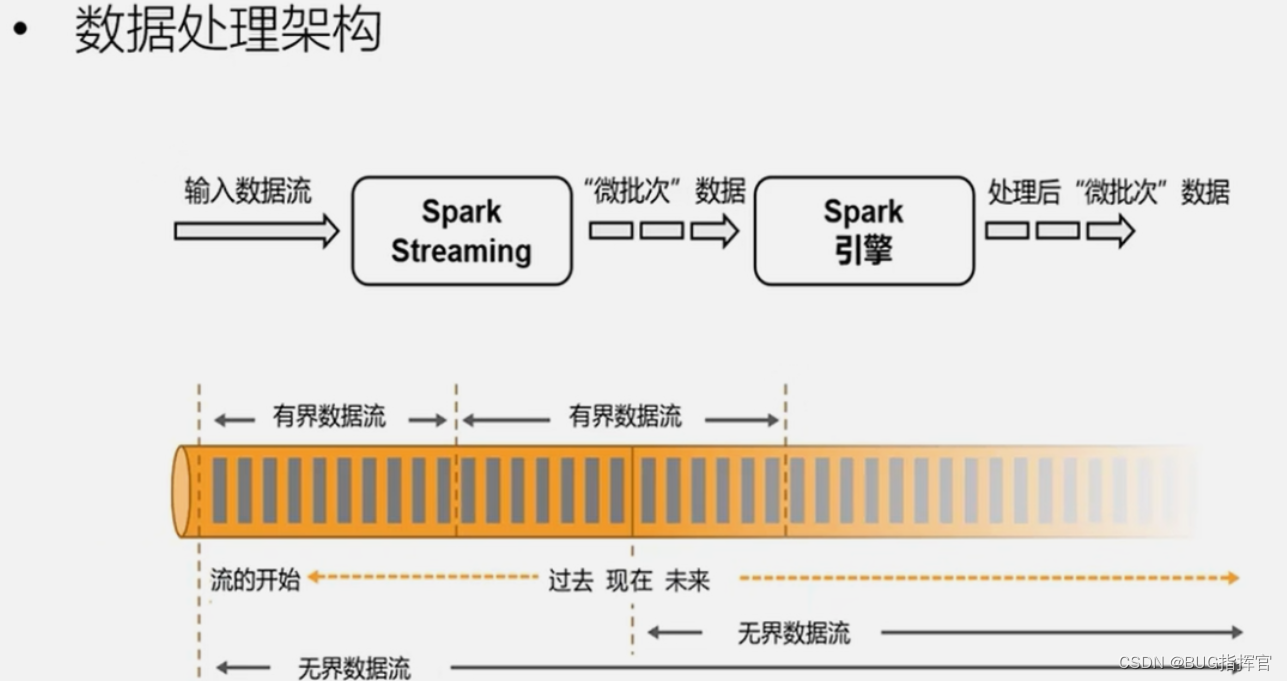

VII. Testing the model

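```python
# Draw a new random CAPTCHA from the batch_size=1 generator defined earlier
X, y = data[0]
y_pred = model.predict(X)
# Compare the ground truth with the model's prediction
plt.title('real: %s pred: %s' % (decode(y), decode(y_pred)))
plt.imshow(X[0])
plt.axis('off')
```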

![image](https://img-blog.csdnimg.cn/61a5d3a2b11a45ecac4f15364050c593.png)

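As the figure shows, the test result is not good. Training ran for only a few iterations, on a single fixed batch of 512 images (`x_train, y_train = train_data[0]`), and the network is simple. We can add more layers, tune the network's parameters and retrain to get a better model; passing the `train_data` generator itself to `model.fit` would also let the model see freshly generated CAPTCHAs at every step.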
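When judging the model on more than one sample, keep in mind that the `accuracy` Keras reports during training is computed separately for each output head, that is, per character. A CAPTCHA only counts as solved when all four characters are right, so whole-CAPTCHA accuracy can be no higher than per-character accuracy. The hypothetical helper below computes both from lists of one-hot or softmax arrays; the toy data is invented for illustration.

```python
import numpy as np

def captcha_accuracy(y_true, y_pred):
    """Hypothetical helper: y_true / y_pred are lists of n_len arrays of shape (batch, n_class)."""
    true_idx = np.argmax(np.array(y_true), axis=2)   # (n_len, batch)
    pred_idx = np.argmax(np.array(y_pred), axis=2)
    correct = true_idx == pred_idx
    char_acc = correct.mean()                        # fraction of characters right
    captcha_acc = correct.all(axis=0).mean()         # fraction of CAPTCHAs entirely right
    return float(char_acc), float(captcha_acc)

# Toy example: 2 positions, 3 classes, 3 samples; the second sample has one wrong character
eye = np.eye(3)
y_true = [eye[[0, 1, 2]], eye[[2, 2, 1]]]
y_pred = [eye[[0, 1, 2]], eye[[2, 0, 1]]]
print(captcha_accuracy(y_true, y_pred))  # roughly (0.833, 0.667)
```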