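1. Development

In 1989, Yann LeCun proposed a convolutional neural network trained with backpropagation, known as LeNet.

In 1998, he proposed LeNet-5, a later convolutional network likewise trained with backpropagation.

AlexNet won the ILSVRC 2012 (ImageNet Large Scale Visual Recognition Challenge) competition, raising classification accuracy from the 70%+ of traditional methods to 80%+. It was designed by Hinton and his student Alex Krizhevsky. Deep learning began to develop rapidly after that year.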

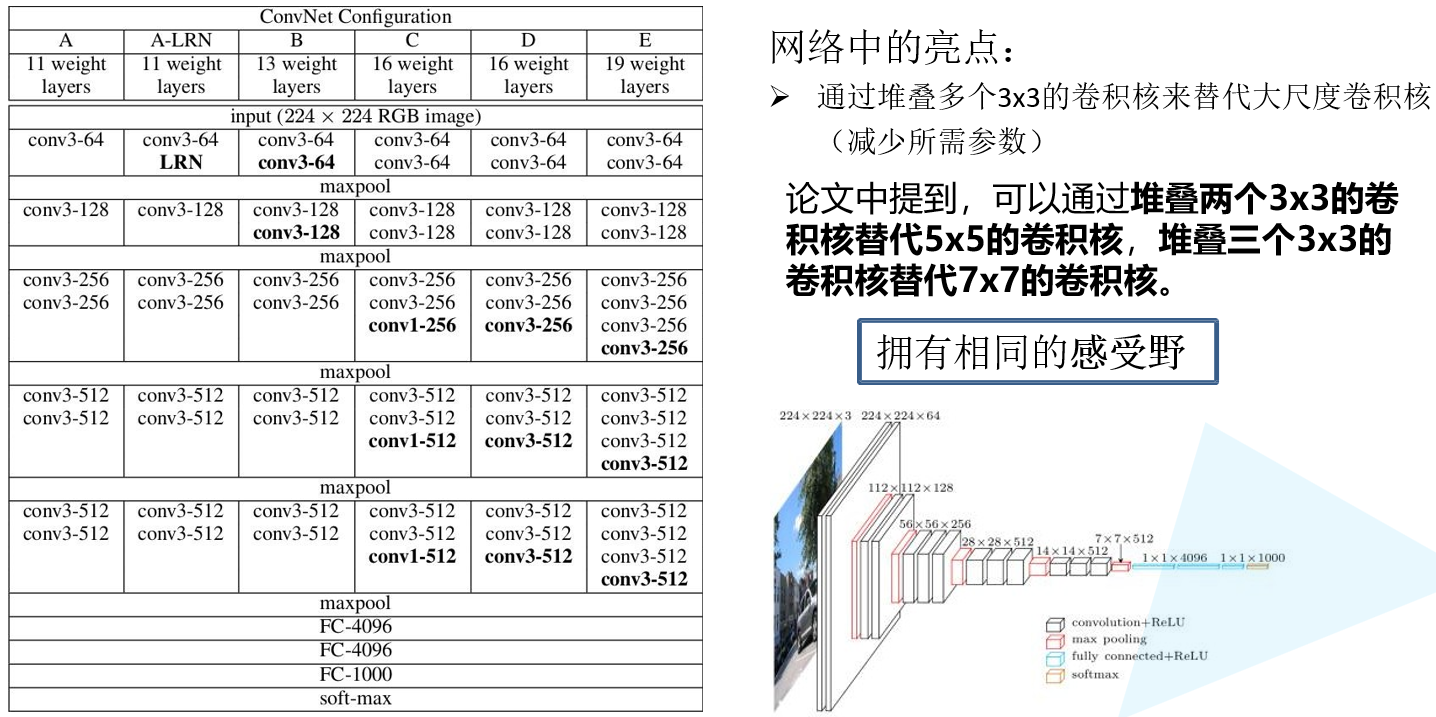

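VGG was proposed in 2014 by the Visual Geometry Group (VGG), a well-known research group at the University of Oxford. It took first place in the Localization Task and second place in the Classification Task of that year's ImageNet competition.

GoogLeNet, ResNet

2. VGG

2.1 Details

Stacked convolution kernels: stacking several small kernels achieves the effect of one large kernel while using fewer parameters.

Each conv layer uses stride 1 and padding 1; each max-pool layer uses size 2 and stride 2.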

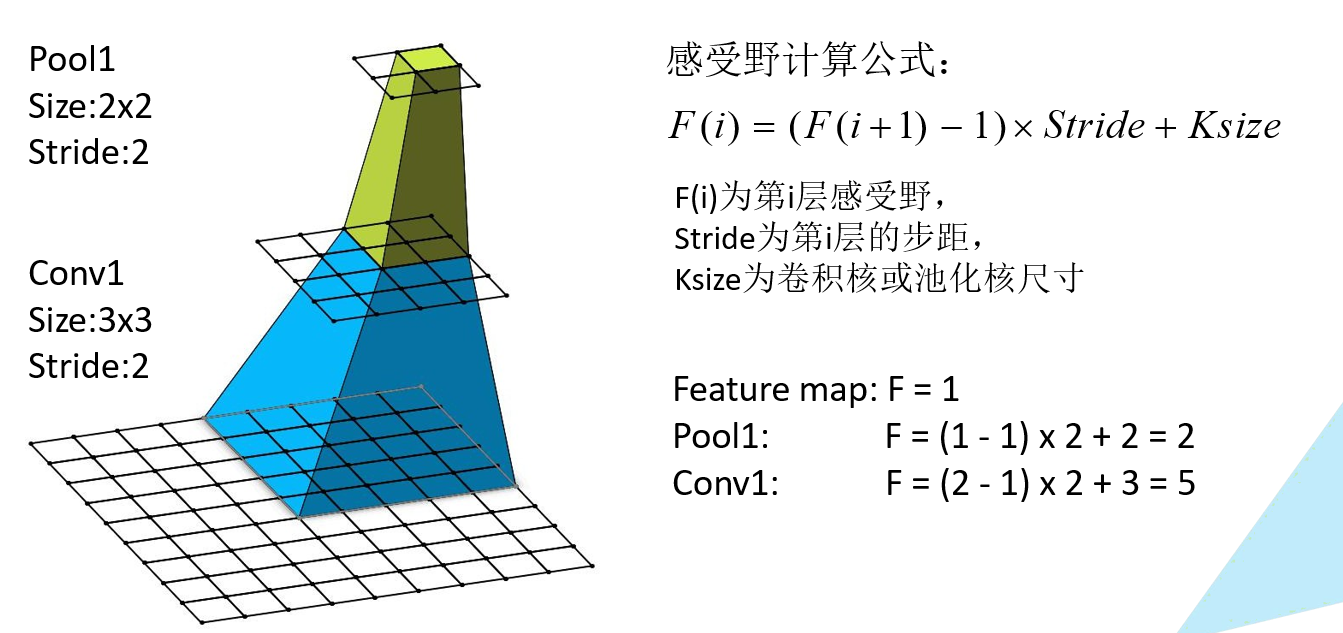
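The conv and max-pool settings above determine how the spatial size of a feature map changes from layer to layer. The sketch below applies the standard output-size formula. The helper names `conv_output_size` and `pool_output_size` are made up for illustration, and the 3x3 kernel and 224 input size are assumed from the rest of these notes.

```python
def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, pool=2, stride=2):
    """Spatial size after max pooling without padding."""
    return (size - pool) // stride + 1


size = 224
after_conv = conv_output_size(size)        # 3x3 conv, stride 1, padding 1
after_pool = pool_output_size(after_conv)  # 2x2 max pool, stride 2
print(after_conv, after_pool)              # 224 112
```

So a conv layer keeps the height and width unchanged, and only the pooling layers shrink the feature map, halving it each time.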

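3. Receptive field

In a convolutional neural network, the receptive field of an element in a layer's output is the size of the input-layer region that determines that element. Put simply, it is the size of the input region that one unit of the output feature map corresponds to.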

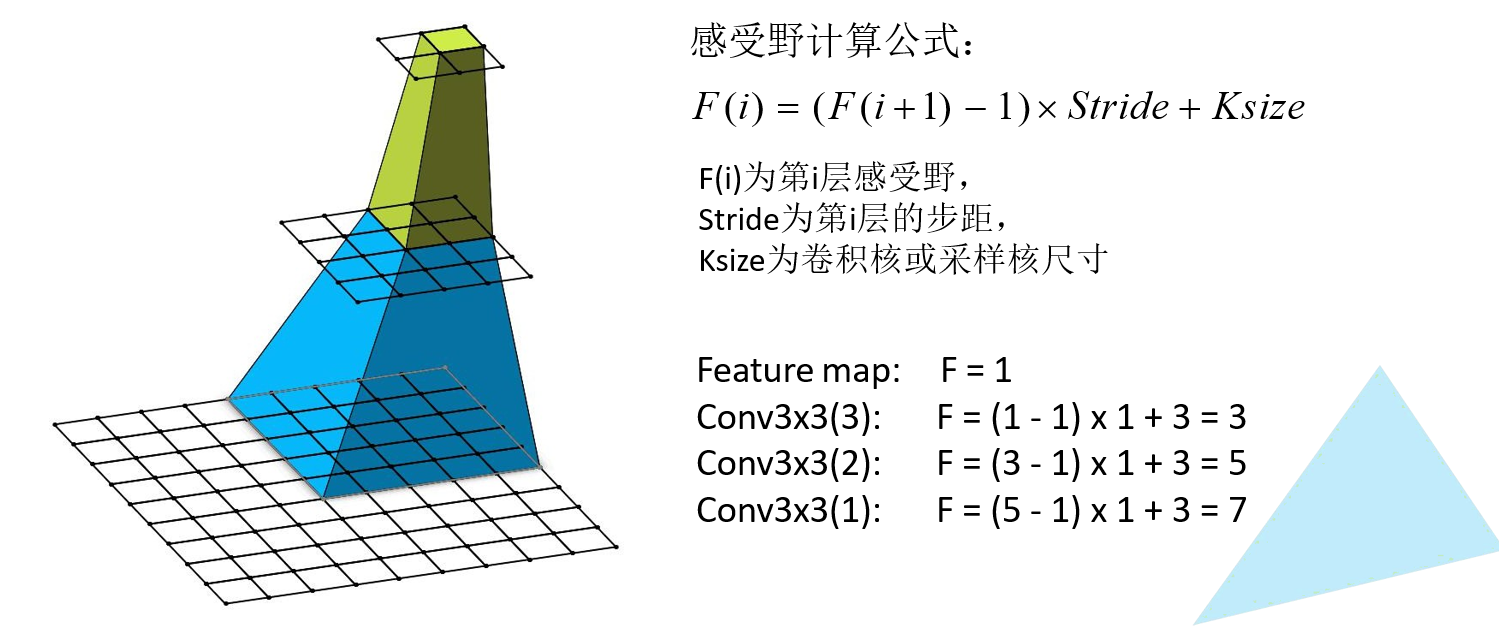

With stride 1, three stacked 3×3 convolutions have a 7×7 receptive field, and two stacked 3×3 convolutions have a 5×5 receptive field.
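These receptive field sizes can be checked with a small recurrence: each layer grows the receptive field by (kernel - 1) times the product of the strides of all earlier layers. The function `receptive_field` below is a hypothetical helper written for this note, not part of any library.

```python
def receptive_field(layers):
    """Receptive field of one output unit for a stack of (kernel, stride) layers."""
    rf = 1    # a single unit covers one position of its own layer
    jump = 1  # spacing, in input pixels, between adjacent units of the current layer
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(3, 1)] * 2))  # 5
print(receptive_field([(3, 1)] * 3))  # 7
```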

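A 5×5 kernel needs 25 weights, whereas two 3×3 kernels need only 18 (counting one input channel and one output channel, and ignoring biases), yet both cover the same 5×5 receptive field.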
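The same comparison can be extended to multi-channel layers. The sketch below counts weights only (no biases) and assumes every layer in the stack has the same number of input and output channels; `conv_weights` is a hypothetical helper.

```python
def conv_weights(kernel, channels=1, layers=1):
    """Weights in a stack of `layers` square convs, each with `channels` in and out."""
    return layers * kernel * kernel * channels * channels


# Single channel: the figures quoted in the text
print(conv_weights(5), conv_weights(3, layers=2))  # 25 18
print(conv_weights(7), conv_weights(3, layers=3))  # 49 27

# With more channels the ratio stays the same, since both sides scale by channels**2
c = 256
print(conv_weights(3, c, layers=2) / conv_weights(5, c))  # 0.72
```

The saving grows with kernel size: two 3×3 layers use 72% of the weights of one 5×5 layer, and three 3×3 layers use about 55% of the weights of one 7×7 layer.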

4. VGG implementation

4.1 Supporting the different VGG variants

    from tensorflow import keras
    import tensorflow as tf
    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt

Implement several VGG variants at once:

    # Integers are Conv2D filter counts; 'M' marks a max-pooling layer
    cfgs = {
        'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
        'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
        'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
    }


    def make_feature(cfg):
        """Build the convolutional feature extractor described by cfg."""
        feature_layers = []
        for v in cfg:
            if v == 'M':
                feature_layers.append(keras.layers.MaxPool2D(pool_size=2, strides=2))
            else:
                feature_layers.append(
                    keras.layers.Conv2D(v, kernel_size=3, padding='SAME', activation='relu'))
        return keras.Sequential(feature_layers, name='feature')
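As a sanity check on the configuration table, the sketch below works out, for each variant, the number of conv layers, the spatial size of the final feature map, and the length of the flattened vector, using plain Python only. The `cfgs` dictionary is repeated so the block runs on its own. `summarize` is a hypothetical helper, and the count of three dense layers refers to the classifier head used later in these notes.

```python
cfgs = {
    'vgg11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'vgg16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
              512, 512, 512, 'M', 512, 512, 512, 'M'],
    'vgg19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
              512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}


def summarize(cfg, input_size=224):
    """Return (conv layers, pool layers, final spatial size, flattened length)."""
    filters = [v for v in cfg if v != 'M']
    pools = cfg.count('M')
    # SAME-padded stride-1 convs keep the size; each 2x2/stride-2 pool halves it
    size = input_size // (2 ** pools)
    return len(filters), pools, size, size * size * filters[-1]


for name, cfg in cfgs.items():
    convs, pools, size, flat = summarize(cfg)
    print(f"{name}: {convs} conv + 3 dense = {convs + 3} weight layers, "
          f"feature map {size}x{size}, flattened length {flat}")
```

The number in each model name matches the conv layers plus the three dense layers, and every variant ends with a 7x7x512 feature map for a 224x224 input.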

4.2 Model implementation

    # Define the VGG network structure
    def VGG(feature, im_height=224, im_width=224, num_classes=1000):
        input_image = keras.layers.Input(shape=(im_height, im_width, 3), dtype='float32')
        x = feature(input_image)
        x = keras.layers.Flatten()(x)
        x = keras.layers.Dropout(rate=0.5)(x)
        # The original paper uses 4096 units here
        x = keras.layers.Dense(2048, activation='relu')(x)
        x = keras.layers.Dropout(rate=0.5)(x)
        x = keras.layers.Dense(2048, activation='relu')(x)
        x = keras.layers.Dense(num_classes)(x)
        output = keras.layers.Softmax()(x)
        model = keras.models.Model(inputs=input_image, outputs=output)
        return model

4.3 Data preparation

    train_dir = './training/training/'
    valid_dir = './validation/validation/'

    # Image data generators

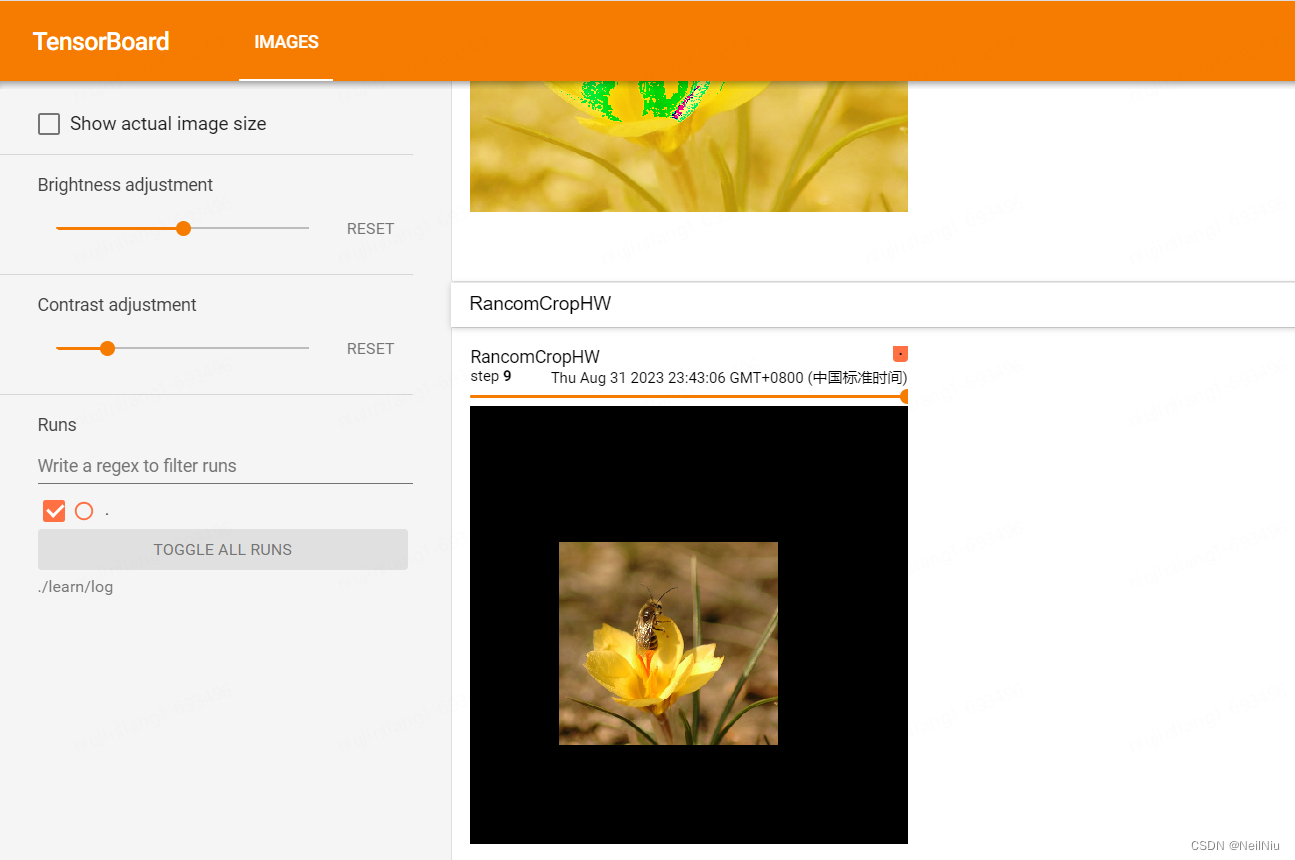

    # Training data: rescale to [0, 1] and apply random augmentation
    train_datagen = keras.preprocessing.image.ImageDataGenerator(
        rescale=1. / 255,
        rotation_range=40,
        width_shift_range=0.2,
        height_shift_range=0.2,
        shear_range=0.2,
        zoom_range=0.2,
        horizontal_flip=True,
        vertical_flip=True,
        fill_mode='nearest',
    )

    height = 224
    width = 224
    channels = 3
    batch_size = 32
    num_classes = 10

    train_generator = train_datagen.flow_from_directory(
        train_dir,
        target_size=(height, width),
        batch_size=batch_size,
        shuffle=True,
        seed=7,
        class_mode='categorical')

    # Validation data: rescale only, no augmentation
    valid_datagen = keras.preprocessing.image.ImageDataGenerator(rescale=1. / 255)
    valid_generator = valid_datagen.flow_from_directory(
        valid_dir,
        target_size=(height, width),
        batch_size=batch_size,
        shuffle=True,
        seed=7,
        class_mode='categorical')

    print(train_generator.samples)
    print(valid_generator.samples)

4.4 Getting the model

    # Build a VGG model by name
    def vgg(model_name='vgg16', im_height=224, im_width=224, num_classes=1000):
        cfg = cfgs[model_name]
        model = VGG(make_feature(cfg), im_height=im_height, im_width=im_width,
                    num_classes=num_classes)
        return model


    vgg16 = vgg(num_classes=10)
    vgg16.summary()

4.5 Training

    vgg16.compile(optimizer='adam',
                  loss='categorical_crossentropy',
                  metrics=['acc'])

    history = vgg16.fit(train_generator,
                        steps_per_epoch=train_generator.samples // batch_size,
                        epochs=10,
                        validation_data=valid_generator,
                        validation_steps=valid_generator.samples // batch_size)
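The step counts passed to `fit` above come from floor division, so when the sample count is not a multiple of the batch size, the final partial batch is left out of the step count. The sketch below shows the arithmetic. The sample count of 1000 is a made-up figure for illustration, and `steps` is a hypothetical helper.

```python
import math


def steps(samples, batch_size, drop_remainder=True):
    """Batches per epoch, with or without the final partial batch."""
    if drop_remainder:
        return samples // batch_size
    return math.ceil(samples / batch_size)


samples, batch_size = 1000, 32  # hypothetical dataset size
full_steps = steps(samples, batch_size)
print(full_steps)                                     # 31
print(samples - full_steps * batch_size)              # 8 samples outside the step count
print(steps(samples, batch_size, drop_remainder=False))  # 32
```

Using the ceiling instead would cover every sample once per epoch, at the cost of one smaller batch at the end.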