150. Evaluate Reverse Polish Notation

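// Key point: this evaluation uses the same stack pattern as removing adjacent duplicates from a string, except that each step now combines two numbers with an operator instead of cancelling two characters.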

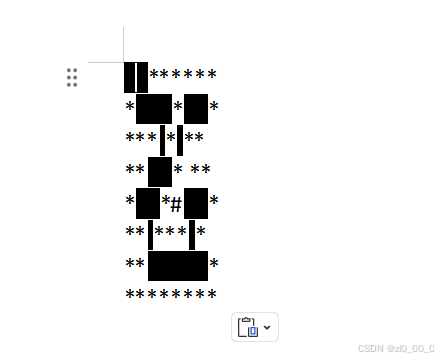
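To make the analogy in the note concrete, here is a minimal sketch of the string-elimination pattern. It assumes the note refers to the adjacent-duplicate removal problem; the function name `removeDuplicates` is chosen for illustration and is not from the original notes.

```cpp
#include <string>

// Adjacent-duplicate removal: the result string doubles as a stack.
// Each character either cancels the current top or is pushed on top.
std::string removeDuplicates(const std::string& s) {
    std::string st;
    for (char c : s) {
        if (!st.empty() && st.back() == c) {
            st.pop_back();    // c matches the top, so the pair is eliminated
        } else {
            st.push_back(c);  // nothing to cancel, keep c for later
        }
    }
    return st;
}
```

RPN evaluation follows the same shape: operands are pushed, and an operator "eliminates" the top two entries by replacing them with their result. For the tokens `2 1 + 3 *`, the stack goes `[2]`, `[2, 1]`, `[3]`, `[3, 3]`, `[9]`, so the result is 9.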

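    #include <stack>
    #include <string>
    #include <vector>
    using namespace std;

    int evalRPN(vector<string>& tokens) {
        // long long keeps intermediate results from overflowing int
        stack<long long> stack_eval;
        for (size_t i = 0; i < tokens.size(); i++) {
            if (tokens[i] == "+" || tokens[i] == "-" || tokens[i] == "*" || tokens[i] == "/") {
                // The first value popped is the right operand, the second is the left one
                long long num_1 = stack_eval.top();
                stack_eval.pop();
                long long num_2 = stack_eval.top();
                stack_eval.pop();
                if (tokens[i] == "+") stack_eval.push(num_2 + num_1);
                if (tokens[i] == "-") stack_eval.push(num_2 - num_1);
                if (tokens[i] == "*") stack_eval.push(num_2 * num_1);
                if (tokens[i] == "/") stack_eval.push(num_2 / num_1);
            } else {
                // Not an operator, so the token is an operand
                stack_eval.push(stoll(tokens[i]));
            }
        }
        long long result = stack_eval.top();
        return static_cast<int>(result);
    }

239. Sliding Window Maximum (needs a second pass)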

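// First idea: scan each window of k elements for its maximum and output it. The time complexity is O(n*k), and the submission exceeds the time limit.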

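    #include <algorithm>
    #include <vector>
    using namespace std;

    vector<int> maxSlidingWindow(vector<int>& nums, int k) {
        vector<int> result;
        // i is the right edge of the window, walking from the last window back to the first
        for (int i = static_cast<int>(nums.size()) - 1; i >= k - 1; i--) {
            int cur_max = nums[i];
            // Compare against the other k-1 elements of the window
            for (int j = k - 1; j > 0; j--) {
                if (nums[i - j] > cur_max) {
                    cur_max = nums[i - j];
                }
            }
            result.push_back(cur_max);
        }
        // Windows were visited right to left, so restore left-to-right order
        reverse(result.begin(), result.end());
        return result;
    }

// Solution 2: requires understanding the monotonic queue and which data structure to implement it with.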
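Before the class-based solution, the monotonic-queue idea can be sketched in a single function. This variant stores indices instead of values, which is a swapped-in formulation rather than the one used in these notes; the name `slidingMaxByIndex` is hypothetical and `k >= 1` is assumed.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

// Sliding-window maximum with a monotonic deque of indices.
// Invariant: the values at the stored indices are strictly decreasing
// from front to back, so the front always indexes the window maximum.
std::vector<int> slidingMaxByIndex(const std::vector<int>& nums, std::size_t k) {
    std::vector<int> result;
    std::deque<std::size_t> dq;
    for (std::size_t i = 0; i < nums.size(); ++i) {
        // Drop the front index once it has left the window ending at i.
        if (!dq.empty() && dq.front() + k <= i) dq.pop_front();
        // Drop back entries that are not larger than nums[i];
        // they can never be the maximum of any later window.
        while (!dq.empty() && nums[dq.back()] <= nums[i]) dq.pop_back();
        dq.push_back(i);
        // The first full window ends at index k-1.
        if (i + 1 >= k) result.push_back(nums[dq.front()]);
    }
    return result;
}
```

`std::deque` fits because elements leave from both ends: expired entries from the front, dominated entries from the back, each in O(1). Every index is pushed and popped at most once, so the whole pass is O(n).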

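    #include <deque>
    #include <vector>
    using namespace std;

    class MyDeque {
    public:
        deque<int> _deque;

        // Remove the element leaving the window, but only if it is the current maximum
        void pop(int value) {
            if (!_deque.empty() && value == _deque.front()) {
                _deque.pop_front();
            }
        }

        // Discard smaller elements at the back so values stay non-increasing front to back
        void push(int value) {
            while (!_deque.empty() && value > _deque.back()) {
                _deque.pop_back();
            }
            _deque.push_back(value);
        }

        // The front is always the maximum of the current window
        int front() {
            return _deque.front();
        }
    };

    vector<int> maxSlidingWindow(vector<int>& nums, int k) {
        vector<int> result;
        MyDeque my_deque;
        // Fill the first window
        for (int i = 0; i < k; i++) {
            my_deque.push(nums[i]);
        }
        result.push_back(my_deque.front());
        // Slide: drop the element leaving on the left, add the one entering on the right
        for (size_t j = k; j < nums.size(); j++) {
            my_deque.pop(nums[j - k]);
            my_deque.push(nums[j]);
            result.push_back(my_deque.front());
        }
        return result;
    }

347. Top K Frequent Elements (needs a second pass)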

//理解优先级队列中大顶堆,小顶堆的概念及用法
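As a quick reminder of how `std::priority_queue` behaves, the sketch below drains the same values through a max-heap and a min-heap. The helper names `drainMaxHeap` and `drainMinHeap` are made up for illustration.

```cpp
#include <functional>
#include <queue>
#include <vector>

// Default priority_queue uses std::less, which puts the LARGEST element on top (max-heap).
std::vector<int> drainMaxHeap(const std::vector<int>& values) {
    std::priority_queue<int> heap(values.begin(), values.end());
    std::vector<int> out;
    while (!heap.empty()) {
        out.push_back(heap.top());
        heap.pop();
    }
    return out;
}

// With std::greater the SMALLEST element is on top (min-heap).
std::vector<int> drainMinHeap(const std::vector<int>& values) {
    std::priority_queue<int, std::vector<int>, std::greater<int>> heap(values.begin(), values.end());
    std::vector<int> out;
    while (!heap.empty()) {
        out.push_back(heap.top());
        heap.pop();
    }
    return out;
}
```

The comparator reads "backwards" compared with sorting: a greater-than comparison yields a min-heap and a less-than comparison yields a max-heap. For the input `{3, 1, 4, 1, 5}`, the max-heap drains as 5, 4, 3, 1, 1 and the min-heap as 1, 1, 3, 4, 5.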

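    #include <queue>
    #include <unordered_map>
    #include <utility>
    #include <vector>
    using namespace std;

    struct Compare {
        bool operator()(const pair<int, int>& lhs, const pair<int, int>& rhs) const {
            // Greater-than gives a min-heap, less-than gives a max-heap
            return lhs.second > rhs.second;
        }
    };

    vector<int> topKFrequent(vector<int>& nums, int k) {
        vector<int> result(k);
        // Count how often each value occurs
        unordered_map<int, int> _map;
        for (size_t i = 0; i < nums.size(); i++) {
            _map[nums[i]]++;
        }
        std::priority_queue<pair<int, int>, std::vector<pair<int, int>>, Compare> _priority;
        for (unordered_map<int, int>::iterator it = _map.begin(); it != _map.end(); it++) {
            _priority.push(*it);
            // Keep only k elements in the min-heap: popping the smallest top
            // leaves the k most frequent elements behind
            if (_priority.size() > static_cast<size_t>(k)) {
                _priority.pop();
            }
        }
        for (int j = k - 1; j >= 0; j--) {
            // Fill in reverse order: the most frequent element leaves the heap last
            result[j] = _priority.top().first;
            _priority.pop();
        }
        return result;
    }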
