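Background:

Anyone who has studied SurfaceFlinger, or read articles about it, knows that vsync is in fact simulated in software by SurfaceFlinger. A software simulation can drift, however, so from time to time a hardware vsync is needed to calibrate it.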

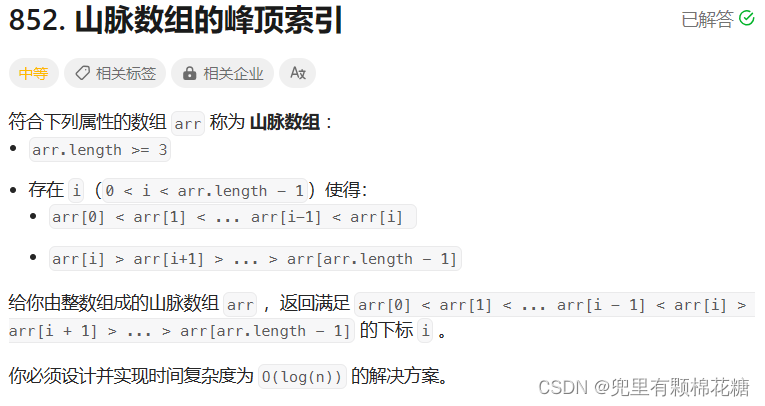

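That is why this calibration waveform shows up in SurfaceFlinger's systrace. Hardware vsync pulses only appear once hardware vsync has been enabled, and here there are exactly 6 up/down pulse pairs, the familiar "6 periods". After being calibrated against these 6 hardware vsyncs, the software vsync is back on track.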
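To make "calibrating against a handful of hardware vsyncs" concrete, here is a minimal sketch that estimates the refresh period from a few hardware timestamps by averaging the gaps between them. The function name `estimatePeriodNs` and the plain averaging are assumptions made for illustration; this is not SurfaceFlinger's actual algorithm.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical helper (not SurfaceFlinger code): estimate the vsync period
// as the average gap between consecutive hardware vsync timestamps.
// Returns 0 when no estimate is possible.
int64_t estimatePeriodNs(const std::vector<int64_t>& hwTimestampsNs) {
    if (hwTimestampsNs.size() < 2) {
        return 0;  // at least two samples are needed to measure one interval
    }
    // The sum of consecutive gaps telescopes to (last - first).
    const int64_t span = hwTimestampsNs.back() - hwTimestampsNs.front();
    if (span <= 0) {
        return 0;  // timestamps are expected to be increasing
    }
    const int64_t intervals = static_cast<int64_t>(hwTimestampsNs.size()) - 1;
    return span / intervals;
}
```

With 6 timestamps there are 5 intervals, so a single jittery sample has only a limited effect on the estimate.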

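But... all of this only sounds plausible. How exactly are these hardware vsync pulses produced? Why do we say they come from hardware, and how do they actually get from the hardware to us? It all feels rather hand-wavy, doesn't it? So today let's fully demystify how hardware vsync works. Note that a lot of kernel driver code follows. Students of the paid course get the related code as accompanying material.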

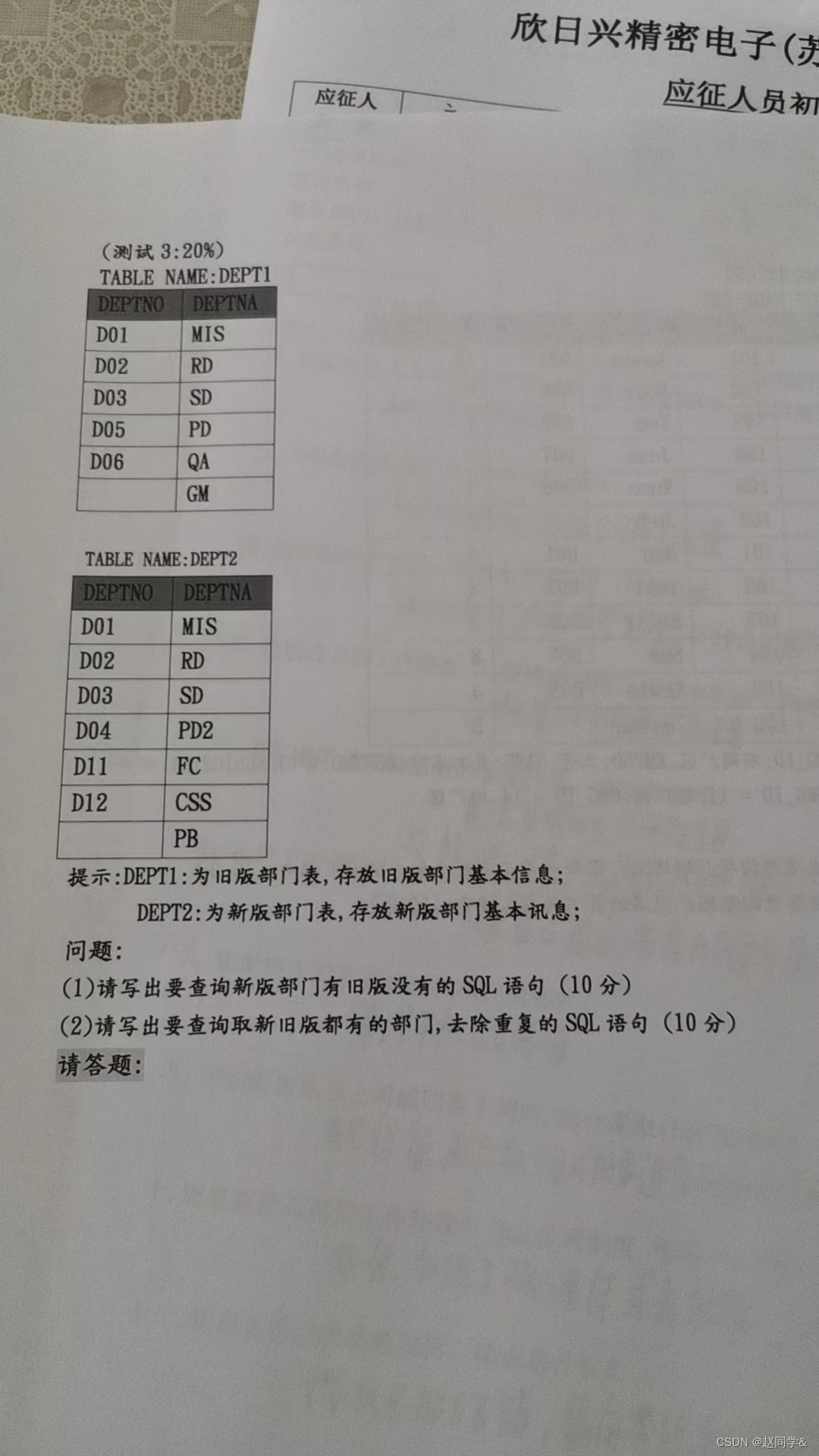

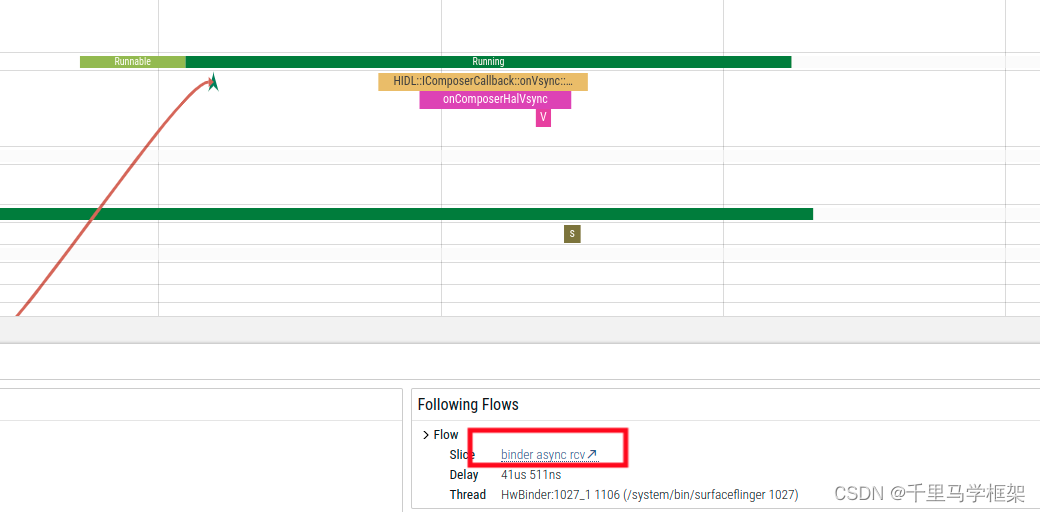

Hardware VSync call trace on the SurfaceFlinger side:

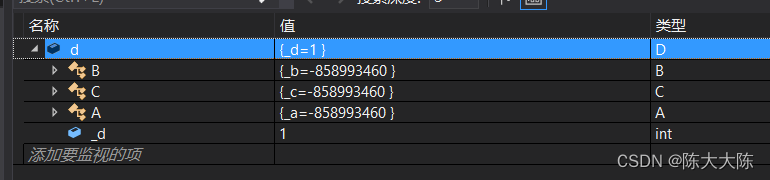

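This is fairly easy to see in the trace, as the figure shows:

The trace clearly shows that the HAL process calls back into the SurfaceFlinger process across the process boundary, so let's look at the HAL side next.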

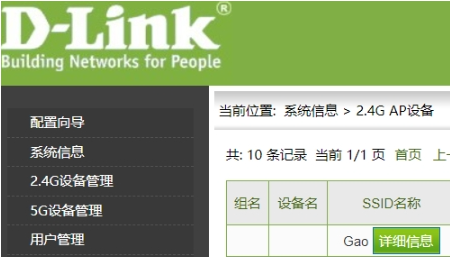

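Hardware VSync callback stack on the HAL side:

Start with the trace: simply click the flow arrow on the SurfaceFlinger slice to jump to the caller.

That takes us to the corresponding trace of the graphics HAL process: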

The call is indeed made by the HAL's SDM_EventThread thread. Following the code all the way down leads to this stack:

```
11-04 23:21:53.200 977 1065 D Vsync : #00 pc 000000000003b65c /vendor/lib64/hw/hwcomposer.msm8998.so (sdm::HWCCallbacks::Vsync(unsigned long, long)+76)
11-04 23:21:53.200 977 1065 D Vsync : #01 pc 000000000002f9c8 /vendor/lib64/hw/hwcomposer.msm8998.so (sdm::HWCDisplay::VSync(sdm::DisplayEventVSync const&)+28)
11-04 23:21:53.200 977 1065 D Vsync : #02 pc 000000000002c818 /vendor/lib64/libsdmcore.so (non-virtual thunk to sdm::DisplayPrimary::VSync(long)+68)
11-04 23:21:53.200 977 1065 D Vsync : #03 pc 0000000000048dc8 /vendor/lib64/libsdmcore.so (sdm::HWEvents::DisplayEventHandler()+288)
11-04 23:21:53.200 977 1065 D Vsync : #04 pc 0000000000048b60 /vendor/lib64/libsdmcore.so (sdm::HWEvents::DisplayEventThread(void*)+16)
11-04 23:21:53.200 977 1065 D Vsync : #05 pc 00000000000b63b0 /apex/com.android.runtime/lib64/bionic/libc.so (__pthread_start(void*)+208)
11-04 23:21:53.200 977 1065 D Vsync : #06 pc 00000000000530b8 /apex/com.android.runtime/lib64/bionic/libc.so (__start_thread+64)
```

Let's start from the very root: who triggers this event in the HAL? Does the HAL talk to the hardware directly? Ha, on reflection that cannot be the case, because the HAL process is just a user-space program and cannot operate the hardware directly. The code reveals the answer:

```cpp
// Initialize the pollfd for the given event
pollfd HWEvents::InitializePollFd(HWEventData *event_data) {
  char node_path[kMaxStringLength] = {0};
  char data[kMaxStringLength] = {0};
  pollfd poll_fd = {0};
  poll_fd.fd = -1;

  if (event_data->event_type == HWEvent::EXIT) {
    // Create an eventfd to be used to unblock the poll system call when
    // a thread is exiting.
    poll_fd.fd = Sys::eventfd_(0, 0);
    poll_fd.events |= POLLIN;
    exit_fd_ = poll_fd.fd;
  } else {
    snprintf(node_path, sizeof(node_path), "%s%d/%s", fb_path_, fb_num_,
             map_event_to_node_[event_data->event_type]);
    poll_fd.fd = Sys::open_(node_path, O_RDONLY);
    poll_fd.events |= POLLPRI | POLLERR;
  }

  if (poll_fd.fd < 0) {
    DLOGW("open failed for display=%d event=%s, error=%s", fb_num_,
          map_event_to_node_[event_data->event_type], strerror(errno));
    return poll_fd;
  }

  // Read once on all fds to clear data on all fds.
  Sys::pread_(poll_fd.fd, data , kMaxStringLength, 0);

  return poll_fd;
}

// Select the parser that matches the event_type
DisplayError HWEvents::SetEventParser(HWEvent event_type, HWEventData *event_data) {
  DisplayError error = kErrorNone;
  switch (event_type) {
    case HWEvent::VSYNC:
      event_data->event_parser = &HWEvents::HandleVSync;
      break;
    case HWEvent::IDLE_NOTIFY:
      event_data->event_parser = &HWEvents::HandleIdleTimeout;
      break;
    case HWEvent::EXIT:
      event_data->event_parser = &HWEvents::HandleThreadExit;
      break;
    case HWEvent::SHOW_BLANK_EVENT:
      event_data->event_parser = &HWEvents::HandleBlank;
      break;
    case HWEvent::THERMAL_LEVEL:
      event_data->event_parser = &HWEvents::HandleThermal;
      break;
    case HWEvent::IDLE_POWER_COLLAPSE:
      event_data->event_parser = &HWEvents::HandleIdlePowerCollapse;
      break;
    default:
      error = kErrorParameters;
      break;
  }

  return error;
}

void HWEvents::PopulateHWEventData() {
  for (uint32_t i = 0; i < event_list_.size(); i++) {
    HWEventData event_data;
    event_data.event_type = event_list_[i];
    SetEventParser(event_list_[i], &event_data);
    poll_fds_[i] = InitializePollFd(&event_data);
    event_data_list_.push_back(event_data);
  }
}

DisplayError HWEvents::Init(int fb_num, HWEventHandler *event_handler,
                            const vector<HWEvent> &event_list) {
  // Create the thread that runs the poll loop
  // Note: as quoted, the second %s in the log format has no matching argument.
  if (pthread_create(&event_thread_, NULL, &DisplayEventThread, this) < 0) {
    DLOGE("Failed to start %s, error = %s", event_thread_name_.c_str());
    return kErrorResources;
  }

  return kErrorNone;
}

void* HWEvents::DisplayEventThread(void *context) {
  if (context) {
    return reinterpret_cast<HWEvents *>(context)->DisplayEventHandler();
  }

  return NULL;
}

// The actual thread body
void* HWEvents::DisplayEventHandler() {
  char data[kMaxStringLength] = {0};

  prctl(PR_SET_NAME, event_thread_name_.c_str(), 0, 0, 0);
  setpriority(PRIO_PROCESS, 0, kThreadPriorityUrgent);

  // Keep polling the fds in a loop
  while (!exit_threads_) {
    int error = Sys::poll_(poll_fds_.data(), UINT32(event_list_.size()), -1);

    if (error <= 0) {
      DLOGW("poll failed. error = %s", strerror(errno));
      continue;
    }

    // poll returned, so data is available: identify it and dispatch to its parser
    for (uint32_t event = 0; event < event_list_.size(); event++) {
      pollfd &poll_fd = poll_fds_[event];

      if (event_list_.at(event) == HWEvent::EXIT) {
        if ((poll_fd.revents & POLLIN) &&
            (Sys::read_(poll_fd.fd, data, kMaxStringLength) > 0)) {
          (this->*(event_data_list_[event]).event_parser)(data);
        }
      } else {
        if ((poll_fd.revents & POLLPRI) &&
            (Sys::pread_(poll_fd.fd, data, kMaxStringLength, 0) > 0)) {
          (this->*(event_data_list_[event]).event_parser)(data);
        }
      }
    }
  }

  pthread_exit(0);

  return NULL;
}

// The vsync event ends up in this parser
void HWEvents::HandleVSync(char *data) {
  int64_t timestamp = 0;
  if (!strncmp(data, "VSYNC=", strlen("VSYNC="))) {
    timestamp = strtoll(data + strlen("VSYNC="), NULL, 0);
  }

  event_handler_->VSync(timestamp);
}

}  // namespace sdm
```

The code above is commented. Did you spot the familiar poll? Once you have gone through Ma Ge's cross-process (IPC) series, this logic takes only minutes to understand. The core idea: watch the vsync fd; when its data changes, read it; if the data is a vsync event, fire the vsync callback. That is the HAL's vsync callback.
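The parsing step is small enough to try in isolation. The sketch below is a stand-alone variant of what `HWEvents::HandleVSync` does with the `VSYNC=` payload. The name `parseVsyncPayload` and its bool/out-parameter shape are assumptions made for illustration, and unlike the quoted code it reports a non-matching payload instead of passing on a timestamp of 0.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>

// Hypothetical stand-alone version of the parsing done in HWEvents::HandleVSync.
// Returns true and stores the timestamp only when the payload starts with "VSYNC=".
bool parseVsyncPayload(const char* data, int64_t* timestamp) {
    static const char kPrefix[] = "VSYNC=";
    const std::size_t prefixLen = sizeof(kPrefix) - 1;  // exclude the trailing '\0'
    if (data == nullptr || timestamp == nullptr) {
        return false;
    }
    if (std::strncmp(data, kPrefix, prefixLen) != 0) {
        return false;  // some other event, or unexpected data
    }
    // Base 0 lets strtoll auto-detect the radix, as in the quoted code.
    *timestamp = std::strtoll(data + prefixLen, nullptr, 0);
    return true;
}
```

For example, the payload `"VSYNC=123456789\n"` yields 123456789, while `"IDLE=1"` is rejected.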

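In summary, the HAL's vsync callback is also nothing more than listening on an fd; there is nothing special about it. You might then ask: couldn't SurfaceFlinger listen on the fd and read the value directly? Ha, it could indeed. But hardware vendors implement this differently, and there is no guarantee that another vendor does it the same way. That is the HAL's other role: decoupling the AOSP system part from the vendor-specific part.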
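The "block in poll until the fd has something" pattern can be tried without any display hardware. The sketch below is Linux-only and uses an eventfd, the same kind of fd the quoted HAL code creates for its EXIT event (polled with `POLLIN`); the sysfs vsync node in the quoted code is polled with `POLLPRI` instead. The helper names `waitReadable` and `signalAndDrain` are hypothetical.

```cpp
#include <poll.h>
#include <sys/eventfd.h>
#include <unistd.h>
#include <cstdint>

// Hypothetical helper: block in poll() until `fd` is readable or the timeout
// (in milliseconds) expires. Returns true only when POLLIN was reported.
bool waitReadable(int fd, int timeoutMs) {
    pollfd pfd = {};
    pfd.fd = fd;
    pfd.events = POLLIN;
    const int ready = poll(&pfd, 1, timeoutMs);
    return ready > 0 && (pfd.revents & POLLIN) != 0;
}

// Hypothetical demo: signal an eventfd with `value`, wait for it with poll(),
// then read the counter back. Returns the value read, or 0 on any failure.
uint64_t signalAndDrain(uint64_t value) {
    const int fd = eventfd(0, 0);
    if (fd < 0) {
        return 0;
    }
    uint64_t out = 0;
    const bool written =
        write(fd, &value, sizeof(value)) == static_cast<ssize_t>(sizeof(value));
    if (written && waitReadable(fd, 1000)) {
        // Reading an eventfd returns its 8-byte counter and resets it to zero.
        if (read(fd, &out, sizeof(out)) != static_cast<ssize_t>(sizeof(out))) {
            out = 0;
        }
    }
    close(fd);
    return out;
}
```

In the HAL the writer and the poller are different parties (the kernel signals, the event thread waits); here both ends live in one function only to keep the demo self-contained.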

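But that raises another question: who makes data appear on this fd?

How the kernel signals data on the fd:

Where does the fd that the HAL listens on come from? You have probably guessed the kernel: this is hardware vsync, after all, and the component that gets to touch the hardware is of course the kernel driver. The code eventually found is: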

drivers/video/fbdev/msm/mdss_mdp_overlay.c

```c
// Called from the interrupt handler
/* function is called in irq context should have minimum processing */
static void mdss_mdp_overlay_handle_vsync(struct mdss_mdp_ctl *ctl,
					  ktime_t t)
{
	dump_stack();
	ATRACE_BEGIN("mdss_mdp_overlay_handle_vsync");
	struct msm_fb_data_type *mfd = NULL;
	struct mdss_overlay_private *mdp5_data = NULL;

	if (!ctl) {
		pr_err("ctl is NULL\n");
		return;
	}

	mfd = ctl->mfd;
	if (!mfd || !mfd->mdp.private1) {
		pr_warn("Invalid handle for vsync\n");
		return;
	}

	mdp5_data = mfd_to_mdp5_data(mfd);
	if (!mdp5_data) {
		pr_err("mdp5_data is NULL\n");
		return;
	}

	pr_debug("vsync on fb%d play_cnt=%d\n", mfd->index, ctl->play_cnt);

	mdp5_data->vsync_time = t;
	sysfs_notify_dirent(mdp5_data->vsync_event_sd); // notify listeners that the fd has data
	ATRACE_END("mdss_mdp_overlay_handle_vsync");
}
```

In the driver, vsync is triggered by the corresponding hardware interrupt. The call stack is:

```
11-05 00:24:24.470 0 0 I Call trace:
11-05 00:24:24.470 0 0 I : [<ffffff91f4c8a874>] dump_backtrace+0x0/0x3a8
11-05 00:24:24.470 0 0 I : [<ffffff91f4c8a86c>] show_stack+0x14/0x1c
11-05 00:24:24.470 0 0 I : [<ffffff91f50280d0>] dump_stack+0xe4/0x11c
11-05 00:24:24.470 0 0 I : [<ffffff91f51037b4>] mdss_mdp_overlay_handle_vsync+0x20/0x1dc
11-05 00:24:24.470 0 0 I : [<ffffff91f50f26d0>] mdss_mdp_cmd_readptr_done+0x154/0x33c
11-05 00:24:24.470 0 0 I : [<ffffff91f50b9534>] mdss_mdp_isr+0x140/0x3bc
11-05 00:24:24.470 0 0 I : [<ffffff91f5164d94>] mdss_irq_dispatch+0x50/0x68
11-05 00:24:24.470 0 0 I : [<ffffff91f50c15cc>] mdss_irq_handler+0x84/0x1c0
11-05 00:24:24.470 0 0 I : [<ffffff91f4d1a92c>] handle_irq_event_percpu+0x78/0x28c
11-05 00:24:24.470 0 0 I : [<ffffff91f4d1abc8>] handle_irq_event+0x44/0x74
11-05 00:24:24.470 0 0 I : [<ffffff91f4d1e714>] handle_fasteoi_irq+0xd8/0x1b0
11-05 00:24:24.470 0 0 I : [<ffffff91f4d1a118>] __handle_domain_irq+0x7c/0xbc
11-05 00:24:24.470 0 0 I : [<ffffff91f4c811d0>] gic_handle_irq+0x80/0x144
```

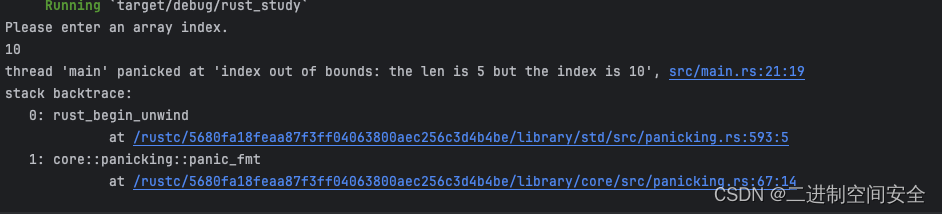

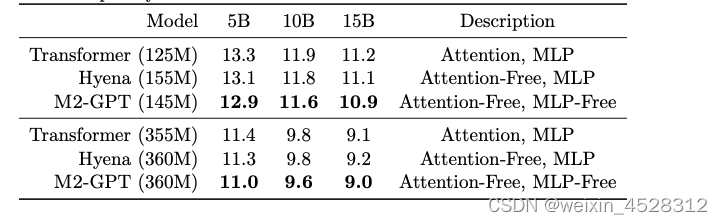

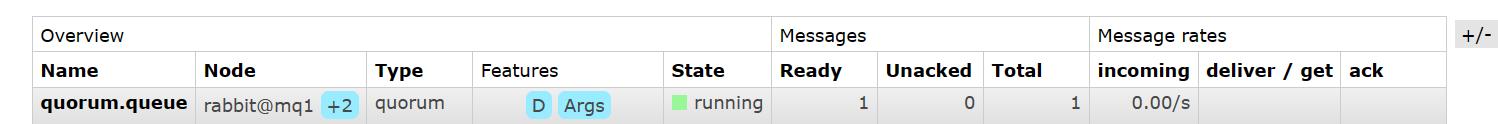

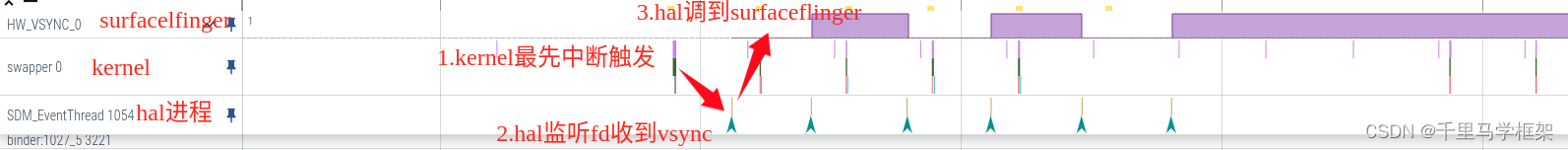

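With trace points added to the kernel as well, the combined trace looks like this:

Reading the code together with the trace makes the whole call flow clear:

The hardware triggers the kernel interrupt handler, which signals the corresponding fd

--> the HAL process, listening on that fd, parses the event and issues the vsync callback across processes

----> SurfaceFlinger receives the cross-process call from the HAL, updates its own parameters, and recalibrates its software vsync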
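The last step, "recalibrates its software vsync", can be pictured with a toy model: a reference timestamp plus a period, re-anchored whenever a hardware vsync arrives. The struct `SoftwareVsyncModel` and its members are hypothetical names for illustration only; SurfaceFlinger's real model is more elaborate.

```cpp
#include <cstdint>

// Hypothetical toy model of a software vsync source (not SurfaceFlinger code).
struct SoftwareVsyncModel {
    int64_t referenceNs;  // timestamp of the hardware vsync last used as anchor
    int64_t periodNs;     // estimated refresh period

    // Called when a hardware vsync is delivered: re-anchor the model on it.
    void onHardwareVsync(int64_t timestampNs) { referenceNs = timestampNs; }

    // Predict the first software vsync strictly after `nowNs`.
    int64_t nextVsyncAfter(int64_t nowNs) const {
        if (periodNs <= 0) {
            return nowNs;  // model not calibrated yet, nothing to predict
        }
        if (nowNs < referenceNs) {
            return referenceNs;  // the anchor itself is still in the future
        }
        const int64_t elapsedPeriods = (nowNs - referenceNs) / periodNs;
        return referenceNs + (elapsedPeriods + 1) * periodNs;
    }
};
```

Without re-anchoring, any error in `periodNs` accumulates with every predicted tick, which is why an occasional hardware vsync is needed to pull the prediction back.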