由于大论文里需要对各个算法进行测速,因此抛开官方文档的使用说明,记录一下我是怎么使用mmdetection里的脚本进行测速的。

mmdetection版本:2.23.0

一、新版本benchmark.py(需要分布式)

打开tools/analysis_tools/benchmark.py

这里主要需要几个参数:训练的config文件和checkpoint文件

(这边建议config用work_dir保存下来的,跟checkpoint对齐,防止后续修改了config文件夹里的config跟当时训练的不对齐)

因此直接输入命令或者写和sh脚本:

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py \

{CONFIG} \

{CHECKPOINT} \

--launcher pytorch注意这里一定要加分布式相关的代码,不然会报错,源码里规定了只支持分布式推理测速。。

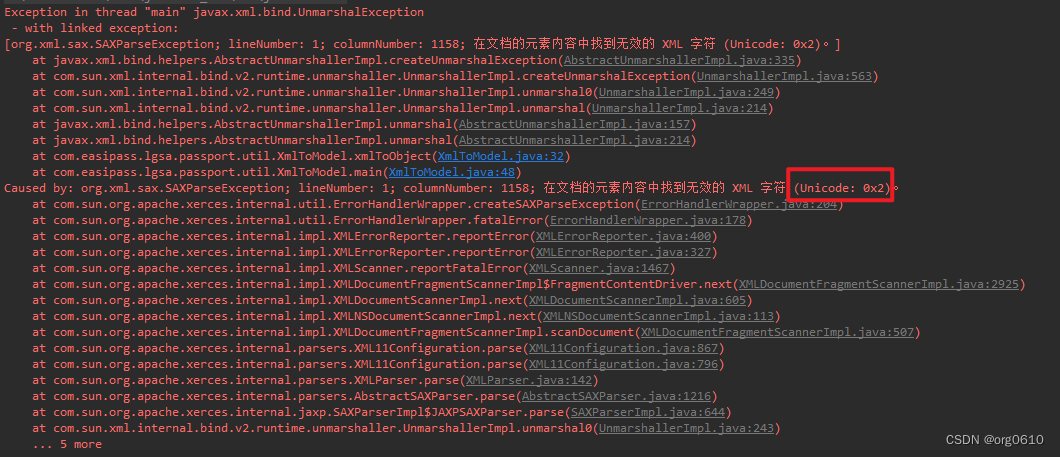

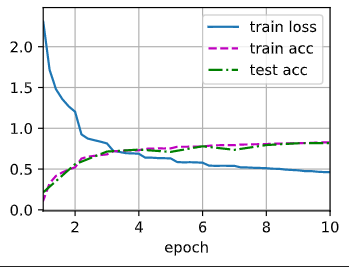

结果:

二、不用分布式的老版本推理benchmark.py

也可以采用老版本的benchmark.py文件进行推理,这边给出源码,可以自行建个py文件:

import argparse

import timeimport torch

from mmcv import Config, DictAction

from mmcv.cnn import fuse_conv_bn

from mmcv.parallel import MMDataParallel

from mmcv.runner import load_checkpoint, wrap_fp16_modelfrom mmdet.datasets import (build_dataloader, build_dataset,replace_ImageToTensor)

from mmdet.models import build_detectordef parse_args():parser = argparse.ArgumentParser(description='MMDet benchmark a model')parser.add_argument('config', help='test config file path')parser.add_argument('checkpoint', help='checkpoint file')parser.add_argument('--log-interval', default=50, help='interval of logging')parser.add_argument('--fuse-conv-bn',action='store_true',help='Whether to fuse conv and bn, this will slightly increase''the inference speed')parser.add_argument('--cfg-options',nargs='+',action=DictAction,help='override some settings in the used config, the key-value pair ''in xxx=yyy format will be merged into config file. If the value to ''be overwritten is a list, it should be like key="[a,b]" or key=a,b ''It also allows nested list/tuple values, e.g. key="[(a,b),(c,d)]" ''Note that the quotation marks are necessary and that no white space ''is allowed.')args = parser.parse_args()return argsdef main():args = parse_args()cfg = Config.fromfile(args.config)if args.cfg_options is not None:cfg.merge_from_dict(args.cfg_options)# import modules from string list.if cfg.get('custom_imports', None):from mmcv.utils import import_modules_from_stringsimport_modules_from_strings(**cfg['custom_imports'])# set cudnn_benchmarkif cfg.get('cudnn_benchmark', False):torch.backends.cudnn.benchmark = Truecfg.model.pretrained = Nonecfg.data.test.test_mode = True# build the dataloadersamples_per_gpu = cfg.data.test.pop('samples_per_gpu', 1)if samples_per_gpu > 1:# Replace 'ImageToTensor' to 'DefaultFormatBundle'cfg.data.test.pipeline = replace_ImageToTensor(cfg.data.test.pipeline)dataset = build_dataset(cfg.data.test)data_loader = build_dataloader(dataset,samples_per_gpu=1,workers_per_gpu=cfg.data.workers_per_gpu,dist=False,shuffle=False)# build the model and load checkpointcfg.model.train_cfg = Nonemodel = build_detector(cfg.model, test_cfg=cfg.get('test_cfg'))fp16_cfg = cfg.get('fp16', None)if fp16_cfg is not None:wrap_fp16_model(model)load_checkpoint(model, args.checkpoint, map_location='cpu')if args.fuse_conv_bn:model = fuse_conv_bn(model)model = MMDataParallel(model, device_ids=[0])model.eval()# the first several iterations may be very slow so skip themnum_warmup = 5pure_inf_time = 0# benchmark with 2000 image and take the averagefor i, data in enumerate(data_loader):torch.cuda.synchronize()start_time = time.perf_counter()with torch.no_grad():model(return_loss=False, rescale=True, **data)torch.cuda.synchronize()elapsed = time.perf_counter() - start_timeif i >= num_warmup:pure_inf_time += elapsedif (i + 1) % args.log_interval == 0:fps = (i + 1 - num_warmup) / pure_inf_timeprint(f'Done image [{i + 1:<3}/ 2000], fps: {fps:.1f} img / s')if (i + 1) == 2000:pure_inf_time += elapsedfps = (i + 1 - num_warmup) / pure_inf_timeprint(f'Overall fps: {fps:.1f} img / s')breakif __name__ == '__main__':main()主要就是不需要设置前面乱七八糟的分布式参数了,直接可以运行,简单上手:

python tools/analysis_tools/benchmark_old.py \

{CONFIG} \

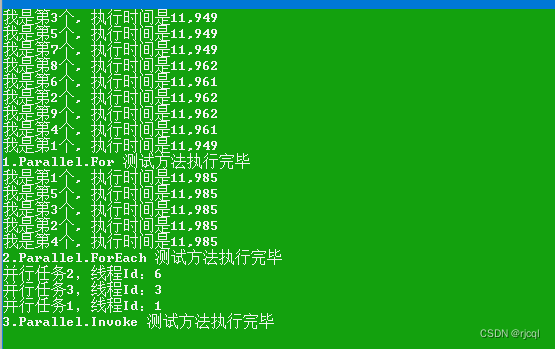

{CHECKPOINT} 结果:

确实会慢一点,不太懂分布式的底层原理,就先这样吧,能用新版本就新版本吧,多几个参数。

三、合并卷积+BN层

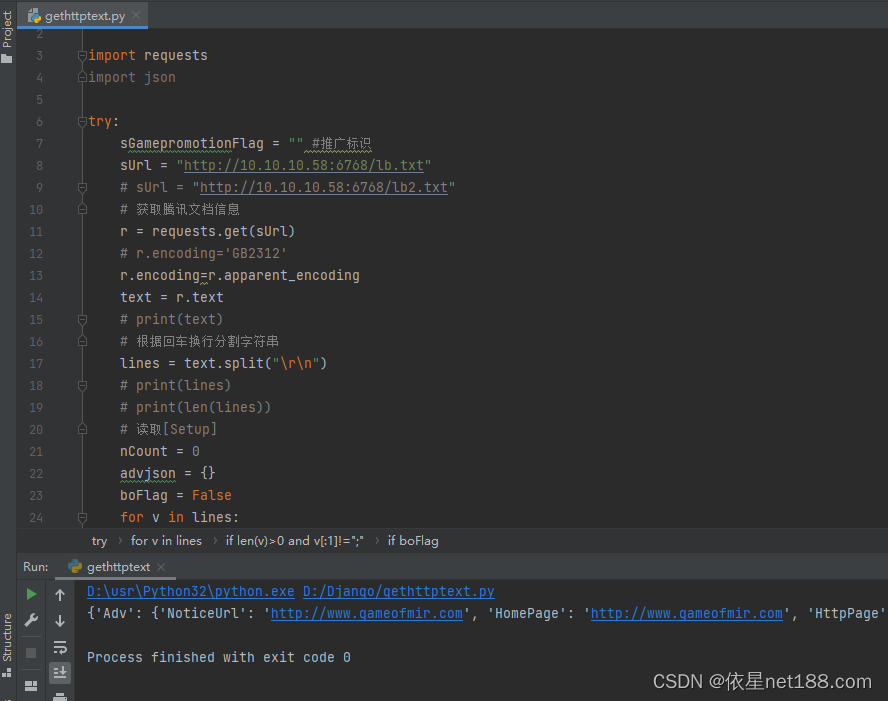

benchmark.py里有个参数:

据说会加快推理速度,试试

加入后(新版本)

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py \

{CONFIG} \

{CHECKPOINT} \

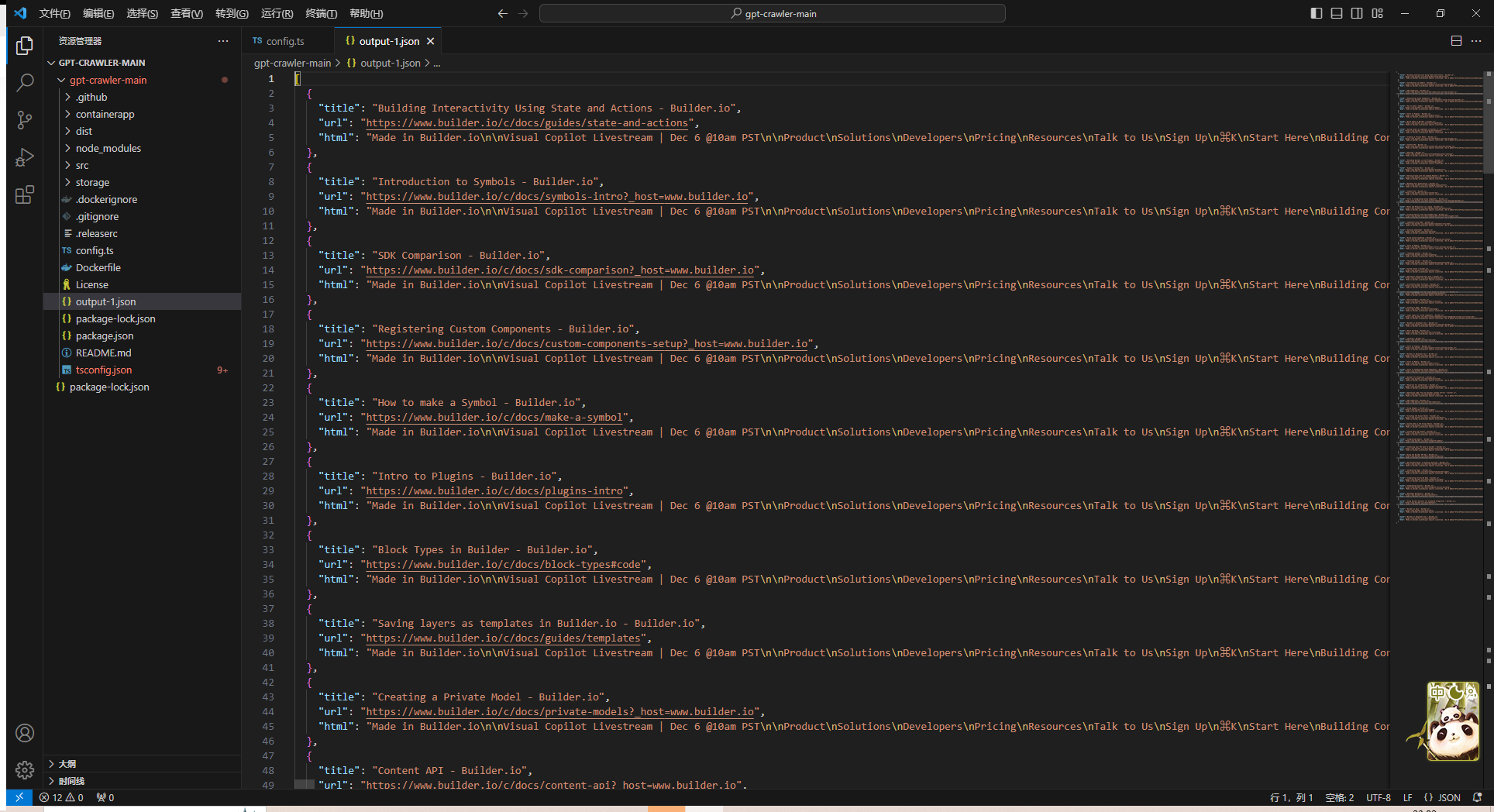

--launcher pytorch --fuse-conv-bn结果:

没啥区别。有空再来研究底层原理吧,就先这样

没啥区别。有空再来研究底层原理吧,就先这样