1. Configure MySQL

mysql> alter user 'root'@'localhost' identified by 'Yang3135989009';

Query OK, 0 rows affected (0.00 sec)

mysql> grant all on *.* to 'root'@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.01 sec)
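Granting every privilege on `*.*` to `'root'@'%'` lets root log in from any host. A narrower option, sketched below as an alternative and not the setup used in this guide, is a dedicated account limited to the `hive` database. The account name `hive` and the password are placeholders; if you use such an account, set `javax.jdo.option.ConnectionUserName` and `javax.jdo.option.ConnectionPassword` in hive-site.xml to match. Also note that on MySQL 8.0, `GRANT` no longer creates accounts implicitly, so the account has to exist first.

```shell
#!/usr/bin/env bash
# Sketch: print SQL that creates a dedicated metastore account (hypothetical names).
# Passwords containing a single quote are NOT escaped by this helper.
metastore_account_sql() {
  local user="$1" pass="$2" host="${3:-%}"   # host defaults to '%' (any host)
  cat <<EOF
CREATE USER IF NOT EXISTS '${user}'@'${host}' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON hive.* TO '${user}'@'${host}';
EOF
}
# Usage (prompts for the MySQL root password):
#   metastore_account_sql hive 'ChangeMe_123' | mysql -u root -p
```

A database-level grant such as `hive.*` may be issued before the `hive` database exists, so this does not clash with creating the database in step 6.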

2. Configure Hadoop

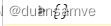

(1) Switch to the hadoop user and open core-site.xml

hadoop@node1:/export/server/hadoop/etc/hadoop$ vim core-site.xml

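(2) Modify the file as follows

Grant the hadoop user proxy-user rights (it may impersonate other users from any host and any group) by adding these properties inside <configuration>:

<property>
  <name>hadoop.proxyuser.hadoop.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hadoop.groups</name>
  <value>*</value>
</property>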
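If you prefer to script this edit, the sketch below inserts the two properties just before the closing `</configuration>` tag and does nothing when they are already present, so it is safe to rerun. It assumes `</configuration>` sits on its own line, as in the stock file; the function name `add_proxyuser` is hypothetical. After changing core-site.xml, restart HDFS and YARN (or run `hdfs dfsadmin -refreshSuperUserGroupsConfiguration` and `yarn rmadmin -refreshSuperUserGroupsConfiguration`) so the settings take effect.

```shell
#!/usr/bin/env bash
# Sketch: add hadoop.proxyuser.<user>.hosts/groups = * to a core-site.xml.
# Assumes the closing </configuration> tag is on its own line.
add_proxyuser() {
  local file="$1" user="$2" tmp
  # Already configured: leave the file untouched so reruns are harmless.
  if grep -qF "<name>hadoop.proxyuser.${user}.hosts</name>" "$file"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  awk -v u="$user" '
    /<\/configuration>/ {
      printf "  <property>\n    <name>hadoop.proxyuser.%s.hosts</name>\n    <value>*</value>\n  </property>\n", u
      printf "  <property>\n    <name>hadoop.proxyuser.%s.groups</name>\n    <value>*</value>\n  </property>\n", u
    }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through cat so the original file keeps its owner and mode.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
# Usage: add_proxyuser /export/server/hadoop/etc/hadoop/core-site.xml hadoop
```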

3. Download and extract Hive

Note: switch to the hadoop user for this step.

Hive download link: http://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz

# Extract into /export/server/ on node1

tar -zxvf apache-hive-3.1.3-bin.tar.gz -C /export/server/

# Create a symbolic link

ln -s /export/server/apache-hive-3.1.3-bin /export/server/hive
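The two commands above can be wrapped into one reusable step. The sketch below (the function name `install_hive` is hypothetical) reads the archive's top-level directory name instead of hard-coding it, extracts the archive, and points the `hive` link at the result. Using `ln -sfn` means an existing link is replaced rather than a new link being created inside the old target, which makes a later version switch a one-line change.

```shell
#!/usr/bin/env bash
# Sketch: extract a Hive tarball into <dest> and (re)point <dest>/hive at it.
install_hive() {
  local tarball="$1" dest="$2" top
  # The first archive entry is the top-level folder, e.g. apache-hive-3.1.3-bin/
  top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  [ -n "$top" ] || { echo "cannot read $tarball" >&2; return 1; }
  tar -zxf "$tarball" -C "$dest" || return 1
  # -s symbolic, -f replace an existing link, -n do not descend into an existing link
  ln -sfn "$dest/$top" "$dest/hive"
}
# Usage (as the hadoop user):
#   wget http://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz
#   install_hive apache-hive-3.1.3-bin.tar.gz /export/server
```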

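4. Download the MySQL driver

Note: this step should also be done as the hadoop user.

MySQL driver jar: https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.34/mysql-connector-java-5.1.34.jar

# Move the downloaded driver jar into the lib directory of the Hive installation (the binary jar, not the -sources.jar)

mv mysql-connector-java-5.1.34.jar /export/server/hive/lib/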
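The `-sources.jar` artifact on Maven Central contains only `.java` files, so Hive cannot load `com.mysql.jdbc.Driver` (the class name used by Connector/J 5.1.x; the 8.x connectors use `com.mysql.cj.jdbc.Driver`) from it. A small guard, sketched below with the hypothetical name `install_jdbc_driver`, refuses source and javadoc jars before copying.

```shell
#!/usr/bin/env bash
# Sketch: copy a JDBC driver jar into Hive's lib/, rejecting non-binary artifacts.
install_jdbc_driver() {
  local jar="$1" libdir="$2"
  case "$jar" in
    *-sources.jar|*-javadoc.jar)
      # These Maven artifacts hold source code or docs, not compiled classes.
      echo "refusing $jar: not a binary driver jar" >&2
      return 1 ;;
  esac
  [ -f "$jar" ] || { echo "not found: $jar" >&2; return 1; }
  [ -d "$libdir" ] || { echo "no such directory: $libdir" >&2; return 1; }
  cp "$jar" "$libdir/"
}
# Usage: install_jdbc_driver mysql-connector-java-5.1.34.jar /export/server/hive/lib
```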

5. Configure Hive

(1) Create hive-env.sh from its template and set the paths

Directory:

hadoop@node1:/export/server/hive/conf$ pwd

/export/server/hive/conf

Rename:

hadoop@node1:/export/server/hive/conf$ mv hive-env.sh.template hive-env.sh

Add the following to hive-env.sh:

export HADOOP_HOME=/export/server/hadoop

export HIVE_CONF_DIR=/export/server/hive/conf

export HIVE_AUX_JARS_PATH=/export/server/hive/lib
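If these lines are appended by a setup script, rerunning the script would duplicate them. A hedged sketch (the function name `configure_hive_env` is hypothetical) that adds each export only when the exact line is not already in the file:

```shell
#!/usr/bin/env bash
# Sketch: append the three exports to hive-env.sh unless each exact line is present.
configure_hive_env() {
  local file="$1" line
  for line in \
    'export HADOOP_HOME=/export/server/hadoop' \
    'export HIVE_CONF_DIR=/export/server/hive/conf' \
    'export HIVE_AUX_JARS_PATH=/export/server/hive/lib'; do
    # -x whole-line match, -F fixed string (no regex interpretation)
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}
# Usage: configure_hive_env /export/server/hive/conf/hive-env.sh
```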

(2) Create hive-site.xml in /export/server/hive/conf with the following content:

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <!-- replace with the MySQL password of the user above -->
    <value>YOUR_MYSQL_PASSWORD</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>node1</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://node1:9083</value>
  </property>
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
</configuration>
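Because hive-site.xml is XML, every literal `&` in the JDBC URL has to be written as `&amp;`; a bare `&` makes the file fail to parse when Hive loads its configuration. A quick check, sketched with the hypothetical name `find_bare_ampersands`, prints every line that still contains an `&` that does not start a valid entity:

```shell
#!/usr/bin/env bash
# Sketch: report lines of an XML file containing '&' that does not start a valid entity.
find_bare_ampersands() {
  # Remove the five predefined entities and numeric character references,
  # then print (with line numbers) any line where an '&' is still left.
  sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9a-fA-F]+);//g' "$1" | grep -n '&'
}
# Usage: find_bare_ampersands /export/server/hive/conf/hive-site.xml && echo "fix the lines above"
```

`grep` exits with status 0 only when it finds something, so the usage line prints its warning only for a broken file.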

6. Initialize the metastore database

(1) Create Hive's metastore database in MySQL

CREATE DATABASE hive CHARSET UTF8;

(2) Initialize the metastore schema

# Enter the Hive directory

cd /export/server/hive

# Initialize the metastore schema in MySQL

bin/schematool -initSchema -dbType mysql -verbose

(3) Verify
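Running `-initSchema` a second time against an already initialized database fails, so a setup script should call it only once. `schematool` can also report the schema version it finds via `-info`. The sketch below relies on one assumption, labeled in the code: that `schematool -dbType mysql -info` exits non-zero when no schema exists yet. The function name `init_metastore_once` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: initialize the metastore schema only when schematool cannot find one.
# ASSUMPTION: "schematool -info" returns a non-zero exit code if no schema exists.
init_metastore_once() {
  local hive_home="${HIVE_HOME:-/export/server/hive}"
  local tool="$hive_home/bin/schematool"
  if "$tool" -dbType mysql -info >/dev/null 2>&1; then
    echo "metastore schema already present, skipping -initSchema"
  else
    "$tool" -dbType mysql -initSchema -verbose
  fi
}
# Usage: HIVE_HOME=/export/server/hive init_metastore_once
```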

If initialization succeeded, log in to MySQL again: the hive database (select it with use hive; first) now contains 74 newly created metastore tables.

mysql> show tables;

+-------------------------------+

| Tables_in_hive |

+-------------------------------+

| AUX_TABLE |

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_COMPACTIONS |

| COMPLETED_TXN_COMPONENTS |

| CTLGS |

| DATABASE_PARAMS |

| DBS |

| DB_PRIVS |

| DELEGATION_TOKENS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| HIVE_LOCKS |

| IDXS |

| INDEX_PARAMS |

| I_SCHEMA |

| KEY_CONSTRAINTS |

| MASTER_KEYS |

| MATERIALIZATION_REBUILD_LOCKS |

| METASTORE_DB_PROPERTIES |

| MIN_HISTORY_LEVEL |

| MV_CREATION_METADATA |

| MV_TABLES_USED |

| NEXT_COMPACTION_QUEUE_ID |

| NEXT_LOCK_ID |

| NEXT_TXN_ID |

| NEXT_WRITE_ID |

| NOTIFICATION_LOG |

| NOTIFICATION_SEQUENCE |

| NUCLEUS_TABLES |

| PARTITIONS |

| PARTITION_EVENTS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_PRIVS |

| PART_COL_STATS |

| PART_PRIVS |

| REPL_TXN_MAP |

| ROLES |

| ROLE_MAP |

| RUNTIME_STATS |

| SCHEMA_VERSION |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| TBL_COL_PRIVS |

| TBL_PRIVS |

| TXNS |

| TXN_COMPONENTS |

| TXN_TO_WRITE_ID |

| TYPES |

| TYPE_FIELDS |

| VERSION |

| WM_MAPPING |

| WM_POOL |

| WM_POOL_TO_TRIGGER |

| WM_RESOURCEPLAN |

| WM_TRIGGER |

| WRITE_SET |

+-------------------------------+

74 rows in set (0.00 sec)
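Instead of counting the list by eye, the number can be queried from `information_schema`. A sketch, assuming the metastore database is named `hive` and that the `mysql` client can authenticate as root (`-p` makes it prompt for the password); the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: print the number of tables in the metastore database.
# -N drops the column header, -B gives plain tab-separated output.
metastore_table_count() {
  local db="${1:-hive}"
  mysql -u root -p -N -B -e \
    "SELECT COUNT(*) FROM information_schema.tables WHERE table_schema = '${db}';"
}
# Usage: n=$(metastore_table_count hive); echo "$n tables"
```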

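7. Set ownership and create the logs directory

(1) Assign the directories to the hadoop user and group

If earlier steps were run as root (as in the transcript below), the files they created belong to root. Because the cluster is started as the hadoop user, change the owner and group of hive and apache-hive-3.1.3-bin under /export/server/ to hadoop for later use: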

root@node1:/export/server# chown -R hadoop:hadoop apache-hive-3.1.3-bin hive

root@node1:/export/server# ll

total 20

drwxr-xr-x 5 hadoop hadoop 4096 12月 23 13:17 ./

drwxr-xr-x 3 hadoop hadoop 4096 11月 4 14:16 ../

drwxr-xr-x 10 hadoop hadoop 4096 12月 23 13:03 apache-hive-3.1.3-bin/

lrwxrwxrwx 1 hadoop hadoop 27 11月 6 20:14 hadoop -> /export/server/hadoop-3.3.6/

drwxr-xr-x 11 hadoop hadoop 4096 11月 6 20:47 hadoop-3.3.6/

lrwxrwxrwx 1 hadoop hadoop 36 12月 23 13:17 hive -> /export/server/apache-hive-3.1.3-bin/

lrwxrwxrwx 1 hadoop hadoop 27 11月 4 14:25 jdk -> /export/server/jdk1.8.0_391/

drwxr-xr-x 8 hadoop hadoop 4096 11月 4 14:23 jdk1.8.0_391/

(2) Create the logs directory

Switch to the hadoop user and create the logs directory:

root@node1:/export/server# su - hadoop

hadoop@node1:~$ cd

hadoop@node1:~$ cd /export/server

hadoop@node1:/export/server$ cd hive

hadoop@node1:/export/server/hive$ ll

total 88

drwxr-xr-x 10 hadoop hadoop 4096 12月 23 13:03 ./

drwxr-xr-x 5 hadoop hadoop 4096 12月 23 13:17 ../

drwxr-xr-x 3 hadoop hadoop 4096 12月 23 13:02 bin/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:02 binary-package-licenses/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:55 conf/

drwxr-xr-x 4 hadoop hadoop 4096 12月 23 13:02 examples/

drwxr-xr-x 7 hadoop hadoop 4096 12月 23 13:02 hcatalog/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:03 jdbc/

drwxr-xr-x 4 hadoop hadoop 20480 12月 23 13:04 lib/

-rw-r--r-- 1 hadoop hadoop 20798 3月 29 2022 LICENSE

-rw-r--r-- 1 hadoop hadoop 230 3月 29 2022 NOTICE

-rw-r--r-- 1 hadoop hadoop 540 3月 29 2022 RELEASE_NOTES.txt

drwxr-xr-x 4 hadoop hadoop 4096 12月 23 13:02 scripts/

hadoop@node1:/export/server/hive$ mkdir logs

hadoop@node1:/export/server/hive$ ll

total 92

drwxr-xr-x 11 hadoop hadoop 4096 12月 23 14:03 ./

drwxr-xr-x 5 hadoop hadoop 4096 12月 23 13:17 ../

drwxr-xr-x 3 hadoop hadoop 4096 12月 23 13:02 bin/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:02 binary-package-licenses/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:55 conf/

drwxr-xr-x 4 hadoop hadoop 4096 12月 23 13:02 examples/

drwxr-xr-x 7 hadoop hadoop 4096 12月 23 13:02 hcatalog/

drwxr-xr-x 2 hadoop hadoop 4096 12月 23 13:03 jdbc/

drwxr-xr-x 4 hadoop hadoop 20480 12月 23 13:04 lib/

-rw-r--r-- 1 hadoop hadoop 20798 3月 29 2022 LICENSE

drwxrwxr-x 2 hadoop hadoop 4096 12月 23 14:03 logs/

-rw-r--r-- 1 hadoop hadoop 230 3月 29 2022 NOTICE

-rw-r--r-- 1 hadoop hadoop 540 3月 29 2022 RELEASE_NOTES.txt

drwxr-xr-x 4 hadoop hadoop 4096 12月 23 13:02 scripts/
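To confirm that the chown in step (1) left nothing owned by root, `find` can list every entry under the install directory whose owner is not hadoop; an empty result means ownership is consistent. A sketch with the hypothetical name `not_owned_by`:

```shell
#!/usr/bin/env bash
# Sketch: list paths under the given directories that are NOT owned by user $1.
not_owned_by() {
  local user="$1"; shift
  # "! -user" negates the owner test; -print lists each offending path.
  find "$@" ! -user "$user" -print
}
# Usage: not_owned_by hadoop /export/server/apache-hive-3.1.3-bin
```

Pass the real directory rather than the hive symlink: by default `find` does not follow a symlink given on the command line.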

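8. Start Hive

(1) Start the metastore service in the background (run this from /export/server/hive, since the paths are relative):

nohup bin/hive --service metastore >> logs/metastore.log 2>&1 &

hadoop@node1:/export/server/hive$ nohup bin/hive --service metastore >> logs/metastore.log 2>&1 &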

[1] 66238

hadoop@node1:/export/server/hive$ cd logs

hadoop@node1:/export/server/hive/logs$ ll

total 12

drwxrwxr-x 2 hadoop hadoop 4096 12月 23 14:05 ./

drwxr-xr-x 11 hadoop hadoop 4096 12月 23 14:03 ../

-rw-rw-r-- 1 hadoop hadoop 1096 12月 23 14:06 metastore.log

(2) Start the client

Note: the HDFS and YARN clusters must be started beforehand.
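One way to check that prerequisite before running bin/hive is to compare `jps` output against the daemons this single-node setup expects. The daemon list below is taken from the jps output shown in this guide (RunJar is the metastore started with nohup); the function name `missing_daemons` is hypothetical, and a multi-node cluster shows different processes on each host.

```shell
#!/usr/bin/env bash
# Sketch: read `jps` output on stdin and print each expected daemon that is absent.
missing_daemons() {
  local running d
  # jps prints "<pid> <MainClassName>"; keep only the class names.
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager RunJar; do
    # -x: whole-line match, so NameNode does not match SecondaryNameNode
    printf '%s\n' "$running" | grep -qx "$d" || echo "$d"
  done
}
# Usage: jps | missing_daemons   # empty output: every daemon listed above is running
# HDFS/YARN daemons can be started with start-dfs.sh and start-yarn.sh.
```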

cd /export/server/hive在该目录下有bin文件夹,bin中有hive启动程序,输入:

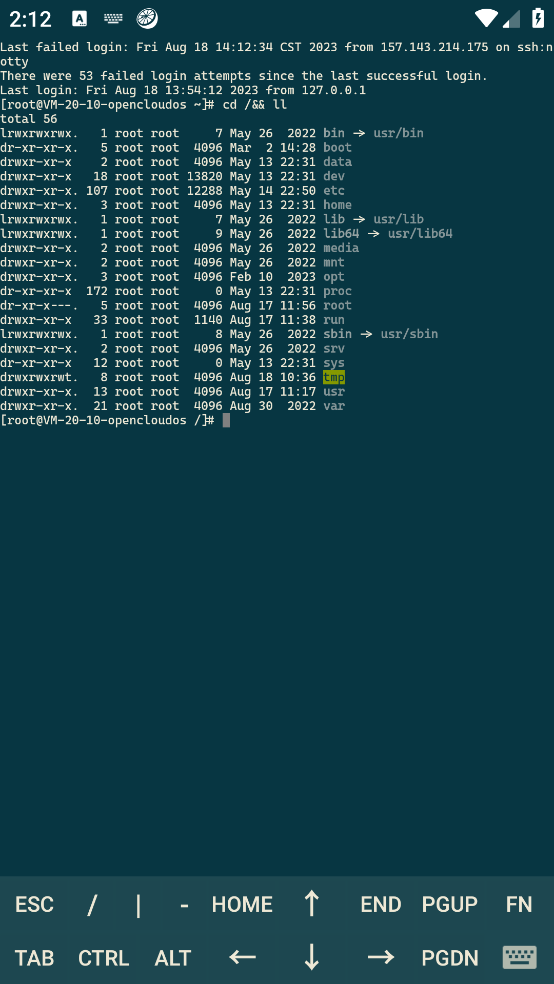

bin/hivehadoop@node1:/export/server/hive$ jps

71265 NodeManager

82227 Jps

71107 ResourceManager

69746 NameNode

69956 DataNode

71622 WebAppProxyServer

70271 SecondaryNameNode

66238 RunJar
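In the jps output, the metastore shows up as RunJar (PID 66238, the job started earlier with nohup). The process can exist before its Thrift port accepts connections, so a start-up script may want to wait for node1:9083 (the port from `hive.metastore.uris`) before launching bin/hive. A sketch using bash's `/dev/tcp` redirection, which is a bash feature rather than a real file; the function name `wait_for_port` is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: wait until host:port accepts TCP connections, trying once per second.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-60}" i
  for ((i = 0; i < tries; i++)); do
    # Opening /dev/tcp/HOST/PORT in a subshell succeeds only if the port is open.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "gave up waiting for $host:$port" >&2
  return 1
}
# Usage: wait_for_port node1 9083 && bin/hive
```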

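[Troubleshooting]: an error is quite likely at this point. If it is caused by HDFS being in safe mode, exit safe mode with:

hdfs dfsadmin -safemode forceExit

After Hive starts, you should see output like the following: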

hive> show databases;

OK

default

Time taken: 1.23 seconds, Fetched: 1 row(s)

hive>
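About the safe-mode fix in the troubleshooting note: HDFS normally leaves safe mode on its own shortly after the NameNode starts. `hdfs dfsadmin -safemode get` reports the current state, `-safemode wait` blocks until it is off, and `-safemode leave` exits it manually. The sketch below uses `leave` instead of the `forceExit` shown above, and only when the state is still ON; it parses the `Safe mode is ON` text that `get` prints, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: leave HDFS safe mode only if `hdfs dfsadmin -safemode get` reports it ON.
leave_safemode_if_on() {
  if hdfs dfsadmin -safemode get | grep -q 'Safe mode is ON'; then
    hdfs dfsadmin -safemode leave
  else
    echo "HDFS is not in safe mode"
  fi
}
# Usage: leave_safemode_if_on && bin/hive
```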
