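1 Preface

This article shows how to implement texture mapping with Filament. Readers who are not yet familiar with Filament may want to review the following articles first.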

- Filament Environment Setup

- Drawing a Triangle

- Drawing a Rectangle

- Drawing a Circle

- Drawing a Cube

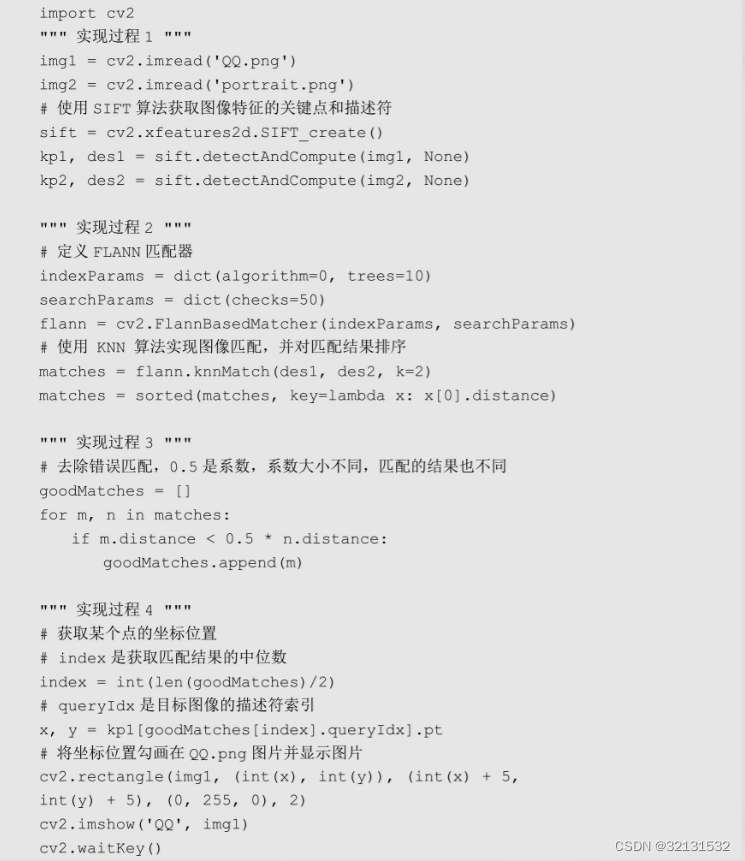

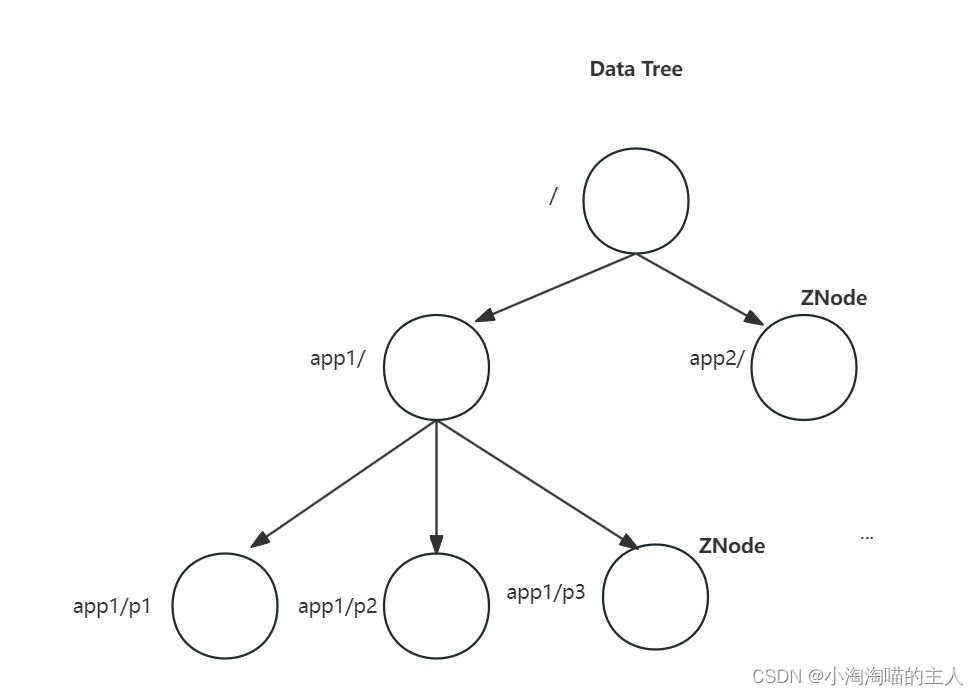

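In Filament, the positive x and y axes of texture coordinates point right and up, respectively. The y-axis direction is the opposite of the one used with OpenGL ES and libGDX (see [OpenGL ES] Texture Mapping and [libGDX] Mesh Texture Mapping), as shown below.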
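When reusing quad data written for a v-down convention, the only change needed is to mirror the v component. The following is a minimal sketch of that conversion, assuming texture coordinates in the 0..1 range stored as interleaved [u, v] pairs; `UvFlip` and `flipV` are hypothetical names that do not appear in the article's project.

```java
import java.util.Arrays;

/** Hypothetical helper (not part of the article's project). */
final class UvFlip {
    /** Returns a copy of interleaved [u0, v0, u1, v1, ...] pairs with every v replaced by 1 - v. */
    static float[] flipV(float[] uvs) {
        float[] out = uvs.clone();
        for (int i = 1; i < out.length; i += 2) {
            out[i] = 1f - out[i];
        }
        return out;
    }

    public static void main(String[] args) {
        // UVs of the quad used later in Square.java:
        // bottom-left, bottom-right, top-right, top-left (v pointing up).
        float[] vUp = {0f, 0f, 1f, 0f, 1f, 1f, 0f, 1f};
        System.out.println(Arrays.toString(flipV(vUp)));
    }
}
```

Applying `flipV` twice returns the original coordinates, so the same helper converts in either direction.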

2 Texture Mapping

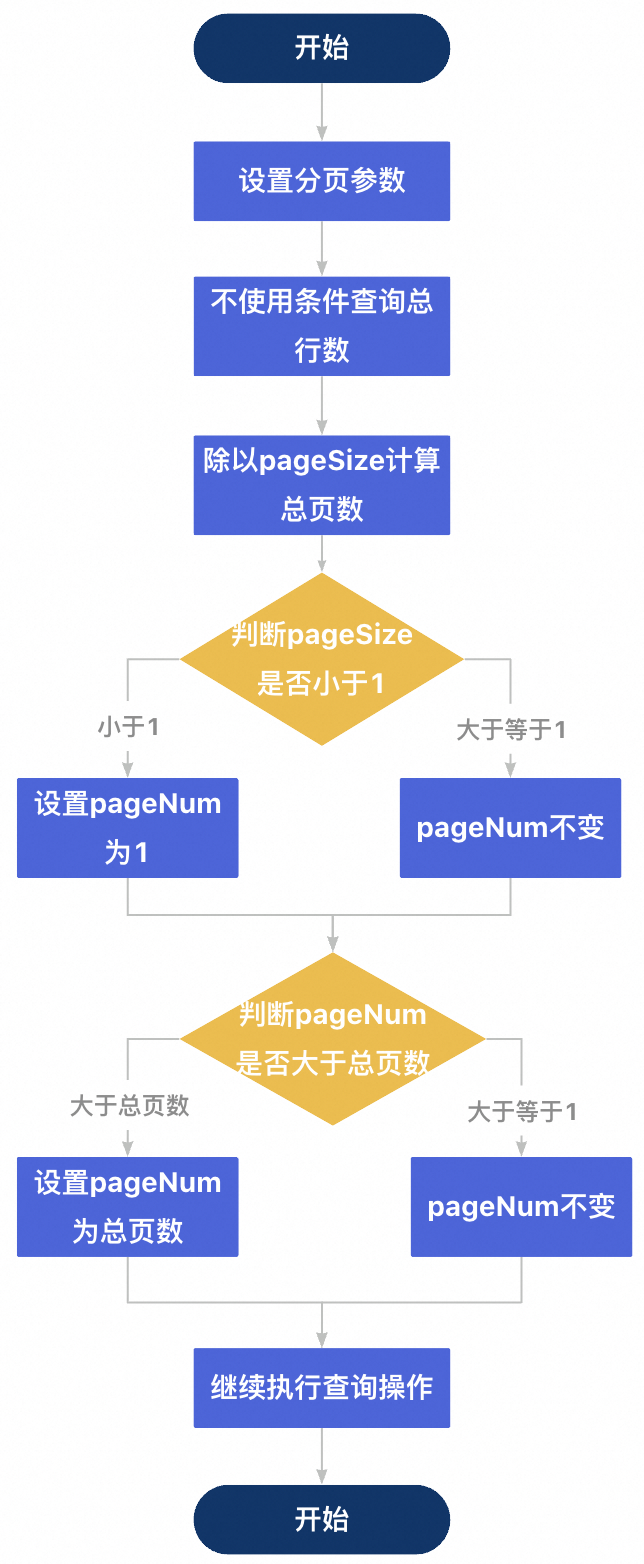

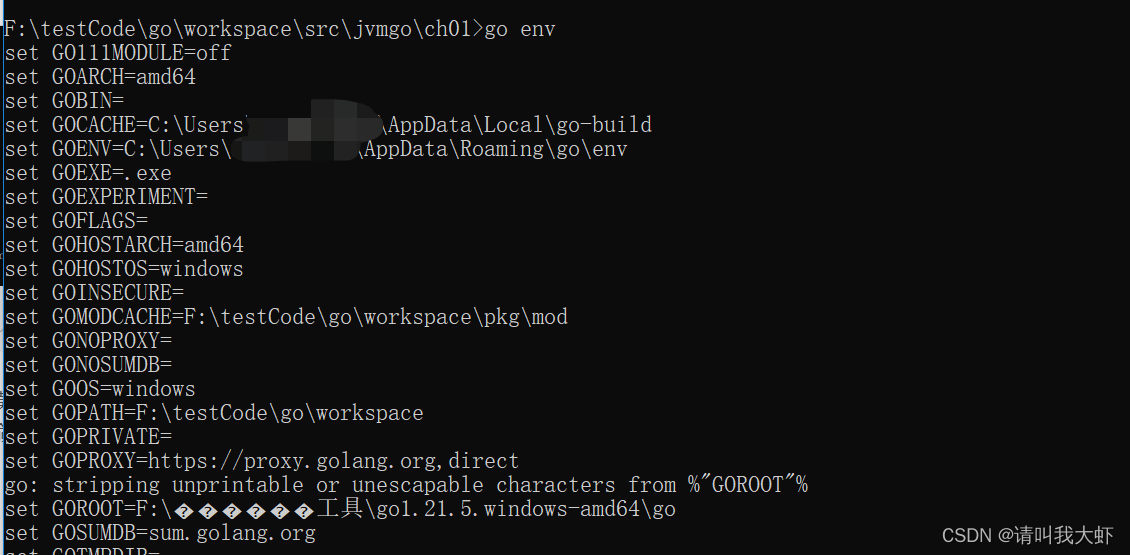

The project structure for this article is shown below; the complete code is available at → Filament Texture Mapping.

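2.1 Custom Base Classes

To let readers focus on Filament's inputs rather than on the environment setup, the author followed the OpenGL ES style and extracted two classes, FLSurfaceView and BaseModel. FLSurfaceView plays a role similar to GLSurfaceView and hosts the rendering environment configuration. BaseModel provides utility methods for VertexBuffer, IndexBuffer, Material, and Renderable that subclasses can call directly.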
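Like GLSurfaceView, the FLSurfaceView class listed below has two render modes: in RENDERMODE_CONTINUOUSLY the frame callback re-posts itself every frame, while in RENDERMODE_WHEN_DIRTY a frame is drawn only after requestRender() (or onResume()) posts a callback. The following toy model illustrates that scheduling in plain Java. The `pending` queue stands in for Android's Choreographer, and `RenderModeSketch` and `pumpVsyncs` are hypothetical names; it is a sketch of the idea, not the class itself.

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Toy model of FLSurfaceView's frame scheduling; no Android or Filament classes are used. */
final class RenderModeSketch {
    static final int RENDERMODE_WHEN_DIRTY = 0;
    static final int RENDERMODE_CONTINUOUSLY = 1;

    private final Queue<Runnable> pending = new ArrayDeque<>(); // stand-in for Choreographer
    private final int renderMode;
    int framesRendered = 0;

    RenderModeSketch(int renderMode) {
        this.renderMode = renderMode;
    }

    /** Posts one frame callback, as requestRender() does in FLSurfaceView. */
    void requestRender() {
        pending.add(this::doFrame);
    }

    private void doFrame() {
        if (renderMode == RENDERMODE_CONTINUOUSLY) {
            pending.add(this::doFrame); // ask for the next frame
        }
        framesRendered++; // the real class calls beginFrame/render/endFrame here
    }

    /** Simulates display refreshes; each refresh runs at most one pending callback. */
    void pumpVsyncs(int vsyncCount) {
        for (int i = 0; i < vsyncCount; i++) {
            Runnable callback = pending.poll();
            if (callback != null) {
                callback.run();
            }
        }
    }

    public static void main(String[] args) {
        RenderModeSketch continuous = new RenderModeSketch(RENDERMODE_CONTINUOUSLY);
        continuous.requestRender();
        continuous.pumpVsyncs(5);

        RenderModeSketch whenDirty = new RenderModeSketch(RENDERMODE_WHEN_DIRTY);
        whenDirty.requestRender();
        whenDirty.pumpVsyncs(5);

        System.out.println("continuous=" + continuous.framesRendered
                + ", whenDirty=" + whenDirty.framesRendered);
    }
}
```

With five simulated refreshes, the continuous instance renders five frames and the when-dirty instance renders one.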

build.gradle

...

android {...aaptOptions { // 在应用程序打包过程中不压缩的文件noCompress 'filamat', 'ktx'}

}dependencies {implementation fileTree(dir: '../libs', include: ['*.aar'])...

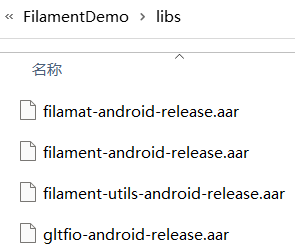

}说明:在项目根目录下的 libs 目录中,需要放入以下 aar 文件,它们源自Filament环境搭建中编译生成的 aar。

FLSurfaceView.java

    package com.zhyan8.texture.filament;

    import android.content.Context;
    import android.graphics.Point;
    import android.view.Choreographer;
    import android.view.Surface;
    import android.view.SurfaceView;

    import com.google.android.filament.Camera;
    import com.google.android.filament.Engine;
    import com.google.android.filament.EntityManager;
    import com.google.android.filament.Filament;
    import com.google.android.filament.Renderer;
    import com.google.android.filament.Scene;
    import com.google.android.filament.Skybox;
    import com.google.android.filament.SwapChain;
    import com.google.android.filament.View;
    import com.google.android.filament.Viewport;
    import com.google.android.filament.android.DisplayHelper;
    import com.google.android.filament.android.FilamentHelper;
    import com.google.android.filament.android.UiHelper;

    import java.util.ArrayList;

    /**
     * The SurfaceView that Filament renders into.
     * Comparable to GLSurfaceView in OpenGL ES:
     * it creates the Filament rendering environment.
     */
    public class FLSurfaceView extends SurfaceView {
        public static int RENDERMODE_WHEN_DIRTY = 0; // render one frame only when the user requests it
        public static int RENDERMODE_CONTINUOUSLY = 1; // render continuously

        protected int mRenderMode = RENDERMODE_CONTINUOUSLY; // render mode
        protected Choreographer mChoreographer; // frame scheduling
        protected DisplayHelper mDisplayHelper; // manages the Display (can observe resolution or refresh-rate changes)
        protected UiHelper mUiHelper; // manages SurfaceView, TextureView, SurfaceHolder
        protected Engine mEngine; // engine (tracks user-created resources, manages the render thread and hardware renderer)
        protected Renderer mRenderer; // renderer (works with the OS window, generates draw commands, manages frame latency)
        protected Scene mScene; // scene (manages renderables and lights)
        protected View mView; // stores render data (the object the Renderer operates on)
        protected Camera mCamera; // camera (viewpoint management)
        protected Point mDesiredSize; // render resolution
        protected float[] mSkyboxColor; // background color
        protected SwapChain mSwapChain; // the OS's native renderable surface (usually a window or view)
        protected FrameCallback mFrameCallback = new FrameCallback(); // frame callback
        protected ArrayList<RenderCallback> mRenderCallbacks; // callbacks run before each frame (typically model or camera transforms)

        static {
            Filament.init();
        }

        public FLSurfaceView(Context context) {
            super(context);
            mChoreographer = Choreographer.getInstance();
            mDisplayHelper = new DisplayHelper(context);
            mRenderCallbacks = new ArrayList<>();
        }

        public void init() { // initialize
            setupSurfaceView();
            setupFilament();
            setupView();
            setupScene();
        }

        public void setRenderMode(int renderMode) { // set the render mode
            mRenderMode = renderMode;
        }

        public void addRenderCallback(RenderCallback renderCallback) { // add a render callback
            if (renderCallback != null) {
                mRenderCallbacks.add(renderCallback);
            }
        }

        public void requestRender() { // request a frame
            mChoreographer.postFrameCallback(mFrameCallback);
        }

        public void onResume() { // resume
            mChoreographer.postFrameCallback(mFrameCallback);
        }

        public void onPause() { // pause
            mChoreographer.removeFrameCallback(mFrameCallback);
        }

        public void onDestroy() { // tear down the Filament environment
            mChoreographer.removeFrameCallback(mFrameCallback);
            mRenderCallbacks.clear();
            mUiHelper.detach();
            mEngine.destroyRenderer(mRenderer);
            mEngine.destroyView(mView);
            mEngine.destroyScene(mScene);
            mEngine.destroyCameraComponent(mCamera.getEntity());
            EntityManager entityManager = EntityManager.get();
            entityManager.destroy(mCamera.getEntity());
            mEngine.destroy();
        }

        protected void setupScene() { // configure the Scene (overridden by subclasses)
        }

        protected void onResized(int width, int height) { // called when the Surface size changes
            double zoom = 1;
            double aspect = (double) width / (double) height;
            mCamera.setProjection(Camera.Projection.ORTHO,
                    -aspect * zoom, aspect * zoom, -zoom, zoom, 0, 1000);
        }

        private void setupSurfaceView() { // configure the SurfaceView
            mUiHelper = new UiHelper(UiHelper.ContextErrorPolicy.DONT_CHECK);
            mUiHelper.setRenderCallback(new SurfaceCallback());
            if (mDesiredSize != null) {
                mUiHelper.setDesiredSize(mDesiredSize.x, mDesiredSize.y);
            }
            mUiHelper.attachTo(this);
        }

        private void setupFilament() { // create the Filament objects
            mEngine = Engine.create();
            // mEngine = (new Engine.Builder()).featureLevel(Engine.FeatureLevel.FEATURE_LEVEL_0).build();
            mRenderer = mEngine.createRenderer();
            mScene = mEngine.createScene();
            mView = mEngine.createView();
            mCamera = mEngine.createCamera(mEngine.getEntityManager().create());
        }

        private void setupView() { // configure the View
            float[] color = mSkyboxColor != null ? mSkyboxColor : new float[] {0, 0, 0, 1};
            Skybox skybox = (new Skybox.Builder()).color(color).build(mEngine);
            mScene.setSkybox(skybox);
            if (mEngine.getActiveFeatureLevel() == Engine.FeatureLevel.FEATURE_LEVEL_0) {
                mView.setPostProcessingEnabled(false); // FEATURE_LEVEL_0 does not support post-processing
            }
            mView.setCamera(mCamera);
            mView.setScene(mScene);
        }

        /**
         * Frame callback.
         */
        private class FrameCallback implements Choreographer.FrameCallback {
            @Override
            public void doFrame(long frameTimeNanos) { // render one frame
                if (mRenderMode == RENDERMODE_CONTINUOUSLY) {
                    mChoreographer.postFrameCallback(this); // request the next frame
                }
                mRenderCallbacks.forEach(callback -> callback.onCall());
                if (mUiHelper.isReadyToRender()) {
                    if (mRenderer.beginFrame(mSwapChain, frameTimeNanos)) {
                        mRenderer.render(mView);
                        mRenderer.endFrame();
                    }
                }
            }
        }

        /**
         * Surface callback.
         */
        private class SurfaceCallback implements UiHelper.RendererCallback {
            @Override
            public void onNativeWindowChanged(Surface surface) { // called when the native window changes
                if (mSwapChain != null) {
                    mEngine.destroySwapChain(mSwapChain);
                }
                long flags = mUiHelper.getSwapChainFlags();
                if (mEngine.getActiveFeatureLevel() == Engine.FeatureLevel.FEATURE_LEVEL_0) {
                    if (SwapChain.isSRGBSwapChainSupported(mEngine)) {
                        flags = flags | SwapChain.CONFIG_SRGB_COLORSPACE;
                    }
                }
                mSwapChain = mEngine.createSwapChain(surface, flags);
                mDisplayHelper.attach(mRenderer, getDisplay());
            }

            @Override
            public void onDetachedFromSurface() { // called when detached from the Surface
                mDisplayHelper.detach();
                if (mSwapChain != null) {
                    mEngine.destroySwapChain(mSwapChain);
                    mEngine.flushAndWait();
                    mSwapChain = null;
                }
            }

            @Override
            public void onResized(int width, int height) { // called when the Surface size changes
                mView.setViewport(new Viewport(0, 0, width, height));
                FilamentHelper.synchronizePendingFrames(mEngine);
                FLSurfaceView.this.onResized(width, height);
            }
        }

        /**
         * Callback run before each frame is rendered.
         * Typically used for model transforms, camera transforms, and the like.
         */
        public static interface RenderCallback {
            void onCall();
        }
    }

BaseModel.java

    package com.zhyan8.texture.filament;

    import android.content.Context;
    import android.content.res.AssetFileDescriptor;
    import android.graphics.Bitmap;
    import android.graphics.BitmapFactory;
    import android.os.Handler;
    import android.os.Looper;
    import android.util.Log;

    import com.google.android.filament.Box;
    import com.google.android.filament.Engine;
    import com.google.android.filament.EntityManager;
    import com.google.android.filament.IndexBuffer;
    import com.google.android.filament.Material;
    import com.google.android.filament.MaterialInstance;
    import com.google.android.filament.RenderableManager;
    import com.google.android.filament.RenderableManager.PrimitiveType;
    import com.google.android.filament.Texture;
    import com.google.android.filament.TransformManager;
    import com.google.android.filament.VertexBuffer;
    import com.google.android.filament.VertexBuffer.AttributeType;
    import com.google.android.filament.VertexBuffer.VertexAttribute;
    import com.google.android.filament.android.TextureHelper;

    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.nio.Buffer;
    import java.nio.ByteBuffer;
    import java.nio.ByteOrder;
    import java.nio.channels.Channels;
    import java.nio.channels.ReadableByteChannel;

    /**
     * Base class for models.
     * Manages the model's material, vertex attributes, vertex indices, and renderable id.
     */
    public class BaseModel {
        private static String TAG = "BaseModel";

        protected Context mContext; // context
        protected Engine mEngine; // Filament engine
        protected TransformManager mTransformManager; // model transform manager
        protected Material mMaterial; // model material
        protected MaterialInstance mMaterialInstance; // model material instance
        protected VertexBuffer mVertexBuffer; // vertex attribute buffer
        protected IndexBuffer mIndexBuffer; // vertex index buffer
        protected int mRenderable; // renderable id
        protected int mTransformComponent; // id of the model's transform component
        protected Box mBox; // bounding box
        protected FLSurfaceView.RenderCallback mRenderCallback; // callback run before each frame (typically model or camera transforms)

        public BaseModel(Context context, Engine engine) {
            mContext = context;
            mEngine = engine;
            mTransformManager = mEngine.getTransformManager();
        }

        public Material getMaterial() { // get the material
            return mMaterial;
        }

        public VertexBuffer getVertexBuffer() { // get the vertex attribute buffer
            return mVertexBuffer;
        }

        public IndexBuffer getIndexBuffer() { // get the vertex index buffer
            return mIndexBuffer;
        }

        public int getRenderable() { // get the renderable id
            return mRenderable;
        }

        public FLSurfaceView.RenderCallback getRenderCallback() { // get the render callback
            return mRenderCallback;
        }

        public void destroy() { // destroy the model
            mEngine.destroyEntity(mRenderable);
            mEngine.destroyVertexBuffer(mVertexBuffer);
            mEngine.destroyIndexBuffer(mIndexBuffer);
            mEngine.destroyMaterialInstance(mMaterialInstance);
            mEngine.destroyMaterial(mMaterial);
            EntityManager entityManager = EntityManager.get();
            entityManager.destroy(mRenderable);
        }

        protected Material loadMaterial(String materialPath) { // load a material (also creates mMaterialInstance)
            Buffer buffer = readUncompressedAsset(materialPath);
            if (buffer != null) {
                Material material = (new Material.Builder())
                        .payload(buffer, buffer.remaining())
                        .build(mEngine);
                mMaterialInstance = material.createInstance();
                material.compile(
                        Material.CompilerPriorityQueue.HIGH,
                        Material.UserVariantFilterBit.ALL,
                        new Handler(Looper.getMainLooper()),
                        () -> Log.i(TAG, "Material " + material.getName() + " compiled."));
                mEngine.flush();
                return material;
            }
            return null;
        }

        protected VertexBuffer getVertexBuffer(float[] values) { // build a position-only vertex buffer
            ByteBuffer vertexData = getByteBuffer(values);
            int vertexCount = values.length / 3;
            int vertexSize = Float.BYTES * 3;
            VertexBuffer vertexBuffer = new VertexBuffer.Builder()
                    .bufferCount(1)
                    .vertexCount(vertexCount)
                    .attribute(VertexAttribute.POSITION, 0, AttributeType.FLOAT3, 0, vertexSize)
                    .build(mEngine);
            vertexBuffer.setBufferAt(mEngine, 0, vertexData);
            return vertexBuffer;
        }

        protected VertexBuffer getVertexBuffer(VertexPosCol[] values) { // build a position + color vertex buffer
            ByteBuffer vertexData = getByteBuffer(values);
            int vertexCount = values.length;
            int vertexSize = VertexPosCol.BYTES;
            VertexBuffer vertexBuffer = new VertexBuffer.Builder()
                    .bufferCount(1)
                    .vertexCount(vertexCount)
                    .attribute(VertexAttribute.POSITION, 0, AttributeType.FLOAT3, 0, vertexSize)
                    .attribute(VertexAttribute.COLOR, 0, AttributeType.UBYTE4, 3 * Float.BYTES, vertexSize)
                    .normalized(VertexAttribute.COLOR)
                    .build(mEngine);
            vertexBuffer.setBufferAt(mEngine, 0, vertexData);
            return vertexBuffer;
        }

        protected VertexBuffer getVertexBuffer(VertexPosUV[] values) { // build a position + UV vertex buffer
            ByteBuffer vertexData = getByteBuffer(values);
            int vertexCount = values.length;
            int vertexSize = VertexPosUV.BYTES;
            VertexBuffer vertexBuffer = new VertexBuffer.Builder()
                    .bufferCount(1)
                    .vertexCount(vertexCount)
                    .attribute(VertexAttribute.POSITION, 0, AttributeType.FLOAT3, 0, vertexSize)
                    .attribute(VertexAttribute.UV0, 0, AttributeType.FLOAT2, 3 * Float.BYTES, vertexSize)
                    .build(mEngine);
            vertexBuffer.setBufferAt(mEngine, 0, vertexData);
            return vertexBuffer;
        }

        protected IndexBuffer getIndexBuffer(short[] values) { // build the vertex index buffer
            ByteBuffer indexData = getByteBuffer(values);
            int indexCount = values.length;
            IndexBuffer indexBuffer = new IndexBuffer.Builder()
                    .indexCount(indexCount)
                    .bufferType(IndexBuffer.Builder.IndexType.USHORT)
                    .build(mEngine);
            indexBuffer.setBuffer(mEngine, indexData);
            return indexBuffer;
        }

        // Creates the renderable entity. Despite its name, vertexCount is passed to
        // geometry() as the number of indices to draw.
        protected int getRenderable(PrimitiveType primitiveType, int vertexCount) {
            int renderable = EntityManager.get().create();
            new RenderableManager.Builder(1)
                    .boundingBox(mBox)
                    .geometry(0, primitiveType, mVertexBuffer, mIndexBuffer, 0, vertexCount)
                    .material(0, mMaterialInstance)
                    .build(mEngine, renderable);
            return renderable;
        }

        protected Texture getTexture(String texturePath) { // create a Texture from an asset
            Bitmap bitmap = loadBitmapFromAsset(texturePath);
            if (bitmap != null) {
                return generateTexture(bitmap);
            }
            return null;
        }

        protected Texture getTexture(int resourceId) { // create a Texture from a drawable resource
            Bitmap bitmap = loadBitmapFromDrawable(resourceId);
            if (bitmap != null) {
                return generateTexture(bitmap);
            }
            return null;
        }

        private Texture generateTexture(Bitmap bitmap) { // generate a Texture from a Bitmap
            Texture texture = new Texture.Builder()
                    .width(bitmap.getWidth())
                    .height(bitmap.getHeight())
                    .sampler(Texture.Sampler.SAMPLER_2D)
                    .format(Texture.InternalFormat.SRGB8_A8)
                    .levels(0xff)
                    .build(mEngine);
            TextureHelper.setBitmap(mEngine, texture, 0, bitmap, new Handler(), () ->
                    Log.i(TAG, "getTexture, Bitmap is released."));
            texture.generateMipmaps(mEngine);
            return texture;
        }

        private Buffer readUncompressedAsset(String assetPath) { // load an uncompressed asset
            ReadableByteChannel src = null;
            FileInputStream fis = null;
            ByteBuffer dist = null;
            try {
                AssetFileDescriptor fd = mContext.getAssets().openFd(assetPath);
                fis = fd.createInputStream();
                dist = ByteBuffer.allocate((int) fd.getLength());
                src = Channels.newChannel(fis);
                src.read(dist);
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                if (src != null) {
                    try {
                        src.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
                if (fis != null) {
                    try {
                        fis.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
            if (dist != null) {
                return dist.rewind();
            }
            return null;
        }

        private Bitmap loadBitmapFromAsset(String assetPath) { // load a bitmap from assets
            InputStream inputStream = null;
            Bitmap bitmap = null;
            try {
                inputStream = mContext.getAssets().open(assetPath);
                bitmap = BitmapFactory.decodeStream(inputStream);
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                if (inputStream != null) {
                    try {
                        inputStream.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
            return bitmap;
        }

        private Bitmap loadBitmapFromDrawable(int resourceId) { // load a bitmap from drawable resources
            BitmapFactory.Options options = new BitmapFactory.Options();
            options.inPremultiplied = true;
            Bitmap bitmap = BitmapFactory.decodeResource(mContext.getResources(), resourceId, options);
            return bitmap;
        }

        private ByteBuffer getByteBuffer(float[] values) { // convert a float array to a ByteBuffer
            ByteBuffer byteBuffer = ByteBuffer.allocate(values.length * Float.BYTES);
            byteBuffer.order(ByteOrder.nativeOrder());
            for (int i = 0; i < values.length; i++) {
                byteBuffer.putFloat(values[i]);
            }
            byteBuffer.flip();
            return byteBuffer;
        }

        private ByteBuffer getByteBuffer(short[] values) { // convert a short array to a ByteBuffer
            ByteBuffer byteBuffer = ByteBuffer.allocate(values.length * Short.BYTES);
            byteBuffer.order(ByteOrder.nativeOrder());
            for (int i = 0; i < values.length; i++) {
                byteBuffer.putShort(values[i]);
            }
            byteBuffer.flip();
            return byteBuffer;
        }

        private ByteBuffer getByteBuffer(VertexPosCol[] values) { // convert a VertexPosCol array to a ByteBuffer
            ByteBuffer byteBuffer = ByteBuffer.allocate(values.length * VertexPosCol.BYTES);
            byteBuffer.order(ByteOrder.nativeOrder());
            for (int i = 0; i < values.length; i++) {
                values[i].put(byteBuffer);
            }
            byteBuffer.flip();
            return byteBuffer;
        }

        private ByteBuffer getByteBuffer(VertexPosUV[] values) { // convert a VertexPosUV array to a ByteBuffer
            ByteBuffer byteBuffer = ByteBuffer.allocate(values.length * VertexPosUV.BYTES);
            byteBuffer.order(ByteOrder.nativeOrder());
            for (int i = 0; i < values.length; i++) {
                values[i].put(byteBuffer);
            }
            byteBuffer.flip();
            return byteBuffer;
        }

        /**
         * Vertex data (position + color).
         */
        public static class VertexPosCol {
            public static int BYTES = 16; // 3 floats + 1 int

            public float x;
            public float y;
            public float z;
            public int color;

            public VertexPosCol() {}

            public VertexPosCol(float x, float y, float z, int color) {
                this.x = x;
                this.y = y;
                this.z = z;
                this.color = color;
            }

            public ByteBuffer put(ByteBuffer buffer) { // write this vertex into the ByteBuffer
                buffer.putFloat(x);
                buffer.putFloat(y);
                buffer.putFloat(z);
                buffer.putInt(color);
                return buffer;
            }
        }

        /**
         * Vertex data (position + texture coordinates).
         */
        public static class VertexPosUV {
            public static int BYTES = 20; // 5 floats

            public float x;
            public float y;
            public float z;
            public float u;
            public float v;

            public VertexPosUV() {}

            public VertexPosUV(float x, float y, float z, float u, float v) {
                this.x = x;
                this.y = y;
                this.z = z;
                this.u = u;
                this.v = v;
            }

            public ByteBuffer put(ByteBuffer buffer) { // write this vertex into the ByteBuffer
                buffer.putFloat(x);
                buffer.putFloat(y);
                buffer.putFloat(z);
                buffer.putFloat(u);
                buffer.putFloat(v);
                return buffer;
            }
        }
    }

2.2 Texture Mapping Implementation
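The quad in this section stores a position and a texture coordinate per vertex, interleaved in one buffer: BaseModel declares POSITION at byte offset 0 and UV0 at byte offset 3 * Float.BYTES, both with a 20-byte stride. As a rough illustration of that layout, here is a standalone sketch in plain Java that packs the same five floats per vertex and reads a UV back by offset. `InterleavedLayoutSketch`, `pack`, and `readUv` are hypothetical names, and no Filament calls are involved.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Standalone illustration of the interleaved position + UV layout; not part of the project. */
final class InterleavedLayoutSketch {
    static final int UV_OFFSET = 3 * Float.BYTES; // x, y, z come first (12 bytes)
    static final int STRIDE = 5 * Float.BYTES;    // x, y, z, u, v (20 bytes)

    /** Packs vertices given as {x, y, z, u, v} rows into one native-order buffer. */
    static ByteBuffer pack(float[][] vertices) {
        ByteBuffer buffer = ByteBuffer.allocate(vertices.length * STRIDE)
                .order(ByteOrder.nativeOrder());
        for (float[] vertex : vertices) {
            if (vertex.length != 5) {
                throw new IllegalArgumentException("expected {x, y, z, u, v}");
            }
            for (float component : vertex) {
                buffer.putFloat(component);
            }
        }
        buffer.flip(); // position back to 0, limit at the end of the written data
        return buffer;
    }

    /** Reads the (u, v) pair of one vertex using absolute byte offsets. */
    static float[] readUv(ByteBuffer buffer, int vertexIndex) {
        int base = vertexIndex * STRIDE + UV_OFFSET;
        return new float[] {buffer.getFloat(base), buffer.getFloat(base + Float.BYTES)};
    }

    public static void main(String[] args) {
        float[][] quad = {
            {-1f, -1f, 0f, 0f, 0f}, // bottom-left
            { 1f, -1f, 0f, 1f, 0f}, // bottom-right
            { 1f,  1f, 0f, 1f, 1f}, // top-right
            {-1f,  1f, 0f, 0f, 1f}, // top-left
        };
        ByteBuffer buffer = pack(quad);
        float[] uv = readUv(buffer, 3);
        System.out.println("bytes=" + buffer.remaining() + ", uv[3]=(" + uv[0] + ", " + uv[1] + ")");
    }
}
```

Four vertices at 20 bytes each give an 80-byte buffer, which is the size setBufferAt receives for the quad.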

MainActivity.java

    package com.zhyan8.texture;

    import android.os.Bundle;

    import androidx.appcompat.app.AppCompatActivity;

    import com.zhyan8.texture.filament.FLSurfaceView;

    public class MainActivity extends AppCompatActivity {
        private FLSurfaceView mFLSurfaceView;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            mFLSurfaceView = new MyFLSurfaceView(this);
            setContentView(mFLSurfaceView);
            mFLSurfaceView.init();
            mFLSurfaceView.setRenderMode(FLSurfaceView.RENDERMODE_CONTINUOUSLY);
        }

        @Override
        public void onResume() {
            super.onResume();
            mFLSurfaceView.onResume();
        }

        @Override
        public void onPause() {
            super.onPause();
            mFLSurfaceView.onPause();
        }

        @Override
        public void onDestroy() {
            super.onDestroy();
            mFLSurfaceView.onDestroy();
        }
    }

MyFLSurfaceView.java

    package com.zhyan8.texture;

    import android.content.Context;

    import com.google.android.filament.Camera;
    import com.zhyan8.texture.filament.BaseModel;
    import com.zhyan8.texture.filament.FLSurfaceView;

    public class MyFLSurfaceView extends FLSurfaceView {
        private BaseModel mMyModel;

        public MyFLSurfaceView(Context context) {
            super(context);
        }

        @Override
        public void init() {
            mSkyboxColor = new float[] {0.965f, 0.941f, 0.887f, 1};
            super.init();
        }

        @Override
        public void onDestroy() {
            mMyModel.destroy();
            super.onDestroy();
        }

        @Override
        protected void setupScene() { // configure the Scene
            mMyModel = new Square(getContext(), mEngine);
            mScene.addEntity(mMyModel.getRenderable());
        }

        @Override
        protected void onResized(int width, int height) {
            mCamera.setProjection(Camera.Projection.ORTHO, -1, 1, -1, 1, 0, 10);
        }
    }

Square.java

    package com.zhyan8.texture;

    import android.content.Context;

    import com.google.android.filament.Box;
    import com.google.android.filament.Engine;
    import com.google.android.filament.RenderableManager.PrimitiveType;
    import com.google.android.filament.TextureSampler;
    import com.zhyan8.texture.filament.BaseModel;

    public class Square extends BaseModel {
        private String materialPath = "materials/square.filamat";
        private String texturePath = "textures/1.jpg";

        private VertexPosUV[] mVertices = new VertexPosUV[] {
                new VertexPosUV(-1f, -1f, 0f, 0f, 0f), // bottom-left
                new VertexPosUV(1f, -1f, 0f, 1f, 0f), // bottom-right
                new VertexPosUV(1f, 1f, 0f, 1f, 1f), // top-right
                new VertexPosUV(-1f, 1f, 0f, 0f, 1f) // top-left
        };

        private short[] mIndex = new short[] {0, 1, 2, 0, 2, 3};

        public Square(Context context, Engine engine) {
            super(context, engine);
            init();
        }

        private void init() {
            mBox = new Box(0.0f, 0.0f, 0.0f, 1.0f, 1.0f, 0.01f);
            mMaterial = loadMaterial(materialPath);
            mMaterialInstance.setParameter("mainTex", getTexture(texturePath), new TextureSampler());
            mVertexBuffer = getVertexBuffer(mVertices);
            mIndexBuffer = getIndexBuffer(mIndex);
            mRenderable = getRenderable(PrimitiveType.TRIANGLES, mIndex.length);
        }
    }

square.mat

    material {
        name : square,
        shadingModel : unlit, // disables all lighting

        // custom parameters
        parameters : [
            {
                type : sampler2d,
                name : mainTex
            }
        ],

        // vertex attributes required in the vertex shader input MaterialVertexInputs
        requires : [
            uv0
        ]
    }

    fragment {
        void material(inout MaterialInputs material) {
            prepareMaterial(material); // must be called before this function returns
            material.baseColor = texture(materialParams_mainTex, getUV0());
        }
    }

transform.bat

    @echo off
    setlocal enabledelayedexpansion

    set "srcFolder=../src/main/materials"
    set "distFolder=../src/main/assets/materials"

    for %%f in ("%srcFolder%\*.mat") do (
        set "matfile=%%~nf"
        matc -p mobile -a opengl -o "!matfile!.filamat" "%%f"
        move "!matfile!.filamat" "%distFolder%\!matfile!.filamat"
    )

    echo Processing complete.
    pause

Note: matc.exe must be placed in the same directory as transform.bat; it is the exe built in Filament Environment Setup. Double-clicking transform.bat converts every mat file under /src/main/materials/ into a filamat file and moves it to /src/main/assets/materials/.
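For readers who are not on Windows, the loop in the batch script can be approximated in Java. The sketch below only builds the matc argument list for one material, reusing the flags from the script (`-p mobile -a opengl -o`). `MatcCommandSketch` and `buildCommand` are hypothetical names, and unlike the script it points `-o` directly at the output directory instead of compiling first and moving the file afterwards.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper that mirrors one iteration of transform.bat; it does not run matc. */
final class MatcCommandSketch {
    /** Builds the matc arguments that compile matFile into outputDir/<name>.filamat. */
    static List<String> buildCommand(Path matFile, Path outputDir) {
        String fileName = matFile.getFileName().toString();
        String baseName = fileName.endsWith(".mat")
                ? fileName.substring(0, fileName.length() - ".mat".length())
                : fileName;
        Path output = outputDir.resolve(baseName + ".filamat");
        return Arrays.asList(
                "matc", "-p", "mobile", "-a", "opengl",
                "-o", output.toString(), matFile.toString());
    }

    public static void main(String[] args) {
        Path mat = Paths.get("src", "main", "materials", "square.mat");
        Path out = Paths.get("src", "main", "assets", "materials");
        System.out.println(String.join(" ", buildCommand(mat, out)));
    }
}
```

Passing the returned list to `ProcessBuilder` would run the compiler, assuming a matc binary is available on the PATH.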

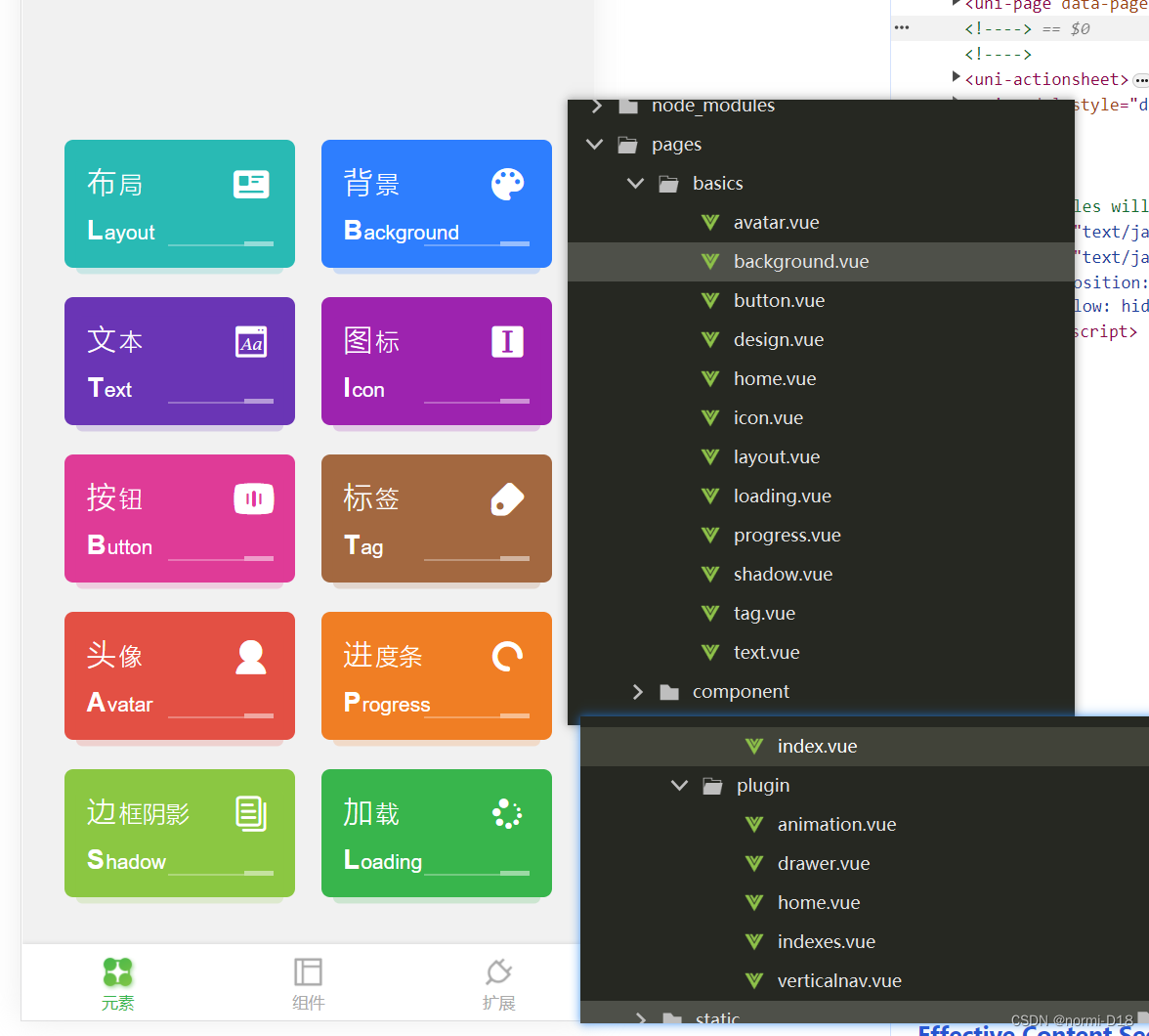

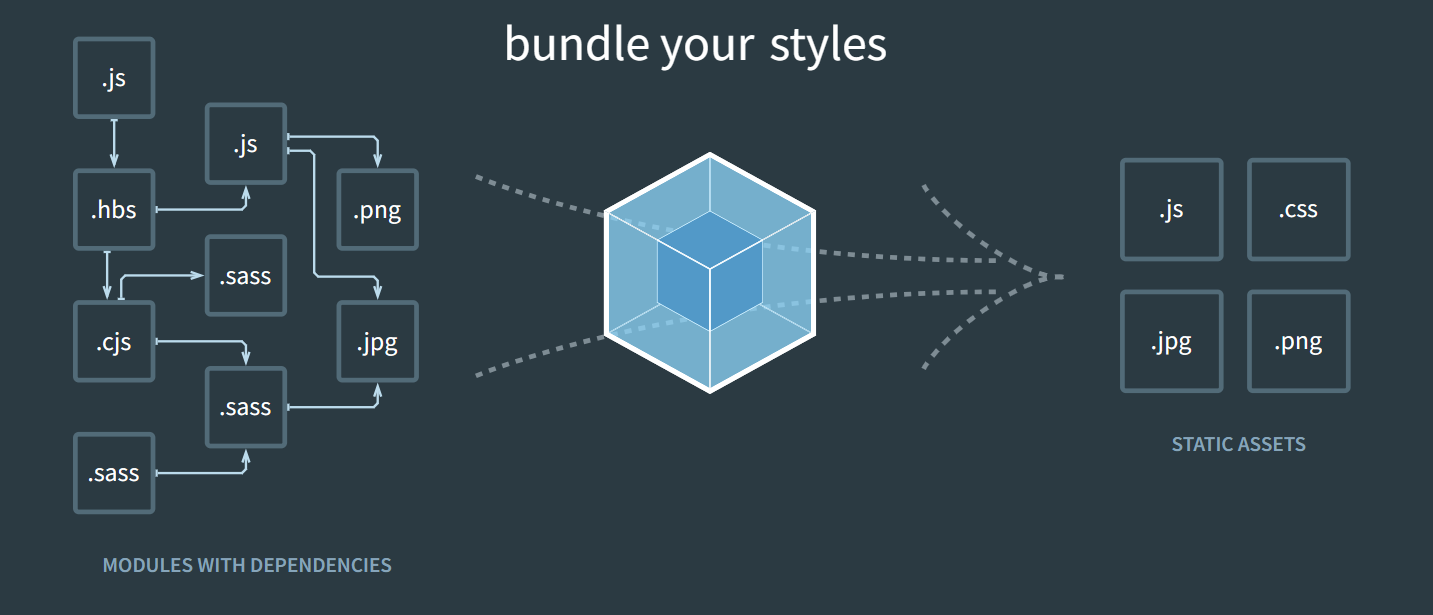

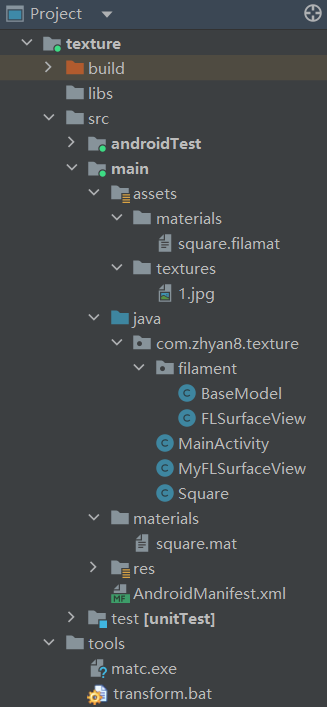

The result of running the app is shown below.