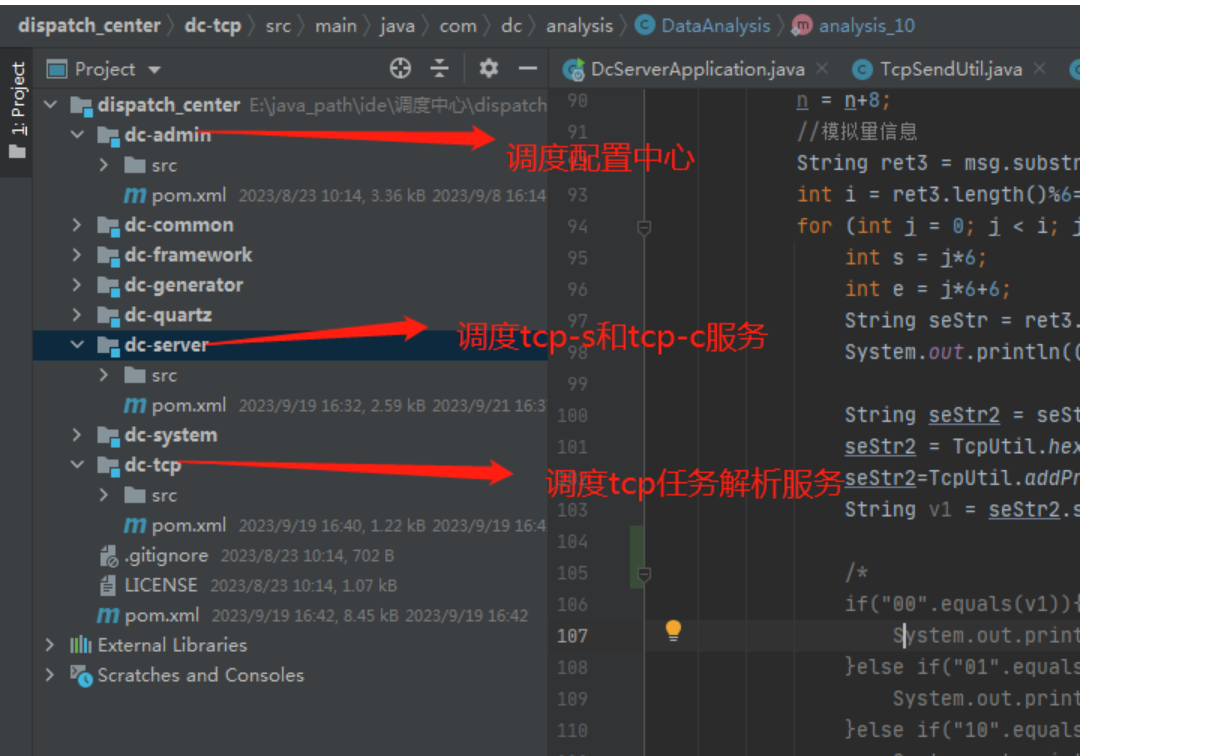

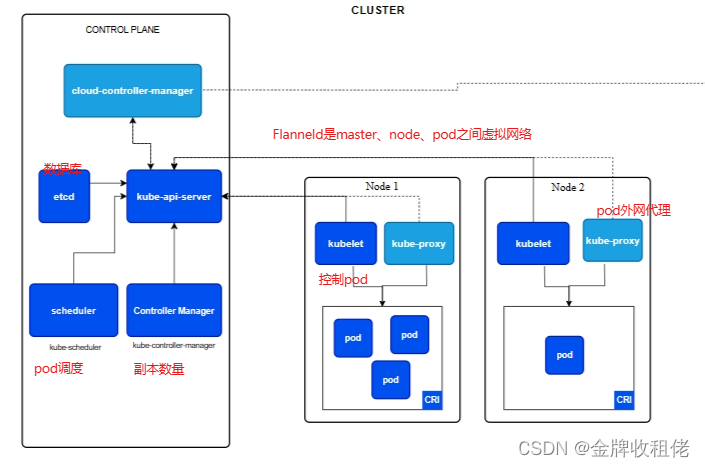

| Hostname | IP address | Flannel subnet | Components |
| --- | --- | --- | --- |
| master | 192.168.116.10 | | ETCD, APIServer, Scheduler, Controller-Manager |
| node1 | 192.168.116.11 | 172.17.28.0 | ETCD, Flanneld, Kubelet, kube-proxy |
| node2 | 192.168.116.12 | 172.17.26.0 | ETCD, Flanneld, Kubelet, kube-proxy |

Kubernetes community

Kubernetes documentation

ETCD

Master node

Create the certificates

1. Download the certificate tools

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 --no-check-certificate

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 --no-check-certificate

[root@master ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 --no-check-certificate

[root@master ~]# chmod +x cfssl*

[root@master ~]# ls

anaconda-ks.cfg cfssljson_linux-amd64

cfssl-certinfo_linux-amd64 cfssl_linux-amd64

[root@master ~]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@master ~]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@master ~]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2. Certificate configuration files

[root@master ~]# mkdir -p /opt/kubernetes/etcd/{bin,cfg,ssl,data}
[root@master ~]# tree /opt/kubernetes/etcd/
/opt/kubernetes/etcd/
├── bin
├── cfg
├── data
└── ssl

[root@master ~]# cd /opt/kubernetes/etcd/ssl/

[root@master ssl]# cat >ca-config.json<<EOF
{
  "signing": {
    "default": {"expiry": "87600h"},
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

[root@master ssl]# cat >ca-csr.json<<EOF
{
  "CN": "etcd CA",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "Beijing", "ST": "Beijing"}]
}
EOF

[root@master ssl]# cat >server-csr.json<<EOF
{
  "CN": "etcd",
  "hosts": ["192.168.116.10", "192.168.116.11", "192.168.116.12"],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing"}]
}
EOF

3. Generate the certificates

[root@master ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
[root@master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
[root@master ssl]# ls
ca-config.json  ca-csr.json  ca.pem      server-csr.json  server.pem
ca.csr          ca-key.pem   server.csr  server-key.pem

Install ETCD

1. Download ETCD

[root@master ssl]# cd ~

[root@master ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz

[root@master ~]# tar zxvf etcd-v3.5.0-linux-amd64.tar.gz

[root@master ~]# cp etcd-v3.5.0-linux-amd64/{etcd,etcdctl,etcdutl} /opt/kubernetes/etcd/bin/

2. ETCD configuration file

Set ETCD_NAME to the member name of the current host and use the current host's IP address in the four *_URLS settings. ETCD_ENABLE_V2 has to be switched on by hand because flanneld does not support the etcd v3 API.

[root@master ~]# cat >/opt/kubernetes/etcd/cfg/etcd.conf<<EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/opt/kubernetes/etcd/data/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.116.10:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.116.10:2379"

#[Clustering]
ETCD_ENABLE_V2="true"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.116.10:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.116.10:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.116.10:2380,etcd-2=https://192.168.116.11:2380,etcd-3=https://192.168.116.12:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

EOF
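The ETCD_INITIAL_CLUSTER value follows a fixed pattern: one `name=https://ip:2380` entry per member, joined by commas. As a minimal sketch, the hypothetical helper `initial_cluster` below (not part of etcd) builds that string from `name=ip` pairs, assuming every member uses https and peer port 2380 as in the configuration above.

```shell
#!/bin/sh
# initial_cluster NAME=IP [NAME=IP ...]
# Hypothetical helper: prints the ETCD_INITIAL_CLUSTER value for the given
# members, assuming https and peer port 2380 for every member.
initial_cluster() {
    result=""
    for pair in "$@"; do
        name=${pair%%=*}    # text before the first "="
        ip=${pair#*=}       # text after the first "="
        entry="${name}=https://${ip}:2380"
        if [ -z "$result" ]; then
            result="$entry"
        else
            result="${result},${entry}"
        fi
    done
    printf '%s\n' "$result"
}

# The three members used in this walkthrough
initial_cluster etcd-1=192.168.116.10 etcd-2=192.168.116.11 etcd-3=192.168.116.12
```

The printed line should match the ETCD_INITIAL_CLUSTER value in etcd.conf exactly; the same value is used on every member.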

3. ETCD service file

[root@master ~]# cat >/usr/lib/systemd/system/etcd.service<<EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/etcd/cfg/etcd.conf
ExecStart=/opt/kubernetes/etcd/bin/etcd \
--cert-file=/opt/kubernetes/etcd/ssl/server.pem \
--key-file=/opt/kubernetes/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/kubernetes/etcd/ssl/server.pem \
--peer-key-file=/opt/kubernetes/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/kubernetes/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/kubernetes/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

4. Distribute the certificates, configuration, and binaries

After copying, change the member name and IP addresses in etcd.conf on each node.

[root@master ~]# scp -r /opt/kubernetes/ node1:/opt/
[root@master ~]# scp -r /opt/kubernetes/ node2:/opt/
[root@master ~]# scp /usr/lib/systemd/system/etcd.service node1:/usr/lib/systemd/system/
[root@master ~]# scp /usr/lib/systemd/system/etcd.service node2:/usr/lib/systemd/system/

Run ETCD

In a new three-member cluster the first `systemctl start etcd` can block until a second member comes up, so start etcd on the nodes as well before expecting the health check to pass.

[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl start etcd
[root@master ~]# systemctl enable etcd

Check the running state

[root@master ~]# ETCDCTL_API=2 /opt/kubernetes/etcd/bin/etcdctl --ca-file=/opt/kubernetes/etcd/ssl/ca.pem --cert-file=/opt/kubernetes/etcd/ssl/server.pem --key-file=/opt/kubernetes/etcd/ssl/server-key.pem --endpoints="https://192.168.116.10:2379,https://192.168.116.11:2379,https://192.168.116.12:2379" cluster-health

member 6c4bda8e201a71cf is healthy: got healthy result from https://192.168.116.12:2379

member 858c9c1ef126a0f4 is healthy: got healthy result from https://192.168.116.10:2379

member d72dcdd720e88b38 is healthy: got healthy result from https://192.168.116.11:2379

cluster is healthy

Worker nodes

Edit the files

Verify the certificates

[root@node1 ~]# ls /opt/kubernetes/etcd/ssl/
ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem  server.csr  server-csr.json  server-key.pem  server.pem

Edit the ETCD configuration file

On node1 the member name becomes etcd-2 and the four *_URLS settings use 192.168.116.11. On node2 they become etcd-3 and 192.168.116.12, matching the entries in ETCD_INITIAL_CLUSTER.

[root@node1 ~]# vim /opt/kubernetes/etcd/cfg/etcd.conf
#[Member]
ETCD_NAME="etcd-2"
ETCD_DATA_DIR="/opt/kubernetes/etcd/data/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.116.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.116.11:2379"

#[Clustering]
ETCD_ENABLE_V2="true"
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.116.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.116.11:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.116.10:2380,etcd-2=https://192.168.116.11:2380,etcd-3=https://192.168.116.12:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
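Editing the copied file by hand is easy to get wrong, because only the per-member keys may change while ETCD_INITIAL_CLUSTER must stay identical on every host. The sketch below shows one possible way to script the change with GNU sed; `retarget_etcd_conf` is a hypothetical helper name, and it assumes the key names and ports (2380 for peers, 2379 for clients) shown in the file above.

```shell
#!/bin/sh
# retarget_etcd_conf FILE NAME IP
# Hypothetical helper: rewrites the member name and the four *_URLS keys
# in a copied etcd.conf. ETCD_INITIAL_CLUSTER is deliberately not touched.
# Assumes GNU sed (for -i), as shipped with CentOS.
retarget_etcd_conf() {
    file=$1
    name=$2
    ip=$3
    sed -i \
        -e "s|^ETCD_NAME=.*|ETCD_NAME=\"${name}\"|" \
        -e "s|^\(ETCD_[A-Z_]*PEER_URLS\)=.*|\1=\"https://${ip}:2380\"|" \
        -e "s|^\(ETCD_[A-Z_]*CLIENT_URLS\)=.*|\1=\"https://${ip}:2379\"|" \
        "$file"
}
```

On node1 this would be called as `retarget_etcd_conf /opt/kubernetes/etcd/cfg/etcd.conf etcd-2 192.168.116.11`, and on node2 with `etcd-3 192.168.116.12`.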

运行ETCD

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start etcd

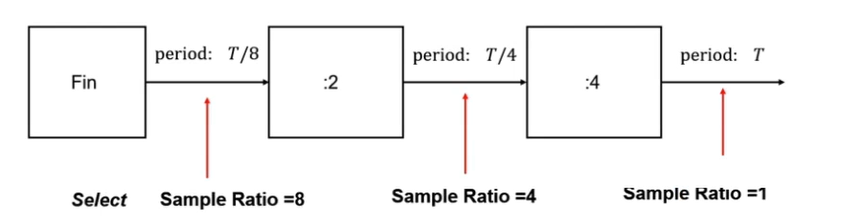

[root@master ~]# systemctl enable etcdFlanneld

因为Centos默认是禁止网卡之间数据包转发,所以需要打开

echo "net.ipv4.ip_forward=1" >> /etc/sysctl.confsystemctl restart networkflannel.1 DOWN or UNKNOWN的解决方法

设置数据包转发方法
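Appending to /etc/sysctl.conf with `>>` adds a duplicate line every time the step is repeated. A minimal sketch of an idempotent variant follows; `ensure_line` is a hypothetical helper, not a system command.

```shell
#!/bin/sh
# ensure_line FILE LINE
# Hypothetical helper: appends LINE to FILE only if an identical line
# is not already present, so the step can be re-run safely.
ensure_line() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

A possible use is `ensure_line /etc/sysctl.conf net.ipv4.ip_forward=1` followed by `sysctl -p` to load the file; afterwards `cat /proc/sys/net/ipv4/ip_forward` should show 1.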

Master node

Configure the overlay subnet

[root@master ~]# cd /opt/kubernetes/etcd/ssl/
[root@master ssl]# ETCDCTL_API=2 /opt/kubernetes/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.116.10:2379,https://192.168.116.11:2379,https://192.168.116.12:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

The set command exists only in the v2 API. etcdctl 3.5 defaults to the v3 API, so without ETCDCTL_API=2 it fails with: Error: unknown command "set" for "etcdctl".

Worker nodes

Install Flanneld

1. Install

[root@node1 ~]# yum -y install docker-ce

[root@node1 ~]# wget https://github.com/flannel-io/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

[root@node1 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

[root@node1 ~]# mkdir -p /opt/kubernetes/flanneld/{bin,cfg}

[root@node1 ~]# cp flanneld mk-docker-opts.sh /opt/kubernetes/flanneld/bin/

2. Flanneld configuration file

[root@node1 ~]# vim /opt/kubernetes/flanneld/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.116.10:2379,https://192.168.116.11:2379,https://192.168.116.12:2379 \
--etcd-cafile=/opt/kubernetes/etcd/ssl/ca.pem \
--etcd-certfile=/opt/kubernetes/etcd/ssl/server.pem \
--etcd-keyfile=/opt/kubernetes/etcd/ssl/server-key.pem"

3. Flanneld service file

[root@node1 ~]# vim /usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target network-online.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/flanneld/cfg/flanneld
ExecStart=/opt/kubernetes/flanneld/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/flanneld/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target

4. Modify the Docker service file

Docker reads the subnet that flanneld wrote to /run/flannel/subnet.env and passes it to dockerd through $DOCKER_NETWORK_OPTIONS.

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

Run Flanneld

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl start flanneld

[root@node1 ~]# systemctl enable flanneld

APIServer

Master node

Create the certificates

Certificate configuration files

[root@master ~]# mkdir -p /opt/kubernetes/{bin,cfg,ssl}
[root@master ~]# cd /opt/kubernetes/ssl/

[root@master ssl]# vim ca-config.json
{
  "signing": {
    "default": {"expiry": "87600h"},
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}

[root@master ssl]# vim ca-csr.json
{
  "CN": "kubernetes",
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System"}]
}

[root@master ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@master ssl]# vim server-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.116.10",
    "192.168.116.11",
    "192.168.116.12",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}]
}

[root@master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

Install APIServer

1. Download

[root@master ~]# wget https://dl.k8s.io/v1.11.10/kubernetes-server-linux-amd64.tar.gz

[root@master ~]# tar zxvf kubernetes-server-linux-amd64.tar.gz
[root@master ~]# cd kubernetes/server/bin/
[root@master bin]# cp kube-apiserver kube-controller-manager kube-scheduler kubectl /opt/kubernetes/bin/

2. Create the token and configuration file

Each line of token.csv has the form token,user,uid,"group".

[root@master bin]# vim /opt/kubernetes/cfg/token.csv
674c457d4dcf2eefe4920d7dbb6b0ddc,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

[root@master bin]# vim /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://192.168.116.10:2379,https://192.168.116.11:2379,https://192.168.116.12:2379 \
--bind-address=192.168.116.10 \
--secure-port=6443 \
--advertise-address=192.168.116.10 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/kubernetes/etcd/ssl/ca.pem \
--etcd-certfile=/opt/kubernetes/etcd/ssl/server.pem \
--etcd-keyfile=/opt/kubernetes/etcd/ssl/server-key.pem"

3. APIServer service file

[root@master bin]# vim /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Run APIServer

[root@master bin]# systemctl daemon-reload

[root@master bin]# systemctl start kube-apiserver

[root@master bin]# systemctl enable kube-apiserver

Scheduler

Master node

Install Scheduler

1. Configuration file

[root@master ~]# vim /opt/kubernetes/cfg/kube-scheduler
KUBE_SCHEDULER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect"

2. Service file

[root@master ~]# vim /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Run Scheduler

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start kube-scheduler

[root@master ~]# systemctl enable kube-scheduler

Controller-Manager

Master node

Install Controller-Manager

1. Configuration file

[root@master ~]# vim /opt/kubernetes/cfg/kube-controller-manager
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.0.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
--root-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem"

2. Service file

[root@master ~]# vim /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Run Controller-Manager

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start kube-controller-manager.service

[root@master ~]# systemctl enable kube-controller-manager.service

Check the cluster status

[root@master ~]# /opt/kubernetes/bin/kubectl get cs
NAME                 STATUS    MESSAGE                         ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health":"true","reason":""}
etcd-2               Healthy   {"health":"true","reason":""}
etcd-0               Healthy   {"health":"true","reason":""}

Kubelet

Master node

Create the kubelet configuration files

kubelet-bootstrap:

[root@master ~]# cd ~
[root@master ~]# /opt/kubernetes/bin/kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

kubeconfig:

[root@master ~]# cd /opt/kubernetes/ssl/

[root@master ssl]# KUBE_APISERVER="https://192.168.116.10:6443"

[root@master ssl]# BOOTSTRAP_TOKEN=674c457d4dcf2eefe4920d7dbb6b0ddc

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=bootstrap.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Worker nodes

1. Create the directories and pull the image

[root@node1 ~]# mkdir -p /opt/kubernetes/{bin,cfg,ssl}

[root@node1 ~]# systemctl start docker

[root@node1 ~]# systemctl enable docker

[root@node1 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

2. Send the kubeconfig and binaries from the master

[root@master ssl]# scp *kubeconfig node1:/opt/kubernetes/cfg/
[root@master ssl]# scp *kubeconfig node2:/opt/kubernetes/cfg/
[root@master ssl]# cd ~/kubernetes/server/bin/
[root@master bin]# scp kubelet kube-proxy node1:/opt/kubernetes/bin
[root@master bin]# scp kubelet kube-proxy node2:/opt/kubernetes/bin

Install kubelet

1. Configuration files

Set --hostname-override and address to the IP address of the node being configured. --pod-infra-container-image points at the pause image pulled in the previous step.

[root@node1 ~]# vim /opt/kubernetes/cfg/kubelet
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.116.11 \
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
--config=/opt/kubernetes/cfg/kubelet.config \
--cert-dir=/opt/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

[root@node1 ~]# vim /opt/kubernetes/cfg/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.116.11
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.0.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
  webhook:
    enabled: false

2. Service file

[root@node1 ~]# vim /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet
ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

Run kubelet

[root@node1 ~]# systemctl daemon-reload
[root@node1 ~]# systemctl start kubelet
[root@node1 ~]# systemctl enable kubelet

Check the node's join request

[root@master ~]# /opt/kubernetes/bin/kubectl get csr

Approve the node to join the cluster

[root@master ~]# /opt/kubernetes/bin/kubectl certificate approve node-csr-B56hrh1mbKUfm2OP94hzzsM-9iSTjQuZsR0lG5wcW1o
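Each node produces its own request name, so the name above has to be copied from the `kubectl get csr` output every time. As a sketch, the hypothetical filter `pending_csrs` below picks out the names of requests that are still pending, assuming the condition is the last column of the table (NAME, AGE, REQUESTOR, CONDITION in this kubectl version).

```shell
#!/bin/sh
# pending_csrs
# Hypothetical filter: reads `kubectl get csr` output on stdin and prints
# the names of requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demonstration on made-up sample rows (not real cluster output)
printf '%s\n' \
    'NAME           AGE   REQUESTOR           CONDITION' \
    'node-csr-aaa   1m    kubelet-bootstrap   Pending' \
    'node-csr-bbb   5m    kubelet-bootstrap   Approved,Issued' | pending_csrs
```

On the master this could be combined as `/opt/kubernetes/bin/kubectl get csr | pending_csrs | xargs -r -n 1 /opt/kubernetes/bin/kubectl certificate approve`, which approves the pending requests one at a time. Review the list first if unknown hosts might be requesting certificates.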

See also: kubelet requires swap to be turned off at startup (the kubelet.config above sets failSwapOn: false to relax this check)

kube-proxy

Master node

Create the certificate

[root@master ~]# vim /opt/kubernetes/ssl/kube-proxy-csr.json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System"}]
}

[root@master ~]# cd /opt/kubernetes/ssl/
[root@master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Create the kube-proxy kubeconfig

These commands reuse the KUBE_APISERVER variable set earlier when creating bootstrap.kubeconfig, so run them in the same shell or set it again.

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-cluster kubernetes \
--certificate-authority=ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-credentials kube-proxy \
--client-certificate=kube-proxy.pem \
--client-key=kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

[root@master ssl]# /opt/kubernetes/bin/kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Worker nodes

Send the kubeconfig from the master

[root@master ssl]# scp kube-proxy.kubeconfig node1:/opt/kubernetes/cfg/
[root@master ssl]# scp kube-proxy.kubeconfig node2:/opt/kubernetes/cfg/

Install kube-proxy

1. Configuration file

[root@node1 ~]# vim /opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.116.11 \
--cluster-cidr=10.0.0.0/24 \
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

2. Service file

[root@node1 ~]# vim /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Run kube-proxy

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl enable kube-proxy

[root@node1 ~]# systemctl start kube-proxy

Check the cluster status

[root@master ~]# /opt/kubernetes/bin/kubectl get no
NAME             STATUS   ROLES    AGE   VERSION
192.168.116.11   Ready    <none>   1m    v1.11.10
192.168.116.12   Ready    <none>   3m    v1.11.10
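With more than a couple of nodes it is easier to list only the nodes that are not ready than to scan the table. A minimal sketch, assuming STATUS is the second column as in the output above; `not_ready_nodes` is a hypothetical filter name.

```shell
#!/bin/sh
# not_ready_nodes
# Hypothetical filter: reads `kubectl get no` output on stdin and prints
# the names of nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demonstration on made-up sample rows (not real cluster output)
printf '%s\n' \
    'NAME             STATUS     ROLES    AGE   VERSION' \
    '192.168.116.11   Ready      <none>   1m    v1.11.10' \
    '192.168.116.12   NotReady   <none>   3m    v1.11.10' | not_ready_nodes
```

On the master, `/opt/kubernetes/bin/kubectl get no | not_ready_nodes` prints nothing when every node is Ready. A cordoned node shows a combined status such as Ready,SchedulingDisabled and would also be listed by this strict comparison.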

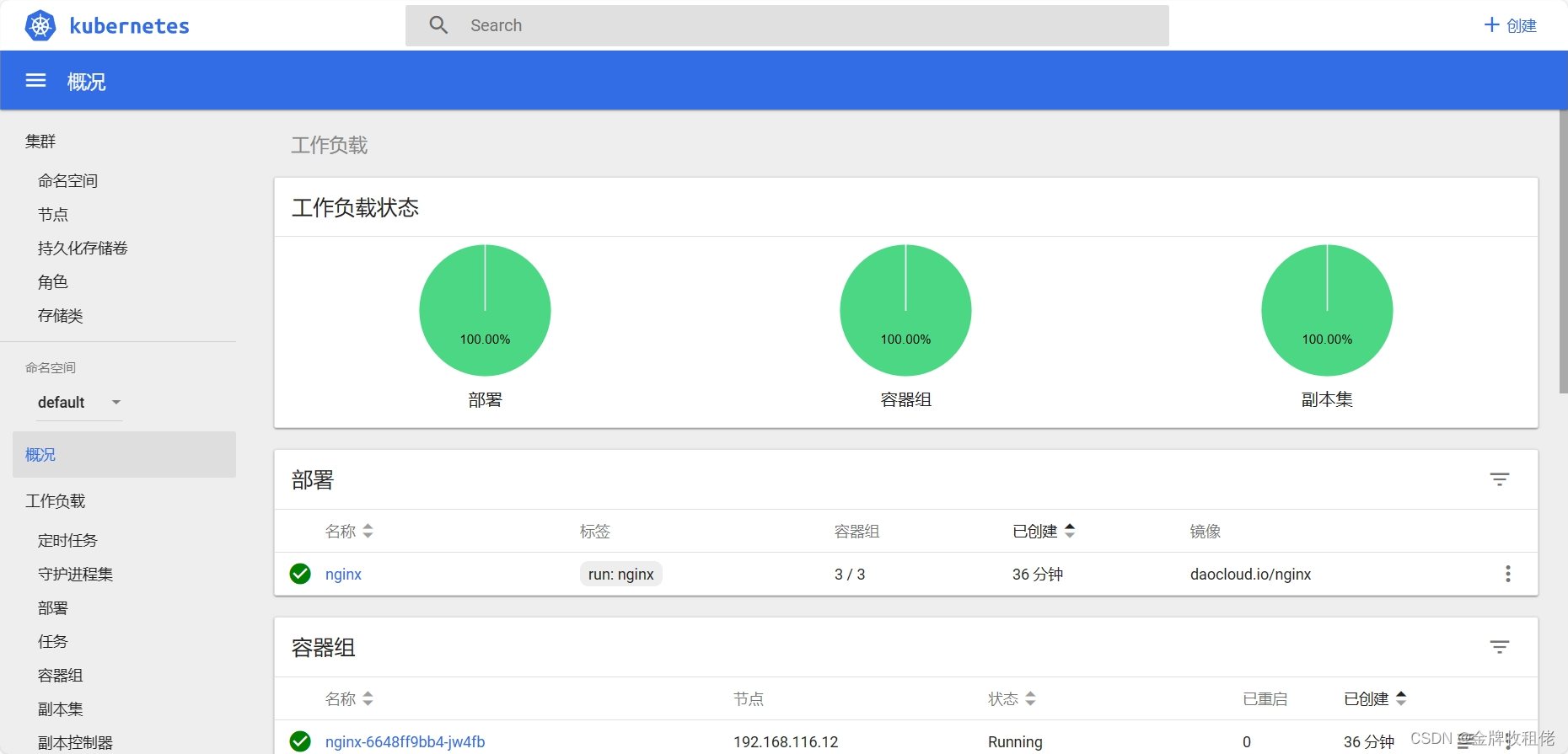

测试

master节点

运行测试镜像

[root@master ssl]# /opt/kubernetes/bin/kubectl run nginx --image=daocloud.io/nginx --replicas=3[root@master ssl]# /opt/kubernetes/bin/kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6648ff9bb4-jw4fb 1/1 Running 0 35s

nginx-6648ff9bb4-lqb42 1/1 Running 0 35s

nginx-6648ff9bb4-xnkqh 1/1 Running 0 35s[root@master ssl]# /opt/kubernetes/bin/kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx 3 3 3 3 1m[root@master ssl]# /opt/kubernetes/bin/kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-6648ff9bb4-jw4fb 1/1 Running 0 1m 172.17.26.3 192.168.116.12 <none>

nginx-6648ff9bb4-lqb42 1/1 Running 0 1m 172.17.26.2 192.168.116.12 <none>

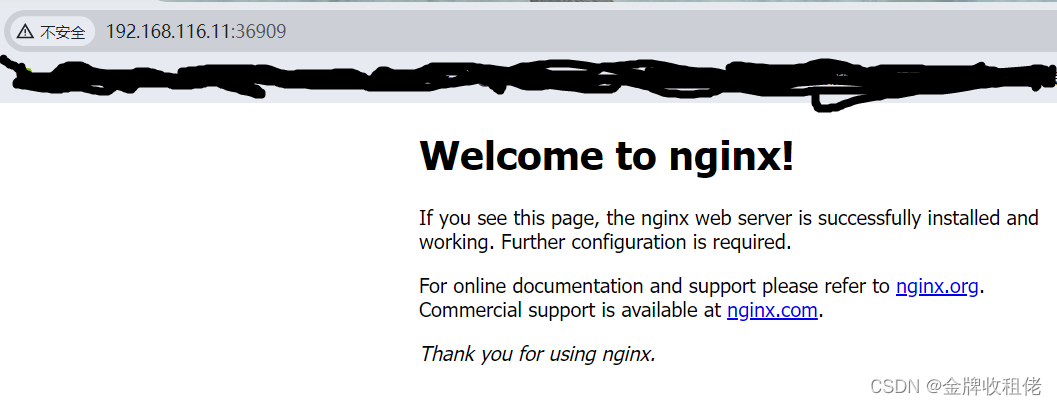

nginx-6648ff9bb4-xnkqh 1/1 Running 0 1m 172.17.28.2 192.168.116.11 <none>开放镜像端口

[root@master ssl]# /opt/kubernetes/bin/kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

service/nginx exposed[root@master ssl]# /opt/kubernetes/bin/kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 3d

nginx NodePort 10.0.0.209 <none> 88:36909/TCP 10s
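In the PORT(S) column, `88:36909/TCP` means service port 88 is published on node port 36909 on every node. As a small sketch, the hypothetical helper `node_port` below extracts the node port from such a value using only shell parameter expansion.

```shell
#!/bin/sh
# node_port PORTS
# Hypothetical helper: given a PORT(S) value such as "88:36909/TCP",
# prints the node port (the number between ":" and "/").
node_port() {
    ports=$1
    ports=${ports#*:}               # drop the service port and the colon
    printf '%s\n' "${ports%%/*}"    # drop the protocol suffix
}

node_port "88:36909/TCP"    # prints 36909
```

With the output above, the nginx test page would then be requested from outside the cluster with something like `curl -I http://192.168.116.11:36909`, using the IP address of either node.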

Worker nodes

Test the flannel network

[root@node1 ~]# ping 172.17.26.3
PING 172.17.26.3 (172.17.26.3) 56(84) bytes of data.
64 bytes from 172.17.26.3: icmp_seq=1 ttl=63 time=0.397 ms
64 bytes from 172.17.26.3: icmp_seq=2 ttl=63 time=0.220 ms
^C
--- 172.17.26.3 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1000ms
rtt min/avg/max/mdev = 0.220/0.308/0.397/0.090 ms

[root@node1 ~]# ping 172.17.26.2
PING 172.17.26.2 (172.17.26.2) 56(84) bytes of data.
64 bytes from 172.17.26.2: icmp_seq=1 ttl=63 time=0.319 ms
64 bytes from 172.17.26.2: icmp_seq=2 ttl=63 time=0.288 ms
^C
--- 172.17.26.2 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 999ms
rtt min/avg/max/mdev = 0.288/0.303/0.319/0.023 ms

[root@node1 ~]# ping 172.17.28.2
PING 172.17.28.2 (172.17.28.2) 56(84) bytes of data.
64 bytes from 172.17.28.2: icmp_seq=1 ttl=64 time=0.090 ms
64 bytes from 172.17.28.2: icmp_seq=2 ttl=64 time=0.040 ms
^C
--- 172.17.28.2 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 999ms
rtt min/avg/max/mdev = 0.040/0.065/0.090/0.025 ms

Install the Web UI

Pod configuration file

[root@master ~]# mkdir /opt/kubernetes/webui

[root@master ~]# cd /opt/kubernetes/webui/

[root@master webui]# vim dashboard-deployment.yaml
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: kubernetes-
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/kube_containers/kubernetes-dashboard-amd64:v1.8.1
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 100m
            memory: 100Mi
        ports:
        - containerPort: 9090
          protocol: TCP
        livenessProbe:
          httpGet:
            scheme: HTTP
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

Configure APIServer access

[root@master webui]# vim dashboard-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

Configure the Dashboard service

[root@master webui]# vim dashboard-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

Deploy

[root@master webui]# /opt/kubernetes/bin/kubectl create -f dashboard-rbac.yaml
[root@master webui]# /opt/kubernetes/bin/kubectl create -f dashboard-deployment.yaml
[root@master webui]# /opt/kubernetes/bin/kubectl create -f dashboard-service.yaml
[root@master webui]# /opt/kubernetes/bin/kubectl get pod -n kube-system
NAME                                   READY   STATUS    RESTARTS   AGE
kubernetes-dashboard-d9545b947-lcdrv   1/1     Running   0          3m

Check the port

[root@master webui]# /opt/kubernetes/bin/kubectl get service -n kube-system
NAME                   TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
kubernetes-dashboard   NodePort   10.0.0.39    <none>        80:34354/TCP   4m

Check the pod IP and the node it runs on

[root@master webui]# /opt/kubernetes/bin/kubectl get pod -n kube-system -o wide
NAME                                   READY   STATUS    RESTARTS   AGE   IP            NODE             NOMINATED NODE
kubernetes-dashboard-d9545b947-lcdrv   1/1     Running   0          5m    172.17.28.3   192.168.116.11   <none>

With this output the dashboard is reachable on the node port of either node, for example http://192.168.116.11:34354.