Table of Contents

- Overview
- Architecture Analysis
- config
- mount-cgroup
- apply-sysctl-overwrites
- mount-bpf-fs
- clean-cilium-state
- install-cni-binaries
- cilium-agent
- Summary
- References

Overview

This article analyzes how cilium-agent, deployed as a DaemonSet, starts up on each node.

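Architecture Analysis

The analysis below follows cilium-agent from its init containers through to the startup of the main container. First, here are the init container definitions of the cilium-agent Pod.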

initContainers:
- command:
  - cilium
  - build-config
  env:
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: config
  volumeMounts:
  - mountPath: /tmp
    name: tmp
- command:
  - sh
  - -ec
  - |
    cp /usr/bin/cilium-mount /hostbin/cilium-mount;
    nsenter --cgroup=/hostproc/1/ns/cgroup --mount=/hostproc/1/ns/mnt "${BIN_PATH}/cilium-mount" $CGROUP_ROOT;
    rm /hostbin/cilium-mount
  env:
  - name: CGROUP_ROOT
    value: /run/cilium/cgroupv2
  - name: BIN_PATH
    value: /opt/cni/bin
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: mount-cgroup
  securityContext:
    capabilities:
      add:
      - SYS_ADMIN
      - SYS_CHROOT
      - SYS_PTRACE
      drop:
      - ALL
    seLinuxOptions:
      level: s0
      type: spc_t
  volumeMounts:
  - mountPath: /hostproc
    name: hostproc
  - mountPath: /hostbin
    name: cni-path
- command:
  - sh
  - -ec
  - |
    cp /usr/bin/cilium-sysctlfix /hostbin/cilium-sysctlfix;
    nsenter --mount=/hostproc/1/ns/mnt "${BIN_PATH}/cilium-sysctlfix";
    rm /hostbin/cilium-sysctlfix
  env:
  - name: BIN_PATH
    value: /opt/cni/bin
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: apply-sysctl-overwrites
  securityContext:
    capabilities:
      add:
      - SYS_ADMIN
      - SYS_CHROOT
      - SYS_PTRACE
      drop:
      - ALL
    seLinuxOptions:
      level: s0
      type: spc_t
  volumeMounts:
  - mountPath: /hostproc
    name: hostproc
  - mountPath: /hostbin
    name: cni-path
- args:
  - mount | grep "/sys/fs/bpf type bpf" || mount -t bpf bpf /sys/fs/bpf
  command:
  - /bin/bash
  - -c
  - --
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: mount-bpf-fs
  securityContext:
    privileged: true
  volumeMounts:
  - mountPath: /sys/fs/bpf
    mountPropagation: Bidirectional
    name: bpf-maps
- command:
  - /init-container.sh
  env:
  - name: CILIUM_ALL_STATE
    valueFrom:
      configMapKeyRef:
        key: clean-cilium-state
        name: cilium-config
        optional: true
  - name: CILIUM_BPF_STATE
    valueFrom:
      configMapKeyRef:
        key: clean-cilium-bpf-state
        name: cilium-config
        optional: true
  - name: KUBERNETES_SERVICE_HOST
    value: 192.168.1.200
  - name: KUBERNETES_SERVICE_PORT
    value: "6443"
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: clean-cilium-state
  resources:
    requests:
      cpu: 100m
      memory: 100Mi
  securityContext:
    capabilities:
      add:
      - NET_ADMIN
      - SYS_MODULE
      - SYS_ADMIN
      - SYS_RESOURCE
      drop:
      - ALL
    seLinuxOptions:
      level: s0
      type: spc_t
  volumeMounts:
  - mountPath: /sys/fs/bpf
    name: bpf-maps
  - mountPath: /run/cilium/cgroupv2
    mountPropagation: HostToContainer
    name: cilium-cgroup
  - mountPath: /var/run/cilium
    name: cilium-run
- command:
  - /install-plugin.sh
  image: quay.io/cilium/cilium:v1.14.4@sha256:4981767b787c69126e190e33aee93d5a076639083c21f0e7c29596a519c64a2e
  imagePullPolicy: IfNotPresent
  name: install-cni-binaries
  resources:
    requests:
      cpu: 100m
      memory: 10Mi
  securityContext:
    capabilities:
      drop:
      - ALL
    seLinuxOptions:
      level: s0
      type: spc_t
  terminationMessagePath: /dev/termination-log
  terminationMessagePolicy: FallbackToLogsOnError
  volumeMounts:
  - mountPath: /host/opt/cni/bin
    name: cni-path

The volumes field shows that starting the Pod requires several directories (and one file) on the host.

volumes:
- emptyDir: {}
  name: tmp
- hostPath:
    path: /var/run/cilium
    type: DirectoryOrCreate
  name: cilium-run
- hostPath:
    path: /sys/fs/bpf
    type: DirectoryOrCreate
  name: bpf-maps
- hostPath:
    path: /proc
    type: Directory
  name: hostproc
- hostPath:
    path: /run/cilium/cgroupv2
    type: DirectoryOrCreate
  name: cilium-cgroup
- hostPath:
    path: /opt/cni/bin
    type: DirectoryOrCreate
  name: cni-path
- hostPath:
    path: /etc/cni/net.d
    type: DirectoryOrCreate
  name: etc-cni-netd
- hostPath:
    path: /lib/modules
    type: ""
  name: lib-modules
- hostPath:
    path: /run/xtables.lock
    type: FileOrCreate
  name: xtables-lock
- name: clustermesh-secrets
  projected:
    defaultMode: 256
    sources:
    - secret:
        name: cilium-clustermesh
        optional: true
    - secret:
        items:
        - key: tls.key
          path: common-etcd-client.key
        - key: tls.crt
          path: common-etcd-client.crt
        - key: ca.crt
          path: common-etcd-client-ca.crt
        name: clustermesh-apiserver-remote-cert
        optional: true
- configMap:
    defaultMode: 420
    name: cni-configuration
  name: cni-configuration
- hostPath:
    path: /proc/sys/net
    type: Directory
  name: host-proc-sys-net
- hostPath:
    path: /proc/sys/kernel
    type: Directory
  name: host-proc-sys-kernel
- name: hubble-tls
  projected:
    defaultMode: 256
    sources:
    - secret:
        items:
        - key: tls.crt
          path: server.crt
        - key: tls.key
          path: server.key
        - key: ca.crt
          path: client-ca.crt
        name: hubble-server-certs
        optional: true

Next, the definition of the cilium-agent container.

containers:
- name: cilium-agent
  image: "quay.io/cilium/cilium:v1.14.4"
  imagePullPolicy: IfNotPresent
  command:
  - cilium-agent
  args:
  - --config-dir=/tmp/cilium/config-map
  startupProbe:
    httpGet:
      host: "127.0.0.1"
      path: /healthz
      port: 9879
      scheme: HTTP
      httpHeaders:
      - name: "brief"
        value: "true"
    failureThreshold: 105
    periodSeconds: 2
    successThreshold: 1
  livenessProbe:
    httpGet:
      host: "127.0.0.1"
      path: /healthz
      port: 9879
      scheme: HTTP
      httpHeaders:
      - name: "brief"
        value: "true"
    periodSeconds: 30
    successThreshold: 1
    failureThreshold: 10
    timeoutSeconds: 5
  readinessProbe:
    httpGet:
      host: "127.0.0.1"
      path: /healthz
      port: 9879
      scheme: HTTP
      httpHeaders:
      - name: "brief"
        value: "true"
    periodSeconds: 30
    successThreshold: 1
    failureThreshold: 3
    timeoutSeconds: 5
  env:
  - name: K8S_NODE_NAME
    valueFrom:
      fieldRef:
        apiVersion: v1
        fieldPath: spec.nodeName
  - name: CILIUM_K8S_NAMESPACE
    valueFrom:
      fieldRef:
        apiVersion: v1
        fieldPath: metadata.namespace
  - name: CILIUM_CLUSTERMESH_CONFIG
    value: /var/lib/cilium/clustermesh/
  - name: KUBERNETES_SERVICE_HOST
    value: hh-k8s-noah-sc-staging001-master.api.vip.com
  lifecycle:
    postStart:
      exec:
        command:
        - "bash"
        - "-c"
        - |
          set -o errexit
          set -o pipefail
          set -o nounset

          # When running in AWS ENI mode, it's likely that 'aws-node' has
          # had a chance to install SNAT iptables rules. These can result
          # in dropped traffic, so we should attempt to remove them.
          # We do it using a 'postStart' hook since this may need to run
          # for nodes which might have already been init'ed but may still
          # have dangling rules. This is safe because there are no
          # dependencies on anything that is part of the startup script
          # itself, and can be safely run multiple times per node (e.g. in
          # case of a restart).
          if [[ "$(iptables-save | grep -c 'AWS-SNAT-CHAIN|AWS-CONNMARK-CHAIN')" != "0" ]];
          then
              echo 'Deleting iptables rules created by the AWS CNI VPC plugin'
              iptables-save | grep -v 'AWS-SNAT-CHAIN|AWS-CONNMARK-CHAIN' | iptables-restore
          fi
          echo 'Done!'
    preStop:
      exec:
        command:
        - /cni-uninstall.sh
  securityContext:
    seLinuxOptions:
      level: s0
      type: spc_t
    capabilities:
      add:
      - CHOWN
      - KILL
      - NET_ADMIN
      - NET_RAW
      - IPC_LOCK
      - SYS_MODULE
      - SYS_ADMIN
      - SYS_RESOURCE
      - DAC_OVERRIDE
      - FOWNER
      - SETGID
      - SETUID
      drop:
      - ALL
  terminationMessagePolicy: FallbackToLogsOnError
  volumeMounts:
  # Unprivileged containers need to mount /proc/sys/net from the host
  # to have write access
  - mountPath: /host/proc/sys/net
    name: host-proc-sys-net
  # /proc/sys/net
  # Unprivileged containers need to mount /proc/sys/kernel from the host
  # to have write access
  - mountPath: /host/proc/sys/kernel
    name: host-proc-sys-kernel
  # /host/proc/sys/kernel
  - name: bpf-maps
    mountPath: /sys/fs/bpf
    # /sys/fs/bpf
    # Unprivileged containers can't set mount propagation to bidirectional
    # in this case we will mount the bpf fs from an init container that
    # is privileged and set the mount propagation from host to container
    # in Cilium.
    mountPropagation: HostToContainer
  - name: cilium-run
    mountPath: /var/run/cilium
  - name: etc-cni-netd
    mountPath: /host/etc/cni/net.d
  - name: clustermesh-secrets
    mountPath: /var/lib/cilium/clustermesh
    readOnly: true
  - name: cni-configuration
    mountPath: /tmp/cni-configuration
    readOnly: true
  # Needed to be able to load kernel modules
  - name: lib-modules
    mountPath: /lib/modules
    # /lib/modules
    readOnly: true
  - name: xtables-lock
    mountPath: /run/xtables.lock
  - name: hubble-tls
    mountPath: /var/lib/cilium/tls/hubble
    readOnly: true
  - name: tmp
    mountPath: /tmp

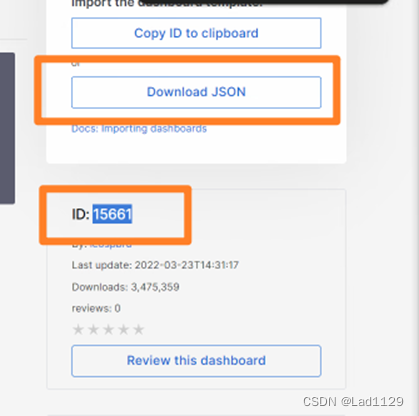

From these definitions, the startup flow can be summarized in the following flowchart.

config

The config container runs the command below. Here, cilium is the command-line client tool that ships with Cilium.

cilium build-config

Here is the help output of the build-config subcommand.

# cilium build-config -h

Resolve all of the configuration sources that apply to this node

Usage:
  cilium build-config --node-name $K8S_NODE_NAME [flags]

Flags:
      --allow-config-keys strings        List of configuration keys that are allowed to be overridden (e.g. set from not the first source. Takes precedence over deny-config-keys
      --deny-config-keys strings         List of configuration keys that are not allowed to be overridden (e.g. set from not the first source. If allow-config-keys is set, this field is ignored
      --dest string                      Destination directory to write the fully-resolved configuration. (default "/tmp/cilium/config-map")
      --enable-k8s                       Enable the k8s clientset (default true)
      --enable-k8s-api-discovery         Enable discovery of Kubernetes API groups and resources with the discovery API
  -h, --help                             help for build-config
      --k8s-api-server string            Kubernetes API server URL
      --k8s-client-burst int             Burst value allowed for the K8s client
      --k8s-client-qps float32           Queries per second limit for the K8s client
      --k8s-heartbeat-timeout duration   Configures the timeout for api-server heartbeat, set to 0 to disable (default 30s)
      --k8s-kubeconfig-path string       Absolute path of the kubernetes kubeconfig file
      --node-name string                 The name of the node on which we are running. Also set via K8S_NODE_NAME environment. (default "master")
      --source strings                   Ordered list of configuration sources. Supported values: config-map:<namespace>/name - a ConfigMap with <name>, optionally in namespace <namespace>. cilium-node-config:<NAMESPACE> - any CiliumNodeConfigs in namespace <NAMESPACE>. node:<NODENAME> - Annotations on the node. Namespace and nodename are optional (default [config-map:cilium-config,cilium-node-config:kube-system])

Global Flags:
      --config string   Config file (default is $HOME/.cilium.yaml)
  -D, --debug           Enable debug messages
  -H, --host string     URI to server-side API

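The help output shows that the default destination is /tmp/cilium/config-map. This is the same directory that cilium-agent later reads through --config-dir=/tmp/cilium/config-map. The container's logs are below.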

# k logs cilium-hms7c -c config

Running

level=info msg=Invoked duration=1.338661ms function="cmd.glob..func36 (build-config.go:32)" subsys=hive

level=info msg=Starting subsys=hive

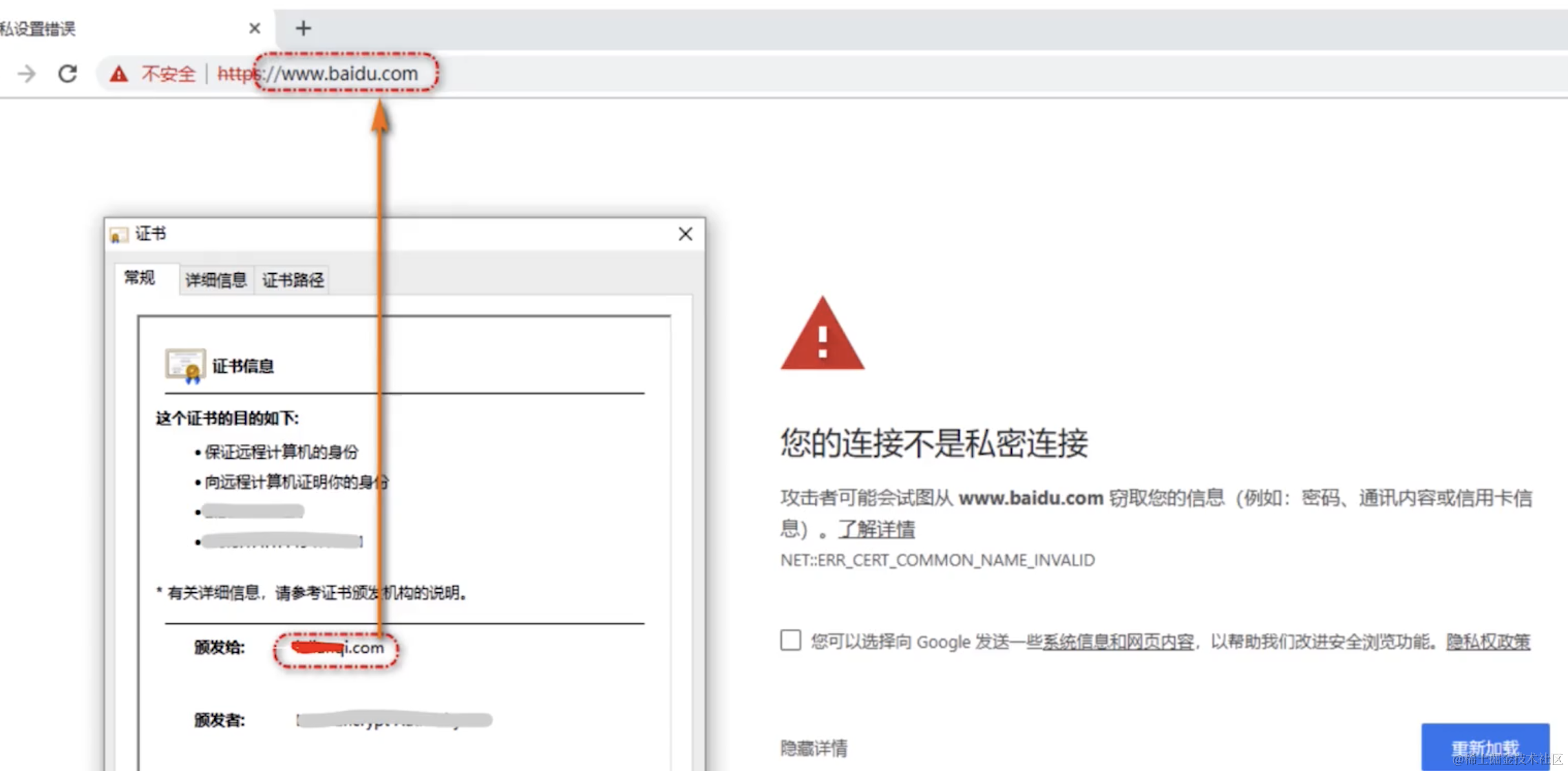

level=info msg="Establishing connection to apiserver" host="https://192.168.1.200:6443" subsys=k8s-client

level=info msg="Connected to apiserver" subsys=k8s-client

level=info msg="Start hook executed" duration=17.191079ms function="client.(*compositeClientset).onStart" subsys=hive

level=info msg="Reading configuration from config-map:kube-system/cilium-config" configSource="config-map:kube-system/cilium-config" subsys=option-resolver

level=info msg="Got 106 config pairs from source" configSource="config-map:kube-system/cilium-config" subsys=option-resolver

level=info msg="Reading configuration from cilium-node-config:kube-system/" configSource="cilium-node-config:kube-system/" subsys=option-resolver

level=info msg="Got 0 config pairs from source" configSource="cilium-node-config:kube-system/" subsys=option-resolver

level=info msg="Start hook executed" duration=13.721533ms function="cmd.(*buildConfig).onStart" subsys=hive

level=info msg=Stopping subsys=hive

level=info msg="Stop hook executed" duration="20.722µs" function="client.(*compositeClientset).onStop" subsys=hive

mount-cgroup

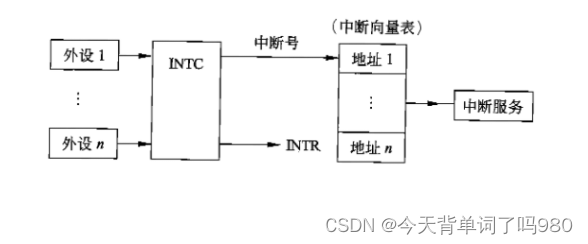

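Cilium uses cgroup v2 to attach BPF programs to cgroups. This underpins network policy enforcement, monitoring and other key features. For example, Cilium's socket-level load balancing is implemented with BPF programs attached to the cgroup v2 hierarchy.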

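# Environment variables of the mount-cgroup container:
# - name: CGROUP_ROOT  value: /run/cilium/cgroupv2
# - name: BIN_PATH     value: /opt/cni/bin

cp /usr/bin/cilium-mount /hostbin/cilium-mount;

# Inside the container (variables expanded):
nsenter --cgroup=/hostproc/1/ns/cgroup --mount=/hostproc/1/ns/mnt "/opt/cni/bin/cilium-mount" /run/cilium/cgroupv2;

# Host-side equivalent (/proc instead of /hostproc; the binary path is resolved in the host mount namespace):
nsenter --cgroup=/proc/1/ns/cgroup --mount=/proc/1/ns/mnt "/opt/cni/bin/cilium-mount" /run/cilium/cgroupv2;

rm /hostbin/cilium-mount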

Here is its usage message.

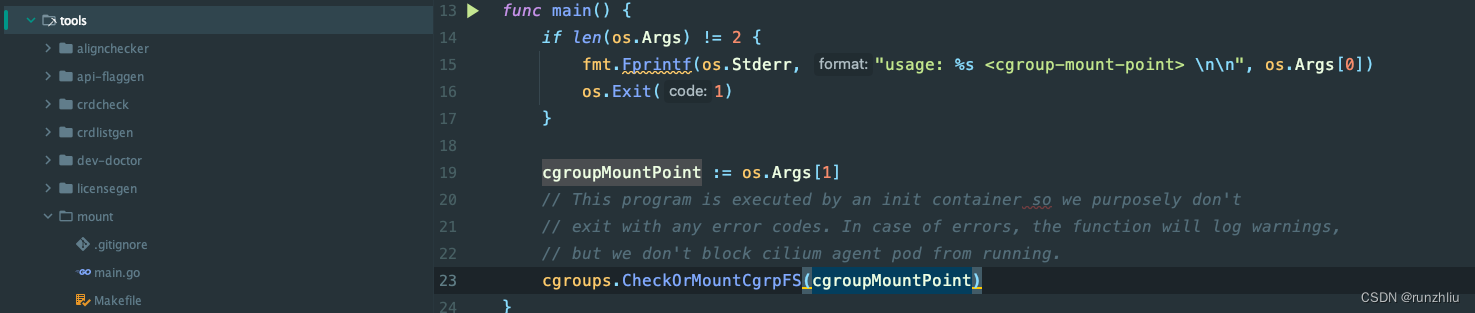

# /usr/bin/cilium-mount

usage: /usr/bin/cilium-mount <cgroup-mount-point>

It is in fact a very simple Go program from the tools package. Most of the init containers do their configuration work through small Go programs like this one.

Cilium will automatically mount cgroup v2 filesystem required to attach BPF cgroup programs by default at the path /run/cilium/cgroupv2.

The log output:

# k logs cilium-hms7c -c mount-cgroup

level=info msg="Mounted cgroupv2 filesystem at /run/cilium/cgroupv2" subsys=cgroups
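Before mounting, a helper like cilium-mount has to find out whether a cgroup2 filesystem is already mounted at the target, which makes the step safe to repeat. The sketch below performs that check by parsing /proc/mounts-formatted text (device, mount point, fstype, options, dump, pass). mountedFSType is hypothetical and is not how cilium-mount is implemented. It also ignores the octal escaping (\040 for a space) that /proc/mounts applies to unusual paths. The sample lines are illustrative.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// mountedFSType returns the filesystem type mounted at mountPoint according to
// /proc/mounts-formatted content. If several mounts stack on the same point,
// the last one wins, since that is the one that is visible.
func mountedFSType(procMounts, mountPoint string) (string, bool) {
	fstype, found := "", false
	sc := bufio.NewScanner(strings.NewReader(procMounts))
	for sc.Scan() {
		f := strings.Fields(sc.Text())
		if len(f) >= 3 && f[1] == mountPoint {
			fstype, found = f[2], true
		}
	}
	return fstype, found
}

func main() {
	sample := "cgroup2 /sys/fs/cgroup cgroup2 rw,nosuid,nodev,noexec,relatime 0 0\n" +
		"none /run/cilium/cgroupv2 cgroup2 rw,relatime 0 0"
	t, ok := mountedFSType(sample, "/run/cilium/cgroupv2")
	fmt.Println(t, ok) // cgroup2 true: nothing left to mount
}
```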

apply-sysctl-overwrites

# - name: BIN_PATH  value: /opt/cni/bin

# Inside the container:
cp /usr/bin/cilium-sysctlfix /hostbin/cilium-sysctlfix;

# Host-side equivalent:
cp /usr/bin/cilium-sysctlfix /opt/cni/bin/cilium-sysctlfix;

# Inside the container:
nsenter --mount=/hostproc/1/ns/mnt "/opt/cni/bin/cilium-sysctlfix";

# Host-side equivalent:
nsenter --mount=/proc/1/ns/mnt "/opt/cni/bin/cilium-sysctlfix";

rm /hostbin/cilium-sysctlfix

# /usr/bin/cilium-sysctlfix -h

Usage of /usr/bin/cilium-sysctlfix:
      --sysctl-conf-dir string       Path to the sysctl config directory (default "/etc/sysctl.d/")
      --sysctl-config-file string    Filename of the cilium sysctl overwrites config file (default "99-zzz-override_cilium.conf")
      --systemd-sysctl-unit string   Name of the systemd sysctl unit to reload (default "systemd-sysctl.service")

parse flags: pflag: help requested

On the host, the generated file looks like this:

# cat /etc/sysctl.d/99-zzz-override_cilium.conf

# Disable rp_filter on Cilium interfaces since it may cause mangled packets to be dropped

-net.ipv4.conf.lxc*.rp_filter = 0

-net.ipv4.conf.cilium_*.rp_filter = 0

# The kernel uses max(conf.all, conf.{dev}) as its value, so we need to set .all. to 0 as well.

# Otherwise it will overrule the device specific settings.

net.ipv4.conf.all.rp_filter = 0
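The comment in the file explains why the all setting is overridden as well. For rp_filter the kernel applies the larger of the all value and the per-device value (0 = off, 1 = strict, 2 = loose). The tiny Go illustration below shows that rule. effectiveRPFilter is just an illustrative name.

```go
package main

import "fmt"

// effectiveRPFilter applies the rule quoted in the sysctl file: the kernel
// uses max(conf.all.rp_filter, conf.<dev>.rp_filter).
func effectiveRPFilter(all, dev int) int {
	if all > dev {
		return all
	}
	return dev
}

func main() {
	fmt.Println(effectiveRPFilter(1, 0)) // 1: strict filtering still applies on lxc*
	fmt.Println(effectiveRPFilter(0, 0)) // 0: disabled, as the override intends
}
```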

# k logs cilium-hms7c -c apply-sysctl-overwrites

sysctl config up-to-date, nothing to do

mount-bpf-fs

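This init container mounts the BPF virtual filesystem (bpffs) at /sys/fs/bpf. Processes can then load and manage BPF programs and maps through a filesystem interface. bpffs also provides persistent storage for BPF objects: objects pinned there can be shared between processes and survive even after the process that created them exits.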

mount | grep "/sys/fs/bpf type bpf" || mount -t bpf bpf /sys/fs/bpf

The container's log:

# k logs cilium-hms7c -c mount-bpf-fs

none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)
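The || makes this step idempotent: bpffs is mounted only if mount does not already list it at /sys/fs/bpf. The volume uses mountPropagation: Bidirectional, which is only permitted in a privileged container. As a result, a mount created here propagates back to the host, and cilium-agent later sees it through its HostToContainer mount.

The Go sketch below reproduces the grep check by parsing lines in the mount output format shown above. parseMountLine and bpffsMounted are hypothetical helpers.

```go
package main

import (
	"fmt"
	"strings"
)

// mountEntry is one parsed line of `mount` output.
type mountEntry struct{ Source, Target, FSType, Options string }

// parseMountLine splits "<source> on <target> type <fstype> (<options>)".
func parseMountLine(line string) (mountEntry, bool) {
	src, rest, ok := strings.Cut(line, " on ")
	if !ok {
		return mountEntry{}, false
	}
	tgt, rest, ok := strings.Cut(rest, " type ")
	if !ok {
		return mountEntry{}, false
	}
	fstype, opts, _ := strings.Cut(rest, " ")
	opts = strings.TrimSuffix(strings.TrimPrefix(opts, "("), ")")
	return mountEntry{src, tgt, fstype, opts}, true
}

// bpffsMounted is the Go counterpart of: mount | grep "/sys/fs/bpf type bpf"
func bpffsMounted(mountOutput string) bool {
	for _, l := range strings.Split(mountOutput, "\n") {
		if e, ok := parseMountLine(l); ok && e.Target == "/sys/fs/bpf" && e.FSType == "bpf" {
			return true
		}
	}
	return false
}

func main() {
	line := "none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)"
	e, _ := parseMountLine(line)
	fmt.Println(e.FSType, e.Options) // bpf rw,nosuid,nodev,noexec,relatime,mode=700
	fmt.Println(bpffsMounted(line))  // true: no need to run mount -t bpf
}
```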

clean-cilium-state

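This container cleans up configuration that Cilium left on its network interfaces, when requested through the clean-cilium-state or clean-cilium-bpf-state keys of cilium-config. The goal is to keep leftover network configuration from interfering with Cilium when cilium-agent starts or restarts.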

/init-container.sh

The script's contents, viewed from inside the container:

# cat /init-container.sh

#!/bin/sh

# Check for CLEAN_CILIUM_BPF_STATE and CLEAN_CILIUM_STATE
# is there for backwards compatibility as we've used those
# two env vars in our old kubernetes yaml files.

if [ "${CILIUM_BPF_STATE}" = "true" ] \
   || [ "${CLEAN_CILIUM_BPF_STATE}" = "true" ]; then
	cilium cleanup -f --bpf-state
fi

if [ "${CILIUM_ALL_STATE}" = "true" ] \
   || [ "${CLEAN_CILIUM_STATE}" = "true" ]; then
	cilium cleanup -f --all-state
fi

Here is what cilium cleanup can do:

# cilium cleanup -h

Clean up CNI configurations, CNI binaries, attached BPF programs,
bpffs, tc filters, routes, links and named network namespaces.

Running this command might be necessary to get the worker node back into
working condition after uninstalling the Cilium agent.

Usage:
  cilium cleanup [flags]

Flags:
      --all-state   Remove all cilium state
      --bpf-state   Remove BPF state
  -f, --force       Skip confirmation
  -h, --help        help for cleanup

Global Flags:
      --config string   Config file (default is $HOME/.cilium.yaml)
  -D, --debug           Enable debug messages
  -H, --host string     URI to server-side API

Any additional cleanup work would be added to this subcommand. The following method builds the list of what will be removed:

func (c ciliumCleanup) whatWillBeRemoved() []string {
	toBeRemoved := []string{}

	// BPF attachments: tc filters and XDP programs on network links.
	if len(c.tcFilters) > 0 {
		section := "tc filters\n"
		for linkName, f := range c.tcFilters {
			section += fmt.Sprintf("%s %v\n", linkName, f)
		}
		toBeRemoved = append(toBeRemoved, section)
	}
	if len(c.xdpLinks) > 0 {
		section := "xdp programs\n"
		for _, l := range c.xdpLinks {
			section += fmt.Sprintf("%s: xdp/prog id %v\n", l.Attrs().Name, l.Attrs().Xdp.ProgId)
		}
		toBeRemoved = append(toBeRemoved, section)
	}

	// When only BPF state is being cleaned, stop here.
	if c.bpfOnly {
		return toBeRemoved
	}

	// Routes, links and named network namespaces.
	if len(c.routes) > 0 {
		section := "routes\n"
		for _, v := range c.routes {
			section += fmt.Sprintf("%v\n", v)
		}
		toBeRemoved = append(toBeRemoved, section)
	}
	if len(c.links) > 0 {
		section := "links\n"
		for _, v := range c.links {
			section += fmt.Sprintf("%v\n", v)
		}
		toBeRemoved = append(toBeRemoved, section)
	}
	if len(c.netNSs) > 0 {
		section := "network namespaces\n"
		for _, n := range c.netNSs {
			section += fmt.Sprintf("%s\n", n)
		}
		toBeRemoved = append(toBeRemoved, section)
	}

	// Always listed when the cleanup is not BPF-only.
	toBeRemoved = append(toBeRemoved, fmt.Sprintf("socketlb bpf programs at %s",
		defaults.DefaultCgroupRoot))
	toBeRemoved = append(toBeRemoved, fmt.Sprintf("mounted cgroupv2 at %s",
		defaults.DefaultCgroupRoot))
	toBeRemoved = append(toBeRemoved, fmt.Sprintf("library code in %s",
		defaults.LibraryPath))
	toBeRemoved = append(toBeRemoved, fmt.Sprintf("endpoint state in %s",
		defaults.RuntimePath))
	toBeRemoved = append(toBeRemoved, fmt.Sprintf("CNI configuration at %s, %s, %s, %s, %s",
		cniConfigV1, cniConfigV2, cniConfigV3, cniConfigV4, cniConfigV5))
	return toBeRemoved
}

This container produces no log output. It would help to log something here as well, to confirm whether any cleanup actually took place.

# k logs cilium-hms7c -c clean-cilium-state
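Whether anything runs at all depends on two optional keys in the cilium-config ConfigMap, exposed to the script as CILIUM_ALL_STATE and CILIUM_BPF_STATE. The Go sketch below restates the decision logic of /init-container.sh. cleanupCommands is hypothetical. If neither key is set to "true", no cleanup command runs, which would be one plausible explanation for the empty log above.

```go
package main

import "fmt"

// cleanupCommands returns the cilium cleanup invocations that
// /init-container.sh would run for the given environment. The CLEAN_*
// variables are the legacy names kept for backwards compatibility.
func cleanupCommands(env map[string]string) [][]string {
	var cmds [][]string
	if env["CILIUM_BPF_STATE"] == "true" || env["CLEAN_CILIUM_BPF_STATE"] == "true" {
		cmds = append(cmds, []string{"cilium", "cleanup", "-f", "--bpf-state"})
	}
	if env["CILIUM_ALL_STATE"] == "true" || env["CLEAN_CILIUM_STATE"] == "true" {
		cmds = append(cmds, []string{"cilium", "cleanup", "-f", "--all-state"})
	}
	return cmds
}

func main() {
	fmt.Println(len(cleanupCommands(map[string]string{})))                       // 0
	fmt.Println(cleanupCommands(map[string]string{"CILIUM_BPF_STATE": "true"})) // [[cilium cleanup -f --bpf-state]]
}
```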

install-cni-binaries

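This step will look familiar: most CNI plugins install their binaries on the host this way. Here the cilium-cni binary is copied into the host's /opt/cni/bin directory (mounted at /host/opt/cni/bin in the container). /etc/cni/net.d holds CNI configuration files, not binaries.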

/install-plugin.sh

# cat /install-plugin.sh

#!/bin/bash

# Copy the cilium-cni plugin binary to the host

set -e

HOST_PREFIX=${HOST_PREFIX:-/host}

BIN_NAME=cilium-cni
CNI_DIR=${CNI_DIR:-${HOST_PREFIX}/opt/cni}
CILIUM_CNI_CONF=${CILIUM_CNI_CONF:-${HOST_PREFIX}/etc/cni/net.d/${CNI_CONF_NAME}}

if [ ! -d "${CNI_DIR}/bin" ]; then
	mkdir -p "${CNI_DIR}/bin"
fi

# Install the CNI loopback driver if not installed already
if [ ! -f "${CNI_DIR}/bin/loopback" ]; then
	echo "Installing loopback driver..."

	# Don't fail hard if this fails as it is usually not required
	cp /cni/loopback "${CNI_DIR}/bin/" || true
fi

echo "Installing ${BIN_NAME} to ${CNI_DIR}/bin/ ..."

# Copy the binary, then do a rename
# so the move is atomic
rm -f "${CNI_DIR}/bin/${BIN_NAME}.new" || true
cp "/opt/cni/bin/${BIN_NAME}" "${CNI_DIR}/bin/.${BIN_NAME}.new"
mv "${CNI_DIR}/bin/.${BIN_NAME}.new" "${CNI_DIR}/bin/${BIN_NAME}"

echo "wrote ${CNI_DIR}/bin/${BIN_NAME}"

# k logs cilium-hms7c -c install-cni-binaries

Installing cilium-cni to /host/opt/cni/bin/ ...

wrote /host/opt/cni/bin/cilium-cni

cilium-agent

The startup of cilium-agent itself will be covered in detail in a later article.

Summary

Based on the analysis above, the following script lists what has to run on a node of the Kubernetes cluster before the cilium-agent binary is started.

# config
# Inside the container:
cilium build-config
# Directly on the host, pointing at a kubeconfig:
cilium build-config --k8s-kubeconfig-path /root/.kube/config --source config-map:kube-system/cilium-config

mkdir -p /hostbin /var/run/cilium /sys/fs/bpf /run/cilium/cgroupv2 /opt/cni/bin /etc/cni/net.d /proc/sys/net /proc/sys/kernel

# mount-cgroup
# /hostbin is just a temporary directory
# /hostproc is equivalent to /proc
mkdir -p /hostbin
mkdir -p /hostproc
mountpoint -q /hostproc || mount --bind /proc /hostproc
mountpoint -q /hostbin || mount --bind /opt/cni/bin /hostbin
export BIN_PATH=/opt/cni/bin
export CGROUP_ROOT=/run/cilium/cgroupv2
cp /usr/bin/cilium-mount /hostbin/cilium-mount;
nsenter --cgroup=/hostproc/1/ns/cgroup --mount=/hostproc/1/ns/mnt "${BIN_PATH}/cilium-mount" $CGROUP_ROOT;
rm /hostbin/cilium-mount

# apply-sysctl-overwrites
# (the mountpoint checks avoid stacking a second bind mount if the previous step already ran)
mkdir -p /hostbin
mkdir -p /hostproc
mountpoint -q /hostproc || mount --bind /proc /hostproc
mountpoint -q /hostbin || mount --bind /opt/cni/bin /hostbin
export BIN_PATH=/opt/cni/bin
cp /usr/bin/cilium-sysctlfix /hostbin/cilium-sysctlfix;
nsenter --mount=/hostproc/1/ns/mnt "${BIN_PATH}/cilium-sysctlfix";
rm /hostbin/cilium-sysctlfix

# mount-bpf-fs
mount | grep "/sys/fs/bpf type bpf" || mount -t bpf bpf /sys/fs/bpf

# clean-cilium-state
/init-container.sh

# install-cni-binaries
/install-plugin.sh