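Contents

1 Image Feature Matching

2 Extracting GPS Position Information from Images

2.1 Writing GPS Information into an Image

2.2 Reading Images with GPS Data

3 Comparison of SIFT/AKAZE/AKAZE_MLDB Feature Extraction

4 GMS Filter

5 Converting a Spherical Panorama into 6 Perspective Views

6 Reconstructing a Point Cloud from a Set of Photos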

1 Image Feature Matching

#include "openMVG/features/feature.hpp"                            // feature types
#include "openMVG/features/sift/SIFT_Anatomy_Image_Describer.hpp"  // SIFT image describer
#include "openMVG/features/svg_features.hpp"                       // SVG visualization of features
#include "openMVG/image/image_io.hpp"                              // image input/output
#include "openMVG/image/image_concat.hpp"                          // image concatenation
#include "openMVG/matching/regions_matcher.hpp"                    // region (descriptor) matching
#include "openMVG/matching/svg_matches.hpp"                        // SVG visualization of matches
#include "third_party/stlplus3/filesystemSimplified/file_system.hpp" // file-system helpers

#include <cstdlib>
#include <iostream>
#include <map>
#include <memory>
#include <string>
#include <vector>

using namespace openMVG;            // shorten openMVG:: prefixes
using namespace openMVG::image;     // image sub-namespace
using namespace openMVG::matching;  // matching sub-namespace

int main() {
  // Paths of the two input images, built relative to the source directory with stlplus
  const std::string jpg_filenameL = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_0.png";
  const std::string jpg_filenameR = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_1.png";

  Image<unsigned char> imageL, imageR;  // two grayscale images
  if (!ReadImage(jpg_filenameL.c_str(), &imageL) ||  // read the left image
      !ReadImage(jpg_filenameR.c_str(), &imageR)) {  // read the right image
    std::cerr << "Cannot read the input images." << std::endl;
    return EXIT_FAILURE;
  }

  // Detect the feature regions of both images
  using namespace openMVG::features;
  std::unique_ptr<Image_describer> image_describer(new SIFT_Anatomy_Image_describer); // SIFT describer
  std::map<IndexT, std::unique_ptr<features::Regions>> regions_perImage; // regions of each image
  image_describer->Describe(imageL, regions_perImage[0]); // describe the left image
  image_describer->Describe(imageR, regions_perImage[1]); // describe the right image

  // Positions of the detected features
  const PointFeatures
    featsL = regions_perImage.at(0)->GetRegionsPositions(),  // left image
    featsR = regions_perImage.at(1)->GetRegionsPositions();  // right image

  // Save the two images stacked vertically
  {
    Image<unsigned char> concat;      // concatenated image
    ConcatV(imageL, imageR, concat);  // vertical concatenation
    const std::string out_filename = "00_images.jpg";
    WriteImage(out_filename.c_str(), concat);
  }

  // Downcast the generic regions to SIFT regions to access the full SIFT features
  const SIFT_Regions* regionsL = dynamic_cast<SIFT_Regions*>(regions_perImage.at(0).get());
  const SIFT_Regions* regionsR = dynamic_cast<SIFT_Regions*>(regions_perImage.at(1).get());
  if (!regionsL || !regionsR) {
    std::cerr << "Unexpected region type." << std::endl;
    return EXIT_FAILURE;
  }

  // Draw the features on both images
  {
    Features2SVG(
      jpg_filenameL, { imageL.Width(), imageL.Height() }, regionsL->Features(),  // left image
      jpg_filenameR, { imageR.Width(), imageR.Height() }, regionsR->Features(),  // right image
      "01_features.svg");  // output SVG file
  }

  // Matching: find the nearest neighbor of each feature, filtered with the distance ratio
  std::vector<IndMatch> vec_PutativeMatches; // putative matches
  {
    // L2 distance with a distance ratio threshold of 0.8
    matching::DistanceRatioMatch(
      0.8, matching::BRUTE_FORCE_L2,
      *regions_perImage.at(0).get(),
      *regions_perImage.at(1).get(),
      vec_PutativeMatches);  // resulting matches

    // Draw the correspondences kept by the nearest-neighbor ratio filter
    const bool bVertical = true; // vertical layout in the SVG
    Matches2SVG(
      jpg_filenameL, { imageL.Width(), imageL.Height() }, regionsL->GetRegionsPositions(),
      jpg_filenameR, { imageR.Width(), imageR.Height() }, regionsR->GetRegionsPositions(),
      vec_PutativeMatches,  // matches
      "02_Matches.svg",     // output SVG file
      bVertical);
  }

  // Print some statistics
  std::cout << featsL.size() << " Features on image A" << std::endl
            << featsR.size() << " Features on image B" << std::endl
            << vec_PutativeMatches.size() << " matches after matching with Distance Ratio filter" << std::endl;
  return EXIT_SUCCESS; // the program finished successfully
}
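The distance ratio filter applied by `DistanceRatioMatch` above can be illustrated in plain C++. The following is a minimal brute-force sketch of Lowe's ratio test, assuming each descriptor is a `std::vector<float>` of equal length; it is not OpenMVG's implementation, and the names `Descriptor`, `RatioMatch`, `squaredL2` and `ratioTestMatch` are hypothetical.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical descriptor type for this sketch: one float vector per feature.
using Descriptor = std::vector<float>;

struct RatioMatch {
  std::size_t i;  // index of the feature in the left image
  std::size_t j;  // index of its nearest neighbor in the right image
};

// Squared Euclidean (L2) distance between two descriptors of equal length.
inline float squaredL2(const Descriptor& a, const Descriptor& b) {
  float sum = 0.f;
  for (std::size_t k = 0; k < a.size(); ++k) {
    const float d = a[k] - b[k];
    sum += d * d;
  }
  return sum;
}

// Brute-force nearest-neighbor matching filtered with Lowe's ratio test:
// keep (i, j) only if dist(best) < ratio * dist(second best).
// Comparing squared distances against ratio^2 is equivalent and avoids sqrt.
std::vector<RatioMatch> ratioTestMatch(const std::vector<Descriptor>& left,
                                       const std::vector<Descriptor>& right,
                                       float ratio) {
  std::vector<RatioMatch> matches;
  if (right.size() < 2) return matches;  // the test needs two neighbors
  for (std::size_t i = 0; i < left.size(); ++i) {
    float best = std::numeric_limits<float>::max();
    float second = std::numeric_limits<float>::max();
    std::size_t best_j = 0;
    for (std::size_t j = 0; j < right.size(); ++j) {
      const float d = squaredL2(left[i], right[j]);
      if (d < best) {
        second = best;
        best = d;
        best_j = j;
      } else if (d < second) {
        second = d;
      }
    }
    if (best < ratio * ratio * second) matches.push_back({i, best_j});
  }
  return matches;
}
```

A feature whose two closest candidates are almost equally close is ambiguous and gets rejected, which is why the ratio test removes many false matches on repetitive textures.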

2 Extracting GPS Position Information from Images

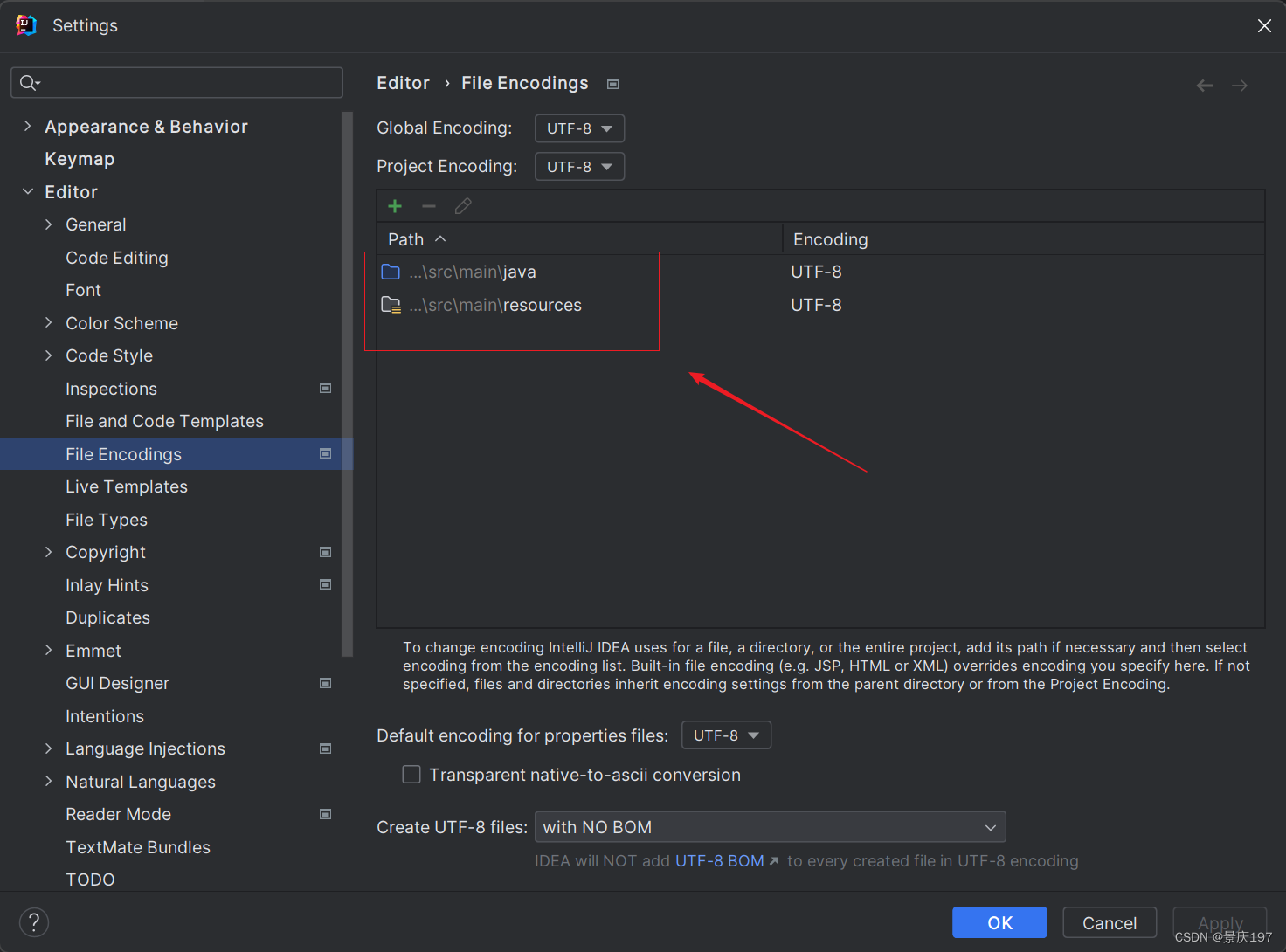

2.1 Writing GPS Information into an Image

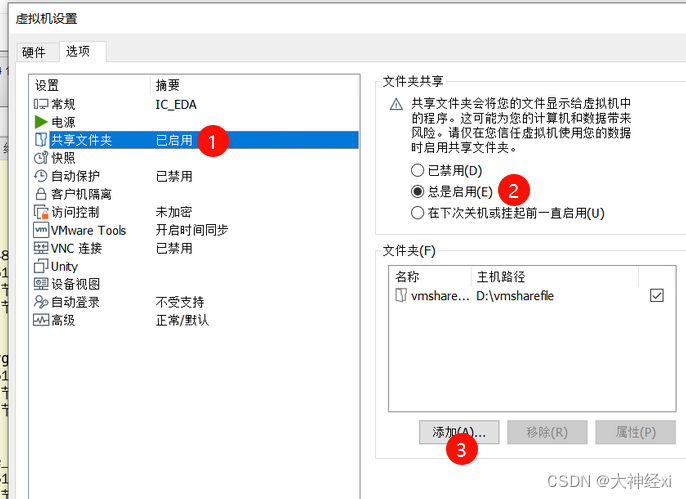

Prepare a photo carrying GPS data. The tool used here is:

ExifTool by Phil Harvey

In a command prompt (CMD) opened in the ExifTool directory, view the metadata of the dog image:

"exiftool(-k).exe" D:\CPlusProject\MVS_program\ALLTestData\GPSImage\dog.jpg

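Add GPS information (latitude, longitude and altitude) to the image. `GPSAltitudeRef=0` means that the altitude is above sea level: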

"exiftool(-k).exe" -GPSLatitude=34.052234 -GPSLatitudeRef=N -GPSLongitude=-118.243685 -GPSLongitudeRef=W -GPSAltitude=10 -GPSAltitudeRef=0 D:\CPlusProject\MVS_program\ALLTestData\GPSImage\dog.jpg
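EXIF stores GPSLatitude and GPSLongitude as unsigned degrees, minutes and seconds; the hemisphere lives in the separate GPSLatitudeRef/GPSLongitudeRef tags, which is why the command sets `W` together with the longitude. A minimal sketch of that conversion, assuming plain doubles instead of the EXIF rational type (the `Dms` struct and the helper names are hypothetical):

```cpp
#include <cmath>

// Hypothetical representation for this sketch (EXIF actually stores three RATIONALs).
struct Dms {
  double degrees;
  double minutes;
  double seconds;
  char ref;  // 'N'/'S' for latitude, 'E'/'W' for longitude
};

// Signed decimal degrees -> unsigned degrees/minutes/seconds plus hemisphere reference.
Dms toDms(double decimal, bool is_latitude) {
  Dms out{};
  out.ref = is_latitude ? (decimal < 0 ? 'S' : 'N') : (decimal < 0 ? 'W' : 'E');
  const double a = std::fabs(decimal);
  out.degrees = std::floor(a);
  const double m = (a - out.degrees) * 60.0;
  out.minutes = std::floor(m);
  out.seconds = (m - out.minutes) * 60.0;
  return out;
}

// Unsigned degrees/minutes/seconds plus reference -> signed decimal degrees (S and W negative).
double toDecimal(const Dms& d) {
  const double v = d.degrees + d.minutes / 60.0 + d.seconds / 3600.0;
  return (d.ref == 'S' || d.ref == 'W') ? -v : v;
}
```

For the values used above, -118.243685 becomes 118° 14′ 37.27″ W and 34.052234 becomes 34° 3′ 8.04″ N.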

Check that the GPS information has been written (for example, by running the first command again).

2.2 Reading Images with GPS Data

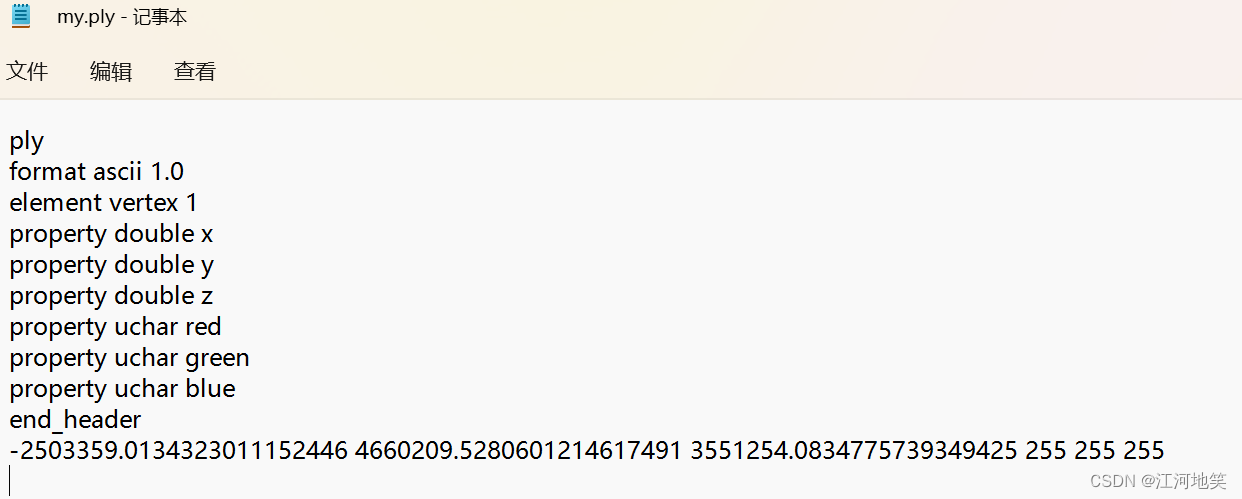

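Read the images that carry GPS data, convert the GPS positions to ECEF (Earth-Centered, Earth-Fixed) coordinates, and save them as a PLY point cloud.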

#include "openMVG/exif/exif_IO_EasyExif.hpp"  // EXIF metadata reading
#include "openMVG/geodesy/geodesy.hpp"        // geodesy helpers (lla_to_ecef)
#include "software/SfM/SfMPlyHelper.hpp"      // helper to export PLY files
#include "third_party/stlplus3/filesystemSimplified/file_system.hpp" // file-system helpers
#include "third_party/cmdLine/cmdLine.h"      // command-line parsing

#include <cstdlib>
#include <iostream>
#include <memory>
#include <string>
#include <vector>

using namespace openMVG;           // openMVG namespace
using namespace openMVG::exif;     // exif sub-namespace
using namespace openMVG::geodesy;  // geodesy sub-namespace

int main(int argc, char** argv)
{
  std::string
    sInputDirectory = "",                // input directory
    sOutputPLYFile = "GPS_POSITION.ply"; // name of the output PLY file

  CmdLine cmd; // command-line parser
  // Options for the input directory and the output file
  cmd.add(make_option('i', sInputDirectory, "input-directory"));
  cmd.add(make_option('o', sOutputPLYFile, "output-file"));

  try
  {
    if (argc == 1) throw std::string("Invalid parameter."); // no argument given
    cmd.process(argc, argv); // parse the command line
  }
  catch (const std::string& s)
  {
    // On error, print the usage and exit
    std::cout
      << "Geodesy demo:\n"
      << " Export as PLY points the parsed image EXIF GPS positions,\n"
      << " -[i|input-directory] Directory that will be parsed.\n"
      << "-- OPTIONAL PARAMETERS --\n"
      << " -[o|output-file] Output PLY file.\n"
      << std::endl;
    std::cerr << s << std::endl;
    return EXIT_FAILURE;
  }

  // Create the EXIF reader
  std::unique_ptr<Exif_IO> exifReader(new Exif_IO_EasyExif);
  if (!exifReader)
  {
    std::cerr << "Cannot instantiate the EXIF metadata reader." << std::endl;
    return EXIT_FAILURE;
  }

  std::vector<Vec3> vec_gps_xyz_position; // ECEF XYZ positions
  size_t valid_exif_count = 0;            // number of images with EXIF data

  // List all files of the input directory
  const std::vector<std::string> vec_image = stlplus::folder_files(sInputDirectory);
  for (const std::string& it_string : vec_image)
  {
    const std::string sImageFilename = stlplus::create_filespec(sInputDirectory, it_string);

    // Try to parse the EXIF metadata
    exifReader->open(sImageFilename);

    // Skip the image if it has no EXIF data
    if (!exifReader->doesHaveExifInfo())
      continue;

    ++valid_exif_count;

    // Check whether GPS coordinates are present
    double latitude, longitude, altitude;
    if (exifReader->GPSLatitude(&latitude) &&
        exifReader->GPSLongitude(&longitude) &&
        exifReader->GPSAltitude(&altitude))
    {
      // Convert the GPS coordinates to ECEF XYZ and store them
      vec_gps_xyz_position.push_back(lla_to_ecef(latitude, longitude, altitude));
    }
  }

  // Report
  std::cout << std::endl
    << "Report:\n"
    << " #file listed: " << vec_image.size() << "\n"
    << " #valid exif data: " << valid_exif_count << "\n"
    << " #valid exif gps data: " << vec_gps_xyz_position.size() << std::endl;

  // Stop if no valid GPS data was found
  if (vec_gps_xyz_position.empty())
  {
    std::cerr << "No valid GPS data found for the image list" << std::endl;
    return EXIT_FAILURE;
  }

  // Export the XYZ positions to a PLY file
  if (plyHelper::exportToPly(vec_gps_xyz_position, sOutputPLYFile))
  {
    std::cout << sOutputPLYFile << " -> successfully exported on disk." << std::endl;
    return EXIT_SUCCESS; // export succeeded
  }
  return EXIT_FAILURE; // export failed
}
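`lla_to_ecef` turns WGS84 geodetic coordinates into Earth-Centered, Earth-Fixed Cartesian coordinates. The standard closed-form conversion is sketched below, assuming latitude and longitude in degrees and altitude in meters above the ellipsoid; `Ecef` and `llaToEcef` are hypothetical names, and OpenMVG's own implementation may be written differently.

```cpp
#include <cmath>

struct Ecef { double x, y, z; };  // meters

// WGS84 geodetic coordinates (lat, lon in degrees, alt in meters) -> ECEF (meters).
Ecef llaToEcef(double lat_deg, double lon_deg, double alt_m) {
  const double kPi = 3.14159265358979323846;
  const double a = 6378137.0;            // WGS84 semi-major axis
  const double f = 1.0 / 298.257223563;  // WGS84 flattening
  const double e2 = f * (2.0 - f);       // first eccentricity squared
  const double lat = lat_deg * kPi / 180.0;
  const double lon = lon_deg * kPi / 180.0;
  const double s = std::sin(lat);
  const double N = a / std::sqrt(1.0 - e2 * s * s);  // prime vertical radius of curvature
  return {(N + alt_m) * std::cos(lat) * std::cos(lon),
          (N + alt_m) * std::cos(lat) * std::sin(lon),
          (N * (1.0 - e2) + alt_m) * s};
}
```

On the equator at longitude 0, the result is the semi-major axis on the X axis. At the north pole, Z equals the semi-minor axis (about 6356752.314 m). Because ECEF is metric, the exported PLY points can be viewed directly as 3D camera positions.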

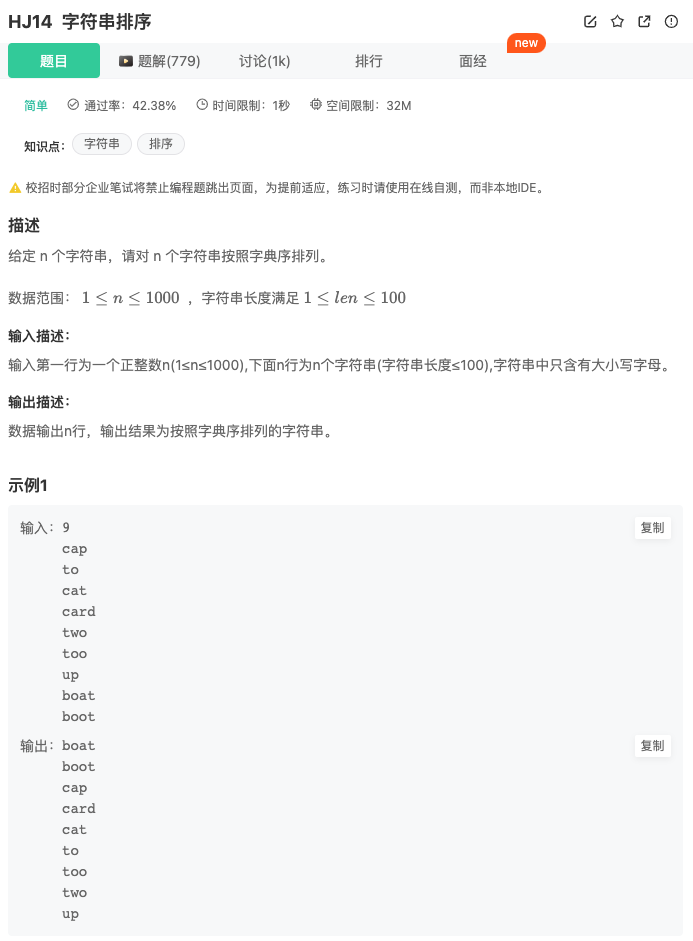

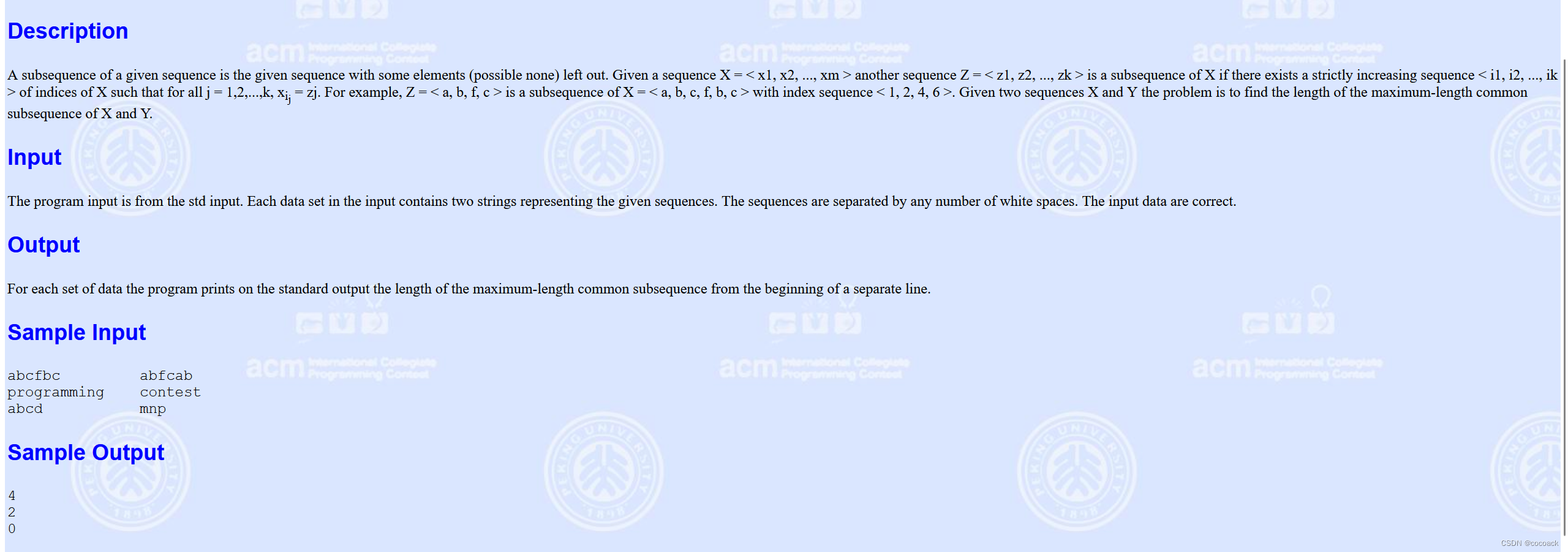

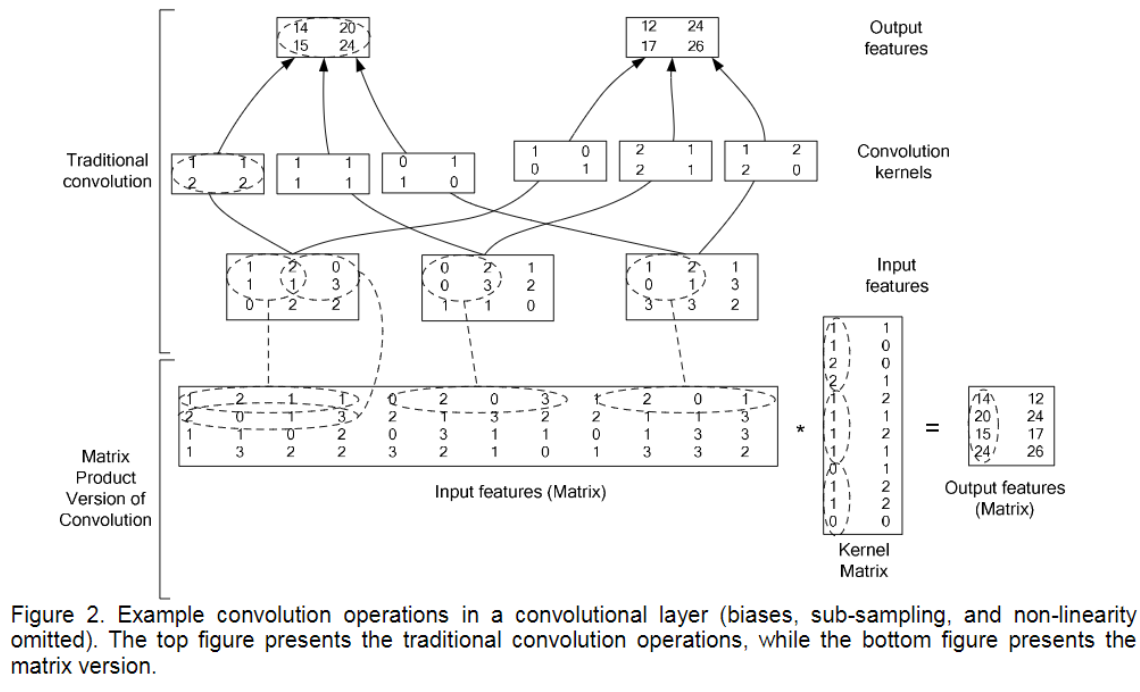

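3 Comparison of SIFT/AKAZE/AKAZE_MLDB Feature Extraction

The differences between the three are widely discussed online, but the best choice depends on your data, so try them all. The code was explained in detail in a previous blog post.

1. SIFT (Scale-Invariant Feature Transform)

- Key features: SIFT detects and describes local features in an image. It is invariant to rotation, scale and brightness changes, and reasonably stable under viewpoint changes, affine transformations and noise.

- Applications: widely used for image matching, object recognition, 3D reconstruction, tracking, etc.

- Pros: robust; its 128-dimensional floating-point descriptor is distinctive and informative.

- Cons: computationally expensive and slow, so it may not suit real-time applications.

2. AKAZE (Accelerated-KAZE)

- Key features: AKAZE is an accelerated version of KAZE. It builds a nonlinear scale space with Fast Explicit Diffusion (FED), which makes feature detection and description more efficient. In OpenMVG, the `AKAZE` option pairs AKAZE keypoints with the floating-point MSURF descriptor (`AKAZE_MSURF` in the code below).

- Applications: scenarios that need fast feature extraction, such as real-time video processing and image recognition in mobile apps.

- Pros: faster than KAZE, while keeping scale and rotation invariance.

- Cons: although faster than SIFT, its descriptors may be slightly less distinctive and robust.

3. AKAZE_MLDB (Modified-Local Difference Binary)

- Key features: AKAZE_MLDB uses the same AKAZE keypoints but describes them with a binary string (the M-LDB descriptor), which further improves computation and matching speed.

- Applications: especially suited to applications that need high speed as well as fairly accurate matching.

- Pros: fast and memory-efficient, suitable for resource-constrained devices.

- Cons: compared with SIFT, its descriptors may be less discriminative.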

Summary

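- Speed and efficiency (in general): AKAZE_MLDB > AKAZE > SIFT, since the binary M-LDB descriptor is cheaper to compute and to match than the floating-point ones.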

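- Descriptor distinctiveness and robustness (in general): SIFT > AKAZE > AKAZE_MLDB.

- Use cases: SIFT suits complex applications that demand robust and accurate descriptors; AKAZE and AKAZE_MLDB suit applications that prioritize speed and efficiency.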
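The descriptor type also determines which distance is used for matching. SIFT and AKAZE (MSURF) descriptors are float vectors compared with the L2 distance. AKAZE_MLDB descriptors are bit strings compared with the Hamming distance (the number of differing bits), which is why binary regions should be matched with `BRUTE_FORCE_HAMMING` rather than `BRUTE_FORCE_L2`. Below is a minimal sketch of the Hamming distance, assuming descriptors packed into bytes (the function name is hypothetical):

```cpp
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hamming distance between two binary descriptors of equal length,
// each stored as packed bytes: XOR the bytes and count the bits that differ.
std::size_t hammingDistance(const std::vector<std::uint8_t>& a,
                            const std::vector<std::uint8_t>& b) {
  std::size_t dist = 0;
  for (std::size_t k = 0; k < a.size() && k < b.size(); ++k) {
    dist += std::bitset<8>(static_cast<unsigned>(a[k] ^ b[k])).count();
  }
  return dist;
}
```

An XOR plus a bit count per byte is much cheaper than a floating-point L2 distance, which is where most of the matching speed-up of binary descriptors comes from.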

// This file is part of OpenMVG, an Open Multiple View Geometry C++ library.

// Copyright (c) 2015 Pierre MOULON.

// This Source Code Form is subject to the terms of the Mozilla Public
// License, v. 2.0. If a copy of the MPL was not distributed with this
// file, You can obtain one at http://mozilla.org/MPL/2.0/.

#include "openMVG/image/image_io.hpp"
#include "openMVG/image/image_concat.hpp"
#include "openMVG/features/akaze/image_describer_akaze.hpp"
#include "openMVG/features/sift/SIFT_Anatomy_Image_Describer.hpp"
#include "openMVG/features/svg_features.hpp"
#include "openMVG/matching/regions_matcher.hpp"
#include "openMVG/matching/svg_matches.hpp"

#include "third_party/stlplus3/filesystemSimplified/file_system.hpp"
#include "third_party/cmdLine/cmdLine.h"

#include <cassert>
#include <cstdlib>
#include <iostream>
#include <map>
#include <memory>
#include <string>

using namespace openMVG;
using namespace openMVG::image;

int main(int argc, char **argv) {
  // Add options to choose the desired Image_describer
  std::string sImage_describer_type = "SIFT";

  CmdLine cmd;
  cmd.add( make_option('t', sImage_describer_type, "type") );

  try {
    if (argc == 1) throw std::string("Invalid command line parameter.");
    cmd.process(argc, argv);
  } catch (const std::string& s) {
    std::cerr << "Usage: " << argv[0] << '\n'
      << "\n[Optional]\n"
      << "[-t|--type\n"
      << "  (choose an image_describer interface):\n"
      << "   SIFT: SIFT keypoint & descriptor,\n"
      << "   AKAZE: AKAZE keypoint & floating point descriptor,\n"
      << "   AKAZE_MLDB: AKAZE keypoint & binary descriptor]"
      << std::endl;
    std::cerr << s << std::endl;
    return EXIT_FAILURE;
  }

  const std::string jpg_filenameL = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_0.png";
  const std::string jpg_filenameR = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_1.png";

  Image<unsigned char> imageL, imageR;
  ReadImage(jpg_filenameL.c_str(), &imageL);
  ReadImage(jpg_filenameR.c_str(), &imageR);
  assert(imageL.data() && imageR.data());

  // Call Keypoint extractor
  using namespace openMVG::features;
  std::unique_ptr<Image_describer> image_describer;
  if (sImage_describer_type == "SIFT")
    image_describer.reset(new SIFT_Anatomy_Image_describer(SIFT_Anatomy_Image_describer::Params()));
  else if (sImage_describer_type == "AKAZE")
    image_describer = AKAZE_Image_describer::create(AKAZE_Image_describer::Params(AKAZE::Params(), AKAZE_MSURF));
  else if (sImage_describer_type == "AKAZE_MLDB")
    image_describer = AKAZE_Image_describer::create(AKAZE_Image_describer::Params(AKAZE::Params(), AKAZE_MLDB));

  if (!image_describer)
  {
    std::cerr << "Invalid Image_describer type" << std::endl;
    return EXIT_FAILURE;
  }

  //--
  // Detect regions thanks to the image_describer
  //--
  std::map<IndexT, std::unique_ptr<features::Regions>> regions_perImage;
  image_describer->Describe(imageL, regions_perImage[0]);
  image_describer->Describe(imageR, regions_perImage[1]);

  //--
  // Display used images & Features
  //--
  {
    //- Show images side by side
    Image<unsigned char> concat;
    ConcatH(imageL, imageR, concat);
    const std::string out_filename = "00_images.jpg";
    WriteImage(out_filename.c_str(), concat);
  }

  {
    //- Draw features on the images (side by side)
    Features2SVG(
      jpg_filenameL, {imageL.Width(), imageL.Height()}, regions_perImage.at(0)->GetRegionsPositions(),
      jpg_filenameR, {imageR.Width(), imageR.Height()}, regions_perImage.at(1)->GetRegionsPositions(),
      "01_features.svg");
  }

  //--
  // Compute corresponding points
  //--
  //-- Perform matching -> find Nearest neighbor, filtered with Distance ratio
  //   (binary AKAZE_MLDB descriptors must be compared with the Hamming distance)
  matching::IndMatches vec_PutativeMatches;
  matching::DistanceRatioMatch(
    0.8,
    (sImage_describer_type == "AKAZE_MLDB") ? matching::BRUTE_FORCE_HAMMING
                                            : matching::BRUTE_FORCE_L2,
    *regions_perImage.at(0).get(),
    *regions_perImage.at(1).get(),
    vec_PutativeMatches);

  // Draw correspondences after Nearest Neighbor ratio filter
  {
    const bool bVertical = true;
    Matches2SVG(
      jpg_filenameL, {imageL.Width(), imageL.Height()}, regions_perImage.at(0)->GetRegionsPositions(),
      jpg_filenameR, {imageR.Width(), imageR.Height()}, regions_perImage.at(1)->GetRegionsPositions(),
      vec_PutativeMatches,
      "02_Matches.svg",
      bVertical);
  }

  // Display some statistics
  std::cout
    << regions_perImage.at(0)->RegionCount() << " #Features on image A" << std::endl
    << regions_perImage.at(1)->RegionCount() << " #Features on image B" << std::endl
    << vec_PutativeMatches.size() << " #matches with Distance Ratio filter" << std::endl;

  return EXIT_SUCCESS;
}

SIFT

AKAZE

AKAZE_MLDB

4 GMS Filter

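- It converts the motion-smoothness constraint into a statistic for rejecting false matches; experiments show that it handles fairly difficult scenes.

- It introduces an efficient grid-based score estimator, which makes real-time feature matching possible.

- It filters matches better than Lowe's ratio test; this has been verified on traditional features such as SIFT and SURF, as well as on CNN features such as LIFT.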

Further reading: GMS: A Fast and Robust Feature Match Filtering Algorithm Based on Motion Statistics - Zhihu (zhihu.com)

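GMS works best with many features: the more features are detected before filtering, the better the filtering result. This is why the code below switches the describer to `features::ULTRA_PRESET`.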
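The grid-based score can be illustrated with a simplified, single-scale sketch of the GMS idea. This is not OpenMVG's `GMSFilter`, and it omits the scale and rotation variants. Both images are divided into G×G cells, and each left cell is paired with the right cell that receives most of its matches. A pair is accepted when the matches of the 3×3 neighboring cell pairs exceed τ = α·√n. In this sketch, n is the mean number of matches per left cell in the neighborhood, and α plays the role of `kGmsThreshold = 6` in the code below. `PointMatch` and `gmsInlierMask` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical match type for this sketch: pixel positions in the left and right images.
struct PointMatch { float xl, yl, xr, yr; };

// Simplified single-scale GMS: returns one inlier flag per match.
// grid: number of cells per image side; alpha: threshold factor (tau = alpha * sqrt(n)).
std::vector<bool> gmsInlierMask(const std::vector<PointMatch>& matches,
                                int width_l, int height_l, int width_r, int height_r,
                                int grid = 20, double alpha = 6.0) {
  const int cells = grid * grid;
  // Index of the grid cell containing pixel (x, y) in a w x h image.
  auto cellOf = [grid](float x, float y, int w, int h) {
    const int cx = std::max(0, std::min(grid - 1, static_cast<int>(x * grid / w)));
    const int cy = std::max(0, std::min(grid - 1, static_cast<int>(y * grid / h)));
    return cy * grid + cx;
  };

  // count[i * cells + j]: number of matches from left cell i to right cell j.
  std::vector<int> cell_l(matches.size()), cell_r(matches.size());
  std::vector<int> count(cells * cells, 0), left_count(cells, 0);
  for (std::size_t k = 0; k < matches.size(); ++k) {
    cell_l[k] = cellOf(matches[k].xl, matches[k].yl, width_l, height_l);
    cell_r[k] = cellOf(matches[k].xr, matches[k].yr, width_r, height_r);
    ++count[cell_l[k] * cells + cell_r[k]];
    ++left_count[cell_l[k]];
  }

  // For each left cell, pick the right cell receiving most of its matches, then score the
  // pair with the matches of the 3x3 neighboring cell pairs (same offset in both images).
  std::vector<int> accepted(cells, -1);  // accepted right cell per left cell, -1 if none
  for (int i = 0; i < cells; ++i) {
    if (left_count[i] == 0) continue;
    int best = 0;
    for (int j = 1; j < cells; ++j)
      if (count[i * cells + j] > count[i * cells + best]) best = j;
    int score = 0, support = 0;
    for (int dy = -1; dy <= 1; ++dy) {
      for (int dx = -1; dx <= 1; ++dx) {
        const int lx = i % grid + dx, ly = i / grid + dy;
        const int rx = best % grid + dx, ry = best / grid + dy;
        if (lx < 0 || ly < 0 || lx >= grid || ly >= grid) continue;
        support += left_count[ly * grid + lx];
        if (rx < 0 || ry < 0 || rx >= grid || ry >= grid) continue;
        score += count[(ly * grid + lx) * cells + (ry * grid + rx)];
      }
    }
    const double tau = alpha * std::sqrt(support / 9.0);  // n = mean matches per cell
    if (score > tau) accepted[i] = best;
  }

  // A match is an inlier if it belongs to an accepted cell pair.
  std::vector<bool> inliers(matches.size());
  for (std::size_t k = 0; k < matches.size(); ++k)
    inliers[k] = accepted[cell_l[k]] == cell_r[k];
  return inliers;
}
```

True matches move coherently, so their neighborhoods collect many supporting matches, while a random false match finds almost no support. This also explains why GMS benefits from a large number of features.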

// This file is part of OpenMVG, an Open Multiple View Geometry C++ library.

// Copyright (c) 2017 Pierre MOULON.

// This Source Code Form is subject to the terms of the Mozilla Public
// License, v. 2.0. If a copy of the MPL was not distributed with this
// file, You can obtain one at http://mozilla.org/MPL/2.0/.

#include "openMVG/image/image_io.hpp"
#include "openMVG/image/image_concat.hpp"
#include "openMVG/features/akaze/image_describer_akaze.hpp"
#include "openMVG/features/sift/SIFT_Anatomy_Image_Describer.hpp"
#include "openMVG/features/svg_features.hpp"
#include "openMVG/matching/regions_matcher.hpp"
#include "openMVG/matching/svg_matches.hpp"
#include "openMVG/robust_estimation/gms_filter.hpp"

#include "third_party/cmdLine/cmdLine.h"
#include "third_party/stlplus3/filesystemSimplified/file_system.hpp"

#include <cassert>
#include <cstdlib>
#include <iostream>
#include <map>
#include <memory>
#include <string>
#include <vector>

using namespace openMVG;
using namespace openMVG::image;
using namespace openMVG::robust;

int main(int argc, char **argv) {
  // Add options to choose the desired Image_describer
  std::string sImage_describer_type = "SIFT";

  CmdLine cmd;
  cmd.add( make_option('t', sImage_describer_type, "type") );
  cmd.add( make_switch('d', "distance_ratio"));

  try {
    if (argc == 1) throw std::string("Invalid command line parameter.");
    cmd.process(argc, argv);
  } catch (const std::string& s) {
    std::cerr << "Usage: " << argv[0] << '\n'
      << "\n[Optional]\n"
      << "[-t|--type\n"
      << "  (choose an image_describer interface):\n"
      << "   SIFT: SIFT keypoint & descriptor,\n"
      << "   AKAZE: AKAZE keypoint & floating point descriptor,\n"
      << "   AKAZE_MLDB: AKAZE keypoint & binary descriptor]\n"
      << "[-d|distance_ratio] Use distance ratio filter before GMS, else 1-1 matching is used."
      << std::endl;
    std::cerr << s << std::endl;
    return EXIT_FAILURE;
  }

  const std::string jpg_filenameL = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_0.png";
  const std::string jpg_filenameR = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/StanfordMobileVisualSearch/Ace_1.png";

  Image<unsigned char> imageL, imageR;
  ReadImage(jpg_filenameL.c_str(), &imageL);
  ReadImage(jpg_filenameR.c_str(), &imageR);
  assert(imageL.data() && imageR.data());

  // Call Keypoint extractor
  using namespace openMVG::features;
  std::unique_ptr<Image_describer> image_describer;
  if (sImage_describer_type == "SIFT")
    image_describer.reset(new SIFT_Anatomy_Image_describer(SIFT_Anatomy_Image_describer::Params()));
  else if (sImage_describer_type == "AKAZE")
    image_describer = AKAZE_Image_describer::create(AKAZE_Image_describer::Params(AKAZE::Params(), AKAZE_MSURF));
  else if (sImage_describer_type == "AKAZE_MLDB")
    image_describer = AKAZE_Image_describer::create(AKAZE_Image_describer::Params(AKAZE::Params(), AKAZE_MLDB));

  if (!image_describer)
  {
    std::cerr << "Invalid Image_describer type" << std::endl;
    return EXIT_FAILURE;
  }

  // GMS requires a lot of features
  image_describer->Set_configuration_preset(features::ULTRA_PRESET);

  //--
  // Detect regions thanks to the image_describer
  //--
  std::map<IndexT, std::unique_ptr<features::Regions> > regions_perImage;
  image_describer->Describe(imageL, regions_perImage[0]);
  image_describer->Describe(imageR, regions_perImage[1]);

  //--
  // Display used images & Features
  //--
  {
    //- Show images side by side
    Image<unsigned char> concat;
    ConcatH(imageL, imageR, concat);
    const std::string out_filename = "00_images.jpg";
    WriteImage(out_filename.c_str(), concat);
  }

  {
    //- Draw features on the images (side by side)
    Features2SVG(
      jpg_filenameL, {imageL.Width(), imageL.Height()}, regions_perImage.at(0)->GetRegionsPositions(),
      jpg_filenameR, {imageR.Width(), imageR.Height()}, regions_perImage.at(1)->GetRegionsPositions(),
      "01_features.svg");
  }

  //--
  // Compute corresponding points
  //--
  //-- Perform matching -> find Nearest neighbor (optionally filtered with the Distance ratio)
  matching::IndMatches vec_PutativeMatches;
  const bool distance_ratio_matching = cmd.used('d');
  if (distance_ratio_matching)
  {
    const float kDistanceRatio = 0.8f;
    matching::DistanceRatioMatch(
      kDistanceRatio,
      (sImage_describer_type == "AKAZE_MLDB") ? matching::BRUTE_FORCE_HAMMING
                                              : matching::BRUTE_FORCE_L2,
      *regions_perImage.at(0).get(),
      *regions_perImage.at(1).get(),
      vec_PutativeMatches);
  }
  else
  {
    matching::Match(
      (sImage_describer_type == "AKAZE_MLDB") ? matching::BRUTE_FORCE_HAMMING
                                              : matching::BRUTE_FORCE_L2,
      *regions_perImage.at(0).get(),
      *regions_perImage.at(1).get(),
      vec_PutativeMatches);
  }

  // Draw the putative photometric correspondences
  {
    const bool bVertical = true;
    Matches2SVG(
      jpg_filenameL, {imageL.Width(), imageL.Height()}, regions_perImage.at(0)->GetRegionsPositions(),
      jpg_filenameR, {imageR.Width(), imageR.Height()}, regions_perImage.at(1)->GetRegionsPositions(),
      vec_PutativeMatches,
      "02_Matches.svg",
      bVertical);
  }

  // Display some statistics
  std::cout << vec_PutativeMatches.size() << " #matches Found" << std::endl;

  // Apply the GMS filter
  {
    std::vector<Eigen::Vector2f> vec_points_left;
    {
      const auto & regions_pos = regions_perImage.at(0)->GetRegionsPositions();
      vec_points_left.reserve(regions_pos.size());
      for (const auto & it : regions_pos)
        vec_points_left.push_back({it.x(), it.y()});
    }
    std::vector<Eigen::Vector2f> vec_points_right;
    {
      const auto & regions_pos = regions_perImage.at(1)->GetRegionsPositions();
      vec_points_right.reserve(regions_pos.size());
      for (const auto & it : regions_pos)
        vec_points_right.push_back({it.x(), it.y()});
    }

    const int kGmsThreshold = 6;
    robust::GMSFilter gms(
      vec_points_left, {imageL.Width(), imageL.Height()},
      vec_points_right, {imageR.Width(), imageR.Height()},
      vec_PutativeMatches,
      kGmsThreshold);
    const bool with_scale_invariance = true;
    const bool with_rotation_invariance = true;
    std::vector<bool> inlier_flags;
    const int nb_inliers = gms.GetInlierMask(inlier_flags,
                                             with_scale_invariance,
                                             with_rotation_invariance);

    std::cout
      << vec_points_left.size() << " #Features on image A" << std::endl
      << vec_points_right.size() << " #Features on image B" << std::endl
      << nb_inliers << " #matches kept by the GMS Filter" << std::endl;

    matching::IndMatches vec_gms_matches;
    for (int i = 0; i < static_cast<int>(inlier_flags.size()); ++i)
    {
      if (inlier_flags[i])
        vec_gms_matches.push_back(vec_PutativeMatches[i]);
    }
    // Draw the correspondences kept by the GMSFilter
    {
      const bool bVertical = true;
      Matches2SVG(
        jpg_filenameL, {imageL.Width(), imageL.Height()}, regions_perImage.at(0)->GetRegionsPositions(),
        jpg_filenameR, {imageR.Width(), imageR.Height()}, regions_perImage.at(1)->GetRegionsPositions(),
        vec_gms_matches,
        "03_GMSMatches.svg",
        bVertical);
    }
  }
  return EXIT_SUCCESS;
}

5 Converting a Spherical Panorama into 6 Perspective Views

#include "openMVG/image/image_io.hpp"                 // image input/output
#include "openMVG/spherical/cubic_image_sampler.hpp"  // spherical-to-cubic sampling
#include "third_party/cmdLine/cmdLine.h"              // command-line parsing (third party)
#include "third_party/stlplus3/filesystemSimplified/file_system.hpp" // file-system helpers (third party)

#include <cstdlib>
#include <iostream>
#include <string>  // strings, std::to_string
#include <vector>

// Convert a spherical panorama into 6 perspective views (a cube map)
int main(int argc, char** argv)
{
  CmdLine cmd; // command-line parser
  std::string
    s_input_image,   // path of the input panorama
    s_output_image;  // output directory
  // Required parameters
  cmd.add(make_option('i', s_input_image, "input_image"));   // input image option
  cmd.add(make_option('o', s_output_image, "output_image")); // output directory option

  try {
    if (argc == 1) throw std::string("Invalid command line parameter."); // no argument given
    cmd.process(argc, argv); // parse the command line
  }
  catch (const std::string& s) {
    // Print the usage and exit with an error
    std::cerr << "Usage: " << argv[0] << '\n'
      << "[-i|--input_image] the path to the spherical panorama\n"
      << "[-o|--output_image] the export directory path \n"
      << std::endl;
    std::cerr << s << std::endl;
    return EXIT_FAILURE;
  }

  if (s_input_image.empty() || s_output_image.empty())
  {
    // Both the input and the output paths are required
    std::cerr << "input_image and output_image options must not be empty" << std::endl;
    return EXIT_FAILURE;
  }

  using namespace openMVG;

  image::Image<image::RGBColor> spherical_image; // the spherical panorama
  if (!ReadImage(s_input_image.c_str(), &spherical_image)) // read the input panorama
  {
    std::cerr << "Cannot read the input panoramic image: " << s_input_image << std::endl;
    return EXIT_FAILURE;
  }

  // Size of each cube face: simply a quarter of the panorama width
  const double cubic_image_size = spherical_image.Width() / 4;
  // Intrinsics of the pinhole camera used for every cube face
  const openMVG::cameras::Pinhole_Intrinsic pinhole_camera =
    spherical::ComputeCubicCameraIntrinsics(cubic_image_size);

  std::vector<image::Image<image::RGBColor>> cube_images(6); // the six cube faces
  spherical::SphericalToCubic(
    spherical_image,
    pinhole_camera,
    cube_images,
    image::Sampler2d<image::SamplerNearest>()); // nearest-neighbor sampling

  // Write the six faces (cube_0.png ... cube_5.png) into the output directory
  if (!stlplus::folder_exists(s_output_image) && !stlplus::folder_create(s_output_image))
  {
    std::cerr << "Cannot create the output directory: " << s_output_image << std::endl;
    return EXIT_FAILURE;
  }
  for (size_t i = 0; i < cube_images.size(); ++i)
  {
    const std::string face_filename =
      stlplus::create_filespec(s_output_image, "cube_" + std::to_string(i), "png");
    if (!WriteImage(face_filename.c_str(), cube_images[i]))
      return EXIT_FAILURE; // writing failed
  }
  return EXIT_SUCCESS;
}
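Each cube face is a pinhole view with a 90° field of view. Its focal length in pixels is therefore half the face size: f = (size/2) / tan(45°) = size/2. Conceptually, every face pixel defines a ray, the ray is converted to longitude/latitude, and the matching pixel of the equirectangular panorama is sampled. The sketch below shows that mapping for one face. The face orientation (looking along +Z, with x to the right and y down) and the names `PanoPixel` and `faceToEquirect` are assumptions for illustration and need not match OpenMVG's face order.

```cpp
#include <cmath>

struct PanoPixel { double u, v; };  // pixel position in the equirectangular panorama

// Map pixel (x, y) of a square cube face of size `face_size`, assumed to look along +Z
// (x to the right, y down), to a position in an equirectangular panorama of size W x H.
PanoPixel faceToEquirect(double x, double y, double face_size, double W, double H) {
  const double kPi = 3.14159265358979323846;
  const double f = face_size / 2.0;  // 90 degree field of view => focal = size / 2
  const double c = face_size / 2.0;  // principal point at the face center
  // Ray direction of the pixel in face-camera coordinates.
  const double dx = (x - c) / f, dy = (y - c) / f, dz = 1.0;
  const double lon = std::atan2(dx, dz);                             // in (-pi, pi]
  const double lat = std::atan2(-dy, std::sqrt(dx * dx + dz * dz));  // up is positive
  // Longitude -> column, latitude -> row (row 0 is the north pole).
  return {(lon / (2.0 * kPi) + 0.5) * W, (0.5 - lat / kPi) * H};
}
```

For a 2048×1024 panorama, `Width() / 4` gives 512-pixel faces. The face center then samples the panorama center (1024, 512), and the middle of the face's right edge samples 45° of longitude away, at column 1280.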

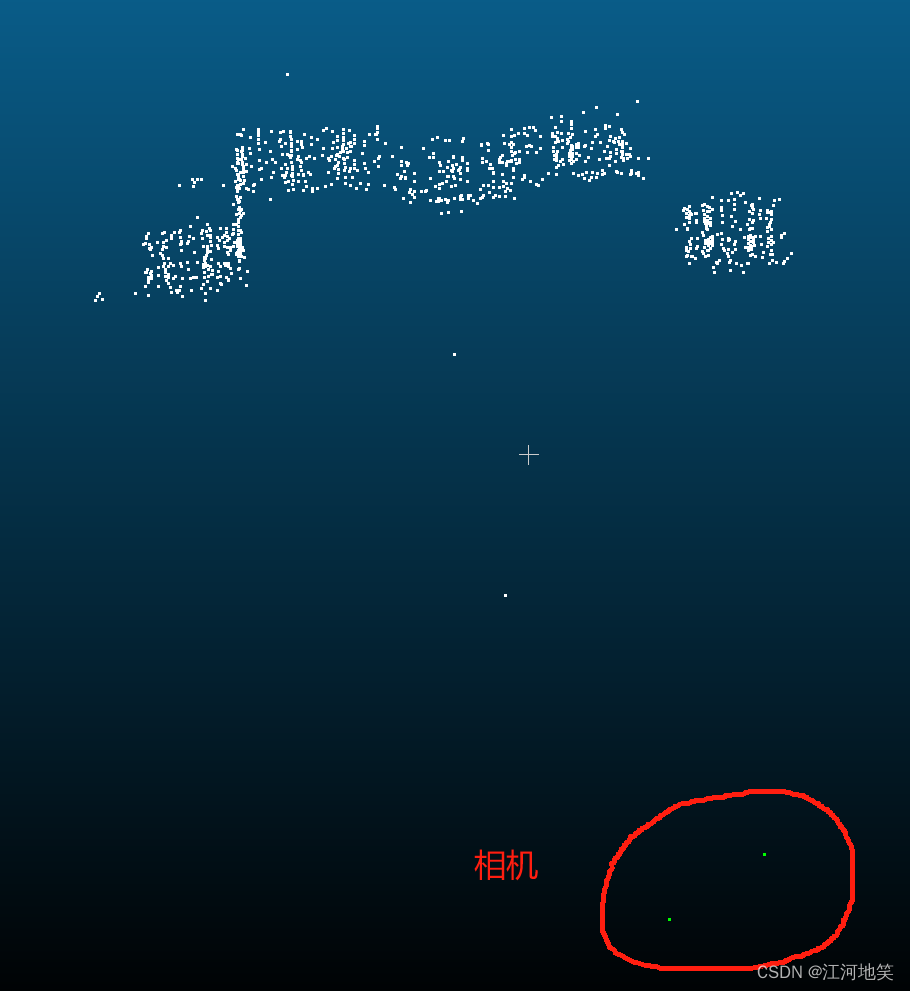

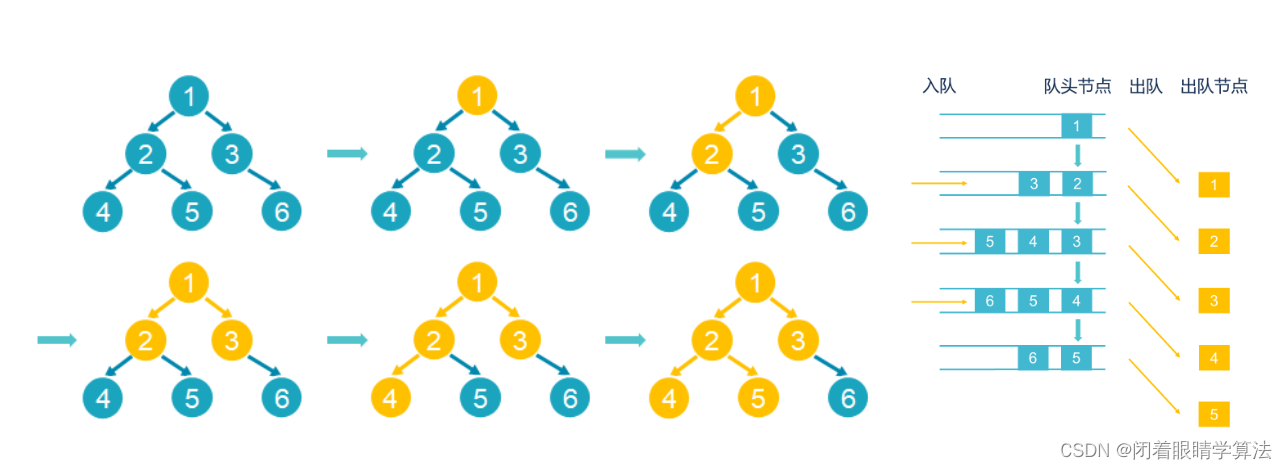

6 Reconstructing a Point Cloud from a Set of Photos

Further reading: In image processing, what do outlier and inlier refer to? (CSDN blog)
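In this two-view setting, an inlier is a match that agrees with the estimated geometry, and an outlier is one that does not. Let x1 and x2 be normalized camera coordinates (pixels multiplied by K⁻¹), and let the relative pose be X2 = R·X1 + t. A correct match then satisfies the epipolar constraint x2ᵀ E x1 = 0, with E = [t]×R. The sketch below builds E and labels matches using the algebraic residual and a hand-picked threshold, for simplicity. The code below instead relies on OpenMVG's AC-RANSAC, which estimates E and its threshold adaptively. The type aliases and function names are hypothetical.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3d = std::array<double, 3>;
using Mat3d = std::array<std::array<double, 3>, 3>;

// E = [t]x R, for a second camera with X2 = R * X1 + t.
Mat3d essentialFromPose(const Mat3d& R, const Vec3d& t) {
  const Mat3d tx = {{{0, -t[2], t[1]}, {t[2], 0, -t[0]}, {-t[1], t[0], 0}}};
  Mat3d E{};  // zero-initialized
  for (int r = 0; r < 3; ++r)
    for (int c = 0; c < 3; ++c)
      for (int k = 0; k < 3; ++k) E[r][c] += tx[r][k] * R[k][c];
  return E;
}

// Algebraic epipolar residual |x2^T E x1| for normalized homogeneous points (x, y, 1).
double epipolarResidual(const Mat3d& E, const Vec3d& x1, const Vec3d& x2) {
  double v = 0;
  for (int r = 0; r < 3; ++r)
    for (int c = 0; c < 3; ++c) v += x2[r] * E[r][c] * x1[c];
  return std::fabs(v);
}

// One inlier flag per match: residual below the (hand-picked) threshold.
std::vector<bool> classifyMatches(const Mat3d& E, const std::vector<Vec3d>& x1,
                                  const std::vector<Vec3d>& x2, double threshold) {
  std::vector<bool> inlier(x1.size());
  for (std::size_t k = 0; k < x1.size(); ++k)
    inlier[k] = epipolarResidual(E, x1[k], x2[k]) < threshold;
  return inlier;
}
```

Only the inliers are used for triangulation; outliers would create 3D points that are far from the real surface.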

Reconstructing a point cloud from two images

Camera intrinsics: the 3×3 matrix K is read from the `K.txt` file in the image directory.
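K maps camera coordinates to pixels. For a point (X, Y, Z) in the camera frame, u = fx·X/Z + cx and v = fy·Y/Z + cy, where K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]. The code below builds `Pinhole_Intrinsic` from K(0,0) (focal length) and K(0,2), K(1,2) (principal point), i.e. it assumes fx = fy. Here is a minimal sketch of the projection and of the reprojection residual (observed pixel minus projected pixel), with hypothetical names:

```cpp
#include <cmath>

struct Pixel { double u, v; };

// Hypothetical pinhole model with a single focal length (fx = fy = focal).
struct Pinhole {
  double focal, cx, cy;

  // Project a point given in camera coordinates (Z > 0) to pixel coordinates.
  Pixel project(double X, double Y, double Z) const {
    return {focal * X / Z + cx, focal * Y / Z + cy};
  }

  // Reprojection residual: observed pixel minus projected pixel.
  Pixel residual(double X, double Y, double Z, const Pixel& observed) const {
    const Pixel p = project(X, Y, Z);
    return {observed.u - p.u, observed.v - p.v};
  }
};
```

The triangulation step later computes exactly this kind of 2D residual in both cameras to judge the quality of each reconstructed point.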

#include "openMVG/cameras/Camera_Pinhole.hpp"                     // pinhole camera model
#include "openMVG/features/feature.hpp"                           // feature points
#include "openMVG/features/sift/SIFT_Anatomy_Image_Describer.hpp" // SIFT image describer
#include "openMVG/features/svg_features.hpp"                      // SVG export of features
#include "openMVG/geometry/pose3.hpp"                             // 3D poses
#include "openMVG/image/image_io.hpp"                             // image input/output
#include "openMVG/image/image_concat.hpp"                         // image concatenation
#include "openMVG/matching/indMatchDecoratorXY.hpp"               // match decorator (deduplication)
#include "openMVG/matching/regions_matcher.hpp"                   // region matching
#include "openMVG/matching/svg_matches.hpp"                       // SVG export of matches
#include "openMVG/multiview/triangulation.hpp"                    // multi-view triangulation
#include "openMVG/numeric/eigen_alias_definition.hpp"             // Eigen type aliases
#include "openMVG/sfm/pipelines/sfm_robust_model_estimation.hpp"  // robust model estimation
#include "third_party/stlplus3/filesystemSimplified/file_system.hpp" // simplified file-system helpers

#include <cmath>     // std::abs
#include <cstdlib>
#include <fstream>   // std::ifstream, std::ofstream
#include <iostream>  // standard input/output streams
#include <map>
#include <memory>
#include <string>    // strings
#include <utility>   // std::pair
#include <vector>

using namespace openMVG;            // OpenMVG namespace
using namespace openMVG::matching;  // matching namespace
using namespace openMVG::image;     // image namespace
using namespace openMVG::cameras;   // camera namespace
using namespace openMVG::geometry;  // geometry namespace

/// Read the intrinsic matrix K from an ASCII file
bool readIntrinsic(const std::string& fileName, Mat3& K);

/// Export 3D points and camera positions to the PLY format
bool exportToPly(const std::vector<Vec3>& vec_points,
                 const std::vector<Vec3>& vec_camPos,
                 const std::string& sFileName);

int main() {
  // Input directory
  const std::string sInputDir = stlplus::folder_up(std::string(THIS_SOURCE_DIR))
    + "/imageData/SceauxCastle/";
  // File names of the two images
  const std::string jpg_filenameL = sInputDir + "100_7101.jpg";
  const std::string jpg_filenameR = sInputDir + "100_7102.jpg";

  // Read the two images
  Image<unsigned char> imageL, imageR;
  ReadImage(jpg_filenameL.c_str(), &imageL);
  ReadImage(jpg_filenameR.c_str(), &imageR);

  //--
  // Detect regions with the image describer
  //--
  using namespace openMVG::features;
  std::unique_ptr<Image_describer> image_describer(new SIFT_Anatomy_Image_describer); // SIFT describer
  std::map<IndexT, std::unique_ptr<features::Regions>> regions_perImage; // regions of each image
  image_describer->Describe(imageL, regions_perImage[0]); // describe the first image
  image_describer->Describe(imageR, regions_perImage[1]); // describe the second image

  // Downcast the regions to SIFT regions
  const SIFT_Regions* regionsL = dynamic_cast<SIFT_Regions*>(regions_perImage.at(0).get());
  const SIFT_Regions* regionsR = dynamic_cast<SIFT_Regions*>(regions_perImage.at(1).get());

  // Feature positions
  const PointFeatures
    featsL = regions_perImage.at(0)->GetRegionsPositions(),
    featsR = regions_perImage.at(1)->GetRegionsPositions();

  // Show the two images side by side
  {
    Image<unsigned char> concat;
    ConcatH(imageL, imageR, concat);
    const std::string out_filename = "01_concat.jpg";
    WriteImage(out_filename.c_str(), concat);
  }

  //- Draw the features on the two images (side by side)
  {
    Features2SVG(
      jpg_filenameL, { imageL.Width(), imageL.Height() }, regionsL->Features(),
      jpg_filenameR, { imageR.Width(), imageR.Height() }, regionsR->Features(),
      "02_features.svg");
  }

  std::vector<IndMatch> vec_PutativeMatches; // putative matches
  //-- Matching -> find the nearest neighbors, filtered with the distance ratio
  {
    // Find the correspondences
    matching::DistanceRatioMatch(
      0.8, matching::BRUTE_FORCE_L2,
      *regions_perImage.at(0).get(),
      *regions_perImage.at(1).get(),
      vec_PutativeMatches);

    // Remove duplicated matches
    IndMatchDecorator<float> matchDeduplicator(vec_PutativeMatches, featsL, featsR);
    matchDeduplicator.getDeduplicated(vec_PutativeMatches);

    std::cout
      << regions_perImage.at(0)->RegionCount() << " #Features on image A" << std::endl
      << regions_perImage.at(1)->RegionCount() << " #Features on image B" << std::endl
      << vec_PutativeMatches.size() << " #matches with Distance Ratio filter" << std::endl;

    // Draw the correspondences kept by the nearest-neighbor ratio filter
    const bool bVertical = true;
    Matches2SVG(
      jpg_filenameL, { imageL.Width(), imageL.Height() }, regionsL->GetRegionsPositions(),
      jpg_filenameR, { imageR.Width(), imageR.Height() }, regionsR->GetRegionsPositions(),
      vec_PutativeMatches,
      "03_Matches.svg",
      bVertical);
  }

  //-----------------------------------------------------------------------------------
  // Basic geometric filtering of the putative matches
  {
    Mat3 K; // intrinsic matrix
    // Read K from file
    if (!readIntrinsic(stlplus::create_filespec(sInputDir, "K", "txt"), K))
    {
      std::cerr << "Cannot read intrinsic parameters." << std::endl;
      return EXIT_FAILURE;
    }

    // Pinhole intrinsics of the two cameras (both use K)
    const Pinhole_Intrinsic
      camL(imageL.Width(), imageL.Height(), K(0, 0), K(0, 2), K(1, 2)),
      camR(imageR.Width(), imageR.Height(), K(0, 0), K(0, 2), K(1, 2));

    //A. Prepare the putative corresponding points
    Mat xL(2, vec_PutativeMatches.size());
    Mat xR(2, vec_PutativeMatches.size());
    for (size_t k = 0; k < vec_PutativeMatches.size(); ++k) {
      const PointFeature& imaL = featsL[vec_PutativeMatches[k].i_];
      const PointFeature& imaR = featsR[vec_PutativeMatches[k].j_];
      xL.col(k) = imaL.coords().cast<double>();
      xR.col(k) = imaR.coords().cast<double>();
    }

    //B. Compute the relative pose through a robust essential matrix estimation
    const std::pair<size_t, size_t> size_imaL(imageL.Width(), imageL.Height());
    const std::pair<size_t, size_t> size_imaR(imageR.Width(), imageR.Height());
    sfm::RelativePose_Info relativePose_info;
    if (!sfm::robustRelativePose(&camL, &camR, xL, xR, relativePose_info, size_imaL, size_imaR, 256))
    {
      std::cerr << " /!\\ Robust relative pose estimation failure." << std::endl;
      return EXIT_FAILURE;
    }

    std::cout << "\nFound an Essential matrix:\n"
      << "\tprecision: " << relativePose_info.found_residual_precision << " pixels\n"
      << "\t#inliers: " << relativePose_info.vec_inliers.size() << "\n"
      << "\t#matches: " << vec_PutativeMatches.size()
      << std::endl;

    // Show the matches validated by the essential matrix
    const bool bVertical = true;
    InlierMatches2SVG(
      jpg_filenameL, { imageL.Width(), imageL.Height() }, regionsL->GetRegionsPositions(),
      jpg_filenameR, { imageR.Width(), imageR.Height() }, regionsR->GetRegionsPositions(),
      // A match such as {i_=1761 j_=1580} means that feature #1761 of the left image
      // is matched with feature #1580 of the right image.
      vec_PutativeMatches,
      // Each element (e.g. 169) is the index of a match in vec_PutativeMatches that is an
      // inlier, i.e. a reliable match consistent with the true geometry between the two images.
      relativePose_info.vec_inliers,
      "04_ACRansacEssential.svg",
      bVertical); // true: images stacked vertically; false: side by side

    //C. Triangulate the inliers and export them as a PLY scene
    std::vector<Vec3> vec_3DPoints; // triangulated 3D points

    // Camera intrinsics and poses
    const Pinhole_Intrinsic intrinsic0(imageL.Width(), imageL.Height(), K(0, 0), K(0, 2), K(1, 2));
    const Pinhole_Intrinsic intrinsic1(imageR.Width(), imageR.Height(), K(0, 0), K(0, 2), K(1, 2));

    const Pose3 pose0 = Pose3(Mat3::Identity(), Vec3::Zero()); // pose of the first camera
    const Pose3 pose1 = relativePose_info.relativePose;        // pose of the second camera

    // Initialize the structure by triangulating the inliers
    std::vector<double> vec_residuals; // reprojection errors
    vec_residuals.reserve(relativePose_info.vec_inliers.size() * 4);
    for (const auto inlier_idx : relativePose_info.vec_inliers) {
      const SIOPointFeature& LL = regionsL->Features()[vec_PutativeMatches[inlier_idx].i_];
      const SIOPointFeature& RR = regionsR->Features()[vec_PutativeMatches[inlier_idx].j_];
      // Triangulate the point
      Vec3 X; // triangulated 3D point
      const ETriangulationMethod triangulation_method = ETriangulationMethod::DEFAULT; // triangulation method
      if (Triangulate2View(
            pose0.rotation(), pose0.translation(), intrinsic0(LL.coords().cast<double>()),
            pose1.rotation(), pose1.translation(), intrinsic1(RR.coords().cast<double>()),
            X,
            triangulation_method))
      {
        // Reprojection error in each camera (two-dimensional: along x and y)
        const Vec2 residual0 = intrinsic0.residual(pose0(X), LL.coords().cast<double>());
        const Vec2 residual1 = intrinsic1.residual(pose1(X), RR.coords().cast<double>());
        // The reprojection error is the offset between an observed point and the 3D point
        // projected back onto the image plane; it is a key measure of reconstruction quality.
        vec_residuals.emplace_back(std::abs(residual0(0)));
        vec_residuals.emplace_back(std::abs(residual0(1)));
        vec_residuals.emplace_back(std::abs(residual1(0)));
        vec_residuals.emplace_back(std::abs(residual1(1)));
        vec_3DPoints.emplace_back(X); // keep the 3D point
      }
    }

    // Statistics of the reprojection errors
    float dMin, dMax, dMean, dMedian; // minimum, maximum, mean, median
    minMaxMeanMedian<float>(vec_residuals.cbegin(), vec_residuals.cend(),
                            dMin, dMax, dMean, dMedian);

    std::cout << std::endl
      << "Triangulation residuals statistics:" << "\n"
      << "\t-- Residual min:\t" << dMin << "\n"
      << "\t-- Residual median:\t" << dMedian << "\n"
      << "\t-- Residual max:\t " << dMax << "\n"
      << "\t-- Residual mean:\t " << dMean << std::endl;

    // Export to PLY (camera positions + 3D points)
    std::vector<Vec3> vec_camPos;
    vec_camPos.push_back(pose0.center()); // center of the first camera
    vec_camPos.push_back(pose1.center()); // center of the second camera
    exportToPly(vec_3DPoints, vec_camPos, "EssentialGeometry.ply");
  }
  return EXIT_SUCCESS; // the program finished successfully
}

bool readIntrinsic(const std::string& fileName, Mat3& K)
{
  // Load the K matrix (9 values, row by row)
  std::ifstream in(fileName.c_str(), std::ifstream::in);
  if (!in) {
    std::cerr << std::endl << "Invalid input K.txt file" << std::endl;
    return false; // cannot open the file
  }
  for (int j = 0; j < 3; ++j)
    for (int i = 0; i < 3; ++i)
      in >> K(j, i); // read the elements of K
  return !in.fail(); // fail if the file held fewer than 9 numbers
}

bool exportToPly(const std::vector<Vec3>& vec_points,
                 const std::vector<Vec3>& vec_camPos,
                 const std::string& sFileName)
{
  std::ofstream outfile(sFileName.c_str(), std::ios_base::out); // open the output file

  // Write the PLY header
  outfile << "ply"
    << '\n' << "format ascii 1.0"
    << '\n' << "element vertex " << vec_points.size() + vec_camPos.size()
    << '\n' << "property float x"
    << '\n' << "property float y"
    << '\n' << "property float z"
    << '\n' << "property uchar red"
    << '\n' << "property uchar green"
    << '\n' << "property uchar blue"
    << '\n' << "end_header" << std::endl;

  // Write the 3D points (white)
  for (size_t i = 0; i < vec_points.size(); ++i) {
    outfile << vec_points[i].transpose() << " 255 255 255" << "\n";
  }

  // Write the camera positions (green)
  for (size_t i = 0; i < vec_camPos.size(); ++i) {
    outfile << vec_camPos[i].transpose() << " 0 255 0" << "\n";
  }

  outfile.flush();                  // flush the stream
  const bool bOk = outfile.good();  // check that writing succeeded
  outfile.close();                  // close the file
  return bOk;                       // return the write status
}
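`Triangulate2View` recovers a 3D point from its two observations. To illustrate the idea, the sketch below uses inhomogeneous linear triangulation; this is not necessarily the method selected by `ETriangulationMethod::DEFAULT`. Each view has a projection matrix P = [R | t] and a normalized observation (x, y), which give two linear equations in X. The resulting 4×3 system is solved in the least-squares sense through the 3×3 normal equations and Cramer's rule. The type aliases and names are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3t = std::array<double, 3>;
using Mat33 = std::array<Vec3t, 3>;
using Mat34 = std::array<std::array<double, 4>, 3>;  // P = [R | t], row-major

// Determinant of a 3x3 matrix.
double det3(const Mat33& M) {
  return M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1]) -
         M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0]) +
         M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]);
}

// Linear two-view triangulation from normalized observations (x, y) of two cameras.
// Each view gives: x * (P3 . Xh) - P1 . Xh = 0 and y * (P3 . Xh) - P2 . Xh = 0,
// with Xh = (X, Y, Z, 1). The 4x3 system A X = b is solved via A^T A X = A^T b.
bool triangulateLinear(const Mat34& P0, double x0, double y0,
                       const Mat34& P1, double x1, double y1, Vec3t& X) {
  double A[4][3];
  double b[4];
  const Mat34* P[2] = {&P0, &P1};
  const double obs[2][2] = {{x0, y0}, {x1, y1}};
  for (int v = 0; v < 2; ++v) {
    const Mat34& Pv = *P[v];
    for (int e = 0; e < 2; ++e) {  // e = 0: row 0 with x, e = 1: row 1 with y
      for (int k = 0; k < 3; ++k) A[2 * v + e][k] = obs[v][e] * Pv[2][k] - Pv[e][k];
      b[2 * v + e] = -(obs[v][e] * Pv[2][3] - Pv[e][3]);
    }
  }
  // Normal equations: N = A^T A, r = A^T b.
  Mat33 N{};
  Vec3t r{};
  for (int i = 0; i < 3; ++i) {
    for (int j = 0; j < 3; ++j)
      for (int k = 0; k < 4; ++k) N[i][j] += A[k][i] * A[k][j];
    for (int k = 0; k < 4; ++k) r[i] += A[k][i] * b[k];
  }
  // Cramer's rule (absolute threshold, good enough for this sketch).
  const double d = det3(N);
  if (std::fabs(d) < 1e-12) return false;  // degenerate configuration
  for (int c = 0; c < 3; ++c) {
    Mat33 M = N;
    for (int i = 0; i < 3; ++i) M[i][c] = r[i];
    X[c] = det3(M) / d;
  }
  return true;
}
```

With noise-free observations the recovered point is exact. With real matches the residuals are small but nonzero, which is what the triangulation residual statistics above report.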