>- **🍨 This post is a study log from the [🔗365天深度学习训练营](https://mp.weixin.qq.com/s/rbOOmire8OocQ90QM78DRA) (365-Day Deep Learning Training Camp)**
>- **🍖 Original author: [K同学啊 | tutoring and custom projects](https://mtyjkh.blog.csdn.net/)**

# -*- coding: utf-8 -*-

import torch

import torch.nn as nn

import torchvision

from torchvision import transforms, datasets

import os, PIL, pathlib, warnings

# Suppress warning messages
warnings.filterwarnings("ignore")

# Windows 10
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

import pandas as pd

# Load the custom Chinese dataset (tab-separated; column 0 is the text, column 1 is the label)
train_data = pd.read_csv('./data/train.csv', sep='\t', header=None)
train_data.head()

# Build the dataset iterator
def coustom_data_iter(texts, labels):
    for x, y in zip(texts, labels):
        yield x, y

train_iter = coustom_data_iter(train_data[0].values[:], train_data[1].values[:])

from torchtext.data.utils import get_tokenizer

from torchtext.vocab import build_vocab_from_iterator

import jieba

# Chinese word segmentation
tokenizer = jieba.lcut

def yield_tokens(data_iter):
    for text, _ in data_iter:
        yield tokenizer(text)

vocab = build_vocab_from_iterator(yield_tokens(train_iter), specials=["<unk>"])
vocab.set_default_index(vocab["<unk>"])  # Default index: tokens not found in the vocabulary map to <unk>
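To make the effect of `specials` and `set_default_index` concrete, here is a minimal pure-Python sketch of the lookup behavior. `SimpleVocab` is a hypothetical stand-in written for this illustration, not the torchtext class, and it assigns indices in order of first appearance rather than by frequency as `build_vocab_from_iterator` does.

```python
class SimpleVocab:
    """Hypothetical stand-in for a vocab object: token -> index with a default."""

    def __init__(self, token_lists, specials=("<unk>",)):
        self.stoi = {}
        # Special tokens come first, so "<unk>" gets index 0 here.
        for tok in specials:
            self.stoi.setdefault(tok, len(self.stoi))
        # Remaining tokens are numbered in order of first appearance.
        for tokens in token_lists:
            for tok in tokens:
                self.stoi.setdefault(tok, len(self.stoi))
        self.default_index = None

    def set_default_index(self, index):
        self.default_index = index

    def __getitem__(self, token):
        return self.stoi[token]

    def __call__(self, tokens):
        ids = []
        for tok in tokens:
            if tok in self.stoi:
                ids.append(self.stoi[tok])
            elif self.default_index is not None:
                ids.append(self.default_index)  # unknown token falls back to the default
            else:
                raise KeyError(tok)
        return ids


demo_vocab = SimpleVocab([["我", "想", "看"], ["看", "视频"]])
demo_vocab.set_default_index(demo_vocab["<unk>"])
demo_ids = demo_vocab(["我", "看", "电影"])  # "电影" was never seen
print(demo_ids)
```

The unseen token is mapped to the index of `<unk>` instead of raising an error, which is what lets the text pipeline handle words that did not occur in the training data.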

vocab(['我', '想', '看', '和平', '精英', '上', '战神', '必备', '技巧', '的', '游戏', '视频'])

label_name = list(set(train_data[1].values[:]))

print(label_name)

['TVProgram-Play','Other','Radio-Listen','FilmTele-Play','Weather-Query','Calendar-Query','Audio-Play', 'Travel-Query', 'Video-Play','HomeAppliance-Control', 'Music-Play', 'Alarm-Update']

text_pipeline = lambda x: vocab(tokenizer(x))

label_pipeline =lambda x:label_name.index(x)
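`label_pipeline` depends on the order of `label_name`, and a list built from a `set` of strings is not guaranteed to have the same order in every Python process. A minimal sketch, assuming stable label indices are wanted (for example when a saved model is reloaded later), is to sort the unique labels. The names `demo_labels`, `stable_label_name`, and `stable_label_pipeline` are hypothetical and used only for this illustration.

```python
# Hypothetical label column with repeats, standing in for train_data[1].
demo_labels = ["Video-Play", "Other", "Music-Play", "Video-Play", "Other"]

# Sorting the unique labels gives the same order on every run.
stable_label_name = sorted(set(demo_labels))

# A dict lookup avoids the linear scan that list.index performs.
stable_label_to_index = {name: i for i, name in enumerate(stable_label_name)}


def stable_label_pipeline(label):
    """Map a label string to its integer class index."""
    return stable_label_to_index[label]


print(stable_label_name)
print(stable_label_pipeline("Video-Play"))
```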

print(text_pipeline('我想看和平精英上战神必备技巧的游戏视频'))

print(label_pipeline('Video-Play'))

from torch.utils.data import DataLoader

def collate_batch(batch):
    label_list, text_list, offsets = [], [], [0]
    for (_text, _label) in batch:
        # Label list
        label_list.append(label_pipeline(_label))
        # Text list
        processed_text = torch.tensor(text_pipeline(_text), dtype=torch.int64)
        text_list.append(processed_text)
        # Offset: the number of tokens in this sentence
        offsets.append(processed_text.size(0))
    label_list = torch.tensor(label_list, dtype=torch.int64)
    text_list = torch.cat(text_list)
    # Cumulative sum along dim 0 turns the sentence lengths into start positions
    offsets = torch.tensor(offsets[:-1]).cumsum(dim=0)
    return text_list.to(device), label_list.to(device), offsets.to(device)

# Data loader, usage example
dataloader = DataLoader(train_iter, batch_size=8, shuffle=False, collate_fn=collate_batch)

from torch import nn
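The offsets computed in `collate_batch` can be illustrated without tensors. The sketch below is a pure-Python analogue (the helper name `flatten_with_offsets` and the token ids are made up for the example): all sentences are concatenated into one flat list, and each offset is the position where a sentence starts in that list.

```python
from itertools import accumulate


def flatten_with_offsets(token_id_lists):
    """Concatenate token-id lists and return the start offset of each list."""
    flat = [tid for ids in token_id_lists for tid in ids]
    lengths = [len(ids) for ids in token_id_lists]
    # Same idea as torch.tensor(offsets[:-1]).cumsum(dim=0):
    # start at 0, then add up the lengths of all preceding sentences.
    offsets = list(accumulate([0] + lengths[:-1]))
    return flat, offsets


# Three hypothetical sentences with 3, 1, and 2 tokens.
demo_flat, demo_offsets = flatten_with_offsets([[5, 2, 9], [7], [4, 4]])
print(demo_flat)
print(demo_offsets)
```

Sentence `i` occupies `flat[offsets[i]:offsets[i + 1]]`, with the last sentence running to the end of the flat list.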

class TextClassificationModel(nn.Module):
    def __init__(self, vocab_size, embed_dim, num_class):
        super(TextClassificationModel, self).__init__()
        self.embedding = nn.EmbeddingBag(vocab_size,  # Vocabulary size
                                         embed_dim,   # Embedding dimension
                                         sparse=False)
        self.fc = nn.Linear(embed_dim, num_class)
        self.init_weights()

    def init_weights(self):
        initrange = 0.5
        self.embedding.weight.data.uniform_(-initrange, initrange)  # Initialize the weights
        self.fc.weight.data.uniform_(-initrange, initrange)
        self.fc.bias.data.zero_()  # Zero the bias

    def forward(self, text, offsets):
        embedded = self.embedding(text, offsets)
        return self.fc(embedded)

num_class = len(label_name)

vocab_size =len(vocab)

em_size= 64
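The model's `nn.EmbeddingBag` layer uses its default `mode="mean"`, so each sentence is represented by the average of its token embeddings. The following pure-Python sketch shows that computation on a tiny hand-written table; `mean_pool_bags` and the values in `demo_table` are hypothetical and only illustrate the idea, not PyTorch's implementation.

```python
def mean_pool_bags(embedding_table, flat_ids, offsets):
    """Average the embedding rows of each bag delimited by `offsets`."""
    dim = len(embedding_table[0])
    bags = []
    for i, start in enumerate(offsets):
        # A bag ends where the next one starts; the last bag runs to the end.
        end = offsets[i + 1] if i + 1 < len(offsets) else len(flat_ids)
        rows = [embedding_table[tid] for tid in flat_ids[start:end]]
        if rows:
            bags.append([sum(col) / len(rows) for col in zip(*rows)])
        else:
            bags.append([0.0] * dim)  # an empty bag pools to zeros
    return bags


# Hypothetical 4-token vocabulary with 2-dimensional embeddings.
demo_table = [[0.0, 0.0], [1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
# Two sentences: token ids [1, 2] and [3].
demo_bags = mean_pool_bags(demo_table, [1, 2, 3], [0, 2])
print(demo_bags)
```

Because the pooled vector has a fixed size regardless of sentence length, it can be fed directly into the linear classification layer.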

model = TextClassificationModel(vocab_size, em_size, num_class).to(device)

import time

def train(dataloader):
    model.train()  # Switch to training mode
    total_acc, train_loss, total_count = 0, 0, 0
    log_interval = 50
    start_time = time.time()
    for idx, (text, label, offsets) in enumerate(dataloader):
        predicted_label = model(text, offsets)
        optimizer.zero_grad()  # Reset the gradients
        loss = criterion(predicted_label, label)  # Loss between the network output and the true label
        loss.backward()  # Backpropagation
        torch.nn.utils.clip_grad_norm_(model.parameters(), 0.1)  # Gradient clipping
        optimizer.step()  # Update the parameters
        # Record accuracy and loss
        total_acc += (predicted_label.argmax(1) == label).sum().item()
        train_loss += loss.item()
        total_count += label.size(0)
        if idx % log_interval == 0 and idx > 0:
            elapsed = time.time() - start_time
            # `epoch` is the global loop variable of the training loop
            print('| epoch {:1d} | {:4d}/{:4d} batches'
                  '| train_acc {:4.3f} train_loss {:4.5f}'.format(epoch, idx, len(dataloader),
                                                                  total_acc/total_count, train_loss/total_count))
            total_acc, train_loss, total_count = 0, 0, 0
            start_time = time.time()
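The `clip_grad_norm_(model.parameters(), 0.1)` call in `train` rescales all gradients together when their overall L2 norm exceeds 0.1. Below is a minimal pure-Python sketch of that rule on a flat list of gradient values; `clip_by_global_norm` is a hypothetical helper, and the small `eps` term is an assumption modeled on how the scaling factor is commonly stabilized.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Scale `grads` so that their L2 norm is at most `max_norm`."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    # Never scale up: the factor is capped at 1.0.
    scale = min(1.0, max_norm / (total_norm + eps))
    return [g * scale for g in grads], total_norm


# Hypothetical gradient with norm 5.0, far above the 0.1 threshold.
demo_clipped, demo_norm = clip_by_global_norm([3.0, 4.0], max_norm=0.1)
demo_clipped_norm = math.sqrt(sum(g * g for g in demo_clipped))

# A gradient already below the threshold is left unchanged.
demo_small, _ = clip_by_global_norm([0.03, 0.04], max_norm=0.1)
print(demo_norm, demo_clipped_norm, demo_small)
```

The direction of the gradient is preserved and only its length shrinks, which helps keep updates bounded with a learning rate as large as the one used in this post.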

def evaluate(dataloader):
    model.eval()  # Switch to evaluation mode
    total_acc, train_loss, total_count = 0, 0, 0
    with torch.no_grad():
        for idx, (text, label, offsets) in enumerate(dataloader):
            predicted_label = model(text, offsets)
            loss = criterion(predicted_label, label)  # Compute the loss
            # Record the evaluation statistics
            total_acc += (predicted_label.argmax(1) == label).sum().item()
            train_loss += loss.item()
            total_count += label.size(0)
    return total_acc/total_count, train_loss/total_count

from torch.utils.data.dataset import random_split

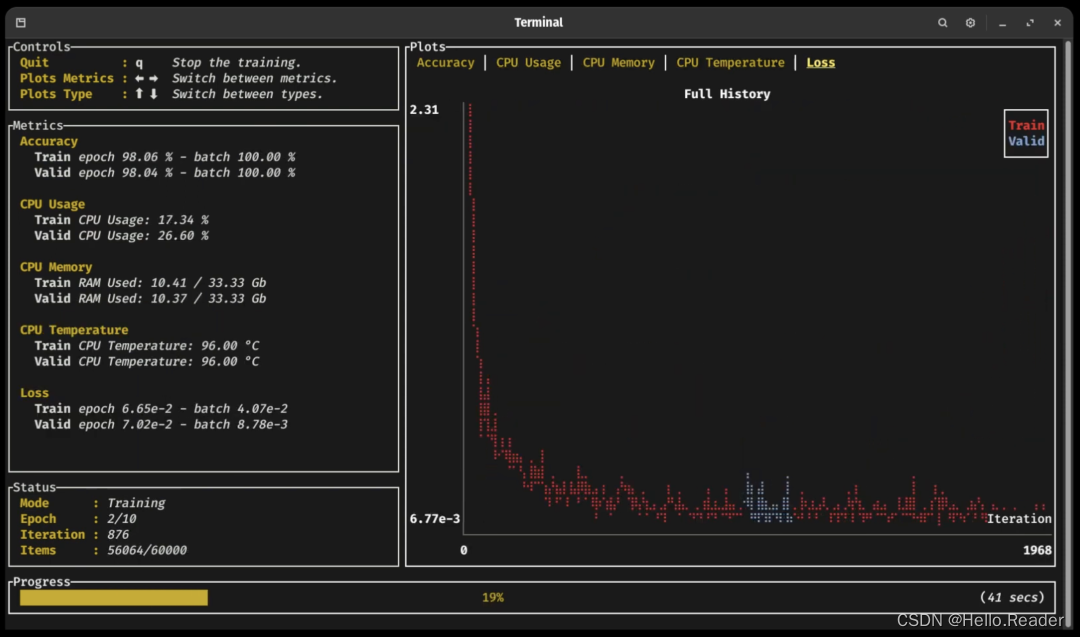

from torchtext.data.functional import to_map_style_dataset

# Hyperparameters
EPOCHS = 10  # Number of epochs

LR = 5  # Learning rate

BATCH_SIZE =64 #batch size for training

criterion =torch.nn.CrossEntropyLoss()

optimizer =torch.optim.SGD(model.parameters(),lr=LR)

scheduler =torch.optim.lr_scheduler.StepLR(optimizer,1.0,gamma=0.1)
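With a step size of 1 and `gamma=0.1`, each call to `scheduler.step()` multiplies the learning rate by 0.1. The epoch loop in this post calls `step()` only when the validation accuracy falls below the best value seen so far. The sketch below simulates that policy in plain Python; `simulate_lr` and the accuracy values are hypothetical and are not taken from the run log.

```python
def simulate_lr(val_accs, lr=5.0, gamma=0.1):
    """Return the learning rate in effect during each epoch."""
    best_acc = None
    lrs = []
    for val_acc in val_accs:
        lrs.append(lr)  # rate used for (and reported after) this epoch
        if best_acc is not None and best_acc > val_acc:
            lr *= gamma  # validation accuracy dropped: decay the rate
        else:
            best_acc = val_acc  # otherwise remember the new reference accuracy
    return lrs


# Hypothetical validation accuracies; the third epoch is worse than the second.
demo_lrs = simulate_lr([0.80, 0.85, 0.84, 0.86])
print(demo_lrs)
```

If the validation accuracy never decreases, the learning rate stays at its initial value for the whole run.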

total_accu = None

# Build the datasets
train_iter = coustom_data_iter(train_data[0].values[:], train_data[1].values[:])
train_dataset = to_map_style_dataset(train_iter)

# 80/20 train/validation split; the validation size is the remainder,
# so the two sizes always sum to len(train_dataset)
num_train = int(len(train_dataset) * 0.8)
split_train_, split_valid_ = random_split(train_dataset, [num_train, len(train_dataset) - num_train])

train_dataloader = DataLoader(split_train_, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch)
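When `random_split` is given integer lengths, they must add up to the dataset size. Truncating 80% and 20% separately with `int()` can fall one short, whereas taking the second size as the remainder always adds up. A small sketch with a hypothetical dataset size (`demo_n` is made up, not the size of the data used in this post):

```python
def split_lengths(n_items, train_fraction=0.8):
    """Return [train_size, valid_size] that always sum to n_items."""
    n_train = int(n_items * train_fraction)
    return [n_train, n_items - n_train]


demo_n = 12103  # hypothetical dataset size

# Truncating both parts independently loses one sample here.
naive_total = int(demo_n * 0.8) + int(demo_n * 0.2)

demo_lengths = split_lengths(demo_n)
print(naive_total, demo_lengths, sum(demo_lengths))
```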

valid_dataloader = DataLoader(split_valid_, batch_size=BATCH_SIZE, shuffle=True, collate_fn=collate_batch)

for epoch in range(1, EPOCHS + 1):
    epoch_start_time = time.time()
    train(train_dataloader)
    val_acc, val_loss = evaluate(valid_dataloader)
    # Get the current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']
    if total_accu is not None and total_accu > val_acc:
        scheduler.step()
    else:
        total_accu = val_acc
    print('-' * 69)
    print('l epoch {:1d}|time:{:4.2f}s |'
          'valid_acc {:4.3f}valid_loss {:4.3f}|lr {:4.6f}'.format(epoch, time.time() - epoch_start_time,
                                                                 val_acc, val_loss, lr))
    print('-' * 69)

# No separate test set is used; the final accuracy is measured on the validation split
test_acc, test_loss = evaluate(valid_dataloader)
print('模型准确率为:{:5.4f}'.format(test_acc))  # "Model accuracy: ..."

def predict(text, text_pipeline):
    with torch.no_grad():
        text = torch.tensor(text_pipeline(text))
        # A single sentence is one bag starting at offset 0
        output = model(text, torch.tensor([0]))
        return output.argmax(1).item()

#ex_text_str="随便播放一首专辑阁楼里的佛里的歌"

ex_text_str ="还有双鸭山到淮阴的汽车票吗13号的"

model =model.to("cpu")

print("该文本的类别是:%s" % label_name[predict(ex_text_str, text_pipeline)])  # "The category of this text is: ..."

The run produces the following output:

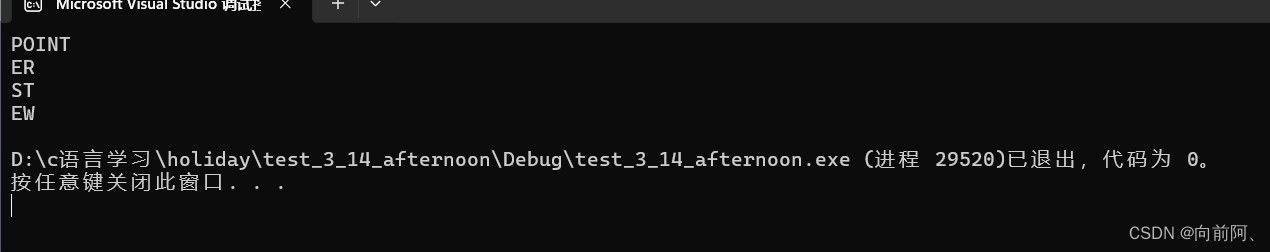

| epoch 1 | 50/ 152 batches| train_acc 0.453 train_loss 0.03016

| epoch 1 | 100/ 152 batches| train_acc 0.696 train_loss 0.01937

| epoch 1 | 150/ 152 batches| train_acc 0.760 train_loss 0.01392

---------------------------------------------------------------------

l epoch 1|time:1.15s |valid_acc 0.795valid_loss 0.012|lr 5.000000

---------------------------------------------------------------------

| epoch 2 | 50/ 152 batches| train_acc 0.813 train_loss 0.01067

| epoch 2 | 100/ 152 batches| train_acc 0.836 train_loss 0.00929

| epoch 2 | 150/ 152 batches| train_acc 0.850 train_loss 0.00823

---------------------------------------------------------------------

l epoch 2|time:1.03s |valid_acc 0.847valid_loss 0.008|lr 5.000000

---------------------------------------------------------------------

| epoch 3 | 50/ 152 batches| train_acc 0.874 train_loss 0.00688

| epoch 3 | 100/ 152 batches| train_acc 0.882 train_loss 0.00648

| epoch 3 | 150/ 152 batches| train_acc 0.889 train_loss 0.00610

---------------------------------------------------------------------

l epoch 3|time:1.03s |valid_acc 0.865valid_loss 0.007|lr 5.000000

---------------------------------------------------------------------

| epoch 4 | 50/ 152 batches| train_acc 0.905 train_loss 0.00530

| epoch 4 | 100/ 152 batches| train_acc 0.914 train_loss 0.00464

| epoch 4 | 150/ 152 batches| train_acc 0.913 train_loss 0.00478

---------------------------------------------------------------------

l epoch 4|time:1.03s |valid_acc 0.882valid_loss 0.006|lr 5.000000

---------------------------------------------------------------------

| epoch 5 | 50/ 152 batches| train_acc 0.933 train_loss 0.00389

| epoch 5 | 100/ 152 batches| train_acc 0.940 train_loss 0.00346

| epoch 5 | 150/ 152 batches| train_acc 0.928 train_loss 0.00410

---------------------------------------------------------------------

l epoch 5|time:1.05s |valid_acc 0.889valid_loss 0.006|lr 5.000000

---------------------------------------------------------------------

| epoch 6 | 50/ 152 batches| train_acc 0.956 train_loss 0.00275

| epoch 6 | 100/ 152 batches| train_acc 0.945 train_loss 0.00306

| epoch 6 | 150/ 152 batches| train_acc 0.943 train_loss 0.00321

---------------------------------------------------------------------

l epoch 6|time:1.03s |valid_acc 0.893valid_loss 0.006|lr 5.000000

---------------------------------------------------------------------

| epoch 7 | 50/ 152 batches| train_acc 0.962 train_loss 0.00231

| epoch 7 | 100/ 152 batches| train_acc 0.962 train_loss 0.00240

| epoch 7 | 150/ 152 batches| train_acc 0.962 train_loss 0.00237

---------------------------------------------------------------------

l epoch 7|time:1.01s |valid_acc 0.898valid_loss 0.005|lr 5.000000

---------------------------------------------------------------------

| epoch 8 | 50/ 152 batches| train_acc 0.971 train_loss 0.00203

| epoch 8 | 100/ 152 batches| train_acc 0.978 train_loss 0.00170

| epoch 8 | 150/ 152 batches| train_acc 0.971 train_loss 0.00183

---------------------------------------------------------------------

l epoch 8|time:1.02s |valid_acc 0.898valid_loss 0.005|lr 5.000000

---------------------------------------------------------------------

| epoch 9 | 50/ 152 batches| train_acc 0.983 train_loss 0.00142

| epoch 9 | 100/ 152 batches| train_acc 0.980 train_loss 0.00145

| epoch 9 | 150/ 152 batches| train_acc 0.978 train_loss 0.00151

---------------------------------------------------------------------

l epoch 9|time:1.01s |valid_acc 0.900valid_loss 0.005|lr 5.000000

---------------------------------------------------------------------

| epoch 10 | 50/ 152 batches| train_acc 0.987 train_loss 0.00116

| epoch 10 | 100/ 152 batches| train_acc 0.985 train_loss 0.00117

| epoch 10 | 150/ 152 batches| train_acc 0.986 train_loss 0.00111

---------------------------------------------------------------------

l epoch 10|time:1.01s |valid_acc 0.903valid_loss 0.005|lr 5.000000

---------------------------------------------------------------------

模型准确率为:0.9033

该文本的类别是:Travel-Query
