OpenI preparation

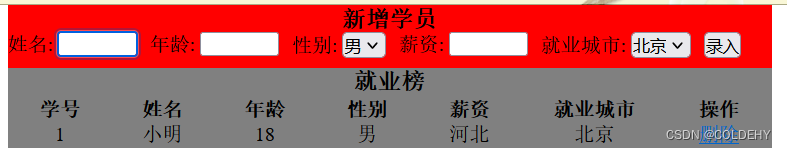

Mirror the code repository

Create a Cloud Brain task

Create a new debug task

Select the image

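If you don't want to go through the whole installation and configuration process, I have prepared an Open-Sora environment image that should work out of the box.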

Image address:

192.168.204.22:5000/default-workspace/99280a9940ae44ca8f5892134386fddb/image:OpenSoraV2

If you would rather go through the environment setup yourself, use this image address instead:

192.168.204.22:5000/default-workspace/99280a9940ae44ca8f5892134386fddb/image:ubuntu22.04-cuda12.1.0-py310-torch2.1.2-tf2.14

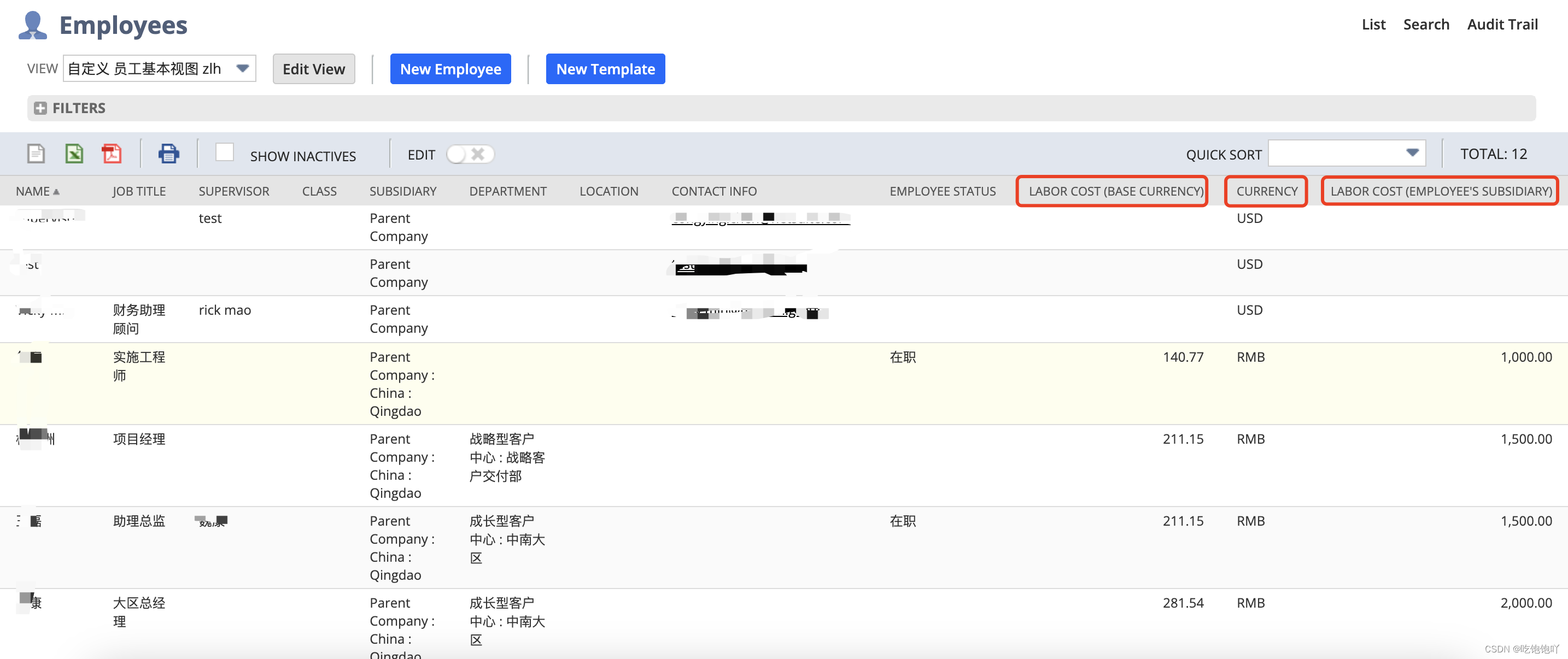

Model selection: search for the two models that are ticked in the screenshot and add them.

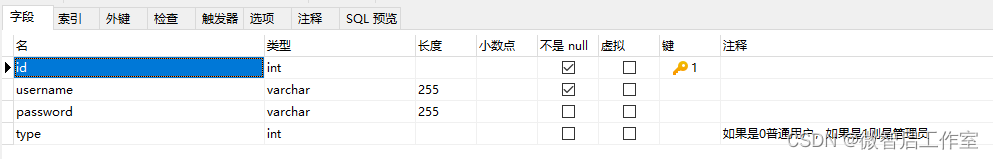

Set the remaining parameters essentially as shown in the screenshot.

Click "Create task" (新建任务) to finish creating the debug task.

Environment setup

If you are using my prebuilt Open-Sora image, skip straight to the "Clone the repository and install" step.

Install flash attention

pip install packaging ninja

pip install flash-attn --no-build-isolation

Install apex

cd /tmp/code

git clone https://github.com/NVIDIA/apex

cd apex

pip install -v --disable-pip-version-check --no-cache-dir --no-build-isolation --config-settings "--build-option=--cpp_ext" --config-settings "--build-option=--cuda_ext" ./

Install xformers

pip install -U xformers --index-url https://download.pytorch.org/whl/cu121
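After these three builds finish, a quick check that the packages can actually be found saves a failed inference run later. The sketch below is my own addition, not part of Open-Sora; it assumes the usual import names `flash_attn`, `apex` and `xformers` for the pip packages installed above, and it only locates each module with `importlib.util.find_spec`, so a build linked against the wrong torch could still fail when really imported.

```python
import importlib.util


def find_modules(names):
    """Return {module_name: True/False} depending on whether each top-level module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Import names assumed for the flash-attn, apex and xformers packages installed above
    status = find_modules(["flash_attn", "apex", "xformers"])
    for name, ok in status.items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```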

Clone the repository and install

cd /tmp/code

git clone https://github.com/hpcaitech/Open-Sora # you can replace this with the URL of your mirrored repository on OpenI

cd Open-Sora

pip install -v . # skip this step if you are using my Open-Sora image

Note: if you are using my prebuilt Open-Sora image, do NOT run pip install here! Just finish the git clone.

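If you don't want to use the model files I published and would rather download the models yourself, save the following script as download_model.py in the directory one level above the Open-Sora repository folder and run it there.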

Install the dependency

pip install modelscope

Download script

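# download_model.py: download the VAE and the Open-Sora weights from ModelScope
# Run it from the directory that contains the Open-Sora folder
from modelscope import snapshot_download

vae_dir = snapshot_download('AI-ModelScope/sd-vae-ft-ema', cache_dir='./Open-Sora/opensora/models/', revision='master')
sora_dir = snapshot_download('AI-ModelScope/Open-Sora', cache_dir='./Open-Sora/opensora/models/', revision='master')
# snapshot_download returns the local directory it downloaded into; check these against the config paths
print(vae_dir)
print(sora_dir)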

Reinstall torch==2.2.1

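Skip this step if you are using my prebuilt Open-Sora image.

Without this reinstall, torch on the OpenI platform may end up reinstalled as torch==2.1.1, which causes a version mismatch.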

pip uninstall torch torchvision torchaudio

pip3 install torch==2.2.1 torchvision torchaudio==2.2.1 --index-url https://download.pytorch.org/whl/cu121
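Since the point of this step is to end up on torch 2.2.1, it helps to confirm the installed version before running anything. A minimal sketch using only the standard library's `importlib.metadata`; the helper `release_matches` is hypothetical and simply ignores a local build tag such as `+cu121`, which the CUDA wheels usually carry.

```python
from importlib.metadata import PackageNotFoundError, version


def release_matches(installed: str, expected: str) -> bool:
    """Compare versions while ignoring a local build tag such as '+cu121'."""
    return installed.split("+", 1)[0] == expected


if __name__ == "__main__":
    expected = "2.2.1"
    try:
        installed = version("torch")
    except PackageNotFoundError:
        installed = None
    if installed is None:
        print("torch is not installed")
    elif release_matches(installed, expected):
        print(f"torch {installed} OK")
    else:
        print(f"torch {installed} does not match {expected}; reinstall it")
```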

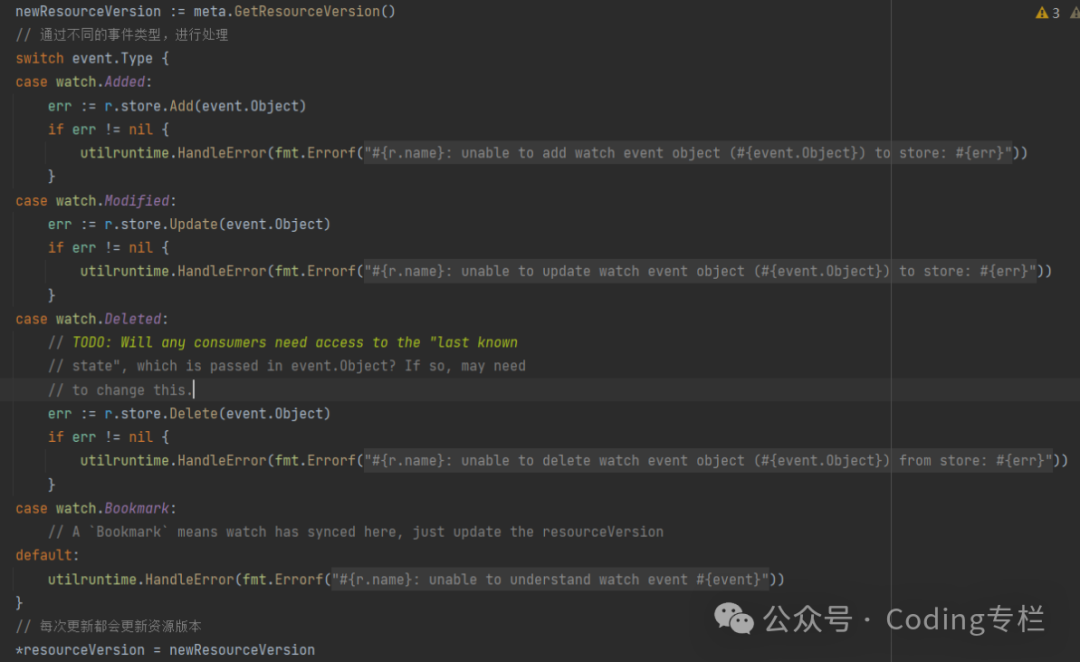

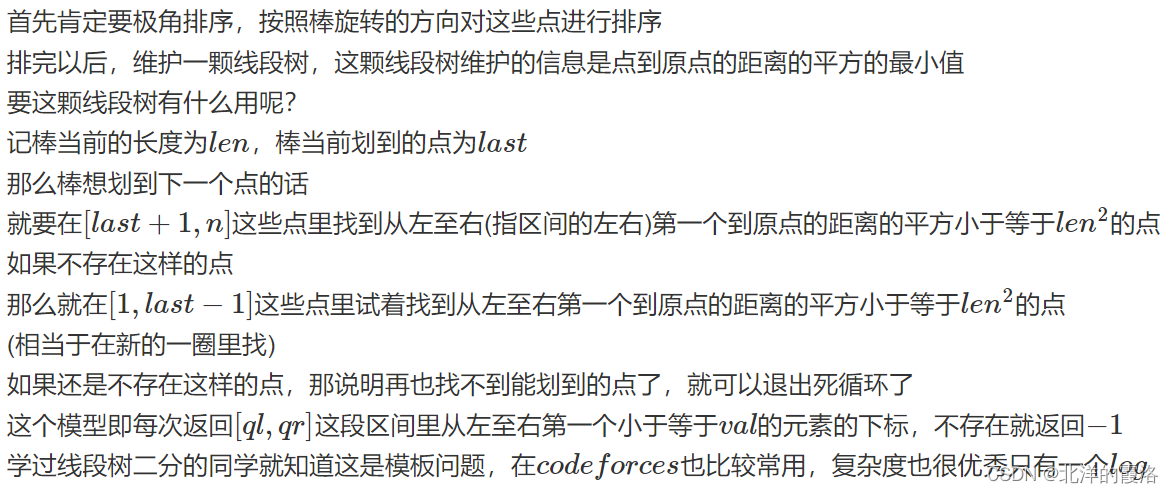

Edit the file (Open-Sora/configs/opensora/inference/16x256x256.py) as follows:

num_frames = 16
fps = 24 // 3
image_size = (256, 256)

# Define model
model = dict(
    type="STDiT-XL/2",
    space_scale=0.5,
    time_scale=1.0,
    enable_flashattn=True,
    enable_layernorm_kernel=True,
    from_pretrained="/tmp/code/Open-Sora/opensora/models/Open-Sora/OpenSora-v1-HQ-16x256x256.pth",
)
vae = dict(
    type="VideoAutoencoderKL",
    from_pretrained="/tmp/code/Open-Sora/opensora/models/sd-vae-ft-ema",
)
text_encoder = dict(
    type="t5",
    from_pretrained="DeepFloyd/t5-v1_1-xxl",
    model_max_length=120,
)
scheduler = dict(
    type="iddpm",
    num_sampling_steps=100,
    cfg_scale=7.0,
)
dtype = "fp16"

# Others
batch_size = 2
seed = 42
prompt_path = "./assets/texts/t2v_samples.txt"
save_dir = "./outputs/samples/"
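The config is ordinary Python, so its values can be inspected directly. With `num_frames = 16` and `fps = 24 // 3` (integer division, so 8), each generated sample covers 16 / 8 = 2 seconds of video. The sketch below executes the file with the standard library's `runpy` rather than Open-Sora's own config loader; that substitution, and the helpers `load_config` and `clip_seconds`, are my assumptions. It works here because the file contains only plain assignments and `dict(...)` calls.

```python
import runpy


def load_config(path):
    """Execute a Python config file and return its public top-level names."""
    namespace = runpy.run_path(path)
    return {k: v for k, v in namespace.items() if not k.startswith("_")}


def clip_seconds(cfg):
    """Length of one generated clip in seconds: number of frames divided by frames per second."""
    return cfg["num_frames"] / cfg["fps"]


if __name__ == "__main__":
    # Assumes the current directory is the Open-Sora repository root
    cfg = load_config("configs/opensora/inference/16x256x256.py")
    print(cfg["image_size"], cfg["fps"], clip_seconds(cfg))
```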

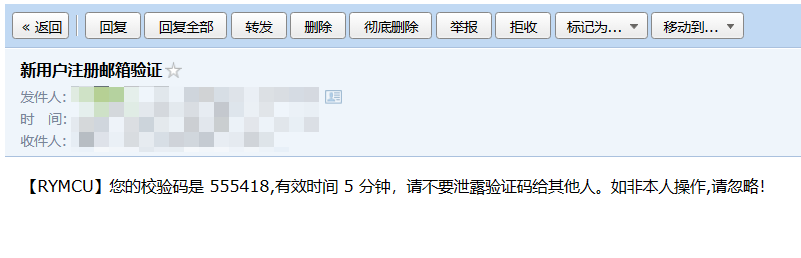

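Set the Hugging Face mirror (the T5 text encoder DeepFloyd/t5-v1_1-xxl referenced in the config is downloaded from Hugging Face)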

export HF_ENDPOINT=https://hf-mirror.com
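`HF_ENDPOINT` is an environment variable read by the `huggingface_hub` library, which handles Hub downloads for `transformers`. If you start the run from a notebook or another Python process instead of this shell, the variable needs to be set before `huggingface_hub` or `transformers` is first imported, because the endpoint is read at import time. A minimal sketch; the helper name `use_hf_mirror` is hypothetical.

```python
import os

MIRROR = "https://hf-mirror.com"


def use_hf_mirror(endpoint: str = MIRROR) -> str:
    """Set HF_ENDPOINT unless it is already set; return the value now in effect."""
    os.environ.setdefault("HF_ENDPOINT", endpoint)
    return os.environ["HF_ENDPOINT"]


if __name__ == "__main__":
    # Call this before importing huggingface_hub or transformers in the same process
    print(use_hf_mirror())
```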

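Copy the models (run from the Open-Sora folder; the models added to the task are available under /tmp/pretrainmodel)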

cd opensora/models/

cp -r /tmp/pretrainmodel/* ./
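Because the config points `from_pretrained` at absolute paths under `/tmp/code/Open-Sora/opensora/models/`, it is worth confirming that the copy put the files where the config expects them. This small standard-library sketch takes the two paths from the config above and assumes the repository was cloned into `/tmp/code`; the helper `missing_paths` is hypothetical.

```python
from pathlib import Path

# Local weight paths taken from configs/opensora/inference/16x256x256.py
EXPECTED = [
    "/tmp/code/Open-Sora/opensora/models/Open-Sora/OpenSora-v1-HQ-16x256x256.pth",
    "/tmp/code/Open-Sora/opensora/models/sd-vae-ft-ema",
]


def missing_paths(paths):
    """Return the subset of paths that do not exist on disk, in the original order."""
    return [p for p in paths if not Path(p).exists()]


if __name__ == "__main__":
    missing = missing_paths(EXPECTED)
    print("all model files in place" if not missing else f"missing: {missing}")
```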

Run inference

cd ../..

torchrun --standalone --nproc_per_node 1 scripts/inference.py configs/opensora/inference/16x256x256.py
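`torchrun --standalone --nproc_per_node 1` starts a single worker process on this machine and tells it its position through environment variables such as `RANK`, `LOCAL_RANK` and `WORLD_SIZE`. The sketch below only illustrates reading those variables; it is not taken from `scripts/inference.py`, and the single-process defaults (used when the script runs without torchrun) are my assumption.

```python
import os


def dist_info(env=None):
    """Read the rank information torchrun exports; fall back to a single process."""
    env = os.environ if env is None else env
    return {
        "rank": int(env.get("RANK", 0)),
        "local_rank": int(env.get("LOCAL_RANK", 0)),
        "world_size": int(env.get("WORLD_SIZE", 1)),
    }


if __name__ == "__main__":
    # Under torchrun --nproc_per_node 1 this should report world_size 1
    print(dist_info())
```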

GPU status during the run (nvidia-smi):

Mon Mar 25 18:07:00 2024
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.65.01    Driver Version: 515.65.01    CUDA Version: 12.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA A100-PCI...  Off  | 00000000:92:00.0 Off |                    0 |
| N/A   48C    P0   210W / 250W |  22662MiB / 40960MiB |    100%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
+-----------------------------------------------------------------------------+
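In this run a single A100 (40 GB) sat at 100% GPU-Util and used 22662 MiB of 40960 MiB, roughly 55% of its memory, with batch_size = 2. To watch the same numbers during a run, `nvidia-smi` can print them as CSV via `--query-gpu=memory.used,memory.total,utilization.gpu --format=csv,noheader,nounits`. The parsing helper below is my own sketch; it assumes one line per GPU and plain integer fields, so values reported as `[N/A]` would need extra handling.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=memory.used,memory.total,utilization.gpu",
         "--format=csv,noheader,nounits"]


def parse_gpu_line(line):
    """Parse 'used, total, util' (MiB, MiB, %) into a dict that includes the memory fraction."""
    used, total, util = (int(x) for x in line.split(","))
    return {"used_mib": used, "total_mib": total, "util_pct": util,
            "mem_fraction": used / total}


if __name__ == "__main__":
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    for idx, line in enumerate(out.strip().splitlines()):
        print(idx, parse_gpu_line(line))
```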