About the author

Hi everyone, I'm 阿旭 (A-Xu). I focus on sharing and researching artificial intelligence, AIGC, Python, and computer vision.


Introduction

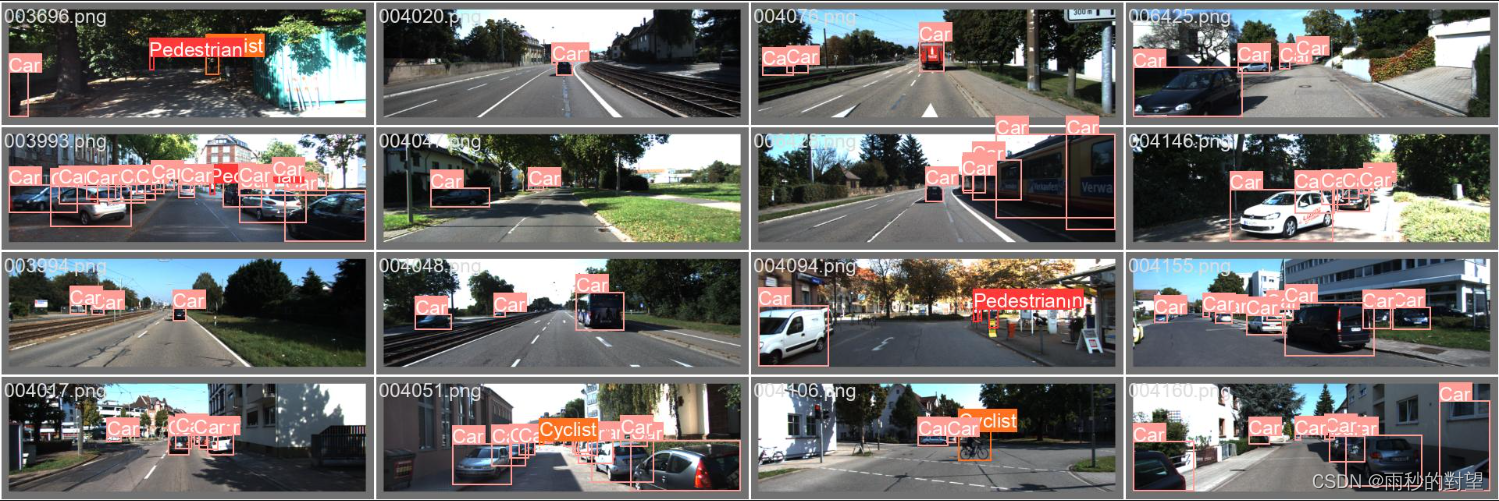

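This article shows how to print and inspect a YOLOv8 model's architecture configuration, the details of every layer, and model statistics such as the parameter count and computational cost (GFLOPs). The same approach works for modified (improved) architectures, so you can use it to compare parameter counts and FLOPs across different models.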

Viewing the structure defined by the config file

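Before every YOLOv8 training run, the model's structure is printed, as in the figure above. How can you print this architecture summary yourself? Reading the source shows that it is printed while the `DetectionModel` class is being constructed. So we can use that class directly: pass it our own model config file and run it. The relevant part of the class looks like this: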

```python
# Excerpt from ultralytics/nn/tasks.py, shown for reference (not meant to be run on its own)
class DetectionModel(BaseModel):
    """YOLOv8 detection model."""

    def __init__(self, cfg="yolov8n.yaml", ch=3, nc=None, verbose=True):  # model, input channels, number of classes
        """Initialize the YOLOv8 detection model with the given config and parameters."""
        super().__init__()
        self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg)  # cfg dict

        # Define model
        ch = self.yaml["ch"] = self.yaml.get("ch", ch)  # input channels
        if nc and nc != self.yaml["nc"]:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml["nc"] = nc  # override YAML value
        self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose)  # model, savelist
        self.names = {i: f"{i}" for i in range(self.yaml["nc"])}  # default names dict
        self.inplace = self.yaml.get("inplace", True)

        # Build strides
        m = self.model[-1]  # Detect()
        if isinstance(m, Detect):  # includes all Detect subclasses like Segment, Pose, OBB, WorldDetect
            s = 256  # 2x min stride
            m.inplace = self.inplace
            forward = lambda x: self.forward(x)[0] if isinstance(m, (Segment, Pose, OBB)) else self.forward(x)
            m.stride = torch.tensor([s / x.shape[-2] for x in forward(torch.zeros(1, ch, s, s))])  # forward
            self.stride = m.stride
            m.bias_init()  # only run once
        else:
            self.stride = torch.Tensor([32])  # default stride for i.e. RTDETR

        # Init weights, biases
        initialize_weights(self)
        if verbose:
            self.info()
            LOGGER.info("")
```

To print the summary for your own config, import the class and instantiate it:

```python
from ultralytics.nn.tasks import DetectionModel

# Path to the model architecture config file (relative to the ultralytics repository root)
yaml_path = 'ultralytics/cfg/models/v8/yolov8n.yaml'

# Path to a modified (improved) architecture config
# yaml_path = 'ultralytics/cfg/models/v8/yolov8n-CBAM.yaml'

# Pass the architecture config as cfg; nc is the number of classes to detect
DetectionModel(cfg=yaml_path, nc=5)
```
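The `# Build strides` step in the `DetectionModel` code above runs a dummy square image of side `s = 256` through the network and divides 256 by the height of each feature map that reaches the `Detect` head. The plain-Python sketch below reproduces only that arithmetic. It assumes, as in `yolov8n.yaml`, that the three detection levels (P3, P4, P5) sit behind 3, 4 and 5 stride-2 downsampling layers; `feature_heights` and `build_strides` are hypothetical helper names, not ultralytics functions.

```python
def feature_heights(input_size, downsample_steps):
    """Height of the feature map after n stride-2 layers, for each n in downsample_steps.

    Assumption: every stride-2 3x3 Conv (padding 1) halves an even-sized input exactly.
    """
    return [input_size // (2 ** n) for n in downsample_steps]


def build_strides(s=256, downsample_steps=(3, 4, 5)):
    """Mimic `m.stride = [s / x.shape[-2] for x in outputs]` from DetectionModel."""
    return [s / h for h in feature_heights(s, downsample_steps)]


print(build_strides())  # [8.0, 16.0, 32.0]
```

With a 640×640 input the same levels are 80, 40 and 20 pixels high, so the strides (8, 16, 32) do not depend on the probe size; 256 is simply a cheap size to run once at construction time.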

After running the code, the output is printed as follows.

Explanation of the output:

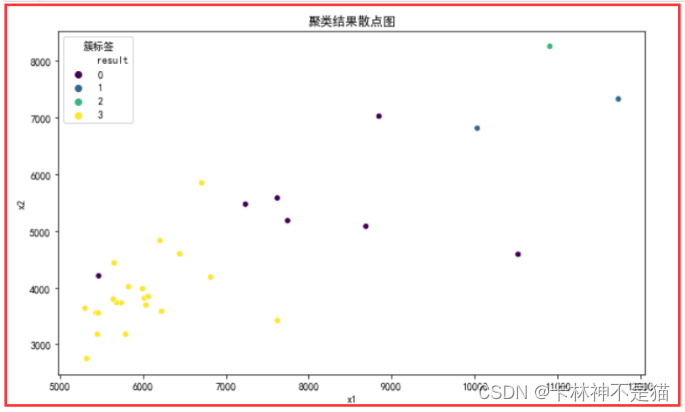

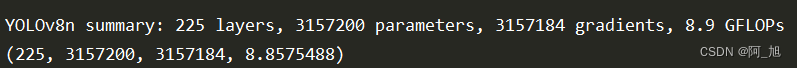

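As shown, the config file defines 23 layers. `params` is the parameter count of each layer, `module` is the module type of each layer, and `arguments` are the arguments passed to each module. The final summary line gives the totals: the model has 225 layers, 3,157,200 parameters, and 8.9 GFLOPs.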
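The `params` column can be checked by hand. The detailed per-tensor listing later in this article shows that each `Conv` block is a bias-free convolution (only `conv.weight`) followed by BatchNorm (`bn.weight` and `bn.bias`), so a block holds c_out·c_in·k·k + 2·c_out parameters. The sketch below is plain Python with hypothetical helper names. The `C2f` layout it assumes (a 1×1 `cv1` producing 2c channels, n bottlenecks of two 3×3 convs at c = c_out/2 channels, and a 1×1 `cv2` over (2+n)·c channels) is read off the tensor shapes in that listing. FLOPs are counted as 2 × multiply-accumulates, a common convention; treat that as an assumption rather than the exact ultralytics counting method.

```python
# Hand-check of YOLOv8 block sizes (plain Python, no torch required).


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Parameters of a Conv block as it appears in the listing: bias-free Conv2d + BatchNorm2d.

    conv.weight:          c_out * c_in * k * k  (no conv bias row in the listing)
    bn.weight + bn.bias:  2 * c_out             (running mean/var are buffers, not parameters)
    """
    return c_out * c_in * k * k + 2 * c_out


def c2f_params(c_in: int, c_out: int, n: int, e: float = 0.5) -> int:
    """Parameters of a C2f block with n bottlenecks (layout inferred from the listing's shapes)."""
    c = int(c_out * e)                           # hidden width of each branch
    total = conv_params(c_in, 2 * c, 1)          # cv1: 1x1 conv producing two c-channel halves
    total += n * 2 * conv_params(c, c, 3)        # m.i.cv1 and m.i.cv2: two 3x3 Conv blocks each
    total += conv_params((2 + n) * c, c_out, 1)  # cv2: 1x1 conv over the (2 + n) concatenated chunks
    return total


def conv_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """FLOPs of one convolution, counted as 2 x multiply-accumulates (BN and activation ignored)."""
    return 2 * c_in * k * k * c_out * h_out * w_out


print(conv_params(3, 16, 3))           # layer 0 (Conv): 464
print(conv_params(16, 32, 3))          # layer 1 (Conv): 4672
print(c2f_params(32, 32, n=1))         # layer 2 (C2f): 7360
print(c2f_params(64, 64, n=2))         # layer 4 (C2f): 49664
print(conv_flops(3, 16, 3, 320, 320))  # layer 0 on a 640x640 input: 88473600
```

The layer 0 and layer 2 values equal the sums of the corresponding `model.0.*` and `model.2.*` rows in the detailed listing (432 + 16 + 16 = 464, and so on). For the FLOPs line, a 640×640 input is assumed, so the stride-2 layer 0 outputs 320×320, which gives roughly 0.088 GFLOPs for that single convolution.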

Viewing the detailed network structure

The output above only covers each row of the config file. To see finer-grained information for every step, use `model.info()`:

Load a trained model or an architecture config file:

```python
from ultralytics import YOLO

# Load a trained model or an architecture config file
model = YOLO('best.pt')
# model = YOLO('ultralytics/cfg/models/v8/yolov8n.yaml')
```

Print the model parameter information:

```python
# Print model parameter information
print(model.info())
```

The result is:

Print the structure of every layer:

Just add the `detailed` argument to the code above:

```python
print(model.info(detailed=True))
```

The output is:

```text
layer name gradient parameters shape mu sigma
0 model.0.conv.weight False 432 [16, 3, 3, 3] -0.00404 0.153 torch.float32
1 model.0.bn.weight False 16 [16] 2.97 1.87 torch.float32
2 model.0.bn.bias False 16 [16] 0.244 4.17 torch.float32
3 model.1.conv.weight False 4608 [32, 16, 3, 3] -3.74e-05 0.0646 torch.float32
4 model.1.bn.weight False 32 [32] 5.01 1.12 torch.float32
5 model.1.bn.bias False 32 [32] 0.936 1.51 torch.float32
6 model.2.cv1.conv.weight False 1024 [32, 32, 1, 1] -0.0103 0.0918 torch.float32
7 model.2.cv1.bn.weight False 32 [32] 2.21 1.39 torch.float32
8 model.2.cv1.bn.bias False 32 [32] 0.803 1.39 torch.float32
9 model.2.cv2.conv.weight False 1536 [32, 48, 1, 1] -0.00279 0.0831 torch.float32
10 model.2.cv2.bn.weight False 32 [32] 1.21 0.576 torch.float32
11 model.2.cv2.bn.bias False 32 [32] 0.542 1.13 torch.float32
12 model.2.m.0.cv1.conv.weight False 2304 [16, 16, 3, 3] -0.000871 0.0574 torch.float32
13 model.2.m.0.cv1.bn.weight False 16 [16] 2.36 0.713 torch.float32
14 model.2.m.0.cv1.bn.bias False 16 [16] 1.02 1.71 torch.float32
15 model.2.m.0.cv2.conv.weight False 2304 [16, 16, 3, 3] -0.00071 0.05 torch.float32
16 model.2.m.0.cv2.bn.weight False 16 [16] 2.11 0.519 torch.float32
17 model.2.m.0.cv2.bn.bias False 16 [16] 0.92 1.9 torch.float32
18 model.3.conv.weight False 18432 [64, 32, 3, 3] -0.00137 0.0347 torch.float32
19 model.3.bn.weight False 64 [64] 0.818 0.206 torch.float32
20 model.3.bn.bias False 64 [64] 0.249 0.936 torch.float32
21 model.4.cv1.conv.weight False 4096 [64, 64, 1, 1] -0.00235 0.0553 torch.float32
22 model.4.cv1.bn.weight False 64 [64] 0.824 0.359 torch.float32
23 model.4.cv1.bn.bias False 64 [64] 0.26 0.779 torch.float32
24 model.4.cv2.conv.weight False 8192 [64, 128, 1, 1] -0.0019 0.0474 torch.float32
25 model.4.cv2.bn.weight False 64 [64] 0.718 0.21 torch.float32
26 model.4.cv2.bn.bias False 64 [64] -0.05 0.754 torch.float32
27 model.4.m.0.cv1.conv.weight False 9216 [32, 32, 3, 3] -0.00178 0.0368 torch.float32
28 model.4.m.0.cv1.bn.weight False 32 [32] 0.751 0.154 torch.float32
29 model.4.m.0.cv1.bn.bias False 32 [32] -0.263 0.629 torch.float32
30 model.4.m.0.cv2.conv.weight False 9216 [32, 32, 3, 3] -0.00156 0.0346 torch.float32
31 model.4.m.0.cv2.bn.weight False 32 [32] 0.769 0.203 torch.float32
32 model.4.m.0.cv2.bn.bias False 32 [32] 0.183 0.694 torch.float32
33 model.4.m.1.cv1.conv.weight False 9216 [32, 32, 3, 3] -0.00209 0.0339 torch.float32
34 model.4.m.1.cv1.bn.weight False 32 [32] 0.686 0.106 torch.float32
35 model.4.m.1.cv1.bn.bias False 32 [32] -0.82 0.469 torch.float32
36 model.4.m.1.cv2.conv.weight False 9216 [32, 32, 3, 3] -0.00253 0.0309 torch.float32
37 model.4.m.1.cv2.bn.weight False 32 [32] 1.05 0.242 torch.float32
38 model.4.m.1.cv2.bn.bias False 32 [32] 0.523 0.868 torch.float32
39 model.5.conv.weight False 73728 [128, 64, 3, 3] -0.000612 0.0224 torch.float32
40 model.5.bn.weight False 128 [128] 0.821 0.231 torch.float32
41 model.5.bn.bias False 128 [128] -0.235 0.678 torch.float32
42 model.6.cv1.conv.weight False 16384 [128, 128, 1, 1] -0.00307 0.0347 torch.float32
43 model.6.cv1.bn.weight False 128 [128] 0.895 0.398 torch.float32
44 model.6.cv1.bn.bias False 128 [128] -0.134 0.746 torch.float32
45 model.6.cv2.conv.weight False 32768 [128, 256, 1, 1] -0.00204 0.0303 torch.float32
46 model.6.cv2.bn.weight False 128 [128] 0.773 0.205 torch.float32
47 model.6.cv2.bn.bias False 128 [128] -0.584 0.755 torch.float32
48 model.6.m.0.cv1.conv.weight False 36864 [64, 64, 3, 3] -0.00186 0.0232 torch.float32
49 model.6.m.0.cv1.bn.weight False 64 [64] 1.06 0.176 torch.float32
50 model.6.m.0.cv1.bn.bias False 64 [64] -0.915 0.598 torch.float32
51 model.6.m.0.cv2.conv.weight False 36864 [64, 64, 3, 3] -0.00196 0.0221 torch.float32
52 model.6.m.0.cv2.bn.weight False 64 [64] 0.833 0.25 torch.float32
53 model.6.m.0.cv2.bn.bias False 64 [64] -0.0806 0.526 torch.float32
54 model.6.m.1.cv1.conv.weight False 36864 [64, 64, 3, 3] -0.00194 0.0225 torch.float32
55 model.6.m.1.cv1.bn.weight False 64 [64] 0.916 0.178 torch.float32
56 model.6.m.1.cv1.bn.bias False 64 [64] -1.13 0.732 torch.float32
57 model.6.m.1.cv2.conv.weight False 36864 [64, 64, 3, 3] -0.00147 0.0213 torch.float32
58 model.6.m.1.cv2.bn.weight False 64 [64] 1.18 0.278 torch.float32
59 model.6.m.1.cv2.bn.bias False 64 [64] 0.206 0.767 torch.float32
60 model.7.conv.weight False 294912 [256, 128, 3, 3] -0.000707 0.0142 torch.float32
61 model.7.bn.weight False 256 [256] 0.961 0.197 torch.float32
62 model.7.bn.bias False 256 [256] -0.718 0.451 torch.float32
63 model.8.cv1.conv.weight False 65536 [256, 256, 1, 1] -0.00323 0.0224 torch.float32
64 model.8.cv1.bn.weight False 256 [256] 1.14 0.33 torch.float32
65 model.8.cv1.bn.bias False 256 [256] -0.734 0.573 torch.float32
66 model.8.cv2.conv.weight False 98304 [256, 384, 1, 1] -0.00194 0.0198 torch.float32
67 model.8.cv2.bn.weight False 256 [256] 1.19 0.223 torch.float32
68 model.8.cv2.bn.bias False 256 [256] -0.689 0.486 torch.float32
69 model.8.m.0.cv1.conv.weight False 147456 [128, 128, 3, 3] -0.0011 0.0152 torch.float32
70 model.8.m.0.cv1.bn.weight False 128 [128] 1.14 0.205 torch.float32
71 model.8.m.0.cv1.bn.bias False 128 [128] -0.821 0.72 torch.float32
72 model.8.m.0.cv2.conv.weight False 147456 [128, 128, 3, 3] -0.00112 0.0151 torch.float32
73 model.8.m.0.cv2.bn.weight False 128 [128] 1.65 0.369 torch.float32
74 model.8.m.0.cv2.bn.bias False 128 [128] -0.2 0.606 torch.float32
75 model.9.cv1.conv.weight False 32768 [128, 256, 1, 1] -0.00452 0.0257 torch.float32
76 model.9.cv1.bn.weight False 128 [128] 0.926 0.251 torch.float32
77 model.9.cv1.bn.bias False 128 [128] 1.43 0.654 torch.float32
78 model.9.cv2.conv.weight False 131072 [256, 512, 1, 1] -0.000201 0.018 torch.float32
79 model.9.cv2.bn.weight False 256 [256] 0.936 0.257 torch.float32
80 model.9.cv2.bn.bias False 256 [256] -1.27 0.828 torch.float32
81 model.12.cv1.conv.weight False 49152 [128, 384, 1, 1] -0.00243 0.0255 torch.float32
82 model.12.cv1.bn.weight False 128 [128] 0.87 0.224 torch.float32
83 model.12.cv1.bn.bias False 128 [128] -0.373 0.821 torch.float32
84 model.12.cv2.conv.weight False 24576 [128, 192, 1, 1] -0.00462 0.0284 torch.float32
85 model.12.cv2.bn.weight False 128 [128] 0.73 0.201 torch.float32
86 model.12.cv2.bn.bias False 128 [128] -0.281 0.661 torch.float32
87 model.12.m.0.cv1.conv.weight False 36864 [64, 64, 3, 3] -0.0022 0.0224 torch.float32
88 model.12.m.0.cv1.bn.weight False 64 [64] 0.832 0.134 torch.float32
89 model.12.m.0.cv1.bn.bias False 64 [64] -0.841 0.611 torch.float32
90 model.12.m.0.cv2.conv.weight False 36864 [64, 64, 3, 3] -0.000787 0.0213 torch.float32
91 model.12.m.0.cv2.bn.weight False 64 [64] 0.824 0.182 torch.float32
92 model.12.m.0.cv2.bn.bias False 64 [64] -0.107 0.636 torch.float32
93 model.15.cv1.conv.weight False 12288 [64, 192, 1, 1] -0.00189 0.0321 torch.float32
94 model.15.cv1.bn.weight False 64 [64] 0.536 0.215 torch.float32
95 model.15.cv1.bn.bias False 64 [64] 0.16 0.967 torch.float32
96 model.15.cv2.conv.weight False 6144 [64, 96, 1, 1] -0.00124 0.0364 torch.float32
97 model.15.cv2.bn.weight False 64 [64] 0.561 0.275 torch.float32
98 model.15.cv2.bn.bias False 64 [64] 0.127 0.942 torch.float32
99 model.15.m.0.cv1.conv.weight False 9216 [32, 32, 3, 3] -0.00236 0.0306 torch.float32
100 model.15.m.0.cv1.bn.weight False 32 [32] 0.663 0.14 torch.float32
101 model.15.m.0.cv1.bn.bias False 32 [32] -0.567 0.583 torch.float32
102 model.15.m.0.cv2.conv.weight False 9216 [32, 32, 3, 3] -0.00152 0.0289 torch.float32
103 model.15.m.0.cv2.bn.weight False 32 [32] 0.741 0.161 torch.float32
104 model.15.m.0.cv2.bn.bias False 32 [32] 0.21 0.786 torch.float32
105 model.16.conv.weight False 36864 [64, 64, 3, 3] -0.000977 0.0176 torch.float32
106 model.16.bn.weight False 64 [64] 0.842 0.216 torch.float32
107 model.16.bn.bias False 64 [64] -0.389 0.592 torch.float32
108 model.18.cv1.conv.weight False 24576 [128, 192, 1, 1] -0.00217 0.0241 torch.float32
109 model.18.cv1.bn.weight False 128 [128] 0.876 0.208 torch.float32
110 model.18.cv1.bn.bias False 128 [128] -0.307 0.611 torch.float32
111 model.18.cv2.conv.weight False 24576 [128, 192, 1, 1] -0.00254 0.0237 torch.float32
112 model.18.cv2.bn.weight False 128 [128] 0.726 0.297 torch.float32
113 model.18.cv2.bn.bias False 128 [128] -0.434 0.761 torch.float32
114 model.18.m.0.cv1.conv.weight False 36864 [64, 64, 3, 3] -0.00257 0.0203 torch.float32
115 model.18.m.0.cv1.bn.weight False 64 [64] 0.798 0.175 torch.float32
116 model.18.m.0.cv1.bn.bias False 64 [64] -0.773 0.493 torch.float32
117 model.18.m.0.cv2.conv.weight False 36864 [64, 64, 3, 3] -0.0014 0.0195 torch.float32
118 model.18.m.0.cv2.bn.weight False 64 [64] 1.19 0.285 torch.float32
119 model.18.m.0.cv2.bn.bias False 64 [64] -0.07 0.668 torch.float32
120 model.19.conv.weight False 147456 [128, 128, 3, 3] -0.000819 0.0118 torch.float32
121 model.19.bn.weight False 128 [128] 0.876 0.21 torch.float32
122 model.19.bn.bias False 128 [128] -0.508 0.365 torch.float32
123 model.21.cv1.conv.weight False 98304 [256, 384, 1, 1] -0.00166 0.0159 torch.float32
124 model.21.cv1.bn.weight False 256 [256] 1.06 0.225 torch.float32
125 model.21.cv1.bn.bias False 256 [256] -0.592 0.579 torch.float32
126 model.21.cv2.conv.weight False 98304 [256, 384, 1, 1] -0.00257 0.0148 torch.float32
127 model.21.cv2.bn.weight False 256 [256] 1.03 0.317 torch.float32
128 model.21.cv2.bn.bias False 256 [256] -0.893 0.491 torch.float32
129 model.21.m.0.cv1.conv.weight False 147456 [128, 128, 3, 3] -0.00142 0.0129 torch.float32
130 model.21.m.0.cv1.bn.weight False 128 [128] 1.03 0.223 torch.float32
131 model.21.m.0.cv1.bn.bias False 128 [128] -0.96 0.618 torch.float32
132 model.21.m.0.cv2.conv.weight False 147456 [128, 128, 3, 3] -0.00124 0.0128 torch.float32
133 model.21.m.0.cv2.bn.weight False 128 [128] 1.35 0.253 torch.float32
134 model.21.m.0.cv2.bn.bias False 128 [128] -0.553 0.516 torch.float32
135 model.22.cv2.0.0.conv.weight False 36864 [64, 64, 3, 3] -0.00193 0.0181 torch.float32
136 model.22.cv2.0.0.bn.weight False 64 [64] 0.88 0.351 torch.float32
137 model.22.cv2.0.0.bn.bias False 64 [64] -0.492 0.707 torch.float32
138 model.22.cv2.0.1.conv.weight False 36864 [64, 64, 3, 3] -0.00163 0.0178 torch.float32
139 model.22.cv2.0.1.bn.weight False 64 [64] 2.41 1.04 torch.float32
140 model.22.cv2.0.1.bn.bias False 64 [64] 0.922 0.757 torch.float32
141 model.22.cv2.0.2.weight False 4096 [64, 64, 1, 1] -0.00542 0.0553 torch.float32
142 model.22.cv2.0.2.bias False 64 [64] 0.997 1.39 torch.float32
143 model.22.cv2.1.0.conv.weight False 73728 [64, 128, 3, 3] -0.0017 0.014 torch.float32
144 model.22.cv2.1.0.bn.weight False 64 [64] 1.28 0.485 torch.float32
145 model.22.cv2.1.0.bn.bias False 64 [64] -0.389 0.68 torch.float32
146 model.22.cv2.1.1.conv.weight False 36864 [64, 64, 3, 3] -0.00128 0.0167 torch.float32
147 model.22.cv2.1.1.bn.weight False 64 [64] 2.56 1 torch.float32
148 model.22.cv2.1.1.bn.bias False 64 [64] 0.855 0.564 torch.float32
149 model.22.cv2.1.2.weight False 4096 [64, 64, 1, 1] -0.00756 0.0594 torch.float32
150 model.22.cv2.1.2.bias False 64 [64] 0.992 1.32 torch.float32
151 model.22.cv2.2.0.conv.weight False 147456 [64, 256, 3, 3] -0.000553 0.0115 torch.float32
152 model.22.cv2.2.0.bn.weight False 64 [64] 1.55 0.413 torch.float32
153 model.22.cv2.2.0.bn.bias False 64 [64] -0.259 0.62 torch.float32
154 model.22.cv2.2.1.conv.weight False 36864 [64, 64, 3, 3] -0.00065 0.0149 torch.float32
155 model.22.cv2.2.1.bn.weight False 64 [64] 2.95 0.852 torch.float32
156 model.22.cv2.2.1.bn.bias False 64 [64] 0.822 0.556 torch.float32
157 model.22.cv2.2.2.weight False 4096 [64, 64, 1, 1] -0.00674 0.0609 torch.float32
158 model.22.cv2.2.2.bias False 64 [64] 0.99 1.33 torch.float32
159 model.22.cv3.0.0.conv.weight False 36864 [64, 64, 3, 3] -0.00138 0.0275 torch.float32
160 model.22.cv3.0.0.bn.weight False 64 [64] 1 0.00984 torch.float32
161 model.22.cv3.0.0.bn.bias False 64 [64] 0.00709 0.0156 torch.float32
162 model.22.cv3.0.1.conv.weight False 36864 [64, 64, 3, 3] -0.00549 0.0281 torch.float32
163 model.22.cv3.0.1.bn.weight False 64 [64] 1.01 0.0248 torch.float32
164 model.22.cv3.0.1.bn.bias False 64 [64] 0.0356 0.0581 torch.float32
165 model.22.cv3.0.2.weight False 64 [1, 64, 1, 1] -0.0297 0.0872 torch.float32
166 model.22.cv3.0.2.bias False 1 [1] -7.2 nan torch.float32
167 model.22.cv3.1.0.conv.weight False 73728 [64, 128, 3, 3] -0.00178 0.0217 torch.float32
168 model.22.cv3.1.0.bn.weight False 64 [64] 1 0.0165 torch.float32
169 model.22.cv3.1.0.bn.bias False 64 [64] 0.00244 0.0185 torch.float32
170 model.22.cv3.1.1.conv.weight False 36864 [64, 64, 3, 3] -0.00481 0.0278 torch.float32
171 model.22.cv3.1.1.bn.weight False 64 [64] 1.02 0.0239 torch.float32
172 model.22.cv3.1.1.bn.bias False 64 [64] 0.0411 0.0545 torch.float32
173 model.22.cv3.1.2.weight False 64 [1, 64, 1, 1] -0.0327 0.104 torch.float32
174 model.22.cv3.1.2.bias False 1 [1] -5.8 nan torch.float32
175 model.22.cv3.2.0.conv.weight False 147456 [64, 256, 3, 3] -0.000784 0.0158 torch.float32
176 model.22.cv3.2.0.bn.weight False 64 [64] 1 0.00871 torch.float32
177 model.22.cv3.2.0.bn.bias False 64 [64] 0.00025 0.0116 torch.float32
178 model.22.cv3.2.1.conv.weight False 36864 [64, 64, 3, 3] -0.00326 0.0272 torch.float32
179 model.22.cv3.2.1.bn.weight False 64 [64] 1.06 0.0776 torch.float32
180 model.22.cv3.2.1.bn.bias False 64 [64] 0.048 0.0988 torch.float32
181 model.22.cv3.2.2.weight False 64 [1, 64, 1, 1] -0.0661 0.101 torch.float32
182 model.22.cv3.2.2.bias False 1 [1] -4.48 nan torch.float32
183 model.22.dfl.conv.weight False 16 [1, 16, 1, 1] 7.5 4.76 torch.float32

Model summary: 225 layers, 3011043 parameters, 0 gradients, 8.2 GFLOPs

(225, 3011043, 0, 8.1941504)
```

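As shown, the output lists every parameter tensor of every layer: its name, whether it requires gradients (`gradient`), its parameter count, its shape, the mean (`mu`) and standard deviation (`sigma`) of its values, and its dtype. The last two lines are the overall model summary and the tuple returned by `model.info()`.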
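Because the detailed listing has one row per parameter tensor rather than one per layer, it can be handy to fold it back into per-layer totals. The sketch below assumes you have captured the printed rows as text (for example by copying them from the console) in the whitespace-separated column order shown above; `params_per_layer` is a hypothetical helper, not part of ultralytics. It also checks that each row's parameter count equals the product of its shape.

```python
import re
from collections import defaultdict
from math import prod

# One row of the detailed listing, e.g.
# "0 model.0.conv.weight False 432 [16, 3, 3, 3] -0.00404 0.153 torch.float32"
ROW = re.compile(
    r"^\s*\d+\s+"              # running row index
    r"model\.(\d+)\.(\S+)\s+"  # top-level layer index and the rest of the tensor name
    r"(True|False)\s+"         # gradient (requires_grad)
    r"(\d+)\s+"                # parameter count
    r"\[([\d,\s]*)\]"          # shape
)


def params_per_layer(listing: str) -> dict:
    """Sum parameter counts per top-level layer (model.N) from the detailed listing text."""
    totals = defaultdict(int)
    for line in listing.splitlines():
        match = ROW.match(line)
        if not match:
            continue  # header, summary line, blank lines, returned tuple
        layer, _, _, n_params, shape = match.groups()
        dims = [int(d) for d in shape.split(",") if d.strip()]
        # Sanity check: a tensor's parameter count is the product of its shape
        assert prod(dims) == int(n_params), line
        totals[int(layer)] += int(n_params)
    return dict(totals)


sample = """\
layer name gradient parameters shape mu sigma
0 model.0.conv.weight False 432 [16, 3, 3, 3] -0.00404 0.153 torch.float32
1 model.0.bn.weight False 16 [16] 2.97 1.87 torch.float32
2 model.0.bn.bias False 16 [16] 0.244 4.17 torch.float32
3 model.1.conv.weight False 4608 [32, 16, 3, 3] -3.74e-05 0.0646 torch.float32
"""
print(params_per_layer(sample))  # {0: 464, 1: 4608}
```

Layers without parameters never appear in the listing, so they are also absent from the totals; in the output above, for example, there are no rows for `model.10`, `model.11`, `model.13`, `model.14`, `model.17` or `model.20`.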

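The same method works, in exactly the same way, for the other model architectures in the ultralytics framework, so you can use it to compare parameter counts, FLOPs, and similar metrics across different models.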
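For side-by-side comparisons, note that `model.info()` returns its summary as a tuple `(layers, parameters, gradients, GFLOPs)`, as in the last line of the detailed output above. A small helper such as the hypothetical `compare_summaries` below (not part of ultralytics) turns two such tuples, for example from a baseline config and a modified one, into absolute and relative differences.

```python
from typing import NamedTuple


class Summary(NamedTuple):
    """Mirror of the tuple returned by model.info(): (layers, parameters, gradients, GFLOPs)."""
    layers: int
    parameters: int
    gradients: int
    gflops: float


def compare_summaries(base, new):
    """Return (parameter delta, parameter % change, GFLOPs delta, GFLOPs % change) of new vs base."""
    base, new = Summary(*base), Summary(*new)
    d_params = new.parameters - base.parameters
    d_gflops = new.gflops - base.gflops
    return (
        d_params,
        100.0 * d_params / base.parameters,
        d_gflops,
        100.0 * d_gflops / base.gflops,
    )


baseline = (225, 3011043, 0, 8.1941504)  # tuple printed earlier in this article
# modified = model_b.info()              # hypothetical: a model built from your own *.yaml
print(compare_summaries(baseline, baseline))  # (0, 0.0, 0.0, 0.0)
```

Keeping both models at the same `nc` matters for a fair comparison, since the number of classes changes the size of the detection head.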
