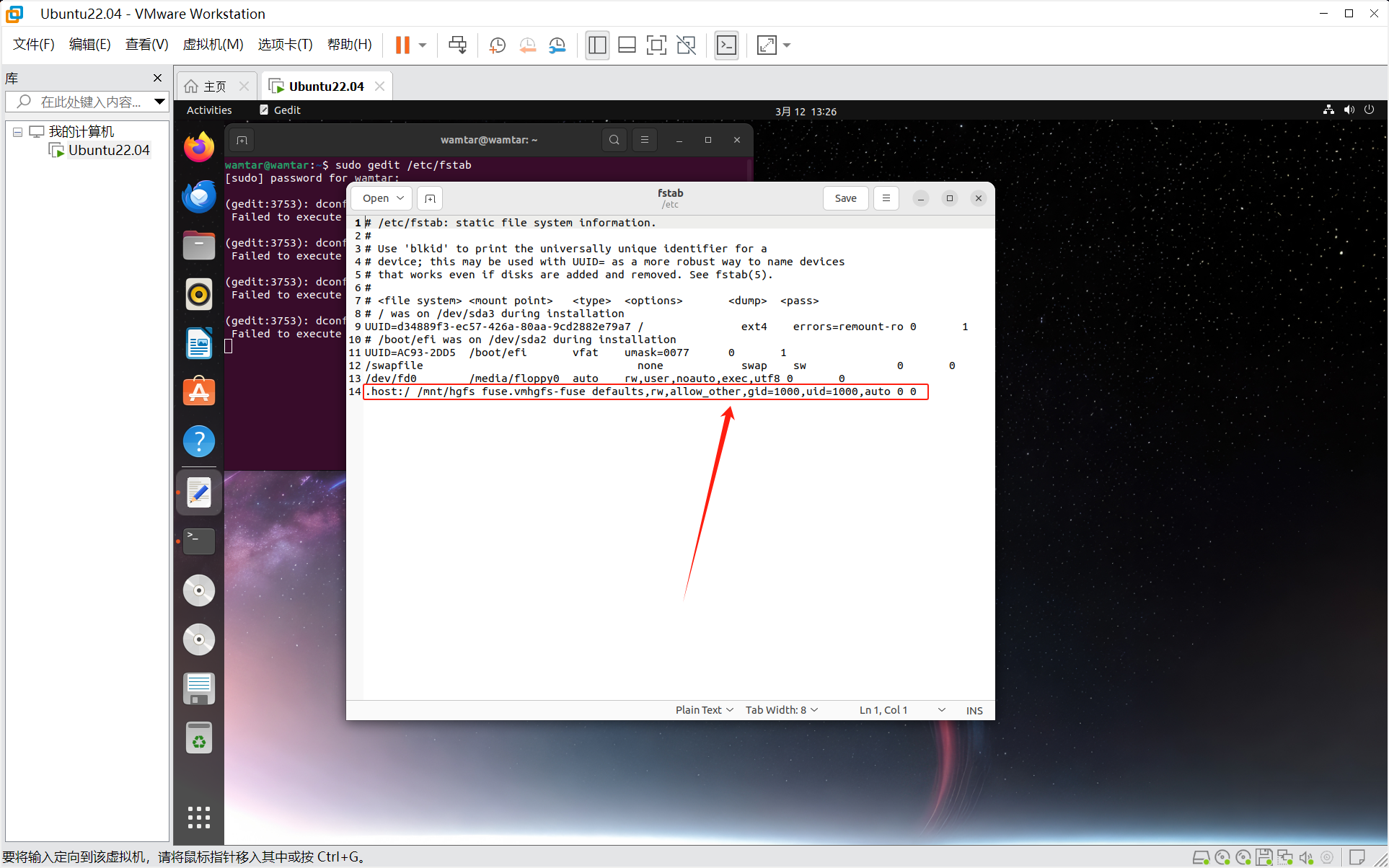

BigFoot EventAlertMod is a Lua addon that tracks DoTs on your current target and HoTs on yourself, and monitors their remaining duration.

D:\Battle.net\World of Warcraft\_classic_\Interface\AddOns\EventAlertMod

To find a spell's ID, run the commands below. This example uses "Divine Sacrifice" (神圣牺牲):

/eam lookup 神圣牺牲

/eam lookupfull 神圣牺牲
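For readers curious how a name-to-ID lookup of this kind could work, here is a minimal Lua sketch. It is not EventAlertMod's actual code: `FindSpellIdsByName` is a hypothetical helper, the scan limit of 100000 is an assumed upper bound, and the in-game part assumes the client still exposes the global `GetSpellInfo` function (which returns the spell name as its first value).

```lua
-- Hypothetical helper, NOT part of EventAlertMod.
-- name:    localized spell name to search for
-- getInfo: function(id) returning the spell name (or nil) as its first value;
--          in game, pass the global GetSpellInfo
-- maxId:   upper bound of the ID range to scan (an assumed value)
function FindSpellIdsByName(name, getInfo, maxId)
  local matches = {}
  for id = 1, maxId do
    local spellName = getInfo(id)
    if spellName == name then
      matches[#matches + 1] = id
    end
  end
  return matches
end

-- In-game usage: runs only if the client provides GetSpellInfo.
if GetSpellInfo then
  for _, id in ipairs(FindSpellIdsByName("神圣牺牲", GetSpellInfo, 100000)) do
    print(id, (GetSpellInfo(id)))
  end
end
```

A brute-force scan like this can return several IDs for one name (for example, different ranks of the same spell), and scanning a large range in one pass may briefly stall the client.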

Its alerts are more noticeable than NugRunning's, but it lacks NugRunning's multi-target tracking, so the two addons complement each other.

BigFoot NugRunning_How to turn off NugRunning - CSDN Blog