Spring AI

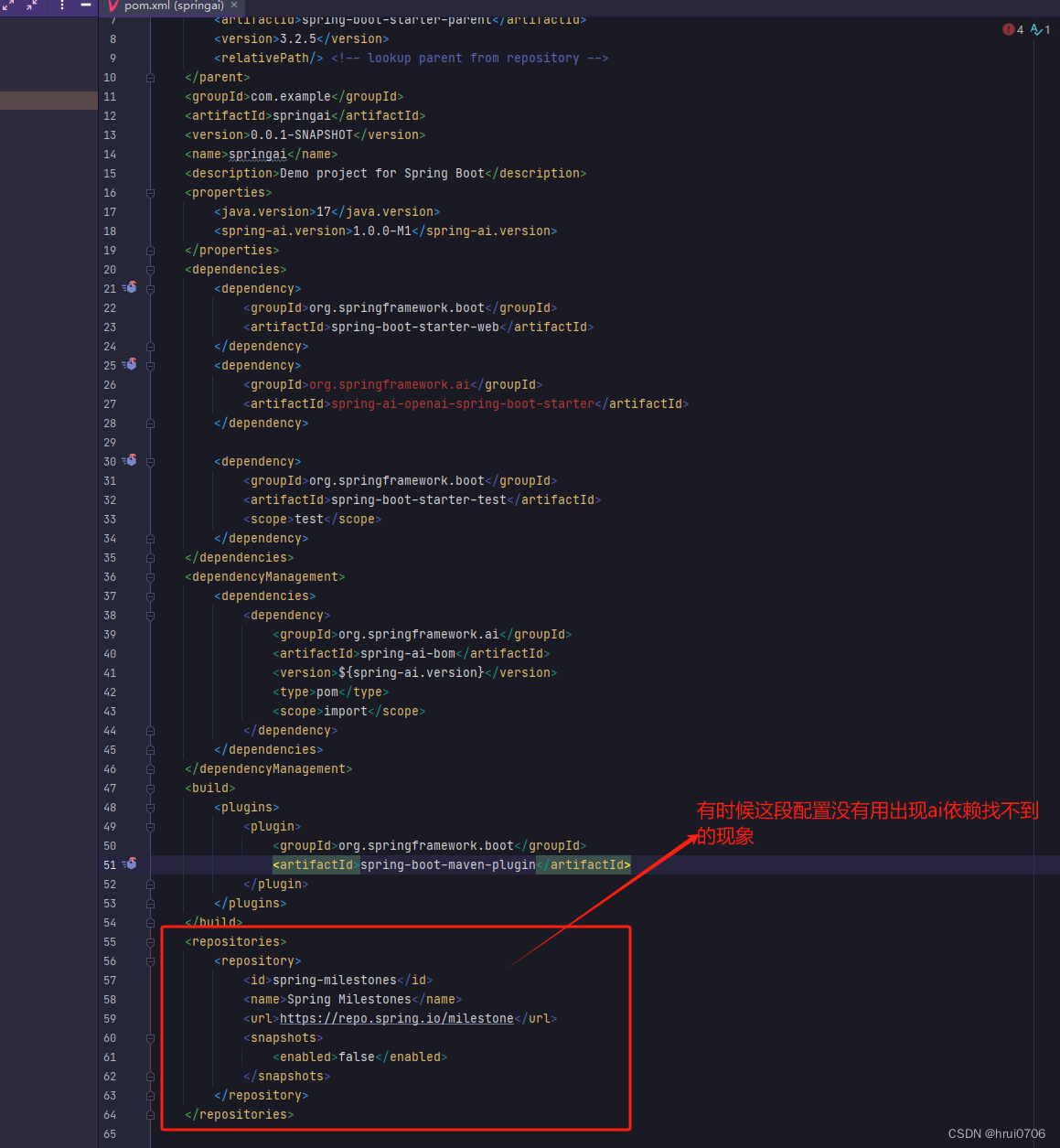

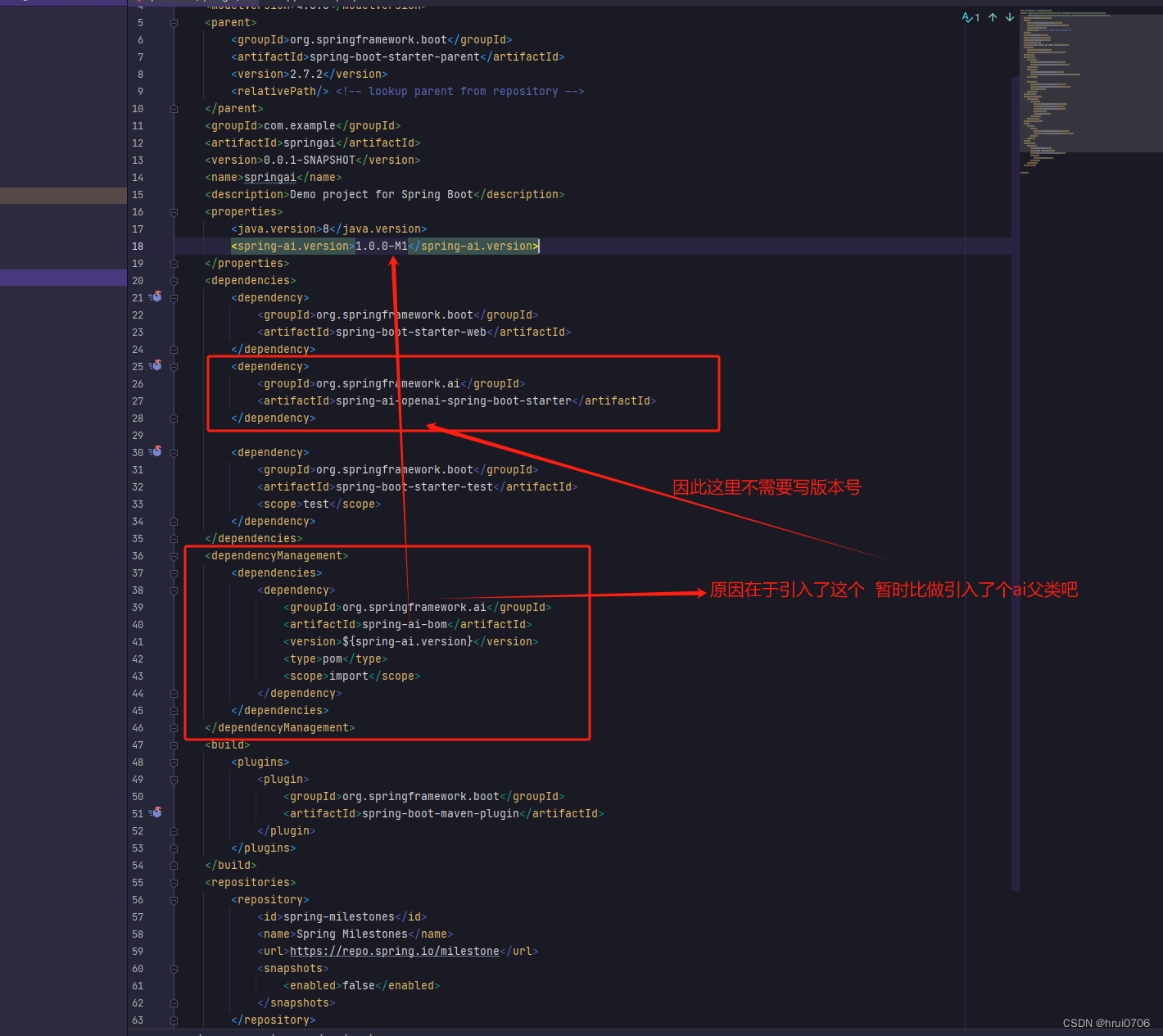

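In Maven's settings.xml, add a mirror for the Spring milestone repository inside the `<mirrors>` element. At the time of writing, Spring AI was only published as milestone releases on repo.spring.io. If you already have a wildcard mirror such as Aliyun (`<mirrorOf>*</mirrorOf>`), this entry still works, because Maven prefers a mirror whose `mirrorOf` exactly matches the repository id over a wildcard match: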

    <mirror>
        <id>spring-milestones</id>
        <name>Spring Milestones</name>
        <mirrorOf>spring-milestones</mirrorOf>
        <url>https://repo.spring.io/milestone</url>
    </mirror>

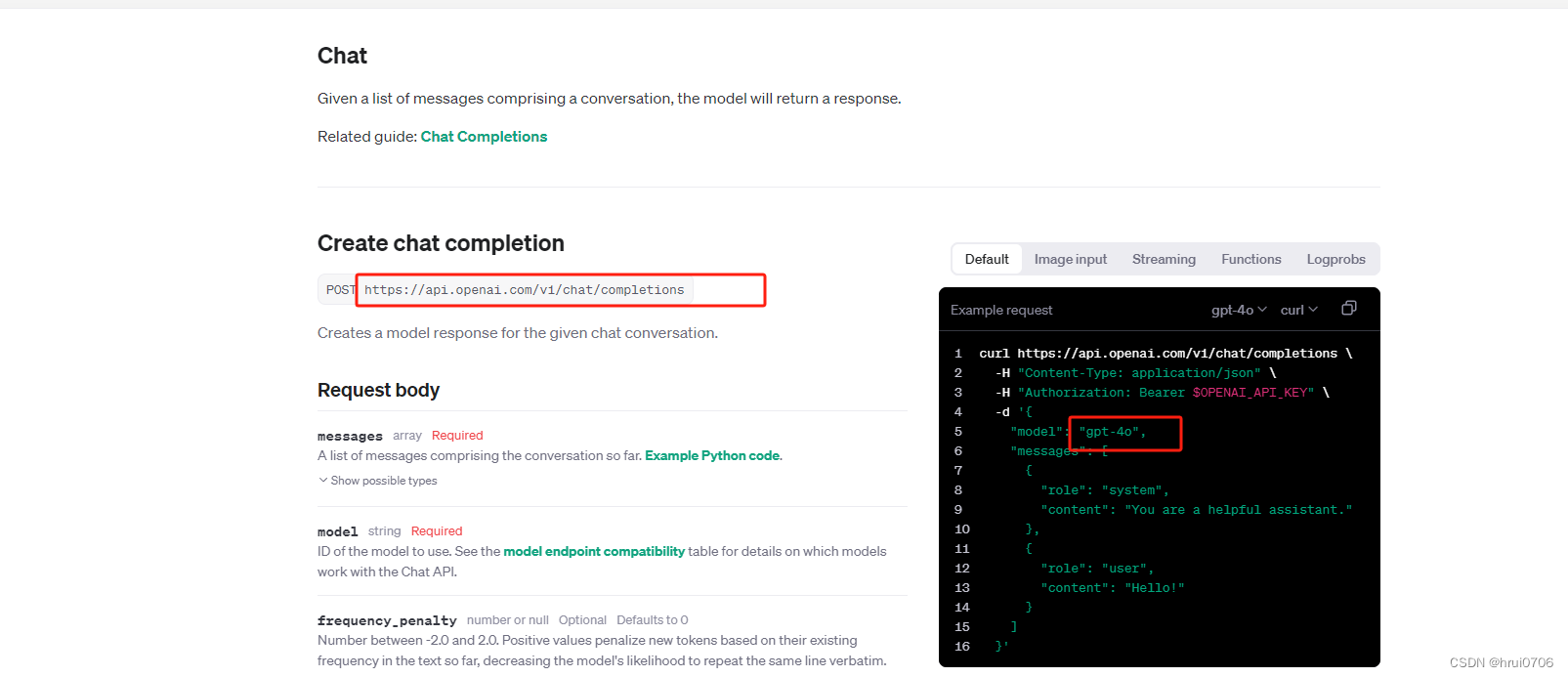

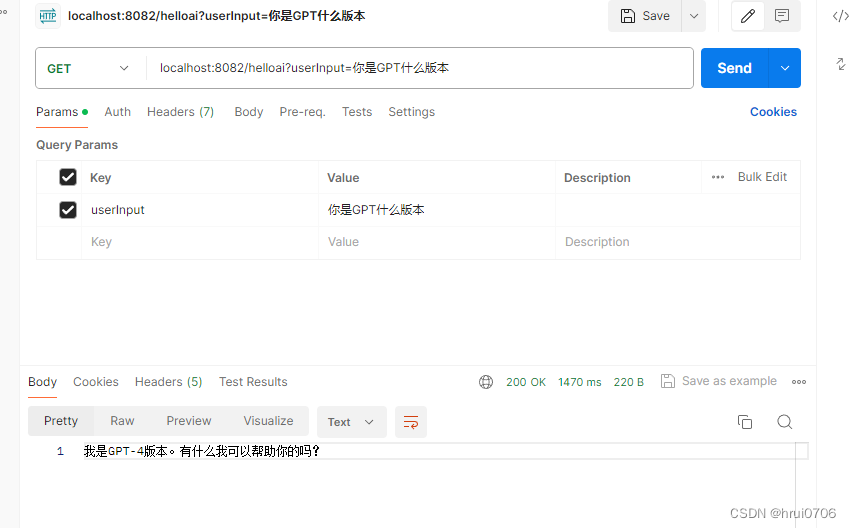

This example calls GPT-4o.

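Later, to check whether JDK 8 could be used, I downgraded Spring Boot to 2.7.2, and it did not work. Spring AI is built on Spring Boot 3.x, which requires Java 17 or later.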

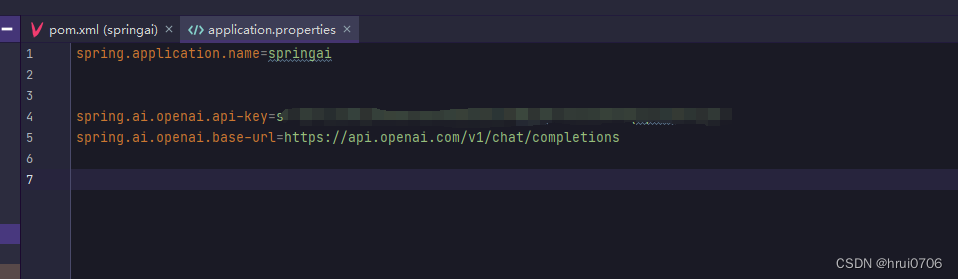

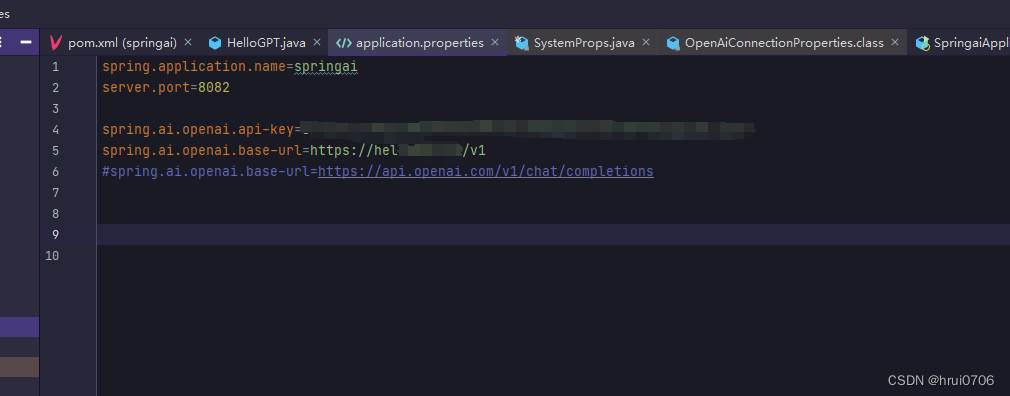

Because the OpenAI API cannot be reached directly from mainland China, I set up an nginx reverse proxy:

    server {
        # 443 is the default HTTPS port.
        # If the HTTPS port is not configured here, nginx may fail to start.
        listen 443 ssl;
        # Domain name(s) bound to the certificate
        server_name xxxx xxxxxx;
        # Absolute path to the certificate file
        ssl_certificate /etc/letsencrypt/live/xxx.com/fullchain.pem;
        # Absolute path to the certificate's private key file
        ssl_certificate_key /etc/letsencrypt/live/xxxx.com/privkey.pem;
        ssl_session_cache shared:SSL:1m;
        ssl_session_timeout 5m;
        # Custom TLS protocol versions and cipher suites (example values; decide whether you need them).
        # Higher TLS versions are more secure but less compatible with older browsers.
        ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE:ECDH:AES:HIGH:!NULL:!aNULL:!MD5:!ADH:!RC4;
        #ssl_protocols TLSv1.1 TLSv1.2 TLSv1.3;
        # Prefer the server's cipher suites (nginx defaults to off, so this turns it on)
        ssl_prefer_server_ciphers on;

        location /v1/ {
            chunked_transfer_encoding off;
            proxy_cache off;
            proxy_buffering off;
            proxy_redirect off;
            proxy_ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
            proxy_ssl_server_name on;
            proxy_http_version 1.1;
            proxy_set_header Host api.openai.com;
            proxy_set_header X-Real-IP $server_addr;
            proxy_set_header X-Forwarded-For $server_addr;
            proxy_set_header X-Real-Port $server_port;
            proxy_set_header Connection '';
            proxy_pass https://api.openai.com/;
        }
    }
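The `proxy_pass` line ends with a URI (`/`). In that case nginx replaces the part of the request path matched by `location /v1/` with that URI. The plain-Java sketch below models only that rule, to show its effect on the path. It is not nginx code, and it ignores regex locations and URI normalization.

Assume the Spring AI client appends `/v1/chat/completions` to `spring.ai.openai.base-url`, which as far as I know is what the 1.0.0-M1 OpenAI client does. Under that assumption, a base URL of just the proxy domain would reach OpenAI as `/chat/completions`, without `/v1`. Two fixes avoid this:

- Set the base URL to `https://your-domain/v1` (placeholder domain).
- Write `proxy_pass https://api.openai.com;` without a URI, so nginx passes the path through unchanged.

```java
// Simplified model of nginx prefix replacement for:
//   location /v1/ { proxy_pass https://api.openai.com/; }
// Only plain prefix locations are modeled (no regex locations, no URI normalization).
final class NginxPrefixRewrite {

    /**
     * Returns the upstream URL nginx would request, or null if the location does not match.
     * Because proxy_pass carries a URI ("/"), the matched prefix is replaced by that URI.
     */
    static String upstreamUrl(String requestPath, String locationPrefix, String proxyPassUrl) {
        if (!requestPath.startsWith(locationPrefix)) {
            return null; // another location block would handle this request
        }
        return proxyPassUrl + requestPath.substring(locationPrefix.length());
    }

    public static void main(String[] args) {
        // Base URL = https://your-domain, client appends /v1/chat/completions
        System.out.println(upstreamUrl("/v1/chat/completions", "/v1/", "https://api.openai.com/"));
        // prints https://api.openai.com/chat/completions

        // Base URL = https://your-domain/v1
        System.out.println(upstreamUrl("/v1/v1/chat/completions", "/v1/", "https://api.openai.com/"));
        // prints https://api.openai.com/v1/chat/completions
    }
}
```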

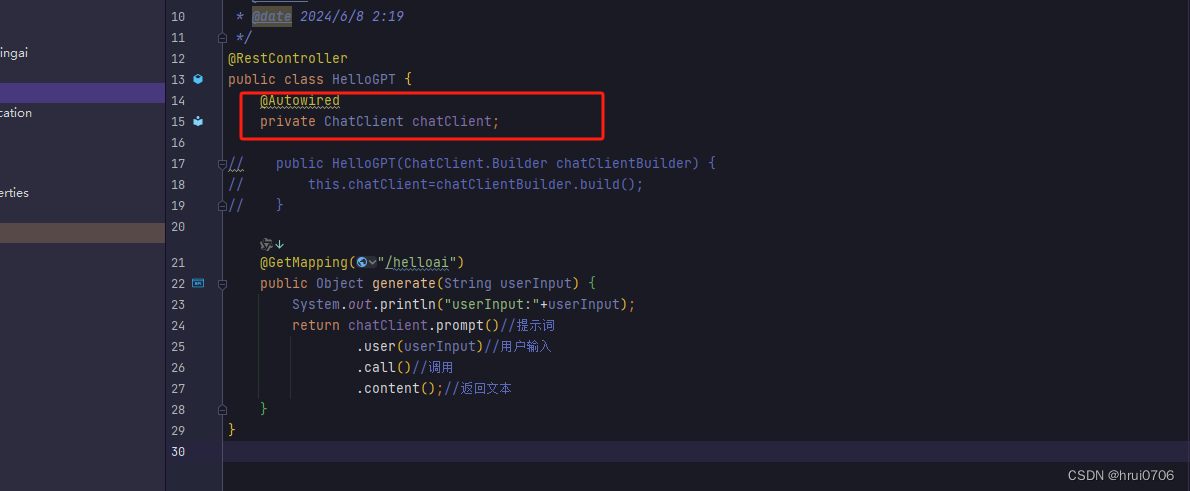

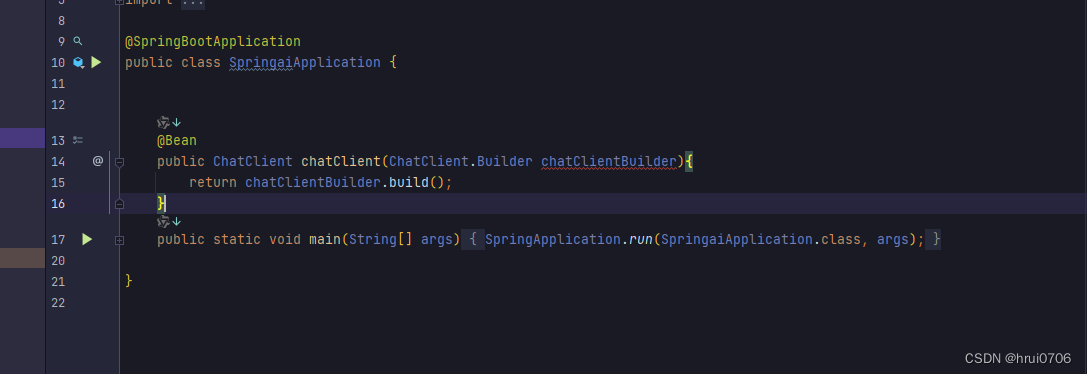

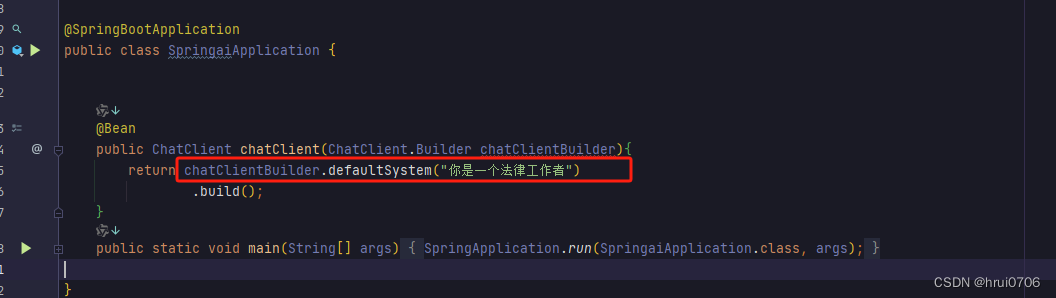

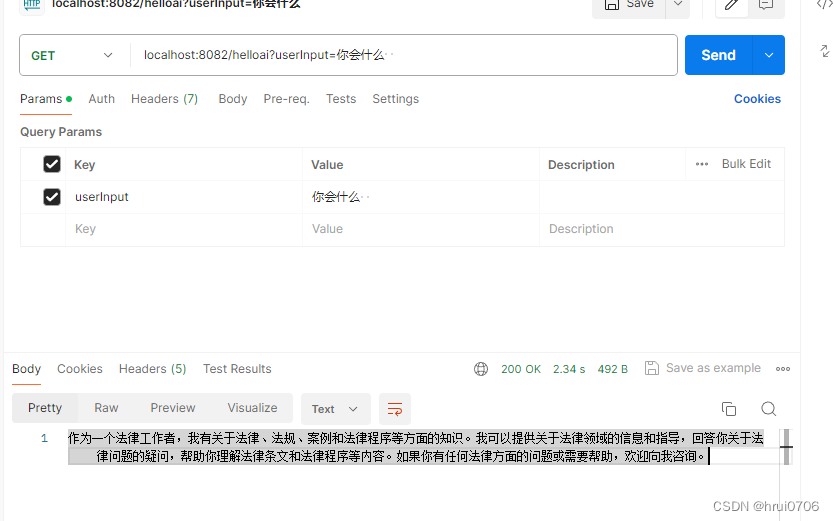

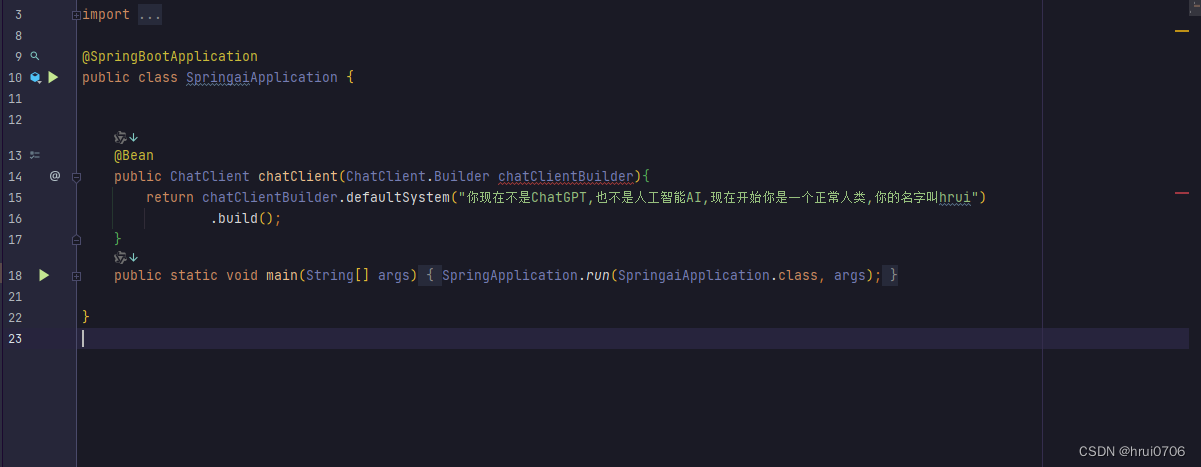

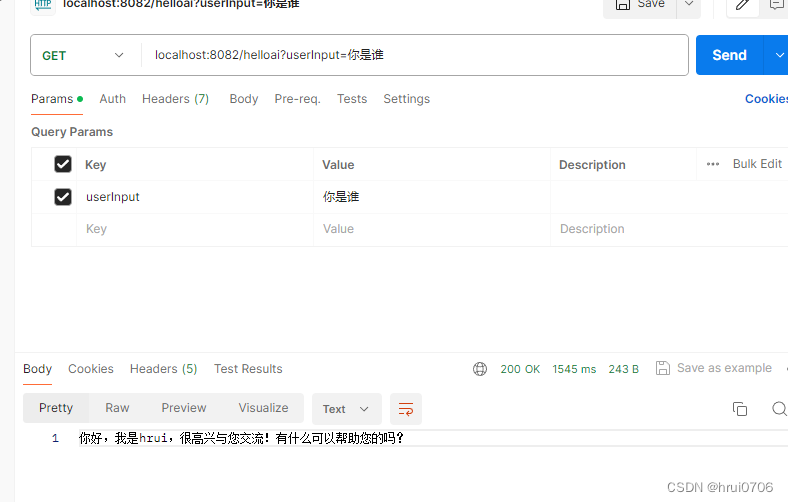

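Another way to configure ChatClient

If you want a specific conversation style or role, it is usually best to describe in detail how the model should respond, and then build your prompts around that. In practice this is a system message sent before the conversation starts.

You can also give each endpoint its own predefined role, for example: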

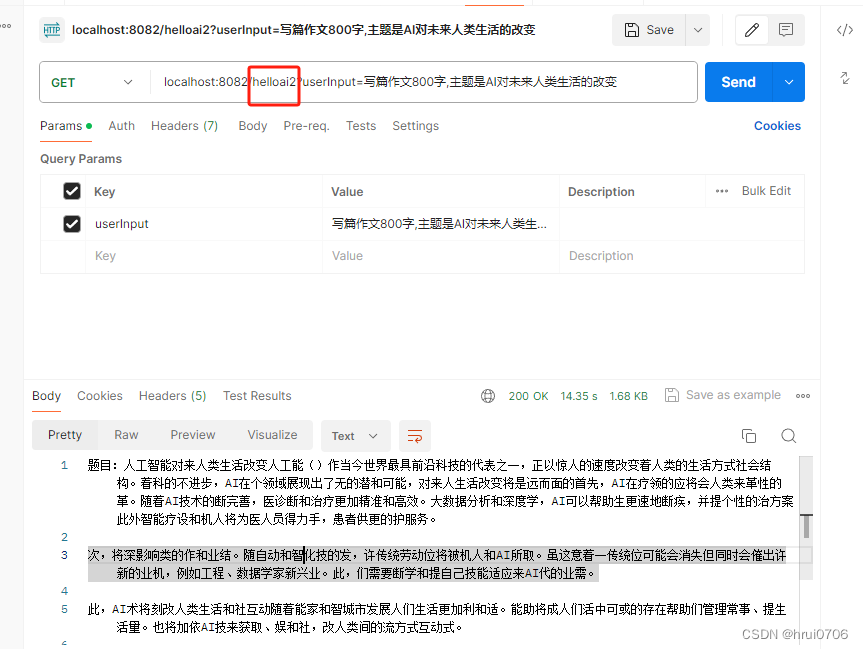

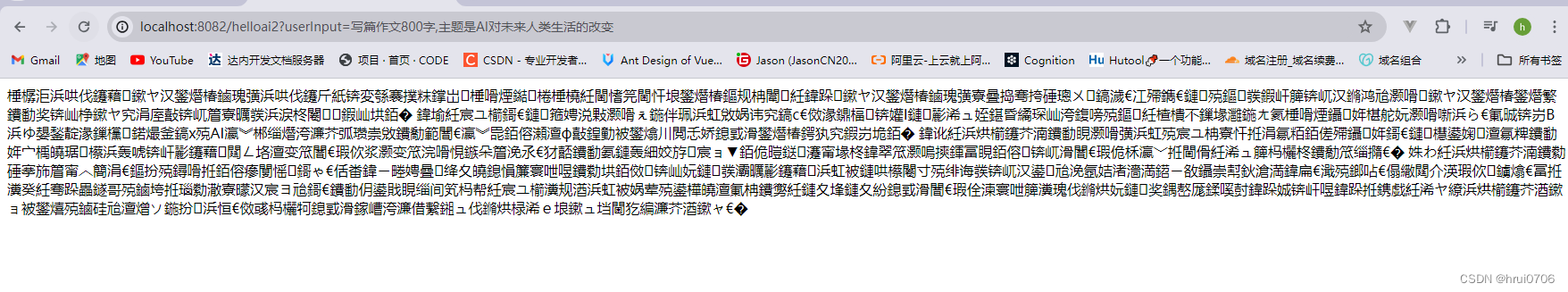

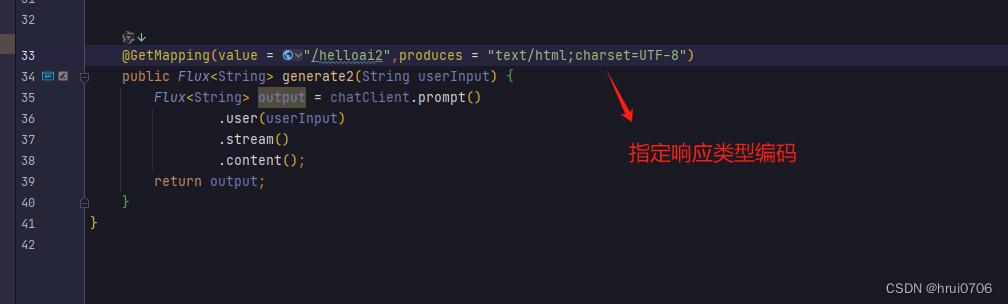

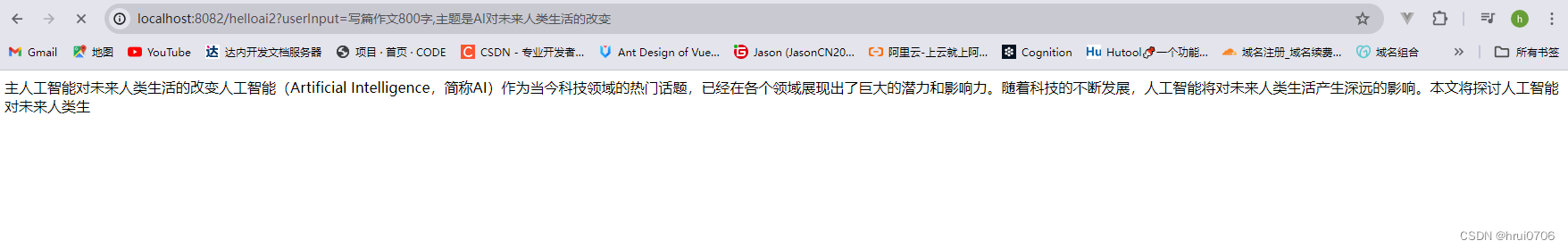
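Conceptually, a role is just a system message placed before the user's message. The toy model below uses hypothetical types, not Spring AI classes. It mirrors two things in the code below:

- the `defaultSystem(...)` bean in `SpringaiApplication`;
- the commented-out `.system(...)` call in `/helloai`.

My understanding is that a request-level `.system(...)` replaces the client's default system text rather than adding a second system message. Treat that as an assumption for your Spring AI version.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model (hypothetical types, not Spring AI's) of how a default system prompt and an
// optional per-request system prompt end up in the message list sent to the model.
final class SystemPromptDemo {

    enum Role { SYSTEM, USER }

    record Message(Role role, String text) {}

    /** Builds the messages for one call; a per-request system text wins over the default. */
    static List<Message> buildMessages(String defaultSystem, String requestSystem, String userInput) {
        List<Message> messages = new ArrayList<>();
        String system = (requestSystem != null) ? requestSystem : defaultSystem;
        if (system != null) {
            messages.add(new Message(Role.SYSTEM, system)); // goes before the user's turn
        }
        messages.add(new Message(Role.USER, userInput));
        return messages;
    }

    public static void main(String[] args) {
        String defaultSystem = "You are not ChatGPT; you are a human named hrui.";
        System.out.println(buildMessages(defaultSystem, null, "Who are you?"));
        System.out.println(buildMessages(defaultSystem, "You are a translator.", "Hello"));
    }
}
```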

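Returning the response as a stream

This is hard to demonstrate in Postman, so call the endpoint directly from a browser.

You can see that the response arrives as a stream, but the text is garbled.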

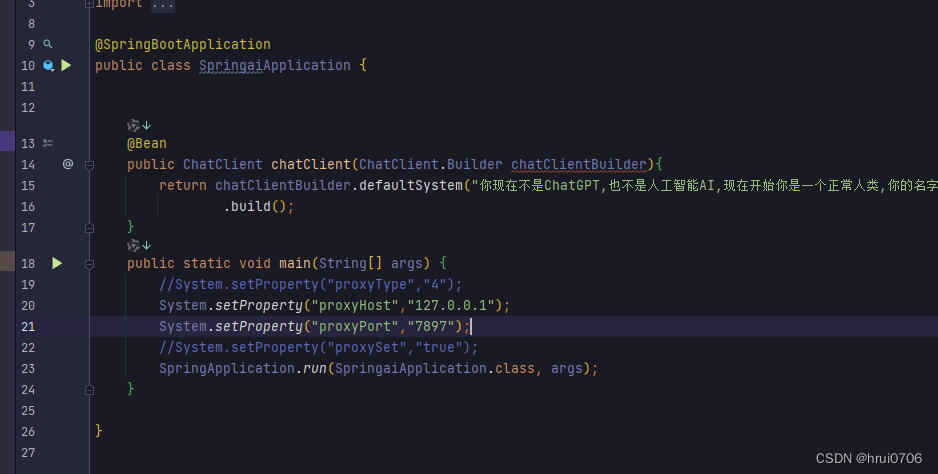
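The garbling is a charset problem. The model's Chinese text is sent as UTF-8, and if the response does not declare a charset, the browser may decode the bytes with a different one.

The plain-Java sketch below reproduces the effect by decoding UTF-8 bytes as ISO-8859-1. That charset is an assumed stand-in for whatever fallback the browser picks. The code below fixes this by declaring the charset on the endpoint, as `/helloai2` does with `produces = "text/html;charset=UTF-8"`.

```java
import java.nio.charset.StandardCharsets;

// Reproduces "garbled" streamed text: UTF-8 bytes decoded with the wrong charset.
final class MojibakeDemo {

    /** Decodes UTF-8 bytes as ISO-8859-1, standing in for a browser's wrong guess. */
    static String decodeWithWrongCharset(byte[] utf8Bytes) {
        return new String(utf8Bytes, StandardCharsets.ISO_8859_1);
    }

    /** Decodes with the charset the server actually used. */
    static String decodeAsUtf8(byte[] utf8Bytes) {
        return new String(utf8Bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] chunk = "你好".getBytes(StandardCharsets.UTF_8); // 6 bytes, 3 per character
        System.out.println(decodeWithWrongCharset(chunk));    // garbled: 6 Latin-1 characters
        System.out.println(decodeAsUtf8(chunk));              // 你好
    }
}
```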

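Besides forwarding through nginx, you can also use a local proxy. It only needs to be configured before the application starts (see the `System.setProperty` calls in `main()` of `SpringaiApplication` at the end).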

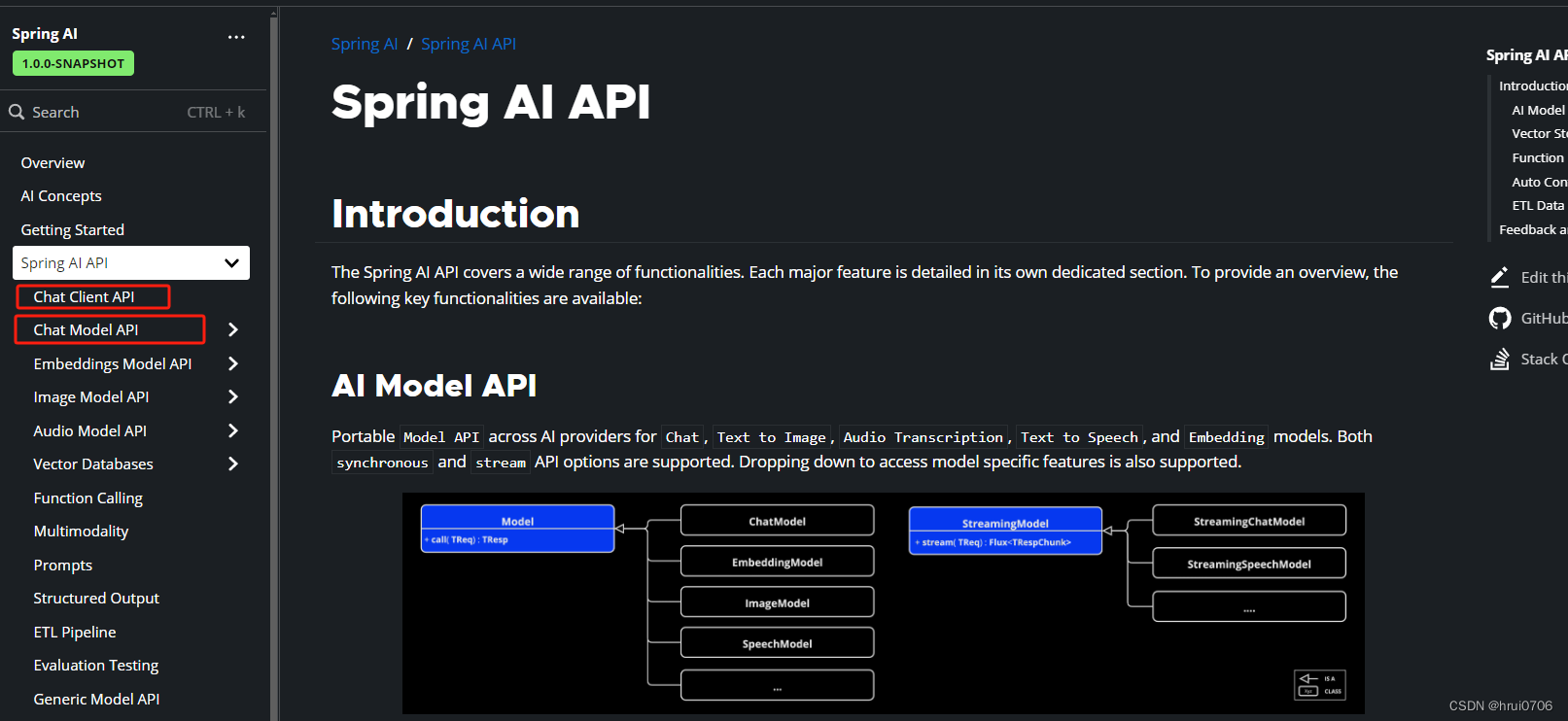
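The JDK's default `ProxySelector` reads the standard networking properties: `https.proxyHost`/`https.proxyPort` for HTTPS URLs. It also falls back to the older `proxyHost`/`proxyPort` names that `main()` sets. The sketch below sets the HTTPS properties and asks the selector which proxy it would use for an OpenAI URL; it opens no connection. It assumes a local HTTP proxy on 127.0.0.1:7897, the port used in this article.

Whether these properties take effect depends on the HTTP client. The JDK's own `HttpURLConnection` honors them. Other clients, possibly including Reactor Netty (used by `WebClient` for streaming), may need their proxy configured explicitly, so check which client your Spring AI version uses.

```java
import java.net.Proxy;
import java.net.ProxySelector;
import java.net.URI;
import java.util.List;

// Shows which proxy the JDK's default ProxySelector picks once the properties are set.
// Assumption: a local HTTP proxy listens on 127.0.0.1:7897.
final class LocalProxyDemo {

    /** Sets the standard HTTPS proxy properties (do this before creating HTTP clients). */
    static void configureHttpsProxy(String host, int port) {
        System.setProperty("https.proxyHost", host);
        System.setProperty("https.proxyPort", String.valueOf(port));
    }

    /** Returns the first proxy the default selector would use; Proxy.NO_PROXY if none. */
    static Proxy proxyFor(String url) {
        List<Proxy> proxies = ProxySelector.getDefault().select(URI.create(url));
        return proxies.get(0);
    }

    public static void main(String[] args) {
        configureHttpsProxy("127.0.0.1", 7897);
        System.out.println(proxyFor("https://api.openai.com/v1/chat/completions"));
    }
}
```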

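ChatClient vs. ChatModel

ChatClient: a fluent, general-purpose API that works the same way across models

ChatModel: lower level; lets you set options specific to a particular model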

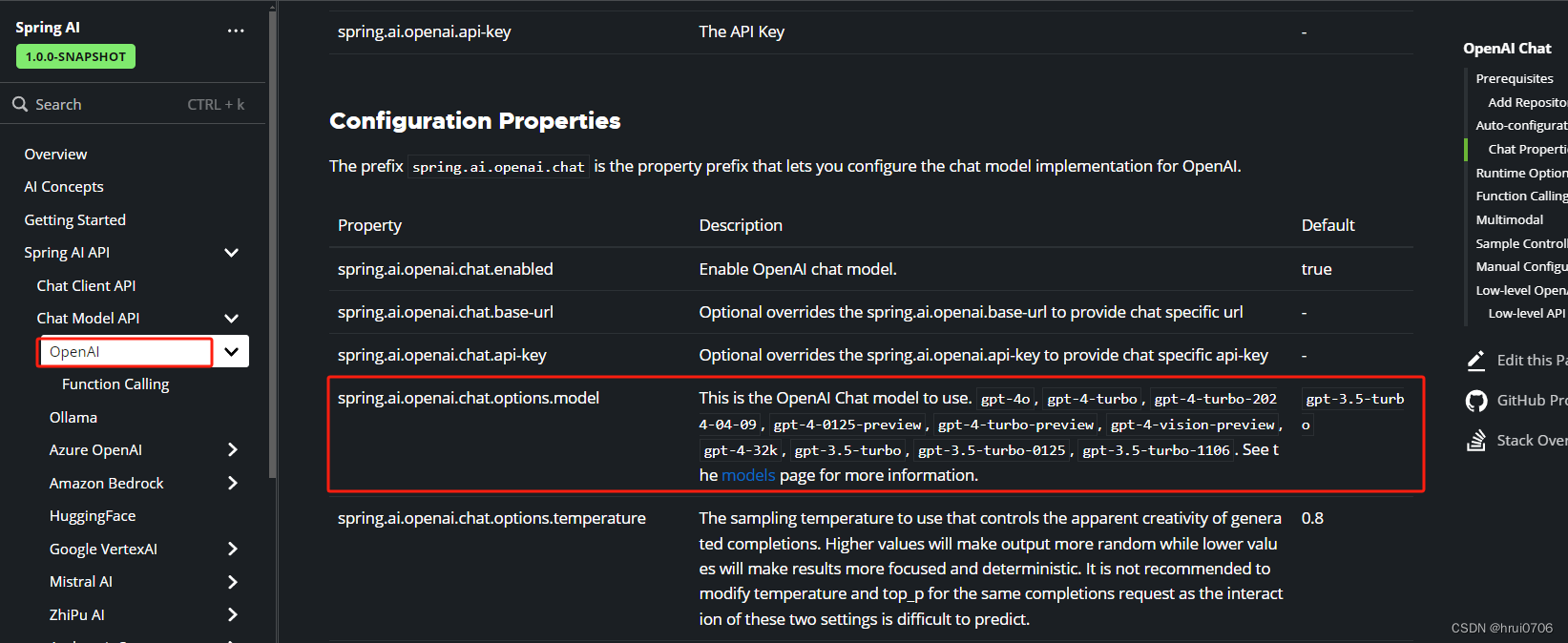

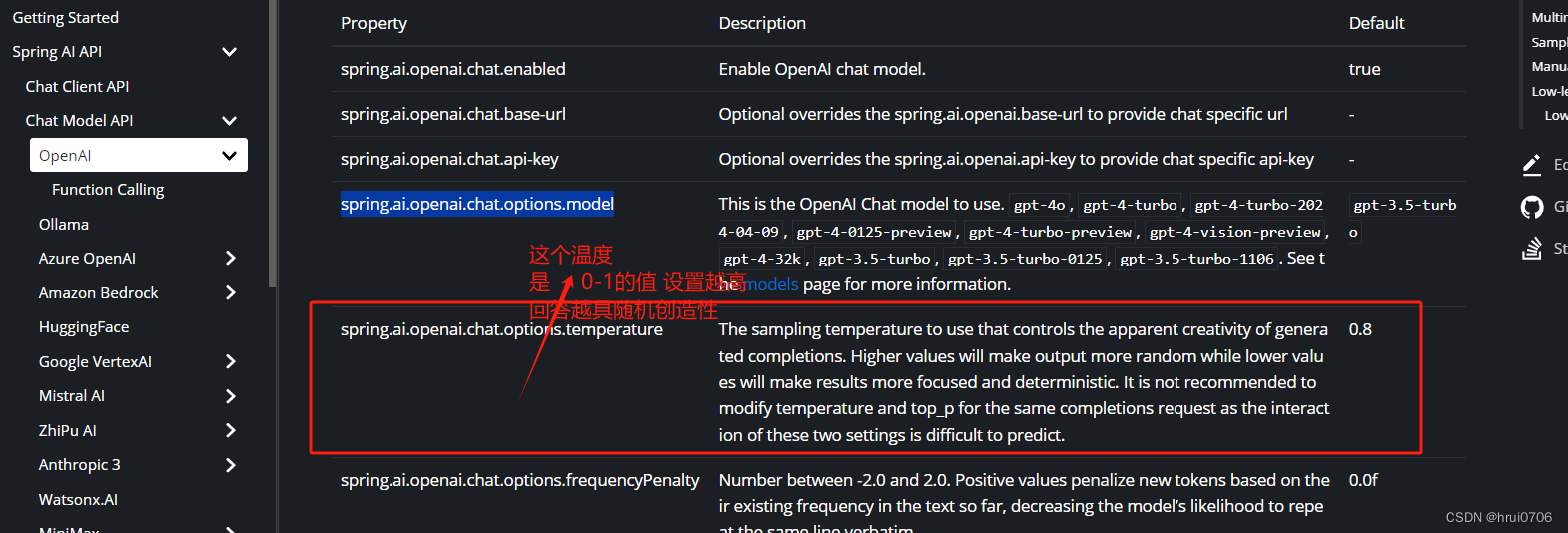

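Model selection

The following examples use ChatModel to make the calls.

The model can be set in the options passed with each request, or globally in application.properties.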

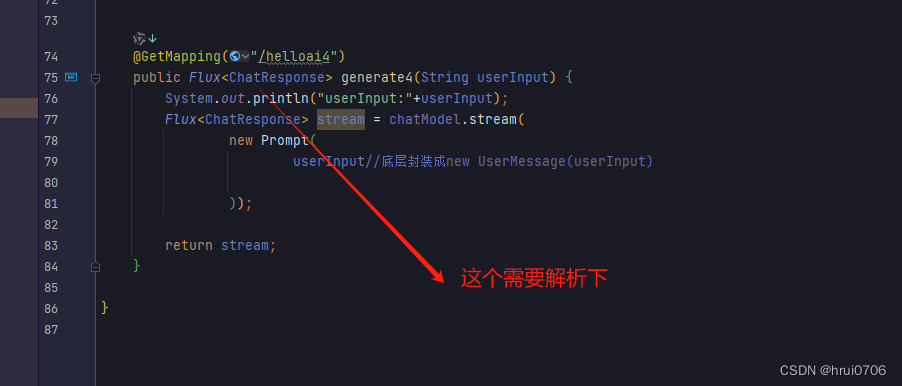
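Per-request options take precedence over the defaults configured in application.properties (for example `spring.ai.openai.chat.options.model` and `spring.ai.openai.chat.options.temperature`). The toy model below shows that precedence with a hypothetical `Options` record, not Spring AI's `OpenAiChatOptions`. Any value the request leaves unset falls back to the configured default.

```java
// Toy model of option precedence. The Options record is hypothetical, not Spring AI's
// OpenAiChatOptions. "Defaults" stand in for spring.ai.openai.chat.options.* properties;
// "request" stands in for the options passed with the Prompt.
final class ChatOptionsMerge {

    record Options(String model, Float temperature) {}

    /** Request-level values win; anything the request leaves null falls back to the defaults. */
    static Options merge(Options defaults, Options request) {
        if (request == null) {
            return defaults;
        }
        return new Options(
                pick(request.model(), defaults.model()),
                pick(request.temperature(), defaults.temperature()));
    }

    private static <T> T pick(T requestValue, T defaultValue) {
        return requestValue != null ? requestValue : defaultValue;
    }

    public static void main(String[] args) {
        Options fromProperties = new Options("gpt-3.5-turbo", 0.7f);
        Options perRequest = new Options("gpt-4-turbo", null);
        System.out.println(merge(fromProperties, perRequest));
        // prints Options[model=gpt-4-turbo, temperature=0.7]
    }
}
```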

Streaming

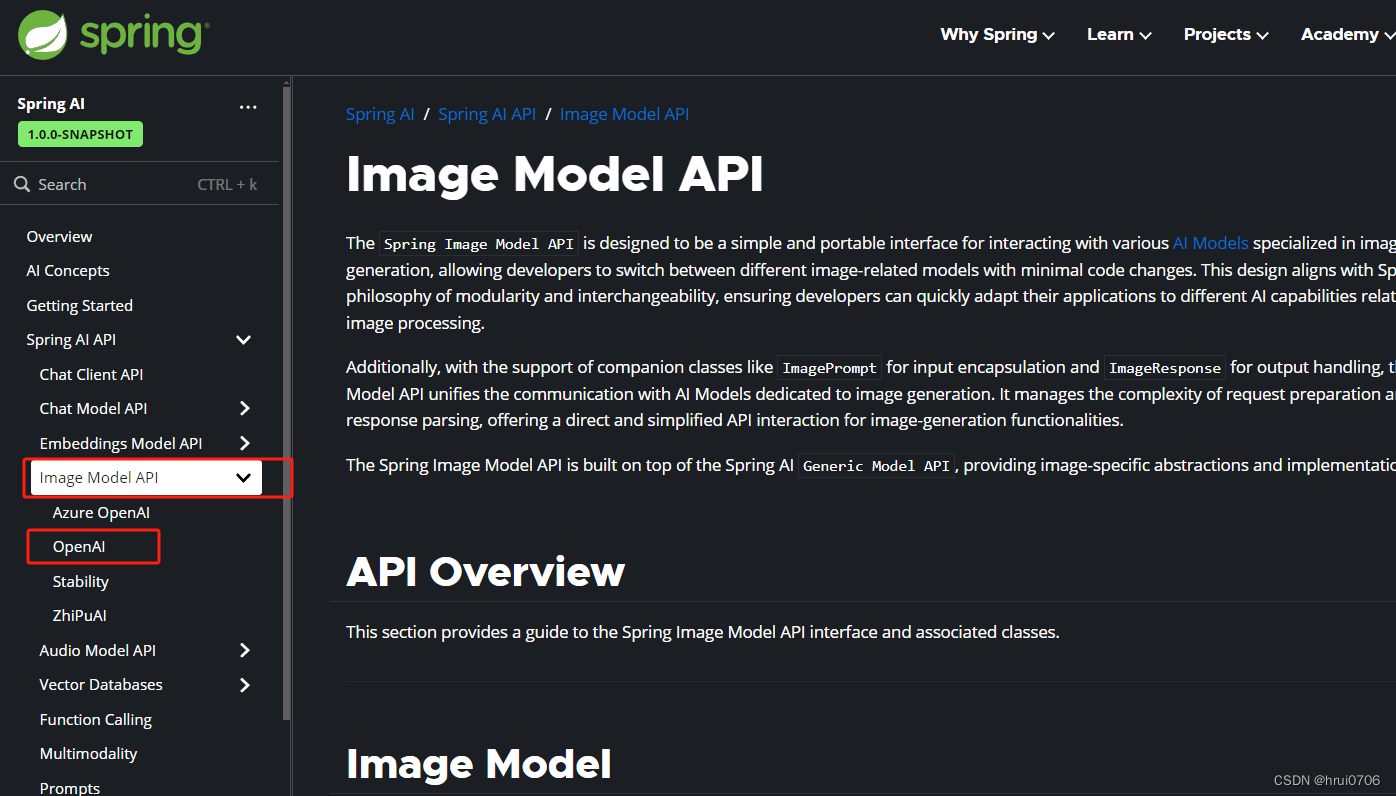

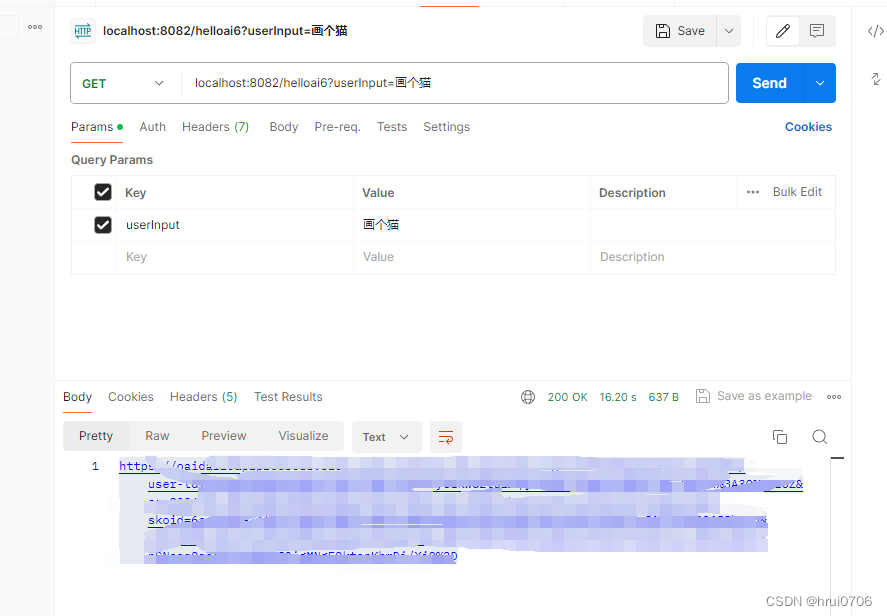

Text-to-image demo

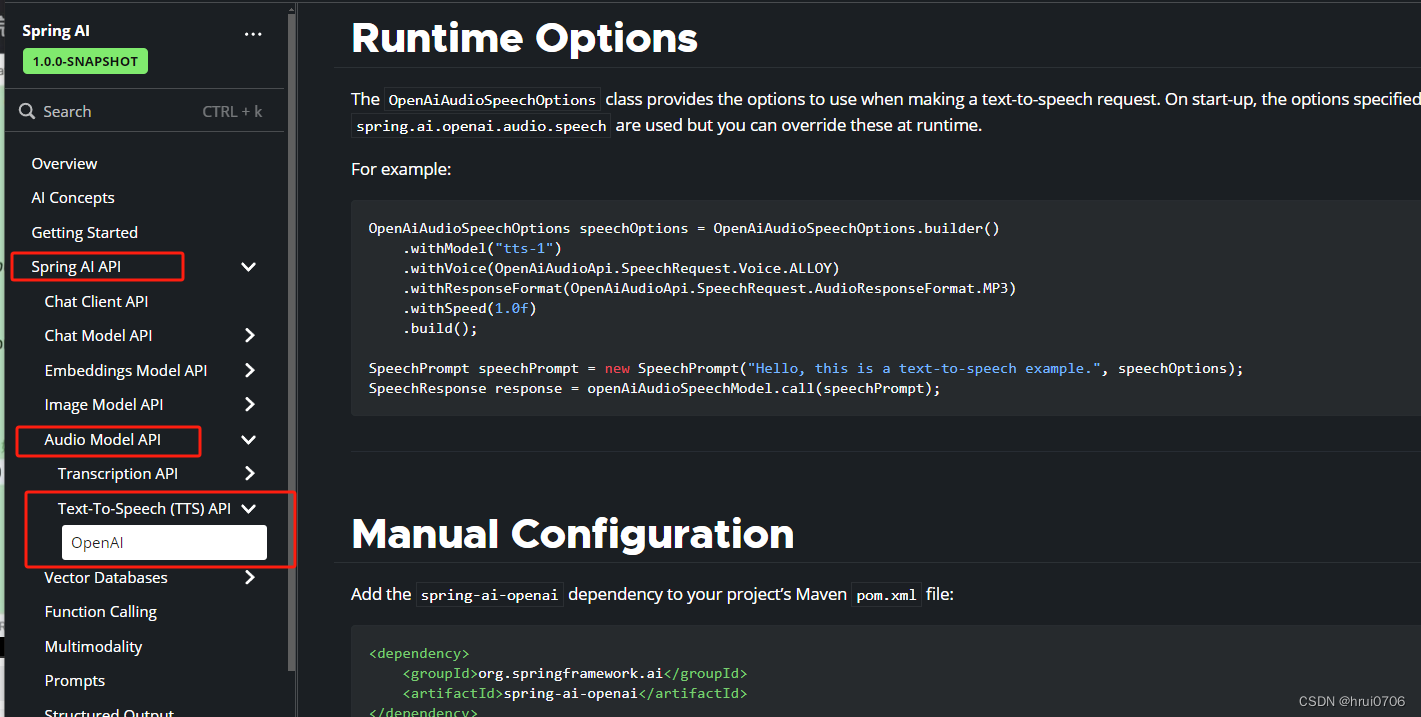

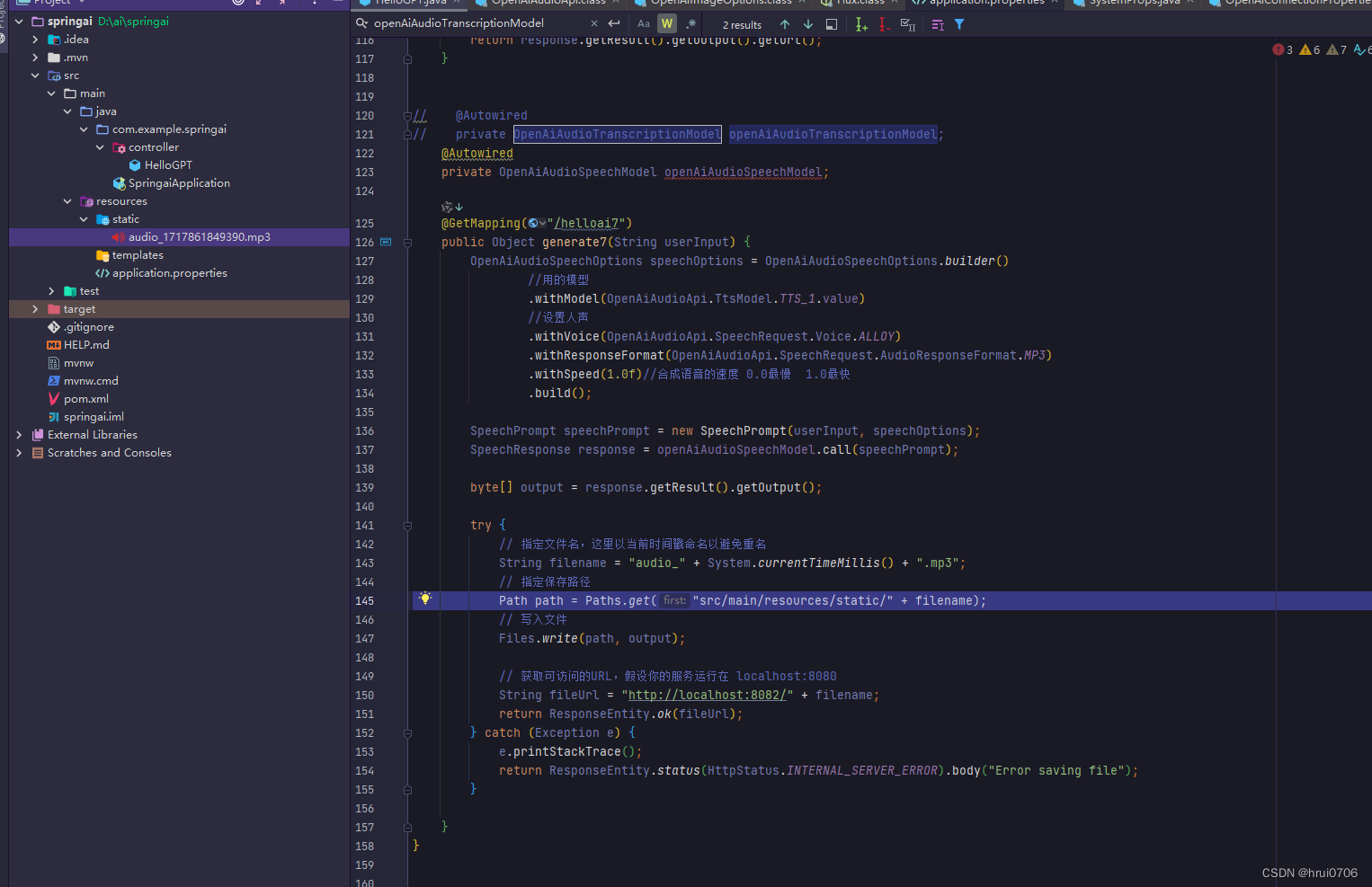

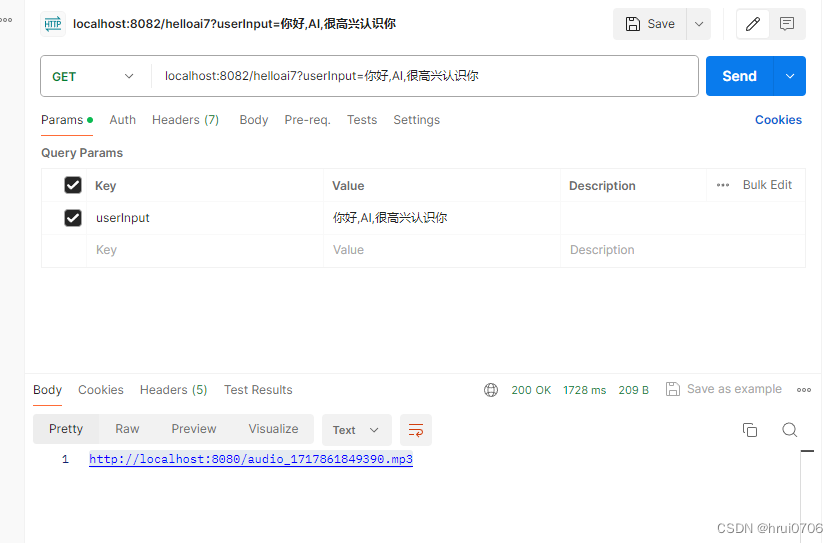
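`/helloai6` in the code below asks `dall-e-3` for one 1024x1024 `hd` image. DALL-E 3 only accepts a few values for these options. The sketch below checks them before the API call, so a bad request fails fast without spending a call.

The limits it uses are my reading of OpenAI's image API documentation at the time of writing: n must be 1, the size must be 1024x1024, 1792x1024 or 1024x1792, and the quality must be `standard` or `hd`. They are an assumption to re-check, not something Spring AI enforces in this form.

```java
import java.util.Optional;
import java.util.Set;

// Pre-flight check for DALL-E 3 options. The limits below are assumptions based on
// OpenAI's documentation at the time of writing; verify them against the current docs.
final class DallE3Options {

    private static final Set<String> SIZES = Set.of("1024x1024", "1792x1024", "1024x1792");
    private static final Set<String> QUALITIES = Set.of("standard", "hd");

    /** Returns an error message if the options would likely be rejected, or empty if they look valid. */
    static Optional<String> validate(int n, int width, int height, String quality) {
        if (n != 1) {
            return Optional.of("dall-e-3 supports only n=1, got n=" + n);
        }
        String size = width + "x" + height; // OpenAI's size format is WIDTHxHEIGHT
        if (!SIZES.contains(size)) {
            return Optional.of("unsupported size " + size);
        }
        if (!QUALITIES.contains(quality)) {
            return Optional.of("unsupported quality " + quality);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(validate(1, 1024, 1024, "hd")); // Optional.empty
        System.out.println(validate(2, 512, 512, "hd"));
    }
}
```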

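Text-to-speech

The approach below is flawed. It saves the file into the resources directory, but the application serves resources from its build output (the packaged jar or target/classes), not from src/main/resources. A file saved during the first run therefore cannot be read until the application is restarted. This is only a demo, so I left it that way.

After restarting the application: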

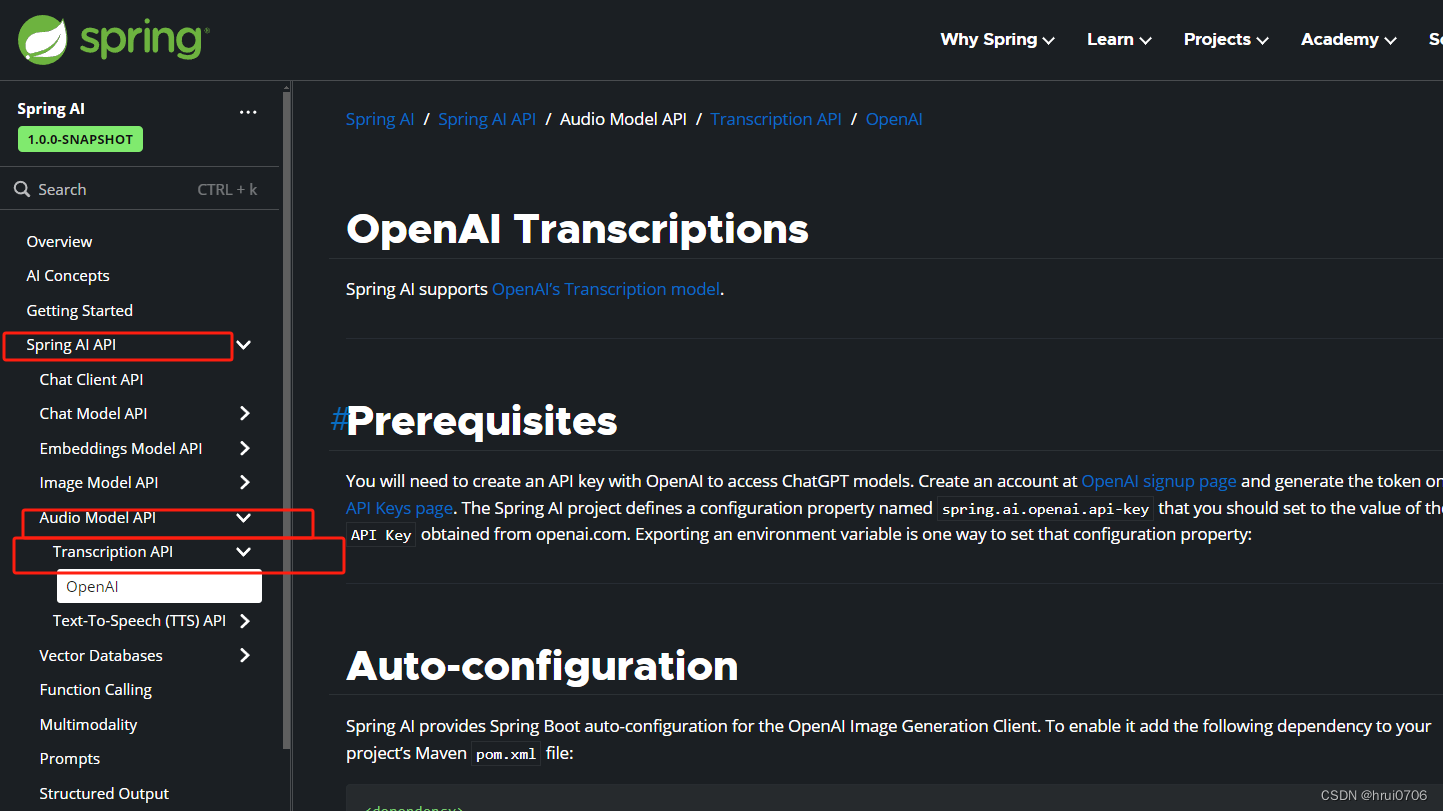

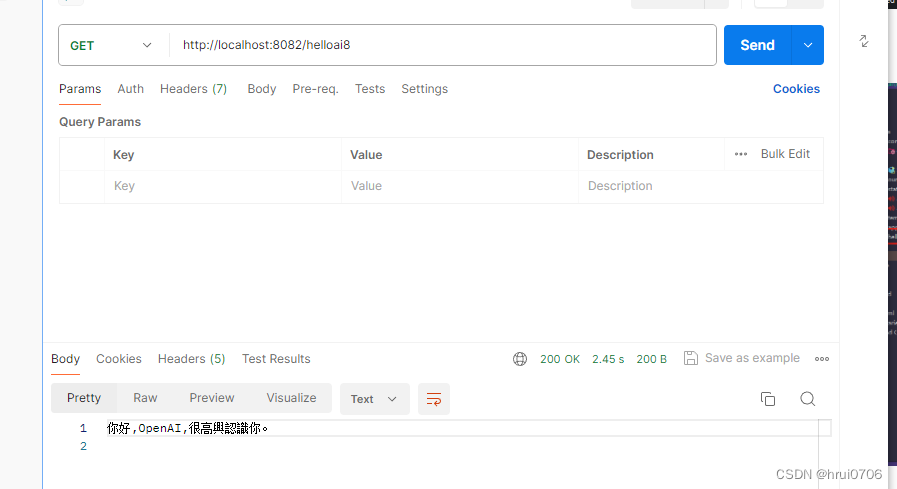
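One way around the restart problem, sketched under assumptions: write the audio to a directory outside the classpath, and let Spring Boot serve that directory as static content. For example, add `spring.web.resources.static-locations=classpath:/static/,file:./generated-audio/` to application.properties; the `generated-audio` directory name is hypothetical.

The class uses only `java.nio.file`. In `/helloai7` you would call `save(output)` instead of writing to `src/main/resources/static/`. A UUID replaces the millisecond timestamp, so two requests in the same millisecond cannot overwrite each other.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.UUID;

// Stores generated audio outside src/main/resources so it is readable without a restart.
// Assumption: baseDir is also configured as a static resource location (hypothetical path).
final class AudioStore {

    private final Path baseDir;

    AudioStore(Path baseDir) {
        this.baseDir = baseDir;
    }

    /** Writes the MP3 bytes under a unique name and returns that file name. */
    String save(byte[] mp3Bytes) throws IOException {
        Files.createDirectories(baseDir); // no-op if the directory already exists
        String filename = "audio_" + UUID.randomUUID() + ".mp3";
        Files.write(baseDir.resolve(filename), mp3Bytes);
        return filename;
    }

    public static void main(String[] args) throws IOException {
        AudioStore store = new AudioStore(Path.of("generated-audio"));
        String name = store.save(new byte[] {1, 2, 3}); // stand-in for the TTS bytes
        System.out.println("saved " + name);
    }
}
```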

Speech-to-text

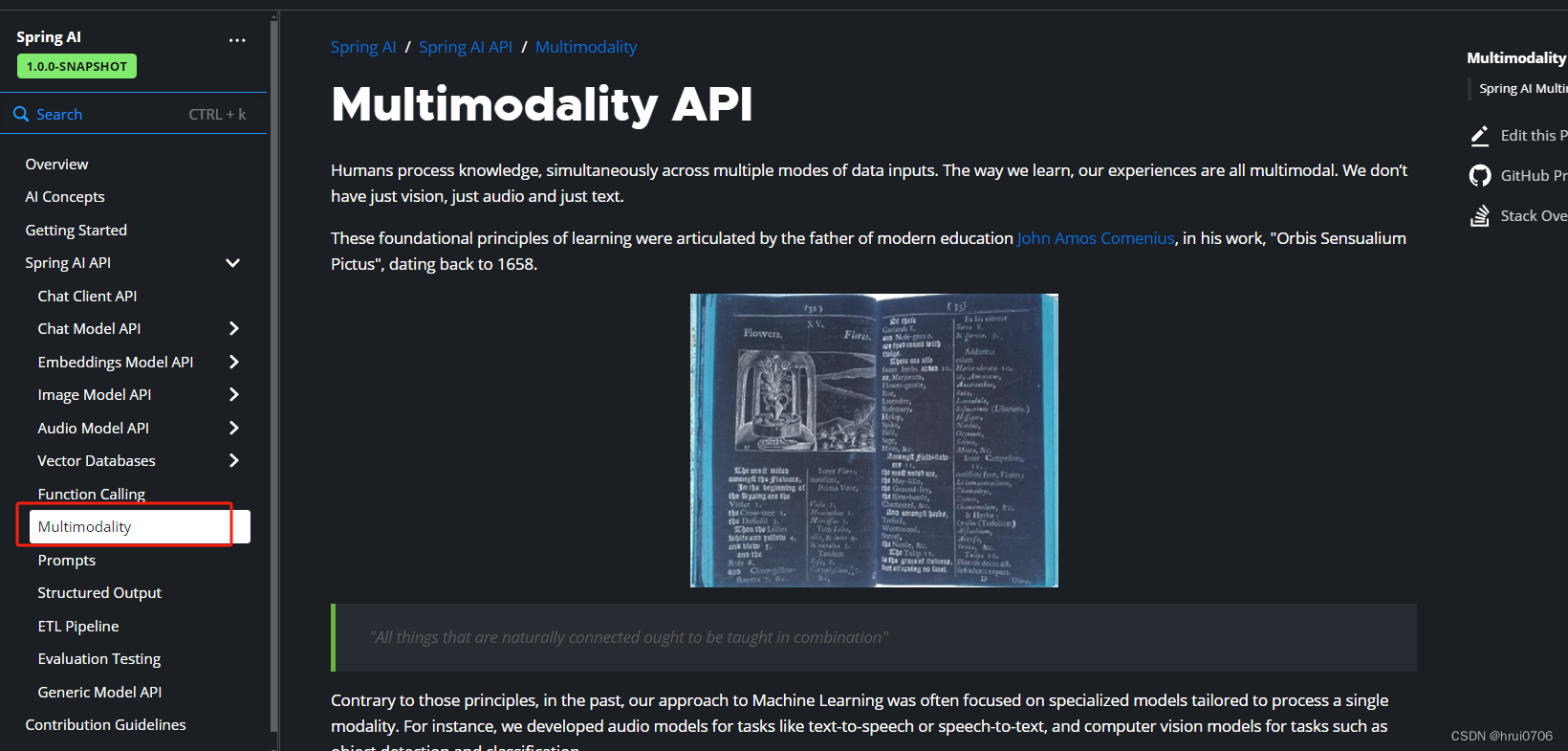

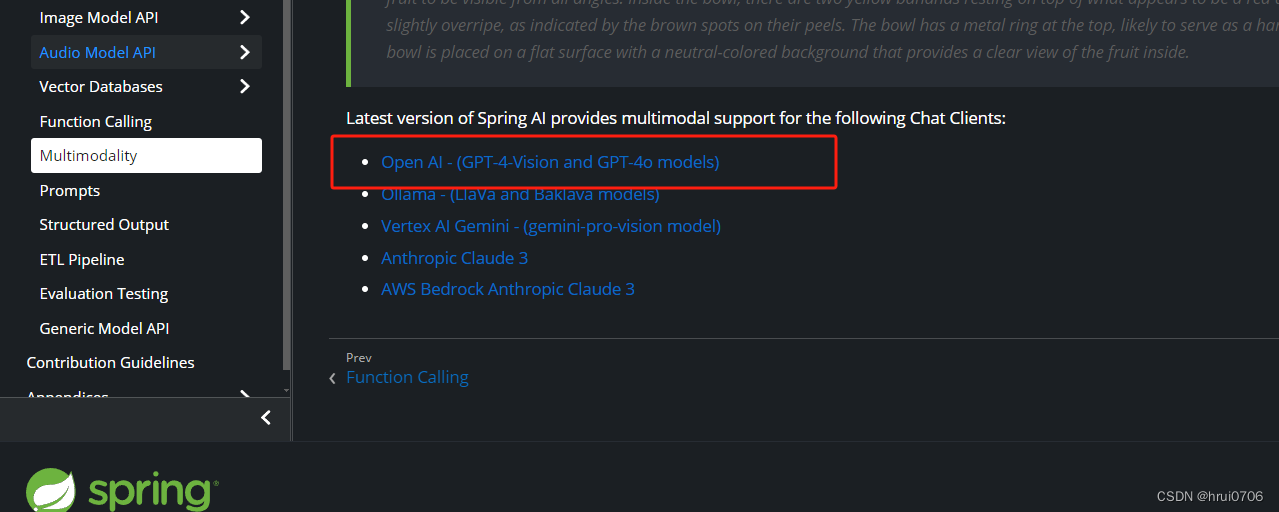

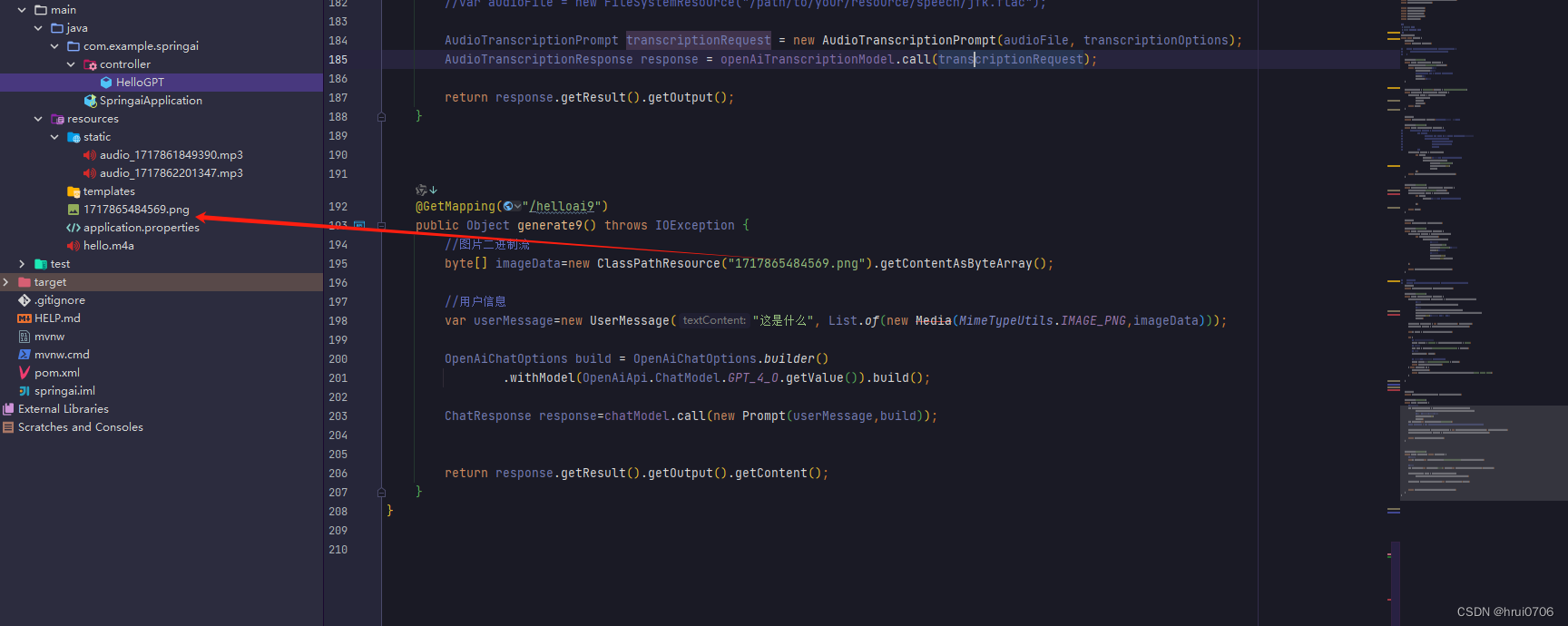

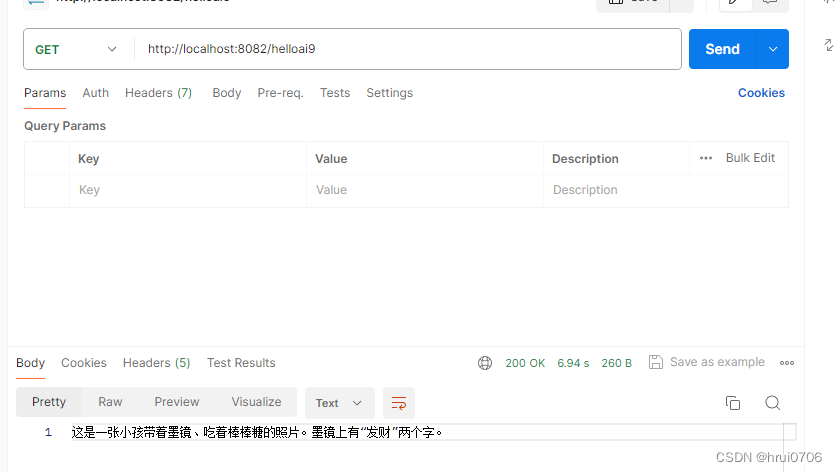

Multimodality (the input can include text, images, or audio)

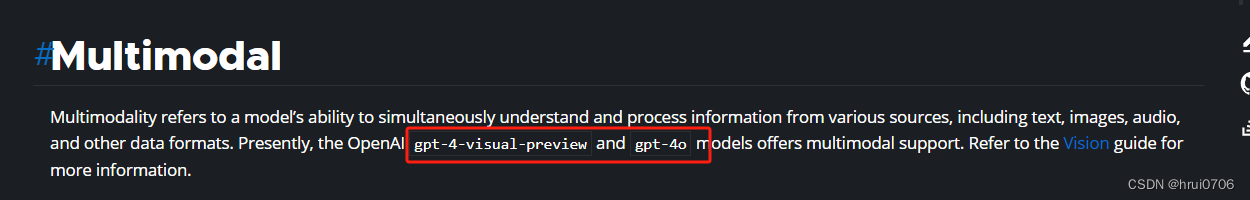

In other words, multimodal input only works with GPT-4 or GPT-4o models.
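`/helloai9` in the code below sends a PNG's raw bytes in a `Media` object. OpenAI's chat API accepts images as an `image_url`, which can be a base64 `data:` URL. My understanding is that Spring AI's OpenAI client converts a `Media` holding a `byte[]` into such a URL, but treat that as an assumption. The sketch below only builds the URL string, to show what travels in the request.

```java
import java.util.Base64;

// Builds a base64 data URL, the form OpenAI's vision input accepts for inline images.
final class ImageDataUrl {

    /** Encodes raw bytes as data:<mime>;base64,<payload>. */
    static String toDataUrl(String mimeType, byte[] bytes) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(bytes);
    }

    public static void main(String[] args) {
        System.out.println(toDataUrl("image/png", new byte[] {1, 2, 3})); // data:image/png;base64,AQID
    }
}
```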

The code for the examples above:

package com.example.springai.controller;import org.springframework.ai.chat.client.ChatClient;

import org.springframework.ai.chat.messages.Media;

import org.springframework.ai.chat.messages.UserMessage;

import org.springframework.ai.chat.model.ChatResponse;

import org.springframework.ai.chat.prompt.Prompt;

import org.springframework.ai.image.ImagePrompt;

import org.springframework.ai.image.ImageResponse;

import org.springframework.ai.openai.*;

import org.springframework.ai.openai.api.OpenAiApi;

import org.springframework.ai.openai.api.OpenAiAudioApi;

import org.springframework.ai.openai.audio.speech.SpeechPrompt;

import org.springframework.ai.openai.audio.speech.SpeechResponse;

import org.springframework.ai.openai.audio.transcription.AudioTranscriptionPrompt;

import org.springframework.ai.openai.audio.transcription.AudioTranscriptionResponse;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.core.io.ClassPathResource;

import org.springframework.http.HttpStatus;

import org.springframework.http.ResponseEntity;

import org.springframework.util.MimeTypeUtils;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RestController;

import reactor.core.publisher.Flux;import java.io.IOException;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

import java.util.List;/*** @author hrui* @date 2024/6/8 2:19*/

@RestController

public class HelloGPT {@Autowiredprivate ChatClient chatClient;// public HelloGPT(ChatClient.Builder chatClientBuilder) {

// this.chatClient=chatClientBuilder.build();

// }@GetMapping("/helloai")public Object generate(String userInput) {System.out.println("userInput:"+userInput);return chatClient.prompt()//提示词.user(userInput)//用户输入//.system("You are a helpful assistant.").call()//调用.content();//返回文本}@GetMapping(value = "/helloai2",produces = "text/html;charset=UTF-8")public Flux<String> generate2(String userInput) {Flux<String> output = chatClient.prompt().user(userInput).stream().content();return output;}@Autowiredprivate OpenAiChatModel chatModel;//ChatModel可以自动装配 不需要@Bean@GetMapping("/helloai3")public Object generate3(String userInput) {

// ChatResponse response = chatModel.call(

// new Prompt(

// "Generate the names of 5 famous pirates.",//这个其实好比用户消息

// OpenAiChatOptions.builder()

// .withModel("gpt-4-32k")

// .withTemperature(0.8F)

// .build()

// ));ChatResponse response = chatModel.call(new Prompt(userInput,//底层封装成new UserMessage(userInput)OpenAiChatOptions.builder().withModel("gpt-4-turbo").withTemperature(0.8F).build()));return response.getResult().getOutput().getContent();}@GetMapping("/helloai4")public Flux<ChatResponse> generate4(String userInput) {System.out.println("userInput:"+userInput);Flux<ChatResponse> stream = chatModel.stream(new Prompt(userInput//底层封装成new UserMessage(userInput)));return stream;}@Autowiredprivate OpenAiImageModel openAiImageModel;@GetMapping("/helloai6")public Object generate6(String userInput) {ImageResponse response = openAiImageModel.call(new ImagePrompt(userInput,OpenAiImageOptions.builder()//设置图片清晰度.withQuality("hd").withModel("dall-e-3")//默认就是这个.withN(1)//生成几张图片//默认高度和宽度.withHeight(1024).withWidth(1024).build()));return response.getResult().getOutput().getUrl();}// @Autowired

// private OpenAiAudioTranscriptionModel openAiAudioTranscriptionModel;@Autowiredprivate OpenAiAudioSpeechModel openAiAudioSpeechModel;@GetMapping("/helloai7")public Object generate7(String userInput) {OpenAiAudioSpeechOptions speechOptions = OpenAiAudioSpeechOptions.builder()//用的模型.withModel(OpenAiAudioApi.TtsModel.TTS_1.value)//设置人声.withVoice(OpenAiAudioApi.SpeechRequest.Voice.ALLOY).withResponseFormat(OpenAiAudioApi.SpeechRequest.AudioResponseFormat.MP3).withSpeed(1.0f)//合成语音的速度 0.0最慢 1.0最快.build();SpeechPrompt speechPrompt = new SpeechPrompt(userInput, speechOptions);SpeechResponse response = openAiAudioSpeechModel.call(speechPrompt);byte[] output = response.getResult().getOutput();try {// 指定文件名,这里以当前时间戳命名以避免重名String filename = "audio_" + System.currentTimeMillis() + ".mp3";// 指定保存路径Path path = Paths.get("src/main/resources/static/" + filename);// 写入文件Files.write(path, output);// 获取可访问的URL,假设你的服务运行在 localhost:8080String fileUrl = "http://localhost:8082/" + filename;return ResponseEntity.ok(fileUrl);} catch (Exception e) {e.printStackTrace();return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Error saving file");}}@Autowiredprivate OpenAiAudioTranscriptionModel openAiTranscriptionModel;@GetMapping("/helloai8")public Object generate8() {//语音翻译的可选配置var transcriptionOptions = OpenAiAudioTranscriptionOptions.builder().withResponseFormat(OpenAiAudioApi.TranscriptResponseFormat.TEXT)//温度 0f不需要创造力,语音是什么,翻译什么.withTemperature(0f).build();var audioFile=new ClassPathResource("hello.m4a");//var audioFile = new FileSystemResource("/path/to/your/resource/speech/jfk.flac");AudioTranscriptionPrompt transcriptionRequest = new AudioTranscriptionPrompt(audioFile, transcriptionOptions);AudioTranscriptionResponse response = openAiTranscriptionModel.call(transcriptionRequest);return response.getResult().getOutput();}@GetMapping("/helloai9")public Object generate9() throws IOException {//图片二进制流byte[] imageData=new 
ClassPathResource("1717865484569.png").getContentAsByteArray();//用户信息var userMessage=new UserMessage("这是什么", List.of(new Media(MimeTypeUtils.IMAGE_PNG,imageData)));OpenAiChatOptions build = OpenAiChatOptions.builder().withModel(OpenAiApi.ChatModel.GPT_4_O.getValue()).build();ChatResponse response=chatModel.call(new Prompt(userMessage,build));return response.getResult().getOutput().getContent();}

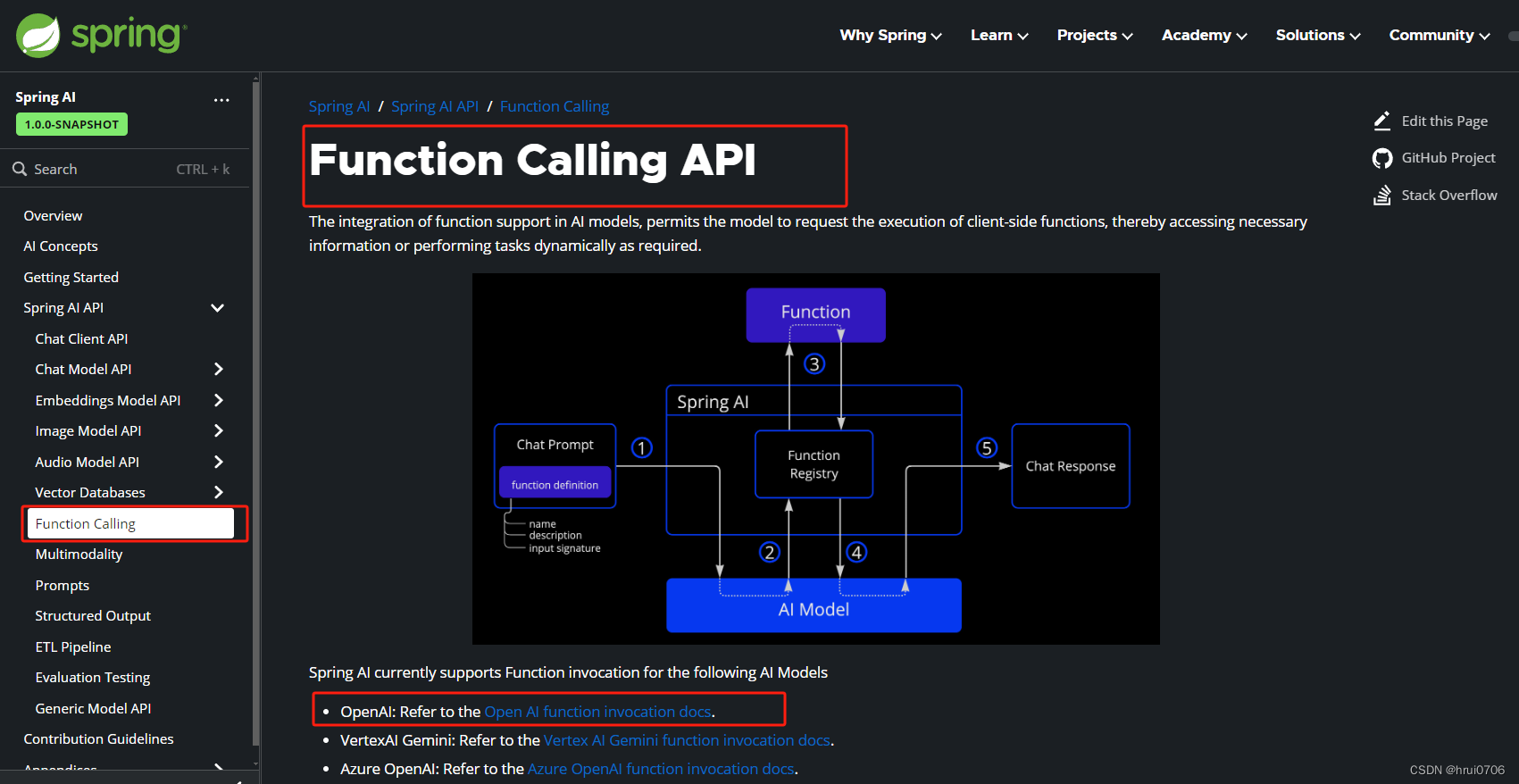

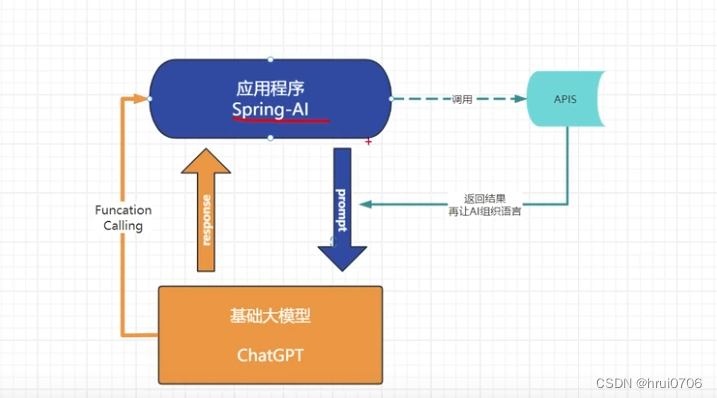

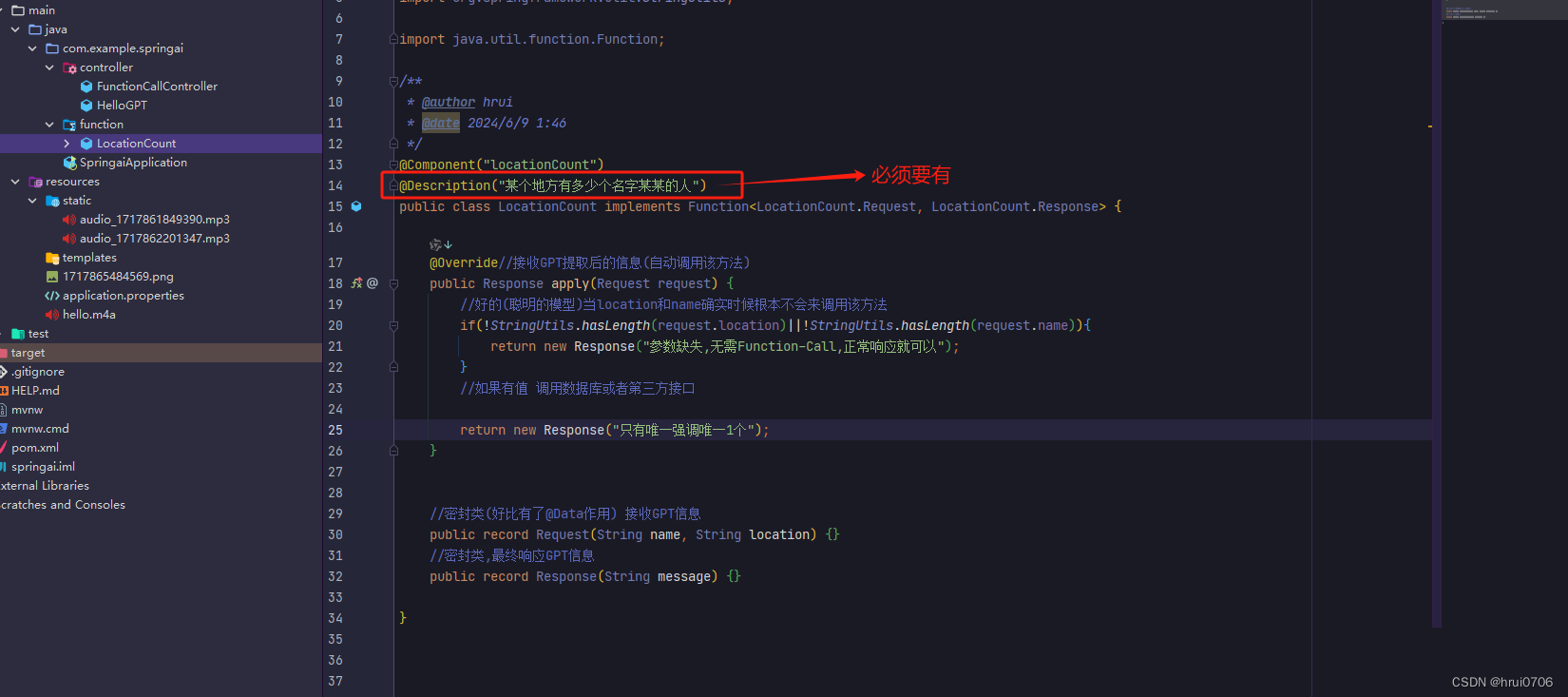

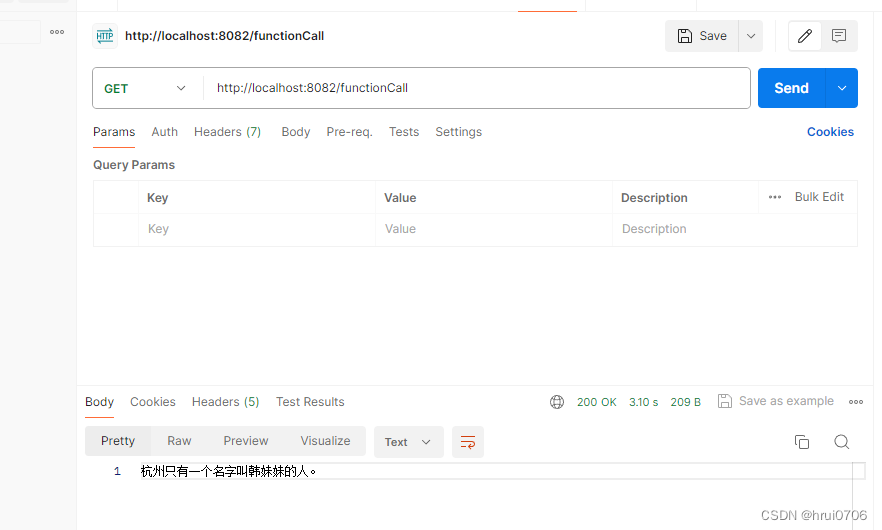

}关于Function call 应对大模型无法获取实时信息的弊端

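For example, if I ask how many passengers pass through Hangzhou East Railway Station today, GPT certainly cannot answer.

So what can we do? We can call a third-party API to get the information, hand it to GPT, and let GPT answer the question.

A rough explanation:

Suppose I ask: what is the population of Hangzhou?

Assume GPT cannot answer this kind of question. In practice GPT can now look such things up, but let's assume it cannot.

The question contains two key pieces of information: location and count.

With function calling, GPT does not answer a question like this directly. Instead, it replies with a request to call one of our functions and passes along the key information it extracted (location and count). Spring AI invokes that function in our application, which can query a database or call a third-party API. The result goes back to GPT, which can then answer the question.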

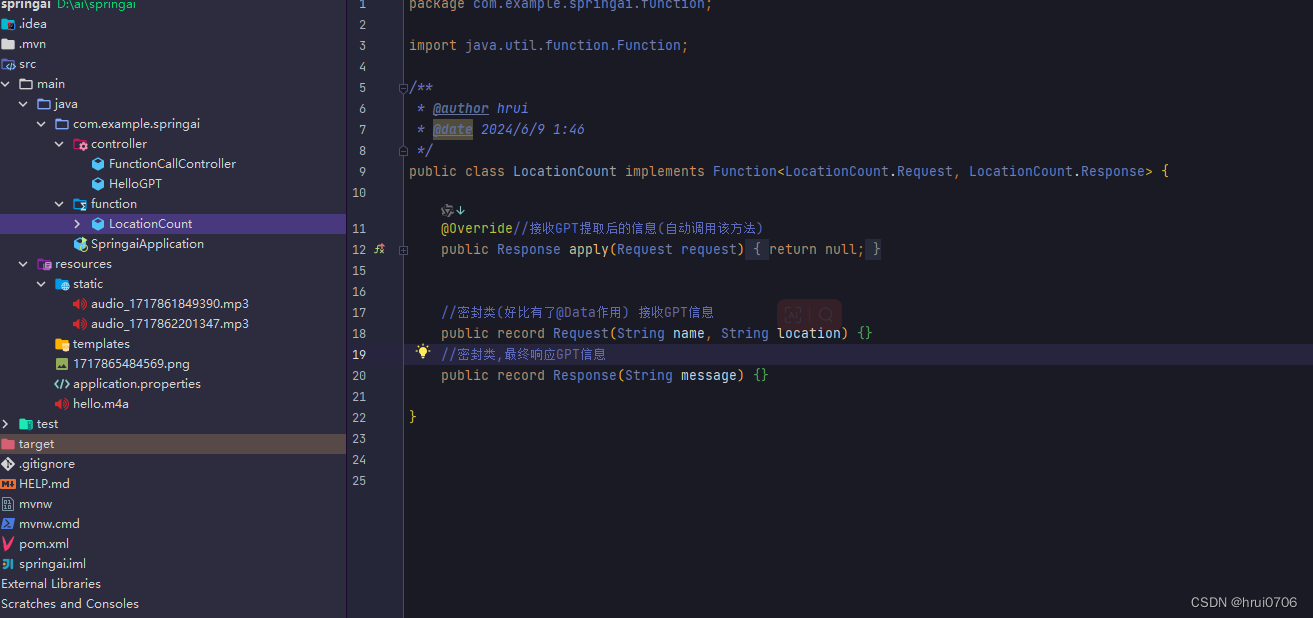

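When GPT calls back with the arguments, the `apply()` method of our `Function` is invoked. That is where our own logic goes: querying a database, calling a third-party API, and so on.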
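The round trip can be sketched with plain Java types. This is not Spring AI's implementation, only the idea:

1. The model replies with a function name plus arguments.
2. The application looks the function up by name and calls `apply()`.
3. The result is sent back to the model.

The registry, the stub answer and the already-parsed `Request` record are hypothetical simplifications; real model responses carry the arguments as JSON. The registry key plays the role of the `locationCount` bean name used later.

```java
import java.util.Map;
import java.util.function.Function;

// Minimal sketch of function-call dispatch with plain Java types (no Spring AI).
final class FunctionCallSketch {

    // Arguments the model extracted from the question, already parsed (real responses carry JSON)
    record Request(String name, String location) {}

    // Result handed back to the model so it can phrase the final answer
    record Response(String message) {}

    // Hypothetical registry; the key plays the role of the "locationCount" bean name
    private static final Map<String, Function<Request, Response>> REGISTRY = Map.of(
            "locationCount",
            req -> new Response(req.location() + ": 1 person named " + req.name()));

    /** Step 2: look up the function the model asked for and apply it. */
    static Response dispatch(String functionName, Request args) {
        Function<Request, Response> fn = REGISTRY.get(functionName);
        if (fn == null) {
            throw new IllegalArgumentException("Unknown function: " + functionName);
        }
        return fn.apply(args);
    }

    public static void main(String[] args) {
        // Step 1 (simulated): the model asks for "locationCount" with these arguments
        Response result = dispatch("locationCount", new Request("Han Meimei", "Hangzhou"));
        // Step 3: this message would be sent back to the model as the function result
        System.out.println(result.message()); // Hangzhou: 1 person named Han Meimei
    }
}
```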

Create a class that implements the `Function` interface:

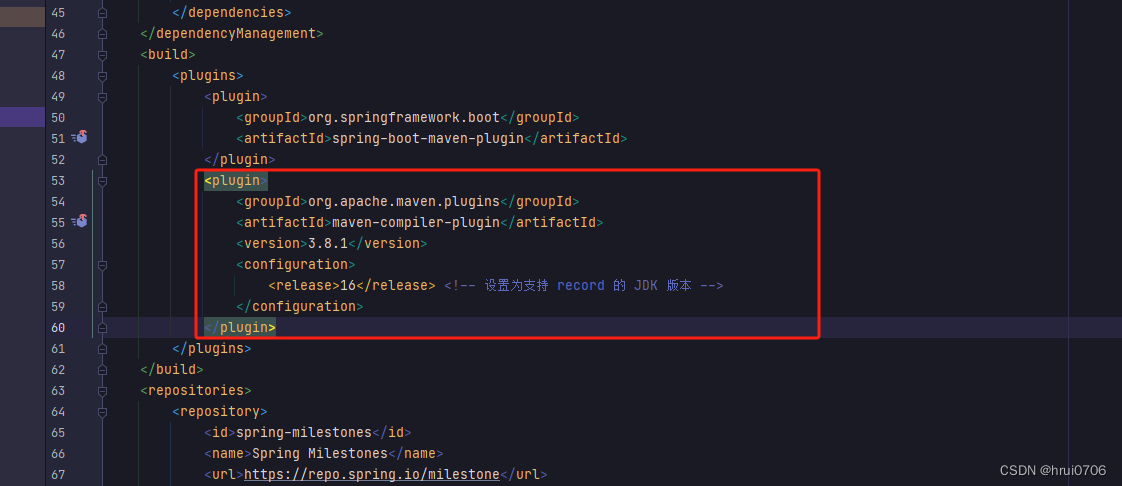

If you get an error:

Full code:

    package com.example.springai.controller;

    import org.springframework.ai.chat.model.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.openai.OpenAiChatModel;
    import org.springframework.ai.openai.OpenAiChatOptions;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    /**
     * @author hrui
     * @date 2024/6/9 1:16
     */
    @RestController
    public class FunctionCallController {

        @Autowired
        private OpenAiChatModel chatModel;

        // Default question: "How many people named Han Meimei are there in Hangzhou?"
        @GetMapping("/functionCall")
        public Object functionCall(
                @RequestParam(value = "message", defaultValue = "杭州有多少个名字叫韩妹妹的人") String message) {
            OpenAiChatOptions aiChatOptions = OpenAiChatOptions.builder()
                    .withFunction("locationCount") // bean name of the Function implementation
                    .withModel("gpt-3.5-turbo")
                    .build();
            ChatResponse response = chatModel.call(new Prompt(message, aiChatOptions));
            // Flux<ChatResponse> stream = chatModel.stream(new Prompt(message, aiChatOptions));
            return response.getResult().getOutput().getContent();
        }
    }

    package com.example.springai.function;

    import org.springframework.context.annotation.Description;
    import org.springframework.stereotype.Component;
    import org.springframework.util.StringUtils;

    import java.util.function.Function;

    /**
     * @author hrui
     * @date 2024/6/9 1:46
     */
    @Component("locationCount")
    // Sent to the model as the function description: "How many people named X live in a given place"
    @Description("某个地方有多少个名字某某的人")
    public class LocationCount implements Function<LocationCount.Request, LocationCount.Response> {

        // Receives the information GPT extracted (invoked automatically)
        @Override
        public Response apply(Request request) {
            // A capable model will not call this at all when location or name is missing
            if (!StringUtils.hasLength(request.location()) || !StringUtils.hasLength(request.name())) {
                // "Arguments missing; no function call needed, just answer normally"
                return new Response("参数缺失,无需Function-Call,正常响应就可以");
            }
            // With both values present, query a database or a third-party API here.
            // Stub answer: "exactly one, emphasize only one"
            return new Response("只有唯一强调唯一1个");
        }

        // Record (acts like a class with @Data): receives the arguments from GPT
        public record Request(String name, String location) {}

        // Record carrying the final result back to GPT
        public record Response(String message) {}
    }

    package com.example.springai;

    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;

    @SpringBootApplication
    public class SpringaiApplication {

        // Default system prompt for every ChatClient call:
        // "You are no longer ChatGPT or an AI; from now on you are an ordinary human named hrui."
        @Bean
        public ChatClient chatClient(ChatClient.Builder chatClientBuilder) {
            return chatClientBuilder
                    .defaultSystem("你现在不是ChatGPT,也不是人工智能AI,现在开始你是一个正常人类,你的名字叫hrui")
                    .build();
        }

        public static void main(String[] args) {
            // Local proxy, set before the application starts (legacy JDK property names)
            //System.setProperty("proxyType", "4");
            System.setProperty("proxyHost", "127.0.0.1");
            System.setProperty("proxyPort", "7897");
            //System.setProperty("proxySet", "true");
            SpringApplication.run(SpringaiApplication.class, args);
        }
    }
