Contents

- 1. Technology selection

- 2. FastDFS components

- 3. docker-compose file

- 4. Client nginx configuration

- 5. Storage server

- Spring Boot integration

- References

1. Technology selection

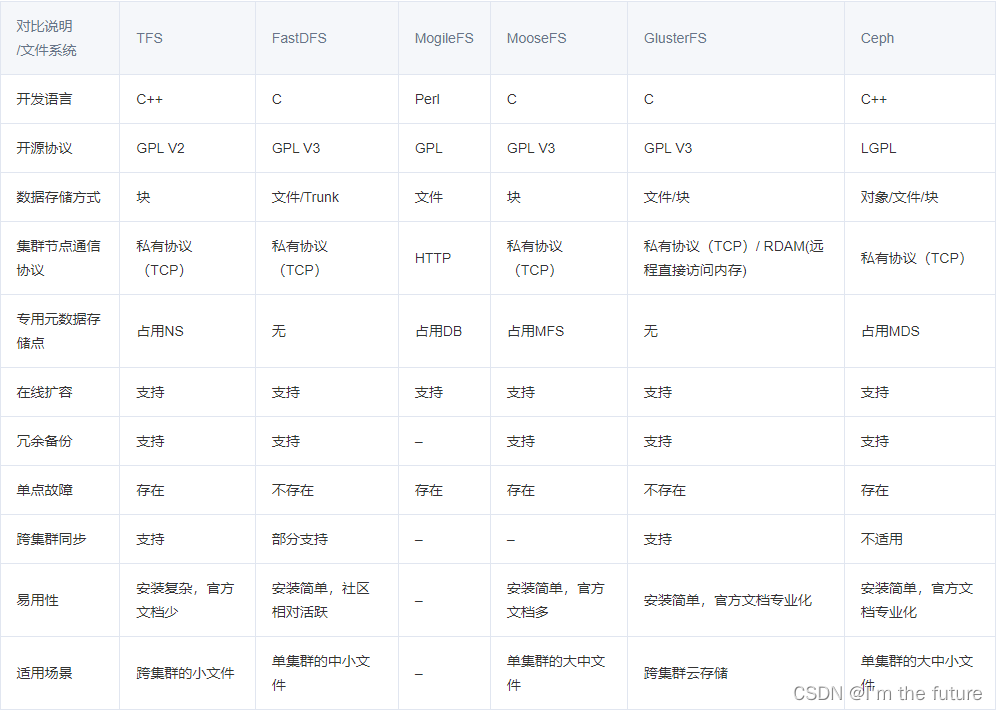

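There is also Google's GFS (Google File System), which is arguably better. However, it is not open source, and I couldn't find a free community edition or anything similar.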

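In the end, because we only store images under 10 MB and traffic is fairly low, we chose FastDFS, which is developed in China (a bit of patriotism played a part too).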

2. FastDFS components

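Tracker Server: handles scheduling and load balancing, and manages all Storage Servers and groups. After it starts, each Storage Server connects to the Tracker, reports the group it belongs to along with other information, and then sends periodic heartbeats. Multiple Trackers are peers, so there is no single point of failure. The Tracker keeps group and Storage Server status in memory but records no file index, so it needs very little memory. As the central node, it maintains the cluster topology.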
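To make the tracker's bookkeeping concrete, here is a minimal Java sketch. It is a hypothetical in-memory model, not FastDFS source code: storage servers register and refresh themselves through heartbeats, servers whose heartbeats stop are treated as dead, and an upload is routed to the group with the most free space. The class and method names, the timeout value, and the selection rule are assumptions; the rule is only loosely modeled on the load-balancing mode of `store_lookup` in tracker.conf. What comes from the text is the heartbeat mechanism and the "status only, no file index" design.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical, simplified model of a tracker's in-memory state (not FastDFS source code).
// Like the real tracker, it stores only group/storage status, never a file index.
class TrackerModel {
    // Latest status a storage server reported in its heartbeat (illustrative fields).
    static final class StorageStatus {
        final String address;        // "ip:port" of the storage server
        final String group;          // group the server belongs to
        final long freeMb;           // free disk space reported by the server
        final long lastHeartbeatMs;  // when the last heartbeat arrived

        StorageStatus(String address, String group, long freeMb, long lastHeartbeatMs) {
            this.address = address;
            this.group = group;
            this.freeMb = freeMb;
            this.lastHeartbeatMs = lastHeartbeatMs;
        }
    }

    private final long heartbeatTimeoutMs;
    private final Map<String, StorageStatus> servers = new HashMap<>();

    TrackerModel(long heartbeatTimeoutMs) {
        this.heartbeatTimeoutMs = heartbeatTimeoutMs;
    }

    // A storage server registers on startup and refreshes its entry with every heartbeat.
    void heartbeat(String address, String group, long freeMb, long nowMs) {
        servers.put(address, new StorageStatus(address, group, freeMb, nowMs));
    }

    // Servers whose last heartbeat is within the timeout are treated as alive.
    List<StorageStatus> aliveServers(long nowMs) {
        List<StorageStatus> alive = new ArrayList<>();
        for (StorageStatus s : servers.values()) {
            if (nowMs - s.lastHeartbeatMs <= heartbeatTimeoutMs) {
                alive.add(s);
            }
        }
        return alive;
    }

    // Chooses a group for an upload: the one with the most free space among alive servers.
    // Every server in a group holds the same files, so a group's free space is
    // bounded by its fullest member (the minimum reported value).
    Optional<String> chooseGroupForUpload(long nowMs) {
        Map<String, Long> groupFreeMb = new HashMap<>();
        for (StorageStatus s : aliveServers(nowMs)) {
            groupFreeMb.merge(s.group, s.freeMb, Long::min);
        }
        return groupFreeMb.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }
}
```

In this model, adding a Storage Server to a group, as mentioned in the Client paragraph, is just one more server sending heartbeats with that group name. Because no file index is kept, memory use grows only with the number of servers.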

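Storage Server: provides capacity and redundancy, organized into groups. Each group can contain several Storage Servers. All Storage Servers in a group are peers and connect to each other to synchronize files, so every server in the group holds the same content. A Storage Server stores files directly on the OS file system without splitting them into chunks, so each uploaded file maps one-to-one to a file on the Storage Server (except for the small-file merged storage introduced in V3). Because storage relies on the local file system, multiple data storage directories can be configured.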
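Because files map one-to-one onto the local file system, a file ID returned by FastDFS can be translated into a physical path. The hypothetical helper below assumes the usual layout: `M00` refers to store_path0 (`/fastdfs/store_path` in the storage.conf of section 5), and the next two segments are the two levels of subdirectories that the storage server creates under `store_path0/data` (256 × 256 of them with `subdir_count_per_path=256`). The class name is illustrative, and reading the digits after `M` as hexadecimal is an assumption that makes no difference for `M00`.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical helper: splits a FastDFS file ID such as "group1/M00/00/00/xxx.png"
// and maps it to the file a storage server keeps on its local file system.
final class FileId {
    final String group;         // e.g. "group1"; matches group_name in storage.conf
    final int storePathIndex;   // "M00" -> 0, i.e. store_path0
    final String subdir1;       // first-level subdirectory under the store path's data dir
    final String subdir2;       // second-level subdirectory
    final String fileName;      // name generated by FastDFS, plus the extension

    private FileId(String group, int storePathIndex, String subdir1, String subdir2, String fileName) {
        this.group = group;
        this.storePathIndex = storePathIndex;
        this.subdir1 = subdir1;
        this.subdir2 = subdir2;
        this.fileName = fileName;
    }

    static FileId parse(String fileId) {
        String id = fileId.startsWith("/") ? fileId.substring(1) : fileId;
        String[] parts = id.split("/");
        if (parts.length != 5 || !parts[1].matches("M[0-9A-Fa-f]{2}")) {
            throw new IllegalArgumentException("unexpected file ID: " + fileId);
        }
        // Assumption: the two digits after "M" are hexadecimal (identical to decimal for M00).
        int index = Integer.parseInt(parts[1].substring(1), 16);
        return new FileId(parts[0], index, parts[2], parts[3], parts[4]);
    }

    // Physical location: store_pathN/data/subdir1/subdir2/fileName
    Path physicalPath(List<String> storePaths) {
        return Paths.get(storePaths.get(storePathIndex), "data", subdir1, subdir2, fileName);
    }
}
```

For example, `FileId.parse("group1/M00/00/00/CiQG-2ZeyV-AWmHcAA3vSx2O3n4972.png").physicalPath(List.of("/fastdfs/store_path"))` points at `/fastdfs/store_path/data/00/00/CiQG-2ZeyV-AWmHcAA3vSx2O3n4972.png`.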

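Client: talks to the Tracker Server and the Storage Servers to upload, download, and delete files. The client can specify which group to upload to; when a group is under heavy access load, you can add Storage Servers to that group to increase its capacity.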

3. docker-compose file

version: '3.3'

services:
  tracker: # tracker (scheduler)
    image: season/fastdfs:1.2
    container_name: fastdfs-tracker
    network_mode: host
    restart: always
    command: "tracker"
  storage: # storage server
    image: season/fastdfs:1.2
    container_name: fastdfs-storage
    network_mode: host
    restart: always
    volumes:
      - "/fastDFS/storage/storage.conf:/fdfs_conf/storage.conf" # storage server config file
      - "/fastDFS/storage/storage_base_path:/fastdfs/storage/data" # where files are stored
      - "/fastDFS/storage/store_path0:/fastdfs/store_path" # store path (store_path0)
    environment:
      TRACKER_SERVER: "TRACKER_IP:PORT" # tracker IP address and port
    command: "storage"
  nginx: # client (nginx)
    image: season/fastdfs:1.2
    container_name: fastdfs-nginx
    restart: always
    network_mode: host
    volumes:
      - "/fastDFS/nginx.conf:/etc/nginx/conf/nginx.conf"
      - "/fastDFS/storage/store_path0:/fastdfs/store_path"
      - "/fastDFS/pic:/hydf/fastDFS/pic"
    environment:
      TRACKER_SERVER: "TRACKER_IP:PORT" # tracker IP address and port
    command: "nginx"

4. Client nginx configuration

#user  nobody;
worker_processes  1;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       mime.types;
    default_type  application/octet-stream;
    #access_log  logs/access.log  main;
    sendfile        on;
    #tcp_nopush     on;
    #keepalive_timeout  0;
    keepalive_timeout  65;
    #gzip  on;

    server {
        listen       8088;
        server_name  localhost;
        #charset koi8-r;

        # Thumbnails need an extra nginx plugin and a separately built nginx image, so they are skipped here
        #location ~ /group([0-9])/M00/.*\.(gif|jpg|jpeg|png)$ {
        #    root /fastdfs/storage/data;
        #    image on;
        #    image_output off;
        #    image_jpeg_quality 75;
        #    image_backend off;
        #    image_backend_server http://baidu.com/docs/aabbc.png;
        #}

        # group1
        location /group1/M00 {
            # file storage directory; must match the storage data volume in docker-compose
            root /fastdfs/storage/data;
            ngx_fastdfs_module;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }
}

5. Storage server

# the name of the group this storage server belongs to
# group name; later access to this group (nginx location, URLs) must use the same name
group_name=group1

# bind an address of this host
# empty for bind all addresses of this host
# bind this host's IP address (it must be set explicitly because the server runs in Docker)
bind_addr=10.36.6.251

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configured by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
port=23000

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
base_path=/fastdfs/storage

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must be more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
## false for mixed read and write
## true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, its value is base_path
# the paths must exist
store_path0=/fastdfs/store_path

#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can occur more than once, and tracker_server format is
# "host:port", host can be hostname or ip address
# replace with the tracker's IP address and port
tracker_server=TRACKER_IP:PORT

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can occur more than once, host can be hostname or ip address,
# "*" means match all ip addresses, can use range like this: 10.0.1.[1-15,20] or
# host[01-08,20-25].domain.com, for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributed by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default value is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will occur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
http.server_port=8888

Spring Boot integration

Add the client library to the pom file:

<!-- fastDFS -->
<dependency>
    <groupId>com.github.tobato</groupId>
    <artifactId>fastdfs-client</artifactId>
    <version>1.26.5</version>
</dependency>

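Uploading a file in Java. FastDFS restricts name length, so I don't keep the original file name at all: FastDFS generates a random name for the stored file, and the code passes only the original file's extension.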

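import java.io.File;
import java.io.FileInputStream;
import java.io.InputStream;
import com.github.tobato.fastdfs.domain.fdfs.StorePath;

// s: local file path; storageClient: the injected FastFileStorageClient
File file = new File(s);
String fileName = file.getName();
int dot = fileName.lastIndexOf('.');
String ext = dot >= 0 ? fileName.substring(dot + 1) : ""; // extension without the dot
try (InputStream in = new FileInputStream(file)) { // closes the stream after the upload
    StorePath path = storageClient.uploadFile("group1", in, file.length(), ext);
    System.out.println(path.getFullPath()); // e.g. group1/M00/00/00/xxx.png
}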
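Since the upload call takes only the extension, and FastDFS limits how long an extension may be, a small helper can validate it before the request is sent. This is a hypothetical sketch: the class name is illustrative, and the 6-character cap is an assumption based on `FDFS_FILE_EXT_NAME_MAX_LEN` in the FastDFS sources, so check it against the server version you run.

```java
// Hypothetical helper: derives the extension (without the dot) passed to uploadFile(...).
// FastDFS generates the stored file name itself, so only the extension is kept.
final class FileExt {
    // Assumed cap on extension length; check FDFS_FILE_EXT_NAME_MAX_LEN in your FastDFS version.
    static final int MAX_EXT_LEN = 6;

    static String of(String fileName) {
        // Look only at the last path segment, so dots in directory names are ignored.
        int slash = Math.max(fileName.lastIndexOf('/'), fileName.lastIndexOf('\\'));
        String base = fileName.substring(slash + 1);
        int dot = base.lastIndexOf('.');
        // No dot, a leading dot only (".bashrc") or a trailing dot means "no extension".
        if (dot <= 0 || dot == base.length() - 1) {
            return "";
        }
        String ext = base.substring(dot + 1);
        if (ext.length() > MAX_EXT_LEN) {
            throw new IllegalArgumentException("extension too long for FastDFS: " + ext);
        }
        return ext;
    }
}
```

It would be used as `storageClient.uploadFile("group1", in, file.length(), FileExt.of(file.getName()))`. Failing early on the client gives a clearer error than whatever the server returns for an over-long extension.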

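After the upload, the file can be accessed directly by joining the address of the nginx client (listening on port 8088 in the nginx configuration above) with the returned storage path: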

http://{{nginx client IP}}:8088/group1/M00/00/00/CiQG-2ZeyV-AWmHcAA3vSx2O3n4972.png
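Joining the nginx address with the returned path is simple string work, but going through `java.net.URI` avoids double slashes and escapes unusual characters. In this sketch the class name and the example hosts are hypothetical; 8088 is the `listen` port from the nginx configuration in section 4.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: builds the access URL from the nginx address and the path
// returned by StorePath.getFullPath() (e.g. "group1/M00/00/00/xxx.png").
final class AccessUrl {
    static String build(String nginxHost, int nginxPort, String fullPath) {
        // getFullPath() has no leading slash, but URI needs an absolute path here.
        String path = fullPath.startsWith("/") ? fullPath : "/" + fullPath;
        try {
            // A port of -1 leaves the port out of the URL.
            return new URI("http", null, nginxHost, nginxPort, path, null, null).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("invalid URL parts", e);
        }
    }
}
```

For example, `AccessUrl.build("10.0.0.5", 8088, path.getFullPath())` produces `http://10.0.0.5:8088/group1/M00/...` for a file stored in group1.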

References

https://github.com/happyfish100/fastdfs

https://cloud.tencent.com/developer/article/2072195

https://blog.51cto.com/u_12740336/6943977

https://blog.csdn.net/weixin_44002151/article/details/131617686

https://blog.csdn.net/ZHUZIH6/article/details/129973644

https://download.csdn.net/blog/column/12340564/136098096