Environment:

hadoop 2.7.2

spark-without-hadoop 2.4.6

hive 2.3.4

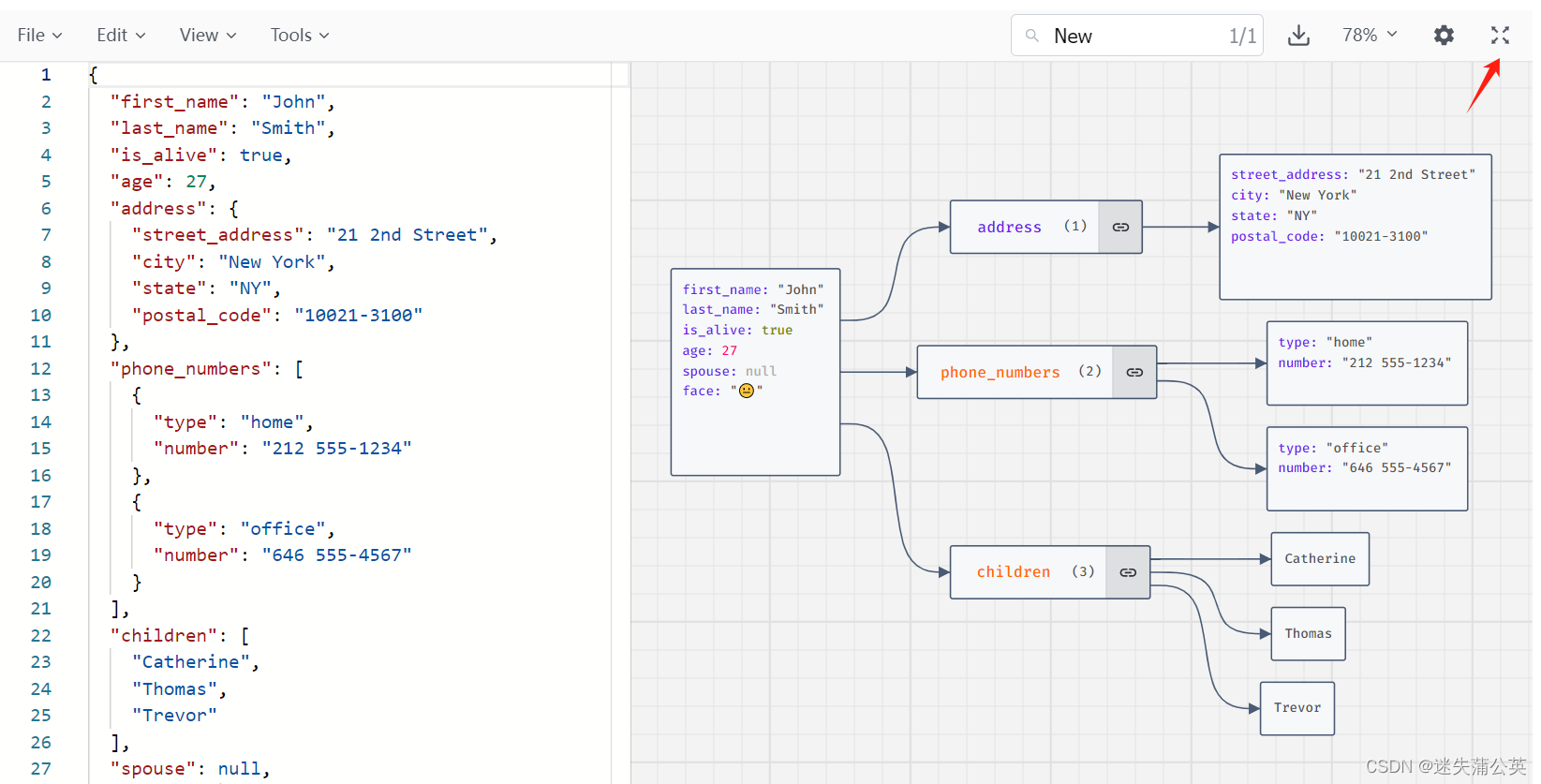

hive-site.xml

<property>
  <name>hive.execution.engine</name>
  <value>spark</value>
</property>
<property>
  <name>spark.yarn.jars</name>
  <value>hdfs://hadoop03:9000/spark-jars/*</value>
</property>
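The `spark.yarn.jars` value points at an HDFS directory, so the Spark jars have to be in that directory before the first query runs. A minimal sketch of that upload step, assuming the jars come from `${SPARK_HOME}/jars` and go to `/spark-jars` on the default filesystem. The function name `upload_spark_jars` and the `HDFS_CMD` override (useful for a dry run with `echo`) are illustrative helpers, not part of Hive or Spark:

```shell
# Sketch: copy the local Spark jars to the HDFS directory named in spark.yarn.jars.
# upload_spark_jars and HDFS_CMD are hypothetical helpers for illustration.
upload_spark_jars() {
  local src_dir="$1"                  # e.g. ${SPARK_HOME}/jars
  local dest_dir="$2"                 # e.g. /spark-jars
  local hdfs_cmd="${HDFS_CMD:-hdfs}"  # override with echo for a dry run

  # Create the target directory (no error if it already exists).
  "$hdfs_cmd" dfs -mkdir -p "$dest_dir" || return 1
  # Upload every jar, overwriting older copies.
  "$hdfs_cmd" dfs -put -f "$src_dir"/*.jar "$dest_dir"/
}

# Usage (assumed paths):
#   upload_spark_jars "${SPARK_HOME}/jars" /spark-jars
# Dry run that only prints the commands:
#   HDFS_CMD=echo upload_spark_jars "${SPARK_HOME}/jars" /spark-jars
```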

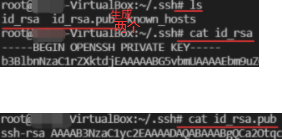

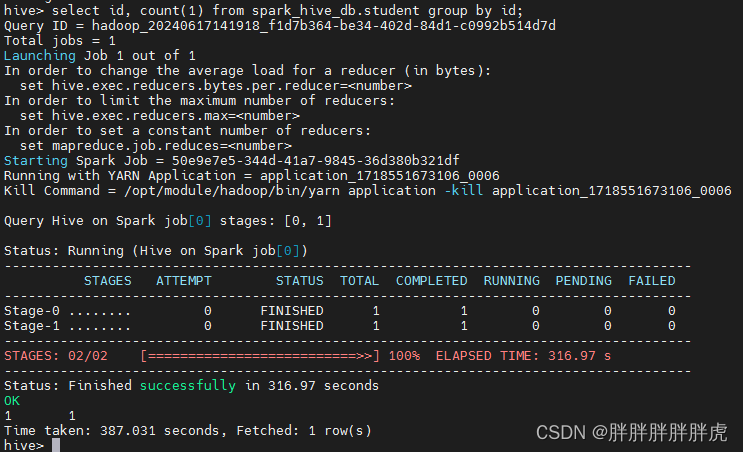

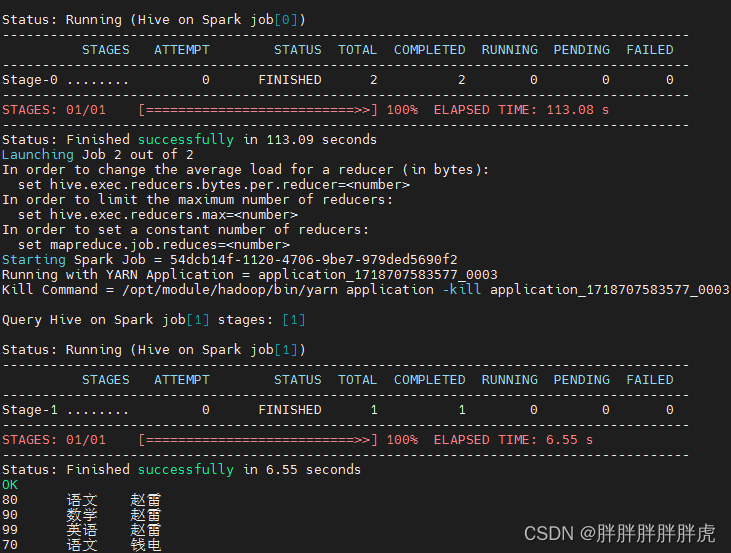

./bin/hive

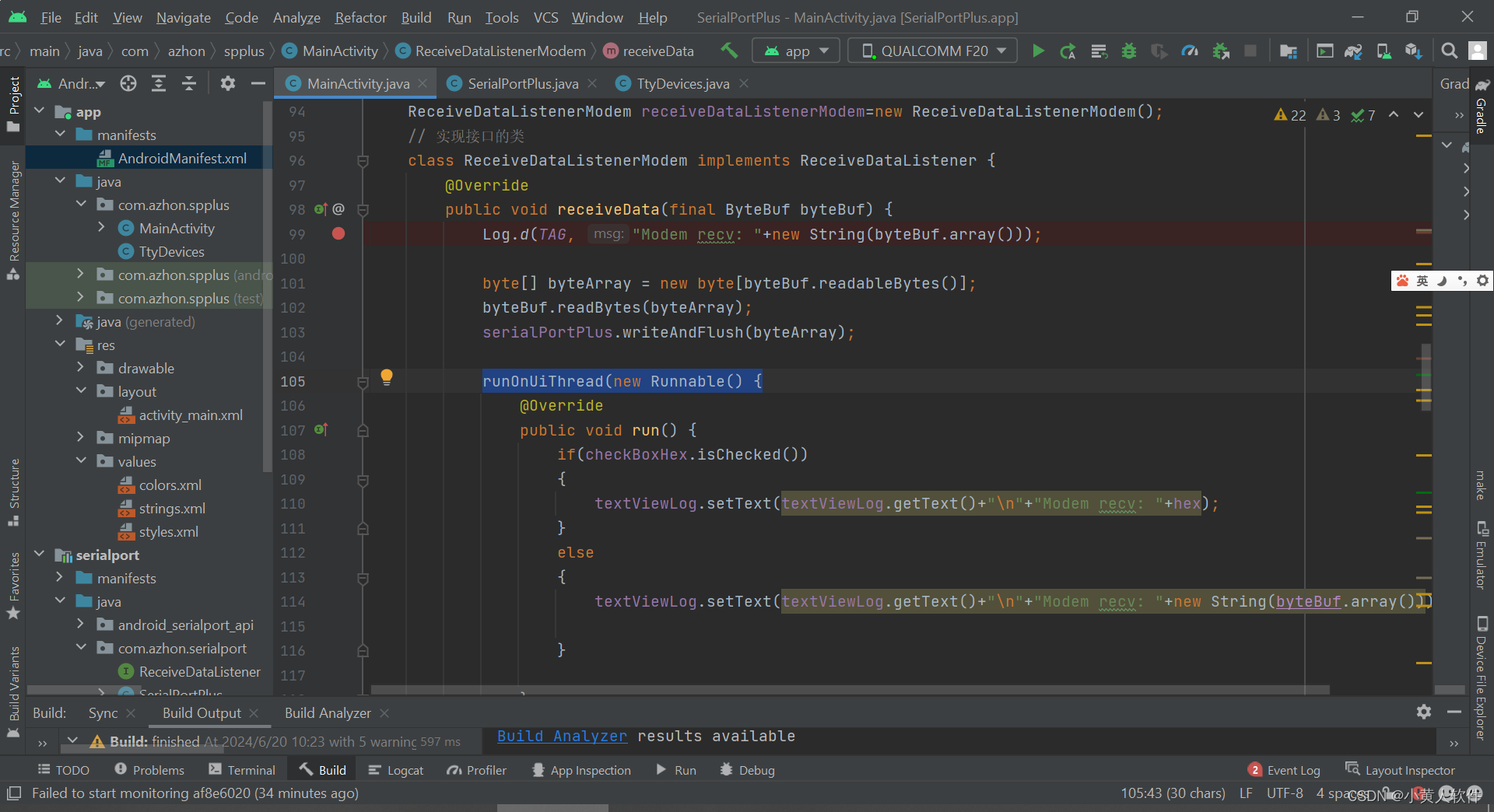

for f in ${SPARK_HOME}/jars/*.jar; do
  CLASSPATH=${CLASSPATH}:$f;
done

/opt/module/spark-2.4.4-bin-without-hadoop/bin/spark-submit \
  --properties-file /tmp/spark-submit.8770948269278745415.properties \
  --class org.apache.hive.spark.client.RemoteDriver /opt/module/hive/lib/hive-exec-2.3.4.jar \
  --remote-host hadoop03 \
  --remote-port 43182 \
  --conf hive.spark.client.connect.timeout=1000 \
  --conf hive.spark.client.server.connect.timeout=90000 \
  --conf hive.spark.client.channel.log.level=null \
  --conf hive.spark.client.rpc.max.size=52428800 \
  --conf hive.spark.client.rpc.threads=8 \
  --conf hive.spark.client.secret.bits=256 \
  --conf hive.spark.client.rpc.server.address=null

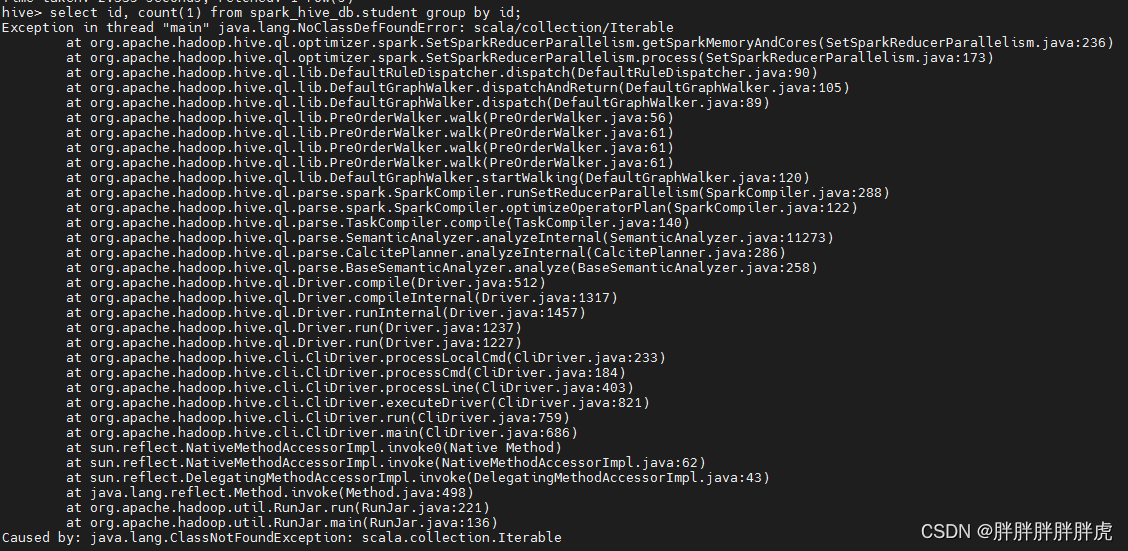

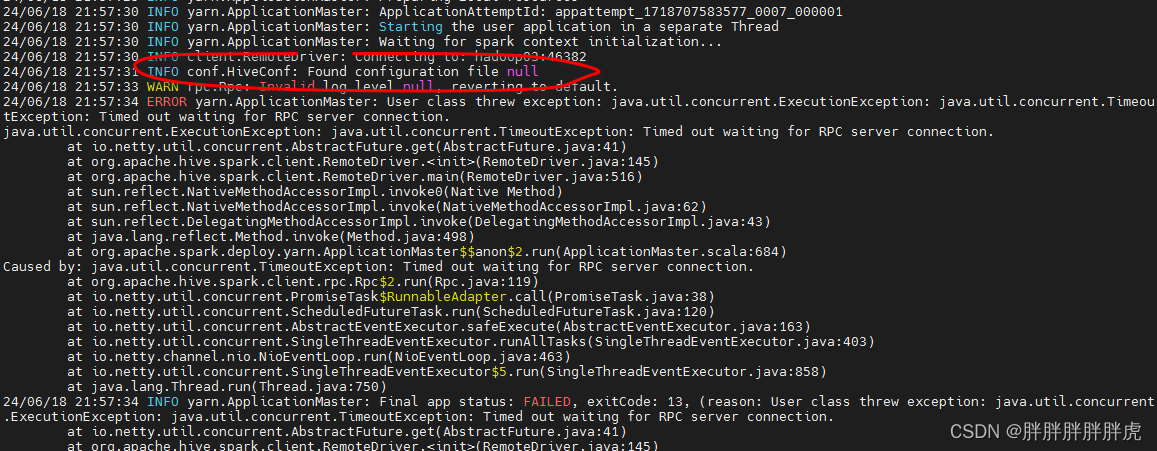

Issues encountered

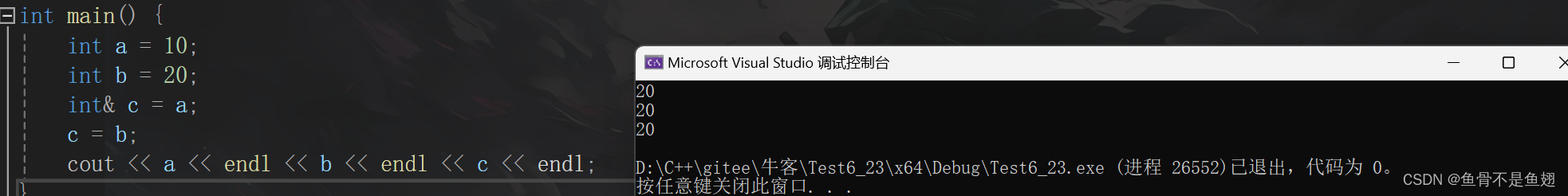

1) java.lang.NoClassDefFoundError: scala/collection/Iterable

https://stackoverflow.com/questions/38345447/apache-hive-exception-noclassdeffounderror-scala-collection-iterable

for f in ${SPARK_HOME}/jars/*.jar; do
  CLASSPATH=${CLASSPATH}:$f;
done
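The loop puts every Spark jar on the classpath, which is what makes the missing Scala classes visible to Hive. A self-contained sketch of the same idea as a reusable function. Where it gets called (for example from the Hive launcher script) is an assumption here, and `append_jars` is a hypothetical name:

```shell
# Sketch: append every jar in a directory to CLASSPATH.
# append_jars is a hypothetical helper name.
append_jars() {
  local jar_dir="$1"
  local f
  for f in "$jar_dir"/*.jar; do
    # Skip the literal pattern when the directory holds no jars.
    [ -e "$f" ] || continue
    # Add a ':' separator only when CLASSPATH is already non-empty.
    CLASSPATH="${CLASSPATH:+${CLASSPATH}:}$f"
  done
  export CLASSPATH
}

# Usage (assumes SPARK_HOME is set):
#   append_jars "${SPARK_HOME}/jars"
```

Unlike the one-line loop, this variant avoids a leading `:` when `CLASSPATH` starts out empty and does nothing when the directory contains no jars.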

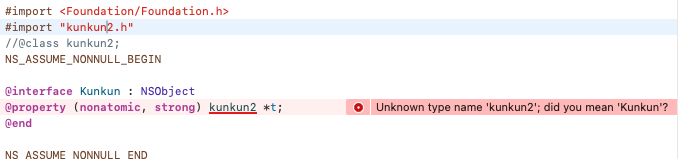

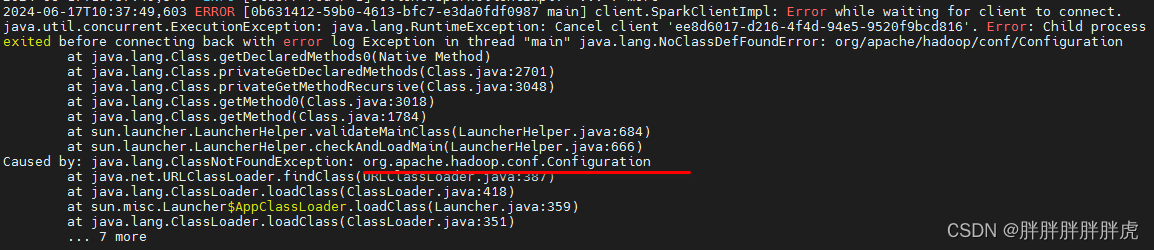

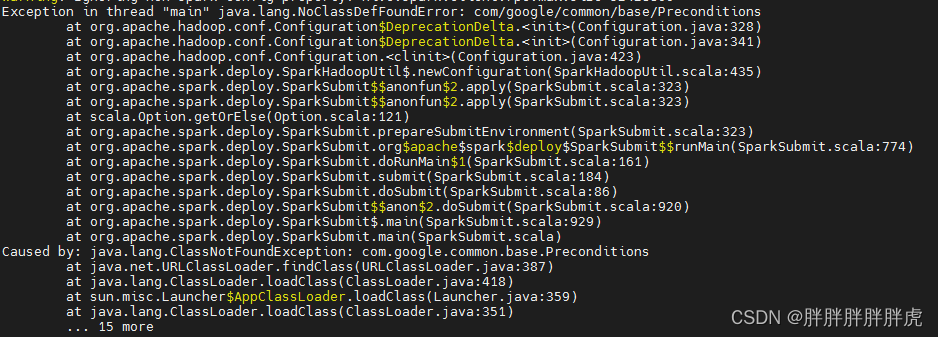

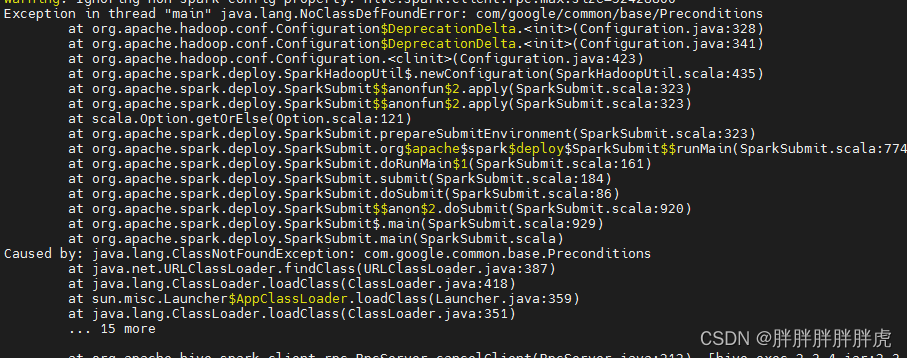

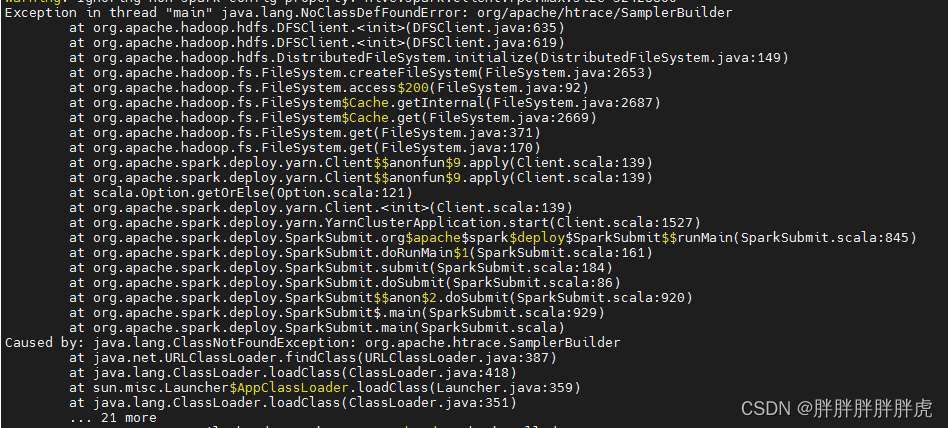

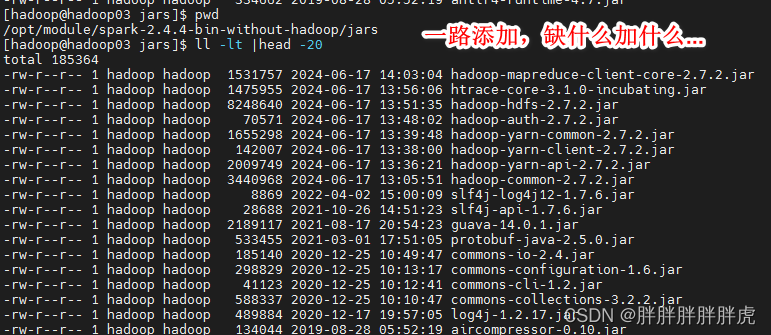

2) Various ClassNotFound errors

…

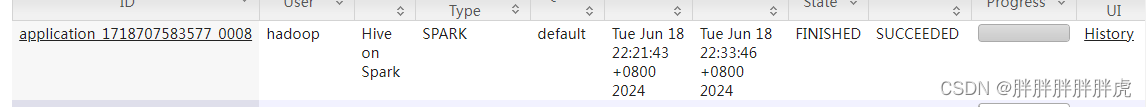

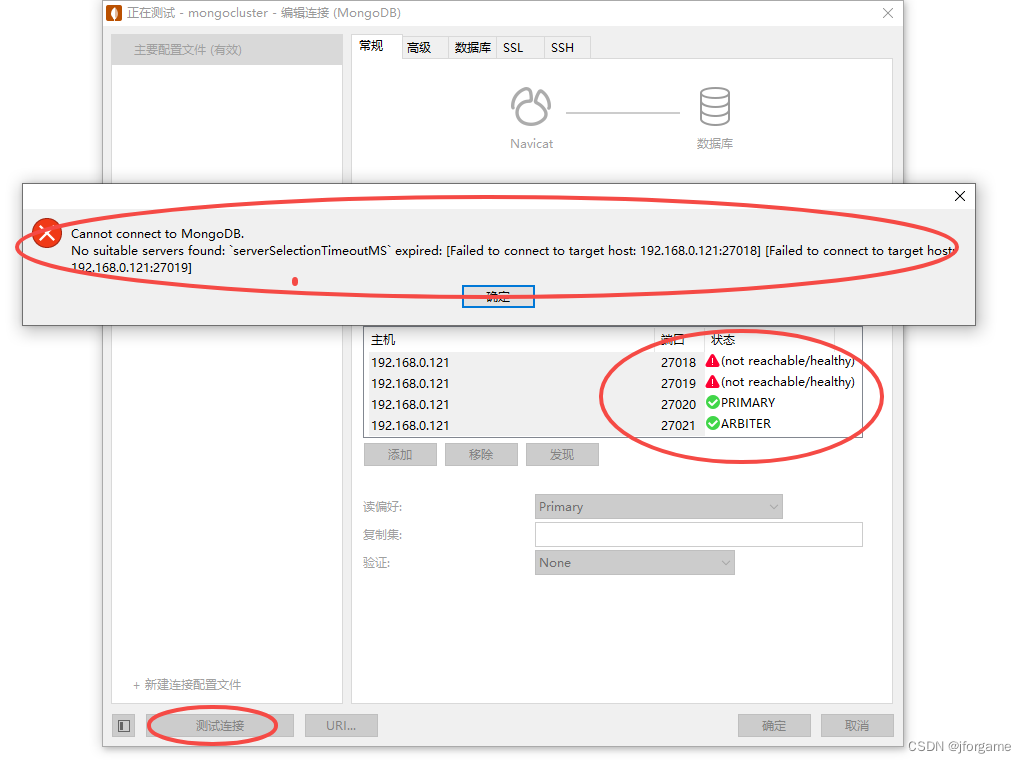

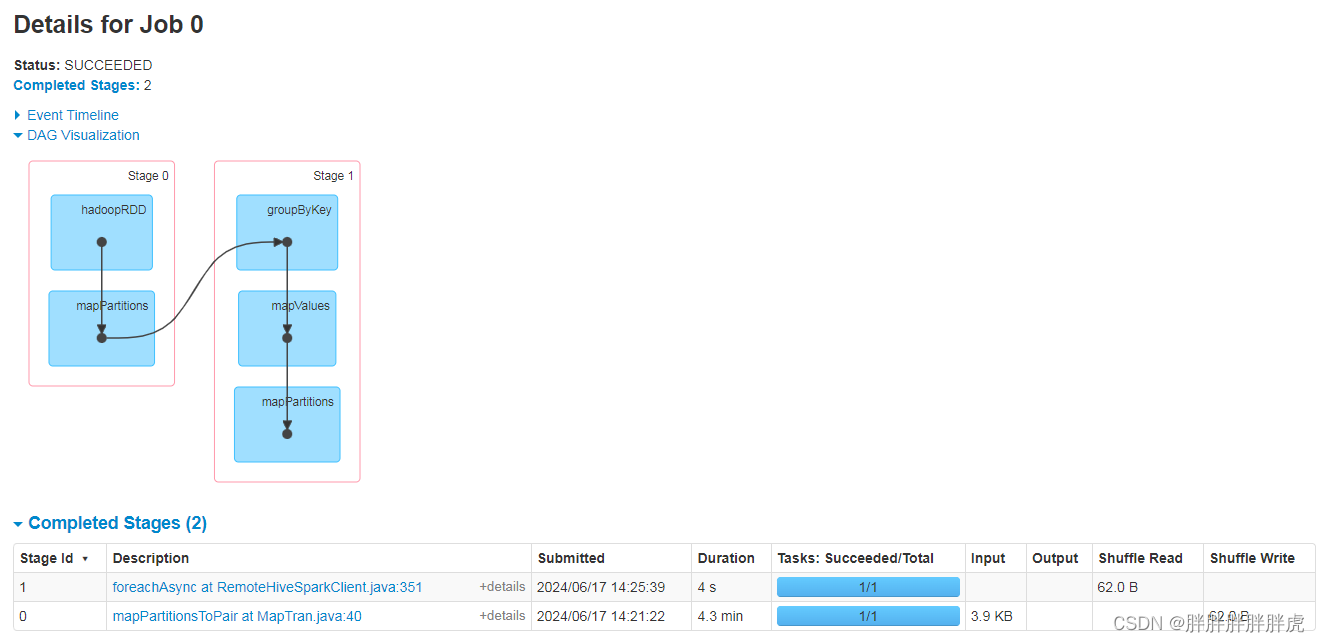

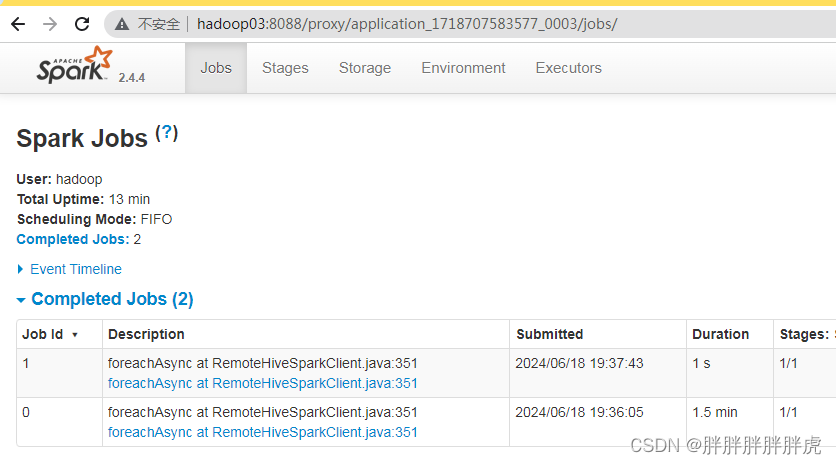

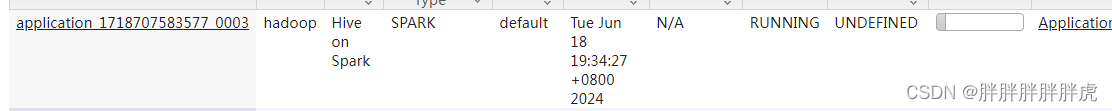

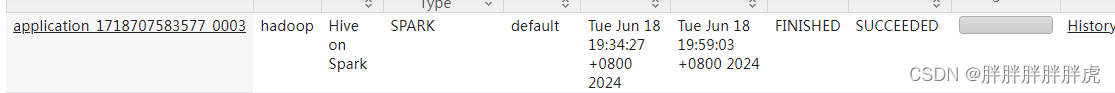

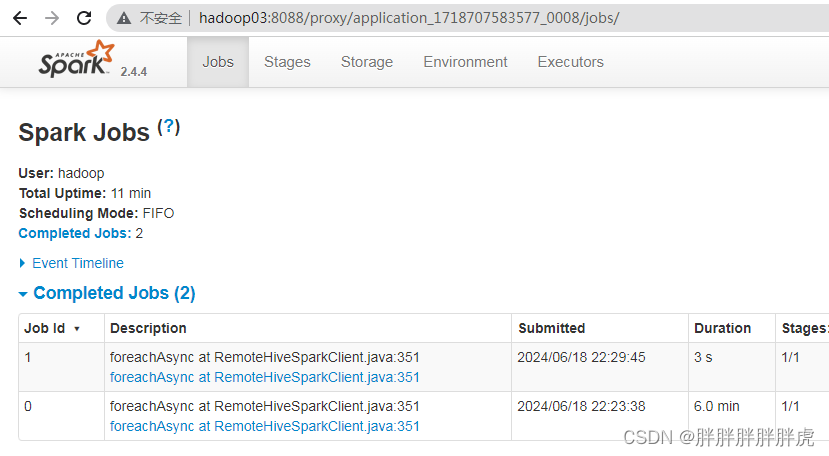

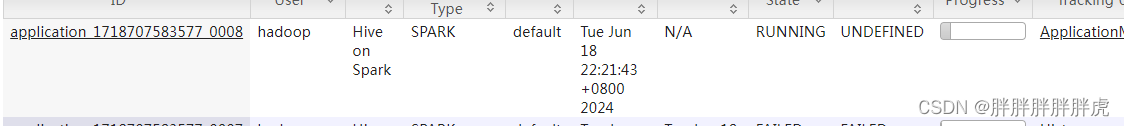

Hive on Spark: resources are not released after the job ends or completes

Reference: https://blog.csdn.net/qq_31454379/article/details/107621838

Solution:

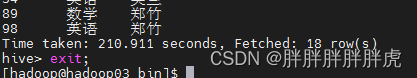

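- If the job was submitted from the Hive command-line client, exit the Hive command line and the resources are released automatically.

- If the job was submitted from a script, it is best to add !quit at the end of the script to release the resources explicitly.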
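For the script case, one way to make sure the session always ends is to generate the SQL file with `!quit` as its last line. A minimal sketch, assuming the script is run through Beeline (where `!quit` is the exit command). `write_job_script`, the file path, the query, and the JDBC URL in the usage note are placeholders:

```shell
# Sketch: write a SQL script that always ends with !quit, so the session
# is closed as soon as the job has finished.
# write_job_script is a hypothetical helper; the query is a placeholder.
write_job_script() {
  local script_path="$1"
  local query="$2"
  {
    printf '%s;\n' "$query"
    printf '!quit\n'
  } > "$script_path"
}

# Usage (assumed JDBC URL and table name):
#   write_job_script /tmp/job.sql "select count(*) from some_table"
#   beeline -u jdbc:hive2://hadoop03:10000 -f /tmp/job.sql
```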

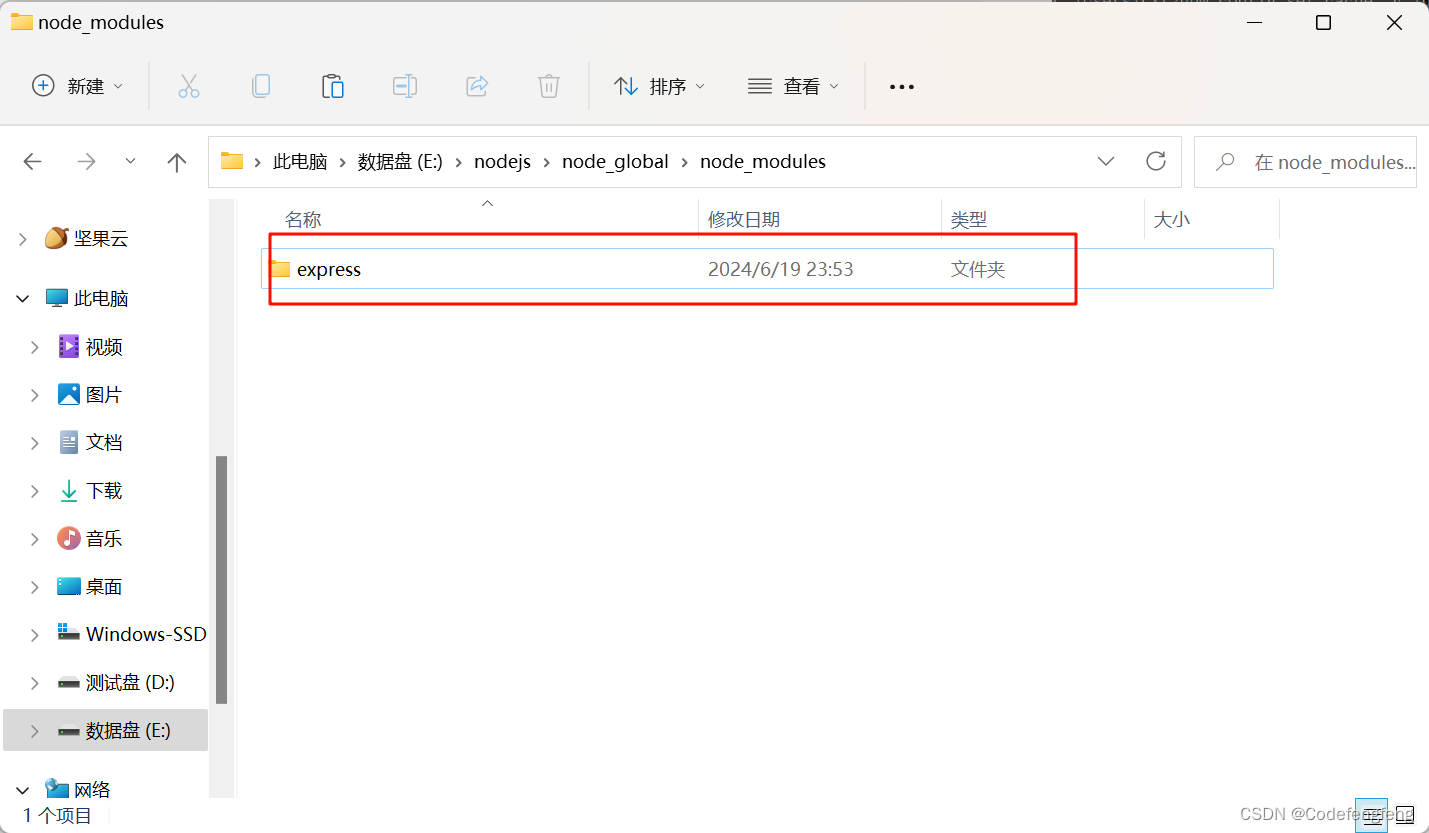

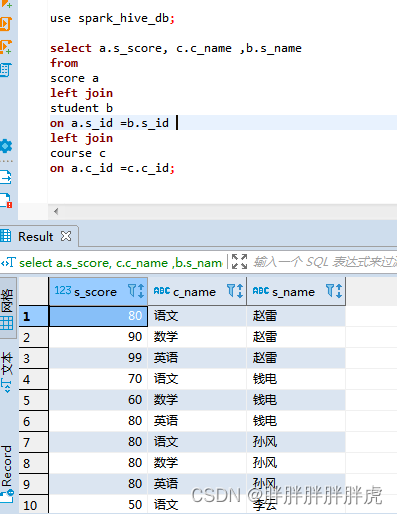

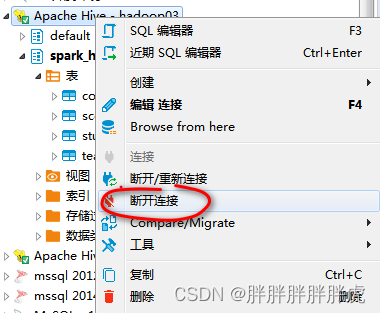

DBeaver with Hive on Spark

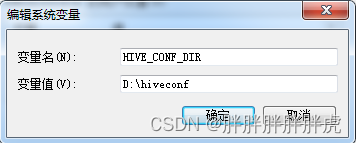

Configure the HIVE_CONF_DIR variable.

The same problem occurs here: the YARN resources are not released.

Disconnecting releases the resources.
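To check whether a session is still holding YARN resources after a client disconnects, the running applications can be listed and filtered by name. A rough sketch, assuming the application name contains `Hive on Spark` (verify the actual name in your YARN UI) and that `yarn application -list` prints the application ID in the first column. `hos_app_ids` is a hypothetical helper:

```shell
# Sketch: print the IDs of YARN applications whose listing line contains a pattern.
# Reads `yarn application -list` output on stdin; hos_app_ids is a hypothetical name.
hos_app_ids() {
  local pattern="${1:-Hive on Spark}"
  # Keep only data rows (they start with application_) that contain the pattern.
  awk -v pat="$pattern" '$1 ~ /^application_/ && index($0, pat) > 0 { print $1 }'
}

# Usage:
#   yarn application -list -appStates RUNNING | hos_app_ids
# A leftover application can then be stopped with:
#   yarn application -kill <application id>
```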