48 Fully Convolutional Network (FCN)

1. Building the model

import torch

import torchvision

from torch import nn

from torch.nn import functional as F

import matplotlib.pyplot as plt

import liliPytorch as lp

from d2l import torch as d2l

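# Load ResNet-18 with ImageNet-pretrained weights. On torchvision >= 0.13 the
# `pretrained` flag is deprecated in favor of the `weights=...` argument.
pretrained_net = torchvision.models.resnet18(pretrained=True)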

"""

[Sequential(
  (0): BasicBlock(
    (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
    (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (relu): ReLU(inplace=True)
    (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (downsample): Sequential(
      (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
      (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (1): BasicBlock(
    (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (relu): ReLU(inplace=True)
    (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  )
), AdaptiveAvgPool2d(output_size=(1, 1)), Linear(in_features=512, out_features=1000, bias=True)]

"""

net = nn.Sequential(*list(pretrained_net.children())[:-2])

"""

X = torch.rand(size=(1, 3, 96, 96))  # ResNet-18 expects 3 input channels

for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, 'output shape:\t', X.shape)

# Output from the max-pooling layer onward:
# MaxPool2d output shape: torch.Size([1, 64, 24, 24])
# Sequential output shape: torch.Size([1, 64, 24, 24])
# Sequential output shape: torch.Size([1, 128, 12, 12])
# Sequential output shape: torch.Size([1, 256, 6, 6])
# Sequential output shape: torch.Size([1, 512, 3, 3])

Forward propagation reduces the input height and width to 1/32 of the original (here 96 -> 3).

"""

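The 1/32 factor can be checked by hand with the standard output-size formula for a convolution or pooling layer, floor((n + 2p - k) / s) + 1. The sketch below applies it to the five layers of torchvision's ResNet-18 that change the spatial size. The helper names `conv_out` and `resnet18_spatial` are illustrative and not part of any library.

```python
def conv_out(n, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for the ResNet-18 layers with stride 2.
# Every other layer in the truncated network preserves height and width.
STRIDED_LAYERS = [
    ("conv1 7x7", 7, 2, 3),
    ("maxpool 3x3", 3, 2, 1),
    ("layer2 first conv", 3, 2, 1),
    ("layer3 first conv", 3, 2, 1),
    ("layer4 first conv", 3, 2, 1),
]


def resnet18_spatial(n):
    """Height (or width) of the final feature map for an input of length n."""
    for _name, kernel, stride, padding in STRIDED_LAYERS:
        n = conv_out(n, kernel, stride, padding)
    return n


print(resnet18_spatial(96))                          # 3
print(resnet18_spatial(320), resnet18_spatial(480))  # 10 15
```

Five stride-2 layers give 2^5 = 32, so a 320x480 crop becomes a 10x15 feature map.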
num_classes = 21

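# 1x1 convolution: map the 512 feature channels to one channel per class.
net.add_module('final_conv', nn.Conv2d(512, num_classes, kernel_size=1))

# Transposed convolution: upsample by 32x to restore the input height and width.
net.add_module('transpose_conv', nn.ConvTranspose2d(
    num_classes, num_classes, kernel_size=64, padding=16, stride=32))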

"""

[Conv2d(512, 21, kernel_size=(1, 1), stride=(1, 1)),

ConvTranspose2d(21, 21, kernel_size=(64, 64), stride=(32, 32), padding=(16, 16))]

"""

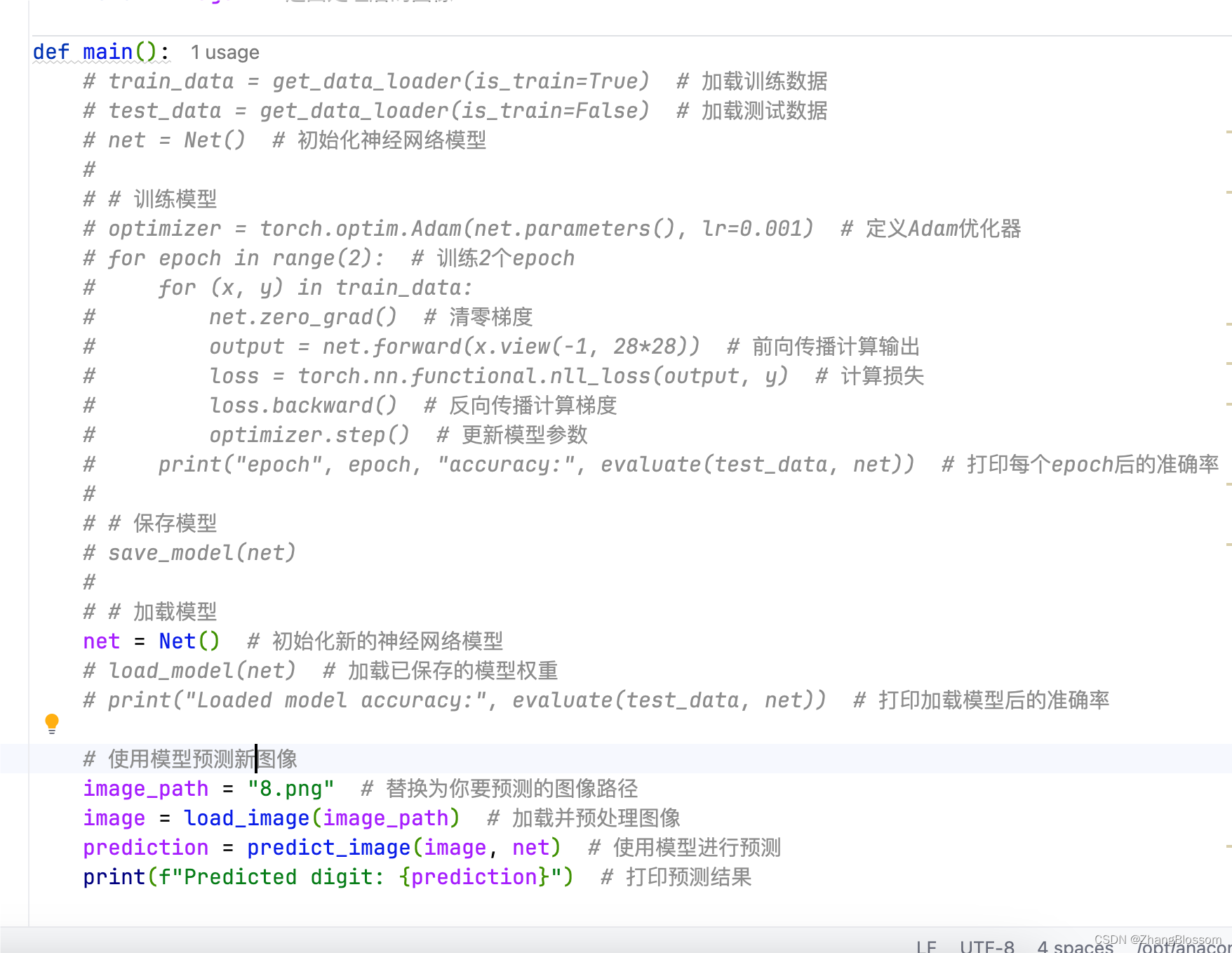
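The choice kernel_size=64, padding=16, stride=32 can be checked the same way. For a transposed convolution with no dilation and no output padding, the output length is (n - 1) * s - 2p + k. With k = 2s and p = s / 2 this simplifies to exactly s * n. A minimal sketch, where `conv_transpose_out` is an illustrative helper rather than a PyTorch function:

```python
def conv_transpose_out(n, kernel, stride, padding):
    """Output length of a transposed convolution (dilation 1, no output padding)."""
    return (n - 1) * stride - 2 * padding + kernel


for n in (3, 10, 15):
    print(n, '->', conv_transpose_out(n, kernel=64, stride=32, padding=16))
# 3 -> 96
# 10 -> 320
# 15 -> 480
```

So the 10x15 feature map produced from a 320x480 crop is mapped back to 320x480, giving one prediction per input pixel.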

2. Bilinear interpolation

def bilinear_kernel(in_channels, out_channels, kernel_size):
    """Return transposed-convolution weights that perform bilinear upsampling."""
    factor = (kernel_size + 1) // 2
    if kernel_size % 2 == 1:
        center = factor - 1
    else:
        center = factor - 0.5
    og = (torch.arange(kernel_size).reshape(-1, 1),
          torch.arange(kernel_size).reshape(1, -1))
    filt = (1 - torch.abs(og[0] - center) / factor) * \
           (1 - torch.abs(og[1] - center) / factor)
    weight = torch.zeros((in_channels, out_channels, kernel_size, kernel_size))
    # Put the same 2-D filter on each (i, i) channel pair; all other pairs stay zero.
    weight[range(in_channels), range(out_channels), :, :] = filt
    return weight

conv_trans = nn.ConvTranspose2d(3, 3, kernel_size=4, padding=1, stride=2,
                                bias=False)
conv_trans.weight.data.copy_(bilinear_kernel(3, 3, 4))

img = torchvision.transforms.ToTensor()(d2l.Image.open('../limuPytorch/images/catdog.jpg'))

"""

d2l.Image.open('../limuPytorch/images/catdog.jpg') runs first and returns a PIL.Image object.

torchvision.transforms.ToTensor() then creates a ToTensor object.

Finally, the ToTensor object is called (via the () operator): the PIL.Image object is passed
to its __call__ method and converted to a PyTorch tensor.

"""

X = img.unsqueeze(0)

Y = conv_trans(X)

out_img = Y[0].permute(1, 2, 0).detach()

print('input image shape:', img.permute(1, 2, 0).shape)

plt.imshow(img.permute(1, 2, 0))

plt.show()

print('output image shape:', out_img.shape)

plt.imshow(out_img)

plt.show()
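To see what `bilinear_kernel` actually puts into the weights, the sketch below recomputes the 4x4 filter in plain Python. It follows the same formula as the function above; `bilinear_profile` is an illustrative name, and the 2-D filter is the outer product of the 1-D profile with itself.

```python
def bilinear_profile(kernel_size):
    """1-D triangular profile used by the bilinear kernel."""
    factor = (kernel_size + 1) // 2
    center = factor - 1 if kernel_size % 2 == 1 else factor - 0.5
    return [1 - abs(i - center) / factor for i in range(kernel_size)]


profile = bilinear_profile(4)
print(profile)  # [0.25, 0.75, 0.75, 0.25]

# The 2-D filter is the outer product of the profile with itself.
kernel = [[r * c for c in profile] for r in profile]
for row in kernel:
    print(row)
# [0.0625, 0.1875, 0.1875, 0.0625]
# [0.1875, 0.5625, 0.5625, 0.1875]
# [0.1875, 0.5625, 0.5625, 0.1875]
# [0.0625, 0.1875, 0.1875, 0.0625]

# With stride 2, each output position only receives taps of one parity.
# Both groups of taps sum to 1, so the output is a weighted average of inputs.
print([sum(profile[phase::2]) for phase in range(2)])  # [1.0, 1.0]
```

Because the weights reaching each output pixel sum to 1, the transposed convolution enlarges the image without changing its overall brightness, which is why the upsampled picture looks like the original.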

3. Training the model

def train_batch_ch13(net, X, y, loss, trainer, devices):
    """Train on one minibatch using multiple GPUs.

    Args:
        net: The neural network model.
        X: Input data, a tensor or a list of tensors.
        y: Labels.
        loss: Loss function.
        trainer: Optimizer.
        devices: List of GPU devices.

    Returns:
        train_loss_sum: Sum of the training loss over the current batch.
        train_acc_sum: Sum of the training accuracy over the current batch.
    """
    if isinstance(X, list):
        X = [x.to(devices[0]) for x in X]
    else:
        X = X.to(devices[0])
    y = y.to(devices[0])
    net.train()
    trainer.zero_grad()
    pred = net(X)
    l = loss(pred, y)
    l.sum().backward()
    trainer.step()
    train_loss_sum = l.sum()
    train_acc_sum = d2l.accuracy(pred, y)
    return train_loss_sum, train_acc_sum


def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
               devices=d2l.try_all_gpus()):
    """Train a model on multiple GPUs.

    Args:
        net: The neural network model.
        train_iter: Iterator over the training dataset.
        test_iter: Iterator over the test dataset.
        loss: Loss function.
        trainer: Optimizer.
        num_epochs: Number of training epochs.
        devices: List of GPU devices; defaults to all available GPUs.
    """
    timer, num_batches = d2l.Timer(), len(train_iter)
    animator = lp.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                           legend=['train loss', 'train acc', 'test acc'])
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    for epoch in range(num_epochs):
        # Accumulates: loss sum, correct predictions, number of examples, number of labels.
        metric = lp.Accumulator(4)
        for i, (features, labels) in enumerate(train_iter):
            timer.start()
            l, acc = train_batch_ch13(net, features, labels, loss, trainer, devices)
            metric.add(l, acc, labels.shape[0], labels.numel())
            timer.stop()
            if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[3], None))
        test_acc = d2l.evaluate_accuracy_gpu(net, test_iter)
        animator.add(epoch + 1, (None, None, test_acc))
    print(f'loss {metric[0] / metric[2]:.3f}, train acc '
          f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')
    print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '
          f'{str(devices)}')

# Initialize the transposed convolution with bilinear-interpolation weights.
W = bilinear_kernel(num_classes, num_classes, 64)

net.transpose_conv.weight.data.copy_(W)

batch_size, crop_size = 32, (320, 480)

train_iter, test_iter = lp.load_data_voc(batch_size, crop_size)

def loss(inputs, targets):
    # Per-pixel cross-entropy averaged over height and width: one loss value per image.
    return F.cross_entropy(inputs, targets, reduction='none').mean(1).mean(1)

num_epochs, lr, wd, devices = 5, 0.001, 1e-3, d2l.try_all_gpus()

trainer = torch.optim.SGD(net.parameters(), lr=lr, weight_decay=wd)

train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs, devices)

plt.show()
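The loss and accuracy above are both computed per pixel. `F.cross_entropy(..., reduction='none')` returns one loss per pixel with shape (batch, height, width), and the two `.mean(1)` calls average it down to one value per image. Train accuracy divides the correct-pixel count by `labels.numel()`. The pure-Python sketch below reproduces both quantities for a single tiny image. The function names are illustrative, and the data layout (a list of class scores per pixel) is an assumption made for readability; it differs from PyTorch's (classes, height, width) layout.

```python
import math


def pixel_cross_entropy(scores, target):
    """Cross-entropy of one pixel: log-sum-exp of the scores minus the target score."""
    return math.log(sum(math.exp(s) for s in scores)) - scores[target]


def image_loss(score_map, label_map):
    """Mean per-pixel cross-entropy for one image."""
    losses = [pixel_cross_entropy(scores, label)
              for score_row, label_row in zip(score_map, label_map)
              for scores, label in zip(score_row, label_row)]
    return sum(losses) / len(losses)


def pixel_accuracy(score_map, label_map):
    """Fraction of pixels whose highest-scoring class equals the label."""
    correct = total = 0
    for score_row, label_row in zip(score_map, label_map):
        for scores, label in zip(score_row, label_row):
            correct += int(scores.index(max(scores)) == label)
            total += 1
    return correct / total


# One 1x2 image with 2 classes: the first pixel is predicted correctly, the second is not.
score_map = [[[1.0, 0.0], [2.0, 0.0]]]
label_map = [[0, 1]]

print(pixel_accuracy(score_map, label_map))  # 0.5
print(image_loss(score_map, label_map))      # mean of the two per-pixel losses
```

Averaging per image rather than summing keeps the loss scale independent of the crop size, so the same learning rate remains reasonable if `crop_size` changes.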