import numpy as np

from sklearn import datasets

from sklearn.svm import SVC

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import matplotlib.pyplot as plt

Load the data

X,y=datasets.load_wine(return_X_y = True)

display(X.shape)  # (178, 13): 178 samples, 13 features

# Hold out 20% of the samples as a test set (the split is random on every run)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

X_train.shape  # (142, 13)

Modeling

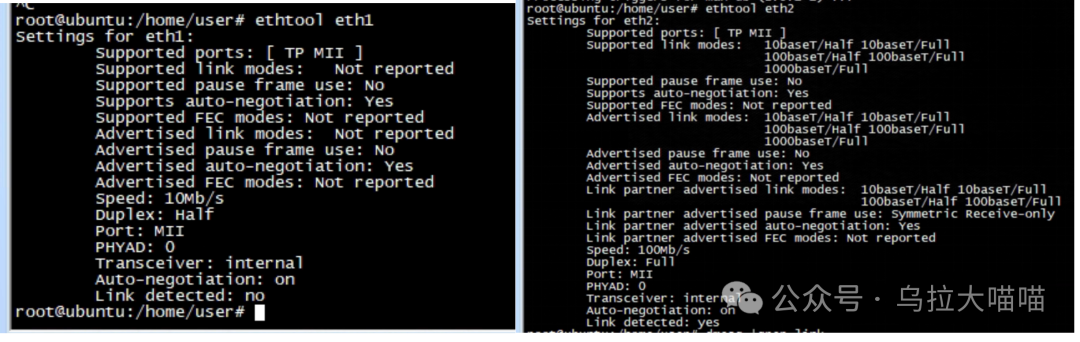

Linear kernel (linear)

svc = SVC(kernel='linear')
svc.fit(X_train, y_train)
y_pred = svc.predict(X_test)
score = accuracy_score(y_test, y_pred)

print('Kernel: linear, accuracy:', score)

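# coef_ is 2-D with shape (3, 13):
# 3 classes -> SVC trains one binary classifier per pair of classes, 3*2/2 = 3 hyperplanes (three equations)
# 13 features -> each hyperplane has 13 coefficients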

svc.coef_

svc.intercept_
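With a linear kernel, `SVC` handles the 3 classes one-vs-one: it trains one binary classifier per pair of classes. That is why `coef_` has 3 rows. The sketch below checks this claim. It assumes the features are standardized first, which the original notebook does not do; the scaling is here only so that libsvm converges quickly. It also passes `decision_function_shape="ovo"` so that `decision_function` returns the raw pairwise scores rather than the default one-vs-rest aggregation.

```python
import numpy as np
from sklearn import datasets
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = datasets.load_wine(return_X_y=True)
# Assumption: standardize the 13 features (the original notebook fits on raw values)
X = StandardScaler().fit_transform(X)

# 'ovo' returns the raw score of each pairwise classifier instead of the 'ovr' aggregation
svc = SVC(kernel="linear", decision_function_shape="ovo").fit(X, y)

n_classes = len(svc.classes_)
n_pairs = n_classes * (n_classes - 1) // 2  # 3 classes -> 3 pairwise classifiers
print(svc.coef_.shape)       # (n_pairs, n_features) = (3, 13)
print(svc.intercept_.shape)  # (n_pairs,) = (3,)

# Each row of coef_ plus its intercept is one hyperplane w @ x + b = 0;
# evaluating the hyperplanes by hand should reproduce decision_function
manual_scores = X @ svc.coef_.T + svc.intercept_
print(np.allclose(manual_scores, svc.decision_function(X)))
```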

Polynomial kernel (poly): the decision equation has degree greater than 1

svc = SVC(kernel='poly')  # implicitly lifts the data into a higher-dimensional feature space
svc.fit(X_train, y_train)
y_pred = svc.predict(X_test)
score = accuracy_score(y_test, y_pred)
print('Kernel: poly, accuracy:', score)
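The sketch below makes the "lifting" concrete. With `SVC`'s defaults (`degree=3`, `coef0=0.0`, `gamma='scale'`, which resolves to `1 / (n_features * X.var())`), the polynomial kernel is $K(a, b) = (\gamma \langle a, b\rangle + c_0)^d$. The code computes this Gram matrix by hand and compares it against `sklearn.metrics.pairwise.polynomial_kernel`. It then feeds the matrix to a `kernel="precomputed"` SVC to confirm that this kernel is all the classifier uses. The fixed `random_state` and the standardization step are assumptions added to keep the numbers small and repeatable.

```python
import numpy as np
from sklearn import datasets
from sklearn.metrics.pairwise import polynomial_kernel
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = datasets.load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
# Assumption: standardize the features so the polynomial values stay small
scaler = StandardScaler().fit(X_train)
X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)

degree, coef0 = 3, 0.0                            # SVC defaults for kernel='poly'
gamma = 1.0 / (X_train.shape[1] * X_train.var())  # the value gamma='scale' resolves to

def poly_kernel(A, B):
    """K(a, b) = (gamma * <a, b> + coef0) ** degree for every pair of rows."""
    return (gamma * A @ B.T + coef0) ** degree

K_train = poly_kernel(X_train, X_train)
K_test = poly_kernel(X_test, X_train)  # rows: test samples, columns: training samples
print(np.allclose(K_train, polynomial_kernel(X_train, degree=degree, gamma=gamma, coef0=coef0)))

# A precomputed-kernel SVC fed this Gram matrix should reproduce kernel='poly'
pred_builtin = SVC(kernel="poly").fit(X_train, y_train).predict(X_test)
pred_manual = SVC(kernel="precomputed").fit(K_train, y_train).predict(K_test)
print("agreement:", np.mean(pred_builtin == pred_manual))
```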

RBF kernel (Gaussian kernel: its similarity curve has the bell shape of a normal distribution)

svc = SVC(kernel='rbf')  # the default kernel; usually performs well; Gaussian-shaped similarity
svc.fit(X_train, y_train)
y_pred = svc.predict(X_test)
score = accuracy_score(y_test, y_pred)
print('Kernel: rbf, accuracy:', score)
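The "Gaussian" in the name refers to the kernel's shape, $K(a, b) = \exp(-\gamma \lVert a - b\rVert^2)$, not to any assumption about the data's distribution. Similarity equals 1 for identical points and decays like a bell curve as the distance grows; a larger $\gamma$ gives a narrower bell. The sketch below computes the kernel by hand on standardized wine features (the standardization is an assumption) and checks the result against `sklearn.metrics.pairwise.rbf_kernel`.

```python
import numpy as np
from sklearn import datasets
from sklearn.metrics.pairwise import rbf_kernel
from sklearn.preprocessing import StandardScaler

X, _ = datasets.load_wine(return_X_y=True)
X = StandardScaler().fit_transform(X)   # assumption: standardized features
gamma = 1.0 / (X.shape[1] * X.var())    # the value of gamma='scale', SVC's default

def gaussian_kernel(A, B, gamma):
    """K(a, b) = exp(-gamma * ||a - b||^2), computed for every pair of rows."""
    sq_dists = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dists)

K = gaussian_kernel(X, X, gamma)
print(np.allclose(K, rbf_kernel(X, X, gamma=gamma)))
print(np.allclose(np.diag(K), 1.0))  # every point is maximally similar to itself
```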

Sigmoid kernel

svc = SVC(kernel='sigmoid')
svc.fit(X_train, y_train)
y_pred = svc.predict(X_test)
score = accuracy_score(y_test, y_pred)
print('Kernel: sigmoid, accuracy:', score)
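Because `train_test_split` above is unseeded, the four scores come from different random splits on each run. They are also computed on raw wine features, whose ranges differ widely, and SVMs are sensitive to feature scale. The sketch below fixes one split (`random_state=0` is an assumption) and compares every kernel with and without a `StandardScaler` in a pipeline. The scaling comparison is an addition to the original notebook, and no particular scores are claimed here.

```python
from sklearn import datasets
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = datasets.load_wine(return_X_y=True)
# Assumption: a fixed random_state so every kernel is scored on the same split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

results = {}
for kernel in ["linear", "poly", "rbf", "sigmoid"]:
    raw = SVC(kernel=kernel).fit(X_train, y_train)
    # The scaler is fit on the training data only, inside the pipeline
    scaled = make_pipeline(StandardScaler(), SVC(kernel=kernel)).fit(X_train, y_train)
    results[kernel] = (
        accuracy_score(y_test, raw.predict(X_test)),
        accuracy_score(y_test, scaled.predict(X_test)),
    )
    print(f"{kernel:8s} raw: {results[kernel][0]:.3f}  standardized: {results[kernel][1]:.3f}")
```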

Non-linear kernel functions

from matplotlib.colors import ListedColormap

Create the data

X, y = datasets.make_circles(n_samples=100, factor=0.7)  # two concentric circles, inner radius 0.7
X += np.random.randn(100, 2) * 0.03  # add a little Gaussian noise

display(X.shape, y.shape)
plt.figure(figsize=(5, 5))

cmap = ListedColormap(colors= ['blue','red'])

plt.scatter(X[:,0],X[:,1],c=y,cmap = cmap)
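To see what each kernel learns on the circles, a common approach is to predict on a dense grid and shade the predicted regions. The helper `plot_decision_boundary` below is hypothetical, not part of scikit-learn or the original notebook. The data is regenerated with fixed seeds (an assumption) so the figure is repeatable. `degree=2` is passed to every model because `SVC` ignores `degree` for kernels other than `'poly'`.

```python
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.colors import ListedColormap
from sklearn import datasets
from sklearn.svm import SVC

def plot_decision_boundary(model, X, y, ax, cmap, step=0.02):
    """Hypothetical helper: shade each predicted class region, then overlay the points."""
    x_min, x_max = X[:, 0].min() - 0.1, X[:, 0].max() + 0.1
    y_min, y_max = X[:, 1].min() - 0.1, X[:, 1].max() + 0.1
    xx, yy = np.meshgrid(np.arange(x_min, x_max, step), np.arange(y_min, y_max, step))
    Z = model.predict(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)
    ax.contourf(xx, yy, Z, alpha=0.2, cmap=cmap)
    ax.scatter(X[:, 0], X[:, 1], c=y, cmap=cmap)
    return Z

# Assumption: fixed seeds; noise matches the notebook's randn(...) * 0.03
rng = np.random.default_rng(0)
X, y = datasets.make_circles(n_samples=100, factor=0.7, random_state=0)
X += rng.normal(scale=0.03, size=X.shape)
cmap = ListedColormap(["blue", "red"])

fig, axes = plt.subplots(1, 3, figsize=(15, 5))
for ax, kernel in zip(axes, ["linear", "poly", "rbf"]):
    svc = SVC(kernel=kernel, degree=2).fit(X, y)  # degree only affects 'poly'
    Z = plot_decision_boundary(svc, X, y, ax, cmap)
    ax.set_title(f"{kernel}: training accuracy {svc.score(X, y):.2f}")
plt.show()
```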

Linear kernel

svc = SVC(kernel='linear')
svc.fit(X, y)
svc.score(X, y)  # accuracy on the training data itself

Polynomial kernel poly (lifts the data to a higher dimension)

svc = SVC(kernel='poly', degree=2)  # degree-2 polynomial
svc.fit(X, y)
svc.score(X, y)
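Why does degree 2 work on circles? For 2-D inputs with $c_0 = 0$, $(\langle a, b\rangle)^2 = \langle \phi(a), \phi(b)\rangle$ where $\phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. In that 3-D space the radius $x_1^2 + x_2^2$ becomes a linear function, so a flat plane can split the inner circle from the outer one. The sketch below builds this explicit map (`phi` is a hypothetical helper) and checks the identity. It then fits a linear SVM in the lifted space. This corresponds to the poly kernel with `gamma=1`, whereas the cell above uses `gamma='scale'`, which only rescales the kernel. The seeds are assumptions.

```python
import numpy as np
from sklearn import datasets
from sklearn.svm import SVC

# Assumption: fixed seeds; noise matches the notebook's randn(...) * 0.03
rng = np.random.default_rng(0)
X, y = datasets.make_circles(n_samples=100, factor=0.7, random_state=0)
X += rng.normal(scale=0.03, size=X.shape)

def phi(X):
    """Hypothetical explicit degree-2 map: (x1, x2) -> (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = X[:, 0], X[:, 1]
    return np.column_stack([x1**2, np.sqrt(2) * x1 * x2, x2**2])

# Kernel trick check: <phi(a), phi(b)> == (<a, b>)^2 for every pair of points
Z = phi(X)
print(np.allclose(Z @ Z.T, (X @ X.T) ** 2))

# In the lifted 3-D space a plain linear SVM can separate the two circles
lifted = SVC(kernel="linear").fit(Z, y)
print("linear SVM on phi(X):", lifted.score(Z, y))
```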

Gaussian kernel rbf

svc = SVC(kernel='rbf')
svc.fit(X, y)
svc.score(X, y)
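The `svc.score(X, y)` calls above measure accuracy on the same points the model was trained on. That accuracy can overstate how well a kernel generalizes. The sketch below holds out 30% of the circles (the split size and seeds are assumptions) and reports training and test accuracy side by side for the three kernels.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumption: fixed seeds; noise matches the notebook's randn(...) * 0.03
rng = np.random.default_rng(0)
X, y = datasets.make_circles(n_samples=100, factor=0.7, random_state=0)
X += rng.normal(scale=0.03, size=X.shape)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y  # keep both circles equally represented
)

scores = {}
for name, model in [("linear", SVC(kernel="linear")),
                    ("poly", SVC(kernel="poly", degree=2)),
                    ("rbf", SVC(kernel="rbf"))]:
    model.fit(X_train, y_train)
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"{name:6s} train: {scores[name][0]:.2f}  test: {scores[name][1]:.2f}")
```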