Scraping a student's course schedule from a university website

First, inspect the site's request method and form data.

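Request method: POST. The code below passes a data body to urllib.request.Request, and urllib sends any request that carries a body as POST.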

The form fields are u (username) and p (password).
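To see what the login request actually contains, the snippet below builds the same form body offline, without contacting the server. It is a minimal sketch: urlencode turns the u and p fields into a URL-encoded byte string, and urllib.request.Request reports POST whenever a data body is attached (GET otherwise). The credential values are placeholders.

```python
import urllib.parse
import urllib.request

LOGIN_URL = "https://gsdb.bjtu.edu.cn/client/login/"

# Placeholder credentials: u is the username field, p the password field
form = {"u": "xxxxxx", "p": "xxxxxx"}
body = urllib.parse.urlencode(form).encode("utf-8")

# Constructing a Request does not open a connection
with_body = urllib.request.Request(LOGIN_URL, data=body)
without_body = urllib.request.Request(LOGIN_URL)

print(body)                       # b'u=xxxxxx&p=xxxxxx'
print(with_body.get_method())     # POST
print(without_body.get_method())  # GET
```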

import urllib.request
import urllib.parse
import http.cookiejar

url = "https://gsdb.bjtu.edu.cn/client/login/"
agent = 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Ubuntu Chromium/60.0.3112.113 Chrome/60.0.3112.113 Safari/537.36'
headers = {'User-Agent': agent}

# The CookieJar stores the cookies the server sets; the opener resends them on later requests
cookie = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie))

# Form fields: u = username, p = password (placeholders)
postdata = urllib.parse.urlencode({'u': 'XXXXXX', 'p': 'XXXXXX'})
postdata = postdata.encode('UTF-8')
# Passing a data body makes urllib send this as a POST request
request = urllib.request.Request(url, postdata, headers)
result = opener.open(request)
print(result.read().decode('UTF-8'))

Login successful!

Once logged in, the same opener can open any of the user's pages:

result=opener.open('https://gsdb.bjtu.edu.cn/course_selection/select/schedule/')

print(result.read().decode('utf-8'))
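Why the second request is treated as logged in: HTTPCookieProcessor stores the cookies from the login response in the jar and, before each later request, calls the jar's add_cookie_header to attach a Cookie header. The offline sketch below performs that step by hand. The cookie name sessionid and its value are hypothetical; the post does not show which cookies gsdb.bjtu.edu.cn actually sets.

```python
import http.cookiejar
import urllib.request

SCHEDULE_URL = "https://gsdb.bjtu.edu.cn/course_selection/select/schedule/"

# Hypothetical session cookie standing in for whatever the login response sets
session = http.cookiejar.Cookie(
    version=0, name="sessionid", value="abc123",
    port=None, port_specified=False,
    domain="gsdb.bjtu.edu.cn", domain_specified=False, domain_initial_dot=False,
    path="/", path_specified=True,
    secure=False, expires=None, discard=True,
    comment=None, comment_url=None, rest={},
)

jar = http.cookiejar.CookieJar()
jar.set_cookie(session)

# The step HTTPCookieProcessor performs before every request the opener sends
request = urllib.request.Request(SCHEDULE_URL)
jar.add_cookie_header(request)
print(request.get_header("Cookie"))  # sessionid=abc123

# Cookies are scoped to their domain: nothing is attached for another host
other = urllib.request.Request("https://example.com/")
jar.add_cookie_header(other)
print(other.get_header("Cookie"))  # None
```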

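Scraping the course schedule

import re
# Fetch the schedule page again; the response above was already consumed by read()
pagecode = opener.open('https://gsdb.bjtu.edu.cn/course_selection/select/schedule/').read().decode('utf-8')
# Match each table row, then each cell inside it (non-greedy; re.S lets . match newlines)
pattern = re.compile('<tr>(.*?)</tr>', re.S)
pat = re.compile('<td>(.*?)</td>', re.S)
items = re.findall(pattern, pagecode)
for item in items:
    its = re.findall(pat, item)
    for it in its:
        print(it)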

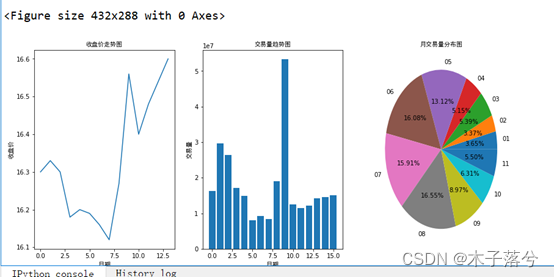

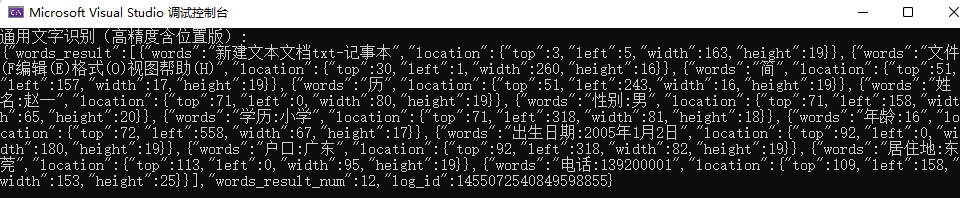

It works!
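The regular expressions above only match bare <tr> and <td> tags, so a cell written as <td class="..."> or a header cell <th> would be skipped. A sketch of one alternative, swapping the regexes for the standard library's html.parser, collects the text of every cell row by row. The sample HTML is made up for illustration, since the real page markup is not shown in the post, and the parser does not handle nested tables.

```python
import re
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []    # finished rows, each a list of cell strings
        self._row = None  # cells of the row currently being parsed
        self._cell = None # text pieces of the cell currently being parsed

    def handle_starttag(self, tag, attrs):
        # attrs are ignored, so <td class="..."> is treated like <td>
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def parse_rows(html):
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical sample markup
sample = ('<table><tr><th>Course</th><th>Time</th></tr>'
          '<tr><td class="c">Data Mining</td><td>Mon 1-2</td></tr></table>')

for row in parse_rows(sample):
    print(row)
# The bare-tag regex only finds the cell without attributes
print(re.findall(r"<td>(.*?)</td>", sample, re.S))  # ['Mon 1-2']
```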

Running it in two steps

cookie.py logs in and saves the site's cookies to cookie.txt.

import urllib.request
import urllib.parse
import http.cookiejar

filename = 'cookie.txt'
# MozillaCookieJar can save cookies to, and load them from, a Mozilla-format cookies.txt file
cookie = http.cookiejar.MozillaCookieJar(filename)

url = "https://gsdb.bjtu.edu.cn/client/login/"
agent = 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Ubuntu Chromium/60.0.3112.113 Chrome/60.0.3112.113 Safari/537.36'
headers = {'User-Agent': agent}
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie))

# Form fields: u = username, p = password (placeholders)
postdata = urllib.parse.urlencode({'u': 'xxxxxx', 'p': 'xxxxxx'})
postdata = postdata.encode('UTF-8')
request = urllib.request.Request(url, postdata, headers)
result = opener.open(request)
print(result.read().decode('utf-8'))

# Also write session cookies (discard flag set) and expired ones, so spider.py can reuse them
cookie.save(ignore_discard=True, ignore_expires=True)
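Why save() is called with ignore_discard=True: a cookie sent without an expiry date is a session cookie, which http.cookiejar marks discard=True, and MozillaCookieJar.save() silently skips such cookies unless ignore_discard=True. If the site's login cookie is a session cookie, cookie.txt would otherwise come out empty. The offline sketch below writes one session cookie to a temporary cookie.txt and reloads it; the cookie name and value are hypothetical.

```python
import http.cookiejar
import os
import tempfile


def make_session_cookie(name, value, domain):
    """A cookie with no expiry date, i.e. a session cookie (discard=True)."""
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=False, domain_initial_dot=False,
        path="/", path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


def round_trip(ignore_discard):
    """Save one session cookie with the given ignore_discard flag,
    reload the file, and return how many cookies came back."""
    with tempfile.TemporaryDirectory() as tmp:
        filename = os.path.join(tmp, "cookie.txt")
        jar = http.cookiejar.MozillaCookieJar(filename)
        # Hypothetical cookie name and value
        jar.set_cookie(make_session_cookie("sessionid", "abc123", "gsdb.bjtu.edu.cn"))
        jar.save(ignore_discard=ignore_discard, ignore_expires=True)

        reloaded = http.cookiejar.MozillaCookieJar()
        # Load permissively so that only the save() flag decides the outcome
        reloaded.load(filename, ignore_discard=True, ignore_expires=True)
        return len(reloaded)


print(round_trip(ignore_discard=False))  # 0: the session cookie was never written
print(round_trip(ignore_discard=True))   # 1
```

The same reasoning applies to load() in spider.py, which also skips discard-flagged cookies unless ignore_discard=True.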

spider.py loads the cookies saved in cookie.txt, so it can open the logged-in pages without submitting the login form again. Run cookie.py first so that cookie.txt exists.

import urllib.request
import re
import http.cookiejar

# Load the cookies written by cookie.py (importing cookie.py here would run the login again)
cookie = http.cookiejar.MozillaCookieJar()
cookie.load('cookie.txt', ignore_discard=True, ignore_expires=True)
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie))

result = opener.open('https://gsdb.bjtu.edu.cn/course_selection/select/schedule/')
pagecode = result.read().decode('utf-8')
# print(pagecode)

# Match each table row, then each cell inside it (non-greedy; re.S lets . match newlines)
pattern = re.compile('<tr>(.*?)</tr>', re.S)
pat = re.compile('<td>(.*?)</td>', re.S)
items = re.findall(pattern, pagecode)
for item in items:
    its = re.findall(pat, item)
    for it in its:
        print(it)

It works!